
Hubbard Information MNGMNT Measure Anything

Copyright HDR 2007

dwhubbard@hubbardresearch.com

How To Measure Anything: Finding the Value of Intangibles in Business


How to Measure Anything

It started 12 years ago

I have conducted over 60 major risk/return analysis projects so far, and they included a variety of "impossible" measurements

I found such a high need for measuring difficult things that I decided I had to write a book

The book was released in July 2007 by the publisher John Wiley & Sons

This is a sneak preview of many of the methods in the book


How To Measure Anything

"I love this book. Douglas Hubbard helps us create a path to know the answer to almost any question, in business, in science or in life." - Peter Tippett, Ph.D., M.D., Chief Technology Officer at CyberTrust and inventor of the first antivirus software

"Doug Hubbard has provided an easy-to-read, demystifying explanation of how managers can inform themselves to make less risky, more profitable business decisions." - Peter Schay, EVP and COO of The Advisory Council

"As a reader you soon realize that actually everything can be measured while learning how to measure only what matters. This book cuts through conventional clichés and business rhetoric and it offers practical steps to using measurements as a tool for better decision making." - Ray Gilbert, EVP, Lucent

"This book is remarkable in its range of measurement applications and its clarity of style. A must read for every professional who has ever exclaimed 'Sure, that concept is important but can we measure it?'" - Dr. Jack Stenner, CEO and co-founder of MetaMetrics, Inc.

A Few Examples

IT

Risk of IT

The value of better information

The value of better security

The Risk of obsolescence

The value of productivity when headcount is not reduced

The value of infrastructure

Business

Market forecasts

The risk/return of expanding operations

Business valuations for venture capital

Military

Forecasting fuel for Marines in the battlefield

Measuring the effectiveness of combat training to reduce roadside bomb/IED casualties


Does Your Model Consider

1. Research shows most subject matter experts are statistically overconfident. If you don't account for this, you will underestimate risk in every model you make.

2. Most Monte Carlo models are created with few or no empirical measurements of any kind.

3. If a model does include the results of empirical measurements, they are usually not the high-payoff measurements.

4. There is a way to make tradeoffs between higher risk/higher return investments and lower risk/lower return investments that results in an optimal portfolio. The final deliverable of most Monte Carlo models is a histogram, not a risk/return-optimized recommendation.

@RISK can support anything we are talking about!


Three Illusions of Intangibles

(The howtomeasureanything.com approach)

The perceived impossibility of measurement is an illusion caused by not understanding:

the Concept of measurement

the Object of measurement

the Methods of measurement

See my "Everything is Measurable" article in CIO Magazine (go to the articles link on www.hubbardresearch.com)


The Approach

Model what you know now

Compute the value of additional information

Where economically justified, conduct observations that reduce uncertainty

Update the model and optimize the decision


Uncertainty, Risk & Measurement

Measuring uncertainty, risk, and the value of information are closely related concepts, important measurements themselves, and precursors to most other measurements

The Measurement Theory definition of measurement: "A measurement is an observation that results in information (reduction of uncertainty) about a quantity."

Calibrated Estimates

Decades of studies show that most managers are statistically overconfident when assessing their own uncertainty

Studies showed that bookies were great at assessing odds subjectively, while doctors were terrible

Studies also show that measuring your own uncertainty about a quantity is a general skill that can be taught with a measurable improvement

Training can calibrate people so that of all the times they say they are 90% confident, they will be right 90% of the time
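The idea of being "right 90% of the time" can be checked with a simple score. The sketch below is only an illustration, not the scoring used in the author's training: it counts how many stated 90% ranges contain the true answer. The function name and the sample questions are hypothetical.

```python
def interval_hit_rate(intervals, truths):
    """Fraction of stated ranges that contain the true value."""
    hits = sum(1 for (low, high), truth in zip(intervals, truths) if low <= truth <= high)
    return hits / len(truths)

# Hypothetical answers to five trivia questions, each given as a 90% range.
stated_ranges = [(1900, 1950), (10, 40), (200, 300), (5, 8), (1000, 3000)]
true_values = [1969, 25, 250, 12, 1500]

rate = interval_hit_rate(stated_ranges, true_values)
print(f"{rate:.0%} of ranges contained the answer (target: 90%)")
```

A calibrated estimator would score close to 90% over many questions; a much lower score suggests the ranges are too narrow, which is to say overconfidence.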


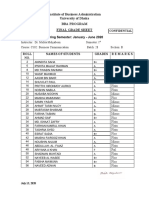

Calibrated Estimates: Ranges

Calibrated probability assessment results from various studies (90% confidence intervals)

| Group | Subject | % Correct (target 90%) |
| --- | --- | --- |
| Harvard MBAs | General Trivia | 40% |
| Chemical Co. Employees | General Industry | 50% |
| Chemical Co. Employees | Company-Specific | 48% |
| Computer Co. Managers | General Business | 17% |
| Computer Co. Managers | Company-Specific | 36% |
| AIE Seminar (before training) | General Trivia & IT | 50% |
| AIE Seminar (some training) | General Trivia & IT | 80% |

Copyright HDR 2006

dwhubbard@hubbardresearch.com


Calibration Results

73% of people who go through calibration training achieve calibration

The remaining 27% seem stuck in overconfidence

For these, we use calibration factors to adjust all ranges, or we seek confirmation from calibrated persons

Fortunately, they are not usually the persons relied on for most estimates


[Figure: percent of answers within the expected 90% confidence interval (vertical axis, 0% to 100%, target 90%) for Test 1 through Test 5*, with a "% finished on this test" row; extracted values: 7%, 38%, 55%, 0%, 0%]

1997 Calibration Experiment

In January 1997, I conducted a calibration training experiment with 16 IT industry analysts and 16 CIOs to test whether calibrated people were better at putting odds on uncertain future events.

The analysts were calibrated, and all 32 subjects were asked to predict 20 IT industry events.

Example: "Steve Jobs will be CEO of Apple again by Aug 8, 1997 - True or False? Are you 50%, 60%...90%, 100% confident?"

[Figure: percent correct (vertical axis, 30% to 100%) versus assessed chance of being correct (horizontal axis, 50% to 100%), with series for Giga Analysts, Giga Clients, Statistical Error, and Ideal Confidence; data points are labeled with the number of responses]

Source: Hubbard Decision Research
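A comparison like this one groups true/false predictions by stated confidence and checks how often each group turned out correct. The sketch below shows one way to tabulate that; the function name and the response data are hypothetical and are not the 1997 results.

```python
from collections import defaultdict

def calibration_curve(predictions):
    """Map each stated confidence to (fraction correct, number of responses)."""
    buckets = defaultdict(list)
    for stated_confidence, was_correct in predictions:
        buckets[stated_confidence].append(was_correct)
    return {c: (sum(v) / len(v), len(v)) for c, v in sorted(buckets.items())}

# Hypothetical responses: (stated confidence, whether the prediction came true).
responses = [(0.5, True), (0.5, False), (0.9, True), (0.9, True), (0.9, False), (1.0, True)]

curve = calibration_curve(responses)
for confidence, (fraction, n) in curve.items():
    print(f"said {confidence:.0%}: {fraction:.0%} correct over {n} responses")
```

For a calibrated group, the fraction correct should track the stated confidence, apart from the statistical error expected with small numbers of responses.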


The Value of Information


$$
EVI = \sum_{i=1}^{k} p(r_i)\,\max\left[\sum_{j=1}^{z} V_{1,j}\,p(\Theta_j \mid r_i),\ \sum_{j=1}^{z} V_{2,j}\,p(\Theta_j \mid r_i),\ \ldots,\ \sum_{j=1}^{z} V_{l,j}\,p(\Theta_j \mid r_i)\right] - EV^{*}
$$

The formula for the value of information has been around for almost 60 years. It is widely used in many parts of industry and government as part of the decision analysis methods, but it is still mostly unheard of in the parts of business where it might do the most good.

What it means:

1. Information reduces uncertainty

2. Reduced uncertainty improves decisions

3. Improved decisions have observable consequences with measurable value
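As a rough illustration of the value-of-information formula, the sketch below computes it for a small discrete case. It reflects one reading of the symbols, which the slide does not define: r_i are the possible results of a measurement, Θ_j are the possible states, V holds the payoff of each alternative in each state, and EV* is the best expected payoff without the measurement. All numbers are hypothetical.

```python
def expected_value_of_information(p_result, p_state_given_result, payoff, p_state):
    """EVI = sum_i p(r_i) * max over alternatives of sum_j V[alt][j] * p(theta_j | r_i), minus EV*."""
    # EV*: best expected payoff using only the prior state probabilities.
    ev_star = max(sum(v * p for v, p in zip(row, p_state)) for row in payoff)
    # For each possible result, choose the best alternative under the updated probabilities.
    ev_with_info = sum(
        p_r * max(sum(v * p for v, p in zip(row, posterior)) for row in payoff)
        for p_r, posterior in zip(p_result, p_state_given_result)
    )
    return ev_with_info - ev_star

# Hypothetical case: states are (success, failure) with prior 80% / 20%.
p_state = [0.8, 0.2]
payoff = [[30.0, -10.0],  # alternative 1: invest ($ millions, assumed)
          [0.0, 0.0]]     # alternative 2: do nothing
# An imperfect test assumed to be 95% accurate: results are (positive, negative).
p_result = [0.77, 0.23]
p_state_given_result = [[0.76 / 0.77, 0.01 / 0.77],
                        [0.04 / 0.23, 0.19 / 0.23]]

evi = expected_value_of_information(p_result, p_state_given_result, payoff, p_state)
print(f"EVI of the imperfect test: ${evi:.2f} million")
```

With a perfect test (each result reveals the state exactly), the same function returns the expected value of perfect information, which is the upper bound on what any measurement could be worth.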

The EOL Method

The simplest approach computes the change in Expected Opportunity Loss

Opportunity Loss is the loss (compared to the alternative) if it turns out you made the wrong decision

Expected Opportunity Loss (EOL) is the cost of being wrong times the chance of being wrong

The reduction in EOL from more information is the value of the information.

In the case of perfect information (if that were possible), the value of information is equal to the EOL.

Simple Binary Example: You are about to make a $20 million investment to upgrade the equipment in a factory to make a new product. If the new product does well, you save $50 million in manufacturing. If not, you lose (net) $10 million. There is a 20% chance of the new product failing. What's it worth to have perfect certainty about this investment, if that were possible?

Answer: 20% x $10 million = $2 million
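The arithmetic of the binary example can be written out as a short sketch. The 20% chance and the $10 million loss come from the example; the function name is an illustrative choice.

```python
def expected_opportunity_loss(chance_of_being_wrong, cost_of_being_wrong):
    """EOL = chance of being wrong times the cost of being wrong."""
    return chance_of_being_wrong * cost_of_being_wrong

p_fail = 0.20        # chance the new product fails (from the example)
loss_if_fail = 10.0  # net loss in $ millions if you invest and it fails (from the example)

eol_invest = expected_opportunity_loss(p_fail, loss_if_fail)
print(f"EOL of investing = value of perfect information: ${eol_invest:.1f} million")
```

Because perfect information would drive the EOL to zero, the current EOL of the chosen decision is the most that any measurement of this uncertainty could be worth.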


Information Value w/Ranges

The value of information is computed a little differently with a distribution, but the same basic concepts apply

For each variable, there is a Threshold where the investment just breaks even

If the threshold is within the range of possible values, then there is a chance that you would make a different decision with better measurements

[Figure: probability distribution showing the 90% confidence interval with a 5% tail on each side, the mean, the threshold, and the threshold probability]


Normal Distribution Information Value

The expected value of the variable is the mean of the range of possible values

A threshold is a point where the value just begins to make some difference in a decision: a breakeven

The expected value is on one side of the threshold

If the true value is on the opposite side of the threshold from the mean, then the best decision would have been different than one based on the mean

The Threshold Probability is the chance that this variable could have a value that would change the decision

Example (Productivity Improvement in Process X): the threshold is an 18% productivity improvement, and the probability that the true value is under the required threshold is 16.25%

[Figure: distribution of Productivity Improvement in Process X, horizontal axis from 5% to 25%]
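A threshold probability can be computed directly once a 90% confidence interval is treated as a normal distribution. The sketch below assumes exactly that; the interval bounds are hypothetical (the slide does not give them), so it does not reproduce the 16.25% figure.

```python
from statistics import NormalDist

Z_90 = NormalDist().inv_cdf(0.95)  # about 1.645 standard deviations per side

def normal_from_90ci(lower, upper):
    """Normal distribution whose central 90% lies between lower and upper."""
    return NormalDist((lower + upper) / 2, (upper - lower) / (2 * Z_90))

def threshold_probability(lower, upper, threshold):
    """Chance the true value lies on the opposite side of the threshold from the mean."""
    dist = normal_from_90ci(lower, upper)
    below = dist.cdf(threshold)
    return below if dist.mean >= threshold else 1 - below

# Hypothetical 90% CI of 15% to 25% productivity improvement, with the 18% threshold.
p = threshold_probability(0.15, 0.25, 0.18)
print(f"Chance the improvement is below the threshold: {p:.1%}")
```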


Normal Distribution VIA

The curve on the other side of the threshold is divided up into hundreds of slices

Each slice has an assigned quantity (such as a potential productivity improvement) and a probability of occurrence

For each assigned quantity, there is an Opportunity Loss

Each slice's Opportunity Loss is multiplied by its probability to compute its Expected Opportunity Loss

Example (Productivity Improvement in Process X):

Productivity Improvement: 15%

Opportunity Loss: $1,855,000

Probability: 0.0053%

EOL: $98.31

[Figure: distribution of Productivity Improvement in Process X, horizontal axis from 5% to 25%, with one slice highlighted]


Normal Distribution VIA

Total EOL for all slices equals the EOL for the variable

Since EOL = 0 with perfect information, the Expected Value of Perfect Information (EVPI) = sum(EOLs)

Even though perfect information is not usually practical, this method gives us an upper bound for the information value, which can be useful by itself

Many of the EVPIs in a business case will be zero

I do this with a macro in Excel, but it can also be estimated

Example (Productivity Improvement in Process X): the total of all EOLs = $58,989. This is the value of perfect information about the potential productivity improvement

[Figure: distribution of Productivity Improvement in Process X, horizontal axis from 5% to 25%]
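The slice-and-sum procedure can be sketched in a few lines of Python instead of an Excel macro. This is not the author's macro: it assumes a normal distribution, a mean above the threshold, and an opportunity loss that grows linearly with the shortfall below the threshold. The mean and standard deviation are hypothetical; the loss rate is derived from the slice example ($1,855,000 at 15% against an 18% threshold) under that linearity assumption, so the result is not expected to match $58,989.

```python
from statistics import NormalDist

def evpi_by_slices(dist, threshold, loss_per_unit, n_slices=1000, span_sigmas=6.0):
    """Sum the EOL of thin slices below the threshold (assumes the mean is above it)."""
    lowest = dist.mean - span_sigmas * dist.stdev
    if threshold <= lowest:
        return 0.0  # threshold is effectively outside the range: EVPI is zero
    width = (threshold - lowest) / n_slices
    total_eol = 0.0
    for i in range(n_slices):
        left = lowest + i * width
        quantity = left + width / 2                           # the slice's assigned quantity
        probability = dist.cdf(left + width) - dist.cdf(left)
        opportunity_loss = loss_per_unit * (threshold - quantity)
        total_eol += probability * opportunity_loss           # the slice's EOL
    return total_eol

belief = NormalDist(mu=0.20, sigma=0.03)   # hypothetical uncertainty about the improvement
loss_per_unit = 1_855_000 / (0.18 - 0.15)  # assumed linear loss rate
evpi = evpi_by_slices(belief, threshold=0.18, loss_per_unit=loss_per_unit)
print(f"EVPI estimate: ${evpi:,.0f}")
```

When the threshold sits far outside the range of plausible values, the sum is effectively zero, which is consistent with the remark that many EVPIs in a business case will be zero.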


Increasing Value & Cost of Info.

EVPI: Expected Value of Perfect Information

ECI: Expected Cost of Information

EVI: Expected Value of Information

ENBI: Expected Net Benefit of Information

[Figure: dollar value/cost (vertical axis, $0 to $$$) versus accuracy (horizontal axis, low to high), showing the EVI, ECI, and ENBI curves, the EVPI level, the maximum ENBI, and a region marked "Aim for this range"]


The value of information levels off while the cost of information accelerates

Information value grows fastest at the beginning of information collection

Use iterative measurements that err on the side of small bites at the steep part of the slope
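To make the tradeoff concrete, the sketch below searches for the measurement effort with the highest net benefit (ENBI = EVI - ECI). The two curve shapes are purely hypothetical stand-ins chosen to match the description: a value curve that levels off toward EVPI and a cost curve that accelerates.

```python
import math

EVPI = 100_000.0  # hypothetical upper bound on the information value, in dollars

def evi(effort):
    """Hypothetical value curve: rises fastest at first, then levels off toward EVPI."""
    return EVPI * (1 - math.exp(-effort / 2.0))

def eci(effort):
    """Hypothetical cost curve: accelerates as more accuracy is demanded."""
    return 2_000.0 * effort ** 2

def enbi(effort):
    """Expected net benefit of information."""
    return evi(effort) - eci(effort)

efforts = [i / 10 for i in range(0, 101)]  # effort levels from 0 to 10, arbitrary units
best_effort = max(efforts, key=enbi)
print(f"ENBI peaks at effort {best_effort:.1f}: ${enbi(best_effort):,.0f}")
```

The peak falls well short of maximum effort, which illustrates why small, iterative measurements at the steep part of the value curve tend to pay off.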


The Measurement Inversion

[Figure: Measurement Attention vs. Relevance, comparing typical attention with economic relevance]

After the information values for over 4,000 variables were computed, a pattern emerged.

The highest-value measurements were almost never measured, while most measurement effort was spent on less relevant factors

Costs were measured more than the more uncertain benefits

Small "hard" benefits would be measured more than large "soft" benefits

Also, we found that, if anything, fewer measurements were required after the information values were known.

See my article "The IT Measurement Inversion" in CIO Magazine (it's also on my website at www.hubbardresearch.com under the articles link)


Next Step: Observations

Now that we know what to measure, we can think of observations that would reduce uncertainty

The value of the information limits what methods we should use, but we have a variety of methods available

Take the Nike Method: "Just Do It". Don't let imagined difficulties get in the way of starting observations


Some Useful Suggestions for Making Empirical Observations

It has been done before

You have more data than you think

You need less data than you think

It is more economical than you think

The existence of noise is not a lack of signal

"It's amazing what you can see when you look" - Yogi Berra


Statistics Goes to War

Several clever sampling methods exist that can measure more with less data than you might think

Examples: estimating the population of fish in the ocean, estimating the number of tanks created by the Germans in WWII, extremely small samples, etc.
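Two of these examples have well-known textbook estimators, sketched below. The slide does not say which formulas the book uses, so these are stand-ins: the standard serial-number estimator for the tank problem and the basic catch-and-recatch (Lincoln-Petersen) estimator for fish. The sample data are hypothetical.

```python
def estimate_total_from_serials(serial_numbers):
    """Serial-number estimate: largest serial m from k samples gives m + m/k - 1."""
    k = len(serial_numbers)
    m = max(serial_numbers)
    return m + m / k - 1

def estimate_population_by_recapture(tagged_first, caught_second, tagged_in_second):
    """Catch-and-recatch estimate: tagged_first * caught_second / tagged_in_second."""
    return tagged_first * caught_second / tagged_in_second

# Hypothetical serial numbers read from four captured tanks.
tanks = estimate_total_from_serials([19, 40, 42, 60])
# Hypothetical fish survey: tag 1,000 fish, later catch 1,000 and find 50 tagged.
fish = estimate_population_by_recapture(1000, 1000, 50)
print(f"Estimated tanks produced: {tanks:.0f}; estimated fish: {fish:,.0f}")
```

Both estimates rest on very little data, four serial numbers in one case and a single recapture in the other, which is the point of the slide.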


Measuring to the Threshold

Measurements usually have value because there is some point where the quantity makes a difference

It's often much harder to ask "How much is X?" than "Is X enough?"

[Figure: chart of the chance the median is below the threshold (vertical axis, 0.1% to 50%), with one curve per number sampled (2 to 20, top row) and dashed vertical lines for the number of samples below the threshold (0 to 10, bottom row)]

1. Find the curve beneath the number of samples taken

2. Identify the dashed line marked by the number of samples that fell below the threshold

3. Follow the curve identified in step 1 until it intersects the vertical dashed line identified in step 2.

4. Find the value on the vertical axis directly left of the point identified in step 3; this value is the chance the median of the population is below the threshold

Measuring to the threshold

Use this chart when using small samples to determine the probability that the median of a population is below a defined threshold

Example: You want to determine how much time your staff spends on one activity. You sample 12 of them and only two spend less than 1 hour a week at this activity. What is the chance that the median time all staff spend is less than 1 hour per week? Look up 12 on the top row, follow the curve until it intersects the "2" line on the bottom row, and look up the number to the left. The answer is just over 1%, so the median is very likely more than 1 hour per week.
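The chart reading can also be checked with a short calculation. The slide does not state the formula behind the chart; the sketch below assumes a uniform prior on the share of the population below the threshold, which reduces to a binomial sum and agrees with the "just over 1%" reading for 12 samples with 2 below the threshold. The function name is illustrative.

```python
from math import comb

def chance_median_below(n_sampled, n_below):
    """Chance that more than half the population is below the threshold.

    Assumes a uniform prior on the population share below the threshold; the
    posterior tail then equals P(Binomial(n_sampled + 1, 0.5) <= n_below).
    """
    favorable = sum(comb(n_sampled + 1, i) for i in range(n_below + 1))
    return favorable / 2 ** (n_sampled + 1)

chance = chance_median_below(12, 2)
print(f"Chance the median is below the threshold: {chance:.2%}")
```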

The Math-less Statistics Table

Measurement is based on observation, and most observations are just samples

Reducing your uncertainty with random samples is not made intuitive in most statistics texts

This table makes computing a 90% confidence interval easy
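The table itself did not survive in the extracted text. As a stand-in, the sketch below uses a standard order-statistic result, which is not necessarily how the author's table was built: for a random sample of size n, it finds the largest rank r such that the r-th smallest and r-th largest values bound the population median with at least 90% confidence.

```python
from math import comb

def rank_for_90ci_of_median(n):
    """Largest r whose r-th smallest and r-th largest samples give a >= 90% interval.

    Returns 0 when the sample is too small for any such interval.
    """
    rank = 0
    for r in range(1, n // 2 + 1):
        # Chance that fewer than r samples fall below the true median (one tail).
        tail = sum(comb(n, i) for i in range(r)) / 2 ** n
        if tail <= 0.05:
            rank = r
    return rank

for n in (5, 8, 11):
    r = rank_for_90ci_of_median(n)
    print(f"n={n}: use the {r}th smallest and {r}th largest values")
```

With five samples, for example, the smallest and largest values alone already bound the median with more than 90% confidence, and no further arithmetic is needed.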


The Simplest Method

Bayesian methods in statistics use new information to update prior knowledge

Bayesian methods can be even more elaborate than other statistical methods, BUT...

It turns out that calibrated people are already mostly instinctively Bayesian

The instinctive Bayesian approach:

Assess your initial subjective uncertainty with a calibrated probability

Gather and study new information about the topic (it could be qualitative or even tangentially related)

Give another subjective calibrated probability assessment with this new information

In studies where people were asked to do this, their results were usually not irrational compared to what would be computed with Bayesian statistics, and calibrated people do even better
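For comparison with the instinctive approach, the formal update for a single yes/no hypothesis is Bayes' theorem. The sketch below is a minimal illustration; the function name and all probabilities are hypothetical.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Posterior probability of a hypothesis after seeing one piece of evidence."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)

# Hypothetical: a 30% prior, and evidence that is four times as likely if the hypothesis is true.
posterior = bayes_update(prior=0.30, p_evidence_if_true=0.80, p_evidence_if_false=0.20)
print(f"Updated probability: {posterior:.1%}")
```

A calibrated estimator's second subjective assessment can be compared against a figure like this to see how close instinct comes to the computed update.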

Comparison of Methods

Traditional non-Bayesian statistics (what you probably learned in the first semester of stats) assumes you knew nothing prior to the samples you took. This is almost never true in reality

Most un-calibrated experts are overconfident and slightly overemphasize new information

Calibrated experts are not overconfident, but slightly ignore prior knowledge

Bayesian analysis is the perfect balance: neither under- nor overconfident, and it uses both new and old information


[Figure: two-axis chart. One axis runs from "Ignores prior knowledge; emphasizes new data" to "Ignores new data; emphasizes prior knowledge". The other runs from "Overconfident (stated uncertainty is lower than rational)" to "Under-confident (stated uncertainty is higher than rational)". Region labels: Stubborn; Gullible; Overly Cautious; Vacillating, Indecisive. Plotted points: Calibrated Expert, Bayesian, Typical Un-calibrated Expert, Non-Bayesian Statistics]

Analyzing the Distribution

[Figure: histogram of Return on Investment (ROI), horizontal axis from -25% to 125%, marking ROI = 0%, the expected ROI, the risk of negative ROI, and the probability of positive ROI]

How are you assessing the resulting histogram from a Monte Carlo simulation?

Is this a "good" distribution or a "bad" one? How would you know?
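The quantities marked on such a histogram can be read straight from simulation output. The sketch below is a toy Monte Carlo run, not a model from the presentation: it assumes normally distributed benefit and cost described by hypothetical 90% confidence intervals, then reports the expected ROI and the risk of a negative ROI.

```python
import random
from statistics import NormalDist, mean

Z_90 = NormalDist().inv_cdf(0.95)  # about 1.645 standard deviations per side

def sample_from_90ci(rng, lower, upper):
    """Draw from a normal distribution whose central 90% spans lower to upper."""
    return rng.gauss((lower + upper) / 2, (upper - lower) / (2 * Z_90))

def simulate_roi(n_trials=10_000, seed=1):
    rng = random.Random(seed)
    rois = []
    for _ in range(n_trials):
        benefit = sample_from_90ci(rng, 1.0, 4.0)  # hypothetical 90% CI, $ millions
        cost = sample_from_90ci(rng, 1.5, 2.5)     # hypothetical 90% CI, $ millions
        rois.append((benefit - cost) / cost)
    return rois

rois = simulate_roi()
expected_roi = mean(rois)
risk_of_negative_roi = sum(r < 0 for r in rois) / len(rois)
print(f"Expected ROI: {expected_roi:.0%}; risk of negative ROI: {risk_of_negative_roi:.0%}")
```

These two numbers summarize the histogram, but they do not by themselves say whether the investment is acceptable; that depends on how much risk is tolerable for the expected return.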

Quantifying Risk Aversion


The simplest element of Harry Markowitz's Nobel Prize-winning method, Modern Portfolio Theory, is documenting how much risk an investor accepts for a given return.

The "Investment Boundary" states how much risk an investor is willing to accept for a given return.

For our purposes, we modified Markowitz's approach a bit.

[Figure: risk/return chart showing the Acceptable Risk/Return Boundary, the Investment Region, and a plotted Investment]

Approach Summary

Define Decision Model

Calibrate Estimators

Populate Model with Calibrated Estimates & Measurements

Conduct Value of Information Analysis (VIA)

Measure according to VIA results and update model

Analyze Remaining Risk

Optimize Decision


Connecting The Dots

The EPA needed to compute the ROI of the Safe Drinking Water Information System (SDWIS)

As with any AIE project, we built a spreadsheet model that connected the expected effects of the system to relevant impacts, in this case public health and its economic value


Reactions: Safe Water

"I didn't think that just defining the problem quantitatively would result in something that eloquent. I wasn't getting my point across and the AIE approach communicated the benefits much better." - Jeff Bryan, SDWIS Program Chief

"Until [AIE], nobody understood the concept of the value of the information and what to look for. They had to try to measure everything, couldn't afford it, so opted for nothing."

"Translating software to environmental and health impacts was amazing. I think people were frankly stunned anyone could make that connection."

"The result I found striking was the level of agreement of people with disparate views of what should be done. From my view, where consensus is difficult to achieve, the agreement was striking." - Mark Day, Deputy CIO and CTO for the Office of Environmental Information


Forecasting Fuel for Battle

The US Marine Corps, with the Office of Naval Research, needed a better method for forecasting fuel for wartime operations

The VIA showed that the big uncertainty was really supply route conditions, not whether they are engaging the enemy

Consequently, we performed a series of experiments with supply trucks rigged with GPS and fuel-flow meters.


Reactions: Fuel for the Marines

"The biggest surprise was that we can save so much fuel. We freed up vehicles because we didn't have to move as much fuel. For a logistics person that's critical. Now vehicles that moved fuel can move ammunition." - Luis Torres, Fuel Study Manager, Office of Naval Research

"What surprised me was that [the model] showed most fuel was burned on logistics routes. The study even uncovered that tank operators would not turn tanks off if they didn't think they could get replacement starters. That's something that a logistician in a hundred years probably wouldn't have thought of." - Chief Warrant Officer Terry Kunneman, Bulk Fuel Planning, HQ Marine Corps


Final Tips

Learn about calibration, computing information values, and risk/return tradeoffs

You can use the information value calculations within @RISK

Don't let "exception anxiety" cause you to avoid any observations at all: the existence of noise does not mean the lack of signal

Just do it: you learn about how to measure it by just starting to take some observations


Questions?

Doug Hubbard

Hubbard Decision Research

dwhubbard@hubbardresearch.com

www.hubbardresearch.com

630 858 2788
