Documenti di Didattica

Documenti di Professioni

Documenti di Cultura

Logit Probit

Caricato da

Marko NikolicTitolo originale

Copyright

Formati disponibili

Condividi questo documento

Condividi o incorpora il documento

Hai trovato utile questo documento?

Questo contenuto è inappropriato?

Segnala questo documentoCopyright:

Formati disponibili

Logit Probit

Caricato da

Marko NikolicCopyright:

Formati disponibili

1

Logit/Probit Models

2

Making sense of the decision rule

Suppose we have a kid with great scores,

great grades, etc.

For this kid, x

i

is large.

What will prevent admission? Only a large

negative

i

What is the probability of observing a large

negative

i

? Very small.

Most likely admitted. We estimate a large

probability

3

Distribution of Epsilon

0.00

0.10

0.20

0.30

0.40

0.50

-3 -2 -1 0 1 2 3

x -x

Values of

That will prevent admission

Values of that would allow admission

4

Another example

Suppose we have a kid with bad scores.

For this kid, x

i

is small (even negative).

What will allow admission? Only a large

positive

i

What is the probability of observing a large

positive

i

? Very small.

Most likely, not admitted, so, we estimate

a small probability

5

Distribution of Epsilon

0.00

0.10

0.20

0.30

0.40

0.50

-3 -2 -1 0 1 2 3

x

-x

Values of

that would

allow

admission

Values of

that would

prevent

admission

6

Normal (probit) Model

is distributed as a standard normal

Mean zero

Variance 1

Evaluate probability (y=1)

Pr(y

i

=1) = Pr(

i

> - x

i

) = 1 (-x

i

)

Given symmetry: 1 (-x

i

) = (x

i

)

Evaluate probability (y=0)

Pr(y

i

=0) = Pr(

i

- x

i

) = (-x

i

)

Given symmetry: (-x

i

) = 1 - (x

i

)

7

Summary

Pr(y

i

=1) = (x

i

)

Pr(y

i

=0) = 1 -(x

i

)

Notice that (a) is increasing a.

Therefore, if the xs increases the

probability of observing y, we would

expect the coefficient on that variable to

be (+)

8

The standard normal assumption

(variance=1) is not critical

In practice, the variance may be not equal

to 1, but given the math of the problem, we

cannot separately identify the variance.

9

Logit

PDF: f(x) = exp(x)/[1+exp(x)]

2

CDF: F(a) = exp(a)/[1+exp(a)]

Symmetric, unimodal distribution

Looks a lot like the normal

Incredibly easy to evaluate the CDF and PDF

Mean of zero, variance > 1 (more variance

than normal)

10

Evaluate probability (y=1)

Pr(y

i

=1) = Pr(

i

> - x

i

) = 1 F(-x

i

)

Given symmetry: 1 F(-x

i

) = F(x

i

)

F(x

i

) = exp(x

i

)/(1+exp(x

i

))

11

Evaluate probability (y=0)

Pr(y

i

=0) = Pr(

i

- x

i

) = F(-x

i

)

Given symmetry: F(-x

i

) = 1 - F(x

i

)

1 - F(x

i

) = 1 /(1+exp(x

i

))

In summary, when

i

is a logistic

distribution

Pr(y

i

=1) = exp(x

i

)/(1+exp(x

i

))

Pr(y

i

=0) = 1/(1+exp(x

i

))

12

STATA Resources

Discrete Outcomes

Regression Models for Categorical

Dependent Variables Using STATA

J. Scott Long and Jeremy Freese

Available for sale from STATA website for

$52 (www.stata.com)

Post-estimation subroutines that translate

results

Do not need to buy the book to use the

subroutines

13

In STATA command line type

net search spost

Will give you a list of available programs to

download

One is

Spostado from http://www.indiana.edu/~jslsoc/stata

Click on the link and install the files

14

Example: Workplace smoking

bans

Smoking supplements to 1991 and 1993

National Health Interview Survey

Asked all respondents whether they

currently smoke

Asked workers about workplace tobacco

policies

Sample: indoor workers

Key variables: current smoking and

whether they faced a workplace ban

15

Data: workplace1.dta

Sample program: workplace1.doc

Results: workplace1.log

16

Description of variables in data

. desc;

storage display value

variable name type format label variable label

------------------------------------------------------------------------

> -

smoker byte %9.0g is current smoking

worka byte %9.0g has workplace smoking bans

age byte %9.0g age in years

male byte %9.0g male

black byte %9.0g black

hispanic byte %9.0g hispanic

incomel float %9.0g log income

hsgrad byte %9.0g is hs graduate

somecol byte %9.0g has some college

college float %9.0g

-----------------------------------------------------------------------

17

Summary statistics

sum;

Variable | Obs Mean Std. Dev. Min Max

-------------+--------------------------------------------------------

smoker | 16258 .25163 .433963 0 1

worka | 16258 .6851396 .4644745 0 1

age | 16258 38.54742 11.96189 18 87

male | 16258 .3947595 .488814 0 1

black | 16258 .1119449 .3153083 0 1

-------------+--------------------------------------------------------

hispanic | 16258 .0607086 .2388023 0 1

incomel | 16258 10.42097 .7624525 6.214608 11.22524

hsgrad | 16258 .3355271 .4721889 0 1

somecol | 16258 .2685447 .4432161 0 1

college | 16258 .3293763 .4700012 0 1

18

. * run a linear probability model for comparison purposes;

. * estimate white standard errors to control for heteroskedasticity;

. reg smoker age incomel male black hispanic

> hsgrad somecol college worka, robust;

Regression with robust standard errors Number of obs = 16258

F( 9, 16248) = 99.26

Prob > F = 0.0000

R-squared = 0.0488

Root MSE = .42336

------------------------------------------------------------------------------

| Robust

smoker | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

age | -.0004776 .0002806 -1.70 0.089 -.0010276 .0000725

incomel | -.0287361 .0047823 -6.01 0.000 -.03811 -.0193621

male | .0168615 .0069542 2.42 0.015 .0032305 .0304926

black | -.0356723 .0110203 -3.24 0.001 -.0572732 -.0140714

hispanic | -.070582 .0136691 -5.16 0.000 -.097375 -.043789

hsgrad | -.0661429 .0162279 -4.08 0.000 -.0979514 -.0343345

somecol | -.1312175 .0164726 -7.97 0.000 -.1635056 -.0989293

college | -.2406109 .0162568 -14.80 0.000 -.272476 -.2087459

worka | -.066076 .0074879 -8.82 0.000 -.080753 -.051399

_cons | .7530714 .0494255 15.24 0.000 .6561919 .8499509

------------------------------------------------------------------------------

Heteroskedastic consistent

Standard errors

Very low R

2

, typical in LP models

Since OLS

Report t-stats

19

. * run probit model;

. probit smoker age incomel male black hispanic

> hsgrad somecol college worka;

Iteration 0: log likelihood = -9171.443

Iteration 1: log likelihood = -8764.068

Iteration 2: log likelihood = -8761.7211

Iteration 3: log likelihood = -8761.7208

Probit estimates Number of obs = 16258

LR chi2(9) = 819.44

Prob > chi2 = 0.0000

Log likelihood = -8761.7208 Pseudo R2 = 0.0447

------------------------------------------------------------------------------

smoker | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

age | -.0012684 .0009316 -1.36 0.173 -.0030943 .0005574

incomel | -.092812 .0151496 -6.13 0.000 -.1225047 -.0631193

male | .0533213 .0229297 2.33 0.020 .0083799 .0982627

black | -.1060518 .034918 -3.04 0.002 -.17449 -.0376137

hispanic | -.2281468 .0475128 -4.80 0.000 -.3212701 -.1350235

hsgrad | -.1748765 .0436392 -4.01 0.000 -.2604078 -.0893453

somecol | -.363869 .0451757 -8.05 0.000 -.4524118 -.2753262

college | -.7689528 .0466418 -16.49 0.000 -.860369 -.6775366

worka | -.2093287 .0231425 -9.05 0.000 -.2546873 -.1639702

_cons | .870543 .154056 5.65 0.000 .5685989 1.172487

------------------------------------------------------------------------------

Same syntax as REG but with probit

Converges rapidly for most

problems

Report z-statistics

Instead of t-stats

Test that all non-constant

Terms are 0

20

. dprobit smoker age incomel male black hispanic

> hsgrad somecol college worka;

Probit regression, reporting marginal effects Number of obs = 16258

LR chi2(9) = 819.44

Prob > chi2 = 0.0000

Log likelihood = -8761.7208 Pseudo R2 = 0.0447

------------------------------------------------------------------------------

smoker | dF/dx Std. Err. z P>|z| x-bar [ 95% C.I. ]

---------+--------------------------------------------------------------------

age | -.0003951 .0002902 -1.36 0.173 38.5474 -.000964 .000174

incomel | -.0289139 .0047173 -6.13 0.000 10.421 -.03816 -.019668

male*| .0166757 .0071979 2.33 0.020 .39476 .002568 .030783

black*| -.0320621 .0102295 -3.04 0.002 .111945 -.052111 -.012013

hispanic*| -.0658551 .0125926 -4.80 0.000 .060709 -.090536 -.041174

hsgrad*| -.053335 .013018 -4.01 0.000 .335527 -.07885 -.02782

somecol*| -.1062358 .0122819 -8.05 0.000 .268545 -.130308 -.082164

college*| -.2149199 .0114584 -16.49 0.000 .329376 -.237378 -.192462

worka*| -.0668959 .0075634 -9.05 0.000 .68514 -.08172 -.052072

---------+--------------------------------------------------------------------

obs. P | .25163

pred. P | .2409344 (at x-bar)

------------------------------------------------------------------------------

(*) dF/dx is for discrete change of dummy variable from 0 to 1

z and P>|z| correspond to the test of the underlying coefficient being 0

21

. mfx compute;

Marginal effects after probit

y = Pr(smoker) (predict)

= .24093439

------------------------------------------------------------------------------

variable | dy/dx Std. Err. z P>|z| [ 95% C.I. ] X

---------+--------------------------------------------------------------------

age | -.0003951 .00029 -1.36 0.173 -.000964 .000174 38.5474

incomel | -.0289139 .00472 -6.13 0.000 -.03816 -.019668 10.421

male*| .0166757 .0072 2.32 0.021 .002568 .030783 .39476

black*| -.0320621 .01023 -3.13 0.002 -.052111 -.012013 .111945

hispanic*| -.0658551 .01259 -5.23 0.000 -.090536 -.041174 .060709

hsgrad*| -.053335 .01302 -4.10 0.000 -.07885 -.02782 .335527

somecol*| -.1062358 .01228 -8.65 0.000 -.130308 -.082164 .268545

college*| -.2149199 .01146 -18.76 0.000 -.237378 -.192462 .329376

worka*| -.0668959 .00756 -8.84 0.000 -.08172 -.052072 .68514

------------------------------------------------------------------------------

(*) dy/dx is for discrete change of dummy variable from 0 to 1

Males are 1.7 percentage points more likely to smoke

Those w/ college degree 21.5 % points

Less likely to smoke

10 years of age reduces smoking rates by

4 tenths of a percentage point

10 percent increase in income will reduce smoking

By .29 percentage points

22

. * get marginal effect/treatment effects for specific person;

. * male, age 40, college educ, white, without workplace smoking ban;

. * if a variable is not specified, its value is assumed to be;

. * the sample mean. in this case, the only variable i am not;

. * listing is mean log income;

. prchange, x(male=1 age=40 black=0 hispanic=0 hsgrad=0 somecol=0 worka=0);

probit: Changes in Predicted Probabilities for smoker

min->max 0->1 -+1/2 -+sd/2 MargEfct

age -0.0327 -0.0005 -0.0005 -0.0057 -0.0005

incomel -0.1807 -0.0314 -0.0348 -0.0266 -0.0349

male 0.0198 0.0198 0.0200 0.0098 0.0200

black -0.0390 -0.0390 -0.0398 -0.0126 -0.0398

hispanic -0.0817 -0.0817 -0.0855 -0.0205 -0.0857

hsgrad -0.0634 -0.0634 -0.0656 -0.0310 -0.0657

somecol -0.1257 -0.1257 -0.1360 -0.0605 -0.1367

college -0.2685 -0.2685 -0.2827 -0.1351 -0.2888

worka -0.0753 -0.0753 -0.0785 -0.0365 -0.0786

23

Min->Max: change in predicted probability as x changes from its

minimum to its maximum

0->1: change in pred. prob. as x changes from 0 to 1

-+1/2: change in predicted probability as x changes from 1/2

unit below base value to 1/2 unit above

-+sd/2: change in predicted probability as x changes from 1/2

standard dev below base to 1/2 standard dev above

MargEfct: the partial derivative of the predicted

probability/rate with respect to a given independent variable

24

. * using a wald test, test the null hypothesis that;

. * all the education coefficients are zero;

. test hsgrad somecol college;

( 1) hsgrad = 0

( 2) somecol = 0

( 3) college = 0

chi2( 3) = 504.78

Prob > chi2 = 0.0000

25

. * how to run the same tets with a -2 log like test;

. * estimate the unresticted model and save the estimates ;

. * in urmodel;

. probit smoker age incomel male black hispanic

> hsgrad somecol college worka;

Iteration 0: log likelihood = -9171.443

Iteration 1: log likelihood = -8764.068

Iteration 2: log likelihood = -8761.7211

Iteration 3: log likelihood = -8761.7208

Delete some results

. estimates store urmodel;

. * estimate the restricted model. save results in rmodel;

. probit smoker age incomel male black hispanic

> worka;

Iteration 0: log likelihood = -9171.443

Iteration 1: log likelihood = -9022.2473

Iteration 2: log likelihood = -9022.1031

Delete some results

. lrtest urmodel rmodel;

likelihood-ratio test LR chi2(3) = 520.76

(Assumption: rmodel nested in urmodel) Prob > chi2 = 0.0000

26

Comparing Marginal Effects

Variable LP Probit Logit

age -0.00040 -0.00048 -0.00048

incomel -0.0289 -0.0287 -0.0276

male 0.0167 0.0168 0.0172

Black -0.0321 -0.0357 -0.0342

hispanic -0.0658 -0.0706 -0.0602

hsgrad -0.0533 -0.0661 -0.0514

college -0.2149 -0.2406 -0.2121

worka -0.0669 -0.0661 -0.0658

27

When will results differ?

Normal and logit PDF/CDF look:

Similar in the mid point of the distribution

Different in the tails

You obtain more observations in the tails

of the distribution when

Samples sizes are large

approaches 1 or 0

These situations will more likely produce

differences in estimates

28

probit smoker worka age incomel male black hispanic hsgrad somecol college;

matrix betat=e(b); * get beta from probit (1 x k);

matrix beta=betat';

matrix covp=e(V); * get v/c matric from probit (k x k);

* get means of x -- call it xbar (k x 1);

* must be the same order as in the probit statement;

matrix accum zz = worka age incomel male black hispanic hsgrad somecol college,

means(xbart);

matrix xbar=xbart'; * transpose beta;

matrix xbeta=beta'*xbar; * get xbeta (scalar);

matrix pdf=normalden(xbeta[1,1]); * evaluate std normal pdf at xbarbeta;

matrix k=rowsof(beta); * get number of covariates;

matrix Ik=I(k[1,1]); * construct I(k);

matrix G=Ik-xbeta*beta*xbar'; * construct G;

matrix v_c=(pdf*pdf)*G*covp*G'; * get v-c matrix of marginal effects;

matrix me= beta*pdf; * get marginal effects;

matrix se_me1=cholesky(diag(vecdiag(v_c))); * get square root of main diag;

matrix se_me=vecdiag(se_me1)'; *take diagonal values;

matrix z_score=vecdiag(diag(me)*inv(diag(se_me)))'; * get z score;

matrix results=me,se_me,z_score; * construct results matrix;

matrix colnames results=marg_eff std_err z_score; * define column names;

matrix list results; * list results;

29

results[10,3]

marg_eff std_err z_score

worka -.06521255 .00720374 -9.0525984

age -.00039515 .00029023 -1.3615156

incomel -.02891389 .00471728 -6.129356

male .01661127 .00714305 2.3255154

black -.03303852 .0108782 -3.0371321

hispanic -.07107496 .01479806 -4.8029926

hsgrad -.05447959 .01359844 -4.0063111

somecol -.11335675 .01408096 -8.0503576

college -.23955322 .0144803 -16.543383

_cons .2712018 .04808183 5.6404217

------------------------------------------------------------------------------

smoker | dF/dx Std. Err. z P>|z| x-bar [ 95% C.I. ]

---------+--------------------------------------------------------------------

age | -.0003951 .0002902 -1.36 0.173 38.5474 -.000964 .000174

incomel | -.0289139 .0047173 -6.13 0.000 10.421 -.03816 -.019668

male*| .0166757 .0071979 2.33 0.020 .39476 .002568 .030783

black*| -.0320621 .0102295 -3.04 0.002 .111945 -.052111 -.012013

hispanic*| -.0658551 .0125926 -4.80 0.000 .060709 -.090536 -.041174

hsgrad*| -.053335 .013018 -4.01 0.000 .335527 -.07885 -.02782

somecol*| -.1062358 .0122819 -8.05 0.000 .268545 -.130308 -.082164

college*| -.2149199 .0114584 -16.49 0.000 .329376 -.237378 -.192462

worka*| -.0668959 .0075634 -9.05 0.000 .68514 -.08172 -.052072

---------+--------------------------------------------------------------------

30

* this is an example of a marginal effect for a dichotomous outcome;

* in this case, set the 1st variable worka as 1 or 0;

matrix x1=xbar;

matrix x1[1,1]=1;

matrix x0=xbar;

matrix x0[1,1]=0;

matrix xbeta1=beta'*x1;

matrix xbeta0=beta'*x0;

matrix prob1=normal(xbeta1[1,1]);

matrix prob0=normal(xbeta0[1,1]);

matrix me_1=prob1-prob0;

matrix pdf1=normalden(xbeta1[1,1]);

matrix pdf0=normalden(xbeta0[1,1]);

matrix G1=pdf1*x1 - pdf0*x0;

matrix v_c1=G1'*covp*G1;

matrix se_me_1=sqrt(v_c1[1,1]);

* marginal effect of workplace bans;

matrix list me_1;

* standard error of workplace a;

matrix list se_me_1;

31

symmetric me_1[1,1]

c1

r1 -.06689591

. * standard error of workplace a;

. matrix list se_me_1;

symmetric se_me_1[1,1]

c1

r1 .00756336

------------------------------------------------------------------------------

smoker | dF/dx Std. Err. z P>|z| x-bar [ 95% C.I. ]

---------+--------------------------------------------------------------------

age | -.0003951 .0002902 -1.36 0.173 38.5474 -.000964 .000174

incomel | -.0289139 .0047173 -6.13 0.000 10.421 -.03816 -.019668

male*| .0166757 .0071979 2.33 0.020 .39476 .002568 .030783

black*| -.0320621 .0102295 -3.04 0.002 .111945 -.052111 -.012013

hispanic*| -.0658551 .0125926 -4.80 0.000 .060709 -.090536 -.041174

hsgrad*| -.053335 .013018 -4.01 0.000 .335527 -.07885 -.02782

somecol*| -.1062358 .0122819 -8.05 0.000 .268545 -.130308 -.082164

college*| -.2149199 .0114584 -16.49 0.000 .329376 -.237378 -.192462

worka*| -.0668959 .0075634 -9.05 0.000 .68514 -.08172 -.052072

---------+--------------------------------------------------------------------

32

Logit and Standard Normal CDF

0.00

0.05

0.10

0.15

0.20

0.25

0.30

0.35

0.40

0.45

-7 -5 -3 -1 1 3 5 7

X

Y

Standard Normal

Logit

33

Pseudo R

2

LL

k

log likelihood with all variables

LL

1

log likelihood with only a constant

0 > LL

k

> LL

1

so | LL

k

| < |LL

1

|

Pseudo R

2

= 1 - |LL

1

/LL

k

|

Bounded between 0-1

Not anything like an R

2

from a regression

34

Predicting Y

Let b be the estimated value of

For any candidate vector of x

i

, we can predict

probabilities, P

i

P

i

= (x

i

b)

Once you have P

i

, pick a threshold value, T, so

that you predict

Y

p

= 1 if P

i

> T

Y

p

= 0 if P

i

T

Then compare, fraction correctly predicted

35

Question: what value to pick for T?

Can pick .5 what some textbooks

suggest

Intuitive. More likely to engage in the activity

than to not engage in it

When is small (large), this criteria does a

poor job of predicting Y

i

=1 (Y

i

=0)

36

*predict probability of smoking;

predict pred_prob_smoke;

* get detailed descriptive data about predicted

prob;

sum pred_prob, detail;

* predict binary outcome with 50% cutoff;

gen pred_smoke1=pred_prob_smoke>=.5;

label variable pred_smoke1 "predicted smoking, 50%

cutoff";

* compare actual values;

tab smoker pred_smoke1, row col cell;

37

. predict pred_prob_smoke;

(option p assumed; Pr(smoker))

. * get detailed descriptive data about predicted prob;

. sum pred_prob, detail;

Pr(smoker)

-------------------------------------------------------------

Percentiles Smallest

1% .0959301 .0615221

5% .1155022 .0622963

10% .1237434 .0633929 Obs 16258

25% .1620851 .0733495 Sum of Wgt. 16258

50% .2569962 Mean .2516653

Largest Std. Dev. .0960007

75% .3187975 .5619798

90% .3795704 .5655878 Variance .0092161

95% .4039573 .5684112 Skewness .1520254

99% .4672697 .6203823 Kurtosis 2.149247

Mean of predicted

Y is always close to actual mean

(0.25163 in this case)

Predicted values close

To sample mean of y

No one predicted to have a

High probability of smoking

Because mean of Y closer to 0

38

Some nice properties of the Logit

Outcome, y=1 or 0

Treatment, x=1 or 0

Other covariates, x

Context,

x = whether a baby is born with a low weight

birth

x = whether the mom smoked or not during

pregnancy

39

Risk ratio

RR = Prob(y=1|x=1)/Prob(y=1|x=0)

Differences in the probability of an event

when x is and is not observed

How much does smoking elevate the chance

your child will be a low weight birth

40

Let Y

yx

be the probability y=1 or 0 given

x=1 or 0

Think of the risk ratio the following way

Y

11

is the probability Y=1 when X=1

Y

10

is the probability Y=1 when X=0

Y

11

= RR*Y

10

41

Odds Ratio

OR=A/B = [Y

11

/Y

01

]/[Y

10

/Y

00

]

A = [Pr(Y=1|X=1)/Pr(Y=0|X=1)]

= odds of Y occurring if you are a smoker

B = [Pr(Y=1|X=0)/Pr(Y=0|X=0)]

= odds of Y happening if you are not a smoker

What are the relative odds of Y happening if you do or

do not experience X

42

Suppose Pr(Y

i

=1) = F(

o

+

1

X

i

+

2

Z) and

F is the logistic function

Can show that

OR = exp(

1

) = e

1

This number is typically reported by most

statistical packages

43

Details

Y

11

= exp(

o

+

1

+

2

Z) /(1+ exp(

o

+

1

+

2

Z) )

Y

10

= exp(

o

+

2

Z)/(1+ exp(

o

+

2

Z))

Y

01

= 1 /(1+ exp(

o

+

1

+

2

Z) )

Y

00

= 1/(1+ exp(

o

+

2

Z)

[Y

11

/Y

01

] = exp(

o

+

1

+

2

Z)

[Y

10

/Y

00

] = exp(

o

+

2

Z)

OR=A/B = [Y

11

/Y

01

]/[Y

10

/Y

00

]

= exp(

o

+

1

+

2

Z)/ exp(

o

+

2

Z)

= exp(

1

)

44

Suppose Y is rare, mean is close to 0

Pr(Y=0|X=1) and Pr(Y=0|X=0) are both close

to 1, so they cancel

Therefore, when mean is close to 0

Odds Ratio Risk Ratio

Why is this nice?

45

Population Attributable Risk

PAR

Fraction of outcome Y attributed to X

Let x

s

be the fraction use of x

PAR = (RR 1)x

s

/[(1-x

s

) + RRx

s

]

Derived on next 2 slides

46

Population attributable risk

Average outcome in the population

y

c

= (1-x

s

) Y

10

+ x

s

Y

11

= (1- x

s

)Y

10

+ x

s

(RR)Y

10

Average outcomes are a weighted average of

outcomes for X=0 and X=1

What would the average outcome be in the

absence of X (e.g., reduce smoking rates to 0)?

Y

a

= Y

10

47

Therefore

y

c

= current outcome

Y

a

= Y

10

outcome with zero smoking

PAR = (y

c

Y

a

)/y

c

Substitute definition of Y

a

and y

c

Reduces to (RR 1)x

s

/[(1-x

s

) + RRx

s

]

48

Example: Maternal Smoking and

Low Weight Births

6% births are low weight

< 2500 grams

Average birth is 3300 grams (5.5 lbs)

Maternal smoking during pregnancy has

been identified as a key cofactor

13% of mothers smoke

This number was falling about 1 percentage

point per year during 1980s/90s

Doubles chance of low weight birth

49

Natality detail data

Census of all births (4 million/year)

Annual files starting in the 60s

Information about

Baby (birth weight, length, date, sex, plurality, birth

injuries)

Demographics (age, race, marital, educ of mom)

Birth (who delivered, method of delivery)

Health of mom (smoke/drank during preg, weight

gain)

50

Smoking not available from CA or NY

~3 million usable observations

I pulled .5% random sample from 1995

About 12,500 obs

Variables: birthweight (grams), smoked,

married, 4-level race, 5 level education,

mothers age at birth

51

Notice a few things

13.7% of women smoke

6% have low weight birth

Pr(LBW | Smoke) =10.28%

Pr(LBW |~ Smoke) = 5.36%

RR

= Pr(LBW | Smoke)/ Pr(LBW |~ Smoke)

= 0.1028/0.0536 = 1.92

Raw

Numbers

52

Asking for odds ratios

Logistic y x1 x2;

In this case

xi: logistic lowbw smoked age

married i.educ5 i.race4;

53

PAR

PAR = (RR 1) x

s

/[(1- x

s

) + RR x

s

]

x

s

= 0.137

RR = 1.96

PAR = 0.116

11.6% of low weight births attributed to

maternal smoking

| |

0 1

0

1 1

*

Pr( 1) *

1 1

0.045 5.3/ 22.222 0.239

22.222

D

Y D

| |

o o

|

o

| |

(

= = u = u +

(

= = = = = =

Endowment effect

Ask group to fill out a survey

As a thank you, give them a coffee mug

Have the mug when they fill out the survey

After the survey, offer them a trade of a

candy bar for a mug

Reverse the experiment offer candy bar,

then trade for a mug

Comparison sample give them a choice

of mug/candy after survey is complete

Contrary to simply consumer

choice model

Standard util. theory model assume MRS

between two good is symmetric

Lack of trading suggests an endowment

effect

People value the good more once they own it

Generates large discrepancies between WTP

and WTA

Policy implications

Example:

A) How much are you willing to pay for clean

air?

B) How much do we have to pay you to allow

someone to pollute

Answer to B) orders of magnitude larger than

A)

Prior estimate WTP via A and assume

equals WTA

Thought of as loss aversion

Problem

Artificial situations

Inexperienced may not know value of the

item

Solution: see how experienced actors

behave when they are endowed with

something they can easily value

Two experiments: baseball card shows

and collectible pins

Baseball cards

Two pieces of memorabilia

Game stub from game Cal Ripken Jr set the

record for consecutive games played (vs. KC,

June 14, 1996)

Certificate commemorating Nolan Ryans

300th win

Ask people to fill out a 5 min survey. In

return, they receive one of the pieces, then

ask for a trade

Potrebbero piacerti anche

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (894)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (344)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- Laser B2 Teacher 39 S BookDocumento266 pagineLaser B2 Teacher 39 S Bookkunawo69% (36)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (73)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- Nursing Care PlansDocumento10 pagineNursing Care PlansGracie S. Vergara100% (1)

- Chapter 24 - Logistic RegressionDocumento21 pagineChapter 24 - Logistic RegressionNikhil Gandhi100% (7)

- Chapter 24 - Logistic RegressionDocumento21 pagineChapter 24 - Logistic RegressionNikhil Gandhi100% (7)

- Use Acupressure to Conceive FasterDocumento15 pagineUse Acupressure to Conceive Fastersale18100% (1)

- Rudraksha - Scientific FactsDocumento20 pagineRudraksha - Scientific FactsAkash Agarwal100% (3)

- Copia de Tissue Response To Dental CariesDocumento7 pagineCopia de Tissue Response To Dental Cariesjorefe12Nessuna valutazione finora

- Black Girl Magic: Over 200+ AffirmationsDocumento229 pagineBlack Girl Magic: Over 200+ AffirmationsDestiny S. Harris100% (2)

- Pit AnjaDocumento1 paginaPit AnjaMarko NikolicNessuna valutazione finora

- REPORT Marko NikolicDocumento5 pagineREPORT Marko NikolicMarko NikolicNessuna valutazione finora

- Email 1Documento1 paginaEmail 1Marko NikolicNessuna valutazione finora

- Essay FCEDocumento13 pagineEssay FCEMarko NikolicNessuna valutazione finora

- Exit! Place Where You Enter To Experience Farfetched MemoriesDocumento1 paginaExit! Place Where You Enter To Experience Farfetched MemoriesMarko NikolicNessuna valutazione finora

- English WritingDocumento4 pagineEnglish WritingMarko NikolicNessuna valutazione finora

- ReciDocumento1 paginaReciMarko NikolicNessuna valutazione finora

- FilmoviDocumento1 paginaFilmoviMarko NikolicNessuna valutazione finora

- Hi PatDocumento2 pagineHi PatMarko NikolicNessuna valutazione finora

- Esej 1Documento1 paginaEsej 1Marko NikolicNessuna valutazione finora

- English WritingDocumento4 pagineEnglish WritingMarko NikolicNessuna valutazione finora

- Travel Time Reliability: Making It There On Time, All The TimeDocumento6 pagineTravel Time Reliability: Making It There On Time, All The TimeMarko NikolicNessuna valutazione finora

- A Day in BelgradeDocumento2 pagineA Day in BelgradeMarko NikolicNessuna valutazione finora

- Ej 919857Documento11 pagineEj 919857Marko NikolicNessuna valutazione finora

- Literatur ADocumento2 pagineLiteratur AMarko NikolicNessuna valutazione finora

- JMP Part023Documento92 pagineJMP Part023Marko Nikolic100% (1)

- Logistic Regression Problems with LPMDocumento11 pagineLogistic Regression Problems with LPMMarko NikolicNessuna valutazione finora

- Zao Glas I Ptice Nose.Documento1 paginaZao Glas I Ptice Nose.Marko NikolicNessuna valutazione finora

- TemperaturaDocumento40 pagineTemperaturaMarko NikolicNessuna valutazione finora

- Using Microsoft Excel Worksheet Functions in Visual BasicDocumento1 paginaUsing Microsoft Excel Worksheet Functions in Visual BasicMarko NikolicNessuna valutazione finora

- A Laplace TransformDocumento1 paginaA Laplace TransformMarko NikolicNessuna valutazione finora

- Sub KorakDocumento4 pagineSub KorakMarko NikolicNessuna valutazione finora

- Aço X6NiCrTiMoVB25!15!2 - 1.4980 Austenitic SteelDocumento2 pagineAço X6NiCrTiMoVB25!15!2 - 1.4980 Austenitic SteelMoacir MachadoNessuna valutazione finora

- Hotel Housekeeping EQUIPMENTDocumento3 pagineHotel Housekeeping EQUIPMENTsamahjaafNessuna valutazione finora

- Impact of Covid-19 On Audit Quality: Presented byDocumento13 pagineImpact of Covid-19 On Audit Quality: Presented byMST. SADIYA SULTANANessuna valutazione finora

- Moral Character ViolationsDocumento2 pagineMoral Character ViolationsAnne SchindlerNessuna valutazione finora

- Subaru Forester ManualsDocumento636 pagineSubaru Forester ManualsMarko JakobovicNessuna valutazione finora

- EcoLettsandSOM, Dulvy Et Al 2004Documento25 pagineEcoLettsandSOM, Dulvy Et Al 2004Nestor TorresNessuna valutazione finora

- EO On Ban of Fireworks (Integrated)Documento2 pagineEO On Ban of Fireworks (Integrated)Mario Roldan Jr.Nessuna valutazione finora

- WSO 2022 IB Working Conditions SurveyDocumento42 pagineWSO 2022 IB Working Conditions SurveyPhạm Hồng HuếNessuna valutazione finora

- Retail Management PPT1Documento14 pagineRetail Management PPT1Srilekha GubbalaNessuna valutazione finora

- Health and Safety Awareness For Flower Farm WorkersDocumento1 paginaHealth and Safety Awareness For Flower Farm WorkersGerald GwambaNessuna valutazione finora

- Manual Masina de Spalat Slim SamsungDocumento1.020 pagineManual Masina de Spalat Slim SamsungPerfectreviewNessuna valutazione finora

- December - Cost of Goods Sold (Journal)Documento14 pagineDecember - Cost of Goods Sold (Journal)kuro hanabusaNessuna valutazione finora

- SM RSJ 420 800Documento77 pagineSM RSJ 420 800elshan_asgarovNessuna valutazione finora

- Valvula de Leve MasterDocumento20 pagineValvula de Leve Masterguillermo trejosNessuna valutazione finora

- Insurance Principles, Types and Industry in IndiaDocumento10 pagineInsurance Principles, Types and Industry in IndiaAroop PalNessuna valutazione finora

- Pet - WikipediaDocumento12 paginePet - Wikipediabdalcin5512Nessuna valutazione finora

- Montgomery County Ten Year Comprehensive Water Supply and Sewerage Systems Plan (2003)Documento228 pagineMontgomery County Ten Year Comprehensive Water Supply and Sewerage Systems Plan (2003)rebolavNessuna valutazione finora

- Request For Review FormDocumento11 pagineRequest For Review FormJoel MillerNessuna valutazione finora

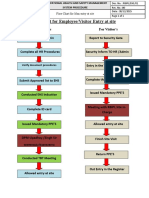

- fLOW CHART FOR WORKER'S ENTRYDocumento2 paginefLOW CHART FOR WORKER'S ENTRYshamshad ahamedNessuna valutazione finora

- 3 Types of Chemical BondsDocumento12 pagine3 Types of Chemical BondsSaediRisquéBriskeyNessuna valutazione finora

- 1 Colmac DX Ammonia Piping Handbook 4th EdDocumento64 pagine1 Colmac DX Ammonia Piping Handbook 4th EdAlbertoNessuna valutazione finora

- SCE Research Paper PDFDocumento12 pagineSCE Research Paper PDFmuoi2002Nessuna valutazione finora

- Forest Fire Detection and Guiding Animals To A Safe Area by Using Sensor Networks and SoundDocumento4 pagineForest Fire Detection and Guiding Animals To A Safe Area by Using Sensor Networks and SoundAnonymous 6iFFjEpzYjNessuna valutazione finora

- XDocumento266 pagineXTrần Thanh PhongNessuna valutazione finora

- Sub Erna RekhaDocumento2 pagineSub Erna Rekhasurabhi mandalNessuna valutazione finora