Vector and Vector Space: The Euclidean Norm or 2-Norm of Vector v

Uploaded by Rajmohan Asokan

3.1.2 Matrix algebra and eigenproblem

Vector and Vector Space

Vector Norm

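For any non-zero vector $v$ we can always find a scalar $\alpha$ such that

$$u = \alpha v, \qquad u^T u = 1,$$

where

$$v = \begin{Bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{Bmatrix}, \qquad v^T v = [v_1 \ \dots \ v_n] \begin{Bmatrix} v_1 \\ \vdots \\ v_n \end{Bmatrix} = v_1^2 + \dots + v_n^2 .$$

$1/\alpha = \sqrt{v^T v}$ is called the Euclidean norm or 2-norm of vector $v$.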
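As a minimal sketch of the normalization described above, the following Python computes the 2-norm and the corresponding unit vector. The function names `euclidean_norm` and `normalize` and the sample vector are illustrative assumptions, not part of the original slides.

```python
import math

def euclidean_norm(v):
    """Return sqrt(v^T v) for a vector given as a list of numbers."""
    return math.sqrt(sum(x * x for x in v))

def normalize(v):
    """Scale v to unit length; the zero vector has no unit vector."""
    norm = euclidean_norm(v)
    if norm == 0.0:
        raise ValueError("the zero vector cannot be normalized")
    return [x / norm for x in v]

v = [3.0, 4.0]             # hypothetical sample vector
print(euclidean_norm(v))   # 5.0
print(normalize(v))        # [0.6, 0.8]
```

The scalar that multiplies v here is the reciprocal of the norm, which is why the zero vector has to be excluded.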

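Example

[Figure: vector $v$ with components $v_1$, $v_2$ and unit vector $u$ with components $u_1$, $u_2$ in the x-y plane, both at angle $\theta$ from the x axis; labels A and B.]

$$v = \begin{Bmatrix} v_1 \\ v_2 \end{Bmatrix}, \qquad u = \begin{Bmatrix} u_1 \\ u_2 \end{Bmatrix} = \frac{v}{\sqrt{v_1^2 + v_2^2}}$$

$$u_1 = \frac{v_1}{\sqrt{v_1^2 + v_2^2}} = \cos\theta, \qquad u_2 = \frac{v_2}{\sqrt{v_1^2 + v_2^2}} = \sin\theta$$

$$\tan\theta = \frac{v_2}{v_1}, \qquad \|v\| = \sqrt{v_1^2 + v_2^2}$$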
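The relation between the components of the unit vector and the angle can be checked numerically. The sketch below is only an illustration; `direction_angle` and `unit_from_angle` are hypothetical helper names. It uses `math.atan2` instead of a plain arctangent of $v_2/v_1$ so that $v_1 = 0$ and all four quadrants are handled.

```python
import math

def direction_angle(v1, v2):
    """Angle theta (radians) between the vector (v1, v2) and the x axis."""
    return math.atan2(v2, v1)

def unit_from_angle(theta):
    """Unit vector (u1, u2) = (cos theta, sin theta)."""
    return (math.cos(theta), math.sin(theta))

theta = direction_angle(1.0, 1.0)   # hypothetical vector v = (1, 1)
u1, u2 = unit_from_angle(theta)
print(math.degrees(theta))          # approximately 45
print(u1, u2)                       # both approximately 0.7071
```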

Q: What if the coordinate system is rotated by an angle $\theta$?

Orthonormal Vectors and Matrices

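Unit Vector

An $n \times 1$ unit vector $e_i$ has all its elements equal to zero except the $i$-th element, which equals unity. That is,

$$e_i^T = [0, \ 0, \ \dots, \ 0, \ 1, \ 0, \ \dots, \ 0]$$

An identity matrix $I$ has its columns (rows) formed by the $n \times 1$ unit vectors:

$$I = [e_1 \ e_2 \ \dots \ e_n]$$

Vectors $u_i$ are orthonormal if

$$u_i^T u_j = \begin{cases} 1, & i = j \\ 0, & i \neq j \end{cases}$$

A matrix $U$ whose columns are orthonormal satisfies

$$U^T U = U U^T = I, \qquad U^T = U^{-1}$$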

For an arbitrary non-zero vector $v$, we can always find an orthonormal matrix $U$ such that

$$U^T v = \sigma e_i,$$

where $\sigma$ is the norm of vector $v$.

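Example

$$v = a_1 e_1 + a_2 e_2 + \dots + a_n e_n$$

$$U = \begin{bmatrix} u_1 & -u_2 \\ u_2 & u_1 \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} = \begin{bmatrix} \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} & \dfrac{-v_2}{\sqrt{v_1^2+v_2^2}} \\ \dfrac{v_2}{\sqrt{v_1^2+v_2^2}} & \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} \end{bmatrix}$$

$$U^T v = \begin{bmatrix} \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} & \dfrac{v_2}{\sqrt{v_1^2+v_2^2}} \\ \dfrac{-v_2}{\sqrt{v_1^2+v_2^2}} & \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} \end{bmatrix} \begin{Bmatrix} v_1 \\ v_2 \end{Bmatrix} = \sqrt{v_1^2+v_2^2} \begin{Bmatrix} 1 \\ 0 \end{Bmatrix} = \sigma e_1$$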

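With the rows of $U^T$ interchanged (the matrix is still orthonormal), the same vector is mapped onto $e_2$:

$$\begin{bmatrix} -u_2 & u_1 \\ u_1 & u_2 \end{bmatrix} \begin{Bmatrix} v_1 \\ v_2 \end{Bmatrix} = \begin{bmatrix} \dfrac{-v_2}{\sqrt{v_1^2+v_2^2}} & \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} \\ \dfrac{v_1}{\sqrt{v_1^2+v_2^2}} & \dfrac{v_2}{\sqrt{v_1^2+v_2^2}} \end{bmatrix} \begin{Bmatrix} v_1 \\ v_2 \end{Bmatrix} = \sqrt{v_1^2+v_2^2} \begin{Bmatrix} 0 \\ 1 \end{Bmatrix} = \sigma e_2$$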
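The 2-D construction of $U$ from the components of $v$ can be sketched in a few lines of Python. This is an illustration under the assumption that $U$ has the rotation form with columns $(u_1, u_2)$ and $(-u_2, u_1)$; the helper names are hypothetical.

```python
import math

def rotation_from_vector(v1, v2):
    """Return U = [[u1, -u2], [u2, u1]] with (u1, u2) = v / ||v||."""
    norm = math.hypot(v1, v2)
    if norm == 0.0:
        raise ValueError("v must be non-zero")
    u1, u2 = v1 / norm, v2 / norm
    return [[u1, -u2], [u2, u1]]

def transpose_times(U, v):
    """Compute U^T v for a 2x2 matrix U and a 2-vector v."""
    return [U[0][0] * v[0] + U[1][0] * v[1],
            U[0][1] * v[0] + U[1][1] * v[1]]

v = [3.0, 4.0]                 # hypothetical sample vector
U = rotation_from_vector(*v)
print(transpose_times(U, v))   # approximately [5.0, 0.0], i.e. ||v|| e_1
```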

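$$\begin{Bmatrix} x' \\ y' \end{Bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{Bmatrix} x \\ y \end{Bmatrix}$$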
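A short sketch of the rotation relation above, assuming the angle is given in radians; the function name `rotate` is an illustrative choice.

```python
import math

def rotate(x, y, theta):
    """Apply [[cos, -sin], [sin, cos]] to the point (x, y)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

xp, yp = rotate(1.0, 0.0, math.pi / 2)
print(xp, yp)   # approximately 0 and 1: the x axis is carried onto the y axis
```

Because the matrix is orthonormal, the length of the vector is unchanged by the rotation.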

Decoupling of Monic 2-DOF system, Principal Axes

$$M^{-1}K = U \, \Omega^2 \, U^{-1} = U \, \Omega^2 \, U^T$$

$$U^T U = U U^T = I, \qquad U^T = U^{-1}$$

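$$U = \begin{bmatrix} u_1 & -u_2 \\ u_2 & u_1 \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

$$U^T = \begin{bmatrix} u_1 & u_2 \\ -u_2 & u_1 \end{bmatrix} = \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix}$$

$$U U^T = U^T U = \begin{bmatrix} \cos^2\theta + \sin^2\theta & \cos\theta\sin\theta - \sin\theta\cos\theta \\ \sin\theta\cos\theta - \cos\theta\sin\theta & \cos^2\theta + \sin^2\theta \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$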
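The orthonormality of the rotation matrix can also be verified numerically. The following is a small sketch with hypothetical helper names (`rotation`, `transpose`, `matmul`) and an arbitrary test angle.

```python
import math

def rotation(theta):
    """2x2 rotation matrix U for angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(A):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Plain matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

U = rotation(math.radians(30.0))
print(matmul(U, transpose(U)))   # close to [[1, 0], [0, 1]] up to rounding
```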

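Coordinate rotation from $(x_1, x_2)$ to $(r_1, r_2)$:

$$\begin{Bmatrix} x_1 \\ x_2 \end{Bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{Bmatrix} r_1 \\ r_2 \end{Bmatrix}$$

[Figure: axes $x_1$, $x_2$ and rotated axes $r_1$, $r_2$, separated by angle $\theta$.]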

Decoupling of Monic 2-DOF system, Principal Axes (contd)

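$$I\ddot{x} + Kx = 0, \qquad \begin{Bmatrix} \ddot{x}_1 \\ \ddot{x}_2 \end{Bmatrix} + K \begin{Bmatrix} x_1 \\ x_2 \end{Bmatrix} = \begin{Bmatrix} 0 \\ 0 \end{Bmatrix} \qquad (1)$$

Let $x = U r$:

$$\begin{Bmatrix} x_1 \\ x_2 \end{Bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{Bmatrix} r_1 \\ r_2 \end{Bmatrix} \qquad (2)$$

Substituting (2) into (1) and pre-multiplying both sides of the resulting equation by $U^T$:

$$\begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{Bmatrix} \ddot{r}_1 \\ \ddot{r}_2 \end{Bmatrix} + \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix} K \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{Bmatrix} r_1 \\ r_2 \end{Bmatrix} = \begin{Bmatrix} 0 \\ 0 \end{Bmatrix}$$

$$\begin{Bmatrix} \ddot{r}_1 \\ \ddot{r}_2 \end{Bmatrix} + \begin{bmatrix} \omega_{n1}^2 & 0 \\ 0 & \omega_{n2}^2 \end{bmatrix} \begin{Bmatrix} r_1 \\ r_2 \end{Bmatrix} = \begin{Bmatrix} 0 \\ 0 \end{Bmatrix}$$

$$\ddot{r}_1 + \omega_{n1}^2 r_1 = 0, \qquad \ddot{r}_2 + \omega_{n2}^2 r_2 = 0$$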

1: The equations are decoupled.

2: The decoupling is achieved by a rotation through angle $\theta$.

3: New coordinates $(r_1, r_2)$: since the system is decoupled, an input along direction $r_1$ produces no output along direction $r_2$. These directions are the principal axes.

4: For a 2-DOF system, principal axes always exist.
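For a symmetric 2x2 stiffness matrix the decoupling angle can be written in closed form: the off-diagonal term of $U^T K U$ vanishes when $\tan 2\theta = 2k_{12}/(k_{11} - k_{22})$. The sketch below assumes a symmetric $K$ and unit masses; the function name `decouple_2dof` and the sample matrix are illustrative assumptions, not values from the slides.

```python
import math

def decouple_2dof(K):
    """Return (theta, (lam1, lam2)) so that U^T K U = diag(lam1, lam2).

    K is a symmetric 2x2 matrix; U = [[cos, -sin], [sin, cos]].
    """
    a, b, d = K[0][0], K[0][1], K[1][1]
    theta = 0.5 * math.atan2(2.0 * b, a - d)
    c, s = math.cos(theta), math.sin(theta)
    lam1 = a * c * c + 2.0 * b * s * c + d * s * s   # omega_n1^2
    lam2 = a * s * s - 2.0 * b * s * c + d * c * c   # omega_n2^2
    return theta, (lam1, lam2)

K = [[2.0, -1.0], [-1.0, 2.0]]   # hypothetical stiffness matrix
theta, (lam1, lam2) = decouple_2dof(K)
print(math.degrees(theta))       # approximately -45
print(lam1, lam2)                # approximately 3 and 1
```

The two diagonal entries are the squared natural frequencies of the decoupled coordinates $r_1$ and $r_2$.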

Decoupling of Monic M-DOF system, Principal Axes

1. Direction (1-2): principal axes.

2. Generally, a matrix $K$ cannot be decoupled as above (such a $U$ does not exist).

3. But for a symmetric $K$ there always exists an orthogonal matrix $U$ such that $U^T K U = \Omega^2$, with $\omega_i \neq \omega_j$ if $i \neq j$. A symmetric $K$ is always decouplable.

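In block form, with $I$ the $\tfrac{1}{2}n \times \tfrac{1}{2}n$ identity:

$$U_{n \times n} = \begin{bmatrix} \cos\theta \, I & -\sin\theta \, I \\ \sin\theta \, I & \cos\theta \, I \end{bmatrix}, \qquad U^T U = I$$

$$K = \begin{bmatrix} K_{11} & K_{12} \\ K_{21} & K_{22} \end{bmatrix}, \qquad U^T K U = \begin{bmatrix} k_{11} & 0 \\ 0 & k_{22} \end{bmatrix}$$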

Decoupling of Monic M-DOF Systems

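$$I\ddot{x} + Kx = 0$$

Let $x = U r$, where $U^T K U = \Omega^2$:

$$\ddot{r} + \Omega^2 r = 0$$

$$\begin{Bmatrix} \ddot{r}_1 \\ \vdots \\ \ddot{r}_n \end{Bmatrix} + \begin{bmatrix} \omega_{n1}^2 & & \\ & \ddots & \\ & & \omega_{nn}^2 \end{bmatrix} \begin{Bmatrix} r_1 \\ \vdots \\ r_n \end{Bmatrix} = \begin{Bmatrix} 0 \\ \vdots \\ 0 \end{Bmatrix}$$

$$\ddot{r}_1 + \omega_{n1}^2 r_1 = 0, \quad \dots, \quad \ddot{r}_n + \omega_{nn}^2 r_n = 0$$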
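One way to obtain an orthogonal $U$ with $U^T K U$ diagonal for a symmetric $K$ is to apply plane rotations repeatedly, the classical Jacobi method. The sketch below is an illustration of that idea in pure Python, not the method used in the slides; `jacobi_eigen`, its tolerance, and the 3x3 sample matrix are assumptions.

```python
import math

def jacobi_eigen(K, tol=1e-20, max_sweeps=50):
    """Diagonalize symmetric K by cyclic plane rotations.

    Returns (diag, U): diag[i] approximates omega_ni^2 and the columns
    of U are orthonormal, so that U^T K U is approximately diagonal.
    """
    n = len(K)
    A = [[float(x) for x in row] for row in K]
    U = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    total = sum(x * x for row in A for x in row)
    for _ in range(max_sweeps):
        off = sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= tol * total:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                # Angle with |theta| <= pi/4 that zeroes A[p][q]
                diff = A[p][p] - A[q][q]
                sign = 1.0 if diff >= 0.0 else -1.0
                theta = 0.5 * math.atan2(2.0 * A[p][q] * sign, abs(diff))
                c, s = math.cos(theta), math.sin(theta)
                for k in range(n):   # A <- A G (columns p, q)
                    akp, akq = A[k][p], A[k][q]
                    A[k][p], A[k][q] = c * akp + s * akq, -s * akp + c * akq
                for k in range(n):   # A <- G^T A (rows p, q)
                    apk, aqk = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * apk + s * aqk, -s * apk + c * aqk
                for k in range(n):   # U <- U G (accumulate rotations)
                    ukp, ukq = U[k][p], U[k][q]
                    U[k][p], U[k][q] = c * ukp + s * ukq, -s * ukp + c * ukq
    return [A[i][i] for i in range(n)], U

K = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]  # hypothetical
omega_sq, U = jacobi_eigen(K)
print(sorted(omega_sq))   # approximately 0.586, 2.0, 3.414
```

Each rotation has the same form as the 2-DOF rotation, applied to one pair of coordinates at a time.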

Vector Space

a(u + v) = au +av

(a+b) u = au + bu

a(bu)=(ab)u

1u = u

If $u$ and $v$ are $n \times 1$ vectors, then so is $w = a u + b v$.

Q1: In an $n \times 1$ vector space, how many vectors exist?

Q2: Using a sum of vectors $a_1 u_1$, $a_2 u_2$, ... to represent a vector $w_{n \times 1}$, how many of them are needed?

Linear Independence

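Suppose we have two $n \times 1$ vectors $v$ and $u$. If

$$v = b u$$

for some scalar $b$, then $v$ and $u$ are linearly dependent.

A set of $n \times 1$ vectors $u_i$ is linearly dependent if there exist $n$ scalars $b_1, b_2, \dots, b_n$ that are not all zero, such that

$$b_1 u_1 + b_2 u_2 + \dots + b_n u_n = 0$$

where $0$ is the null $n \times 1$ vector. If the above equation holds only when all $b_i$ are zero, then the set of $u_i$ is linearly independent.

In that case, any linear combination of the $u_i$ with coefficients that are not all zero is a nonzero vector $v$, that is

$$b_1 u_1 + b_2 u_2 + \dots + b_n u_n = v$$

For the unit vectors $e_i$, the equation $b_1 e_1 + b_2 e_2 + \dots + b_n e_n = 0$ does not hold for scalars that are not all zero:

$$b_1 e_1 + b_2 e_2 + \dots + b_n e_n \neq 0 \quad \text{(linearly independent)}$$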

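1. $[e_1 \ e_2 \ \dots \ e_n] \begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{Bmatrix} = I \begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{Bmatrix} = \begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{Bmatrix} \neq 0$

2. rank(A) = n

3. det(A) $\neq$ 0

4. A is non-singular

5. $A^{-1}$ exists

6. All column (row) vectors of A are linearly independent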
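The rank condition in the list above suggests a simple numerical test for linear independence: reduce the vectors by Gaussian elimination and count the pivots. This is a minimal sketch; the function names and the pivot threshold `eps` are assumptions chosen for illustration.

```python
def rank(vectors, eps=1e-12):
    """Rank of the matrix whose rows are the given vectors."""
    rows = [[float(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0   # number of pivots found so far
    for col in range(ncols):
        # Largest entry in this column among the rows not yet used
        pivot = max(range(r, len(rows)),
                    key=lambda i: abs(rows[i][col]), default=None)
        if pivot is None or abs(rows[pivot][col]) < eps:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][col] / rows[r][col]
            for j in range(col, ncols):
                rows[i][j] -= f * rows[r][j]
        r += 1
    return r

def linearly_independent(vectors):
    """True if no vector is a linear combination of the others."""
    return rank(vectors) == len(vectors)

print(linearly_independent([[1, 0], [0, 1]]))   # True
print(linearly_independent([[1, 2], [2, 4]]))   # False
```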

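For a vector $v = [v_1, v_2, \dots, v_n]^T$:

$$[u_1 \ u_2 \ \dots \ u_n] \begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{Bmatrix} = \begin{Bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{Bmatrix}$$

$$\begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{Bmatrix} = [u_1 \ u_2 \ \dots \ u_n]^{-1} \begin{Bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{Bmatrix}$$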
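In practice the coefficients $b_i$ are usually obtained by solving the linear system rather than forming the inverse explicitly. The sketch below uses Gaussian elimination with partial pivoting; `solve` and the sample basis are illustrative assumptions.

```python
def solve(A, v):
    """Solve A b = v, where the columns of A are the vectors u_1..u_n."""
    n = len(A)
    M = [[float(x) for x in A[i]] + [float(v[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(M[i][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("columns are linearly dependent")
        M[col], M[pivot] = M[pivot], M[col]
        for i in range(col + 1, n):
            f = M[i][col] / M[col][col]
            for j in range(col, n + 1):
                M[i][j] -= f * M[col][j]
    b = [0.0] * n
    for i in range(n - 1, -1, -1):   # back substitution
        s = sum(M[i][j] * b[j] for j in range(i + 1, n))
        b[i] = (M[i][n] - s) / M[i][i]
    return b

# Hypothetical basis u_1 = (1, 1), u_2 = (1, -1), stored as columns of A
A = [[1.0, 1.0],
     [1.0, -1.0]]
print(solve(A, [3.0, 1.0]))   # [2.0, 1.0], i.e. v = 2 u_1 + 1 u_2
```

The `ValueError` branch corresponds to the case where the inverse does not exist.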

Q1: Again, what is the condition for $[\,\cdot\,]^{-1}$ to exist?

Q2: What is the essence of $u_i$ and $u_j$ being linearly independent?

Q3: Linearly independent and orthogonal: which one is more important?

Natural Frequency and Mode Shape

From

$$(M^{-1}K) \, u_i = \omega_{ni}^2 \, u_i$$

Generally, $\omega_{ni} \rightarrow u_i$

Degenerate modes, $\omega_{ni} \rightarrow u_i, \ u_j, \ (u_i, u_j)$

Rigid-body motion, $\omega_{n1} = 0 \rightarrow u_1$

Frequency: scalar; easy to measure

Mode shapes: vector; spatial aliasing
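For a 2-DOF system the eigenproblem of $M^{-1}K$ can be solved in closed form from the trace and determinant. The sketch below assumes a diagonal mass matrix, a symmetric stiffness matrix with a non-zero coupling term, and positive masses; `modes_2dof` and the numerical values are illustrative assumptions.

```python
import math

def modes_2dof(m1, m2, K):
    """Natural frequencies and mode shapes of M^{-1} K, M = diag(m1, m2).

    Returns [(omega_n1, shape1), (omega_n2, shape2)] in ascending order.
    Mode shapes are defined only up to a scale factor.
    """
    a11, a12 = K[0][0] / m1, K[0][1] / m1
    a21, a22 = K[1][0] / m2, K[1][1] / m2
    tr = a11 + a22
    det = a11 * a22 - a12 * a21
    disc = math.sqrt(tr * tr - 4.0 * det)
    modes = []
    for lam in ((tr - disc) / 2.0, (tr + disc) / 2.0):
        # First row of (A - lam I) u = 0 gives u = (a12, lam - a11)
        shape = (a12, lam - a11)
        modes.append((math.sqrt(max(lam, 0.0)), shape))
    return modes

K = [[2.0, -1.0], [-1.0, 2.0]]   # hypothetical stiffness, unit masses
for omega, shape in modes_2dof(1.0, 1.0, K):
    print(omega, shape)
# approximately 1.0 with shape (-1, -1): both masses move in phase
# approximately 1.732 with shape (-1, 1): the masses move out of phase
```

Any non-zero multiple of a returned shape is an equally valid mode shape.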

Mode Shape and Eigenvectors

Essence of eigenvector

Vector vs. Eigenvector, concept of direction

Why do we have eigenvector?

Eigenvalue vs. Eigenvector

General cases

Non-uniqueness of eigenvectors

Mode Shape Functions

Proportionally damped system: eigenvector = mode shape function

Special Mode Shapes

Example of mode shapes

Q: In a hammer test, which locations should we use?

Supporting points

Q: Mode shape: what kind can you imagine?

Example of mode shapes

[Figure: mode shape amplitudes $X_{11}, X_{21}, X_{31}, X_{41}, X_{51}, X_{61}, X_{71}$]

Rigid Body Motion: $\omega_1 = 0$

Repeated Eigenvalues: $\lambda_i = \lambda_j$, $\omega_i = \omega_j$
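A rigid-body mode can be illustrated with two unit masses joined by a single spring and no connection to ground. This example is an assumption made for illustration (the stiffness value is hypothetical); it simply checks that $K u = \omega^2 u$ holds with $\omega^2 = 0$ for the rigid-body shape.

```python
def apply(K, u):
    """Matrix-vector product K u for a 2x2 matrix."""
    return [K[0][0] * u[0] + K[0][1] * u[1],
            K[1][0] * u[0] + K[1][1] * u[1]]

k = 4.0                      # hypothetical spring stiffness, unit masses
K = [[k, -k], [-k, k]]       # free-free system: no spring to ground

rigid = [1.0, 1.0]           # both masses translate together
elastic = [1.0, -1.0]        # masses move against each other

print(apply(K, rigid))       # [0.0, 0.0]   -> omega_1^2 = 0
print(apply(K, elastic))     # [8.0, -8.0]  -> omega_2^2 = 2k = 8
```

The spring is not deformed in the rigid-body shape, so no restoring force acts and the natural frequency is zero.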

Special Mode Shapes


Local mode

Ford GT

Local mode (contd)

Roll over of a car

Centripetal

Acceleration

Debris fence

Penstock

Hydroelectric dam

Potrebbero piacerti anche

- Application of Derivatives Tangents and Normals (Calculus) Mathematics E-Book For Public ExamsDa EverandApplication of Derivatives Tangents and Normals (Calculus) Mathematics E-Book For Public ExamsValutazione: 5 su 5 stelle5/5 (1)

- Geometric Construction of Four-Dimensional Rotations Part I. Case of Four-Dimensional Euclidean SpaceDocumento12 pagineGeometric Construction of Four-Dimensional Rotations Part I. Case of Four-Dimensional Euclidean SpaceShinya MinatoNessuna valutazione finora

- Mathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsDa EverandMathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsNessuna valutazione finora

- Chpt05-FEM For 2D SolidsnewDocumento56 pagineChpt05-FEM For 2D SolidsnewKrishna MyakalaNessuna valutazione finora

- 4 Node QuadDocumento27 pagine4 Node QuadMathiew EstephoNessuna valutazione finora

- Transformation of Axes (Geometry) Mathematics Question BankDa EverandTransformation of Axes (Geometry) Mathematics Question BankValutazione: 3 su 5 stelle3/5 (1)

- Document Hw4Documento12 pagineDocument Hw4kalyan.427656Nessuna valutazione finora

- De Moiver's Theorem (Trigonometry) Mathematics Question BankDa EverandDe Moiver's Theorem (Trigonometry) Mathematics Question BankNessuna valutazione finora

- Mechanical Engineering Formula SheetDocumento4 pagineMechanical Engineering Formula Sheetrdude93Nessuna valutazione finora

- Ten-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesDa EverandTen-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesNessuna valutazione finora

- Multivariable Calculus (MATH-234/MAST218) Winter 2014 Midterm Test SolutionsDocumento4 pagineMultivariable Calculus (MATH-234/MAST218) Winter 2014 Midterm Test SolutionsshpnacctNessuna valutazione finora

- Lect2 07web PDFDocumento19 pagineLect2 07web PDFYug SharmaNessuna valutazione finora

- TensorsDocumento46 pagineTensorsfoufou200350% (2)

- CSTDocumento33 pagineCSTVasthadu Vasu KannahNessuna valutazione finora

- Chap 1 Preliminary Concepts: Nkim@ufl - EduDocumento20 pagineChap 1 Preliminary Concepts: Nkim@ufl - Edudozio100% (1)

- Constant Strain Triangle (CST) : BY R.PONNUSAMY (2008528)Documento33 pagineConstant Strain Triangle (CST) : BY R.PONNUSAMY (2008528)hariharanveerannanNessuna valutazione finora

- Conformal MappingsDocumento53 pagineConformal MappingsTritoy Mohanty 19BEE1007Nessuna valutazione finora

- L-6 de Series SolutionDocumento88 pagineL-6 de Series SolutionRiju VaishNessuna valutazione finora

- Elementary Tutorial: Fundamentals of Linear VibrationsDocumento51 pagineElementary Tutorial: Fundamentals of Linear VibrationsfujinyuanNessuna valutazione finora

- Calculus 2 SummaryDocumento2 pagineCalculus 2 Summarydukefvr41Nessuna valutazione finora

- Rotational Motion 2009 7Documento85 pagineRotational Motion 2009 7Julian BermudezNessuna valutazione finora

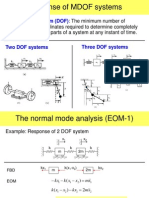

- Response of MDOF SystemsDocumento28 pagineResponse of MDOF SystemsSalvador SilveyraNessuna valutazione finora

- Discrete Random Variables and Probability DistributionsDocumento36 pagineDiscrete Random Variables and Probability DistributionskashishnagpalNessuna valutazione finora

- Spectral Expansion Solutions For Markov-Modulated Queues: Unbounded Queue in A Finite-State Markovian EnvironmentDocumento19 pagineSpectral Expansion Solutions For Markov-Modulated Queues: Unbounded Queue in A Finite-State Markovian EnvironmentAbhilash SharmaNessuna valutazione finora

- Var SvarDocumento31 pagineVar SvarEddie Barrionuevo100% (1)

- CS Module 7Documento96 pagineCS Module 7divya jilhewarNessuna valutazione finora

- 4 Node QuadDocumento7 pagine4 Node QuadSachin KudteNessuna valutazione finora

- Final Review Henry Wan)Documento2 pagineFinal Review Henry Wan)Henry WanNessuna valutazione finora

- 02 SolutiondiscreteDocumento17 pagine02 SolutiondiscreteBharathNessuna valutazione finora

- Lecture 4Documento51 pagineLecture 4Trần Hoàng ViệtNessuna valutazione finora

- Ath em Ati CS: L.K .SH Arm ADocumento9 pagineAth em Ati CS: L.K .SH Arm APremNessuna valutazione finora

- Use of Moment Generating FunctionsDocumento38 pagineUse of Moment Generating FunctionsAdil AliNessuna valutazione finora

- Questions?: Superposition of Periodic MotionsDocumento31 pagineQuestions?: Superposition of Periodic MotionsHarsha DuttaNessuna valutazione finora

- Normed Linear Spaces Notes by DR E - Prempeh - GhanaDocumento13 pagineNormed Linear Spaces Notes by DR E - Prempeh - GhanaNdewura JakpaNessuna valutazione finora

- Mechanics of Solids Week 10 LecturesDocumento9 pagineMechanics of Solids Week 10 LecturesFlynn GouldNessuna valutazione finora

- Multi-Degree of Freedom Systems: 1. GeneralDocumento23 pagineMulti-Degree of Freedom Systems: 1. GeneralRajueswarNessuna valutazione finora

- Qua Tern IonsDocumento10 pagineQua Tern IonsPrashant SrivastavaNessuna valutazione finora

- Z Transform and It's ApplicationsDocumento11 pagineZ Transform and It's Applicationsলাজ মাহমুদNessuna valutazione finora

- 6) Systems of ODESDocumento14 pagine6) Systems of ODESSümeyyaNessuna valutazione finora

- Curs 7Documento13 pagineCurs 7wexlerNessuna valutazione finora

- Solutions For Homework 1 MA510: Vector Calculus: Yuliang Wang January 21, 2015Documento5 pagineSolutions For Homework 1 MA510: Vector Calculus: Yuliang Wang January 21, 2015ffppxxzzNessuna valutazione finora

- Final 20 21iDocumento9 pagineFinal 20 21ialikhalidd23Nessuna valutazione finora

- E e e e e E: We Continue On The Mathematical Background. Base Tensors: (Dyadic Form)Documento6 pagineE e e e e E: We Continue On The Mathematical Background. Base Tensors: (Dyadic Form)Thangadurai Senthil Ram PrabhuNessuna valutazione finora

- Vector Space and SubspaceDocumento66 pagineVector Space and Subspaceriniprem9444Nessuna valutazione finora

- Linear Algebra CH 1 and 2 NotesDocumento25 pagineLinear Algebra CH 1 and 2 NoteskidarchuNessuna valutazione finora

- Modal AnalysisDocumento40 pagineModal AnalysisSumit Thakur100% (1)

- NFNMS 5Documento87 pagineNFNMS 5wanpudinNessuna valutazione finora

- Final Formula SheetDocumento3 pagineFinal Formula SheetwhatnononoNessuna valutazione finora

- Discretization-Finite Difference, Finite Element Methods: D Dy Ax BX DX DX Dy DX Dy Ax A DXDocumento6 pagineDiscretization-Finite Difference, Finite Element Methods: D Dy Ax BX DX DX Dy DX Dy Ax A DXratchagar aNessuna valutazione finora

- EE - 210 - Exam 3 - Spring - 2008Documento26 pagineEE - 210 - Exam 3 - Spring - 2008doomachaleyNessuna valutazione finora

- Llinear Dep TheoramsDocumento17 pagineLlinear Dep Theoramsmarishanrohit.georgeNessuna valutazione finora

- 2nd To 1st OrderDocumento29 pagine2nd To 1st OrderAdithya ChandrasekaranNessuna valutazione finora

- DRM SolutionsDocumento116 pagineDRM SolutionsCésar TapiaNessuna valutazione finora

- Lecture 2 Successive DifferentiationDocumento31 pagineLecture 2 Successive Differentiationgoutam sanyalNessuna valutazione finora

- Spectral Estimation ModernDocumento43 pagineSpectral Estimation ModernHayder MazinNessuna valutazione finora

- Haykin, Xue-Neural Networks and Learning Machines 3ed SolnDocumento103 pagineHaykin, Xue-Neural Networks and Learning Machines 3ed Solnsticker59253% (17)

- D.E Asss Group No 6 R - 113315Documento5 pagineD.E Asss Group No 6 R - 113315arbazsaghir35Nessuna valutazione finora

- MAE 152 Computer Graphics For Scientists and Engineers: Splines and Bezier CurvesDocumento76 pagineMAE 152 Computer Graphics For Scientists and Engineers: Splines and Bezier CurvesPrateek ChakrabortyNessuna valutazione finora

- Index Notation SummaryDocumento5 pagineIndex Notation SummaryFrank SandorNessuna valutazione finora

- State Space Representa On: Mae 571 Systems Analysis Fall 2013 M. A. Karami, PHDDocumento32 pagineState Space Representa On: Mae 571 Systems Analysis Fall 2013 M. A. Karami, PHDRajmohan AsokanNessuna valutazione finora

- Mae 546 Lecture 16Documento8 pagineMae 546 Lecture 16Rajmohan AsokanNessuna valutazione finora

- CR Rigged Rules Double Standards 010502 enDocumento276 pagineCR Rigged Rules Double Standards 010502 enRajmohan AsokanNessuna valutazione finora

- Practice 8Documento8 paginePractice 8Rajmohan AsokanNessuna valutazione finora

- Sets Functions and Groups: Animation 2.1: Function Source & Credit: Elearn - PunjabDocumento27 pagineSets Functions and Groups: Animation 2.1: Function Source & Credit: Elearn - PunjabMuhammad HamidNessuna valutazione finora

- Evaluating Expressions With Exponents PDFDocumento2 pagineEvaluating Expressions With Exponents PDFJillNessuna valutazione finora

- Mathematics: Quarter 2 - Module 3: Introducing Language in AlgebraDocumento26 pagineMathematics: Quarter 2 - Module 3: Introducing Language in AlgebraBernadette Vergara100% (1)

- Taylor Series & Maclaurin's Series Notes by TrockersDocumento24 pagineTaylor Series & Maclaurin's Series Notes by TrockersFerguson Utseya100% (1)

- G8 MIMs # 3 - WEEK 1 - 1st QUARTERDocumento3 pagineG8 MIMs # 3 - WEEK 1 - 1st QUARTERLarry BugaringNessuna valutazione finora

- Step Up Math 7 Integer8Documento1 paginaStep Up Math 7 Integer8zfrlNessuna valutazione finora

- Aops Community 1969 Amc 12/ahsmeDocumento7 pagineAops Community 1969 Amc 12/ahsmeQFDq100% (1)

- Exponential and Logaritmic FunctionsDocumento9 pagineExponential and Logaritmic FunctionsJamelle ManatadNessuna valutazione finora

- Telengana Board of Intermediate Education II Year Math IIA SyllabusDocumento2 pagineTelengana Board of Intermediate Education II Year Math IIA SyllabusYagna Panuganti100% (1)

- Buku Kertas 2 02Documento236 pagineBuku Kertas 2 02Asyikin BaserNessuna valutazione finora

- Strang Linear Algebra NotesDocumento13 pagineStrang Linear Algebra NotesKushagraSharmaNessuna valutazione finora

- Trigonometric Equations in EquationsDocumento11 pagineTrigonometric Equations in EquationsMamta KumariNessuna valutazione finora

- Tensor Algebra: 2.1 Linear Forms and Dual Vector SpaceDocumento13 pagineTensor Algebra: 2.1 Linear Forms and Dual Vector SpaceashuNessuna valutazione finora

- Determinants Important Questions UnsolvedDocumento10 pagineDeterminants Important Questions UnsolvedSushmita Kumari PoddarNessuna valutazione finora

- Factor and Remaimder TheoremDocumento4 pagineFactor and Remaimder TheoremJoann NgNessuna valutazione finora

- Journey From Natural Numbers To ComplexDocumento24 pagineJourney From Natural Numbers To ComplexdimaswiftNessuna valutazione finora

- Resume BookDocumento1 paginaResume BookMandela Bright QuashieNessuna valutazione finora

- Properties of Determinants - Detailed Explanation With ExamplesDocumento5 pagineProperties of Determinants - Detailed Explanation With Examplesshahid aliNessuna valutazione finora

- Linear Algebra Via Complex Analysis: Alexander P. Campbell and Daniel Daners Revised Version September 13, 2012Documento19 pagineLinear Algebra Via Complex Analysis: Alexander P. Campbell and Daniel Daners Revised Version September 13, 2012J Luis MlsNessuna valutazione finora

- Digit Sum MethodDocumento10 pagineDigit Sum MethodKalaNessuna valutazione finora

- Course Title Instructor E Mail Office EL Office Hours ExtbookDocumento2 pagineCourse Title Instructor E Mail Office EL Office Hours ExtbookKhairi Saleh100% (1)

- AA HL Paper 1 1Documento46 pagineAA HL Paper 1 1SagarNessuna valutazione finora

- Grade 8 Mathematics-ACTIVITY SHEETSDocumento144 pagineGrade 8 Mathematics-ACTIVITY SHEETSFlorence Tangkihay75% (12)

- Linear Algebra Economics Computer ScienceDocumento2 pagineLinear Algebra Economics Computer ScienceDebottam ChatterjeeNessuna valutazione finora

- VectorDocumento5 pagineVectorSeema JainNessuna valutazione finora

- Maths - Factors and MultiplesDocumento8 pagineMaths - Factors and Multiplesabhishek123456Nessuna valutazione finora

- Math7 q2 Mod3of8 Translating English Phrases and Sentences To Mathematical Phrases and Sentences and Algebraic Expressions v2Documento25 pagineMath7 q2 Mod3of8 Translating English Phrases and Sentences To Mathematical Phrases and Sentences and Algebraic Expressions v2Vanessa PinarocNessuna valutazione finora

- Special Types of MatricesDocumento2 pagineSpecial Types of MatricesIannah MalvarNessuna valutazione finora

- 2 Homework Set No. 2: Problem 1Documento3 pagine2 Homework Set No. 2: Problem 1kelbmutsNessuna valutazione finora

- Em 2 Module 2 Lesson 5 Transformation by Trigonometric FormulasDocumento9 pagineEm 2 Module 2 Lesson 5 Transformation by Trigonometric FormulasAcebuque Arby Immanuel GopeteoNessuna valutazione finora