Lecture 3 Problem-Solving (Search) Agents

Dr. Muhammad Adnan Hashmi

1 January 2013

- Problem-solving agents
- Problem types
- Problem formulation
- Example problems
- Basic search algorithms

- Suppose an agent can execute several actions immediately in a given state.
- It doesn't know the utility of these actions.
- Then, for each action, it can execute a sequence of actions until it reaches the goal.
- The immediate action that starts the best sequence (according to the performance measure) is then the solution.
- Finding this sequence of actions is called search, and the agent that does this is called the problem-solver.
- NB: It's possible that some sequences fail, e.g., by getting stuck in an infinite loop or by never finding the goal at all.

- You can begin to visualize the concept of a graph: searching along different paths of the graph until you reach the solution.
- The nodes correspond to the states.
- The whole graph is the state space.
- The links correspond to the actions.

- On holiday in Romania; currently in Arad. The flight leaves tomorrow from Bucharest.
- Formulate goal: be in Bucharest.
- Formulate problem:
  - States: various cities
  - Actions: drive between cities
- Find solution: a sequence of cities, e.g., Arad, Sibiu, Fagaras, Bucharest.
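The graph view of this example can be sketched in a few lines of Python. This is only an illustration: the `ROADS` table is a small fragment of the map, its adjacency is assumed from the standard textbook map rather than stated in the lecture, and `is_solution` is a hypothetical helper name.

```python
# Fragment of the Romania road map: city -> directly connected cities.
# Assumption: adjacency taken from the standard textbook map, not from the slides.
ROADS = {
    "Arad": {"Zerind", "Sibiu", "Timisoara"},
    "Sibiu": {"Arad", "Fagaras", "Rimnicu Vilcea"},
    "Fagaras": {"Sibiu", "Bucharest"},
    "Bucharest": {"Fagaras"},
}


def is_solution(path, start, goal):
    """True if path starts at start, ends at goal, and every hop is a road."""
    if not path or path[0] != start or path[-1] != goal:
        return False
    return all(b in ROADS.get(a, set()) for a, b in zip(path, path[1:]))


print(is_solution(["Arad", "Sibiu", "Fagaras", "Bucharest"], "Arad", "Bucharest"))
```

Here the cities are the nodes (states) and the roads are the links (actions), so checking a candidate solution amounts to checking that each consecutive pair of cities is joined by a road.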

- Static: The configuration of the graph (the city map) is unlikely to change during search.
- Observable: The agent knows the state (node) completely, e.g., which city it is currently in.
- Discrete: There is a discrete number of cities and of routes between them.
- Deterministic: Taking one route out of a city (node) can lead to only one possible city.
- Single-agent: We assume only one agent searches at a time, although multiple agents can also be used.

A problem is defined by five items:

1. Initial state, e.g., "In Arad".
2. Possible actions: ACTIONS(s) returns the set of actions that can be executed in state s.
3. Successor function: S(x) = the set of all possible <action, state> pairs from state x, e.g., S(Arad) = {<Arad → Zerind, In Zerind>, ...}.
4. Goal test, which can be
   - explicit, e.g., x = "In Bucharest"
   - implicit, e.g., Checkmate(x)
5. Path cost (additive), e.g., sum of distances, number of actions executed, etc. c(x, a, y) is the step cost, assumed to be ≥ 0.

A solution is a sequence of actions leading from the initial state to a goal state.
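As a rough sketch, the five items can be collected into one small Python class. The class name `RouteProblem`, its method names, and the `DISTANCES` table are assumptions made for illustration; the distances are the usual textbook values for this fragment of the map and do not appear in the lecture text.

```python
from dataclasses import dataclass

# Road distances in km for a few roads (assumed textbook values).
DISTANCES = {
    ("Arad", "Zerind"): 75,
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Fagaras", "Bucharest"): 211,
}


@dataclass(frozen=True)
class RouteProblem:
    initial: str  # item 1: initial state
    goal: str     # target city used by the explicit goal test

    def actions(self, s):
        """Item 2: ACTIONS(s), represented here by the cities drivable from s."""
        out = [b for (a, b) in DISTANCES if a == s]
        out += [a for (a, b) in DISTANCES if b == s]
        return sorted(out)

    def successors(self, s):
        """Item 3: S(s), the list of (action, resulting state) pairs."""
        return [(f"{s} -> {city}", city) for city in self.actions(s)]

    def goal_test(self, s):
        """Item 4: explicit goal test."""
        return s == self.goal

    def step_cost(self, x, y):
        """c(x, a, y); the action is implied by the pair of cities."""
        return DISTANCES.get((x, y), DISTANCES.get((y, x)))

    def path_cost(self, cities):
        """Item 5: additive path cost, the sum of the step costs."""
        return sum(self.step_cost(a, b) for a, b in zip(cities, cities[1:]))


problem = RouteProblem("Arad", "Bucharest")
print(problem.successors("Arad"))
print(problem.path_cost(["Arad", "Sibiu", "Fagaras", "Bucharest"]))  # 140 + 99 + 211 = 450
```

Keeping the problem definition separate from any search algorithm means the same search code can later be reused on other problems that expose the same five items.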

- The real world is absurdly complex, so the state space must be abstracted for problem solving.
- Abstract state space = a compact representation of the real-world state space, e.g., "In Arad" leaves out the weather, traffic conditions, etc. of Arad.
- Abstract action = a complex combination of real-world actions, e.g., "Arad → Zerind" represents a complex set of possible routes, detours, rest stops, etc.
- To guarantee that an abstract solution works, any real state "In Arad" must lead to some real state "In Zerind".
- An abstract solution is valid if we can expand it into a solution in the real world.
- An abstract solution should be useful, i.e., easier than the solution in the real world.

- States? Dirt and robot locations
- Actions? Left, Right, Pick
- Goal test? No dirt at any location
- Path cost? 1 per action
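The formulation above can be sketched in Python as follows. The two-square world, the `(location, dirty_squares)` state encoding, and the function names are assumptions for the sketch; the lecture text does not fix the number of locations.

```python
# State: (robot_location, frozenset_of_dirty_squares).
# Assumption: two squares, 0 = left and 1 = right.
N_SQUARES = 2


def result(state, action):
    """Return the state reached by applying one action."""
    loc, dirty = state
    if action == "Left":
        return (max(loc - 1, 0), dirty)
    if action == "Right":
        return (min(loc + 1, N_SQUARES - 1), dirty)
    if action == "Pick":
        return (loc, dirty - {loc})  # remove dirt at the robot's square, if any
    raise ValueError(f"unknown action: {action}")


def goal_test(state):
    """Goal: no dirt at any location."""
    return not state[1]


state = (0, frozenset({0, 1}))   # robot on the left, both squares dirty
plan = ["Pick", "Right", "Pick"]
for action in plan:
    state = result(state, action)

print(state, goal_test(state), len(plan))  # path cost is 1 per action
```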

- States? Locations of tiles
- Actions? Move blank left, right, up, down
- Goal test? = goal state (given)
- Path cost? 1 per move

[Note: finding an optimal solution for the n-Puzzle family is NP-hard]
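A minimal sketch of successor generation for the 3×3 case is shown below. The tuple encoding (row by row, with 0 for the blank), the `GOAL` configuration, and the function name are assumptions chosen for illustration, since the lecture only says the goal state is given.

```python
# A state is a tuple of 9 entries read row by row; 0 marks the blank.
MOVES = {"up": -3, "down": 3, "left": -1, "right": 1}  # index change of the blank

GOAL = (0, 1, 2, 3, 4, 5, 6, 7, 8)  # hypothetical goal configuration


def successors(state):
    """Return (action, new_state) pairs for every legal move of the blank."""
    i = state.index(0)
    row, col = divmod(i, 3)
    legal = {
        "up": row > 0,
        "down": row < 2,
        "left": col > 0,
        "right": col < 2,
    }
    out = []
    for action, delta in MOVES.items():
        if not legal[action]:
            continue
        j = i + delta
        new = list(state)
        new[i], new[j] = new[j], new[i]  # slide the neighbouring tile into the blank
        out.append((action, tuple(new)))
    return out


start = (1, 0, 2, 3, 4, 5, 6, 7, 8)
for action, new_state in successors(start):
    print(action, new_state, new_state == GOAL)
```

Describing the actions as moves of the blank, rather than moves of each tile, keeps the action set down to at most four per state.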

- States? Real-valued coordinates of robot joint angles, parts of the object to be assembled, current assembly
- Actions? Continuous motions of robot joints
- Goal test? Complete assembly
- Path cost? Time to execute

- Basic idea: offline (not dynamic), simulated exploration of the state space by generating successors of already-explored states (a.k.a. expanding the states).
- The expansion strategy defines the different search algorithms.

- Fringe: the collection of nodes that have been generated but not yet expanded.
- Each element of the fringe is a leaf node, with (currently) no successors in the tree.
- The search strategy defines which element to choose from the fringe.

The fringe is implemented as a queue:

- MAKE_QUEUE(element, ...): makes a queue with the given elements
- EMPTY?(queue): checks whether the queue is empty
- FIRST(queue): returns the first element of the queue
- REMOVE_FIRST(queue): returns FIRST(queue) and removes it from the queue
- INSERT(element, queue): adds an element to the queue
- INSERT_ALL(elements, queue): adds the set of elements to the queue and returns the resulting queue
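These operations map closely onto Python's `collections.deque`. The sketch below assumes a first-in, first-out queue, which is only one possible choice; the lowercase function names are illustrative stand-ins for the operations listed above.

```python
from collections import deque


def make_queue(*elements):
    """MAKE_QUEUE: build a queue holding the given elements."""
    return deque(elements)


def is_empty(queue):
    """EMPTY?: True if the queue has no elements."""
    return len(queue) == 0


def first(queue):
    """FIRST: look at the first element without removing it."""
    return queue[0]


def remove_first(queue):
    """REMOVE_FIRST: return the first element and remove it."""
    return queue.popleft()


def insert(element, queue):
    """INSERT: add one element at the back (FIFO assumption)."""
    queue.append(element)
    return queue


def insert_all(elements, queue):
    """INSERT_ALL: add several elements and return the resulting queue."""
    queue.extend(elements)
    return queue


fringe = make_queue("Arad")
city = remove_first(fringe)
insert_all(["Sibiu", "Timisoara", "Zerind"], fringe)
print(city, first(fringe), is_empty(fringe))
```

Where new elements are inserted is what distinguishes the strategies: adding at the back, as here, expands the oldest nodes first, while adding at the front would expand the newest nodes first.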

- A state is a representation of a physical configuration.
- A node is a data structure constituting part of a search tree; it includes the state, parent node, action, path cost g(x), and depth.
- The Expand function creates new nodes, filling in the various fields and using the SuccessorFn of the problem to create the corresponding states.
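The node structure and the Expand function can be sketched together with a simple tree search. Everything named here (`Node`, `expand`, `tree_search`, `solution`, and the one-way `SUCCESSORS` table with its step costs) is an assumption for illustration, and the first-in, first-out fringe is just one possible expansion strategy.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

# Toy successor table: state -> list of (action, next state, step cost).
# Assumption: one-way roads only, so the tree is finite.
SUCCESSORS = {
    "Arad": [("go Sibiu", "Sibiu", 140), ("go Zerind", "Zerind", 75)],
    "Sibiu": [("go Fagaras", "Fagaras", 99)],
    "Fagaras": [("go Bucharest", "Bucharest", 211)],
}


@dataclass
class Node:
    state: str
    parent: Optional["Node"] = None
    action: Optional[str] = None
    path_cost: int = 0  # g(x)
    depth: int = 0


def expand(node):
    """Create one child node per successor, filling in every field."""
    return [
        Node(state, node, action, node.path_cost + cost, node.depth + 1)
        for action, state, cost in SUCCESSORS.get(node.state, [])
    ]


def tree_search(start, goal):
    """Expand nodes from the fringe until a goal node is removed from it."""
    fringe = deque([Node(start)])
    while fringe:
        node = fringe.popleft()
        if node.state == goal:
            return node
        fringe.extend(expand(node))
    return None  # fringe exhausted: no solution found


def solution(node):
    """Follow parent links back to the root to recover the action sequence."""
    actions = []
    while node.parent is not None:
        actions.append(node.action)
        node = node.parent
    return actions[::-1]


goal_node = tree_search("Arad", "Bucharest")
print(solution(goal_node), goal_node.path_cost, goal_node.depth)
```

The same state can appear in several nodes of the tree; the node adds the bookkeeping (parent, action, cost, depth) needed to reconstruct and evaluate the path that reached it.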

A search strategy is defined by picking the order of node expansion. Strategies are evaluated along the following dimensions:

- Completeness: Does it always find a solution if one exists?
- Time complexity: Number of nodes generated
- Space complexity: Maximum number of nodes in memory
- Optimality: Does it always find a least-cost solution?

Time and space complexity are measured in terms of

- b: maximum number of successors of any node
- d: depth of the shallowest goal node
- m: maximum length of any path in the state space
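To get a feel for why b and d matter, the sketch below counts the nodes of a search tree in which every node has exactly b successors, down to depth d. The uniform tree and the function name `nodes_up_to_depth` are simplifying assumptions, not part of the lecture.

```python
def nodes_up_to_depth(b, d):
    """Number of nodes in a uniform tree: 1 + b + b^2 + ... + b^d."""
    return sum(b ** k for k in range(d + 1))


print(nodes_up_to_depth(10, 5))  # 1 + 10 + 100 + 1000 + 10000 + 100000 = 111111
```

Even modest values of b and d give large trees, which is why both the time and the space measures are expressed in these terms.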

28

1 January 2013

1 January 2013

29

Potrebbero piacerti anche

- Solving Problems by SearchingDocumento27 pagineSolving Problems by Searchingoromafi tubeNessuna valutazione finora

- Lecture 4Documento17 pagineLecture 4SHARDUL KULKARNINessuna valutazione finora

- SearchDocumento11 pagineSearchtoysy2020Nessuna valutazione finora

- Unit-2 Problem SolvingDocumento129 pagineUnit-2 Problem Solvinglosojew161Nessuna valutazione finora

- Unit II Sub: Artificial Intelligence Prof Priya SinghDocumento25 pagineUnit II Sub: Artificial Intelligence Prof Priya SinghAbcdNessuna valutazione finora

- Module 2Documento115 pagineModule 2J Christopher ClementNessuna valutazione finora

- PAI Module 2Documento15 paginePAI Module 2rekharaju041986Nessuna valutazione finora

- Problem-Solving: Solving Problems by SearchingDocumento40 pagineProblem-Solving: Solving Problems by SearchingIhab Amer100% (1)

- Solving Problems by SearchingDocumento131 pagineSolving Problems by SearchingPrashaman PokharelNessuna valutazione finora

- Solving Problems by SearchingDocumento78 pagineSolving Problems by SearchingTariq IqbalNessuna valutazione finora

- Solving Problems by Searching: Reflex Agent Is SimpleDocumento91 pagineSolving Problems by Searching: Reflex Agent Is Simplepratima depaNessuna valutazione finora

- Ai - Chapter 3Documento10 pagineAi - Chapter 3sale msgNessuna valutazione finora

- AI Lecture 3 PDFDocumento18 pagineAI Lecture 3 PDFJabin Akter JotyNessuna valutazione finora

- NetworkingDocumento23 pagineNetworkingteshu wodesaNessuna valutazione finora

- My AI CH 3Documento27 pagineMy AI CH 3Dawit BeyeneNessuna valutazione finora

- AI Chapter 3Documento36 pagineAI Chapter 3Mulugeta HailuNessuna valutazione finora

- IntroductionDocumento51 pagineIntroductionAhmed KhaledNessuna valutazione finora

- AI UNIT 1 BEC Part 2Documento102 pagineAI UNIT 1 BEC Part 2Naga sai ChallaNessuna valutazione finora

- Problem Solving AgentsDocumento2 pagineProblem Solving Agentssahana2904Nessuna valutazione finora

- 2 Lecture2 AIDocumento9 pagine2 Lecture2 AIAmmara HaiderNessuna valutazione finora

- AI Unit-2 NotesDocumento22 pagineAI Unit-2 NotesRajeev SahaniNessuna valutazione finora

- Problem Formulation & Solving by SearchDocumento37 pagineProblem Formulation & Solving by SearchtauraiNessuna valutazione finora

- Csen - 2031 - Ai (Solving Problems by Searching)Documento94 pagineCsen - 2031 - Ai (Solving Problems by Searching)losojew161Nessuna valutazione finora

- AI - AssignmentDocumento14 pagineAI - AssignmentlmsrtNessuna valutazione finora

- Artificial Intelligence Lecture No. 6Documento30 pagineArtificial Intelligence Lecture No. 6Syed SamiNessuna valutazione finora

- Solving Problems by SearchingDocumento58 pagineSolving Problems by SearchingjustadityabistNessuna valutazione finora

- Question Bank For CAT1 - 2mksDocumento36 pagineQuestion Bank For CAT1 - 2mksfelix777sNessuna valutazione finora

- Chapter 3 Searching and PlanningDocumento104 pagineChapter 3 Searching and PlanningTemesgenNessuna valutazione finora

- Aiml 6Documento8 pagineAiml 6Suma T KNessuna valutazione finora

- Unit II-AI-KCS071Documento45 pagineUnit II-AI-KCS071Sv tuberNessuna valutazione finora

- cs4811 ch03 Search A UninformedDocumento60 paginecs4811 ch03 Search A UninformedEngr Othman AddaweNessuna valutazione finora

- Ai Notes - 15-21Documento7 pagineAi Notes - 15-21Assoc.Prof, CSE , Vel Tech, ChennaiNessuna valutazione finora

- cs5811 ch03 Search A UninformedDocumento60 paginecs5811 ch03 Search A Uninformedodd elzainyNessuna valutazione finora

- Part 3 Problem Formulation and Search Methods FinalDocumento77 paginePart 3 Problem Formulation and Search Methods FinalAdil KhanNessuna valutazione finora

- 4 - C Problem Solving AgentsDocumento17 pagine4 - C Problem Solving AgentsPratik RajNessuna valutazione finora

- Solving Problems by Searching: Dr. Azhar MahmoodDocumento38 pagineSolving Problems by Searching: Dr. Azhar MahmoodMansoor QaisraniNessuna valutazione finora

- AI Lecture 2Documento19 pagineAI Lecture 2Hanoee AbdNessuna valutazione finora

- Unit 2 SearchDocumento51 pagineUnit 2 Searchujjawalnegi14Nessuna valutazione finora

- Searching in Problem Solving AI - PPTX - 20240118 - 183824 - 0000Documento57 pagineSearching in Problem Solving AI - PPTX - 20240118 - 183824 - 0000neha praveenNessuna valutazione finora

- Lecture 2 (B) Problem Solving AgentsDocumento10 pagineLecture 2 (B) Problem Solving AgentsM. Talha NadeemNessuna valutazione finora

- Artificial Intelligence: (Report Subtitle)Documento18 pagineArtificial Intelligence: (Report Subtitle)عبدالرحمن الدعيسNessuna valutazione finora

- AI Unit 2 NotesDocumento10 pagineAI Unit 2 NotesSatya NarayanaNessuna valutazione finora

- CH 3 SearchingDocumento18 pagineCH 3 SearchingYonatan GetachewNessuna valutazione finora

- Lecture-3 Problems Solving by SearchingDocumento79 pagineLecture-3 Problems Solving by SearchingmusaNessuna valutazione finora

- CS361 AI Week2 Lecture1Documento21 pagineCS361 AI Week2 Lecture1Mohamed HanyNessuna valutazione finora

- AI2 Problem-SolvingDocumento30 pagineAI2 Problem-SolvingAfan AffaidinNessuna valutazione finora

- Artificial Intelligence: RequirementsDocumento19 pagineArtificial Intelligence: RequirementsPreetam RajNessuna valutazione finora

- Lecture 3 Problems Solving by SearchingDocumento78 pagineLecture 3 Problems Solving by SearchingMd.Ashiqur RahmanNessuna valutazione finora

- Unit-2.1 Prob Solving Methods - Search StrategiesDocumento29 pagineUnit-2.1 Prob Solving Methods - Search Strategiesmani111111Nessuna valutazione finora

- Solving Problems by SearchingDocumento82 pagineSolving Problems by SearchingJoyfulness EphaNessuna valutazione finora

- Unit II SOLVING PROBLEMS BY SEARCHINGDocumento63 pagineUnit II SOLVING PROBLEMS BY SEARCHINGPallavi BhartiNessuna valutazione finora

- (WEEK 2) - LECTURE-2 - Search-2022Documento55 pagine(WEEK 2) - LECTURE-2 - Search-2022Precious OdediranNessuna valutazione finora

- Search Algorithms in Artificial IntelligenceDocumento5 pagineSearch Algorithms in Artificial IntelligenceSIDDHANT JAIN 20SCSE1010186Nessuna valutazione finora

- AM Unit3 ProblemSolvingBySearchingDocumento90 pagineAM Unit3 ProblemSolvingBySearchingRohan MehraNessuna valutazione finora

- Unit 2Documento48 pagineUnit 2Kishan KumarNessuna valutazione finora

- Informed and Uninformed SearchDocumento74 pagineInformed and Uninformed Searchਹਰਪਿ੍ਤ ਸਿੰਘNessuna valutazione finora

- 2-1 CAI Unit II Q & ADocumento6 pagine2-1 CAI Unit II Q & Aluciferk0405Nessuna valutazione finora

- CS-1351 Artificial Intelligence - Two MarksDocumento24 pagineCS-1351 Artificial Intelligence - Two Marksrajesh199155100% (1)

- Solving Problem by SearchingDocumento53 pagineSolving Problem by SearchingIqra ImtiazNessuna valutazione finora

- Google Adsense UrduDocumento18 pagineGoogle Adsense UrduArsalan AhmedNessuna valutazione finora

- Oracle Webfolder Technetwork Tutorials OBE FormsDocumento47 pagineOracle Webfolder Technetwork Tutorials OBE FormsArsalan AhmedNessuna valutazione finora

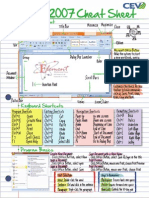

- Word 2007 Cheat SheetDocumento4 pagineWord 2007 Cheat SheetArsalan AhmedNessuna valutazione finora

- Manual Setting All PK Mobile NetworksDocumento2 pagineManual Setting All PK Mobile NetworksArsalan AhmedNessuna valutazione finora

- Course Outline (Artificial Intelligence)Documento2 pagineCourse Outline (Artificial Intelligence)Arsalan AhmedNessuna valutazione finora

- Oracle Forms MaterialDocumento88 pagineOracle Forms Materialwritesmd96% (28)

- AI Lecture 14Documento26 pagineAI Lecture 14Arsalan AhmedNessuna valutazione finora

- Lecture 7 - Adversarial Search: Dr. Muhammad Adnan HashmiDocumento25 pagineLecture 7 - Adversarial Search: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- Personality VariationsDocumento17 paginePersonality VariationsArsalan AhmedNessuna valutazione finora

- AI Lecture 13Documento29 pagineAI Lecture 13Arsalan AhmedNessuna valutazione finora

- Lecture 10 - First Order Logic: Dr. Muhammad Adnan HashmiDocumento20 pagineLecture 10 - First Order Logic: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- Lecture 9 - Knowledge-Based Agents and Logic: Dr. Muhammad Adnan HashmiDocumento67 pagineLecture 9 - Knowledge-Based Agents and Logic: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- AI Lecture 11Documento42 pagineAI Lecture 11Arsalan AhmedNessuna valutazione finora

- AI Lecture 12Documento30 pagineAI Lecture 12Arsalan AhmedNessuna valutazione finora

- Lecture 8 - Adversarial Search: Dr. Muhammad Adnan HashmiDocumento15 pagineLecture 8 - Adversarial Search: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- Lecture 5 - Informed Search: Dr. Muhammad Adnan HashmiDocumento21 pagineLecture 5 - Informed Search: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- Lecture 6 - Local Search: Dr. Muhammad Adnan HashmiDocumento13 pagineLecture 6 - Local Search: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- AI Lecture 1Documento20 pagineAI Lecture 1Arsalan AhmedNessuna valutazione finora

- AI Lecture 4Documento34 pagineAI Lecture 4Arsalan AhmedNessuna valutazione finora

- Methods of PsychologyDocumento24 pagineMethods of PsychologyArsalan Ahmed100% (2)

- Experimental MethodDocumento28 pagineExperimental MethodArsalan AhmedNessuna valutazione finora

- Lecture 2 - Rational Agents: Dr. Muhammad Adnan HashmiDocumento31 pagineLecture 2 - Rational Agents: Dr. Muhammad Adnan HashmiArsalan AhmedNessuna valutazione finora

- PersonalityDocumento43 paginePersonalityArsalan AhmedNessuna valutazione finora

- Using ViewsDocumento9 pagineUsing ViewsArsalan AhmedNessuna valutazione finora

- Oracle Single Row FunctionsDocumento7 pagineOracle Single Row FunctionsArsalan AhmedNessuna valutazione finora

- Introduction To Psychology RevisedDocumento38 pagineIntroduction To Psychology RevisedArsalan AhmedNessuna valutazione finora

- Naturalistic ObservationDocumento15 pagineNaturalistic ObservationArsalan AhmedNessuna valutazione finora

- SQL JoinsDocumento3 pagineSQL JoinsArsalan AhmedNessuna valutazione finora

- 8i Vs 9i Oracle JoinsDocumento3 pagine8i Vs 9i Oracle JoinsArsalan Ahmed100% (1)

- Graph Theory: Lebanese University Faculty of Science BS Computer Science 2 Year - Fall SemesterDocumento77 pagineGraph Theory: Lebanese University Faculty of Science BS Computer Science 2 Year - Fall SemesterAli MzayhemNessuna valutazione finora

- LP Graphical SolutionDocumento23 pagineLP Graphical SolutionSachin YadavNessuna valutazione finora

- Data Structure: Edusat Learning Resource MaterialDocumento91 pagineData Structure: Edusat Learning Resource MaterialPrerna AgarwalNessuna valutazione finora

- TutorialSheet 01Documento2 pagineTutorialSheet 01fuckingNessuna valutazione finora

- Unit - Chomsky HierarchyDocumento12 pagineUnit - Chomsky HierarchySHEETAL SHARMANessuna valutazione finora

- Bit MagicDocumento2.139 pagineBit MagicHarsh YadavNessuna valutazione finora

- Truth Tables 2Documento16 pagineTruth Tables 2Jaymark S. GicaleNessuna valutazione finora

- Domain Specific Language Implementation With Dependent TypesDocumento34 pagineDomain Specific Language Implementation With Dependent TypesEdwin BradyNessuna valutazione finora

- Github Copilot Ai Pair Programmer: Asset or Liability?Documento20 pagineGithub Copilot Ai Pair Programmer: Asset or Liability?yetsedawNessuna valutazione finora

- Worksheet Sig Fig 9 11 08 PDFDocumento2 pagineWorksheet Sig Fig 9 11 08 PDFzoohyun91720Nessuna valutazione finora

- 3.5 Relations and Functions: BasicsDocumento7 pagine3.5 Relations and Functions: BasicsMark J AbarracosoNessuna valutazione finora

- Programming With Pascal NotesDocumento28 pagineProgramming With Pascal NotesFred LisalitsaNessuna valutazione finora

- Machine Learning Lecture 1Documento10 pagineMachine Learning Lecture 1Sanath MurdeshwarNessuna valutazione finora

- Lecture 6: Optimality Conditions For Nonlinear ProgrammingDocumento28 pagineLecture 6: Optimality Conditions For Nonlinear ProgrammingWei GuoNessuna valutazione finora

- SSK5204 Chapter 6: Pushdown AutomataDocumento27 pagineSSK5204 Chapter 6: Pushdown AutomatascodrashNessuna valutazione finora

- Genetic Algorithm Programming in MATLAB 7.0Documento6 pagineGenetic Algorithm Programming in MATLAB 7.0Ananth VelmuruganNessuna valutazione finora

- Tutorial Sheet 8Documento3 pagineTutorial Sheet 8Barkhurdar SavdhanNessuna valutazione finora

- Peng-Analysis of CommDocumento8 paginePeng-Analysis of Commapi-3738450Nessuna valutazione finora

- Thomas Method Viviana Marcela Bayona CardenasDocumento16 pagineThomas Method Viviana Marcela Bayona CardenasvimabaNessuna valutazione finora

- Oop Lab9Documento6 pagineOop Lab9SirFeedNessuna valutazione finora

- Non-Uniform Discrete Fourier TransformDocumento4 pagineNon-Uniform Discrete Fourier TransformK.U. KimNessuna valutazione finora

- Chapter 5b Difference EquationDocumento15 pagineChapter 5b Difference Equationfarina ilyanaNessuna valutazione finora

- Data Structure - Shrivastava - IbrgDocumento268 pagineData Structure - Shrivastava - IbrgLaxmi DeviNessuna valutazione finora

- Part2 PredoptDocumento35 paginePart2 PredoptKhadija M.Nessuna valutazione finora

- AVL Trees: Muralidhara V N IIIT BangaloreDocumento26 pagineAVL Trees: Muralidhara V N IIIT Bangalorefarzi kaamNessuna valutazione finora

- SJSU EE288 Lecture18 SAR ADCDocumento15 pagineSJSU EE288 Lecture18 SAR ADCZulfiqar AliNessuna valutazione finora

- Solved Long Division Problems With Step-By-Step Walkthrough: Solutions Are On Page 2Documento2 pagineSolved Long Division Problems With Step-By-Step Walkthrough: Solutions Are On Page 2khuranaamanpreet7gmailcomNessuna valutazione finora

- Midterm CS-435 Corazza Solutions Exam B: I. T /F - (14points) Below Are 7 True/false Questions. Mark Either T or F inDocumento8 pagineMidterm CS-435 Corazza Solutions Exam B: I. T /F - (14points) Below Are 7 True/false Questions. Mark Either T or F inRomulo CostaNessuna valutazione finora

- DFT and FFT Chapter 6Documento45 pagineDFT and FFT Chapter 6Egyptian BookstoreNessuna valutazione finora

- ShgsfjhgfsjhfsDocumento3 pagineShgsfjhgfsjhfsohmyme sungjae0% (2)