Solaris Crash Recovery and Fault Analysis

Uploaded by Subba Reddy

The system is down - where do I begin?

1.0 - Failed System Analysis.

Start by verifying that the machine is in fact down. If panic messages are streaming by, smoke is pouring out of the machine, all the power is off, etc., you can be confident that the machine has faulted. However, things are not always so obvious. The machine could be hung, the network could be down, or some services could be broken. The machine may even be fine, and your users' expectations are wrong, so they perceive that the machine is down.

1.1 Test Network Connectivity

First, if possible, ping the machine. If the machine responds, you know that at least the core of the OS is functional and the network is up. If not, you know you need to investigate further. Failure to respond to a ping can be caused by broken routing, bad netmasks, interface problems or any of a range of other network failures. This is usually a good time to head to the computer room and start investigating.

If you can ping the machine, can you telnet or rlogin into it? If you can, the machine is probably generally healthy, and it is time to find out what the user observed and what is causing it. There are several possible reasons why you may not be able to log in to the machine over the network. If the machine is in single user mode, you usually get a message telling you that you cannot log in at this time. Similarly, running out of pseudo terminals will normally produce a message indicating that the machine cannot create another device.

1.2 Checking the console

Next, check the console. Make sure it has power. If it is not on, be careful: turning on a console terminal can send a break and halt the machine. If the machine has a key switch and you can put it into the locked position, do so before turning on the power to the terminal. Read the messages. Carefully. They are trying to tell you something. If there are error messages, note them down. They may not survive a reboot.
They may be the only clue to the failure, and losing them could well mean the difference between "Please do this to fix the fault" and "Please phone us next time the problem recurs." One of my favourite debugging tools is the extremely rare console terminal printer. So many failures would be captured in all their glory the first time with this tool. A 30-line panic message cannot scroll off the top of a printer, whereas it can on a terminal. Naturally, with a console printer, you do not want to do much work on the console, but this is true anyway - the console is for system administration, and should only be used as such.
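The ping-then-login triage from section 1.1 can be written down as a small decision function. This is a minimal sketch rather than a tool from the original text: the function names and the host name are hypothetical, and the ping wrapper assumes Solaris ping syntax (ping host timeout, exit status 0 when the host answers). The login result has to come from your own telnet or rlogin attempt.

```sh
#!/bin/sh
# Encode the section 1.1 decision: ping first, then try a network login.

# triage PING_STATUS LOGIN_STATUS  (0 = succeeded, anything else = failed)
# Prints the next step suggested by the text.
triage() {
    if [ "$1" -ne 0 ]; then
        echo "no ping: suspect routing, netmasks or the interface; go and look at the machine"
    elif [ "$2" -ne 0 ]; then
        echo "ping but no login: check for single user mode or exhausted pseudo terminals"
    else
        echo "machine looks healthy: find out what the user actually observed"
    fi
}

# ping_status HOST: prints 0 if HOST answers within 5 seconds, 1 otherwise.
# Assumes Solaris ping syntax ("ping host timeout"); other systems differ.
ping_status() {
    if ping "$1" 5 >/dev/null 2>&1; then echo 0; else echo 1; fi
}

# Usage, after trying telnet or rlogin by hand (here the login failed):
#   triage "$(ping_status myhost)" 1
```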

1.3 Checking for System Hang

OK - there are no messages on the console, and the network appears to be down. Press the return key on the console, then wait a few seconds. Did anything happen? Usually, if the console responds, even if the cursor just moved down a line, the machine is probably still alive. If the machine has a graphics head, press the Caps Lock key. If the Caps Lock light responds, the machine is at least not hard hung.

If the machine does not respond, you are rapidly running out of options. The next action might be a surprise - go and observe the machine. Listen to it - is the disk hammering away? Are the lights on the machine flashing? Are the disk lights flashing? Are the network lights flashing? Is the machine showing any signs of life? By this time, you should be able to make a reasonable guess as to whether the machine is still running at all, or whether it is hung.

1.4 Recover or Reboot?

If the machine still appears to be running but is denying service, you need to do an environment check. Start at the network. There are a number of network services that can hang the machine. Make sure the network cable is firmly plugged in. Make sure the hub has power. See if the link light is on at the hub. If it is, is the activity light on, flashing or doing nothing? Possibly try a different port on the hub. Unplug the machine from the hub and see if the console gets any messages. This investigation may give vital clues as to what the failure is. These clues can be crucial if you find that rebooting the machine does not fix the failure and you are searching for some external agent impacting the machine.

If this seems like a lot of things to look at and relatively few direct fixes, I apologise, but the operating system is over 5.5 million lines of code at last count, and given that size and the accompanying flexibility of the system, the range of failures that can impact the machine is simply immeasurable.
This is true of any Unix, and of all modern operating systems. Quick sales spiel - the sheer range of possible failures is a good reason to have a maintenance contract, so that you have people specializing in this stuff backing you up when you really need them.

1.5 Capturing Failure Analysis Data

If the machine is hung or so severely broken that you cannot proceed, it is probably a good time to either call your support supplier or try to dump the state of the machine for later analysis. If you really are on the ball, you can possibly break into the PROM and rummage around in the machine to see what is broken. I won't bother to elaborate on that option - if you can't do it already, nothing I can say now will help you. We do have a training course that will give you a reasonable shot at doing this stuff, and more. I will give some details at the end if anyone is interested.

We want to get to the PROM prompt to tell the machine to dump the memory image into swap space. Hopefully you have adequate swap space to cope with the dump; otherwise we may not be able to capture all of it, and the dump will be worthless. Even then, you will need to have savecore turned on, and must not have striped your swap space, to avoid having to go into single user mode and capture the savecore manually. More on this later.

First, if you are on a graphics head, hit the Stop and A keys simultaneously. If you are on a terminal, generate a break. Since this is terminal dependent, this exercise is left to the student. Also, if you are on a machine with a key switch on the front, for example an Enterprise server or a SPARCcenter, make sure the key switch is not in the locked position, as this disables the console break. All going well, you will be rewarded with an ok prompt. This means the machine was "soft" hung, and there is a good chance that we will be able to get a core for subsequent analysis.

If you do not get an ok prompt, it is time to get a bit more brutal. If you are on a graphics head, unplug the keyboard, then plug it back in. If you are using a terminal, power cycle it. Please note - any messages on the screen will be lost, so make sure you have noted them down beforehand. If you still have no ok prompt, either the machine is hard hung or someone has seriously disabled console breaks. At this point, your only option is to power cycle the machine. The only available debugging information will be your observations, the system logs if they are intact, and possibly sar. More on that later.

If you have got an ok prompt, type sync and hit return. The machine should then dump the memory image to swap. Usually, the only reason this will fail is a gross SCSI chain failure. You will usually know this because either the machine will hang on the sync or you will get major SCSI errors on the write. This means the core is not likely to be captured, but we do have more clues as to the nature of the failure.
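Whether swap can hold the dump is worth checking before you need it. A minimal sketch that compares swap against physical memory, the worst case for a dump; it assumes that prtconf prints a line such as "Memory size: 256 Megabytes", that swap -l reports sizes in 512-byte blocks in its fourth column, and that the dump is written to the first swap device listed. The function names are hypothetical, and the $(( )) arithmetic needs a POSIX shell such as ksh rather than the old Bourne shell.

```sh
#!/bin/sh
# dump_fits MEM_MB SWAP_BLOCKS: exit 0 if a swap area of SWAP_BLOCKS
# 512-byte blocks is at least as large as MEM_MB megabytes of memory.
dump_fits() {
    mem_kb=$(( $1 * 1024 ))
    swap_kb=$(( $2 / 2 ))        # 512-byte blocks -> kilobytes
    [ "$swap_kb" -ge "$mem_kb" ]
}

# Gather the two numbers on a running Solaris system (assumed output formats).
mem_mb()              { prtconf 2>/dev/null | awk '/^Memory size/ { print $3 }'; }
primary_swap_blocks() { swap -l 2>/dev/null | awk 'NR == 2 { print $4 }'; }

# Usage:
#   if dump_fits "$(mem_mb)" "$(primary_swap_blocks)"; then
#       echo "primary swap can hold a full memory dump"
#   else
#       echo "primary swap is smaller than memory: a dump may be truncated"
#   fi
```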

The machine is down.

2.0 - System Recovery.

How do we get it back online?

The immediate choice you need to make at this point is whether to bring the machine up to multiuser mode first, or into single user mode to look around and possibly do some repairs first. Going to single user will take more time, but is generally safer; and if you need to recover the savecore and have reason to think you may not be able to do this on a normal boot, you may need to bring the machine up in single user mode. A third option exists for really sick machines - booting off the network or CD-ROM and running off a minimal, in-memory image of Unix. You will need to boot an in-memory image of the operating system to recover root or /usr from backup, repair the device tree, or do some serious debugging of why the machine cannot boot to single user mode.

2.1 - Pre-recovery planning

Before we begin system recovery, take a couple of minutes and think about what you are going to do. This is especially true if you are using disk striping on swap, and is critical if your root and /usr file systems are mirrored. What is good for system resilience against hardware failure also makes system recovery much more complex. Now is a good time to take stock of what is required.

If you have mirrored root and /usr file systems, you want to endeavour to recover the operating system from single user or multiuser mode. Why? Because when you boot off the CD-ROM, you cannot access the operating system via the mirroring software. You must mount one half of the mirror and repair it. Even if you repair both sides of the mirror, it is very unlikely that they will both be repaired to the same state. When you boot the machine up and the mirroring software comes online, it has no idea which side of the mirror is correct, and it will scramble the data on both sides of the mirror until they are equally smashed. You can safely assume that this is very bad, and that you will be restoring your machine from backup shortly afterwards.

2.2 - Power cycle the whole machine

Generally, in the event of a major failure of the system, if you are at the ok prompt, power cycle the system. This includes all disks, tape drives, etc. External devices can get seriously confused and stop the machine booting by jamming a SCSI channel. This may even be what caused the failure initially. The only fix is a power cycle. Turn on all external devices and, in the case of devices like storage arrays, wait until all the disks are online. Then power on the CPU.

If you want to boot to a run level other than the default, hit Stop-A or break on the console. This should get you to an ok prompt. If you get a greater-than (>) prompt, type n then hit return to get to the ok prompt. If you are on a machine with a key switch, and you are not sure that the hardware is behaving correctly, you can always set the switch to diagnostic mode.
Diagnostic mode will print all of the power-on self test information as the machine boots, giving you a chance to view what is happening within the core of the machine at boot time, and hopefully highlighting any component failures. Almost all component failures will either be caught by the power-on self test or be seen by the operating system and logged in the system messages files. You can also turn on the diag-switch? option in NVRAM, but unless you change the diag-device entry, the machine will attempt to boot off the net. Break to the ok prompt at this point and set diag-switch? to false to complete booting.

2.3 - NVRAM options and issues

If the machine hangs at boot or seems to be trying to do something odd, e.g. boot off an unexpected device, it is conceivable that the NVRAM the PROM uses to boot from has been scrambled. There are two ways to fix this. If the machine is hanging on boot, power cycle the machine and hit the Stop-N key combination repeatedly. This will reset the NVRAM and hopefully get you to the ok prompt. If you are already at the ok prompt, enter set-defaults and the NVRAM will be reset. This will clear out any NVRAM programming you have done, so make sure you have noted down what you changed and restore it if required. This may also turn the diagnostic switch on. You will know it is on when the machine tries to boot from the network. Type setenv diag-switch? false at the ok prompt to turn this off.

The NVRAM and boot PROM are reasonably sophisticated on SPARC hardware. They are capable of a range of testing, system diagnosis and system configuration. You can view the system device tree to check for items you are expecting to see, configure device aliases, load firmware, configure boot options, etc. This is all documented in the Solaris System Administrator AnswerBook. Particular things of note are the ability to test various options, including the SCSI devices via probe-scsi and probe-scsi-all, and the on-board network connection via watch-net, to check whether it sees any packets on the wire. You can traverse the device tree with cd and ls.
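Because set-defaults wipes any NVRAM programming, one way to note down what you have done is to capture the settings with the eeprom command while the machine is still up. A sketch under stated assumptions: eeprom run with no arguments prints one variable per line, and the EEPROM override, the function names and the file path in the usage comment are hypothetical.

```sh
#!/bin/sh
# Save the current NVRAM settings while the machine is running, so they
# can be re-entered after set-defaults. EEPROM is a hypothetical override.
EEPROM=${EEPROM:-/usr/sbin/eeprom}

# save_nvram FILE: write every current setting to FILE.
save_nvram() {
    "$EEPROM" > "$1"
}

# nvram_changes OLD NEW: print the settings that differ between two saved
# copies; exits 0 only if they are identical.
nvram_changes() {
    diff "$1" "$2"
}

# Usage (keep a printed copy too, in case the disk is what failed):
#   save_nvram /var/tmp/nvram.before
```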

There are also a range of switches for the boot command, most notably:

  -v  verbose option - report the device testing at boot time
  -r  reconfiguration boot - rebuild the device tree on boot
  -s  boot to single user mode
  -w  do not start up any windowing, normally used for in-memory boots
  -a  ask the user which of the boot files to use on boot

2.4 Booting to an in-memory image

I will cover the CD-ROM or network boot of the machine first, as this is the most drastic and low-level boot, and has the most powerful recovery options. If you have a network boot server configured for the local machine, boot net -sw will bring you up to a single user shell, with no password required. If you do not have this previously configured, pull out the manual on JumpStart and start reading. If the machine has a Sun CD-ROM drive, load the operating system CD-ROM into the drive and enter boot cdrom -sw at the ok prompt.

If you are worried about the security implications of the above, either lock the machine in a secure room (in real terms, there is no security for a physically accessible machine, ever) or turn the PROM password on. Do not forget it. If you do forget it, the only way to unset it without knowing the password is via the eeprom command while the machine is running and you are logged in as root. If you can't get the machine up, you are history.

Other things to note - when you boot to a memory image, the device tree is not built with historic information. This means that the device tree may differ radically from that on a machine that has been reconfigured several times, as a running machine will attempt to retain device numbers over time. For example, you always want your database to live on c5t2d0s3, even if you remove controller four from the system.

2.5 Cleaning up after the crash

Normally, the first thing to do here is to fsck the root and /usr file systems, unless you are running a mirrored root or /usr file system and do not intend to make any changes to it. This is the only time when you can safely fsck the raw root and /usr file systems. This can fix seriously corrupt file systems. However, fsck is not a panacea. I have seen a number of examples of fsck incorrectly recovering a corrupt root, where the only fix has been to recover the file system from backup. Backups are important; do them.

fsck will show the mount point the file system was last mounted on, assuming the slice you are checking actually holds a file system. Check that you are indeed fscking the file system you intend to fsck. You can then mount the root file system on /a and the /usr file system on /a/usr. Do an ls to verify that they are in fact the correct file systems. If /usr is wrong, at least you can look in /a/etc/vfstab and see what it should be. At this point, we can chroot to /a and perform maintenance on the disk-based root file system image while it is quiescent. Note, however, that you have not mounted /var or other file systems. If you need them, fsck and mount them before you do the chroot. You will want /var if you intend to use the vi editor on the root file system. Also note that you will need to set the TERM type when in single user mode.

If you have to recover the root and /usr file systems, this is the point to do it. Assuming that you have a ufsdump of the file systems, newfs the raw partition, mount it as /a and ufsrestore to it. You will also need to run the installboot command to write a boot block onto the restored root partition. Otherwise, the machine will complain that the boot file does not appear to be executable when you try to bring the machine up.
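The restore just described might look like the following from the single user shell of an in-memory boot. It is a sketch, not a tested procedure: the slice and tape names are hypothetical placeholders, the bootblk path assumes the /usr/platform layout of Solaris 2.5 and later, and setting DRY_RUN prints the commands instead of running them. Note that newfs asks for confirmation before it destroys the slice.

```sh
#!/bin/sh
# Restore a root slice from a ufsdump tape. Device names are hypothetical.
ROOT_SLICE=${ROOT_SLICE:-c0t3d0s0}   # hypothetical root slice
TAPE=${TAPE:-/dev/rmt/0}             # hypothetical tape drive

# run CMD...: execute CMD, or just print it when DRY_RUN is set.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# run_in DIR CMD...: like run, but with DIR as the working directory.
run_in() {
    dir=$1; shift
    if [ -n "$DRY_RUN" ]; then echo "(cd $dir && $*)"; else (cd "$dir" && "$@"); fi
}

restore_root() {
    run newfs "/dev/rdsk/$ROOT_SLICE" || return 1       # prompts y/n first
    run mount "/dev/dsk/$ROOT_SLICE" /a || return 1
    run_in /a ufsrestore rf "$TAPE" || return 1         # full restore into /a
    run_in /a rm -f restoresymtable                     # left behind by "ufsrestore r"
    # Write a boot block so the restored root can be booted.
    run installboot "/usr/platform/$(uname -i)/lib/fs/ufs/bootblk" \
        "/dev/rdsk/$ROOT_SLICE" || return 1
    run umount /a
}

# Usage: review the plan first, then run it for real.
#   DRY_RUN=1; restore_root; unset DRY_RUN
```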
If you are dealing with a mirrored root or /usr file system, you immediately need to edit /a/etc/vfstab and comment out the mirrored device entries, replacing both the raw and cooked mirrored file system entries with the raw and cooked disk device entries, then edit /a/etc/system and comment out the metadevice entries if they exist. When the machine is back up, you will need to rebuild the mirrored devices to get the machine fully operational. Generally, if you are in this situation, call your support provider and get help before attempting the recovery. The penalties for failure are high enough that you want to verify that you are taking the correct measures. If you are not sure what you are doing, you are in over your head.

2.6 Rebuilding the device tree and the /dev structures

Running on the in-memory Unix image is the best time to rebuild the operating system device tree, especially in the event of corruption of various nodes under /dev. Under Solaris, /dev is merely a series of symbolic links into /devices. The entries in /devices are the device special files that point into the kernel's in-memory device tree. To rebuild the device tree and the /dev structures, you need to know whether the operating system is carrying device history. I will cover how to rebuild the device tree without history first. First, chroot onto the root file system with chroot /a /bin/sh, cd to /dev and remove the rmt, dsk and rdsk directories. Remove any other suspect entries in /dev, but do not remove the following entries:

/dev/null, /dev/zero, /dev/mem, /dev/kmem and /dev/ksyms.

cd to /devices and remove all the directories except pseudo; /devices/pseudo holds the device files for the devices just listed. Next, rebuild the /devices entries, then the symlinks for the various required devices, with the following commands:

  drvconfig
  devlinks
  disks

This will rebuild the devices required for rebooting the operating system. Touch /reconfigure to get the operating system to complete the device tree rebuild on the next boot.

If you are trying to rebuild the device tree on a machine with some historic device entries, which is generally most important for disk controller numbers, you will need to retain the first entry in /dev/rdsk and /dev/dsk for that disk chain, e.g. c5t0d0s0 in the example above, before you rebuild the device tree. If you wish to change a disk controller number, you use a similar mechanism. For example, to change a controller from c4 to c5 where there is currently no c5, cd to /dev/rdsk, move c4t0d0s0 to c5t0d0s0, remove all the other c4 entries, do the same in /dev/dsk and then run the command disks. You can do some manipulation of the device tree on a running multiuser system, but this is a form of Russian roulette that I would not advise unless you are fully aware of what the system is doing.

2.7 Preparing the machine to boot

You can also turn on savecore at this time, although it is covered in a later section. You may also want to turn on various boot-up debugging at this time. The most useful tool I find for debugging boot failures is a quick hack to the /etc/rc scripts. The main script of interest is /etc/rc2. If you have a quick look through this script, you will find a small section of code that runs the S scripts in the directory /etc/rc2.d with the start option.
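That loop, with a debugging echo of the script name added, looks roughly like the sketch below. The code in /etc/rc2 differs between releases, so treat this as an illustration rather than a copy of the shipped script; the function wrapper and its directory argument are hypothetical, so the loop can be tried on a scratch directory. /bin/sh is used to run the scripts here; substitute the shell your rc2 uses.

```sh
#!/bin/sh
# Sketch of the /etc/rc2 S-script loop with a debugging echo added.
# run_start_scripts [DIR]: DIR defaults to /etc/rc2.d.
run_start_scripts() {
    rc_dir=${1:-/etc/rc2.d}
    for f in "$rc_dir"/S*; do
        [ -s "$f" ] || continue                 # skip empty or missing files
        echo "rc2: running $f"                  # names the script that hangs
        case "$f" in
        *.sh) . "$f" ;;                         # .sh scripts are sourced
        *)    /bin/sh "$f" start ;;             # others are run with "start"
        esac
    done
}

# To trace just one problem script rather than all of them:
#   /bin/sh -x /etc/rc2.d/S<nn>name start
```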
I suggest that you place an echo of the script name just before the case statement in which this is done, so that you can see which script is hanging or failing. You can also run the scripts with the -x option to debug more gnarly failures, or set the -x option within a problem script. Turning the -x option on for all scripts will overload your screen with information, and is generally not a good idea. When you have finished with the root file system, exit the chroot, cd to / and unmount the /usr, /var and root file systems before rebooting the machine.

2.8 Booting up to single user mode

To boot the machine to single user mode, get to the ok prompt and type boot -sw. This should bring the machine up to the point where you are asked for the root password to proceed with system maintenance. If the machine does not accept the root password at this point, you may well find that the shadow or password file has been damaged or destroyed by the system failure. The only recovery option you have is to boot an in-memory version of the operating system and repair these files. Remember the issues involved if you are using mirroring.

2.9 Cleaning up when forced into single user mode

Another situation that would normally cause the machine to enter single user mode is the failure of a file system to fsck cleanly when booting. The machine will usually have the root and /usr file systems mounted read-only when this occurs, and the mnttab file will usually indicate that all file systems are mounted. When this occurs, you first need to remount the root and /usr file systems as writeable, then clean up the mnttab file before proceeding with other work. Start by fscking the root and /usr file systems, or just run fsck to check all the file systems with fsck entries in the vfstab. Then remount the root and /usr file systems as writeable via the following commands:

  mount -o remount,rw /
  mount -o remount,rw /usr

Follow this with a umountall command, which should clean up the mnttab file.

2.10 Manually Dumping the savecore image

With the machine in single user mode, you can dump the savecore image into a file before swap is mounted and possibly trashes the image. Since the savecore image can be up to the size of physical memory, you will want to dump it onto a partition that has enough space. By default, the operating system attempts to dump it in the /var/crash directory. You will need to mount the partition you wish to dump the image into, if it is not already mounted. Create a directory for the image if it does not already exist, and use the command savecore image-directory to dump the image.

2.11 Booting to multiuser mode

Once you have finished whatever single user administration work you wish to do, you can exit single user mode to boot immediately to multiuser mode. There are two places where this process will normally hang.

2.12 NFS and the automounter

The first and most common is when there is an NFS mount in the vfstab file and the remote server is not running.
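To find the vfstab entries that can hang the boot this way, a small awk filter can list NFS mounts that are mounted at boot without the bg or soft option. A sketch assuming the standard seven-field vfstab layout (device to mount, device to fsck, mount point, FS type, fsck pass, mount at boot, mount options); the function name is hypothetical.

```sh
#!/bin/sh
# risky_nfs_mounts [VFSTAB]: print the mount point of every NFS entry that
# is mounted at boot without the bg or soft option. Defaults to /etc/vfstab.
risky_nfs_mounts() {
    awk '
        /^#/ { next }                              # skip comment lines
        $4 == "nfs" && $6 == "yes" {
            n = split($7, opt, ",")                # "-" means no options
            safe = 0
            for (i = 1; i <= n; i++)
                if (opt[i] == "bg" || opt[i] == "soft") safe = 1
            if (!safe) print $3
        }
    ' "${1:-/etc/vfstab}"
}
```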
The simple workaround is to add either the bg or soft mount option in the vfstab, or to use the automounter. On a server, the automounter needs to be used with great care if you are using direct maps. Personally, I am religiously opposed to direct maps, but they are a common choice. The problem arises from the fact that the automounter takes control of a mount point in a direct map. Suppose, for example, that you wish to mount /usr/local from the main server as /usr/local on all of your client machines. If you are using NIS or NIS+ maps to manage the automounter, when the server runs the automounter, the automounter tries to take control of /usr/local, which already has a file system mounted on it that is in use. This has caused the mount point to hang when I have run into this problem. I prefer to use the automounter in combination with symbolic links to get around this, so that at work, my /usr/local is a symbolic link to /wgtn/apps/local. This map system also allows me to do some neat things with LAN and WAN links using one consistent set of maps.

2.13 Problems in the device tree

Another fault that can cause a hang at boot is a problem in the device tree. One common bug is that the ps command will hang due to a corruption in the device tree caused by the buttons and dials package. If you have the device /dev/bd.off, remove it, then get rid of the buttons and dials software via pkgrm SUNWdialh SUNWdial, unless you have a buttons and dials board on your machine. I am not aware of any of these in New Zealand. The other problem is normally related to the permissions of /dev/null or /dev/zero. You can check the permissions of these via the following command:

  ls -lL /dev/null /dev/zero

which should return something like

  crw-rw-rw-   1 root     sys       13,  2 Apr 19 21:04 /dev/null
  crw-rw-rw-   1 root     sys       13, 12 May  4  1996 /dev/zero

These need to be readable and writable by all, otherwise processes will block when trying to access them.
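The ls -lL check above can be scripted so it is easy to repeat after a crash. A minimal sketch; it only checks that each device resolves to a character device with read and write permission for owner, group and others, and the function names are hypothetical.

```sh
#!/bin/sh
# mode_ok MODE: accept a mode string such as "crw-rw-rw-" (a trailing
# ACL or security-context marker is tolerated).
mode_ok() {
    case "$1" in
    crw-rw-rw-*) return 0 ;;
    *)           return 1 ;;
    esac
}

# check_dev_perms DEV...: report each device; exit 1 if any is wrong.
# "ls -lL" follows the /dev symlink through to the /devices node.
check_dev_perms() {
    status=0
    for dev in "$@"; do
        mode=$(ls -lL "$dev" 2>/dev/null | awk '{ print $1 }')
        if mode_ok "$mode"; then
            echo "ok:  $dev $mode"
        else
            echo "BAD: $dev ${mode:-missing}"
            status=1
        fi
    done
    return $status
}

# Usage: check_dev_perms /dev/null /dev/zero
```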

Now that we are up, why did we fail?

3.0 Failure Analysis Tools

Now that you have the machine up and running again, it is time for a quick look around to see what may have caused the failure.

3.1 The system log files

The first place to look is the system message files. The current messages can be pulled from the machine via the dmesg command. If the machine has been rebooted recently, the boot details of the machine, including the hardware configuration at that time, can be seen. These messages are also saved in the messages files in /var/adm, the older files having a numeric suffix. Hardware problems seen by the operating system will also appear in these files. It is worthwhile monitoring these files and being aware of what the messages in them mean on your machine.

These files are updated by the syslog daemon. How the daemon works and what it logs can be modified via the /etc/syslog.conf file. If, for example, you find that the file is continuously being filled with sendmail messages, you may elect to put the sendmail messages in a separate file and reserve the system messages file for more critical messages.

As a side note, if the last message from the previous boot is from syslogd, indicating that it was shut down with signal 15, this tells you that someone halted the machine manually. Use the last command to give you clues as to who the culprit may be.

3.2 System Accounting

One very useful tool to consider using, if you are not already, is sar, the system activity reporter. As the adm user, you can use cron to run the command /usr/lib/sa/sa1 periodically to checkpoint various operating system parameters, including CPU usage, system memory and swap usage, networking and disk utilization, etc. You can then use the sar command to interrogate the captured files for information and trends. The trends are particularly useful for system performance tuning and system debugging. This is often the only way to spot a slow memory leak in the kernel. There are two things to be aware of with sar. First, it can chew through space in the /var partition, and it is not particularly good at cleaning up after itself. Second, it often does not report correctly after a reboot. The data is being saved, but sar does not report it. I believe you can use the time options on sar to get around this.

3.3 Crash analysis

You have captured the system crash and now want to look at it. There are two tools that ship with the operating system to do this, crash and adb. Neither is pretty, but crash is the more user friendly, which is not saying much. Crash at least has a help facility. Once again, grovelling around in the kernel is for the trained expert, and not much I can say will help here. There are some internal tools being developed to do lightweight crash dump analysis, but I am not sure when or if these will be available outside Sun. Generally, call your service provider and they should be able to analyse the core file and figure out why the machine failed. This can be a very time-consuming exercise, so please be patient. Digging through hundreds of threads to find which one holds the critical mutex can be very painful.

3.4 Streams Error reporting

Another useful tool that you can turn on is streams error reporting. To turn this on, create a directory /var/adm/streams and run the command strerr.
You can start this in the background at boot to get the machine to record the messages continually. While primarily useful for network problems, streams errors can highlight other system problems and can pick up the odd intermittent fault that has otherwise been missed. Run it, and have a look at what it produces.

3.5 SunSolve

SunSolve is a database of various system issues, including bug reports, patch information, symptoms and resolutions, and other useful documentation that Sun packages up for contract customers. There are two main distribution mechanisms for SunSolve: a CD-ROM, which is distributed once every 6 weeks, and the internet. Both mechanisms come with a fairly powerful search engine. If you have an error message you wish to check up on, SunSolve is a very good place to do the initial search to see what you can do about it, and whether you should be concerned.

3.6 Patches

Both SunSolve mechanisms also have full access to the Sun patch database, enabling you to install any patches indicated by SunSolve, or the current recommended patch cluster to bring your machine up to the current recommended revision of your operating system. Patching the machine to the latest recommended revisions is required before SunService can escalate a problem to Engineering for analysis and repair. If your machine does become unstable, I would generally recommend bringing the patches up to date in case the problem is known and a fix has already been released for it, which is often the case. The recommended and security patches are also available for anonymous download to all Sun customers from sunsolve1.sun.com. However, for legal reasons, access to the non-recommended patches is limited to machines covered under a maintenance contract.

A patch is a fix to a recognised problem or problems within the operating system or its attendant software. Patches are normally named with a 6-digit identifier code, followed by a 2-digit revision code. If you cd into the top level of a patch directory, you will see a README file detailing what issues the patch addresses, what special instructions need to be performed when installing the patch, and any other issues you need to be aware of. There will also be an installpatch script and a backoutpatch script. Note that backing out is only possible where the patch was installed without the -d option. The -d option stops installpatch from backing up the files it is patching, so it cannot restore those files if you wish to back out the patch. The -d option is chosen when you install the recommended patches if you elect not to save the original versions of the software. To check which patches are on the machine, you can use the command showrev -p on Solaris machines. Under SunOS, patches are installed manually, so you need to keep accurate records of what has been installed.
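Because patch identifiers carry a revision suffix, a common question is whether a given revision or later is installed. A sketch that reads showrev -p output on standard input, assuming each line starts with "Patch:" followed by an identifier of the form NNNNNN-RR; the function names and the patch ID in the usage comment are hypothetical examples.

```sh
#!/bin/sh
# installed_rev ID: print the highest installed revision of patch ID found
# in "showrev -p" output on stdin; print nothing if it is not installed.
installed_rev() {
    awk -v id="$1" '
        $1 == "Patch:" {
            split($2, p, "-")                  # "123456-05" -> 123456, 05
            if (p[1] == id && p[2] + 0 > best + 0) best = p[2]
        }
        END { if (best != "") print best }
    '
}

# patch_at_least ID REV: exit 0 if revision REV or later of patch ID is
# listed in the showrev -p output on stdin.
patch_at_least() {
    rev=$(installed_rev "$1")
    [ -n "$rev" ] && [ "$rev" -ge "$2" ]
}

# Usage (hypothetical patch ID):
#   showrev -p | patch_at_least 123456 5 || echo "need 123456-05 or later"
```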
Patching is currently managed by a wrapper over the package system, although this is likely to change with Solaris 2.6.

3.7 Package information

The system package database is stored in /var/sadm. Removing, moving or otherwise fiddling with this directory can be fatal to long-term system health, and at minimum will require you to do a full install rather than an upgrade when you next come to upgrade the operating system. If showrev core dumps on you, you will normally find that a core file has been dropped somewhere in the /var/sadm directories. It is safe to clean this up. Under Solaris, the whole operating system is managed by packages. You can see what packages are installed via pkginfo, and you can get detailed information on a package via pkginfo -l packagename. Sun packages almost always start with SUNW, which is our stock ticker symbol. I have no idea why they used this, in case anyone wants to know.

When you install patches, they install as packages whose names carry a numeric suffix added to the names of the main packages they update. You should be able to see these in pkginfo output.
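To spot those suffixed instances in a long pkginfo listing, you can filter on the second column. This is a sketch assuming the default pkginfo layout of category, instance name, then description, and assuming the suffix takes the form .N (SUNWcsu.2 below is an illustrative name); suffixed_instances is a hypothetical helper. Plain awk is enough here, since no -v is needed.

```sh
#!/bin/sh
# Sketch: print package instances whose name ends in a numeric suffix
# (e.g. SUNWcsu.2). Reads default "pkginfo" output on stdin, assumed to be
#   category  pkginst  description...
suffixed_instances() {
    # Column 2 is the instance name; keep it only if it ends in .<digits>.
    awk '$2 ~ /\.[0-9]+$/ { print $2 }'
}
```

Typical use is pkginfo | suffixed_instances, followed by pkginfo -l on any instance it prints to see its details.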

What can we do to improve reliability ?

4.0 Preventative Maintenance

Much of this section borders on what are religious issues for most system administrators. I will cover the least contentious issue first.

4.1 Turning on savecore

The savecore command is commented out in the default configuration of the operating system, primarily because of the mayhem that dumping a large file into /var can cause when the system administrator is not prepared for it. In the script /etc/init.d/sysetup, you will find the following block commented out:

    ## Default is to not do a savecore
    ##
    #if [ ! -d /var/crash/`uname -n` ]
    #then mkdir -m 0700 -p /var/crash/`uname -n`
    #fi
    # echo 'checking for crash dump...\c '
    #savecore /var/crash/`uname -n`
    # echo ''

When enabled, this block creates a directory for the image under /var/crash, named after the host, and has savecore write the dump into it. If you point savecore at a different file system, remember that this script runs before the non-core file systems are mounted, so you will probably need to mount that file system yourself before running savecore. If you do mount it here, turn off its automatic mount in /etc/vfstab.

4.2 File System Layout

Two major schools of thought come into play when discussing operating system file system layout: those who believe disk space is a problem and want to put everything in the root, and those who like to compartmentalise the operating system, putting everything into its own partition. There have been several major debates about this within Sun, and the general consensus is that a single partition is fine for workstations but not appropriate for servers. I prefer compartmentalisation, and I feel that any reasonably experienced system administrator should be able to allocate space where required in the first place. Also, disk is cheap these days. However, it really makes very little difference except for a select few file systems. The most important file systems to the operating system are root and /usr, whether as distinct partitions or combined.
These two file systems should generally be relatively static. The /var file system, on the other hand, is where the operating system does a lot of scribbling and file creation. In the event of a crash, /var will almost always need an fsck to clean it up before remounting. Since fsck can also be a liability, you do not want to run it on root or /usr if you can avoid it. It is therefore strongly advised that you keep /var, and any other volatile file systems, separate from the root and /usr partitions. This also makes the machine much quicker to recover in the event of major corruption, when you need to restore those two partitions.

The other separate partition you should have is swap. Although you can run the machine without a swap partition, or swap onto a file within a file system, you will not catch a crash dump in the event of failure, because the dump is written to the swap partition. This means you may never find out why the machine is crashing, so you may not get a fix.

4.3 Backup Strategies

Backups are obviously important. If you do not know this lesson already, you will learn it the same painful way every other Unix administrator has learned it. Almost as important as doing backups is the type of backup you use. tar and cpio are not adequate for backing up the root and /usr file systems. You need a backup that is guaranteed to recover the file system exactly as it was, including holey (sparse) files, device entries, directory sizes, etc., and tar and cpio cannot do this. For root and /usr, assuming they are ufs file systems, use ufsdump periodically. Even if you have the Solstice Backup product, aka NetWorker, you want to back these partitions up separately with ufsdump, and include the /opt partition as well. You need NetWorker installed to recover NetWorker backups, and the operating system CD does not include it, so you would have to build the operating system, then install NetWorker, then license and configure it before you could even begin to recover the machine. With ufsdump backups, you recover the root, /usr and /opt file systems from an in-memory version of Unix (for example, the installation CD booted single-user), run installboot, then boot the machine and recover the rest of the system.
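A periodic ufsdump run of root, /usr and /opt can be as simple as the sketch below. The tape device /dev/rmt/0n is an assumption (the trailing n selects no-rewind, so the three dumps follow one another on the same tape), as is the function name dump_core_fs. By default it only prints the commands so you can check them; set DRYRUN=no to actually run ufsdump.

```sh
#!/bin/sh
# Sketch: level 0 ufsdump of the core file systems to one tape.
# TAPE is an assumed no-rewind device; adjust for your site.
TAPE=${TAPE:-/dev/rmt/0n}
DRYRUN=${DRYRUN:-yes}   # "yes" = print the commands instead of running them

dump_core_fs() {
    for fs in "$@"; do
        # 0 = full dump, u = record it in /etc/dumpdates,
        # c = cartridge tape defaults, f = write to the device that follows
        if [ "$DRYRUN" = yes ]; then
            echo "ufsdump 0ucf $TAPE $fs"
        else
            ufsdump 0ucf "$TAPE" "$fs" || return 1
        fi
    done
}
```

Call it as dump_core_fs / /usr /opt and note the order of the dumps on the tape: when recovering, you will need to position the tape at the right dump (for example with mt -f /dev/rmt/0n fsf) before running ufsrestore.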
4.4 Metadevice Myths

A surprising number of people are confused about what mirroring does and why you use it. One particular confusion is whether you still need a backup of the root file system if it is mirrored. Mirroring duplicates a partition, including every change made to it. It is good for keeping the machine in service when one half of a mirror suffers a hardware failure, and for letting you back up a partition while it is still in use. What it does not protect against is user error or software failure. If someone deletes /etc/passwd on a mirrored root, it is guaranteed to be deleted from both sides of the mirror, leaving both sides equally useless. Because of various problems with maintaining a mirrored root file system, my view is that mirroring root could well be useful if your machine is mission critical, in the sense that it must provide service during certain hours. Otherwise, it is often a hindrance: you are much more likely to suffer a software failure, and mirroring can make that failure an order of magnitude more difficult to fix. This comes back to the old rule, which applies to computers as much as anything else: keep it simple.

Conclusion

There is a lot of complexity within the Solaris operating system, as there is in virtually all modern operating systems. Knowledge and planning are the keys to managing this complexity, especially in times of crisis. If you take the time to learn about your machine, talk to your users, observe your machine and do a little exploring, reading and thinking, you will have a less stressful working life. Of course, how you make time for this while you are running around dealing with the current disasters is the big question. Thanks for your time, and I hope you get some value from this course, and that you feel a bit more in control next time everything turns to jello and 400 users are ringing to find out why they can't surf the web ...

Potrebbero piacerti anche

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- Delphi Database Application Developers BookDocumento207 pagineDelphi Database Application Developers BookAditya Putra Ali Dasopang100% (1)

- SqlnotesDocumento19 pagineSqlnotesKarthee KartiNessuna valutazione finora

- Login SPRINGbootDocumento45 pagineLogin SPRINGbootLincolnNessuna valutazione finora

- XII - CS - Practical RecordDocumento35 pagineXII - CS - Practical RecordAnant Mathew SibyNessuna valutazione finora

- Firebird TuningDocumento60 pagineFirebird TuningHendri ArifinNessuna valutazione finora

- MastekDocumento77 pagineMastekmanish121Nessuna valutazione finora

- 5configuring Windows Server 2008 R2 File SharingDocumento12 pagine5configuring Windows Server 2008 R2 File SharingWilma Arenas MontesNessuna valutazione finora

- Computer FileDocumento9 pagineComputer FileParidhi AgrawalNessuna valutazione finora

- Pinnacle Solutions: Project ProposalDocumento4 paginePinnacle Solutions: Project Proposalmadhawar71% (7)

- Shankar Sahu-17Documento1 paginaShankar Sahu-17Vaibhav Moreshwar KulkarniNessuna valutazione finora

- Xii Cs BengaloreDocumento11 pagineXii Cs BengaloreajayanthielangoNessuna valutazione finora

- DBMS Module 1Documento7 pagineDBMS Module 1F07 Shravya GullodyNessuna valutazione finora

- Programming The Semantic WebDocumento32 pagineProgramming The Semantic Web69killerNessuna valutazione finora

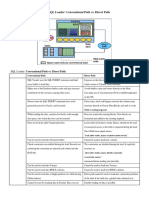

- Oracle SQL Loader - Conventional Path vs. Direct PathDocumento2 pagineOracle SQL Loader - Conventional Path vs. Direct PathFrancesHsiehNessuna valutazione finora

- Informatica: Process Control / Audit of Workflows in InformaticaDocumento7 pagineInformatica: Process Control / Audit of Workflows in Informaticaraj meNessuna valutazione finora

- SAP MDG (Master Data Governance) Online TutorialDocumento14 pagineSAP MDG (Master Data Governance) Online TutorialUday LNessuna valutazione finora

- Okc 115 TRMDocumento546 pagineOkc 115 TRMdavidapps12Nessuna valutazione finora

- Soal AWSDocumento16 pagineSoal AWSSaad Mohamed SaadNessuna valutazione finora

- Security of The DatabaseDocumento16 pagineSecurity of The DatabasePratik TamgadgeNessuna valutazione finora

- Cleanup SQL Backups in Azure StorageDocumento3 pagineCleanup SQL Backups in Azure StorageNathan SwiftNessuna valutazione finora

- FYP (Sugantha Kumaaran)Documento28 pagineFYP (Sugantha Kumaaran)Sugantha KumaaranNessuna valutazione finora

- Project Management SystemDocumento62 pagineProject Management SystemDevika PetiwalaNessuna valutazione finora

- Tibco Iprocess Tips and Troble ShootingDocumento8 pagineTibco Iprocess Tips and Troble Shootingsurya43Nessuna valutazione finora

- Lecture 9 - Database Normalization PDFDocumento52 pagineLecture 9 - Database Normalization PDFChia Wei HanNessuna valutazione finora

- Databases Part 1Documento41 pagineDatabases Part 1Sultan MahmoodNessuna valutazione finora

- Tera Data Vs OtherrdbmsDocumento4 pagineTera Data Vs OtherrdbmsrameshsamarlaNessuna valutazione finora

- ExamView - Quiz 1Documento6 pagineExamView - Quiz 1Amanya AllanNessuna valutazione finora

- Decision Table Data FoundationDocumento4 pagineDecision Table Data Foundationmajid khanNessuna valutazione finora

- Database Design Using The REA Data ModelDocumento18 pagineDatabase Design Using The REA Data ModelNigussie BerhanuNessuna valutazione finora

- Chris Olinger, D-Wise Technologies, Inc., Raleigh, NC Tim Weeks, SAS Institute, Inc., Cary, NCDocumento20 pagineChris Olinger, D-Wise Technologies, Inc., Raleigh, NC Tim Weeks, SAS Institute, Inc., Cary, NCクマー ヴィーンNessuna valutazione finora