Time-of-Flight Depth Sensor based 3D Imaging for Future Display

2011.12.12

Hyunjung Shim, Seungkyu Lee

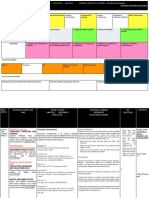

Course Schedule

Session I (8:30 am - 10:15 am)

Introduction to ToF Sensor Research

Principle of ToF Depth Sensor

Image Processing Algorithms for Depth Image Quality Improvement

Session II (10:30 am - 12:15 pm)

Color/Depth Image based 3D Capturing and Modeling

Lighting and Reflectance Extraction from Color/Depth Image: Inverse problem

Conclusion / Q & A


Depth Sensors

Organizations: Sharp, Canesta, MESA, Fraunhofer (& SIEMENS), ITC-irst, EPFL, MIT, 3DV Systems, NHK

Pixel technologies: external shutter, SPAD, photogate, photodiode

Range: 15 m; Resolution: 336×252; Accuracy: >23.5 @ 1 m

Range: 3 m; Resolution: 176×144; Accuracy: >30

Range: 7.5 m; Resolution: 176×144; Accuracy: 22 @ 3 m

Range: 8 m; Resolution: 64×4; Accuracy: >10

Range: 9 m; Resolution: 16×16; Accuracy: >90

Range: 4 m; Resolution: 128×128; Accuracy: 1.4 @ 1 m

Range: 12 m; Resolution: 32×32; Accuracy: >30

Range: 2.5 m; Resolution: 320×240; Accuracy: >20

Range: 10 m; Resolution: 1280×720; Accuracy: 25 @ 1 m

Depth Cameras

Figure: timeline of depth cameras, 2001 to 2008, grouped by sensing technology (APD, photogate, photodiode, intensifier).

EPFL: depth camera prototype

MIT: depth camera prototype

MESA: SR2-A, SR2-B, SR-3000, SR-4000

3DV Systems: Z-Cam™, Z-Mini™, DMC-100™, Z-Sense™

Canesta: DP200, DP300

Sharp: 3D CAM

NHK: Axi-Vision Camera, HDTV Axi-Vision Camera

Fraunhofer: TOF Camera

PMD Tech.: PMD[vision] 1k (64×16), PMD[vision] 19k (160×120)

ITC-irst: depth camera

ToF Depth in Published Papers

Figure: number of publications per year from IEEE Xplore, 2005 to 2011 (vertical axis 0 to 30), by topic: Calibration, Image Processing, 3D Capture/Reconstruction, Interaction, Recognition/Detection (system), and Overall.

ToF-CV Workshop (CVPR 2008)

Special issue on Time-of-Flight Camera Based Computer Vision (CVIU 2010)

Human Activity Recognition from 3D Data Workshop (CVPR 2011)

IEEE Workshop on Consumer Depth Cameras for Computer Vision (ICCV 2011)

Applications on YouTube

Skeleton Detection

Body motion recognition & Interaction

Pictorial body model fitting on the segmented foreground of depth image

Real-time application for 3D game

Applications on YouTube

3D Point Cloud

3D Scene Reconstruction from 3D Point Cloud

2D pixel of depth image is a 3D geometry point seen from camera view
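The statement that each depth pixel is a 3D point seen from the camera can be sketched with a pinhole back-projection. This is a minimal illustration, not the presenters' pipeline: the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values, and the depth image is assumed to store distance along the optical axis (many ToF cameras report radial distance instead, which would need an extra conversion).

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres along the optical axis) to an N x 3 point cloud."""
    h, w = depth.shape
    # u is the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)

# Hypothetical 4 x 6 depth image of a flat wall 2 m away.
depth = np.full((4, 6), 2.0)
points = depth_to_points(depth, fx=100.0, fy=100.0, cx=3.0, cy=2.0)
```

The pixel at the principal point `(cx, cy)` maps to a point on the optical axis, and every other pixel fans out in proportion to its depth.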

3D Imaging

Applications on YouTube

3D Body Scanning & Virtual fashion using multiple Kinects

(www.tc2.com)

3D reconstruction of real world objects

Depth camera calibration & Point Clouds merging

Space Interface for remote control by body gesture

Applications on YouTube

Intel Labs (http://ils.intel-research.net/projects/rgbd)

Virtual World

3D Modeling

3D Capturing UX (1/3)

3D DSC/Camcorder 3D UCC on VW

Racing

Boxing

Embedded

3D Camera

80

UD/3D Display

100Mbps

Network

Human Motion

Capture Sensor

VW

3D Capturing UX (2/3)

Action Based Game Virtual World on TV

Depth Camera

Video

Analysis

Video

Encoder

Broadband

Network

Event

Database

Video

Archive

Manager

Intelligent

Video

Security

Solution

Real-time

Alerts

Event Search/

Event Statistics


Real-time

Alert !!!

!!!

Motion Detection

Pedestrian

Detection

Alert

Depth Camera

Security Automotive

3D Capturing UX (3/3)


3D Sensing Technologies

Figure: depth resolution versus measurable depth for interferometry, triangulation, and time-of-flight. Axes: Depth (m), $10^{-6}$ to $10^{3}$; Resolution (m), $10^{-9}$ to $10^{0}$.

Application Example

2D image

Depth map

3D model

Multi-view generation

New contents generation for 3D display

Time of Flight Sensing

Measure the round-trip time of emitted IR light

Figure: a controller drives the IR emitter; light reflected from an object at range R reaches the detector, which produces the depth map.

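Distance Measurement

IR Modulation

Pulse Light

Timing diagram: gates TX0 and TX1, emitted light (pulse width $T_{on}$), reflected light (delayed by $T_{TOF}$); the gates collect $N_0/n$ and $N_1/n$ electrons per cycle.

$$N_0 = n\, i_{ir}\,(T_{on} - T_{TOF}), \qquad N_1 = n\, i_{ir}\, T_{TOF}$$

$$\frac{N_1}{N_0} = \frac{T_{TOF}}{T_{on} - T_{TOF}} \quad\Rightarrow\quad T_{TOF} = T_{on}\,\frac{N_1}{N_0 + N_1}$$

Sinusoidal Light

Timing diagram: gates TX0 and TX1, emitted light, reflected light (delayed by $T_{TOF}$, expressed as a phase angle); the gates collect $N_0/n$ and $N_1/n$ electrons per cycle.

$$N_0 = \int_{0}^{\pi} \left( a \sin(\theta - T_{TOF}) + a \right) d\theta = 2a\left( \cos T_{TOF} + \frac{\pi}{2} \right)$$

$$N_1 = \int_{\pi}^{2\pi} \left( a \sin(\theta - T_{TOF}) + a \right) d\theta = 2a\left( -\cos T_{TOF} + \frac{\pi}{2} \right)$$

$$\frac{N_1}{N_0} = \frac{\frac{\pi}{2} - \cos T_{TOF}}{\frac{\pi}{2} + \cos T_{TOF}}$$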
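For the pulsed case, the reflected pulse is split between the two gates, so the charge ratio gives the delay as $T_{TOF} = T_{on} N_1 / (N_0 + N_1)$ and the depth is half the round-trip distance. The sketch below only illustrates that arithmetic; the function name and the example numbers are hypothetical, and ambient light and noise are ignored.

```python
C = 299_792_458.0  # speed of light in m/s

def pulse_tof_depth(n0: float, n1: float, t_on: float) -> float:
    """Depth in metres from the two gate charges of a pulsed ToF pixel."""
    total = n0 + n1
    if total <= 0:
        raise ValueError("no signal collected")
    t_tof = t_on * n1 / total   # T_TOF = T_on * N1 / (N0 + N1)
    return 0.5 * C * t_tof      # halve: the light travels out and back

# Hypothetical pixel: 50 ns pulse, 80% of the charge in TX0 and 20% in TX1.
depth = pulse_tof_depth(n0=800.0, n1=200.0, t_on=50e-9)
```

With these numbers the delay is 10 ns, which corresponds to a depth of about 1.5 m.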

Course Schedule

Session I (8:30 am - 10:15 am)

Introduction to ToF Sensor Research

Principle of ToF Depth Sensor

Image Processing Algorithms for Depth Image Quality Improvement

1. Intrinsic parameter acquisition and non-linear calibration

2. Range ambiguity

3. Depth noise modeling and denoising

4. Superresolution

5. ToF Motion Blur

Demodulation-related Error

Caused by irregularity in the modulation process

use look-up table, B-Spline [1], and Polynomials [2]

Systematic Depth Errors

Figure 1. PMD, 1-4 m, B-Spline fitting [1]. Axes: Distance (m), Error (cm).

Figure 2. SR3100, 1-5 m, IT 2 ms - 32 ms, 6th-degree polynomial fitting [2]
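A polynomial correction of the distance-dependent error can be sketched as follows. The data here are synthetic (a made-up periodic error plus an offset), not measurements from [1] or [2]; only the idea of fitting a 6th-degree polynomial to the error and subtracting it is taken from the slide.

```python
import numpy as np

# Synthetic calibration data: ground-truth distances and distorted measurements.
true_d = np.linspace(1.0, 5.0, 41)
measured = true_d + 0.05 * np.sin(2 * np.pi * true_d / 1.875) + 0.02

# Fit the error as a 6th-degree polynomial of the measured distance.
coeffs = np.polyfit(measured, measured - true_d, deg=6)

def correct(d):
    """Subtract the fitted systematic error from measured distances."""
    return d - np.polyval(coeffs, d)

rms_raw = np.sqrt(np.mean((measured - true_d) ** 2))
rms_corrected = np.sqrt(np.mean((correct(measured) - true_d) ** 2))
```

A look-up table or B-spline would replace `np.polyfit` and `np.polyval` with a different interpolant, but the calibrate-then-subtract structure stays the same.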

Demodulation-related Error

use 4 stage depth calculation [1]

Systematic Depth Errors

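Timing diagram: emitted NIR ($A_0$, $T_0$), reflected NIR ($rA_0$, delayed by $t_d$), and Gate1 to Gate4 collecting the charges $Q_1$ to $Q_4$.

$Y_1 = nQ_1 - nQ_2$

$Y_2 = nQ_3 - nQ_4$

$$t_d = \arctan\left( \frac{Y_2}{Y_1} \right)$$

Signs of $Y_1$ and $Y_2$ as $t_d$ runs from 0 to $2T_0$:

0 to $T_0/2$: $Y_1 \ge 0$, $Y_2 \ge 0$

$T_0/2$ to $T_0$: $Y_1 < 0$, $Y_2 \ge 0$

$T_0$ to $3T_0/2$: $Y_1 < 0$, $Y_2 < 0$

$3T_0/2$ to $2T_0$: $Y_1 \ge 0$, $Y_2 < 0$

Figure 3. Four-phase depth calculation

Figure 4. Difference in depth calculation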
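The four-phase calculation can be sketched in a few lines. The quadrant handling by the signs of $Y_1$ and $Y_2$ is what `atan2` does internally. The conversion from phase to metres, $d = c\,\varphi / (4\pi f)$, is the standard continuous-wave relation and is an assumption here, as are the modulation frequency and the synthetic charges.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def four_phase_depth(q1, q2, q3, q4, f_mod):
    """Depth in metres from four gate charges; f_mod is the modulation frequency in Hz."""
    y1 = q1 - q2
    y2 = q3 - q4
    # atan2 picks the quadrant from the signs of y1 and y2; wrap into [0, 2*pi).
    phase = math.atan2(y2, y1) % (2 * math.pi)
    return C * phase / (4 * math.pi * f_mod)

# Synthetic charges for a phase delay of pi/2 at a hypothetical 20 MHz modulation.
phi = math.pi / 2
q = (1 + 0.5 * math.cos(phi), 1 - 0.5 * math.cos(phi),
     1 + 0.5 * math.sin(phi), 1 - 0.5 * math.sin(phi))
depth = four_phase_depth(*q, f_mod=20e6)
```

Because only the differences $Q_1 - Q_2$ and $Q_3 - Q_4$ enter, a constant offset such as ambient light cancels out of the phase.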

Integration time-related Error

The number of electrons collected during the integration time varies, which worsens measurement repeatability

Systematic Depth Errors

Figure 5. Colored 3D point cloud of a flat wall at a constant distance of 1 meter [2]. Panels: IT 2 ms, IT 4 ms, IT 8 ms. Axes: X (m), Z (m).

Pixel-related Error

Due to the different material properties of CMOS-gate and

amplitude-related error

use a Fixed Pattern Noise table

Systematic Depth Errors

Figure 6. Fixed pattern noise, SR-2. IT 100 ms, nominal distance 2.452 m [3]

Figure 7. Depth-colored amplitude-related errors. Depth image of a flat wall at 0.43 meters (color scale 0.41 to 0.45 m). Depth overestimation can be observed due to low illumination (borders of the image) [2].

Amplitude-related Error

Due to the non-uniformity of IR illumination and reflectivity

variation of objects

use a polynomial fitting model

Systematic Depth Errors

Figure 8. Amplitude image of a planar object with a ramp image. Parts of the ramp are selected for calibration (blue rectangle). Axes: x (pixel), y (pixel).

Figure 9. The depth samples (blue) and the fitted model (green) to the error. Axes: Amplitude, Error (m).

Amplitude Correction

Light attenuates according to the inverse-square law

Systematic Depth Errors

Figure 20. Distance-based intensity correction [18]
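Distance-based intensity correction follows directly from the inverse-square law: scaling each amplitude by the squared depth removes the falloff. The sketch below assumes a point-like emitter and ignores incidence angle and lens vignetting; the function name and `ref_depth` parameter are hypothetical.

```python
import numpy as np

def normalize_amplitude(amplitude, depth, ref_depth=1.0):
    """Rescale IR amplitude to what it would be at ref_depth (inverse-square law)."""
    return amplitude * (depth / ref_depth) ** 2

# Synthetic check: a surface of constant reflectivity seen at several depths.
depth = np.array([0.5, 1.0, 2.0, 4.0])
amplitude = 100.0 / depth ** 2
corrected = normalize_amplitude(amplitude, depth)
```

After correction the amplitude depends only on reflectivity, which makes it usable as an intensity image.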

Temperature-related Error

Due to the response of the semiconductor to temperature

change

Wait at least 4 min after power-on

Systematic Depth Errors

Figure 10. Distance offset drift (fixed target) caused by self-induced heating of the sensor. SR-2 [3]. Axes: Time (min), 0 to 70; Error (m), 0.3 to 0.45.

Light Scattering

Multiple light reflections between the lens and the sensor

Use scattering model [4] or anti-reflection material on lens

Non-systematic Depth Errors

Figure 11. Light scattering in TOF camera [4]

Figure 12. Scattering artifacts. (a) Color image (b) Background range image (c) Range image with foreground (d) Range image difference [4]

Multiple Light Reception

Due to interference of multiple light reflections

Use multipath interference model [5]

Non-systematic Depth Errors

Figure 13. Multipath interference (IR LED, sensor)

Figure 14. Top view of the corner. Green points: laser scanner. Red points: ToF camera with multipath interference (MPI), RMSE 57 mm. Black points: after MPI compensation, RMSE 17 mm [5]

Jump Edge Error

Due to multiple light reception

Use outlier rejection method [6][7]

Non-systematic Depth Errors

Figure 15. Jump Edge Error

Motion Artifact (Blur)

Due to the movement during integration time

Detect pixels with phase deviation [14]

Non-systematic Depth Errors

Figure 16. Motion blurring

Figure 17. Motion artifact [14]. Panels: dynamic case, static case. Axes: consecutive positions, Distance (m).

Depth Folding (Phase Wrapping, Range Folding)

Due to the modular error in phase delay

Use multiple frequencies [17] or MRF with continuous

surface assumption [8]

Non-systematic Depth Errors

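Figure 18. Depth folding. The emitted signal $\sin(2\pi f t)$ returns as the incoming signal $A \sin(2\pi f t - \varphi)$; ranges $R$, $R + R_{max}$, and $R + 2R_{max}$ produce the same phase $\varphi$ (modulo $2\pi$).

Figure 19. Depth unfolding using multiple frequencies [17]

Figure 20. Depth unfolding [8]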
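Unfolding with two modulation frequencies can be sketched as a search for the pair of wrap counts whose candidate ranges agree. This brute-force version is only an illustration, not the method of [17]; it assumes the standard unambiguous range $R_{max} = c / (2f)$, and the frequencies and scene distance are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s

def unwrap_two_freq(d1, d2, f1, f2, max_range):
    """Resolve folded depths d1 (at f1) and d2 (at f2) into one unfolded depth."""
    r1, r2 = C / (2 * f1), C / (2 * f2)          # unambiguous range per frequency
    k1 = np.arange(int(np.ceil(max_range / r1)) + 1)
    k2 = np.arange(int(np.ceil(max_range / r2)) + 1)
    cand1 = d1 + k1 * r1                          # every depth consistent with d1
    cand2 = d2 + k2 * r2                          # every depth consistent with d2
    diff = np.abs(cand1[:, None] - cand2[None, :])
    i, j = np.unravel_index(np.argmin(diff), diff.shape)
    return 0.5 * (cand1[i] + cand2[j])

# Hypothetical scene point at 11 m, beyond the ~7.5 m range of 20 MHz alone.
f1, f2 = 20e6, 15e6
true_depth = 11.0
d1 = true_depth % (C / (2 * f1))
d2 = true_depth % (C / (2 * f2))
estimate = unwrap_two_freq(d1, d2, f1, f2, max_range=25.0)
```

With noisy measurements the minimum difference is no longer exactly zero, so the choice of frequencies determines how robust the match is.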

No unified solution for handling all the errors!

Correct FPN and distance offset first

Use amplitude and color information (if available)

Use constant integration time

Open research issues are here!

Depth range ambiguity, multiple light reception effect,

motion artifact in the complex scenes

Comments on Depth Measurement Errors

Bilateral Filter

uses weighted average of depth values from nearby pixels

Depth Noise Reduction

Figure 21. Bilateral filter kernel [9]

Figure 22. Bilateral filtering result
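A bilateral filter for a depth map can be sketched directly from its definition: each output pixel is a weighted average of its neighbors, with one weight for spatial distance and one for depth difference. This is a plain NumPy illustration under assumed parameter values (`radius`, `sigma_s`, `sigma_r` are hypothetical), not the implementation of [9].

```python
import numpy as np

def bilateral_filter(depth, radius=2, sigma_s=1.5, sigma_r=0.05):
    """Edge-preserving smoothing of a float depth image (sigma_r is in depth units)."""
    h, w = depth.shape
    padded = np.pad(depth, radius, mode="edge")
    acc = np.zeros((h, w))
    norm = np.zeros((h, w))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = padded[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
            w_spatial = np.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
            w_range = np.exp(-((shifted - depth) ** 2) / (2 * sigma_r ** 2))
            acc += w_spatial * w_range * shifted
            norm += w_spatial * w_range
    return acc / norm

# Synthetic step edge (1 m to 2 m) with 1 cm Gaussian noise.
rng = np.random.default_rng(0)
clean = np.where(np.arange(16)[None, :] < 8, 1.0, 2.0) * np.ones((16, 1))
noisy = clean + rng.normal(scale=0.01, size=clean.shape)
smoothed = bilateral_filter(noisy)
```

Because the range weight collapses across the 1 m step, noise is averaged out on each side without blending the two surfaces.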

Non-Local Means Filter

uses weighted average of all pixels using the similarity of

squared neighborhoods

Depth Noise Reduction

Figure 23. Non-local denoising result [7]

Learning-based Method

addresses depth discontinuity or low infrared reflectivity

uses a Random Forest regressor trained with real-world data [6]

Depth Noise Reduction

Figure 24. Flying pixels due to

unsuitable reflectivity and large

depth discontinuities [6]

Figure 25. Artifact pixels [6]

Joint Bilateral Filter-based Method

refines depth values using color similarity [15]

Depth Super-resolution

(a) Color image 640x640 (b) Input depth map 64x64 (c) Refined depth maps 640x640 [15]

Markov Random Field-based Method

assumes that discontinuities in depth and color coexist

Depth Super-resolution

Figure 27. MRF-based depth super-resolution result [12]

Multiframe-based Method

models the image formation process from multiple depth

images

Depth Super-resolution

Figure 28. super-resolution result [11]

Filtering-based Method

smoothens the disparity map to hide occluded regions.

Novel View Synthesis

Figure 29. Depth map smoothing [17] Figure 30. Depth map smoothing [18]

Inpainting-based Method

fills the disoccluded region using image-inpainting

techniques.

Novel View Synthesis

Figure 27. Bi-layer inpainting [15]. Pipeline: image warping, occlusion boundary labeling, foreground/background segmentation, exemplar-based inpainting [19]. Panels: original image, warped disparity map, labeled occlusion boundary, segmented region (foreground region, background region, region to be inpainted), inpainted result.

ToF Motion Blur

A moving camera or object causes wrong depth calculation

Motion blur

Image sensor

Moving Object

Moving Object

ToF Motion Blur

The characteristics of ToF motion blur differ from those of color motion blur

Overshoot blur

Undershoot blur

4-Phase signals inside ToF camera

Timing diagram: radiated IR, reflected IR, and the four integration windows $Q_1$, $Q_2$, $Q_3$, $Q_4$ over the integration time.

Depth calculation using the relation of 4-Phase signals

$$t_d = \arctan\left( \frac{nQ_3 - nQ_4}{nQ_1 - nQ_2} \right)$$

ToF Motion Blur

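ToF motion blur model

Without motion, the phase delay follows from the four charges accumulated over $n$ cycles:

$$t_d = \arctan\left( \frac{nQ_3 - nQ_4}{nQ_1 - nQ_2} \right)$$

If the observed depth changes at cycle $m$ while $Q_1$ and $Q_2$ are being integrated, with $Q_k$ and $\hat{Q}_k$ the per-cycle charges before and after the change:

$$t_d(m) = \arctan\left( \frac{n\hat{Q}_3 - n\hat{Q}_4}{\left( mQ_1 + (n-m)\hat{Q}_1 \right) - \left( mQ_2 + (n-m)\hat{Q}_2 \right)} \right)$$

Writing $t_d(m) = \arctan x(m)$:

$$x(m) = \frac{n\hat{Q}_3 - n\hat{Q}_4}{m\left( Q_1 - \hat{Q}_1 - Q_2 + \hat{Q}_2 \right) + n\left( \hat{Q}_1 - \hat{Q}_2 \right)}, \qquad \frac{d\,t_d}{dx} = \frac{1}{1 + x(m)^2}$$

The denominator of $x(m)$ vanishes at

$$m = n\,\frac{\hat{Q}_2 - \hat{Q}_1}{Q_1 - \hat{Q}_1 - Q_2 + \hat{Q}_2}$$

ToF Motion Blur

With normalized charges ($Q_1 + Q_2 = 1$, $\hat{Q}_1 + \hat{Q}_2 = 1$):

$$m = n\,\frac{\hat{Q}_2 - \hat{Q}_1}{Q_1 - \hat{Q}_1 - Q_2 + \hat{Q}_2} = n\,\frac{1 - 2\hat{Q}_1}{2Q_1 - 2\hat{Q}_1}$$

This cycle falls inside the integration period when

$$0 < \frac{1 - 2\hat{Q}_1}{2Q_1 - 2\hat{Q}_1} < 1$$

Figure: region of normalized $Q_1$ versus normalized $\hat{Q}_1$ (both from 0 to 1) that satisfies the condition.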
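The blur model can be explored numerically. The sketch below assumes the reading used above: the depth changes after `m` of the `n` cycles while $Q_1$ and $Q_2$ are integrated, `q` holds the per-cycle charges before the change and `q_hat` those after it. The hat notation, function names, and example charges are all assumptions made for illustration.

```python
import math

def blurred_phase(m, n, q, q_hat):
    """Phase t_d(m) when the scene changes after m of the n integration cycles."""
    q1, q2 = q[0], q[1]
    h1, h2, h3, h4 = q_hat
    num = n * h3 - n * h4
    den = (m * q1 + (n - m) * h1) - (m * q2 + (n - m) * h2)
    if den == 0:
        return math.copysign(math.pi / 2, num)  # arctan saturates at the singular cycle
    return math.atan(num / den)

def singular_cycle(n, q, q_hat):
    """Cycle m at which the denominator of the arctan argument vanishes."""
    q1, q2 = q[0], q[1]
    h1, h2 = q_hat[0], q_hat[1]
    return n * (h2 - h1) / (q1 - h1 - q2 + h2)

# Hypothetical normalized charges (Q1 + Q2 = 1 before and after the change).
n = 1000
q = (0.8, 0.2, 0.5, 0.5)
q_hat = (0.3, 0.7, 0.6, 0.4)
m_star = singular_cycle(n, q, q_hat)
phase_no_blur = blurred_phase(0, n, q, q_hat)
```

Near `m_star` the arctan argument changes sign and grows without bound, which is one way to see why the blurred depth can overshoot or undershoot instead of interpolating between the two surfaces.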

ToF Motion Blur

How can ToF motion blur be detected or removed?

- Hardware modification

- Image processing methods using a blur model

US7450220, Canesta, "Method and system to correct motion blur and reduce signal transients in time-of-flight sensor systems"

S. Hussmann et al., "Real-Time Motion Artifact Suppression in TOF Camera Systems," IEEE Trans. on Instrumentation and Measurement, 2011

O. Lottner et al., "Movement artefacts in range images of time-of-flight cameras," Int. Symposium on Signals, Circuits and Systems, 2007

M. Lindner and A. Kolb, "Compensation of motion artifacts for time of flight cameras," Dynamic 3D Vision Workshop, 2009

[1] New Insights into the Calibration of ToF-Sensors, CVPRW08

[2] Lock-in Time-of-Flight (ToF) Cameras: A Survey, SJ11

[3] Calibration for Increased Accuracy of the Range Image Camera Swissranger, ISPRS06

[4] Real-time scattering compensation for time-of-flight camera, CVS07

[5] Multipath Interference Compensation in Time-of-Flight Camera Images, ICPR10

[6] Capturing Time-of-Flight Data with Confidence, CVPR11

[7] Robust Non-Local Denoising of Colored Depth Data, CVPRW08

[8] Range unfolding for time-of-flight depth cameras, ICIP10

[9] Bilateral Filtering for Gray and Color Images, ICCV98

[10] Spatial-Depth Super Resolution for Range Images, CVPR07

[11] High-quality Scanning using Time-Of-Flight Depth Superresolution, CVPRW08

[12] An Application of Markov Random Fields to Range Sensing, NIPS05

[13] Stereoscopic Image Generation Based on Depth Images for 3D TV, TBC05

[14] Movement Artefacts in Range Images of Time-of-Flight Cameras, ISSCS07

[15] Bi-layer inpainting for novel view synthesis, ICIP11

[16] Discontinuity-adaptive Depth Map Filtering for 3D View Generation

[17] A Time-Of-Flight Depth Sensor System Description, Issues and Solutions, CVPRW04

[18] Measurements with ToF Cameras and Their Necessary Corrections, ISSCS07

[19] Region Filling and Object Removal by Exemplar-Based Image Inpainting, TIP04

[20] Extrinsic and Depth Calibration of ToF-cameras, CVPR08

References

Time-of-Flight Depth Sensor based 3D Imaging for Future Display

Session II

Geometry, Material, Lighting for Realistic Synthesis


Camera Array based 3D Modeling (CMU)

- Synchronized 49 Cameras

(9 on the ceiling + 10 on each wall)

- Video format: S-video, YCbCr 422, 640x480

- One control PC + 17 digitizing PCs

- Real time 3D Video/Audio Capturing

- Arbitrary view synthesis

Input Color

3D Voxel Model

View Synthesis


Camera/Lens Array

- Angular/spatial resolution trade-off problem

- Camera size and usability problem

Camera Array, Stanford Univ.

Plenoptic Camera, Adobe

Integral Photography

2D projection image set capturing (4D LF → 2D image set)

3D Volume → Surface (6D ($\theta$, $\varphi$, x, y, z, $\lambda$) LF → 4D (X, Y, x, y) LF)

Lens Array Camera

Conventional

Color Camera

Lens Array

Camera

Multiview from Lens Array


Multiview Display

Integral Photography

Display

Color + Depth Camera Set (HHI)

- Heterogeneous Camera Set (Color + Depth Camera)

- Layered Depth Video (LDV) format creation

Bilateral Filtering

4Color + 1Depth Color Input

Depth Warping

Layered Depth Video

Occlusion Map

Depth based view synthesis

CGH from Multi-view / Color+Depth

NICT, Japan, 2008

Hokkaido University, Japan, 2007

3D Reconstruction from Color+Depth

- Color + Depth is one potential future 3D Camera

Multi-view Display

Integral Imaging Display

Holography Display

Examples

http://lightfield.stanford.edu/aperture.swf?lightfield=data/amethyst_lf/preview.zip&zoom=1

Color Discontinuity

Mixed Camera-Systems

ToF/RGB Geometric Alignment

Camera Calibration

Reprojection Results

Full Four-System Configuration

Multi-System Alignment

Segmented Figure

Rendered Figure & Background

Reprojected Figure-Mesh


Considering the usability, we anticipate that the 3D sensing

architecture with scene analysis and synthesis algorithm will

be the most probable solution. Inverse rendering!

Realistic Rendering in Computer Graphics

3D Imaging for Future Display

Autostereoscopic Display

Stereoscopic Display

Stereo Camera

?

Geometry,

Reflectance,

Lighting

Rendering

multiviews

Scene representation for realistic visualization

Realistic Rendering in Computer Graphics

Inverse Problem

Photographs

Forward

Rendering

Inverse

Rendering

This slide is adapted from Koppal and Narasimhan

Geometry

Lighting

Camera

Materials

Elements for realistic scene visualization

Realistic Rendering in Computer Graphics

Inverse Problem

Geometry

Material

Lighting

Rendering

This slide is adapted from Xin Tong's slide for material modeling, originally from Ravi Ramamoorthi.

70's, 80's: Splines

90's: Range Data

10's: ToF Depth Sensing


Considering depth sensors for geometry modeling, we focus

on the inverse lighting and inverse reflectometry problems.


The inverse problem is very challenging.

Materials exhibit complex characteristics, represented by high-dimensional functions.

It is hard to factorize the lighting and reflectance simultaneously (ill-posed).

Existing approaches often make assumptions about the surface characteristics or illumination conditions to reduce the unknowns.

Realistic Rendering in Computer Graphics

Challenges

When dealing with real scenes, we need to account for the noise of the geometric model.

Existing approaches assume an ideal, high-resolution geometric model, while geometric models from real measurements suffer from noise and poor resolution.

Realistic Rendering in Computer Graphics

Challenges

Geometry

Material

Lighting

Rendering

Inverse Reflectometry

Material Model

BSSRDF (8D)

BTF (6D)

BRDF (4D)

Homogeneous BSSRDF (5D)

Texture (2D)

Spatially Varying BRDF (6D)

Lambertian (1D)

Q: Given limited resources, how far can we go?

Axis: simple materials to complex materials

Inverse Reflectometry

# of Inputs

Few Many

Debevec et al. 01, 04

Ramamoorthi and Hanrahan 01 Boivin and Gagalowicz 01

(# of Inputs)

Georghiades 03

Hara et al. 05 Nishino et al. 01, 05

Mahajan et al. 08 Yu et al. 97 Zickler et al. 05

Gardner et al. 03 Mcmillan et al. 03

Munoz et al. 11

Weyrich et al. 09 Dong et al. 10

Hullin et al. 10 Tong et al. 05

Vertical axis: simple materials to complex materials

Jensen 01

# of Inputs determines the possible applications

Few inputs : Suitable for simple materials, dynamic scenes, consumer

electronics (CE) devices etc.

Many : Suitable for complex materials, static scenes, movie/scientific

applications etc.

Recent research trends move from many to few inputs,

from simple to complex materials.

Inverse Reflectometry

Many Inputs

Capturing many photographs of real scenes (>1000) for

acquiring the reflectance of real scene

Possible to model the real scene and to achieve the photorealism

Applied for movie special effects (e.g. Light Stage)

Impractical for general users (Not suitable for CE)

Inverse Reflectometry

Many Inputs

Inverse Reflectometry

Few Inputs

Photographs

Geometric model

Inverse

Rendering

Algorithm

Lighting

BRDF

Reflectance

Inputs

Approximating the material parameters from a sparse set

of photographs taken under uncontrolled environment

Attractive to practitioners and general users

Quite challenging problems due to too many unknowns

Limited to homogeneous surfaces or simple materials

Inverse Reflectometry

Few Inputs

Input Photographs

View Synthesis Illumination Synthesis

Light probe for inverse lighting

Recovering high dynamic range imaging

Matte, mirror light probes

Assuming a class object (e.g. eye, face etc.), estimating

the lighting distributions

Inverse Lighting

# of Inputs

Few Many

Ramamoorthi and Hanrahan 01

Boivin and Gagalowicz 01

(# of Inputs)

Georghiades 03

Nishino and Nayar 05, 06

Mahajan et al. 08

Yu et al. 97

Zickler et al. 05

Vertical axis: specific scene to general scene

Debevec 98,01, 02

Wang and Samaras 02

Ramamoorthi et al. 01

Okabe et al. 04

Nishino et al. 01

Li et al. 09

Unger et al.03

Cohen et al. 01

Corsini et al. 08

Inverse Lighting

General Scenes

Various light probes/light goniometrics are employed to

measure the lighting

Impractical for general users (need to install the light probes while

capturing the scenes)

Shadows are another useful key for estimating the lighting

distribution

Performance will depend on the shadow detection algorithm

Inverse Lighting

General Scenes

A class-object model is assumed.

Performance depends on the object detection/segmentation etc.

Lighting can be recovered up to the reflected light.

Inverse Lighting

Specific Scenes

Inverse Problem In Practice

Inverse problem using a color/depth camera

A Color/Depth camera ready for the market

Applying the inverse rendering algorithm onto such a device to reveal the

appearance of scenes

Few inputs

Compact parametric representation for materials

Complex materials and global illumination

Diffuse (Texture), glossy, specular (Direct illumination)

Translucency, subsurface scattering (Indirect illumination)

Lack in details

Geometry enhancement ( motivated by super-resolution )

Semantic approach

Noisy inputs

Accounting for noise models during inference

Robust algorithm ( motivated by photometric stereo)

Inverse Problem In Practice

+

Few inputs

Compact parametric representation for materials

Noisy inputs

Accounting for noise models during inference

Robust algorithm ( motivated by photometric stereo)

Lack in details

Geometry enhancement ( motivated by super-resolution )

Semantic approach

Complex materials and global illumination

Diffuse, glossy, specular (Direct illumination)

Translucency, subsurface scattering (Indirect illumination)

Inverse Problem In Practice

Inverse Reflectometry with Few Inputs

[Homogeneous BRDF]

Given the geometry and few input images, solving the

inverse problem

Recovering both reflectance as well as lighting

Assumptions: Known geometry, Distant illumination, Homogenous isotropic materials,

No shadows and interreflection

Ramamoorthi and Hanrahan [01] employed a signal processing framework to formulate forward and inverse rendering

Diagram: Light (input signal) ⊗ BRDF (system) = Image (output signal)

Inverse Reflectometry with Few Inputs

[Homogeneous BRDF]

Reflection as convolution

Global coordinate = local coordinate:

$$B(\theta_o) = \int_{-\pi/2}^{\pi/2} L(\theta_i)\,\rho(\theta_i, \theta_o)\, d\theta_i$$

with lighting $L$, BRDF $\rho$, and reflected light field $B$.

Global coordinate ≠ local coordinate, where $\alpha$ is the local surface orientation and primes mark local angles:

$$B(\alpha, \theta_o') = \int_{-\pi/2}^{\pi/2} L(\theta_i)\,\rho(\theta_i', \theta_o')\, d\theta_i' = \int_{-\pi/2}^{\pi/2} L(\alpha + \theta_i')\,\rho(\theta_i', \theta_o')\, d\theta_i'$$

Linear Shift Invariant System

Inverse Reflectometry with Few Inputs

[Homogeneous BRDF]

Reflection as convolution

Analyzing well-posed conditions for inverse

lighting/reflectometry.

High frequency in BRDF (Sharp highlights) Well-posed in inverse lighting

High frequency in Lighting distribution (Sharp features like point lights, edges, etc.)

Well posed in inverse reflectometry

$$B(\alpha, \theta_o) = \int_{-\pi/2}^{\pi/2} L(\alpha + \theta_i)\,\rho(\theta_i, \theta_o)\, d\theta_i$$

with lighting $L$, BRDF $\rho$, and reflected light field $B$.

LSI system with a convolution operator: $B = L \otimes \rho$

In the frequency domain: $B_{l,p} = \Lambda_l\, L_l\, \rho_{l,p}$

Inverse Reflectometry with Few Inputs

[Homogeneous BRDF]

Applying a nested algorithm for estimating unknown

lighting/BRDF

Photograph

Rendered

Photograph

Rendered

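Inverse lighting:

$$L_l = \frac{1}{\Lambda_l}\,\frac{B_{l,p}}{\rho_{l,p}}$$

Inverse BRDF:

$$\rho_{l,p} = \frac{1}{\Lambda_l}\,\frac{B_{l,p}}{L_l}$$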
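The frequency-domain division behind inverse lighting can be illustrated with a 1D circular analogue of the reflection integral, $B(\alpha) = \sum_\theta L(\alpha + \theta)\,\rho(\theta)$. This is a toy sketch with a discrete Fourier transform in place of the spherical-harmonic coefficients used by Ramamoorthi and Hanrahan, so the normalization constant $\Lambda_l$ does not appear; the lighting and BRDF shapes are hypothetical.

```python
import numpy as np

N = 64
theta = 2 * np.pi * np.arange(N) / N

# Hypothetical lighting: a smooth lobe around 1 rad.
light = np.exp(4.0 * np.cos(theta - 1.0))

# Hypothetical BRDF: a sharp lobe, so all of its Fourier coefficients are non-negligible.
dist = np.minimum(theta, 2 * np.pi - theta)
brdf = np.exp(-0.5 * (dist / 0.1) ** 2)

# Forward rendering by direct summation: B[a] = sum_t L[a + t] * rho[t].
reflected = np.array([np.sum(np.roll(light, -a) * brdf) for a in range(N)])

# Inverse lighting: divide coefficient by coefficient.
# For this correlation form, FFT(B) = FFT(L) * conj(FFT(rho)).
light_hat = np.fft.fft(reflected) / np.conj(np.fft.fft(brdf))
recovered = np.fft.ifft(light_hat).real
```

A broad, diffuse BRDF has high-frequency coefficients near zero, so the same division would amplify noise. That matches the observation that sharp highlights make inverse lighting well-posed.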

Inverse Reflectometry with Few Inputs

[Homogeneous BSSRDF]

Estimating the BSSRDF from a single image [Munoz 11]

Assumptions: optically thick materials, dipole diffusion, a fixed index of refraction

After all simplifications, the unknown is the diffuse reflectance function $R_d$

Dipole diffusion approximation:

$$B(x_o, \theta_o) = F_t(\eta, \theta_o) \int_A R_d(x_o, x_i)\, E(x_i)\, dA_{x_i}$$

Sum of front & back irradiance maps: the surface integral is approximated by a sum over samples $j$ of front ($f$) and back ($b$) contributions, each of the form $R_d(r)\, E\, \Delta A$.

Inverse Reflectometry with Few Inputs

[Homogeneous BSSRDF]

Estimating a diffuse reflectance function

Supposing that the lighting and shape are given, the front/back irradiance maps can be computed

$R_d$ is expressed as a linear combination of piecewise-constant basis functions $e_j$:

$$R_d(r) \approx \sum_{j=1}^{J} c_j\, e_j(r)$$

The coefficients $c_j$ are computed by solving a linear system $Ax = b$, where each entry $a_{ij}$ sums the front and back kernel terms $K_f\, e_j(r_f)$ and $K_b\, e_j(r_b)$ over $k$.

Inverse Reflectometry with Few Inputs

[Homogeneous BSSRDF]

Given the coefficients of the diffuse reflectance function, it is possible to render the target materials under varying illumination and geometric conditions.

Inverse Lighting with Few Inputs

[General scene]

Capturing an incident light field using a mirror-like light probe

0 stop -3.5 stop -7 stop

Mirror light probe

Generating HDR map for

incident lighting

Response function

[Debevec 97]

Multiple images with varying exposure time for HDR recovery

Capturing high frequencies in light distribution
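Merging differently exposed images of the light probe into an HDR map can be sketched as a weighted average of per-exposure radiance estimates. This simplified version assumes a linear camera response and pixel values normalized to [0, 1]; the method of [Debevec 97] additionally recovers the response function, which is skipped here. The exposure times mirror the 0, -3.5, and -7 stop example, and the radiance values are made up.

```python
import numpy as np

def merge_hdr(images, exposure_times):
    """Weighted average of radiance estimates img / t, assuming a linear response."""
    num = np.zeros_like(images[0], dtype=float)
    den = np.zeros_like(images[0], dtype=float)
    for img, t in zip(images, exposure_times):
        weight = 1.0 - np.abs(2.0 * img - 1.0)  # trust mid-range pixels, ignore 0 and 1
        num += weight * img / t
        den += weight
    return num / np.maximum(den, 1e-8)

# Synthetic scene radiances spanning three orders of magnitude.
radiance = np.array([0.05, 0.5, 5.0, 50.0])
times = [1.0, 2.0 ** -3.5, 2.0 ** -7]
images = [np.clip(radiance * t, 0.0, 1.0) for t in times]
hdr = merge_hdr(images, times)
```

Saturated pixels get zero weight, so the brightest light sources are taken from the shortest exposure only.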

Inverse Lighting with Few Inputs

[General scene]

Capturing an incident light field using a matte light probe

[Wang and Samaras 02]

Input

Detecting critical

boundaries

Reconstructed

A set of points

perpendicular to the light

direction

A single image with Lambertian object

Low frequencies in light distribution

Inverse Lighting with Few Inputs

[General scene]

Capturing an incident light field using a planar light probe

[Alldrin and Kriegman 06]

A single image with a three-layered light probe

Low frequencies and some high frequencies in light distribution

Top Pattern: Sinusoidal printed on translucent sheet

Medium: Glass (0.096 in, Refractive index 1.52)

Bottom: Sinusoidal printed on Lambertian sheet

Inverse Lighting with Few Inputs

[General scene]

Capturing an incident light field from shadows [Sato et al. 02]

Input

Regenerating shadows

Inverse Lighting with Few Inputs

[General scene]

Lighting distribution over the hemisphere

Estimating the light distribution

Inverse Lighting with Few Inputs

[Specific scene]

Estimating an incident light field from a class-object

Faces, eyes, etc.

R=7.6mm

Anatomical model for human eye

Gaze detection

Inverse Lighting with Few Inputs

[Specific scene]

Estimating an incident light field from a class-object

Faces, eyes, etc.

Extracting an environment map (incident light)

From many to few inputs

Compact parametric representation for materials

Complex materials and global illumination

Diffuse (Texture), glossy, specular (Direct illumination)

Translucency, subsurface scattering (Indirect illumination)

Lack in details

Geometry enhancement ( motivated by super-resolution )

Semantic approach

Noisy inputs

Accounting for noise models during inference

Robust algorithm ( motivated by photometric stereo)

Problem Definition - Reminder

Inverse Problem with Complex Illumination

Estimating both the lighting and reflectance from a single

image

Lambertian surfaces to Non-Lambertian surfaces

Recent study extends the work to recover a semiparametric reflectance

model, more general than empirical models [Chandraker and Ramamoorthi 11]

Necessary to recover the texture

Photograph Rendered

Related work : Ramamoorthi and Hanrahan [01]

Existing work derived the theoretical analysis of

inverse problem, factorizing the reflectance and

lighting from a single image

Inverse Problem with Complex Illumination

[General BRDF]

General BRDF accounting for a variety of materials

Proposes a semiparametric reflectance model, more general than empirical models, as a sum of univariate functions:

$$\rho(n) = \sum_{i=1}^{K} f_i(\alpha_i^T n)$$

They proved that a 2-lobe BRDF can be uniquely identified from a single input image

In various empirical models, $\alpha_i$ can correspond to the light vector, half-way vector, or view vector

Estimating the reflectance functions

The unknowns are $\alpha_i$ and the form of the functions $f_i$

Solving a regression problem:

$$\min_{\alpha_i,\, f_i} \left\| \rho(n) - \sum_{i=1}^{K} f_i(\alpha_i^T n) \right\|^2$$

[Chandraker and Ramamoorthi 11]

Inverse Problem with Complex Illumination

[General BRDF]

Comparable to ground truth

Better than the empirical

model

Input Relighting

Ground truth

Error

Input Proposed

Ground truth

T-S Model

Inverse Problem with Complex Illumination

[Global Illumination]

Inverse light transport to extract the global illumination

effects (e.g. interreflection)

Exploiting the duality of forward/inverse light transport

[Carroll et al. 11]

Inverse Problem with Complex Illumination

[Global Illumination]

Extracting the indirect illumination iteratively

The coefficients of $S^{-1}$ result from a Neumann series
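As a reminder of the Neumann series itself, the sketch below approximates an inverse by a truncated sum of matrix powers. The slides do not define S here, so the example assumes S = I - A with a hypothetical two-patch interreflection operator A whose spectral radius is below 1; this is the generic identity, not the cited method.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    size = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(size)) for j in range(size)]
            for i in range(size)]

def mat_add(a, b):
    """Element-wise sum of two matrices of equal shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def identity(size):
    """Identity matrix of the given size."""
    return [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]

def neumann_inverse(a, n_terms):
    """Approximate (I - A)^-1 by the partial sum I + A + ... + A^(n_terms - 1)."""
    total = identity(len(a))
    power = identity(len(a))
    for _ in range(n_terms - 1):
        power = mat_mul(power, a)      # next power of A
        total = mat_add(total, power)  # accumulate the series
    return total

# Hypothetical interreflection operator between two patches (illustrative values).
A = [[0.0, 0.3],
     [0.2, 0.0]]
approx_inverse = neumann_inverse(A, n_terms=30)

# Assumed S = I - A; S times the approximation should be close to the identity.
S = [[1.0, -0.3],
     [-0.2, 1.0]]
residual = mat_mul(S, approx_inverse)
```

Each additional term of the series can be read as one more bounce of light, which is why truncating it gives an iterative way to isolate indirect illumination.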

Inverse Problem with Complex Illumination

[Global Illumination]

Separating out the indirect illumination

Inverse Problem with Complex Illumination

[Textured Surface]

Accounting for spatial variation in the BRDF

Assuming a bivariate BRDF

Input: about one hundred images under varying illumination conditions

[Alldrin et al. 08]

Few inputs

Compact parametric representation for materials

Complex materials and global illumination

Diffuse (Texture), glossy, specular (Direct illumination)

Translucency, subsurface scattering (Indirect illumination)

Lack of detail

Geometry enhancement (motivated by super-resolution)

Semantic approach

Noisy inputs

Accounting for noise models during inference

Robust algorithm (motivated by photometric stereo)

Inverse Problem In Practice


Inverse Problem with Noises

Depth/color misalignment

The depth image and the color image must be brought to the same vantage point

Depth noise depends on material properties

Most depth sensors suffer from depth distortion that depends on material characteristics

Missing data
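One common way to bring a depth pixel to the color camera's vantage point is to back-project it to 3D, apply the rigid transform between the cameras, and re-project it. The sketch below assumes ideal pinhole cameras without lens distortion and a depth value measured along the optical axis; all intrinsics, the rotation, and the 5 cm baseline are hypothetical values, not calibration data from the slides.

```python
def depth_pixel_to_color_pixel(u, v, depth, depth_cam, color_cam, rotation, translation):
    """Map a depth pixel (u, v) with metric depth to color-image coordinates."""
    # Back-project the depth pixel to a 3D point in the depth camera frame.
    x = (u - depth_cam["cx"]) * depth / depth_cam["fx"]
    y = (v - depth_cam["cy"]) * depth / depth_cam["fy"]
    point = (x, y, depth)
    # Rigid transform into the color camera frame: p_c = R p_d + t.
    pc = [sum(rotation[i][k] * point[k] for k in range(3)) + translation[i]
          for i in range(3)]
    # Perspective projection with the color camera intrinsics.
    return (color_cam["fx"] * pc[0] / pc[2] + color_cam["cx"],
            color_cam["fy"] * pc[1] / pc[2] + color_cam["cy"])

# Hypothetical calibration (illustrative values only).
depth_cam = {"fx": 200.0, "fy": 200.0, "cx": 88.0, "cy": 72.0}
color_cam = {"fx": 1000.0, "fy": 1000.0, "cx": 640.0, "cy": 360.0}
rotation = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # parallel cameras
translation = [0.05, 0.0, 0.0]                                   # 5 cm baseline

# Center pixel of the depth image, observed at 2 m.
u_color, v_color = depth_pixel_to_color_pixel(
    88.0, 72.0, 2.0, depth_cam, color_cam, rotation, translation)
```

Because the shift in the color image depends on the measured depth, any depth error caused by material properties also turns into a misalignment error.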

Inverse Problem with Noises

Robust approach for photometric stereo

The classic PS problem assumes Lambertian materials without shadows

In reality, most surfaces are non-Lambertian and include shadows

The robust approach treats non-Lambertian effects and shadows as errors

Rank minimization problem

Convex Lambertian surfaces are represented by a structure of rank at most 3

Formulating the problem as rank minimization with a sparse error constraint

Solution via convex programming

[Wu et al., ACCV10]

$$\min_{A,E} \ \operatorname{rank}(A) + \lambda \|E\|_0 \quad \text{s.t.} \quad D = A + E$$

$$\min_{A,E} \ \|A\|_* + \lambda \|E\|_1 \quad \text{s.t.} \quad D = A + E$$

[Candes, Li, Ma, and Wright 09]
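The low-rank-plus-sparse model can be checked on a toy example. The sketch below builds a shadow-free Lambertian observation matrix D (one row per pixel, one column per image) and computes its rank exactly, then corrupts a single entry to mimic a specular highlight. It only illustrates the rank structure that motivates D = A + E; it is not the convex solver of the cited work. The normals, light directions, and corruption value are hypothetical.

```python
from fractions import Fraction

def matrix_rank(rows):
    """Rank via Gaussian elimination on exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    for col in range(len(m[0])):
        # Find a row at or below the current rank with a nonzero entry in this column.
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            m[r] = [x - factor * y for x, y in zip(m[r], m[rank])]
        rank += 1
    return rank

# Hypothetical albedo-scaled normals, one row per pixel.
normals = [[0, 0, 2], [1, 0, 2], [0, 1, 2], [1, 1, 3], [-1, 1, 2]]
# Hypothetical light directions, one column per image (3 x 4).
lights = [[0, 1, -1, 1],
          [0, 1, 1, -1],
          [1, 2, 2, 2]]

# Shadow-free Lambertian observations: D[p][j] = n_p . l_j
D = [[sum(n[k] * lights[k][j] for k in range(3)) for j in range(4)] for n in normals]
clean_rank = matrix_rank(D)

# A sparse corruption (e.g. one specular highlight) breaks the low-rank structure.
corrupted = [row[:] for row in D]
corrupted[3][2] += 50
corrupted_rank = matrix_rank(corrupted)
```

With these values the clean matrix has rank 3 and the corrupted one has rank 4, which is why the formulation looks for a low-rank A while pushing outliers into a sparse E.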

Inverse Problem with Noises

[Wu et al., ACCV10]

Conclusion

It is time to merge advanced graphics and vision research for 3D imaging technology.

Practical issues remain for general scenes, complex materials, and few input images.

Parametric vs. Data-driven representation for materials

Complex illumination

Physically accurate constraints for appearance

Robust approach for geometry reconstruction

For outdoor scenes, we need to overcome the range limit of depth cameras

IR interference

Range limit

Q&A

Thank you!
