Speech Interaction System

Phanikumar v v and Sagar Geete

S.I.S

Project Report

March, 2008

S.I.S. (Speech Interactive System)

Phanikumar V V & Sagar Geete
(Mining 2010, IT-BHU)


CONTENTS

1. Introduction
2. Utility
3. Speech Recognition
4. Speech Synthesizer
5. Speech Interactive System
6. Future Considerations

INTRODUCTION

Human interaction with robots and electronic gadgets through speech is the basic motto of the S.I.S. (Speech Interactive System). The Voice Interface System (VIS) is at the heart of the project; it consists of the voice response and speech recognition subsystems. The Speech Interactive System is a complete, easy-to-build, programmable speech recognition and synthesis circuit. It is programmable in the sense that you train the words (or vocal utterances) you want the circuit to recognize. The circuit can also synthesize an unlimited number of English words.

UTILITY

In the near future, speech recognition will become the method of choice for controlling appliances, toys, tools, computers and robots. There is a huge commercial market waiting for this technology to mature. This project details the construction of a stand-alone, trainable speech recognition circuit that may be interfaced to control just about anything electrical, such as appliances, robots, test instruments, VCRs and TVs. The circuit is trained (programmed) to recognize the words you want it to recognize. Controlling and commanding an appliance (computer, VCR, TV, security system, etc.) by speaking to it makes the device easier to use, while increasing the efficiency and effectiveness of working with it. At its most basic level, speech recognition allows the user to perform parallel tasks (i.e. hands and eyes are busy elsewhere) while continuing to work with the computer or appliance.

Applications

- Command and control of appliances and equipment
- Telephone assistance systems
- Data entry
- Speech controlled toys
- Speech and voice recognition security systems

Software Approach

Most speech recognition systems available today are programs that run on personal computers. These add-on programs operate continuously in the background of the computer's operating system (Windows, OS/2, etc.) and require the computer to be equipped with a compatible sound card. The disadvantage of this approach is the necessity of a computer. While these speech programs are impressive, it is not economically viable for manufacturers to add full-blown computer systems to control a washing machine or VCR. Even at best, the programs add to the processing required of the computer's CPU: there is a noticeable slowdown in the operation of the computer when voice recognition is enabled.

Example of circuit implementation

The Talking Toaster

It's 3:00 am. You're hungry. You've been up all night implementing a threads package for your Operating Systems course project. You stumble into the kitchen. Can you really be troubled with setting the toaster's heat setting, or activating the toaster's heating coils?


Of course not! That's where the Talking Toaster comes in. Instead of fiddling with the toast-quality dial or pushing down the lever, you let the toaster ask you for the settings. Even better, you can simply respond by speaking your reply: no buttons to push, dials to spin, or lights to watch.

The operating instructions for the toaster are quite simple. When you want toast, ask the toaster for some:

You: Toast.

The toaster will then ask you what your preferred toasting level is:

Toaster: How light?

Respond with light, medium, or dark.

You: Medium.

The toaster will then lower its bread tray, engaging the heating coils:

Toaster: Using setting medium. Lowering...

When the temperature has reached the desired threshold, the toaster raises the bread tray and disengages the heating coils:

Toaster: Raising... done!

That's all there is to it. Isn't that cool?!? Not only does the toaster talk to you, but you can talk to the toaster, and it understands you!

The technologies that the toaster would employ include:

- Speech recognition, using the HM2007 speech chip.
- Speech synthesis, using the SP0256 chip.
- Microcontroller system control, via the ATMEGA16. The microcontroller code is given in the appendix of the Final Report.
- An old toaster.
- A servo motor.
- Plain and simple ingenious engineering.
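The toaster dialogue above can be read as a small state machine. The following is a minimal sketch in Python rather than the project's microcontroller code, with speech input and output replaced by plain strings. The class name, state names, and the choice to repeat the question after an unrecognized reply are assumptions made for illustration.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # waiting for the word "toast"
    ASK_LEVEL = auto()  # asked "How light?", waiting for a level
    TOASTING = auto()   # tray is down, heating coils are engaged


LEVELS = ("light", "medium", "dark")


class TalkingToaster:
    """Toy model of the dialogue; names and behaviour are illustrative."""

    def __init__(self):
        self.state = State.IDLE
        self.tray_down = False

    def hear(self, word):
        """Feed one recognized word; return the phrases the toaster speaks."""
        word = word.strip().lower()
        if self.state is State.IDLE and word == "toast":
            self.state = State.ASK_LEVEL
            return ["How light?"]
        if self.state is State.ASK_LEVEL:
            if word in LEVELS:
                self.tray_down = True  # lower the tray, engage the coils
                self.state = State.TOASTING
                return [f"Using setting {word}.", "Lowering..."]
            return ["How light?"]  # assumption: ask again on any other word
        return []  # ignore everything else

    def temperature_reached(self):
        """Called when the desired temperature threshold is reached."""
        if self.state is not State.TOASTING:
            return []
        self.tray_down = False  # raise the tray, disengage the coils
        self.state = State.IDLE
        return ["Raising... done!"]
```

Calling `hear("Toast")`, then `hear("Medium")`, then `temperature_reached()` on one `TalkingToaster` instance reproduces the three toaster lines of the dialogue in order.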

Speech Recognition

The speech recognition subsystem was built using Hualon Microelectronics Corporation's HM2007 speech recognition chip. This chip recognizes words from a vocabulary of up to 40 words, each up to 1 second long. The vocabulary is stored on an external 8K SRAM. This SRAM was not powered by a battery, which removed a design constraint that would otherwise have hampered the project.

To use the speech recognition system of the HM2007, users must first train the chip on their voice prints. In the current version of the project code, the user is instructed on how to do this when the circuit is first plugged in. For each word that is to be recognized, the microcontroller asks the user to speak that word. Because the user may say the word differently each time (i.e., with slightly different inflections), the user is asked to say the word more than once (usually three times). Each time the user says the word, the HM2007 integrates it into a neural network, which is stored in the off-chip SRAM. Later, in recognition mode, the HM2007 tries to match the spoken word against the words in its neural net. If a match is made, the index of that word in the vocabulary is returned. If no match is found, or if the user spoke too quickly or too slowly, an appropriate error code is returned. Thus, the HM2007 does not recognize a spoken word as an actual word, but rather as sounding like a word that it knows about. The HM2007 has no a priori knowledge of what the word 'back' should sound like.

The implementation of the speech recognition system was by far the most difficult engineering feat in the entire project. The chip came with a data sheet, but the information contained therein was in many instances unclear and even incorrect. Fortunately, after two weeks of intensive experimenting and designing, we had an understanding of the basic program flow required to train and recognize words.

The HM2007 is a 48-pin PDIP chip. The HM2007L has 4 I/O ports, a microphone system, and several control pins. To communicate with the 8K SRAM, there is a 13-bit address bus and an 8-bit data bus (two of the four I/O ports), as well as a memory read/write pin and a memory enable pin; 13 address bits select 2^13 = 8192 one-byte locations, which matches the 8K capacity. To communicate with the keypad, there is a 4-bit wide K-bus, used for passing data to the HM2007, and a 3-bit S-bus, used for sending commands to the HM2007 (mainly commands to control the meaning of the K-bus).
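The train-then-recognize flow described in this section can be illustrated with a toy model. The sketch below is not the HM2007's internal algorithm, which this report does not document; it substitutes a simple nearest-template matcher so that the interface (train a word several times, then get back either a vocabulary index or an error) can be seen end to end. The feature vectors, duration limits, distance threshold, and all names are assumptions.

```python
import math
from enum import Enum


class RecError(Enum):
    TOO_SHORT = "too short"  # word spoken too quickly
    TOO_LONG = "too long"    # word spoken too slowly
    NO_MATCH = "no match"


class ToyRecognizer:
    """Stand-in for a trainable recognizer; not the chip's real method."""

    def __init__(self, max_words=40, min_s=0.1, max_s=1.0, threshold=0.5):
        self.max_words = max_words  # vocabulary size from the report
        self.min_s = min_s          # assumed shortest accepted word, seconds
        self.max_s = max_s          # 1-second word length from the report
        self.threshold = threshold  # assumed largest accepted distance
        self.templates = {}         # index -> (mean features, sample count)

    def train(self, index, features):
        """Fold one spoken sample into the template stored at this index."""
        if not 1 <= index <= self.max_words:
            raise ValueError("index outside vocabulary")
        if index not in self.templates:
            self.templates[index] = (list(features), 1)
            return
        mean, n = self.templates[index]
        if len(mean) != len(features):
            raise ValueError("feature length mismatch")
        merged = [(m * n + f) / (n + 1) for m, f in zip(mean, features)]
        self.templates[index] = (merged, n + 1)

    def recognize(self, features, duration_s):
        """Return the best-matching word index, or a RecError."""
        if duration_s < self.min_s:
            return RecError.TOO_SHORT
        if duration_s > self.max_s:
            return RecError.TOO_LONG
        best_index, best_dist = None, math.inf
        for index, (mean, _) in self.templates.items():
            dist = math.dist(mean, features)
            if dist < best_dist:
                best_index, best_dist = index, dist
        if best_index is None or best_dist > self.threshold:
            return RecError.NO_MATCH
        return best_index
```

As in the text, the recognizer never knows what a word "is"; it only reports which trained entry the new sample most resembles, which is why each word is trained from several samples.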


Speech Synthesizer

The voice response subsystem was implemented with an SP0256 Narrator speech processor. The SP0256 is a single-chip N-channel MOS LSI device that is able, using its stored program, to synthesize speech or complex sounds. The achievable output is equivalent to a flat frequency response ranging from 0 to 5 kHz, a dynamic range of 42 dB, and a signal-to-noise ratio of approximately 35 dB.

The SP0256 incorporates four basic functions:

- A software-programmable digital filter that can be made to model a vocal tract.
- A 16K ROM which stores both data and instructions (the program).
- A microcontroller which controls the data flow from the ROM to the digital filter, the assembly of the word strings necessary for linking speech elements together, and the amplitude and pitch information to excite the digital filter.
- A pulse width modulator that creates a digital output which is converted to an analog signal when filtered by an external low-pass filter.
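The last item in the list, a pulse-width-modulated digital output turned into an analog level by a low-pass filter, can be demonstrated numerically. The sketch below does not model the SP0256 itself; it only shows the general principle, using an assumed PWM period of 16 samples and a first-order digital filter standing in for the external analog one.

```python
def pwm_stream(levels, period=16):
    """Encode each level in [0, 1] as one PWM period of 1.0/0.0 samples."""
    out = []
    for level in levels:
        high = round(level * period)  # samples spent at logic high
        out.extend([1.0] * high + [0.0] * (period - high))
    return out


def low_pass(samples, alpha=0.01):
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    y, out = 0.0, []
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def settled_level(level, periods=200, period=16):
    """Average filter output over the final PWM period of a held level."""
    filtered = low_pass(pwm_stream([level] * periods, period))
    tail = filtered[-period:]
    return sum(tail) / len(tail)
```

Holding a duty cycle of 25% long enough makes `settled_level(0.25)` settle close to 0.25, with a small ripple left over from the switching. A slower filter (smaller `alpha`) reduces the ripple at the cost of a slower response.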


SPEECH INTERACTIVE SYSTEM

The interaction of speech recognition with speech synthesis is a milestone in our project, because recognition and synthesis each look less effective on their own. When we interfaced the two with one another, the applicability of the system increased sharply; it begins to feel like part of a humanoid robot. We connected the two circuits with the help of a microcontroller (MCU), using an ATMEGA16 for this cascading purpose. The output from the recognition circuit is fed to the microcontroller as an input, and the corresponding output is given to the synthesizing part.

For example, to implement the toast task described above, we save the word "toast" at index 1 in the recognition part, so that if you say "toast" the output from the recognition circuit is 1. The microcontroller is programmed so that when it receives input 1 on a specific port, it sends an array of allophones to the synthesizer part. That array spells out "how light", as mentioned above. The synthesizer circuit then pronounces those allophones, and the result sounds like "how light?"; the time gap between the allophones of a single word can give the feeling of a question.

We can also attach a task to this interaction: if the user says "medium", the task of lowering the bread tray, which engages the heating coils, is scheduled in the microcontroller itself. Some ports of the microcontroller may carry output to the toaster for control. In the next section we give some working examples of this SPEECH INTERACTIVE SYSTEM, with tasks that make this project more useful.

FUTURE CONSIDERATIONS

1. Speech and voice recognition security systems

This speech interaction system can also be used in a security system. All that is needed is to combine it with a face recognition system: the face recognition system provides the security features, and our speech interaction system performs the required task. Let us take one example to understand this utility. Suppose our task is to control a robot, or just a small toy car, within a security system. We allow the user to use the system only if he passes face recognition; that is, his login image can match only if the user's images have already been saved in the database. If it matches, the user's login code is sent from the face recognition system to the speech interaction system, and through that code only the specific commands allowed to that user will work. The user's profile is also announced by the speech interaction system after login, for better understanding.
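Both ideas described so far, the microcontroller mapping a recognized word index to an allophone array plus an optional task, and the login code restricting which commands a user may issue, can be sketched as two lookup tables. This is an illustration in Python, not the ATMEGA16 code. The login codes, the action name, the index assignments beyond "toast" = 1, and the allophone spellings are assumptions; the spellings in particular are not verified pronunciations.

```python
# Word index from the recognizer -> what to speak and what to do.
# Allophone names are illustrative placeholders.
RESPONSES = {
    1: {"word": "toast",
        "say": ["HH1", "AW", "PA2", "LL", "AY", "TT2"],  # "how light"
        "action": None},
    2: {"word": "medium",
        "say": ["LL", "OW", "ER1", "IH", "NG"],          # "lowering"
        "action": "lower_tray"},
}

# Login code from the face recognition stage -> permitted word indices.
ALLOWED = {
    "A17": {1, 2},  # hypothetical user allowed every command
    "B42": {1},     # hypothetical user who may not start toasting
}


def handle(login_code, word_index):
    """Return (allophones_to_speak, action) for one recognized word."""
    if word_index not in ALLOWED.get(login_code, set()):
        return [], None  # unknown user or forbidden command: do nothing
    entry = RESPONSES.get(word_index)
    if entry is None:
        return [], None  # index the controller has no response for
    return entry["say"], entry["action"]
```

On the real hardware the first element of the result would be written to the synthesizer one allophone at a time, and the second would drive an output port. Keeping both in plain tables means new words or users can be added without touching the control logic.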


This application is not very difficult, because face recognition is an established technique, and hence we can consider it.

2. Catching a moving ball by a blind person

Catching a moving ball seems impossible for a blind person, but it can be worked out by adding an image processing system to our speech interaction system. The image processing system tracks the changing position of the ball, and the speech interaction system tells the blind person which way to move.

Image processing: The system detects changes in the ball's position through the camera, and the robot moves accordingly to search for the ball. The algorithm is straightforward: the code always searches for the properties of the ball that were observed previously and reports changes in the ball's position to the robot. The robot then moves accordingly, through the interface between the robot and the computer.

Actions to do in image processing:

1. Image acquisition
2. Image processing
3. Data communication


Algorithm of Ball Follower:

Step 1: Image acquisition, with the help of a webcam mounted on the robot. Through it, an image is grabbed at a specific time interval.
Step 2: Find the center of the ball (the ball is of a definite color, and that color is different from the background).
Step 3: Locate the ball and take its center as the origin of a new coordinate system, which is imposed on the image.
Step 4: Check repeatedly whether the ball's center has moved in this coordinate system, by processing the input images, which are taken at a specific time interval.
Step 5: If the ball's position has shifted in the new coordinate system, move the robot accordingly.
Step 6: Repeat the same procedure again and again.

Note: It may be very difficult to determine by image processing how far the ball has moved on the plane, since this is 3-D image processing and requires more than one camera pointing in different directions. It would be quite difficult to mount at least two cameras on the robot to give us the X-, Y- and Z-directional movements of the ball. But if the camera is mounted on the roof, it becomes comparatively easier to give the exact position of the ball with respect to the user.
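Steps 2 to 5 can be sketched on a tiny synthetic image. The example below is a minimal illustration in pure Python, assuming a single overhead view in 2-D (as the note suggests is easier) and a caller-supplied color test. The function names, the dead zone, and the four-word command vocabulary are hypothetical.

```python
def ball_center(image, is_ball_color):
    """Step 2: centroid (row, col) of ball-colored pixels, or None."""
    row_sum = col_sum = count = 0
    for r, line in enumerate(image):
        for c, pixel in enumerate(line):
            if is_ball_color(pixel):
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None  # ball not visible in this frame
    return row_sum / count, col_sum / count


def displacement(origin, center):
    """Steps 3-4: ball position relative to the origin fixed at first sight."""
    return center[0] - origin[0], center[1] - origin[1]


def move_command(d_row, d_col, dead_zone=0.5):
    """Step 5: turn a displacement into a coarse movement command."""
    if abs(d_row) <= dead_zone and abs(d_col) <= dead_zone:
        return "stay"
    if abs(d_col) >= abs(d_row):
        return "right" if d_col > 0 else "left"
    return "down" if d_row > 0 else "up"
```

For instance, with frames given as lists of strings where `"B"` marks a ball pixel, the center of `["....", ".BB.", ".BB.", "...."]` becomes the origin; if the next frame shows the ball one column further right, `move_command(*displacement(origin, new_center))` yields `"right"`, which the speech interaction system could then speak to the user. Step 6 is simply this sequence repeated for each new frame.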

Page 12 of 12

March 2008

Potrebbero piacerti anche

- Speech Recognition System Using Ic Hm2007Documento21 pagineSpeech Recognition System Using Ic Hm2007Nitin Rawat100% (1)

- Speech Recog Board DocumentDocumento11 pagineSpeech Recog Board DocumentDhanmeet KaurNessuna valutazione finora

- Voice Controlled Robotic Vehicle: AbstractDocumento11 pagineVoice Controlled Robotic Vehicle: AbstractNikhil GarrepellyNessuna valutazione finora

- 8085 Microprocessor SimulatorDocumento30 pagine8085 Microprocessor Simulatorapi-3798769100% (4)

- Speech Recognition System: Surabhi Bansal Ruchi BahetyDocumento5 pagineSpeech Recognition System: Surabhi Bansal Ruchi Bahetyrp5791Nessuna valutazione finora

- ST Final Report TOMMOROW 4-4-2011 ReportDocumento57 pagineST Final Report TOMMOROW 4-4-2011 Reportsureshkumar_scoolNessuna valutazione finora

- VOICE RECOGNITION KIT USING HM2007Documento5 pagineVOICE RECOGNITION KIT USING HM2007Giau Ngoc HoangNessuna valutazione finora

- 1180 DatasheetDocumento9 pagine1180 DatasheetVasudeva RaoNessuna valutazione finora

- Text To Speech DocumentationDocumento61 pagineText To Speech DocumentationSan DeepNessuna valutazione finora

- Introduction To Embedded SystemsDocumento1 paginaIntroduction To Embedded SystemsSrinath KvNessuna valutazione finora

- Introduction To The 8051 MicrocontrollerDocumento17 pagineIntroduction To The 8051 Microcontrollerقطب سيدNessuna valutazione finora

- Softube FET Compresso ManualDocumento27 pagineSoftube FET Compresso ManualdemonclaenerNessuna valutazione finora

- Speech Based Wheel Chair Control Final Project ReportDocumento77 pagineSpeech Based Wheel Chair Control Final Project ReportMohammed Basheer100% (4)

- IvrsDocumento11 pagineIvrsMuskan JainNessuna valutazione finora

- Prophet 08 Manual v1.3Documento64 pagineProphet 08 Manual v1.3pherrerosmNessuna valutazione finora

- 2 Design and Implementation of Speech Recognition Robotic SystemDocumento7 pagine2 Design and Implementation of Speech Recognition Robotic SystemSuresh BabuNessuna valutazione finora

- Microcontroller Implementation of Voice Command Recognition System For Human Machine Interface in Embedded SystemDocumento4 pagineMicrocontroller Implementation of Voice Command Recognition System For Human Machine Interface in Embedded Systemnimitjain03071991Nessuna valutazione finora

- Udp Project CdacDocumento33 pagineUdp Project CdacArun Kumar Reddy PNessuna valutazione finora

- A002 The CS9000 Microcomputer SandfelterDocumento4 pagineA002 The CS9000 Microcomputer SandfelterkgrhoadsNessuna valutazione finora

- Voice Recognition System SeminarDocumento37 pagineVoice Recognition System Seminar07l61a0412Nessuna valutazione finora

- Manual: SPL Analog Code Plug-InDocumento12 pagineManual: SPL Analog Code Plug-InLorenzo GraziottiNessuna valutazione finora

- Research Papers On Embedded Systems PDFDocumento4 pagineResearch Papers On Embedded Systems PDFwtdcxtbnd100% (1)

- Department of Engineering Project Report Title:-8051 Based Digital Code LockDocumento18 pagineDepartment of Engineering Project Report Title:-8051 Based Digital Code LockamitmaheshpurNessuna valutazione finora

- MScontrol ManualDocumento35 pagineMScontrol Manualdescarga1Nessuna valutazione finora

- Monark Manual EnglishDocumento49 pagineMonark Manual EnglishEzra Savadious100% (1)

- Special Function RegisterDocumento44 pagineSpecial Function Registercoolkanna100% (2)

- Digital Bike Operating System Sans KeyDocumento8 pagineDigital Bike Operating System Sans KeyArjun AnduriNessuna valutazione finora

- Gesture VocalizerDocumento20 pagineGesture Vocalizerchandan yadavNessuna valutazione finora

- Deaf-Mute Communication InterpreterDocumento6 pagineDeaf-Mute Communication InterpreterEditor IJSETNessuna valutazione finora

- GSM-Based Electronic Voting MachineDocumento17 pagineGSM-Based Electronic Voting MachineAbhishek IyengarNessuna valutazione finora

- TVTCBDocumento260 pagineTVTCBAndrew Ayers100% (1)

- SPL Vitalizer MK2-T ManualDocumento18 pagineSPL Vitalizer MK2-T ManualogirisNessuna valutazione finora

- SimputerDocumento19 pagineSimputerrock_000% (1)

- Mp&i Course File - Unit-IDocumento56 pagineMp&i Course File - Unit-IvenkateshrachaNessuna valutazione finora

- Design Home Automation Using Bluetooh (Speech Recognition)Documento4 pagineDesign Home Automation Using Bluetooh (Speech Recognition)vijayrockz06Nessuna valutazione finora

- Interactgenics Final Report 20bce1496 1609 1816Documento56 pagineInteractgenics Final Report 20bce1496 1609 1816shreyanair.s2020Nessuna valutazione finora

- Case Study On Microprocessor and Assembly LanguageDocumento7 pagineCase Study On Microprocessor and Assembly LanguageTanjimun AfrinNessuna valutazione finora

- Thesis Audio HimaliaDocumento5 pagineThesis Audio HimaliaPaperWritersMobile100% (2)

- Ohmicide Ref ManDocumento33 pagineOhmicide Ref ManJeff J Roc100% (1)

- SBSK 4Documento31 pagineSBSK 4SWETHA0% (1)

- Seminar ReportDocumento22 pagineSeminar ReportVaibhav BhurkeNessuna valutazione finora

- Wheelchair Control Using Voice RecognitionDocumento4 pagineWheelchair Control Using Voice RecognitionIJARBEST100% (1)

- DensityLITE User ManualDocumento28 pagineDensityLITE User ManualJohn Wesley BarkerNessuna valutazione finora

- FonaDyn Handbook 2-4-9Documento70 pagineFonaDyn Handbook 2-4-9Viviana FlorezNessuna valutazione finora

- VR-Stamp Voice Recognition Module High Performance Embedded SystemDocumento2 pagineVR-Stamp Voice Recognition Module High Performance Embedded SystemRamu SatheeshNessuna valutazione finora

- GPS Voice AlertDocumento13 pagineGPS Voice AlertRavi RajNessuna valutazione finora

- Audio DelayDocumento31 pagineAudio DelayaaandradeNessuna valutazione finora

- Project Proposal: FPGA Based Speech Recognition ProjectDocumento9 pagineProject Proposal: FPGA Based Speech Recognition ProjectHassan Shahbaz100% (1)

- Brey-The Intel Microprocessors 8EDocumento944 pagineBrey-The Intel Microprocessors 8EJulian RNessuna valutazione finora

- QUAN-Microprocessor DescriptiveDocumento25 pagineQUAN-Microprocessor DescriptiveDebanjan PatraNessuna valutazione finora

- Shop Floor Issues: Department of Industrial Engineering Owens Corning Fiberglas, Inc. Aiken, South Carolina 29801Documento5 pagineShop Floor Issues: Department of Industrial Engineering Owens Corning Fiberglas, Inc. Aiken, South Carolina 29801ciaomiucceNessuna valutazione finora

- MicroprocessorsDocumento25 pagineMicroprocessorsthereader94Nessuna valutazione finora

- Generate robotic or vocoded voice with MVocoderDocumento109 pagineGenerate robotic or vocoded voice with MVocoderE.M. RobertNessuna valutazione finora

- Blind Transmitter Turns TVs On/Off from PocketDocumento11 pagineBlind Transmitter Turns TVs On/Off from Pocketdummy1957jNessuna valutazione finora

- Metro Train Prototype: A Summer Training Report OnDocumento34 pagineMetro Train Prototype: A Summer Training Report OnPooja SharmaNessuna valutazione finora

- Foundation Course for Advanced Computer StudiesDa EverandFoundation Course for Advanced Computer StudiesNessuna valutazione finora

- Understanding Computers, Smartphones and the InternetDa EverandUnderstanding Computers, Smartphones and the InternetValutazione: 5 su 5 stelle5/5 (1)

- Digital Signal Processing for Audio Applications: Volume 2 - CodeDa EverandDigital Signal Processing for Audio Applications: Volume 2 - CodeValutazione: 5 su 5 stelle5/5 (1)

- 04 Mel-Spectrum ComputationDocumento9 pagine04 Mel-Spectrum Computationpremsagar483Nessuna valutazione finora

- ZigbeeDocumento37 pagineZigbeerealgoodnewsNessuna valutazione finora

- DSP 2 - Signals in MatlabDocumento27 pagineDSP 2 - Signals in Matlabpremsagar483Nessuna valutazione finora

- Bluetooh Based Smart Sensor Networks - PresentationDocumento27 pagineBluetooh Based Smart Sensor Networks - PresentationTarandeep Singh100% (7)

- Automatic Speech RecognitionDocumento34 pagineAutomatic Speech RecognitionRishabh PurwarNessuna valutazione finora

- Design of AVR - Based Embedded Ethernet InterfaceDocumento13 pagineDesign of AVR - Based Embedded Ethernet Interfacepremsagar483Nessuna valutazione finora

- MARS Motor Cross Reference InformationDocumento60 pagineMARS Motor Cross Reference InformationLee MausNessuna valutazione finora

- She Walks in BeautyDocumento6 pagineShe Walks in Beautyksdnc100% (1)

- The DHCP Snooping and DHCP Alert Method in SecurinDocumento9 pagineThe DHCP Snooping and DHCP Alert Method in SecurinSouihi IslemNessuna valutazione finora

- IT Department - JdsDocumento2 pagineIT Department - JdsShahid NadeemNessuna valutazione finora

- Che 430 Fa21 - HW#5Documento2 pagineChe 430 Fa21 - HW#5Charity QuinnNessuna valutazione finora

- Introduction to Philippine LiteratureDocumento61 pagineIntroduction to Philippine LiteraturealvindadacayNessuna valutazione finora

- Jurnal Aceh MedikaDocumento10 pagineJurnal Aceh MedikaJessica SiraitNessuna valutazione finora

- OE & HS Subjects 2018-19Documento94 pagineOE & HS Subjects 2018-19bharath hsNessuna valutazione finora

- Microsoft PowerPoint Presentation IFRSDocumento27 pagineMicrosoft PowerPoint Presentation IFRSSwati SharmaNessuna valutazione finora

- DP4XXX PricesDocumento78 pagineDP4XXX PricesWassim KaissouniNessuna valutazione finora

- BV14 Butterfly ValveDocumento6 pagineBV14 Butterfly ValveFAIYAZ AHMEDNessuna valutazione finora

- Ford Taurus Service Manual - Disassembly and Assembly - Automatic Transaxle-Transmission - 6F35 - Automatic Transmission - PowertrainDocumento62 pagineFord Taurus Service Manual - Disassembly and Assembly - Automatic Transaxle-Transmission - 6F35 - Automatic Transmission - Powertraininfocarsservice.deNessuna valutazione finora

- Handout 4-6 StratDocumento6 pagineHandout 4-6 StratTrixie JordanNessuna valutazione finora

- Brief History of Gifted and Talented EducationDocumento4 pagineBrief History of Gifted and Talented Educationapi-336040000Nessuna valutazione finora

- Principles of The Doctrine of ChristDocumento17 paginePrinciples of The Doctrine of ChristNovus Blackstar100% (2)

- SPXDocumento6 pagineSPXapi-3700460Nessuna valutazione finora

- Laplace Transform solved problems explainedDocumento41 pagineLaplace Transform solved problems explainedduchesschloeNessuna valutazione finora

- Cp-117-Project EngineeringDocumento67 pagineCp-117-Project Engineeringkattabomman100% (1)

- Lab ReportDocumento11 pagineLab Reportkelvinkiplaa845Nessuna valutazione finora

- Life and Works or Rizal - EssayDocumento2 pagineLife and Works or Rizal - EssayQuince CunananNessuna valutazione finora

- Blasting 001 Abb WarehouseDocumento2 pagineBlasting 001 Abb WarehouseferielvpkNessuna valutazione finora

- Apostolic Faith: Beginn NG of World REV VALDocumento4 pagineApostolic Faith: Beginn NG of World REV VALMichael HerringNessuna valutazione finora

- Record of Appropriations and Obligations: TotalDocumento1 paginaRecord of Appropriations and Obligations: TotaljomarNessuna valutazione finora

- ABV Testing Performa For ICF CoachesDocumento2 pagineABV Testing Performa For ICF Coachesmicell dieselNessuna valutazione finora

- SYKES Home Equipment Agreement UpdatedDocumento3 pagineSYKES Home Equipment Agreement UpdatedFritz PrejeanNessuna valutazione finora

- Yealink Device Management Platform: Key FeaturesDocumento3 pagineYealink Device Management Platform: Key FeaturesEliezer MartinsNessuna valutazione finora

- Role and Function of Government As PlanningDocumento6 pagineRole and Function of Government As PlanningakashniranjaneNessuna valutazione finora

- Parameter Pengelasan SMAW: No Bahan Diameter Ampere Polaritas Penetrasi Rekomendasi Posisi PengguanaanDocumento2 pagineParameter Pengelasan SMAW: No Bahan Diameter Ampere Polaritas Penetrasi Rekomendasi Posisi PengguanaanKhamdi AfandiNessuna valutazione finora

- TK17 V10 ReadmeDocumento72 pagineTK17 V10 ReadmePaula PérezNessuna valutazione finora

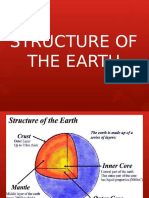

- Earth's StructureDocumento10 pagineEarth's StructureMaitum Gemark BalazonNessuna valutazione finora