IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY, VOL. 21, NO. 4, APRIL 2011

Robust Low Complexity Corner Detector

Pradip Mainali, Student Member, IEEE, Qiong Yang, Member, IEEE, Gauthier Lafruit, Member, IEEE, Luc Van Gool, Member, IEEE, and Rudy Lauwereins, Senior Member, IEEE

Abstract: Corner feature point detection that is both fast and of high quality remains demanding for many real-time computer vision applications. Harris and Kanade-Lucas-Tomasi (KLT) are widely adopted, good-quality corner feature point detectors owing to their invariance to rotation, noise, illumination, and limited viewpoint change. Their use is nevertheless limited because their high complexity prevents real-time performance. In this paper, we redesign the Harris and KLT algorithms to reduce the complexity of each stage: Gaussian derivative, cornerness response, and non-maximum suppression (NMS). The complexity of the Gaussian derivative and cornerness stages is reduced by using an integral image. In the NMS stage, we replace the highly complex sorting followed by NMS with efficient NMS followed by sorting of the result. The detected feature points are further interpolated to obtain sub-pixel accurate locations. Experimental results on publicly available evaluation data-sets for feature point detectors show that our low complexity corner detector is very fast while matching the feature point detection quality of the original algorithms. We achieve a complexity reduction by a factor of 9.8 and attain a processing speed of 50 f/s for images of size 640 × 480 on a commodity central processing unit running at 2.53 GHz with 3 GB of random access memory.

Index Terms: Corner, feature point, Harris, interest point, KLT.

I. Introduction

Feature point detection at a low computational cost is a fundamental problem for many real-time computer vision applications, such as image matching, robot navigation, video stabilization, and video frame mosaicing. The usefulness of a feature point detector in real-world applications is mainly influenced by its detection quality, often measured by the repeatability [1], and by its execution time. Feature point detectors mainly detect corners [2]-[4] or blobs [5], [6], which are typical salient regions in an image and can be detected repeatedly in images

Manuscript received June 7, 2010; revised September 3, 2010; accepted October 2, 2010. Date of publication March 10, 2011; date of current version April 1, 2011. This paper was recommended by Associate Editor J. Zhang. P. Mainali and R. Lauwereins are with the Department of Electrical Engineering, Katholieke Universiteit Leuven, and Interuniversitair Micro-Electronica Centrum VZW, Leuven 3001, Belgium (e-mail: pradip.mainali@imec.be; rudy.lauwereins@imec.be). G. Lafruit and Q. Yang are with the Digital Component Group, Interuniversitair Micro-Electronica Centrum VZW, Leuven 3001, Belgium (e-mail: gauthier.lafruit@imec.be; qiong.yang@imec.be). L. Van Gool is with the Department of Electrical Engineering, Katholieke Universiteit Leuven, Leuven 3001, Belgium (e-mail: luc.vangool@esat.kuleuven.be). Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org. Digital Object Identifier 10.1109/TCSVT.2011.2125411

taken under different viewing conditions. Corner detectors have wide applications because corner regions are suitable for aligning images with a small baseline, by iteratively tracking feature points [3], and with a wide baseline, by feature point matching [7]. Harris and KLT are the two most important corner detectors. However, both algorithms are computationally expensive and achieve only 5 f/s for images of size 640 × 480 on a commodity central processing unit (CPU) running at 2.53 GHz [8], which limits their usefulness for real-time applications. The Harris and KLT corner detectors were therefore implemented on graphics processing units (GPUs) to achieve real-time performance [9], [10], with the algorithms parallelized to exploit the large number of parallel processors in a GPU. However, a GPU is not suitable for many real-time applications because of its high power consumption. For example, achieving real-time performance for applications such as video stabilization and video image mosaicing remains challenging on constrained setups, such as digital signal processors in surgery rooms without active cooling, which require low or moderate power processing architectures. Hence, for embedded applications, the Harris and KLT algorithms need to be redesigned to reduce the number of operations and so achieve high-speed feature point detection at similar quality.

We introduced a low complexity corner detector, named LOCOCO, which reduces the complexity of both Harris and KLT, in our previous paper [11]. In this paper, we provide a more in-depth study and further improve the stability of LOCOCO. To improve the stability and performance of the algorithm, the corner feature points extracted by LOCOCO are further interpolated for sub-pixel accuracy. With our modification, we achieve a processing speed of 50 f/s with no drop in quality for images of size 640 × 480 on a commodity CPU running at 2.53 GHz.

The key idea behind our method for reducing the complexity of the Harris and KLT corner detectors originates from two observations: the computations for the pixels within the integration window overlap, and the sorting operation used for non-maximum suppression (NMS) is highly complex [2], [3]. The original algorithms find corner feature points in three major steps. First, the image gradients are computed by convolving the image with the first-order Gaussian derivative kernel. Second, the cornerness response is computed. Last, Quick-sort and NMS are performed to retain a single maximum point for each corner region. The complexity is reduced in each stage

1051-8215/$26.00 © 2011 IEEE


of the algorithm. First, the first-order Gaussian derivative kernel is approximated by the Box kernel, which can be evaluated quickly in combination with the integral image. Second, computing the cornerness response of each pixel creates a sliding-window effect in which many calculations overlap across successive pixels; this overlap can be reduced drastically by using the integral image. Last, Quick-sort was used to rank the responses before NMS. However, the ordering is not important, as NMS always finds the local maximum no matter which pixel we start from. Additionally, the comparison results of NMS windows can be shared between successive pixels. The complexity is therefore reduced by using the efficient NMS [12], which optimally reuses the results across successive pixels. Once the maximum points are extracted, they are sorted according to their cornerness response.

The remainder of this paper is organized as follows. Section II surveys the literature on corner detectors. In Section III, we briefly discuss the Harris and KLT corner detectors. In Section IV, we present our new approach to reducing the complexity of these corner detectors with sub-pixel accuracy, named S-LOCOCO, and Section V describes its memory complexity. In Section VI, we briefly describe the repeatability evaluation criterion for feature point detectors. Section VII provides experimental results. Section VIII provides an evaluation in image registration applications, and Section IX concludes this paper.

II. Related Work

In the past, several corner detectors were proposed to improve quality or execution time. Corner detectors can be broadly categorized into two groups: contour-based and intensity-based. Contour-based corner detectors first detect the edges in the image. The edge map is then used to locate corner feature points at intersections of line segments [13], at high curvatures formed by ridges and valleys [14], and at T-junctions [15].

However, methods in this category do not detect every corner region in an image. In particular, they fail to detect corner regions formed by texture. The other category of corner detectors therefore operates on the intensity of each pixel. Intensity-based techniques can be further subdivided according to whether they operate directly on the intensity or on derivatives of the intensity.

The former category operates directly on the intensities in an image patch. The corner detectors in this category are SUSAN [16] and FAST [4]. SUSAN detects a corner point if the number of pixels in a circular disc that have the same brightness as the center pixel is below some threshold. FAST also uses a circular window to find the pixels that are darker and brighter than the center pixel, and uses a machine-learning method to classify the pixel as a corner. These methods were mainly designed for high speed.

The latter category does not operate on pixels directly but uses image derivatives to locate a corner point. In [17], the second derivative of the image is computed and the corner point is located by measuring the determinant of the Hessian, which is also rotationally invariant. In [18], the curvature of planar curves is used to detect a corner point. The corner detectors in [2], [3], [19], and [20] are based on computing the auto-correlation function

which averages the derivatives of the image in a small window. Harris [2] and KLT [3] locate corner regions where both eigenvalues of the auto-correlation function are significantly large. An improved version of the Harris corner detector was proposed by replacing the template used for computing derivatives with derivatives of a Gaussian [1]. Among all these corner detectors, Harris [2] and KLT [3] are the two most important, and were shown to perform well owing to their invariance to rotation, illumination variation, and image noise as compared to other existing corner detectors [1], [21].

III. Harris and KLT Corner Detectors

Both the Harris [2] and KLT [3] corner detectors are based on computing the cornerness response of each pixel as in (1), which measures the change in intensities due to shifts of a local integration window in all directions and gives peaks in the cornerness response for corner pixels:

$$
C(p) = \sum_{\mathbf{x} \in W} v(\mathbf{x})
\begin{bmatrix}
g_x^2(\mathbf{x}) & g_x(\mathbf{x})\, g_y(\mathbf{x}) \\
g_x(\mathbf{x})\, g_y(\mathbf{x}) & g_y^2(\mathbf{x})
\end{bmatrix}
=
\begin{bmatrix}
G_{xx} & G_{xy} \\
G_{yx} & G_{yy}
\end{bmatrix}
\tag{1}
$$

Here $g_i = \partial_i (g \ast I) = (\partial_i g) \ast I$, $i \in \{x, y\}$, and $g = G(x, y; \sigma)$. $v(\mathbf{x})$ is a weighting function, which is usually Gaussian or uniform, $p$ is the center pixel, $W$ is an integration window centered at $p$, $I$ is the image, $g$ is a 2-D Gaussian function, and $g_x$ and $g_y$ are the image gradients obtained by convolving the first-order partial derivatives of the Gaussian in the x and y directions with $I$, respectively.

The Harris corner detector evaluates the cornerness of each pixel without explicit eigenvalue decomposition, as in (2):

$$
R = |C| - k\,(\operatorname{trace}(C))^2. \tag{2}
$$

Since $|C| = \lambda_1 \lambda_2$ and $\operatorname{trace}(C) = \lambda_1 + \lambda_2$, where $\lambda_1$ and $\lambda_2$ are the eigenvalues of $C$, the Harris corner detector does not need to perform the eigenvalue decomposition explicitly. The value of $k$ is chosen in the range [0.04, 0.06]. An image pixel is a corner if both eigenvalues are large, which results in a peak response in $R$.

KLT explicitly computes the eigenvalues of $C(p)$. It selects those points for which the minimum eigenvalue, computed as in (3), is larger than a given threshold [3]:

$$
R = \lambda_{\min} = \min(\lambda_1, \lambda_2), \qquad
\lambda_{\min} = \frac{1}{2}\left(G_{xx} + G_{yy} - \sqrt{(G_{xx} - G_{yy})^2 + 4\,G_{xy}^2}\right). \tag{3}
$$

In fact, the Harris and KLT corner detectors follow a similar approach to detecting corner points, and differ only in the way the cornerness function is evaluated, as in (2) and (3), respectively. Thus, both the Harris and KLT algorithms can be divided into three main steps [2], [3]: 1) the Gaussian derivatives of the image $I$, $g_x$ and $g_y$, are computed by convolution with the Gaussian derivative kernel; 2) the matrix


TABLE I
Execution Time of Each Stage of the KLT Algorithm for an Image of Size 640 × 480 on a Commodity 2.53 GHz CPU

                      Gaussian Derivative   Cornerness   Sorting
Execution Time (ms)   40                    122          46

Algorithm 1 Robust LOCOCO detector

1: Compute an integral image, $ii$, of the original image $I$.
2: Compute the image gradients, $g_x$ and $g_y$, by using a Box kernel and the integral image $ii$.
3: Compute the integral images $ii_{xx}$, $ii_{yy}$, and $ii_{xy}$ of $g_x^2$, $g_y^2$, and $g_x g_y$, respectively.
4: Compute $G_{xx}$, $G_{yy}$, and $G_{xy}$ by using the integral images from step 3.
5: Evaluate the cornerness response $R$ as in (2) and (3) for SLC-Harris and SLC-KLT, respectively.
6: Perform efficient NMS on the cornerness response image $R$ to extract the maximum point for each corner region.
7: Perform interpolation of $R$ about the feature locations to find the sub-pixel accurate corner point locations.
For the SLC-KLT, sort the feature points according to their cornerness response $R$. ($F$ is the number of feature points.)

Fig. 1. Using the integral image takes three operations and four memory accesses to sum pixels within a rectangular window.

of all the pixels contained within the rectangle formed by the origin and the location (x, y) [22], as follows:

$$
ii(x, y) = \sum_{x' \le x,\; y' \le y} I(x', y'). \tag{4}
$$

With a recursive approach, two operations per pixel are required to compute the integral image:

$$
s(x, y) = s(x, y - 1) + I(x, y), \qquad
ii(x, y) = ii(x - 1, y) + s(x, y). \tag{5}
$$
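As an illustration of (4) and (5), the following minimal Python sketch builds the integral image with the two-step recurrence and then sums a rectangle with four reads and three additions or subtractions. The function names `integral_image` and `box_sum`, the `[y, x]` array indexing, and the zero boundary values are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def integral_image(img):
    """Integral image built with the recurrence in (5): two additions per pixel."""
    h, w = img.shape
    s = np.zeros((h, w), dtype=np.int64)   # s(x, y): cumulative sum down each column
    ii = np.zeros((h, w), dtype=np.int64)  # ii(x, y): sum over the rectangle from the origin
    for y in range(h):
        for x in range(w):
            # Out-of-range terms s(x, -1) and ii(-1, y) are taken as zero (assumption).
            s[y, x] = (s[y - 1, x] if y > 0 else 0) + img[y, x]
            ii[y, x] = (ii[y, x - 1] if x > 0 else 0) + s[y, x]
    return ii

def box_sum(ii, x0, y0, x1, y1):
    """Sum of the pixels with x0 <= x <= x1 and y0 <= y <= y1 (inclusive corners)."""
    a = ii[y1, x1]
    b = ii[y0 - 1, x1] if y0 > 0 else 0
    c = ii[y1, x0 - 1] if x0 > 0 else 0
    d = ii[y0 - 1, x0 - 1] if (x0 > 0 and y0 > 0) else 0
    return a - b - c + d  # three operations on four stored values
```

The cost of `box_sum` does not depend on the rectangle size, which is the property the later stages rely on.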

$C(p)$ and the cornerness measure $R$ are evaluated individually for each pixel; and 3) Quick-sort and NMS are used to suppress the locally weaker points. Our approach reduces the complexity in two ways. First, the integral image is used to reduce the complexity of both the convolution and the evaluation of the cornerness response. Second, efficient NMS is adopted, which avoids the highly complex sorting of the full response image. Table I shows the execution time of each stage of the algorithm; the cornerness response is computationally the most expensive.
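The two cornerness measures in (2) and (3) can be written directly in terms of the entries of $C(p)$. The short Python sketch below does this for a single pixel; the function names and the default `k = 0.04` (the lower end of the range quoted in the text) are choices made for this illustration only.

```python
import math

def harris_response(gxx, gyy, gxy, k=0.04):
    """Harris measure (2): det(C) - k * trace(C)^2, no eigen-decomposition needed."""
    det = gxx * gyy - gxy * gxy
    trace = gxx + gyy
    return det - k * trace * trace

def klt_response(gxx, gyy, gxy):
    """KLT measure (3): the smaller eigenvalue of the symmetric 2x2 matrix C."""
    return 0.5 * (gxx + gyy - math.sqrt((gxx - gyy) ** 2 + 4.0 * gxy ** 2))
```

For example, the matrix with $G_{xx} = G_{yy} = 2$ and $G_{xy} = 1$ has eigenvalues 1 and 3, so `klt_response(2, 2, 1)` returns the smaller one. The square root in `klt_response` is the extra cost that the paper later cites when explaining the lower speedup for KLT.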

Once the integral image is computed, the sum $S$ of all the pixel values within a rectangular window can be calculated in three operations and four memory accesses, as shown in Fig. 1.

B. Gaussian Derivative

The first stage in detecting corner feature points requires computing the partial image derivatives, $g_x$ and $g_y$, of an image $I$ in the x and y directions, respectively. The Gaussian kernel needs to be discretized and is often approximated with a finite impulse response filter, which requires a filter length of at least 4σ. The performance of the Harris corner detector was improved by using the Gaussian derivative kernel [1], as indicated by the improved repeatability. For faster convolution, a recursive implementation of the Gaussian derivative kernel has been used. In the recursive filter approach, the Gaussian derivative filter is approximated with an infinite impulse response filter [23], which also allows the filter length to be fixed. With this approach, the Gaussian derivative for different scales is computed in constant time. However, it is still computationally more expensive than our method.

Inspired by SURF [6], we also approximate the first-order Gaussian derivative kernel by a Box kernel. Fig. 2(a) and (b) shows the first-order Gaussian derivative kernel in the x-direction and its approximation by the Box kernel, respectively. The gray regions are set to zero. The black and white regions are approximated by −1 and +1, respectively. With the integral image, gradients are calculated at a low computational cost and in constant time. Computing the sum over the left black region and over the right white region requires three operations each, as described in Section IV-A. Hence, in total only seven operations and eight memory accesses are required to compute the gradient. The filter response is further normalized by the filter size. Convolution with the Gaussian derivative kernel combines two steps together: low-pass

IV. Robust LOCOCO Detector

The S-LOCOCO algorithm comprises the sub-pixel accurate low complexity Harris (SLC-Harris) and the sub-pixel accurate low complexity KLT (SLC-KLT). Since the Harris and KLT algorithms follow a similar approach to detecting corner pixels in the image, our method is applicable to both. To speed up the algorithm, the first-order Gaussian derivative kernel is approximated by the Box kernel, for which the convolution can be calculated at low computational cost by using the integral image, as described in Section IV-B. We create the integral images of $g_x^2$, $g_y^2$, and $g_x g_y$ to speed up the computation of the cornerness matrix in (1), which is required by (2) and (3), as described in Section IV-C. The highly complex sorting operation is replaced by the efficient NMS, as described in Section IV-D. Finally, for feature point stability, we interpolate to localize the feature points at sub-pixel accuracy, as described in Section IV-E. The steps of the S-LOCOCO algorithm are summarized in Algorithm 1.

A. Integral Image

In this section, we first describe the integral image briefly to make this article self-contained. The integral image $ii(x, y)$ at point (x, y), as shown in Fig. 1, is given by the summation


TABLE III
Computational Complexity Comparison in Computing the Cornerness for Each Pixel

              C(p) Multiplications   C(p) Additions   R   Total
Harris        3 × W²                 3 × W²           7   6 × W² + 7
KLT           3 × W²                 3 × W²           9   6 × W² + 9
SLC-Harris    3                      3 × (2 + 3)      7   25
SLC-KLT       3                      3 × (2 + 3)      9   27

Fig. 2. (a) Discrete Gaussian partial first-order derivative kernel. (b) Box kernel for the partial first-order derivative, σ = 1.2. The kernel size is 9 × 9 (4 9).

C. Cornerness Response

The cornerness stage is computationally the most intensive and time-consuming stage of the Harris and KLT algorithms. The cornerness $C(p)$ of a pixel is evaluated by summing the squares and products of the gradients within an integration window $W$ as in (1), and this is done at each pixel. Consequently, the computations for the pixels lying within the integration window $W$ overlap. Hence, this stage can be accelerated drastically by using the integral image. To accelerate the computation of the corner response, we therefore create the integral images of the gradient terms in (1), $g_x^2$, $g_y^2$, and $g_x g_y$, as follows:

$$
ii_{xx}(x, y) = \sum_{x' \le x,\; y' \le y} g_x^2(x', y') \tag{6}
$$

$$
ii_{yy}(x, y) = \sum_{x' \le x,\; y' \le y} g_y^2(x', y') \tag{7}
$$

$$
ii_{xy}(x, y) = \sum_{x' \le x,\; y' \le y} g_x(x', y')\, g_y(x', y'). \tag{8}
$$

TABLE II
Computational Complexity of Convolution with the First Order Gaussian Derivative Kernel, Recursive Filter, and the Box Kernel

                    Additions     Multiplications   Total
Gaussian Filter     2N            2N                4N
Recursive Filter    14            16                30
Box Filter          2 + 3 × 2     1                 9

Fig. 3. Computational cost reduction factor in computing image derivative by using the Box kernel and integral image as compared to original separable Gaussian derivative kernel for different kernel lengths (N).

filtering with a Gaussian and differentiation. The Gaussian function is well approximated by a triangle function after the subsequent integration with the integral imaging technique. Table II shows the complexity of the convolution with the original separable Gaussian derivative kernel, with the recursive filter (fourth-order approximation), and with the Box kernel using our method. Here, $N$ is the length of the Gaussian kernel. For the example in Fig. 2, our method requires two operations to create the integral image and six operations to calculate the filter response. Thus, for a 9 × 9 kernel corresponding to σ = 1.2, the speedup factor is at least 4 × 9/9 = 4. Moreover, the number of operations required by the Box kernel is independent of the kernel size, so the gain increases linearly with the kernel size, as shown in Fig. 3. The number of multiplications is reduced to one (for the normalization), and all other multiplications are replaced by additions and subtractions. As shown in Fig. 3, our method starts performing better for filters of size N > 2. In general, filter lengths greater than this are required.
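A minimal Python sketch of the Box-kernel gradient follows, under stated assumptions. The paper fixes only the signs of the lobes (−1 on the left, +1 on the right, zero elsewhere); the lobe geometry used here (two lobes of `lobe_w` columns separated by the center column, each `2 * half_h + 1` rows tall) and the normalization by the lobe area are assumptions for illustration, as are all function and parameter names.

```python
import numpy as np

def padded_integral(img):
    """Integral image with an extra zero row and column so no border checks are needed."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect(ii, y0, y1, x0, x1):
    """Sum of img[y0:y1, x0:x1] (half-open ranges): four reads, three operations."""
    return ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0]

def box_gradient_x(img, lobe_w=4, half_h=2):
    """x-gradient as (right lobe sum - left lobe sum) / lobe area; borders stay zero."""
    h, w = img.shape
    ii = padded_integral(img.astype(np.float64))
    gx = np.zeros((h, w))
    area = lobe_w * (2 * half_h + 1)  # assumed normalization constant
    for y in range(half_h, h - half_h):
        for x in range(lobe_w, w - lobe_w):
            right = rect(ii, y - half_h, y + half_h + 1, x + 1, x + 1 + lobe_w)
            left = rect(ii, y - half_h, y + half_h + 1, x - lobe_w, x)
            gx[y, x] = (right - left) / area  # one subtraction and one normalization
    return gx
```

The y-gradient can be obtained from the same routine by transposing, for example `box_gradient_x(img.T).T`. Each output pixel costs two `rect` calls plus a subtraction and a scaling regardless of `lobe_w`, which mirrors the constant operation count given in Table II.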

We assume a rectangular window for both algorithms, as is commonly done [3], [8], so that $v(\mathbf{x}) = 1$ for all $\mathbf{x} \in W$. Once the integral images are created as in (6)-(8), the sums $G_{xx}$, $G_{yy}$, and $G_{xy}$ in (1) can each be evaluated at a low computational cost, with only three operations and four memory accesses. As a result, the repeated multiplications and summations within the integration window $W$ at every pixel of the image are replaced by a one-time creation of the integral images followed by simple additions and subtractions. Moreover, this modification causes no loss in the quality of the detector while contributing a large speedup. Table III shows the per-pixel complexity of the original algorithms and of the S-LOCOCO algorithm, where $W$ is the integration window size. For a 9 × 9 window, the upper limit of the speedup factor is 15.4 and 14.5 for Harris and KLT, respectively. In practice, this speedup is slightly penalized by the extra memory accesses for $ii_{xy}$, which are not present in the original algorithm. In the original algorithm, once the gradients are read from memory, $g_x g_y$ can be calculated at the same time because both elements are already present in the internal registers of the CPU; this is not the case with the integral image, which has been pre-computed and resides in memory. In addition, the number of operations required to evaluate the cornerness is independent of the integration window size $W$, so the speedup factor increases quadratically with the window size, as shown in Fig. 4.
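The window sums $G_{xx}$, $G_{yy}$, and $G_{xy}$ can be sketched in Python as below, assuming a uniform weight $v(\mathbf{x}) = 1$ as in the text. The helper names, the vectorized four-corner lookup, and the choice to return values only for windows that fit entirely inside the image are assumptions made for this example.

```python
import numpy as np

def window_sums(gx, gy, half):
    """Gxx, Gyy, Gxy over every full (2*half+1) x (2*half+1) window, via (6)-(8)."""
    n = 2 * half + 1

    def integral(a):
        # Zero-padded integral image, as in (6)-(8) with an extra first row and column.
        ii = np.zeros((a.shape[0] + 1, a.shape[1] + 1))
        ii[1:, 1:] = a.cumsum(axis=0).cumsum(axis=1)
        return ii

    def box(ii):
        # Four-corner lookup for all window positions at once: entry [i, j]
        # is the sum over rows i..i+n-1 and columns j..j+n-1.
        return ii[n:, n:] - ii[:-n, n:] - ii[n:, :-n] + ii[:-n, :-n]

    return box(integral(gx * gx)), box(integral(gy * gy)), box(integral(gx * gy))

def harris_map(gx, gy, half, k=0.04):
    """Harris response (2) for every window center, using the sums above."""
    gxx, gyy, gxy = window_sums(gx, gy, half)
    return gxx * gyy - gxy ** 2 - k * (gxx + gyy) ** 2
```

Entry `[i, j]` of each returned array corresponds to the window centered at pixel `(i + half, j + half)`. The per-window work is three lookups of three operations each, independent of `half`, whereas the direct evaluation of (1) grows with the window area.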


Fig. 4. Computational cost reduction factor in computing cornerness response by using the integral image as compared to the original approach for different integration window sizes.

Fig. 5. Efficient NMS algorithm. d is the minimum distance between the feature points. Black dots are the maxima within each block, and these points are tested for being local maxima, as shown by the dotted windows for points a, b, c, and so on.
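The block-wise search that Fig. 5 illustrates can be sketched in Python as follows. This is a simplified illustration and not the E-NMS of [12]: it partitions the response into (d + 1) × (d + 1) blocks, takes the maximum of each block, and re-tests that candidate against its full (2d + 1) × (2d + 1) neighborhood, but it does not reuse comparison results between blocks. The function name, the strict handling of ties, and the returned `(y, x, response)` tuples are assumptions for this example.

```python
import numpy as np

def block_nms(R, d, threshold):
    """Block-based NMS on response image R; returns maxima sorted by response."""
    h, w = R.shape
    keep = []
    for by in range(0, h, d + 1):
        for bx in range(0, w, d + 1):
            block = R[by:by + d + 1, bx:bx + d + 1]
            iy, ix = np.unravel_index(np.argmax(block), block.shape)
            y, x = by + iy, bx + ix
            v = R[y, x]
            if v <= threshold:
                continue  # block maximum is too weak to be a feature
            # Full neighborhood of the candidate, clipped at the image border.
            y0, y1 = max(0, y - d), min(h, y + d + 1)
            x0, x1 = max(0, x - d), min(w, x + d + 1)
            # Keep only strict maxima: no other pixel in the window reaches v.
            if np.count_nonzero(R[y0:y1, x0:x1] >= v) == 1:
                keep.append((int(y), int(x), float(v)))
    # Sorting now touches only the surviving points, not every pixel.
    keep.sort(key=lambda t: t[2], reverse=True)
    return keep
```

Only one candidate per block reaches the neighborhood test, and the final sort runs over the handful of retained points, which is the reordering of sorting and suppression that this section argues for.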

Hence, we can expect an even larger speedup for feature point detection at larger scales.

D. NMS

NMS is performed over the cornerness response image to preserve a single location for each corner feature point. NMS for the KLT detector was performed in two stages [3]. First, Quick-sort [24] was used to arrange the cornerness responses $R$ over the image in descending order [3], [8], [10], which is a computationally expensive operation because the list of response points to be sorted has the size of the image. Afterward, non-maximum points were suppressed by picking the strong response points from the sorted list and removing the weaker response points later in the list that lie within the distance $d$ of this feature. As a result, a minimum distance between the feature points was enforced.

The naive implementation of NMS in a local neighborhood of (2d + 1) × (2d + 1) around each cornerness response also leads to a high complexity for Harris [2]. To find a maximum point, each pixel was compared with all the pixels lying in a (2d + 1) × (2d + 1) window. The pixel was selected as a maximum point if it was higher than all of these pixels and above the threshold. This process was repeated for all the pixels in the cornerness response image. Once a maximum point is found, however, all the neighboring pixels up to distance $d$ in all directions can be skipped, as they are smaller by construction. This information is exploited effectively in the efficient NMS [12].

For the S-LOCOCO algorithm, we adopt the efficient non-maximum suppression (E-NMS) method proposed in [12] to efficiently extract a unique feature location for each corner region. The E-NMS performs NMS on image blocks instead of pixel by pixel, thus reducing the computational complexity. Intuitively, both the Quick-sort and the minimum distance enforcement stages are mainly aimed at tracking [3] a single location for each corner region. This is equivalent to NMS.
However, we can switch the order by first performing efficient NMS, which is computationally less complex, and only then sorting the feature points according to their cornerness response. Since sorting is then performed on a small number of points, the complexity is reduced drastically. The E-NMS optimally reuses the comparison results across the NMS window to perform NMS at a minimal cost.

The E-NMS algorithm, as shown in Fig. 5, works as follows. First, it partitions the image into blocks of size (d + 1) × (d + 1). Then, the maximum element is searched within each block individually. Finally, for each maximum within a block, the full neighborhood is tested for a maximum, as shown in Fig. 5 by the dotted window. The feature point location is retained if it passes the local maximum test and is larger than the threshold. Finally, for KLT, we sort the feature points according to their cornerness response. The average theoretical computational complexity of Quick-sort and E-NMS for an image of size b × h is shown in Table IV, where $d$ is the distance between features. For an image of size 1000 × 700 and d = 10, the theoretical speedup factor is 12, and the speedup factor increases logarithmically with the image size, as shown in Fig. 6.

Fig. 6. Computation cost reduction factor in performing NMS by using E-NMS as compared to Quick-sort for different image sizes (complexity of sorting feature points is small and hence not shown).

TABLE IV
Computational Complexity of Quick-Sort and E-NMS

Method                                   Complexity
Quick-Sort                               $1.39\,bh \log_2(bh)$
Efficient NMS + Feature Point Sorting    $\left(2 + \frac{2.5}{d+1} - \frac{0.5}{(d+1)^2}\right) bh + 1.39\,F \log_2(F)$

E. Sub-Pixel Accurate Feature Point Localization

The corner feature points located by the above three stages lie at the extremum of the cornerness response at pixel resolution. Sub-pixel accuracy minimizes the projection error. Feature point localization at sub-pixel accuracy is needed for accurate estimation of the correspondence between frames, while estimating the correspondence parameters


[25], because the projection error is used as the objective function for optimization. For example, after correspondences between feature points are obtained, RANSAC [26] uses the projection error of the feature points to find the inlier subset, and Levenberg-Marquardt (LM) [27] further uses the projection error as the optimization function to estimate the correspondence parameters accurately. Thus, localizing the feature points at sub-pixel accuracy is required to estimate the correspondence parameters precisely. The repeatability of feature point detectors was also improved by localizing the interest points at sub-pixel accuracy in scale-space [5], [28].

To localize a corner feature point at sub-pixel accuracy, the cornerness response image $R$ computed in Section IV-C is interpolated around the feature point location. Since the cornerness response $R$ is a nonlinear function of intensity, $R$ is expanded in a Taylor series up to the quadratic term about the feature point location (x, y) as follows:

$$
R(\mathbf{x}) = R + \frac{\partial R}{\partial \mathbf{x}}^{T} \mathbf{x} + \frac{1}{2}\, \mathbf{x}^{T} \frac{\partial^2 R}{\partial \mathbf{x}^2}\, \mathbf{x} \tag{9}
$$

where $R$ and its derivatives are evaluated at the corner feature point and $\mathbf{x} = (x, y)^T$. The derivatives of the cornerness response are calculated by taking differences of neighboring samples. The location of the extremum $\hat{\mathbf{x}}$ of $R$ is obtained by taking the derivative of (9) with respect to $\mathbf{x}$ and setting it to zero. Solving the resulting equation gives the sub-pixel location:

$$
\hat{\mathbf{x}} = -\left(\frac{\partial^2 R}{\partial \mathbf{x}^2}\right)^{-1} \frac{\partial R}{\partial \mathbf{x}}. \tag{10}
$$

The sub-pixel location $\hat{\mathbf{x}}$ is added to the feature location to obtain the sub-pixel accurate feature point location.

TABLE V
Memory Complexity Comparison

             Memory Blocks                                                         Total Memory
KLT [8]      $I + g_x + g_y + T^a + C + 3S^b + M^c + 2F$                           9WH + 2F
Harris       $I + g_x + g_y + T^a + C + 2F$                                        5WH + 2F
S-LOCOCO     $I + ii + ii_{xx} + ii_{yy} + ii_{xy} + C + 3F^d + 2F$                6WH + 5F

a Buffer required by convolution.
b Point list required by Quick-sort to sort cornerness response.
c Mask table for each pixel to perform NMS.
d Quick-sort feature points (F is the number of feature points).
W = image width, H = image height.

with the original approach. Repeatability is an important property of a feature point detection algorithm. It measures the usefulness of the corner detection algorithm by checking whether it can accurately detect the same physical region in images taken under various viewing conditions. The repeatability (r) is defined as follows:

$$
r = \frac{\text{No. of features repeated}}{\text{No. of useful features detected}} \times 100.
$$
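The sub-pixel refinement of (9) and (10) can be sketched in Python as below. The paper states only that the derivatives are taken as differences of neighboring samples; the specific central-difference stencils on a 3 × 3 patch, the function name, and the `(dx, dy)` return order are assumptions for this illustration.

```python
import numpy as np

def subpixel_offset(R, y, x):
    """Offset (dx, dy) of the response extremum from pixel (x, y), following (10)."""
    # First derivatives by central differences (assumed stencil).
    dx = 0.5 * (R[y, x + 1] - R[y, x - 1])
    dy = 0.5 * (R[y + 1, x] - R[y - 1, x])
    # Second derivatives from the same 3x3 neighborhood.
    dxx = R[y, x + 1] - 2.0 * R[y, x] + R[y, x - 1]
    dyy = R[y + 1, x] - 2.0 * R[y, x] + R[y - 1, x]
    dxy = 0.25 * (R[y + 1, x + 1] - R[y + 1, x - 1]
                  - R[y - 1, x + 1] + R[y - 1, x - 1])
    hessian = np.array([[dxx, dxy], [dxy, dyy]])
    gradient = np.array([dx, dy])
    # Solve H * offset = -gradient rather than forming the inverse explicitly.
    return -np.linalg.solve(hessian, gradient)
```

For a response that is exactly quadratic, these stencils are exact, so the returned offset recovers the true peak. Note that `np.linalg.solve` raises `LinAlgError` when the Hessian is singular, so a caller would need to handle flat neighborhoods separately.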

V. Memory Complexity

Table V shows the memory requirements of the original algorithms and of S-LOCOCO. The memory required by the feature points is negligible compared to the image size. We removed the memory required by Quick-sort and NMS in the KLT algorithm, where Quick-sort requires three times the image size to store the cornerness and the image coordinates (x and y) for sorting. We also removed the extra buffer required by convolution (T) for both Harris and KLT. In S-LOCOCO, the gradients $g_x$ and $g_y$ are not used in later phases of the algorithm, so their buffers are reused to store the integral images. Since computing an integral image adds the previous integral value to the current data, the same memory location can be overwritten, which saves two image-sized blocks of memory. As a result, S-LOCOCO requires one extra image-sized buffer compared to Harris, whereas compared to KLT it saves three image-sized buffers.

We used the data-sets and software provided by Mikolajczyk [29] to evaluate the repeatability. The evaluation data-sets provide ground-truth homographies and include images with decreasing illumination, increasing blur, viewpoint angle changes, and scaling. To compute the repeatability, the feature points in each image are detected individually. Then, the repeated feature points within the overlapping region of the images are counted by projecting the feature point locations between the images using the ground-truth homography of the data-sets. After projection, each feature point is searched for in the other image within a distance ε ≤ 1.5 pixels. The repeatability is expressed as a percentage: the number of features detected around the same physical location within the distance ε, out of the total number of useful features detected in the overlapping region of the images.
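The counting procedure described above can be sketched in Python as follows. This is a simplified illustration and not the evaluation software of [29]: it assumes the input points have already been restricted to the overlapping region, takes the smaller of the two point counts as the number of useful features, and counts a projected point as repeated whenever any point of the other image lies within `eps`, without enforcing one-to-one matches. All names are hypothetical.

```python
import numpy as np

def project(H, pts):
    """Apply a 3x3 homography H to an (n, 2) array of (x, y) points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def repeatability(pts_a, pts_b, H_ab, eps=1.5):
    """Percentage of points from image A that reappear in image B within eps pixels."""
    proj = project(H_ab, pts_a)
    # Pairwise distances between projected A points and B points.
    dist = np.linalg.norm(proj[:, None, :] - pts_b[None, :, :], axis=2)
    repeated = np.count_nonzero(dist.min(axis=1) <= eps)
    useful = min(len(pts_a), len(pts_b))  # assumed definition of "useful features"
    return 100.0 * repeated / useful
```

With three points per image, of which two project to within 1.5 pixels of a point in the other image, the function returns two thirds of 100. Because matches are not one-to-one here, dense point sets could overcount, which a full evaluation would need to prevent.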

VII. Experimental Results and Discussion

The S-LOCOCO algorithm comprises SLC-Harris and SLC-KLT. The LOCOCO algorithm comprises LC-Harris and LC-KLT [11]. We compared our SLC-Harris and SLC-KLT corner detection algorithms with the original Harris and KLT corner detectors in terms of execution speed and quality of feature point detection. We also compared S-LOCOCO with LOCOCO to measure the performance improvement obtained from sub-pixel accurate feature point localization. We measured the quality of the feature point detectors by the repeatability, as described in Section VI. We used the KLT code provided in [8] and our own implementation of Harris, written along the same lines as the KLT code. We also applied the sub-pixel interpolation of feature point locations to the original Harris and KLT algorithms, so that the algorithms can be compared without the influence of sub-pixel accurate localization. Fig. 7 shows the comparison of the execution time and speedup factor for each stage between the original and our

VI. Repeatability

We used the repeatability criterion, as introduced in [1], to compare the quality of feature point detection of S-LOCOCO


Fig. 7. Comparison of execution time (Intel i5, 2.53 GHz, 3 GB RAM, and N = 9, W = 9, d = 10, feature count 1000).

TABLE VI
Comparison with Other Methods

| Image Size | Method | Execution Time (ms) | Platform |
|---|---|---|---|
| 768 × 288 | SLC-Harris | 13.9 | 2.53 GHz i5 CPU |
| 768 × 288 | FAST [30] | 1.34 | 2.6 GHz Opteron CPU |
| 768 × 288 | SUSAN [30] | 7.58 | 2.6 GHz Opteron CPU |
| 800 × 640 | SLC-Harris | 32.1 | 2.53 GHz i5 CPU |
| 800 × 640 | SIFT^a [6] | 400 | 3 GHz Pentium 4 CPU |
| 800 × 640 | SURF^a [6] | 70 | 3 GHz Pentium 4 CPU |

^a Only feature point detection. Note that SIFT and SURF are scale-invariant

robust LOCOCO algorithm (SLC-Harris and SLC-KLT). We achieved a speedup by a factor of 9.8 ± 0.6 for Harris and 8.6 ± 0.6 for KLT with the code implemented in C. The speedup factor for KLT is lower than for Harris because the eigenvalue decomposition used by KLT to compute the cornerness response R needs a square root operation, which requires a higher cycle count. For KLT, the extracted feature points are sorted according to their cornerness response, which takes a very limited amount of time. For images of size 640 × 480, the SLC-Harris corner detector achieves 50 f/s on an i5 CPU with 2.53 GHz and 3 GB random access memory (RAM), whereas the original Harris algorithm achieves only 5 f/s. This enables many real-time applications, such as video stabilization, video mosaicing, and others, to detect corner feature points at an acceptable frame rate. Table VI shows the execution time comparison with other feature point detectors.

Next, we evaluate the repeatability, as a measure of quality, of S-LOCOCO, LOCOCO [11], and the original Harris and KLT algorithms on the data-sets provided by Mikolajczyk [29]. We used the blur (Bike) and illumination variation (Leuven) data-sets for evaluation. We also evaluated the repeatability on a planar rotated image and by adding increasing Gaussian noise to the illumination variation (Leuven) data-set. As shown in Fig. 8(a)–(d), the repeatability for S-LOCOCO is comparable to or slightly better than that of the Harris and KLT algorithms for rotation, increasing Gaussian noise, increasing blur, and decreasing illumination, respectively, for ε ≤ 1.5. With the sub-pixel interpolation, the repeatability of S-LOCOCO is improved by 5% on average compared to the LOCOCO algorithm. For image rotation, as shown in Fig. 8(a), the repeatability is lowest

at a rotation angle of π/4 due to the square shape of the filter. For image rotations, the repeatability for S-LOCOCO is also improved compared to LOCOCO due to the sub-pixel interpolation of the feature point location. The repeatability stays above 70%, which shows that invariance to rotation is preserved. For the images with increasing blur, as shown in Fig. 8(c), the repeatability drops drastically for the final blur setting because none of the detectors is scale invariant. With increasing blur, the previously detected feature point regions are blurred, which changes the scale of the corresponding region. Because feature point detection is performed at a single scale, this adds error to the feature point localization for all the algorithms and thus lowers the repeatability. For illumination variation, as shown in Fig. 8(d), the repeatability of S-LOCOCO is slightly better than that of Harris and KLT. The repeatability remains above 55% for all the algorithms, which shows their strong invariance to illumination variation; this is because the corner detectors operate on gradients rather than directly on the image pixels.

There is no loss of quality with our approach in evaluating the corner response by the integral image technique and efficient NMS, while at the same time these stages of the corner detector gain a major speedup. The major change that would likely impact the quality of the detector is the replacement of the Gaussian derivative kernel by the Box kernel in computing the image gradients. However, the experimental results show good repeatability, indicating good quality in feature point detection. The convolution of the image with the Gaussian derivative kernel combines two steps: first, the image is filtered with a low-pass Gaussian filter and second, the image is differentiated. Thus, computing the image derivative with the Box kernel, which approximates the Gaussian derivative kernel as shown in Fig. 2(b), is equivalent to smoothing the image with a triangle function, followed by differentiation. Moreover, the Gaussian kernel is discretized, which is similar to the discretization of the triangle function, at least for smaller sigma. Thus, our algorithm gives detection results similar to those of the original algorithm.

The repeatability only measures whether feature points are detected within a certain distance; it does not indicate the actual projection error of the algorithms. Consequently, to measure the exact influence of the sub-pixel interpolation of the feature point location, and thus the actual performance improvement of S-LOCOCO over LOCOCO, we compute the average projection error. To compute it, feature points are detected in each image individually. Then, the feature point correspondences with a distance ε ≤ 1.5 are computed by using the ground-truth homography of the data-sets. Using the ground-truth homography avoids the effect of tracking error on estimating correspondences, so that only errors from the feature point localization are considered. Then, RANSAC [26] and LM [27] are applied on the correspondence set to estimate the homography parameters. Finally, the average projection error is computed by using this homography to project feature points between the images. Table VII shows the average projection error of the


Fig. 8. Repeatability evaluated on (a) planar rotation of an image, (b) increasing Gaussian noise added in the second image of the Leuven data-set, (c) increasing blur settings, and (d) decreasing illumination variation settings.

TABLE VII
Comparison of the Average Projection Error on Registering the Images with the Blur, Illumination, and Rotation, With and Without Sub-Pixel Interpolation^a

| Setting | Image / Angle | SLC-Harris | LC-Harris | SLC-KLT | LC-KLT |
|---|---|---|---|---|---|
| Illumination^b | 2 | 0.274 | 0.547 | 0.309 | 0.601 |
| Illumination^b | 3 | 0.341 | 0.635 | 0.401 | 0.607 |
| Blur^c | 2 | 0.269 | 0.553 | 0.372 | 0.637 |
| Blur^c | 3 | 0.430 | 0.674 | 0.520 | 0.765 |
| Rotation (degree) | 10 | 0.161 | 0.477 | 0.253 | 0.525 |
| Rotation (degree) | 20 | 0.260 | 0.544 | 0.450 | 0.652 |
| Rotation (degree) | 30 | 0.361 | 0.614 | 0.549 | 0.775 |
| Rotation (degree) | 40 | 0.462 | 0.733 | 0.572 | 0.768 |
| Rotation (degree) | 50 | 0.526 | 0.797 | 0.538 | 0.775 |
| Rotation (degree) | 60 | 0.465 | 0.741 | 0.517 | 0.744 |
| Rotation (degree) | 70 | 0.274 | 0.580 | 0.443 | 0.692 |
| Rotation (degree) | 80 | 0.135 | 0.448 | 0.203 | 0.517 |
| Rotation (degree) | 90 | 0.003 | 0.008 | 0.004 | 0.008 |

^a Interpolation time Ti = 0.20 ms for 1000 feature points.
^b On registering Leuven data-set images 2 and 3 with the image 1.
^c On registering Bike data-set images 2 and 3 with the image 1.

TABLE VIII
Average Projection Error on Registering the Successive Images Taken from the Video

| Video | Average Projection Error (SLC-Harris + KLT Tracking) |
|---|---|
| Laparoscopy video 1^a | 0.96 ± 0.28 |
| Laparoscopy video 2^a | 1.08 ± 0.25 |
| Laparoscopy video 3^a | 1.12 ± 0.30 |
| Endoscopy colon^b | 1.15 ± 0.30 |
| Leuven Library^c | 0.29 ± 0.15 |
| Arenberg Castle^c | 0.28 ± 0.14 |
| Aerial view^c | 0.32 ± 0.15 |

^a Laparoscopy video in digital video disc (DVD) [31].
^b Endoscopic video of colon with narrow band light [32].
^c Video captured by hand-held camera by sweeping over the scene.

feature point correspondences, with and without the sub-pixel accurate feature point localization, on the illumination variation (Leuven) and blur (Bike) data-sets by registering images 2 and 3 with image 1. The average projection error obtained with the sub-pixel interpolation is two to three times smaller than without sub-pixel interpolation. Meanwhile, the execution time overhead added by the interpolation is also very small.

VIII. Application to Image Registration

We used SLC-Harris to detect corner feature points while registering video frames, which is the basic step for applications such as video stabilization and video frame mosaicing [33]. To perform image registration, we used our


Fig. 9. Image registration of the successive images of laparoscopic surgery video. (a)–(d) Feature points detected by SLC-Harris. (e)–(h) KLT tracking of the feature points in the consecutive frame and RANSAC to estimate the homography (black inliers and red outliers). (i)–(l) Registration of the second image with the first image (second frame transparent and at the back).

SLC-Harris to detect corner feature points. Then, the corner feature points are tracked across successive frames using the KLT tracking algorithm [8]. The feature point correspondences obtained from tracking are refined using the RANSAC scheme [25] to estimate the homography between the frames. Then, LM optimization [25], [27] is applied to optimize the homography parameters using the inlier subset from RANSAC. We measured the projection error on registering the second frame with the first frame and averaged the resulting projection errors. Experiments were performed on various laparoscopy [31], endoscopy [32], and outdoor scene videos. The medical videos are captured at high frame rates, hence rigidity between the frames can be assumed [34]. On average, 20 random image pairs were considered from each medical video and 50 random image pairs from each outdoor video. The outdoor videos were captured with a hand-held camera swept over the scene, so that the camera motion includes translation as well as rotation. The medical video is taken from a video captured during surgery and provided on DVD in [31]. Table VIII shows the average projection error and its variance in registering the frames using SLC-Harris feature points for various pairs of frames of the laparoscopy and outdoor videos. Figs. 9 and 10 show feature point detection, KLT tracking, and image registration using SLC-Harris on a few images from the laparoscopy and outdoor videos used in the evaluation in Table VIII.
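The error measurement at the end of this pipeline can be sketched in Python as follows. This is a simplified illustration, not the authors' code: a plain direct linear transform (DLT) fit stands in for the RANSAC and LM stages, so it assumes the correspondences are already outlier-free, and all function names are hypothetical.

```python
import numpy as np

def project(points, H):
    """Apply a 3x3 homography H to an (N, 2) array of (x, y) points."""
    pts = np.hstack([points, np.ones((len(points), 1))])
    mapped = pts @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

def fit_homography_dlt(src, dst):
    """Least-squares homography from at least 4 correspondences (unnormalized DLT)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)      # right singular vector of the smallest singular value
    return H / H[2, 2]            # assumes H[2, 2] is not close to zero

def average_projection_error(pts_a, pts_b, H):
    """Mean Euclidean distance between H-projected pts_a and their matches pts_b."""
    return float(np.mean(np.linalg.norm(project(pts_a, H) - pts_b, axis=1)))

def mean_and_variance(errors):
    """Summarize per-pair errors as (mean, population variance)."""
    errors = np.asarray(errors, dtype=float)
    return float(errors.mean()), float(errors.var())

if __name__ == "__main__":
    src = np.array([[0, 0], [100, 0], [100, 80], [0, 80], [50, 40]], dtype=float)
    H_true = np.array([[1.0, 0.02, 5.0], [-0.01, 1.0, -3.0], [1e-4, 0.0, 1.0]])
    dst = project(src, H_true)
    H_est = fit_homography_dlt(src, dst)
    print(average_projection_error(src, dst, H_est))
```

In practice the per-pair errors from many frame pairs would be passed to `mean_and_variance` to obtain one summary entry per video.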


Fig. 10. Image registration of successive video frames of University Library of Leuven, Arenberg Castle Leuven, and Aerial view of parking, respectively. (a)–(c) Feature points detected by SLC-Harris. (d)–(f) KLT tracking of the feature points in the consecutive frame and RANSAC to estimate the homography (black inliers and red outliers). (g)–(i) Registration of the second image with the first image (second frame transparent and at the back).

IX. Conclusion

In this paper, we developed a method, named S-LOCOCO, to speed up the Harris and KLT corner detectors. We cropped the Gaussian derivative kernel and represented it with the Box kernel to speed up the convolution by using the integral image. We further used an integral image representation to speed up the computation of the cornerness response. We adopted an E-NMS for NMS of the cornerness response points, thus avoiding the highly complex sorting operation. The feature point locations are interpolated for sub-pixel accuracy. We evaluated the quality of the detectors based on repeatability. The repeatability results indicated that our algorithm and the original algorithms have a similar quality of feature point detection. Thus, we achieved both high speed and good quality in feature point detection. Future work will focus on extending our method to high-speed scale-invariant corner detection.

Acknowledgment

The authors would like to thank B. Geelen for his help while preparing this manuscript.

References

[1] C. Schmid, R. Mohr, and C. Bauckhage, "Evaluation of interest point detectors," Int. J. Comput. Vision, vol. 37, no. 2, pp. 151–172, 2000.
[2] C. Harris and M. Stephens, "A combined corner and edge detector," in Proc. 4th Alvey Vision Conf., 1988, pp. 147–151.
[3] C. Tomasi and T. Kanade, "Detection and tracking of point features," Dept. Comput. Sci., Carnegie Mellon Univ., Pittsburgh, PA, Tech. Rep. CMU-CS-91-132, Apr. 1991.
[4] E. Rosten, R. Porter, and T. Drummond, "Faster and better: A machine learning approach to corner detection," IEEE Trans. Patt. Anal. Mach. Intell., vol. 32, no. 1, pp. 105–119, Jan. 2010.
[5] D. G. Lowe, "Distinctive image features from scale-invariant keypoints," Int. J. Comput. Vision, vol. 60, no. 2, pp. 91–110, 2004.
[6] H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, "Speeded-up robust features (SURF)," Comput. Vision Image Understand., vol. 110, no. 3, pp. 346–359, 2008.
[7] K. Mikolajczyk and C. Schmid, "Scale and affine invariant interest point detectors," Int. J. Comput. Vision, vol. 60, no. 1, pp. 63–86, 2004.
[8] S. Birchfield. (2007, Aug.). KLT: An Implementation of the Kanade-Lucas-Tomasi Feature Tracker [Online]. Available: http://www.ces.clemson.edu/~stb/klt
[9] L. Teixeira, W. Celes, and M. Gattass, "Accelerated corner-detector algorithms," in Proc. British Mach. Vision Conf., Sep. 2008.
[10] S. N. Sinha, J. M. Frahm, M. Pollefeys, and Y. Genc, "GPU-based video feature tracking and matching," in Proc. Workshop Edge Comput. Using New Commodity Architect., 2006.
[11] P. Mainali, Q. Yang, G. Lafruit, R. Lauwereins, and L. V. Gool, "LOCOCO: Low complexity corner detector," in Proc. IEEE Int. Conf. Acou., Speech Signal Process., Mar. 2010, pp. 810–813.
[12] A. Neubeck and L. Van Gool, "Efficient non-maximum suppression," in Proc. IEEE Int. Conf. Patt. Recog., vol. 3. Sep. 2006, pp. 850–855.
[13] R. P. Horaud, T. Skordas, and F. Veillon, "Finding geometric and relational structures in an image," in Proc. 1st Eur. Conf. Comput. Vision, vol. 427. Apr. 1990, pp. 374–384.
[14] F. Shilat, M. Werman, and Y. Gdalyahu, "Ridge's corner detection and correspondence," in Proc. IEEE Conf. Comput. Vision Patt. Recog., Jun. 1997, pp. 976–981.


[15] F. Mokhtarian and R. Suomela, "Robust image corner detection through curvature scale space," IEEE Trans. Patt. Anal. Mach. Intell., vol. 20, no. 12, pp. 1376–1381, Dec. 1998.
[16] S. M. Smith and J. M. Brady, "SUSAN: A new approach to low level image processing," Int. J. Comput. Vision, vol. 23, no. 1, pp. 45–78, 1997.
[17] P. Beaudet, "Rotational invariant image operators," in Proc. Int. Conf. Patt. Recog., 1978, pp. 579–583.
[18] L. Kitchen and A. Rosenfeld, "Gray-level corner detection," in Proc. Patt. Recog. Lett., 1982, pp. 95–102.
[19] H. P. Moravec, "Toward automatic visual obstacle avoidance," in Proc. 5th Int. Joint Conf. Artif. Intell., 1977, p. 584.
[20] W. Forstner, "A framework for low level feature extraction," in Proc. 3rd Eur. Conf.-Vol. II Comput. Vision, 1994, pp. 383–394.
[21] L.-H. Zou, J. Chen, J. Zhang, and L.-H. Dou, "The comparison of two typical corner detection algorithms," in Proc. 2nd Int. Symp. Intell. Inform. Tech. Applicat., 2008, pp. 211–215.
[22] P. A. Viola and M. J. Jones, "Rapid object detection using a boosted cascade of simple features," in Proc. IEEE Comput. Vision Patt. Recog., Apr. 2001, pp. 511–518.
[23] R. Deriche, "Recursively implementing the Gaussian and its derivatives," INRIA, Unité de Recherche Sophia-Antipolis, Sophia-Antipolis, France, Tech. Rep. 1893, 1993.
[24] C. A. R. Hoare, "Quicksort," Comput. J., vol. 5, no. 1, pp. 10–16, 1962.
[25] R. Hartley and A. Zisserman, Multiple View Geometry in Computer Vision. Cambridge, U.K.: Cambridge Univ. Press, 2000, pp. 87–127.
[26] M. A. Fischler and R. C. Bolles, "Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography," Commun. ACM, vol. 24, no. 6, pp. 381–395, 1981.
[27] D. W. Marquardt, "An algorithm for least-squares estimation of nonlinear parameters," J. Soc. Indust. Appl. Math., vol. 11, no. 2, pp. 431–441, 1963.
[28] M. Brown and D. Lowe, "Invariant features from interest point groups," in Proc. British Mach. Vision Conf., 2002, pp. 656–665.
[29] K. Mikolajczyk. (2007, Jun.). Affine Covariant Features [Online]. Available: http://www.robots.ox.ac.uk/~vgg/research/affine
[30] E. Rosten and T. Drummond, "Machine learning for high-speed corner detection," in Proc. Eur. Conf. Comput. Vision, vol. 1. May 2006, pp. 430–443.
[31] A. L. Covens and R. Kupets, Laparoscopic Surgery for Gynecologic Oncology. New York: McGraw Hill, 2009.
[32] J. East, N. Suzuki, and B. Saunders, "Narrow-band imaging-NBI in the colon," Wolfson Unit Endoscopy, St. Mark's Hospital, London, U.K., 2006. Available: http://www.wolfsonendoscopy.org.uk/st-markmultimedia-nbi-in-the-colon.html
[33] P. Suchit, S. Ryusuke, E. Tomio, and Y. Yagi, "Deformable registration for generating dissection image of an intestine from annular image sequence," in Proc. Comput. Vision Biomedical Image Applicat., 2005, pp. 271–280.
[34] R. Miranda-Luna, C. Daul, W. Blondel, Y. Hernandez-Mier, D. Wolf, and F. Guillemin, "Mosaicing of bladder endoscopic image sequences: Distortion calibration and registration algorithm," IEEE Trans. Biomedical Eng., vol. 55, no. 2, pp. 541–553, Feb. 2008.

Pradip Mainali (S'10) received the B.E. degree from the National Institute of Technology, Surat, India, in 2002, and the Master of Technology Design in Embedded Systems degree jointly awarded by the National University of Singapore, Singapore, and Technical University of Eindhoven, Eindhoven, The Netherlands, in 2006. He is currently pursuing the Ph.D. degree from the Katholieke Universiteit Leuven, Leuven, Belgium, in collaboration with Interuniversitair Micro-Electronica Centrum VZW, Leuven. His current research interests include computer vision and machine learning.

Qiong Yang (M'03) received the Ph.D. degree from Tsinghua University, Beijing, China, in 2004. From 2004 to 2007, she was an Associate Researcher with Microsoft Research Asia, Beijing. In September 2007, she joined VISICS Corporation, Leuven, Belgium. She has been with Interuniversitair Micro-Electronica Centrum VZW, Leuven, as a Senior Research Scientist since January 2009. Her current research interests include pattern recognition and machine learning, feature detection/extraction and matching, visual tracking and detection, object segmentation and cutout, stereo and multiview vision, and multichannel fusion and visualization.

Gauthier Lafruit (M'99) is currently a Principal Scientist Multimedia with the Department of Nomadic Embedded Systems, Interuniversitair Micro-Electronica Centrum VZW (IMEC), Leuven, Belgium. He has acquired image processing expertise in various applications (video coding, multicamera acquisition, multiview rendering, stereo matching, image analysis, and others) applying the Triple-A philosophy, i.e., finding the appropriate tradeoff between Application specifications, Algorithm complexity, and Architecture (platform) support. From 1989 to 1994, he was a Research Scientist with the Belgian National Foundation for Scientific Research, Brussels, Belgium, mainly active in the area of wavelet image compression. Subsequently, he was a Research Assistant with the Vrije Universiteit Brussel (Free University of Brussels), Brussels. In 1996, he joined IMEC, where he was a Senior Scientist for the design of low-power very large-scale integration for combined JPEG/wavelet compression engines. In this role, he has made decisive contributions to the standardization of 3-D-implementation complexity management in MPEG-4. Since 2006, he has been actively contributing to the 3-DTV and multiview video research domain. This presentation will mainly focus on the latter, following the aforementioned Triple-A philosophy with Application-Algorithm-Architecture tradeoffs. His current research interests include progressive transmission in still image, video and 3-D object coding, as well as scalability and resource monitoring for video stereoscopic applications and advanced 3-D graphics.

Luc Van Gool (M'85) received a Master's degree in electromechanical engineering and the Ph.D. degree from the Katholieke Universiteit Leuven, Leuven, Belgium, in 1981 and 1991, respectively. Currently, he is a Full Professor with the Katholieke Universiteit Leuven and the Eidgenössische Technische Hochschule, Zurich, Switzerland. He leads computer vision research at both places, where he also teaches computer vision. He is the Co-Founder of five spin-off companies. He has authored over 200 papers in this field. His current research interests include 3-D reconstruction and modeling, object recognition, and tracking and gesture analysis. Prof. Van Gool is the recipient of several Best Paper Awards. He has been a program committee member of several major computer vision conferences.

Rudy Lauwereins (SM'97) received the Ph.D. degree in electrical engineering in 1989. He is currently the Vice President of Interuniversitair Micro-Electronica Centrum VZW (IMEC), Leuven, Belgium, which performs world-leading research and delivers industry-relevant technology solutions through global partnerships in nanoelectronics, information and communication technologies, healthcare, and energy. He is responsible for IMEC's Smart Systems Technology Office, covering energy-efficient green radios, vision systems, (bio)medical and lifestyle electronics, as well as wireless autonomous transducer systems and large area organic electronics. He is also a part-time Full Professor with the Department of Electrical Engineering, Katholieke Universiteit Leuven, Leuven, where he teaches computer architectures in the M.S. in Electrotechnical Engineering Program. Before joining IMEC in 2001, he held a tenure professorship with the Faculty of Engineering, Katholieke Universiteit Leuven, since 1993. He has authored and co-authored more than 350 publications in international journals, books and conference proceedings. Dr. Lauwereins has served on numerous international program committees and organizational committees, and has given many invited and keynote speeches. He was the General Chair of the Design, Automation and Test in Europe Conference in 2007.

Potrebbero piacerti anche

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (74)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- 2022 WR Extended VersionDocumento71 pagine2022 WR Extended Versionpavankawade63Nessuna valutazione finora

- Standard Answers For The MSC ProgrammeDocumento17 pagineStandard Answers For The MSC ProgrammeTiwiNessuna valutazione finora

- 50114a Isolemfi 50114a MonoDocumento2 pagine50114a Isolemfi 50114a MonoUsama AwadNessuna valutazione finora

- 7TH Maths F.a-1Documento1 pagina7TH Maths F.a-1Marrivada SuryanarayanaNessuna valutazione finora

- 2016 Closing The Gap ReportDocumento64 pagine2016 Closing The Gap ReportAllan ClarkeNessuna valutazione finora

- Ccoli: Bra Ica Ol A LDocumento3 pagineCcoli: Bra Ica Ol A LsychaitanyaNessuna valutazione finora

- Cyber Briefing Series - Paper 2 - FinalDocumento24 pagineCyber Briefing Series - Paper 2 - FinalMapacheYorkNessuna valutazione finora

- Thesis PaperDocumento53 pagineThesis PaperAnonymous AOOrehGZAS100% (1)

- Tool Charts PDFDocumento3 pagineTool Charts PDFtebengz100% (2)

- Jackson V AEGLive - May 10 Transcripts, of Karen Faye-Michael Jackson - Make-up/HairDocumento65 pagineJackson V AEGLive - May 10 Transcripts, of Karen Faye-Michael Jackson - Make-up/HairTeamMichael100% (2)

- SweetenersDocumento23 pagineSweetenersNur AfifahNessuna valutazione finora

- An Evaluation of MGNREGA in SikkimDocumento7 pagineAn Evaluation of MGNREGA in SikkimBittu SubbaNessuna valutazione finora

- QuexBook TutorialDocumento14 pagineQuexBook TutorialJeffrey FarillasNessuna valutazione finora

- Acute Appendicitis in Children - Diagnostic Imaging - UpToDateDocumento28 pagineAcute Appendicitis in Children - Diagnostic Imaging - UpToDateHafiz Hari NugrahaNessuna valutazione finora

- Development Developmental Biology EmbryologyDocumento6 pagineDevelopment Developmental Biology EmbryologyBiju ThomasNessuna valutazione finora

- Genetics Icar1Documento18 pagineGenetics Icar1elanthamizhmaranNessuna valutazione finora

- Applications SeawaterDocumento23 pagineApplications SeawaterQatar home RentNessuna valutazione finora

- Sveba Dahlen - SRP240Documento16 pagineSveba Dahlen - SRP240Paola MendozaNessuna valutazione finora

- (1921) Manual of Work Garment Manufacture: How To Improve Quality and Reduce CostsDocumento102 pagine(1921) Manual of Work Garment Manufacture: How To Improve Quality and Reduce CostsHerbert Hillary Booker 2nd100% (1)

- PDF Chapter 5 The Expenditure Cycle Part I Summary - CompressDocumento5 paginePDF Chapter 5 The Expenditure Cycle Part I Summary - CompressCassiopeia Cashmere GodheidNessuna valutazione finora

- Module 2 MANA ECON PDFDocumento5 pagineModule 2 MANA ECON PDFMeian De JesusNessuna valutazione finora

- Chapter 4 Achieving Clarity and Limiting Paragraph LengthDocumento1 paginaChapter 4 Achieving Clarity and Limiting Paragraph Lengthapi-550339812Nessuna valutazione finora

- The Covenant Taken From The Sons of Adam Is The FitrahDocumento10 pagineThe Covenant Taken From The Sons of Adam Is The FitrahTyler FranklinNessuna valutazione finora

- Unsuccessful MT-SM DeliveryDocumento2 pagineUnsuccessful MT-SM DeliveryPitam MaitiNessuna valutazione finora

- (Jones) GoodwinDocumento164 pagine(Jones) Goodwinmount2011Nessuna valutazione finora

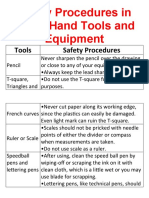

- Safety Procedures in Using Hand Tools and EquipmentDocumento12 pagineSafety Procedures in Using Hand Tools and EquipmentJan IcejimenezNessuna valutazione finora

- Lesson 3 - ReviewerDocumento6 pagineLesson 3 - ReviewerAdrian MarananNessuna valutazione finora

- PSA Poster Project WorkbookDocumento38 paginePSA Poster Project WorkbookwalliamaNessuna valutazione finora

- An Annotated Bibliography of Timothy LearyDocumento312 pagineAn Annotated Bibliography of Timothy LearyGeetika CnNessuna valutazione finora

- SASS Prelims 2017 4E5N ADocumento9 pagineSASS Prelims 2017 4E5N ADamien SeowNessuna valutazione finora