Data & Knowledge Engineering 68 (2009) 1271-1288

Contents lists available at ScienceDirect

Data & Knowledge Engineering

journal homepage: www.elsevier.com/locate/datak

Text document clustering based on neighbors

Congnan Luo (a), Yanjun Li (b), Soon M. Chung (c, *)

(a) Teradata Corporation, San Diego, CA 92127, USA

(b) Department of Computer and Information Science, Fordham University, Bronx, NY 10458, USA

(c) Department of Computer Science and Engineering, Wright State University, Dayton, OH 45435, USA

Abstract

Clustering is a very powerful data mining technique for topic discovery from text documents. The partitional clustering algorithms, such as the family of k-means, are reported to perform well on document clustering. They treat the clustering problem as an optimization process of grouping documents into k clusters so that a particular criterion function is minimized or maximized. Usually, the cosine function is used to measure the similarity between two documents in the criterion function, but it may not work well when the clusters are not well separated. To solve this problem, we applied the concepts of neighbors and link, introduced in [S. Guha, R. Rastogi, K. Shim, ROCK: a robust clustering algorithm for categorical attributes, Information Systems 25 (5) (2000) 345-366], to document clustering. If two documents are similar enough, they are considered neighbors of each other, and the link between two documents represents the number of their common neighbors. Instead of considering only the pairwise similarity, the neighbors and link incorporate global information into the measurement of the closeness of two documents. In this paper, we propose to use the neighbors and link for the family of k-means algorithms in three aspects: a new method to select initial cluster centroids based on the ranks of candidate documents; a new similarity measure which uses a combination of the cosine and link functions; and a new heuristic function for selecting a cluster to split based on the neighbors of the cluster centroids. Our experimental results on real-life data sets demonstrate that our proposed methods can significantly improve the accuracy of document clustering without much increase in execution time.

© 2009 Elsevier B.V. All rights reserved.

Article info

Article history: Received 17 February 2008; received in revised form 20 June 2009; accepted 22 June 2009; available online 1 July 2009.

Keywords: Document clustering; Text mining; k-means; Bisecting k-means; Performance analysis

1. Introduction

How to explore and utilize the huge amount of text documents is a major question in the areas of information retrieval and text mining. Document clustering (also referred to as text clustering) is one of the most important text mining methods developed to help users effectively navigate, summarize, and organize text documents. By organizing a large number of documents into meaningful clusters, document clustering can be used to browse a collection of documents or to organize the results returned by a search engine in response to a user's query [27]. It can significantly improve the precision and recall of information retrieval systems [19,27,28,31], and it is an efficient way to find the nearest neighbors of a document [3]. The problem of document clustering is generally defined as follows: given a set of documents, partition them into a predetermined or automatically derived number of clusters, such that the documents assigned to each cluster are more similar to each other than to the documents assigned to different clusters. In other words, the documents in one cluster share the same topic, and the documents in different clusters represent different topics.

* Corresponding author. Tel.: +1 (937) 775 5119; fax: +1 (937) 775 5133. E-mail address: soon.chung@wright.edu (S.M. Chung).

0169-023X/$ - see front matter © 2009 Elsevier B.V. All rights reserved. doi:10.1016/j.datak.2009.06.007


There are two general categories of clustering methods: agglomerative hierarchical and partitional methods. In previous research, both have been applied to document clustering. Agglomerative hierarchical clustering (AHC) algorithms initially treat each document as a cluster, use different kinds of distance functions to compute the similarity between pairs of clusters, and then merge the closest pair [11]. This merging step is repeated until the desired number of clusters is obtained. In contrast to the bottom-up approach of AHC algorithms, the family of k-means algorithms [6,23,24,29], which belongs to the category of partitional clustering, creates a one-level partitioning of the documents. The k-means algorithm is based on the idea that a centroid can represent a cluster. After k initial centroids are selected, each document is assigned to a cluster based on a distance measure (between the document and each of the k centroids), and then the k centroids are recalculated. This step is repeated until an optimal set of k clusters is obtained based on a criterion function. For document clustering, the Unweighted Pair Group Method with Arithmetic Mean (UPGMA) [11] is reported to be the most accurate algorithm in the AHC category. Bisecting k-means [26,34] is reported to outperform both k-means and the agglomerative approach in terms of accuracy and efficiency. In bisecting k-means, the whole data set is initially treated as a single cluster. Based on a rule, a cluster is selected and split into two by the basic k-means algorithm. This bisecting step is repeated until the desired number of clusters is obtained. Generally speaking, the partitional clustering algorithms are well suited for clustering large text databases due to their relatively low computational requirements and high clustering quality. A key characteristic of the partitional clustering algorithms is that a global criterion function is used, whose optimization drives the entire clustering process.
The goal of this criterion function is to optimize different aspects of intra-cluster similarity, inter-cluster dissimilarity, and their combinations. A well-known similarity measure is the cosine function, which is widely used in document clustering algorithms and is reported to perform very well [34]. The cosine function can be used in the family of k-means algorithms to assign each document to the cluster with the most similar centroid, in an effort to maximize the intra-cluster similarity. Since the cosine function measures the similarity of two documents, only the pairwise similarity is considered when we determine whether a document is assigned to a cluster. However, when the clusters are not well separated, partitioning them based only on the pairwise similarity is not good enough, because some documents in different clusters may be similar to each other. To avoid this problem, we applied the concepts of neighbors and link, introduced in [14], to document clustering. In general, if two data points are similar enough, they are considered neighbors of each other. Every data point can have a set of neighbors in the data set for a certain similarity threshold. The link between two data points represents the number of their common neighbors [14]. For example, link(p_i, p_j) is the number of common neighbors of two data points p_i and p_j. In [14], the link function is used in an agglomerative algorithm for clustering data with categorical attributes, and it obtained better clusters than traditional algorithms. Each text document can be viewed as a tuple with boolean attribute values, where each attribute corresponds to a unique term. An attribute value is true if the corresponding term exists in the document. Since a boolean attribute is a special case of a categorical attribute, we can treat documents as data with categorical attributes.
With this assumption, the concepts of neighbors and link can provide valuable information about the documents in the clustering process. We believe that the intra-cluster similarity is better measured based not only on the distance between the documents and the centroid, but also on their neighbors. The link function can be used to enhance the evaluation of the closeness between documents because it takes the information of surrounding documents into consideration. In this paper, we propose to use the neighbors and link along with the cosine function in different aspects of the k-means and bisecting k-means algorithms for clustering documents. The family of k-means algorithms has two phases: initial clustering and cluster refinement [36]. The initial clustering phase is the process of choosing a desired number k of initial centroids and assigning documents to their closest centroids in order to form initial partitions. The cluster refinement phase is the optimization process that adjusts the partitions by repeatedly calculating the new cluster centroids based on the documents assigned to them and reassigning the documents. First, we propose a new method of selecting the initial centroids. It is well known that the performance of the family of k-means algorithms is very sensitive to the initial centroids [11]. It is very important that the initial centroids are distributed well enough to attract sufficient nearby, topically related documents [24]. Our selection of the initial centroids is based on three values: the pairwise similarity value calculated by the cosine function, the link function value, and the number of neighbors of documents in the data set. This combination helps us find a group of high-quality initial centroids. Second, we propose a new similarity measure to determine the closest cluster centroid for each document during the cluster refinement phase. This similarity measure is composed of the cosine and link functions.
We believe that, besides the pairwise similarity, involving the documents in the neighborhood can improve the accuracy of the closeness measurement between a document and a cluster centroid. Third, we propose a new heuristic function for the bisecting k-means algorithm to select a cluster to split. Unlike the k-means algorithm, which splits the whole data set into k clusters at each iteration step, the bisecting k-means algorithm splits only one existing cluster into two subclusters. Our selection of a cluster to split is based on the neighbors of the centroids, instead of the sizes of the clusters, because the concept of neighbors provides more information about the intra-cluster similarity of each cluster. We evaluated the performance of our proposed clustering algorithms on various real-life data sets extracted from the Reuters-21578 Distribution 1.0 [30], the Classic text database [5], and a corpus of the Text Retrieval Conference (TREC) [16]. Our clustering algorithms demonstrated a significant improvement in clustering accuracy.


The rest of this paper is organized as follows. In Section 2, we review the vector space model of documents, the cosine function, the concepts of neighbors and link, and the k-means and bisecting k-means algorithms. In Section 3, our proposed applications of the neighbors and link in the k-means and bisecting k-means algorithms are described in detail. In Section 4, the experimental results of our clustering algorithms are compared with those of the original algorithms in terms of clustering accuracy. Section 5 reviews related work, and Section 6 contains some conclusions and future work.

2. Background

2.1. Vector space model of text documents

In most existing document clustering algorithms, documents are represented by the vector space model [31]. In this model, each document d is considered as a vector in the term space and is represented by the term frequency (TF) vector:

$$
d_{tf} = (tf_1, tf_2, \ldots, tf_D) \tag{1}
$$

where tf_i is the frequency of term i in the document, and D is the total number of unique terms in the text database. Normally, several preprocessing steps are applied to the documents, including stop-word removal and stemming. A widely used refinement of this model is to weight each term based on its inverse document frequency (IDF) in the document collection. The idea is that terms appearing frequently in many documents have limited discrimination power, so they need to be de-emphasized [31]. This is commonly done by multiplying the frequency of each term i by log(n/df_i), where n is the total number of documents in the collection, and df_i is the number of documents that contain term i (i.e., its document frequency). Thus, the tf-idf representation of the document d is:

$$
d_{tfidf} = \left(tf_1 \log(n/df_1),\; tf_2 \log(n/df_2),\; \ldots,\; tf_D \log(n/df_D)\right) \tag{2}
$$
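As an illustration only, the tf-idf weighting of Eq. (2), followed by the unit-length normalization applied to every document vector, can be sketched in Python as below. The function name `tfidf_vectors` is hypothetical, documents are assumed to be already tokenized, stop-word filtered, and stemmed, and the natural logarithm is assumed (the base only rescales every weight by the same factor, which cancels out after normalization).

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Hypothetical helper: docs is a list of token lists (already preprocessed).

    Returns (vocab, vectors), where each vector holds tf_i * log(n / df_i) for
    every term i of the sorted vocabulary, scaled to unit length.
    """
    vocab = sorted({term for doc in docs for term in doc})
    n = len(docs)
    # df_i: number of documents that contain term i at least once
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)  # tf_i: raw frequency of term i in this document
        weights = [tf[t] * math.log(n / df[t]) for t in vocab]
        norm = math.sqrt(sum(w * w for w in weights))
        # normalize to unit length; an all-zero vector is left as is
        vectors.append([w / norm for w in weights] if norm > 0 else weights)
    return vocab, vectors


docs = [["car", "engine", "car", "the"], ["auto", "car", "the"], ["sister", "brother", "the"]]
vocab, vectors = tfidf_vectors(docs)
```

In this toy collection, a term such as "the" that occurs in every document gets the weight log(3/3) = 0, which is the de-emphasis described above.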

To account for documents of different lengths, each document vector is normalized to a unit vector (i.e., ‖d_tfidf‖ = 1). In the rest of this paper, we assume that this vector space model is used to represent documents during clustering. Given a set C_j of documents and their corresponding vector representations, the centroid vector c_j is defined as:

$$
c_j = \frac{1}{|C_j|} \sum_{d_i \in C_j} d_i \tag{3}
$$

where each d_i is a document vector in the set C_j, and |C_j| is the number of documents in C_j. Note that even though each document vector d_i is of unit length, the centroid vector c_j is not necessarily of unit length.

2.2. Cosine similarity measure

Various similarity measures are available for document clustering. The most commonly used is the cosine function [31]. For two documents d_i and d_j, the similarity between them can be calculated as:

$$
\cos(d_i, d_j) = \frac{d_i \cdot d_j}{\|d_i\|\,\|d_j\|} \tag{4}
$$

Since the document vectors are of unit length, the above equation simplifies to:

$$
\cos(d_i, d_j) = d_i \cdot d_j \tag{5}
$$
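A small sketch of Eqs. (3)-(5), with hypothetical helper names, makes the note about centroid length concrete: the centroid of two orthogonal unit vectors has length √0.5, so computing the cosine between a document and a centroid needs the full form of Eq. (4), since Eq. (5) holds only for unit-length vectors.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Eq. (4); for unit-length u and v this equals dot(u, v), i.e., Eq. (5)."""
    nu, nv = math.sqrt(dot(u, u)), math.sqrt(dot(v, v))
    if nu == 0 or nv == 0:
        return 0.0  # convention for an empty document vector (an assumption)
    return dot(u, v) / (nu * nv)


def centroid(cluster):
    """Eq. (3): component-wise mean of the document vectors in a cluster."""
    return [sum(column) / len(cluster) for column in zip(*cluster)]


d1, d2 = [1.0, 0.0], [0.0, 1.0]  # two orthogonal unit document vectors
c = centroid([d1, d2])           # [0.5, 0.5], whose length is sqrt(0.5) < 1
# dot(d1, c) is 0.5, while cosine(d1, c) is sqrt(0.5), about 0.707
```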

The cosine value is 1 when two documents are identical, and 0 when they have nothing in common (i.e., their document vectors are orthogonal to each other).

2.3. Neighbors and link

The neighbors of a document d in a data set are those documents that are considered similar to it [14]. Let sim(d_i, d_j) be a similarity function capturing the pairwise similarity between two documents d_i and d_j, with values between 0 and 1, where a larger value indicates higher similarity. For a given threshold θ, d_i and d_j are defined as neighbors of each other if

$$
\mathrm{sim}(d_i, d_j) \ge \theta, \quad \text{with } 0 \le \theta \le 1. \tag{6}
$$

Here θ is a user-defined threshold that controls how similar a pair of documents must be in order to be considered neighbors of each other. If we use the cosine as sim and set θ to 1, a document can be a neighbor only of documents identical to it. On the other hand, if θ is set to 0, any pair of documents would be neighbors. Depending on the application, the user can choose an appropriate value for θ. The information about the neighbors of every document in the data set can be represented by a neighbor matrix. The neighbor matrix of a data set of n documents is an n × n adjacency matrix M, in which an entry M[i, j] is 1 or 0 depending on whether documents d_i and d_j are neighbors or not [14]. The number of neighbors of a document d_i in the data set is denoted by N(d_i), and it is the number of entries whose values are 1 in the ith row of the matrix M.
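The neighbor matrix and the neighbor counts N(d_i) can be sketched as follows, assuming unit-length document vectors so that sim is the dot product of Eq. (5). The function names are hypothetical, and the sketch assumes the diagonal follows the same rule as every other entry, so each document (with sim(d_i, d_i) = 1 ≥ θ) counts as its own neighbor.

```python
def unit_cosine(u, v):
    """Eq. (5): the cosine of two unit-length vectors is their dot product."""
    return sum(a * b for a, b in zip(u, v))


def neighbor_matrix(vectors, theta, sim=unit_cosine):
    """n x n 0/1 matrix with M[i][j] = 1 iff sim(d_i, d_j) >= theta (Eq. (6))."""
    n = len(vectors)
    return [[1 if sim(vectors[i], vectors[j]) >= theta else 0 for j in range(n)]
            for i in range(n)]


def neighbor_counts(M):
    """N(d_i): number of 1-entries in row i of M (here including d_i itself)."""
    return [sum(row) for row in M]


# Toy unit vectors: cos(d1, d2) = 0.8, cos(d2, d3) = 0.6, cos(d1, d3) = 0
docs = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
M = neighbor_matrix(docs, theta=0.5)
```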


The value of the link function link(d_i, d_j) is defined as the number of common neighbors between d_i and d_j [14]. It can be obtained by multiplying the ith row of the neighbor matrix M by its jth column:

$$
\mathrm{link}(d_i, d_j) = \sum_{m=1}^{n} M[i, m] \cdot M[m, j] \tag{7}
$$
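Eq. (7) is the (i, j) entry of the matrix product M·M. A plain-Python sketch (the name `link_matrix` is hypothetical), applied to a toy 3-document neighbor matrix, shows how two documents that are not neighbors of each other still obtain a nonzero link through a shared neighbor.

```python
def link_matrix(M):
    """link(d_i, d_j) = sum over m of M[i][m] * M[m][j] (Eq. (7)), i.e., M @ M."""
    n = len(M)
    return [[sum(M[i][m] * M[m][j] for m in range(n)) for j in range(n)]
            for i in range(n)]


# Toy neighbor matrix: d1 and d3 are not neighbors, but both are neighbors of d2.
# Diagonal entries are 1, i.e., each document is assumed to be its own neighbor.
M = [[1, 1, 0],
     [1, 1, 1],
     [0, 1, 1]]
L = link_matrix(M)
```

Here link(d_1, d_3) = 1 even though M[1, 3] = 0, because d_2 is a common neighbor; with the diagonal included, link(d_1, d_2) = 2 counts d_1 and d_2 themselves.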

Thus, if link(d_i, d_j) is large, it is more probable that d_i and d_j are close enough to be in the same cluster. Since the cosine measures only the similarity between two documents, using it alone can be considered a local approach to clustering. Involving the link function can be considered a global approach to clustering [14], because it uses the knowledge of neighbor documents in evaluating the relationship between two documents. Thus, the link function is also a good candidate for measuring the closeness of two documents.

2.4. k-Means and bisecting k-means algorithms for document clustering

k-means is a popular algorithm that partitions a data set into k clusters. If the data set contains n documents, denoted by d_1, d_2, ..., d_n, then clustering is the optimization process of grouping them into k clusters so that the global criterion function

$$
\sum_{j=1}^{k} \sum_{i=1}^{n} \mathrm{sim}(d_i, c_j) \tag{8}
$$

is either minimized or maximized, depending on the definition of sim(d_i, c_j). Here c_j represents the centroid of cluster C_j, for j = 1, ..., k, and sim(d_i, c_j) evaluates the similarity between a document d_i and a centroid c_j. When the vector space model is used to represent the documents and the cosine is used for sim(d_i, c_j), each document is assigned to the cluster whose centroid vector is more similar to the document than those of the other clusters, and in that case the global criterion function is maximized. This optimization problem is known to be NP-complete [12], and the k-means algorithm was proposed to provide an approximate solution [17]. The steps of k-means are as follows:

1. Select k initial cluster centroids, each of which represents a cluster.
2. For each document in the whole data set, compute the similarity with each cluster centroid, and assign the document to the closest (i.e., most similar) centroid. (assignment step)
3. Recalculate the k centroids based on the documents assigned to them.
4. Repeat steps 2 and 3 until convergence.

The bisecting k-means algorithm [34] is a variant of k-means. The key point of bisecting k-means is that only one cluster is split into two subclusters at each step. This algorithm starts with the whole data set as a single cluster, and its steps are as follows:

1. Select a cluster C_j to split based on a heuristic function.
2. Find 2 subclusters of C_j using the k-means algorithm: (bisecting step)
   (a) Select 2 initial cluster centroids.
   (b) For each document of C_j, compute the similarity with the 2 cluster centroids, and assign the document to the closer centroid. (assignment step)
   (c) Recalculate the 2 centroids based on the documents assigned to them.
   (d) Repeat steps 2b and 2c until convergence.
3. Repeat step 2 I times, and select the split that produces the best clustering result in terms of the global criterion function.
4. Repeat steps 1, 2 and 3 until k clusters are obtained.
I denotes the number of iterations for each bisecting step, and it is usually specified in advance.
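For reference, the two procedures above can be sketched in plain Python with the cosine as sim(d_i, c_j). This is a minimal illustration under stated assumptions, not the authors' implementation: initial centroids are drawn at random (with a fixed seed) unless supplied, an empty cluster keeps its previous centroid, the bisecting variant always splits the largest cluster (the rule recommended in [34]), `trials` plays the role of I, and the inner sum of the criterion is taken over the documents assigned to each cluster. All function names are hypothetical.

```python
import math
import random


def cos(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def mean(vectors):
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def kmeans(docs, k, init=None, max_iter=100, rng=None):
    """Steps 1-4 of k-means; returns (labels, centroids, criterion)."""
    rng = rng or random.Random(0)
    # Step 1: supplied initial centroids, or k randomly chosen documents
    centroids = [list(c) for c in (init or rng.sample(docs, k))]
    labels = None
    for _ in range(max_iter):
        # Step 2 (assignment step): most similar centroid for every document
        new_labels = [max(range(k), key=lambda j: cos(d, centroids[j])) for d in docs]
        if new_labels == labels:  # Step 4: stop when no assignment changes
            break
        labels = new_labels
        # Step 3: recompute each centroid; an empty cluster keeps its old one
        for j in range(k):
            members = [d for d, lab in zip(docs, labels) if lab == j]
            if members:
                centroids[j] = mean(members)
    # criterion: each document's cosine to the centroid of its own cluster
    criterion = sum(cos(d, centroids[lab]) for d, lab in zip(docs, labels))
    return labels, centroids, criterion


def bisecting_kmeans(docs, k, trials=5, rng=None):
    """Bisecting k-means that always splits the largest cluster; returns index lists."""
    rng = rng or random.Random(0)
    clusters = [list(range(len(docs)))]  # the whole data set as one cluster
    while len(clusters) < k:
        target = max(clusters, key=len)  # step 1 (heuristic: largest cluster)
        if len(target) < 2:
            break
        sub = [docs[i] for i in target]
        # steps 2-3: run the bisecting step `trials` times and keep the best split
        best_labels, best_crit = None, -math.inf
        for _ in range(trials):
            labels, _, crit = kmeans(sub, 2, rng=rng)
            if crit > best_crit:
                best_labels, best_crit = labels, crit
        halves = [[i for i, lab in zip(target, best_labels) if lab == s] for s in (0, 1)]
        if not all(halves):  # degenerate split (e.g., identical documents): stop
            break
        clusters.remove(target)
        clusters.extend(halves)
    return clusters


docs = [[1, 0, 0], [0.9, 0.1, 0], [0, 1, 0], [0.1, 0.9, 0], [0, 0, 1], [0, 0.1, 0.9]]
labels, _, _ = kmeans(docs, 3, init=[docs[0], docs[2], docs[4]])
clusters = bisecting_kmeans(docs, 3)
```

Passing `init` lets a smarter selection method, such as the one proposed in Section 3.1, replace the random choice in step 1 without touching the rest of the loop.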

3. Applications of the neighbors and link in the k-means and bisecting k-means algorithms

3.1. Selection of initial cluster centroids based on the ranks

The family of k-means algorithms starts with initial cluster centroids, and documents are assigned to the clusters iteratively in order to minimize or maximize the value of the global criterion function. Clustering algorithms based on this kind of iterative process are known to be computationally efficient, but they often converge to local minima or maxima of the global criterion function, and there is no guarantee that they will reach the global optimum. Since different sets of initial cluster centroids can lead to different final clustering results, starting with a good set of initial cluster centroids is one way to overcome this problem.


Three algorithms are available for the selection of initial centroids: random, buckshot [6], and fractionation [6]. The random algorithm randomly chooses k documents from the data set as the initial centroids [11]. The buckshot algorithm picks √(kn) documents randomly from the data set of n documents and clusters them using a clustering algorithm; the k centroids resulting from this clustering become the initial centroids. The fractionation algorithm splits the documents into buckets of the same size, and the documents within each bucket are clustered. Then these clusters are treated as if they were individual documents, and the whole procedure is repeated until k clusters are obtained. The centroids of the resulting k clusters become the initial centroids. In this paper, we propose a new method of selecting initial centroids based on the concepts of neighbors and link in addition to the cosine. The documents in one cluster are supposed to be more similar to each other than to the documents in different clusters. Thus, a good candidate for an initial centroid should be not only close enough to a certain group of documents but also well separated from the other centroids. By setting an appropriate similarity threshold θ, the number of neighbors of a document in the data set can be used to evaluate how many documents are close enough to it. Since both the cosine and link functions can measure the similarity of two documents, here we use them together to evaluate the dissimilarity of two documents that are initial centroid candidates. First, by checking the neighbor matrix of the data set, we list the documents in descending order of their numbers of neighbors. In order to find a set of initial centroid candidates, each of which is close enough to a certain group of documents, the top m documents are selected from this list.
This set of m initial centroid candidates is denoted by S_m, with m = k + n_plus, where k is the desired number of clusters and n_plus is the number of extra candidates selected. Since these m candidates have the most neighbors in the data set, we assume they are more likely to be the centers of clusters. For example, consider a data set S containing 6 documents, {d_1, d_2, d_3, d_4, d_5, d_6}, whose neighbor matrix is shown in Fig. 1. When θ = 0.3, k = 3 and n_plus = 1, S_m has four documents: S_m = {d_4, d_1, d_2, d_3}. Next, we obtain the cosine and link values between every pair of documents in S_m, and then rank the document pairs in ascending order of their cosine and link values, respectively. For a pair of documents d_i and d_j, let rank_cos(d_i, d_j) be its rank based on the cosine value, rank_link(d_i, d_j) be its rank based on the link value, and rank(d_i, d_j) be the sum of rank_cos(d_i, d_j) and rank_link(d_i, d_j). For both rank_cos(d_i, d_j) and rank_link(d_i, d_j), a smaller value represents a higher rank, and 0 corresponds to the highest rank. Pairs with equal values receive the same rank; for example, the three pairs with link value 3 in Table 1 all have rank_link = 3. As a result, a smaller rank(d_i, d_j) value also represents a higher rank. The ranks of the document pairs are shown in Table 1. Initial centroids should be well separated from each other in order to represent the whole data set. Thus, the document pairs with high ranks can be considered good initial centroid candidates. For the selection of k initial centroids out of m candidates, there are C(m, k) possible combinations. Each combination is a k-subset of S_m, and we calculate the rank value of each combination com_k as:

$$
\mathrm{rank}(com_k) = \sum_{d_i, d_j \in com_k,\; i < j} \mathrm{rank}(d_i, d_j) \tag{9}
$$

That is, the rank value of a combination is the sum of the rank values of the C(k, 2) pairs of initial centroid candidates in the combination. In this example, there are 4 combinations available, and their rank values are shown in Table 2. Then, we choose the combination with the highest rank (i.e., the smallest rank value) as the set of initial centroids for the k-means algorithm. In this example, {d_1, d_2, d_3} is chosen since its rank value (6) is the smallest among the four combinations.

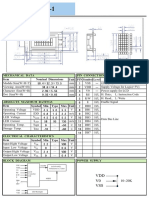

Fig. 1. Neighbor matrix M of data set S with θ = 0.3.

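Table 1. Similarity measurement between initial centroid candidates.

| (d_i, d_j) | (d_1, d_2) | (d_1, d_3) | (d_1, d_4) | (d_2, d_3) | (d_2, d_4) | (d_3, d_4) |
|---|---|---|---|---|---|---|
| cos | 0.35 | 0.10 | 0.40 | 0 | 0.50 | 0.60 |
| rank_cos | 2 | 1 | 3 | 0 | 4 | 5 |
| link | 3 | 1 | 3 | 1 | 3 | 2 |
| rank_link | 3 | 0 | 3 | 0 | 3 | 2 |
| rank(d_i, d_j) | 5 | 1 | 6 | 0 | 7 | 7 |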


Table 2. Rank values of the candidate sets of initial centroids.

| com_k | C(k, 2) pairs of centroid candidates | rank(com_k) |
|---|---|---|
| {d_1, d_2, d_3} | {d_1, d_2}, {d_1, d_3}, {d_2, d_3} | 6 |
| {d_1, d_2, d_4} | {d_1, d_2}, {d_1, d_4}, {d_2, d_4} | 18 |
| {d_1, d_3, d_4} | {d_1, d_3}, {d_1, d_4}, {d_3, d_4} | 14 |
| {d_2, d_3, d_4} | {d_2, d_3}, {d_2, d_4}, {d_3, d_4} | 14 |
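The rank-based selection can be sketched as below and checked against Tables 1 and 2. The helper names are hypothetical; tied values are given the same rank, namely the number of strictly smaller values, which reproduces the rank_link row of Table 1; and the neighbor counts passed to `top_m_candidates` in the check are illustrative values, not those of Fig. 1.

```python
from itertools import combinations


def top_m_candidates(neighbor_counts, k, n_plus):
    """S_m: indices of the m = k + n_plus documents with the most neighbors."""
    order = sorted(range(len(neighbor_counts)), key=lambda i: -neighbor_counts[i])
    return order[:k + n_plus]


def ascending_ranks(values):
    """0-based ascending rank; tied values share the rank of their first position."""
    return {key: sum(1 for other in values.values() if other < v)
            for key, v in values.items()}


def select_initial_centroids(candidates, cos, link, k):
    """cos and link map each candidate pair (i, j), i < j, to its value."""
    pairs = list(combinations(sorted(candidates), 2))
    rank_cos = ascending_ranks({p: cos[p] for p in pairs})
    rank_link = ascending_ranks({p: link[p] for p in pairs})
    rank = {p: rank_cos[p] + rank_link[p] for p in pairs}        # rank(d_i, d_j)
    com_rank = {com: sum(rank[p] for p in combinations(com, 2))  # Eq. (9)
                for com in combinations(sorted(candidates), k)}
    best = min(com_rank, key=com_rank.get)  # smallest rank value = highest rank
    return best, rank, com_rank


# Values of Table 1, with documents d_1..d_4 numbered 1..4
cos = {(1, 2): 0.35, (1, 3): 0.10, (1, 4): 0.40, (2, 3): 0.0, (2, 4): 0.50, (3, 4): 0.60}
link = {(1, 2): 3, (1, 3): 1, (1, 4): 3, (2, 3): 1, (2, 4): 3, (3, 4): 2}
best, rank, com_rank = select_initial_centroids([4, 1, 2, 3], cos, link, k=3)
```

The candidate list [4, 1, 2, 3] mirrors S_m = {d_4, d_1, d_2, d_3}; sorting the candidates makes the pair keys independent of that order, and `best` comes out as (1, 2, 3) with rank value 6, as in Table 2.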

The documents in this combination are considered to be well separated from each other, while each of them is close enough to a group of documents, so they can serve as the initial centroids of the k-means algorithm. The effectiveness of this proposed method depends on the selection of n_plus and on the distribution of the cluster sizes. In Section 4.3.1, we will discuss how to select an appropriate n_plus to achieve the best clustering result. For data sets with a large variation in cluster sizes, the initial centroids selected by this method may not be distributed over all the clusters, and some of them may fall within a large cluster. Our experimental results showed that the similarity measure proposed in Section 3.2 can be adopted to improve the clustering results on those data sets.

3.2. Similarity measure based on the cosine and link functions

For document clustering, the cosine function is a very popular similarity measure. It measures the similarity between two documents as the correlation between the document vectors representing them. This correlation is quantified as the cosine of the angle between the two vectors, and a larger cosine value indicates that the two documents share more terms and are more similar. When the cosine is adopted in the family of k-means algorithms, the correlation between each pair of a document and a centroid is evaluated during the assignment step. However, the similarity measure based on the cosine may not work well for some document collections. Usually, the number of unique terms in a document collection is very large, while the average number of unique terms in a document is much smaller. In addition, documents that cover the same topic and belong to a single cluster may each contain only a small subset of the terms in the much larger vocabulary of the topic. Here we give two examples to explain this situation. The first example concerns the relationship between a topic and a subtopic.
A cluster about the family tree is related to a set of terms such as parents, brothers, sisters, aunts, uncles, etc. Some documents in this cluster may focus on brothers and sisters, while the rest cover other branches of the family tree. Thus, those documents do not contain all the relevant terms listed above. The second example concerns the usage of synonyms. Different terms are used in different documents even if they cover the same topic. The documents in a cluster about the automobile industry may not use the same word to describe a car, since many terms carry this meaning, such as auto, automobile, vehicle, etc. Thus, it is quite possible that a pair of documents in a cluster have few terms in common but are connected through other documents in the same cluster that have many terms in common with each of the two. In this case, the concept of link may help us identify the closeness of two documents by checking their neighbors. When a document d_i shares a group of terms with its neighbors, and a document d_j shares another group of terms with many neighbors of d_i, their common neighbors show how close they are, even if d_i and d_j are not considered similar by the cosine function. Another fact is that the number of unique terms may be quite different for different topics, as their vocabularies are different. In a cluster involving a large vocabulary, since document vectors are spread over a larger number of terms, most document pairs share only a small number of terms. In this case, if the cosine function is used, the similarity between a document and the centroid could be very small, because the centroid is defined as the mean vector of all the document vectors in the cluster. When the cosine function is used for similarity measurement, the cluster refinement phase of the k-means algorithm is the process of maximizing the global criterion function, so it tends to split the clusters with large vocabularies.
However, this is not desirable because the documents in those clusters may be strongly related to each other. On the other hand, if the global criterion function is based on the concept of link, which captures the connections between documents in a cluster in terms of their neighbors, a cluster will not be split just because it involves a large vocabulary. As long as the documents in a cluster are strongly linked (i.e., share many neighbors), it will not be split regardless of its vocabulary size. However, there is a case in which the link function may not perform well as the similarity measure by itself. In the cluster refinement phase, if a document is assigned to the cluster whose centroid shares the largest number of neighbors with this document (i.e., the largest link function value), the document is more likely to be assigned to a large cluster than to a small one. For a fixed similarity threshold θ, the centroid of a large cluster, say c_i, has more neighbors than the centroid of a small cluster, say c_j. Thus, for a document d, it is quite probable that link(d, c_i) is larger than link(d, c_j). In the worst case, the global criterion function is maximized when most of the documents are assigned to one cluster while all the other clusters are almost empty. Based on this discussion, we propose a new similarity measure for the family of k-means algorithms by combining the cosine and link functions as follows:

$$
f(d_i, c_j) = \alpha \cdot \frac{\mathrm{link}(d_i, c_j)}{L_{max}} + (1 - \alpha) \cdot \cos(d_i, c_j), \quad \text{with } 0 \le \alpha \le 1 \tag{10}
$$


where L_max is the largest possible value of link(d_i, c_j), and α is a coefficient set by the user. For the k-means algorithm, since all the documents in the data set are involved in the whole clustering process, the largest possible value of link(d_i, c_j) is the number of documents in the data set, n, which means that all the documents in the data set are neighbors of both d_i and c_j. For the bisecting k-means algorithm, only the documents in the selected cluster are involved in each bisecting step, so the largest possible value of link(d_i, c_j) is the number of documents in the selected cluster. For both k-means and bisecting k-means, the smallest possible value of link(d_i, c_j) is 0, which means that d_i and c_j do not have any common neighbors. We use L_max to normalize the link values so that link(d_i, c_j)/L_max always falls in the range [0, 1]. With 0 ≤ α ≤ 1, the value of f(d_i, c_j) is between 0 and 1 in all cases. Eq. (10) shows that we use the weighted sum of the cosine and link function values to evaluate the closeness of two documents, and a larger value of f(d_i, c_j) indicates that they are closer. When α is set to 0, the similarity measure becomes the cosine function; when α is 1, it becomes the link function. Our experiments on various test data sets showed that α in the range [0.8, 0.95] produces the best clustering results; more details are given in Section 4.3.2. Since the cosine function and the link function evaluate the closeness of two documents in different aspects, our new similarity measure is more comprehensive. During the clustering process, each document is iteratively assigned to the cluster whose centroid is most similar to it, so that the global criterion function is maximized. To calculate link(d_i, c_j), we add k columns to the neighbor matrix M of the data set.
The new matrix is an n × (n + k) matrix, denoted by M′, in which an entry M′[i, n + j] is 1 or 0 depending on whether document d_i and centroid c_j are neighbors. The expanded neighbor matrix for the example data set S is shown in Fig. 2. The value of link(d_i, c_j) can be obtained by multiplying the ith row of M′ with its (n + j)th column:

$$ \mathrm{link}(d_i, c_j) = \sum_{m=1}^{n} M'[i, m] \cdot M'[m, n + j] \tag{11} $$
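The following Python sketch illustrates Eqs. (10) and (11) on dense term-weight vectors. It is a minimal illustration under stated assumptions, not the authors' C++ implementation: the helper names (`cosine`, `expanded_neighbor_matrix`, `link`, `combined_similarity`) are hypothetical, and it assumes that two items are neighbors when their cosine is at least θ (the exact comparison is fixed by Eq. (6), which is not repeated here). Because cos(d_i, d_i) = 1, every document is its own neighbor in this sketch.

```python
import math


def cosine(u, v):
    """Cosine similarity of two term-weight vectors (lists of floats)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def expanded_neighbor_matrix(docs, centroids, theta):
    """Return M' as an n x (n + k) list of 0/1 rows.

    Columns 0..n-1 are the documents, columns n..n+k-1 the centroids.
    Assumption: an entry is 1 when the cosine is >= theta.
    """
    columns = docs + centroids
    return [[1 if cosine(d, col) >= theta else 0 for col in columns]
            for d in docs]


def link(m_prime, i, j, n):
    """Eq. (11): row i of M' times its column n + j (centroid c_j)."""
    return sum(m_prime[i][m] * m_prime[m][n + j] for m in range(n))


def combined_similarity(m_prime, docs, centroids, i, j, alpha, l_max):
    """Eq. (10): alpha * link / L_max + (1 - alpha) * cos."""
    n = len(docs)
    return (alpha * link(m_prime, i, j, n) / l_max
            + (1 - alpha) * cosine(docs[i], centroids[j]))


# Four toy documents over four terms; each centroid is the mean of two docs.
docs = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
centroids = [[1, 0.5, 0, 0], [0, 0, 0.5, 1]]
M = expanded_neighbor_matrix(docs, centroids, theta=0.5)
score = combined_similarity(M, docs, centroids, 0, 0, alpha=0.9,
                            l_max=len(docs))
```

In this toy case, d_0 and centroid c_0 share two neighbors (d_0 and d_1), so link(d_0, c_0) = 2 and, with L_max = n = 4 as for k-means, f(d_0, c_0) = 0.9 · 2/4 + 0.1 · cos(d_0, c_0). For a bisecting step, `l_max` would instead be the size of the cluster being split.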

3.3. Selection of a cluster to split based on the neighbors of the centroids

For the bisecting k-means algorithm, in each bisecting step, one existing cluster is selected to be split based on a heuristic function. Basically, this heuristic function finds the existing cluster with the poorest quality. A cluster of poor quality is one whose documents are not closely related to each other, i.e., the bonds between them are weak. Therefore, our selection of a cluster to split should be based on the compactness of the clusters. A widely used measure of the compactness of a cluster is the cluster diameter, i.e., the maximum document-to-document distance within the cluster [1,2,8,18]. However, since the shapes of document clusters in the vector space may be quite irregular (i.e., not spherical), a large cluster diameter does not necessarily mean that the cluster is not compact. In [34], the compactness of a cluster was measured by its overall similarity, by its size, or by a combination of both; the authors found that the differences between these measures are usually small in terms of the final clustering result, and thus recommended splitting the largest remaining cluster. However, in our experiments, we found that this method may not produce the best clustering result, because the size of a cluster is not necessarily a good measure of its compactness. Given a choice between two clusters, one loose and the other compact, it is better to split the loose one even if it is smaller than the compact one. The concept of neighbors, which is based on the similarity of two documents, provides more information about the compactness of a cluster than its size does. So, we create a new heuristic function that compares the neighbors of the centroids of the remaining clusters, as described below. Our experimental results show that this improves the performance of bisecting k-means compared to splitting the largest cluster.
Fig. 2. Expanded neighbor matrix M′ of data set S with θ = 0.3 and k = 3.

Since we want to measure the compactness of a cluster, only the local neighbors of the centroid are counted; in other words, we count only those documents that are similar to the centroid and belong to that cluster. For a cluster C_j, the number of local neighbors of the centroid c_j is denoted by N(c_j)_local, and it can be obtained by counting every entry M′[i, n + j] whose value is 1 for d_i ∈ C_j. For the same cluster size and the same similarity threshold θ, the centroid of a compact cluster should have more neighbors than that of a loose cluster. By the definition of the centroid, when the similarity threshold θ is fixed, the centroid of a large cluster tends to have more neighbors than that of a small cluster. Thus, we divide the number of local neighbors of the centroid by the size of the cluster to get a normalized value, denoted by V(c_j), which is always in the range [0, 1]:

$$ V(c_j) = \frac{N(c_j)_{\mathrm{local}}}{|C_j|} \tag{12} $$

When we choose a cluster to split, we choose the one with the smallest V value.

4. Experimental results

In order to show that our proposed methods can improve the performance of k-means and bisecting k-means in document clustering, we ran the modified k-means and bisecting k-means algorithms on real-life text data sets using (1) the selection of initial centroids based on the ranks, (2) the similarity measure based on the cosine and link functions, and (3) the selection of a cluster to split based on the neighbors of the centroids, both individually and in combination. The clustering results were compared with those of the original k-means and bisecting k-means. The time and space complexities of the modified algorithms are discussed, and we also compare the cosine and the Jaccard index as similarity measures. We implemented all the algorithms in C++ on a SuSE Linux workstation with a 500 MHz processor and 384 MB of memory.

4.1. Data sets

We used 13 test data sets extracted from three different types of text databases, which have been widely used by researchers in the information retrieval area. The first group of six test data sets, denoted by CISI1, CISI2, CISI3, CISI4, CACM1 and MED1, were extracted from the CISI, CACM and MEDLINE abstracts, respectively, which are included in the Classic text database [5]. The second group of four test data sets, denoted by EXC1, ORG1, PEO1 and TOP1, were extracted from the EXCHANGES, ORGS, PEOPLE and TOPICS category sets of the Reuters-21578 Distribution 1.0 [30]. The third group of test data sets was prepared by ourselves. We tried to simulate the case of using a search engine to retrieve the desired documents from a database, and we adopted the Lemur Toolkit [25] as the search engine. The English newswire corpus of the HARD track of the Text Retrieval Conference (TREC) [16] was used as the database. This corpus includes about 652,309 documents (1575 MB) from eight different sources, and there are 29 test queries.
Among the 29 queries, the HARD-306, HARD-309 and HARD-314 queries were sent to the search engine, and the top 200 results of each query were collected and classified as three test data sets, denoted by SET1, SET2 and SET3, for our evaluation. We chose only the top 200 documents because users usually do not read more than 200 documents for a single query. Each document in the test data sets has already been pre-classified into one unique class. This information was hidden during the clustering processes and was used only to evaluate the clustering accuracy of each clustering algorithm. Before the experiments, stop-word removal and stemming were performed on the data sets as preprocessing steps. Table 3 summarizes the characteristics of all the test data sets used in our experiments. The last column shows the average similarity, measured by the cosine function, over all the pairs of documents in each data set.

4.2. Evaluation methods of document clustering

We used the F-measure and purity values to evaluate the accuracy of our clustering algorithms. The F-measure is a harmonic combination of the precision and recall values used in information retrieval [31]. Since our data sets were prepared as described above, each cluster obtained can be considered as the result of a query, whereas each pre-classified set of documents can be considered as the desired set of documents for that query. Thus, we can calculate the precision P(i, j) and recall R(i, j) of each cluster j for each class i.

Table 3. Summary of data sets.

| Data set | Num. of doc. | Num. of classes | Min. class size | Max. class size | Num. of unique terms | Avg. doc. length | Avg. pairwise similarity (cosine) |
|---|---|---|---|---|---|---|---|
| CISI1 | 163 | 4 | 4 | 102 | 1844 | 66 | 0.04 |
| CISI2 | 282 | 4 | 31 | 92 | 2371 | 63 | 0.04 |
| CISI3 | 135 | 4 | 15 | 85 | 1824 | 63 | 0.04 |
| CISI4 | 148 | 3 | 24 | 78 | 1935 | 67 | 0.04 |
| CACM1 | 170 | 5 | 26 | 51 | 1260 | 56 | 0.04 |
| MED1 | 287 | 9 | 26 | 39 | 4255 | 77 | 0.02 |
| EXC1 | 334 | 7 | 28 | 97 | 3258 | 67 | 0.03 |
| ORG1 | 733 | 9 | 20 | 349 | 6172 | 138 | 0.03 |
| PEO1 | 694 | 15 | 11 | 143 | 5046 | 102 | 0.04 |
| TOP1 | 2279 | 7 | 23 | 750 | 10,719 | 113 | 0.03 |
| SET1 | 200 | 4 | 18 | 88 | 9301 | 940 | 0.06 |
| SET2 | 200 | 3 | 44 | 92 | 8998 | 664 | 0.05 |
| SET3 | 200 | 4 | 10 | 81 | 12,368 | 1637 | 0.06 |


If n_i is the number of members of class i, n_j is the number of members of cluster j, and n_ij is the number of members of class i in cluster j, then P(i, j) and R(i, j) can be defined as:

$$ P(i, j) = \frac{n_{ij}}{n_j} \tag{13} $$

$$ R(i, j) = \frac{n_{ij}}{n_i} \tag{14} $$

The corresponding F-measure F(i, j) is defined as:

$$ F(i, j) = \frac{2 \cdot P(i, j) \cdot R(i, j)}{P(i, j) + R(i, j)} \tag{15} $$

Then, the F-measure of the whole clustering result is defined as:

$$ F = \sum_{i} \frac{n_i}{n} \max_{j} F(i, j) \tag{16} $$

where n is the total number of documents in the data set. In general, the larger the F-measure, the better the clustering result [34]. The purity of a cluster is the fraction of the cluster made up of the largest class of documents assigned to that cluster; thus, the purity of cluster j is defined as:

$$ \mathrm{Purity}(j) = \frac{1}{n_j} \max_{i} n_{ij} \tag{17} $$

The purity of the whole clustering result is a weighted sum of the cluster purities:

$$ \mathrm{Purity} = \sum_{j} \frac{n_j}{n} \, \mathrm{Purity}(j) \tag{18} $$
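Eqs. (13)–(18) can be computed directly from the pre-assigned class and the resulting cluster of each document. The sketch below is a hypothetical helper written for illustration, not part of the original implementation; it uses the fact that Eq. (18) simplifies to (1/n)·Σ_j max_i n_ij, because the n_j factors of Eqs. (17) and (18) cancel.

```python
from collections import Counter


def f_measure_and_purity(classes, clusters):
    """Return (F, Purity) per Eqs. (13)-(18).

    classes[d] is the pre-assigned class of document d and clusters[d]
    the cluster it was placed in; both lists have length n.
    """
    n = len(classes)
    n_i = Counter(classes)                   # class sizes n_i
    n_j = Counter(clusters)                  # cluster sizes n_j
    n_ij = Counter(zip(classes, clusters))   # members of class i in cluster j

    def f(i, j):
        nij = n_ij[(i, j)]
        if nij == 0:
            return 0.0
        p = nij / n_j[j]                     # precision, Eq. (13)
        r = nij / n_i[i]                     # recall, Eq. (14)
        return 2 * p * r / (p + r)           # Eq. (15)

    # Eq. (16): each class contributes its best-matching cluster's F value.
    f_total = sum(n_i[i] / n * max(f(i, j) for j in n_j) for i in n_i)
    # Eqs. (17)-(18): weighted purity reduces to (1/n) * sum_j max_i n_ij.
    purity = sum(max(n_ij[(i, j)] for i in n_i) for j in n_j) / n
    return f_total, purity
```

For example, six documents with classes a, a, a, b, b, b placed in clusters 0, 0, 1, 1, 1, 1 give a purity of (2 + 3)/6 = 5/6 and an F-measure of 0.5 · 0.8 + 0.5 · 6/7 ≈ 0.829.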

In general, the larger the purity value, the better the clustering result [36].

4.3. Clustering results

Figs. 3–6 show the F-measure values of the clustering results of all the algorithms on the 13 data sets, and Tables 4 and 5 show the purity values of the clustering results. In the original k-means (KM) and bisecting k-means (BKM) algorithms, the initial centroids are selected randomly, and the cosine function is used as the similarity measure. For BKM, the largest cluster is selected to be split at each bisecting step, and the number of iterations for each bisecting step is set to 5. In the figures, Rank denotes that the initial centroids are selected based on the ranks of the documents; CL denotes that the similarity measure is based on the cosine and link functions; and NB denotes that the selection of a cluster to split is based on the local neighbors of the centroids. We ran each algorithm 10 times to obtain the average F-measure and purity values. The experimental results demonstrate that our proposed methods of using the neighbors and link in KM and BKM can improve the clustering accuracy significantly.

4.3.1. Results of the selection of initial centroids based on the ranks

From the experimental results, we can see that the selection of initial centroids by using the ranks of documents performs much better than the random selection in terms of clustering accuracy.

Fig. 3. Results of k-means algorithms on Classic data sets (F-measure of KM, KM with Rank, KM with CL, and KM with Rank & CL on CISI1, CISI2, CISI3, CISI4, CACM1 and MED1).


Fig. 4. Results of k-means algorithms on Reuters and search-result data sets (F-measure of KM, KM with Rank, KM with CL, and KM with Rank & CL on EXC1, ORG1, PEO1, TOP1, SET1, SET2 and SET3).

Fig. 5. Results of bisecting k-means algorithms on Classic data sets (F-measure of BKM, BKM with Rank, BKM with CL, BKM with NB, and BKM with Rank, CL & NB on CISI1, CISI2, CISI3, CISI4, CACM1 and MED1).

Fig. 6. Results of bisecting k-means algorithms on Reuters and search-result data sets (F-measure of BKM, BKM with Rank, BKM with CL, BKM with NB, and BKM with Rank, CL & NB on EXC1, ORG1, PEO1, TOP1, SET1, SET2 and SET3).

Since our rank-based method selects k centroids from k + n_plus candidates, the setting of n_plus is very important. If n_plus is too small, our choice of initial centroids is limited to a small set of documents. Even if these documents have the largest numbers of neighbors, which indicates that they are close to a large number of documents, they may not be distributed evenly across the whole data set. A larger n_plus helps us find better initial centroids, but if there are too many candidates, the computation cost is high. We tried various values of n_plus for KM and BKM to balance the clustering accuracy and the computation cost. The test results show that, for KM, within the range from 0 to k, the larger n_plus is, the better the clustering result is. Thus, we decided to select k initial centroids from 2k candidates (i.e., n_plus = k). For BKM, the optimal range of n_plus is from 0 to 4, regardless of k, because only two initial centroids are needed at each bisecting step of BKM.

Table 4. Purity values of k-means algorithms.

| Data set | KM | KM with rank | KM with CL | KM with rank and CL |
|---|---|---|---|---|
| CISI1 | 0.534 | 0.546 | 0.595 | 0.625 |
| CISI2 | 0.504 | 0.592 | 0.571 | 0.606 |
| CISI3 | 0.760 | 0.822 | 0.785 | 0.778 |
| CISI4 | 0.561 | 0.642 | 0.567 | 0.561 |
| CACM1 | 0.593 | 0.689 | 0.696 | 0.800 |
| MED1 | 0.652 | 0.693 | 0.742 | 0.756 |
| EXC1 | 0.434 | 0.587 | 0.452 | 0.596 |
| ORG1 | 0.711 | 0.744 | 0.727 | 0.769 |
| PEO1 | 0.474 | 0.634 | 0.527 | 0.676 |
| TOP1 | 0.759 | 0.803 | 0.808 | 0.818 |
| SET1 | 0.525 | 0.545 | 0.545 | 0.545 |
| SET2 | 0.495 | 0.710 | 0.675 | 0.720 |
| SET3 | 0.590 | 0.700 | 0.645 | 0.695 |

Table 5. Purity values of bisecting k-means algorithms.

| Data set | BKM | BKM with rank | BKM with CL | BKM with NB | BKM with Rank, CL and NB |
|---|---|---|---|---|---|
| CISI1 | 0.595 | 0.607 | 0.607 | 0.619 | 0.619 |
| CISI2 | 0.539 | 0.631 | 0.596 | 0.543 | 0.624 |
| CISI3 | 0.755 | 0.815 | 0.763 | 0.756 | 0.807 |
| CISI4 | 0.574 | 0.642 | 0.561 | 0.574 | 0.655 |
| CACM1 | 0.689 | 0.778 | 0.733 | 0.689 | 0.793 |
| MED1 | 0.711 | 0.689 | 0.812 | 0.777 | 0.899 |
| EXC1 | 0.476 | 0.530 | 0.503 | 0.533 | 0.575 |
| ORG1 | 0.727 | 0.754 | 0.753 | 0.749 | 0.754 |
| PEO1 | 0.506 | 0.576 | 0.555 | 0.586 | 0.618 |
| TOP1 | 0.839 | 0.856 | 0.830 | 0.866 | 0.866 |
| SET1 | 0.535 | 0.570 | 0.545 | 0.535 | 0.575 |
| SET2 | 0.620 | 0.690 | 0.630 | 0.625 | 0.655 |
| SET3 | 0.700 | 0.699 | 0.699 | 0.700 | 0.700 |

Our rank-based method involves several steps, and the time complexity of each step is analyzed in detail as follows.

Step 1: Creation of the neighbor matrix. The neighbor matrix is created only once by calculating the similarity for each pair of documents, and we use the cosine function to measure the similarity. The time complexity of calculating each similarity can be represented as F_1·D·t, where F_1 is a constant for the calculation of the cosine function, D is the number of unique words in the data set, and t is the unit operation time for all basic operations. Then, each entry of the neighbor matrix is obtained with two more unit operations, including the thresholding. Since the neighbor matrix is symmetric, only its upper triangular part is needed. Thus, the time complexity of creating the neighbor matrix is:

$$ T_{\mathrm{matrix}} = (F_1 D t + 2t)\,\frac{n^2}{2} = \left(\frac{F_1 D}{2} + 1\right) n^2 t \tag{19} $$

where n is the number of documents in the data set.

Step 2: Obtaining the top m documents with the most neighbors. First, the number of neighbors of each document is calculated by using the neighbor matrix, which takes n²t. It takes F_2·n·log n operations to sort n documents, where F_2 is the constant for each operation of the sorting. Obtaining the top m documents from the sorted list takes m operations, and m = k + n_plus = 2k in our experiments. The set of these m initial centroid candidates is denoted by S_m, and the time complexity of this step is:

$$ T_{S_m} = n^2 t + F_2\, n \log n \; t + 2k t \tag{20} $$

Step 3: Ranking the document pairs in S_m based on the cosine and link values. There are m(m − 1)/2 document pairs in S_m. We first rank them based on their cosine and link values, respectively; then the final rank of each document pair is the sum of those two ranks. The time complexity of ranking the document pairs based on their cosine values is:

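$$ T_{\mathrm{rank}_{\cos}(d_i, d_j)} = F_2\, \frac{m(m-1)}{2} \log\!\left(\frac{m(m-1)}{2}\right) t \tag{21} $$

$$ \qquad = F_2\, k(2k-1) \log\big(k(2k-1)\big)\, t \tag{22} $$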


It takes 2n · m(m − 1)/2 operations to compute the link function for all document pairs by using the neighbor matrix created in Step 1. Combined with the computation cost for sorting, the time complexity of ranking the document pairs based on their link values is:

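$$ T_{\mathrm{rank}_{\mathrm{link}}(d_i, d_j)} = 2n\, \frac{m(m-1)}{2}\, t + F_2\, \frac{m(m-1)}{2} \log\!\left(\frac{m(m-1)}{2}\right) t \tag{23} $$

$$ \qquad = 2k(2k-1)\, n t + F_2\, k(2k-1) \log\big(k(2k-1)\big)\, t \tag{24} $$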

Thus, the time complexity of step 3 is:

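$$ T_{\mathrm{rank}(d_i, d_j)} = T_{\mathrm{rank}_{\cos}(d_i, d_j)} + T_{\mathrm{rank}_{\mathrm{link}}(d_i, d_j)} + T_{\mathrm{add\_ranks}} \tag{25} $$

$$ \qquad = 2k(2k-1)\, n t + 2F_2\, k(2k-1) \log\big(k(2k-1)\big)\, t + k(2k-1)\, t \tag{26} $$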
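Steps 1–3 can be sketched in Python as follows. This is an illustrative sketch, not the authors' code: the function name `rank_candidate_pairs` is hypothetical, it takes a precomputed n × n cosine matrix, and, since the ordering used for the ranks is defined earlier in the paper and not restated here, it assumes that pairs with smaller cosine and smaller link values receive better (smaller) ranks so that the selected centroids end up far apart. Ties are broken by pair order.

```python
from itertools import combinations


def rank_candidate_pairs(sim, theta, k):
    """Hypothetical sketch of Steps 1-3 of the rank-based selection.

    sim: n x n cosine matrix (list of lists); theta: neighbor threshold.
    Returns the m = 2k candidate documents and a dict mapping each
    candidate pair (a, b) with a < b to its combined rank.
    """
    n = len(sim)
    # Step 1: 0/1 neighbor matrix (assumed test: cosine >= theta).
    nb = [[1 if sim[a][b] >= theta else 0 for b in range(n)] for a in range(n)]
    # Step 2: the m = 2k documents with the most neighbors.
    counts = [sum(row) for row in nb]
    cands = sorted(range(n), key=lambda d: (-counts[d], d))[: 2 * k]
    pairs = list(combinations(sorted(cands), 2))
    # link(a, b) = number of common neighbors = row a dotted with row b.
    link = {p: sum(x * y for x, y in zip(nb[p[0]], nb[p[1]])) for p in pairs}
    # Step 3 (assumed ordering): ascending cosine and ascending link.
    rank = {p: 0 for p in pairs}
    for r, p in enumerate(sorted(pairs, key=lambda p: sim[p[0]][p[1]])):
        rank[p] += r
    for r, p in enumerate(sorted(pairs, key=lambda p: link[p])):
        rank[p] += r
    return cands, rank
```

The two `sorted` calls correspond to the sorting terms of Eqs. (21) and (23), and the `link` dictionary to the 2n · m(m − 1)/2 link computations.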

Step 4: Finding the best k-subset of S_m. There are C(m, k) k-subsets of the documents in S_m, and we need to find the best k-subset based on the aggregated ranks of all the document pairs in it. For each k-subset, it takes k(k − 1)/2 + 1 operations to check whether it is the best one. Thus, the time complexity of finding the best k-subset is:

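$$ T_{\mathrm{best\_combination}} = \left(\frac{k(k-1)}{2} + 1\right) \frac{m!}{(m-k)!\,k!}\, t \tag{27} $$

$$ \qquad = \left(\frac{k(k-1)}{2} + 1\right) \frac{(2k)!}{k!\,k!}\, t \tag{28} $$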
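To make the exponential term of Eq. (28) concrete, the snippet below evaluates the number of candidate k-subsets C(2k, k) and the resulting operation count for a few values of k (k = 15 is the setting used for PEO1). It only evaluates the formula; it does not perform the search itself.

```python
from math import comb


def best_subset_ops(k):
    """Operation count of Eq. (28) in units of t, with m = 2k candidates."""
    return comb(2 * k, k) * (k * (k - 1) // 2 + 1)


for k in (3, 7, 15):
    print(k, comb(2 * k, k), best_subset_ops(k))
# prints:
# 3 20 80
# 7 3432 75504
# 15 155117520 16442457120
```

Already at k = 15 the exhaustive Step 4 needs about 1.6 × 10^10 unit operations, which motivates the incremental alternative described below.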

And the total time required for the selection of k initial centroids is:

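$$ T_{\mathrm{init}} = T_{\mathrm{matrix}} + T_{S_m} + T_{\mathrm{rank}(d_i, d_j)} + T_{\mathrm{best\_combination}} \tag{29} $$

$$ \qquad = \left(\frac{F_1 D}{2} + 2\right) n^2 t + F_2\, n \log n \; t + 2k(2k-1)\, n t + 2k t + k(2k-1)\, t \tag{30} $$

$$ \qquad\quad + 2F_2\, k(2k-1) \log\big(k(2k-1)\big)\, t + \left(\frac{k(k-1)}{2} + 1\right) \frac{(2k)!}{k!\,k!}\, t \tag{31} $$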

Since 2k ≪ n and 2k² ≪ n hold for a typical data set with n documents, the time complexity of the first three steps is O(n²). The time complexity of Step 4 is exponential in k. Since k is small in most real-life applications, Step 4 would not increase the total computation cost much, and the time complexity of the whole process is O(n²) in that case. However, if k is large, the computation time of Step 4 would be very large. So, we propose a simple alternative Step 4 that removes the exponential component from the time complexity. When k is large, instead of checking all the possible k-subsets of the documents in S_m to find the best one, we can create a k-subset, S′, incrementally. After Step 3, the two documents of the highest-ranked document pair are first inserted into S′. Then we perform k − 2 selections; at each selection, the best document out of k randomly selected documents from S_m is added to S′. The goodness of each candidate document d_i is evaluated by the rank_com value of the current subset S′ when d_i is inserted. In other words, for each candidate document d_i, we compute the rank_com value of the current S′ by adding rank(d_i, d_j) for every document d_j in S′. Finally, when we have k documents in S′, they are considered as the initial centroids. The time complexity of this alternative Step 4 is O(k³), and the time complexity of our whole method is still O(n²). We performed this alternative selection method on the PEO1 data set (with k = 15) 10 times, and the average F-measure and purity values of the k-means algorithm are 0.5535 and 0.5936, respectively. They are slightly lower than those of the originally proposed selection method, but much better than those with randomly selected initial centroids, where the average F-measure and purity values are 0.451 and 0.474, respectively.
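The incremental alternative to Step 4 can be sketched as below. This is a hedged illustration: `greedy_initial_centroids` is a hypothetical name, `rank` is assumed to map each candidate pair (a, b) with a < b to its combined rank from Step 3, and lower ranks are assumed to be better. Since rank_com of the current S′ is the same for every candidate, comparing only the added term Σ_{d_j ∈ S′} rank(d_i, d_j) selects the same document.

```python
import random


def greedy_initial_centroids(cands, rank, k, seed=0):
    """Hypothetical sketch of the O(k^3) alternative Step 4.

    cands: candidate document ids (the set S_m); rank: dict mapping a
    pair (a, b) with a < b to its combined rank (assumed: lower is better).
    """
    rng = random.Random(seed)

    def r(a, b):
        return rank[(a, b) if a < b else (b, a)]

    # Start S' with both documents of the best-ranked pair.
    a, b = min(rank, key=rank.get)
    chosen = [a, b]
    for _ in range(k - 2):
        pool = [d for d in cands if d not in chosen]
        # Evaluate up to k randomly selected remaining candidates.
        sample = rng.sample(pool, min(k, len(pool)))
        # Increase of rank_com(S') if d were inserted into S'.
        best = min(sample, key=lambda d: sum(r(d, s) for s in chosen))
        chosen.append(best)
    return chosen
```

Each of the k − 2 selections scores at most k candidates against at most k members of S′, which gives the O(k³) bound stated above.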
For the bisecting k-means algorithm, since only two clusters are created at each bisecting step, the time complexity of selecting initial centroids is always O(n²), no matter how large k is.

4.3.2. Results of the similarity measure based on the cosine and link functions

The first step of our similarity measure based on the cosine and link functions is to find the neighbors of each document. To determine whether two documents are neighbors, we compare their cosine value with the threshold θ (refer to Eq. (6)). To find the right θ, we first tried the average pairwise similarity of the documents in the data set, but some of the results were good and others were not. Then, we tried θ between 0.02 and 0.5; the effect of θ on the F-measure and purity values of k-means with CL on the EXC1, CISI1 and SET2 data sets is shown in Figs. 7 and 8, respectively. We also performed this test on other data sets, and the results are quite similar. As we can see, when θ is 0.1, we can achieve very good clustering results, so θ was set to 0.1 to obtain the other experimental results reported in this paper. In our new similarity measure, we use the linear combination of the cosine and link functions to measure the closeness between a document and a centroid, as defined in Eq. (10). The range of the coefficient α of the link function is [0, 1]. We tried different coefficient values for k-means with CL on the EXC1, CISI1 and SET2 data sets. The results shown in Figs. 9 and 10 suggest that when the coefficient is set between 0.8 and 0.95, the clustering results are better than when using the cosine alone. The tests on other data sets showed the same trend, so we set the coefficient to 0.9 to obtain the other experimental results reported in this paper. This high optimal coefficient value can be explained by the fact that the link value is calculated using the similarity values given by the cosine. In other words, since the link value already incorporates the cosine, the weight of the cosine in the linear combination should be much smaller than that of the link.


Fig. 7. Effect of the similarity threshold θ on the F-measure of the k-means with CL (EXC1, CISI1 and SET2; θ from 0.02 to 0.5).

Fig. 8. Effect of the similarity threshold θ on the purity value of the k-means with CL (EXC1, CISI1 and SET2; θ from 0.02 to 0.5).

The time complexity of our new similarity measure is determined by the computation of the cosine and link functions. For each iteration of the loop in the k-means algorithm, the time complexity with the cosine function alone could be represented as:

$$ T_{\cos} = F_1\, k D n\, t \tag{32} $$

Fig. 9. Effect of the coefficient α on the F-measure of the k-means with CL (EXC1, CISI1 and SET2; α from 0.52 to 1).

Fig. 10. Effect of the coefficient α on the purity value of the k-means with CL (EXC1, CISI1 and SET2; α from 0.52 to 1).

The computation of the link function consists of three parts: creating the neighbor matrix, expanding the neighbor matrix with the columns for the k centroids, and calculating the link value for every document with each of the k centroids at each iteration of the loop. The time complexity of creating the neighbor matrix is derived in Section 4.3.1 as T_matrix = (F_1·D/2 + 1)·n²·t. The n × n neighbor matrix is created just once, before the first iteration of the loop; at each iteration, it is expanded into an n × (n + k) matrix, including the columns for the k centroids. So, the computation for this expansion involves only the entries in those k columns:

$$ T_{\mathrm{matrix\_expansion}} = (F_1 D t + 2t)\, k n = (F_1 D + 2)\, k n\, t \tag{33} $$

The calculation of the link value for every document with each of k centroids can be done by multiplying the vectors in the expanded neighbor matrix as described in Section 3.2, and its time complexity could be represented as:

$$ T_{\mathrm{link}} = 2 k n^2 t \tag{34} $$

Thus, the time complexity of the new similarity measure is:

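$$ T_{CL} = T_{\mathrm{matrix}} + \left(T_{\cos} + T_{\mathrm{matrix\_expansion}} + T_{\mathrm{link}}\right) L \tag{35} $$

$$ \qquad = \left(\frac{F_1 D}{2} + 1\right) n^2 t + \left(F_1 k D n t + (F_1 D + 2)\, k n t + 2 k n^2 t\right) L \tag{36} $$

$$ \qquad = \left(\frac{F_1 D}{2} + 2kL + 1\right) n^2 t + 2(F_1 D + 1)\, k L n\, t \tag{37} $$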

where L is the number of iterations of the loop in k-means. From the above equations, we can see that the time complexity of our new similarity measure is O(n²) for a data set containing n documents, which is quite acceptable. By adopting the new similarity measure in KM and BKM, both algorithms outperform the original ones on all 13 test data sets. We can thus conclude that the new similarity measure provides a more accurate measurement of the closeness between a document and a centroid.

4.3.3. Results of the selection of a cluster to split based on the neighbors of the centroids

From the experimental results of the BKM with NB, shown in Figs. 5 and 6 and Table 5, we can see that the new method of selecting a cluster to split based on the neighbors of the centroids works very well on traditional document data sets, such as the Reuters and Classic data sets. However, there is only a slight improvement on the search-result data sets in terms of the F-measure, while the purity values of their clustering results are almost the same. At each bisecting step, the original BKM splits the largest cluster, whereas our new cluster selection method is based on the compactness of clusters measured by the number of local neighbors of the centroids. Since the SET1, SET2 and SET3 data sets are simulated search results, more terms are shared between the documents in these data sets. In other words, the documents in these data sets are more similar to each other than those in traditional data sets: Table 3 shows that their average pairwise similarities are higher than those of the other data sets. Thus, the clusters in the search-result data sets are more compact than those in traditional data sets. This characteristic explains why there is no big difference between the two methods of selecting a cluster to split on these data sets.
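The NB heuristic of Eq. (12) amounts to a single pass over the members of each cluster. The sketch below is illustrative only: `select_cluster_to_split` is a hypothetical name, and it assumes the neighbors of each centroid c_j (the documents d_i with M′[i, n + j] = 1) are already available as a set.

```python
def select_cluster_to_split(clusters, centroid_neighbors):
    """Return the id of the cluster with the smallest V(c_j), Eq. (12).

    clusters: dict cluster id -> list of document ids in C_j.
    centroid_neighbors: dict cluster id -> set of document ids d_i with
    M'[i, n + j] == 1, i.e. the neighbors of centroid c_j.
    """
    def v(cid):
        members = clusters[cid]
        if not members:
            return float("inf")  # never select an empty cluster
        # N(c_j)_local: neighbors of c_j that are also members of C_j.
        local = sum(1 for d in members if d in centroid_neighbors[cid])
        return local / len(members)

    return min(clusters, key=v)
```

For example, with a compact cluster {0, ..., 5} whose centroid neighbors all six members (V = 1) and a loose cluster {6, 7} whose centroid neighbors only document 6 (V = 0.5), this rule selects the smaller, loose cluster, whereas the largest-cluster rule of the original BKM would split the compact one.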
The time complexity of the BKM with NB is not much different from that of BKM, because the cost of selecting a cluster to split based on the number of local neighbors of the centroids is very small. The experimental results showed that our measurement of the compactness of clusters using the neighbors of the centroids is more accurate than just using the cluster size. For the data sets whose clusters are not compact, our BKM with NB performs much better than BKM.

4.3.4. Results of the combinations of proposed methods

We combined the three proposed methods, which utilize the neighbors and link, and ran the modified algorithms on all the test data sets. As shown in Figs. 3–6 and Tables 4 and 5, the combinations achieve the best results on most of the test data sets. Since all of our proposed methods use the same neighbor matrix, combining them in one algorithm is computationally advantageous. The average execution times per loop of different k-means algorithms on the EXC1 and SET2 data sets are shown in Fig. 11. We can see that the k-means using both ranks and CL does not require much extra time.


Fig. 11. Average execution time per loop of k-means on EXC1 and SET2 data sets (execution time in seconds of KM, KM with Rank, KM with CL, and KM with Rank & CL).

For most data sets, we found that the best clustering result obtained is close to the result of using the ranks alone. This shows that the selection of initial centroids is critical for the family of k-means algorithms. For data sets with a large variation in cluster sizes, even if the selection of initial centroids is not good enough, the clustering result is improved by adopting our new similarity measure based on the cosine and link functions. An example is the CISI1 data set containing 163 documents: its maximum class size is 102 documents, and its minimum class size is 4 documents. Fig. 3 shows that, for k-means, the selection of the initial centroids by using the ranks of the documents performs slightly better than the random selection, as the corresponding F-measure values are 0.478 and 0.475, respectively. By adopting the new similarity measure (CL), the clustering result is improved as expected (F-measure value of 0.556), and the combination of these two methods achieves a much better clustering result (F-measure value of 0.5953).

4.3.5. Comparison between the cosine and the Jaccard index

In our proposed methods, we used the cosine as the similarity measure between documents. The Jaccard index, also known as the Jaccard similarity coefficient, is another similarity measure; for document clustering, it can be defined as the ratio between the number of common terms in two documents and the number of terms in the union of the two documents. So, for two documents d_i and d_j, their Jaccard index is:

$$ \mathrm{Jaccard}(d_i, d_j) = \frac{|d_i \cap d_j|}{|d_i \cup d_j|} \tag{38} $$
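The rough factor of two between the cosine and the Jaccard index, which motivates the threshold adjustment discussed below, can be seen on term sets. The sketch assumes binary term-occurrence vectors (the clustering itself uses weighted vectors, so this is a simplification), and the function names are hypothetical. If two documents each contain s distinct terms and share x of them, then Jaccard = x/(2s − x) while the binary cosine is x/s, so the ratio (2s − x)/s approaches 2 when x ≪ s.

```python
import math


def jaccard(terms_i, terms_j):
    """Eq. (38) on the sets of distinct terms of two documents."""
    a, b = set(terms_i), set(terms_j)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def binary_cosine(terms_i, terms_j):
    """Cosine of the 0/1 term-occurrence vectors of two documents."""
    a, b = set(terms_i), set(terms_j)
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))


# Two documents with 10 distinct terms each, sharing a single term.
d1 = [f"a{i}" for i in range(10)]
d2 = ["a0"] + [f"b{i}" for i in range(9)]
print(jaccard(d1, d2), binary_cosine(d1, d2))  # 1/19 (about 0.053) and 0.1
```

Here the cosine (0.1) is about 1.9 times the Jaccard index (1/19), in line with halving θ from 0.1 to 0.05 when switching to the Jaccard index.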

For the comparison between the cosine and the Jaccard index, we used the Jaccard index in the place of the cosine as follows. First, when we build the neighbor matrix, we used the Jaccard index to determine whether two documents are neighbors of each other. Second, we also measured the similarity between a document and a cluster centroid by replacing the cosine with the Jaccard index in Eq. (10) as:

$$ f'(d_i, c_j) = \alpha \cdot \frac{\mathrm{link}(d_i, c_j)}{L_{\max}} + (1 - \alpha) \cdot \mathrm{Jaccard}(d_i, c_j), \qquad 0 \le \alpha \le 1 \tag{39} $$

Then, we ran k-means with the Jaccard index and k-means with f′(d_i, c_j) on the CISI1, CACM1, PEO1 and SET1 data sets. Their results are compared with those of using the cosine in terms of the F-measure, as shown in Table 6. In Table 6, we first see that the cosine performs better than the Jaccard index for document clustering when they are used with the original k-means. Second, when the link function is combined with the Jaccard index, it also improves the performance of k-means in most cases. Third, the cosine works better than the Jaccard index when each of them is combined with the link function. In [15], it was reported that, in most practical cases, the cosine value is about twice the Jaccard index value. So, we adjusted the similarity threshold θ from 0.1 to 0.05, and α from 0.9 to 0.8. The F-measure values for this case are listed in the last column of Table 6, and we can see that the cosine still performs better than the Jaccard index.

Table 6. F-measure values of k-means algorithms.

| Data set | KM with cosine | KM with Jaccard index | KM with cosine and link (θ = 0.1, α = 0.9) | KM with Jaccard index and link (θ = 0.1, α = 0.9) | KM with Jaccard index and link (θ = 0.05, α = 0.8) |
|---|---|---|---|---|---|
| CISI1 | 0.475 | 0.350 | 0.594 | 0.374 | 0.494 |
| CACM1 | 0.558 | 0.552 | 0.632 | 0.620 | 0.622 |
| PEO1 | 0.502 | 0.425 | 0.536 | 0.438 | 0.488 |
| SET1 | 0.573 | 0.548 | 0.589 | 0.533 | 0.536 |


5. Related Work

The general concepts of neighbors and link have been used in other clustering algorithms [9,10,13,22], with different definitions of neighbors and link. In the clustering algorithm proposed in [22], the k nearest neighbors of each data point are found. Then, two data points are placed in the same cluster if they are nearest neighbors of each other and also have more than a certain number of shared nearest neighbors. In [13], the clustering algorithm proposed in [22] was modified and applied to agglomerative clustering. If a pair of data points are nearest neighbors of each other, their mutual neighborhood value is evaluated by adding their ranks in their individual lists of nearest neighbors. Since the nearest neighbors of a data point are ranked based on their similarity with the data point, a pair of data points with a high mutual neighborhood value would be clustered together. In [9], a pair of data points have a link if each appears in the other's list of nearest neighbors. The strength of a link between two data points is defined as the number of shared nearest neighbors, and if the strength is higher than a certain threshold, it is called a strong link. For clustering, they used not only the strength of the links between data points, but also the number of strong links of each data point. In [10], a density-based clustering algorithm was proposed, where the definition of a cluster is based on the notion of density reachability. A data point q is density-reachable from a data point p if q is in the neighborhood of p and p is surrounded by more than a certain number of data points. In that case, we can consider that p and q are in the same cluster.
However, unlike our proposed methods, these previous clustering algorithms do not use the concepts of neighbors and link for the selection of initial centroids for k-means; do not use a linear combination of the cosine and link functions to measure the similarity between a data point and a centroid; and do not use the neighbors of each centroid to measure the compactness of the corresponding cluster. Recently, attention has been given to exploiting semantic information, such as synonymy, polysemy and semantic hierarchies, for document clustering. Two techniques have been widely reported in the literature. One is ontology-based document clustering [20,21,27]. An ontology represents the semantic relationships between terms, and it can be used to refine the vector space model by weighting, replacing or expanding the terms. The other technique is Latent Semantic Analysis (LSA), which is also called Latent Semantic Indexing (LSI) [4,7,32,33,35]. LSA takes the term-document matrix as input and uses the Singular Value Decomposition (SVD) to project the original high-dimensional term-document vector space onto a low-dimensional concept vector space, in which the dimensions are orthogonal, i.e., statistically uncorrelated. One problem of LSA is the high computation cost of the SVD for a large term-document matrix. For both techniques, after a new vector space is obtained, conventional document clustering algorithms can be used. It has been reported that both techniques can improve the clustering accuracy significantly [20,21,27,32,33,35].

6. Conclusions

In this paper, we proposed three different methods of using the neighbors and link in the k-means and bisecting k-means algorithms for document clustering. Compared with the local information given by the cosine function, the link function provides a global view in evaluating the closeness between two documents by using their neighbor documents. We enhanced the k-means and bisecting k-means algorithms by using the ranks of documents to select the initial centroids, by using a linear combination of the cosine and link functions as a new similarity measure between a document and a centroid, and by selecting a cluster to split based on the neighbors of the centroids. All these algorithms were compared with the original k-means and bisecting k-means on real-life data sets. Our experimental results showed that the clustering accuracy of k-means and bisecting k-means is improved by adopting the new methods individually and also in combination. First, the test results showed that the selection of initial centroids is critical to the clustering accuracy of k-means and bisecting k-means. The initial centroids selected by our method are well distributed, and each one is close to a sufficient number of topically related documents, so they improve the clustering accuracy. Second, the test results showed that our new method of measuring the closeness between a document and a centroid, based on a combination of their pairwise similarity and their common neighbors, performs better than using the pairwise similarity alone. Third, the compactness of a cluster can be measured accurately by the neighbors of its centroid. Thus, for bisecting k-means, the cluster whose centroid has the smallest number of local neighbors can be chosen for splitting. Moreover, since all of our proposed methods use the same neighbor matrix, they can be easily combined to produce better clusters without increasing the execution time much.
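The document-centroid measure and the split heuristic summarized above can be illustrated with a small Python sketch. The following are assumptions, not the paper's exact definitions: two vectors are neighbors when their cosine similarity is at least a threshold `theta`; `link(d_i, c)` counts the documents that are neighbors of both `d_i` and centroid `c`; the link count is normalized by the number of documents n; and a weight `alpha` in [0, 1] combines the two terms as f(d_i, c) = alpha * link(d_i, c) / n + (1 - alpha) * cos(d_i, c). For splitting, the sketch counts, for each cluster, the member documents that are neighbors of its centroid and returns the cluster with the smallest count. All function and parameter names are illustrative.

```python
import math


def cosine(x, y):
    """Cosine similarity of two dense vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    nx = math.sqrt(sum(a * a for a in x))
    ny = math.sqrt(sum(b * b for b in y))
    return dot / (nx * ny) if nx > 0 and ny > 0 else 0.0


def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def neighbor_matrix(docs, theta):
    """M[i][j] = 1 if cos(d_i, d_j) >= theta, else 0 (hypothetical threshold)."""
    return [[1 if cosine(di, dj) >= theta else 0 for dj in docs] for di in docs]


def combined_similarity(i, c, docs, nbr, theta, alpha):
    """Hypothetical f(d_i, c) = alpha * link(d_i, c) / n + (1 - alpha) * cos(d_i, c)."""
    n = len(docs)
    # link(d_i, c): number of documents that are neighbors of both d_i and c.
    link = sum(nbr[i][m] for m in range(n) if cosine(docs[m], c) >= theta)
    return alpha * link / n + (1 - alpha) * cosine(docs[i], c)


def cluster_to_split(clusters, docs, theta):
    """Index of the cluster whose centroid has the fewest local neighbors,
    i.e. member documents d with cos(d, centroid) >= theta."""
    counts = []
    for members in clusters:
        c = centroid([docs[m] for m in members])
        counts.append(sum(1 for m in members if cosine(docs[m], c) >= theta))
    return min(range(len(clusters)), key=counts.__getitem__)
```

Because `neighbor_matrix` is computed once and then reused by both `combined_similarity` and the neighbor-based split test, the sketch also reflects why the methods combine cheaply: they share the same neighbor information.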
In our proposed methods, a few steps can be parallelized for scalability, such as finding the neighbors of the documents, computing the link between documents by using the neighbor matrix, selecting the most similar cluster centroid for each document based on the new similarity measure, and computing the compactness of each cluster based on the number of local neighbors of its centroid. Ontology and Latent Semantic Analysis (LSA) are known to be useful for document clustering, so we plan to investigate how they can be integrated with the concepts of neighbors and link to improve the clustering accuracy.
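Each row of the neighbor matrix depends only on the input vectors, and each row of the link matrix depends only on the finished neighbor matrix, so both steps split into independent row tasks. The Python sketch below shows that decomposition with `concurrent.futures.ThreadPoolExecutor`; it illustrates the idea under assumptions and is not the authors' implementation. The neighbor rule (cosine at least `theta`) and all names are hypothetical, and in CPython pure-Python threads give no real speedup because of the global interpreter lock, so a process pool, a vectorized library, or a distributed setting would be needed in practice.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def cosine(x, y):
    """Cosine similarity of two dense vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    nx = math.sqrt(sum(a * a for a in x))
    ny = math.sqrt(sum(b * b for b in y))
    return dot / (nx * ny) if nx > 0 and ny > 0 else 0.0


def neighbor_row(i, docs, theta):
    """Row i of the neighbor matrix: 1 where cos(d_i, d_j) >= theta."""
    return [1 if cosine(docs[i], dj) >= theta else 0 for dj in docs]


def link_row(i, nbr):
    """Row i of the link matrix: link(d_i, d_j) = sum_m M[i][m] * M[m][j],
    the number of common neighbors of d_i and d_j."""
    n = len(nbr)
    return [sum(nbr[i][m] * nbr[m][j] for m in range(n)) for j in range(n)]


def neighbors_and_links(docs, theta, workers=4):
    """Compute the neighbor and link matrices, one independent task per row."""
    rows = range(len(docs))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Phase 1: neighbor rows only read the input vectors.
        nbr = list(pool.map(lambda i: neighbor_row(i, docs, theta), rows))
        # Phase 2: link rows only read the completed neighbor matrix.
        link = list(pool.map(lambda i: link_row(i, nbr), rows))
    return nbr, link
```

`pool.map` returns results in input order, so the rows are reassembled correctly; the two phases must run one after the other because every link row reads the whole neighbor matrix.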


References

[1] Y. Bartal, M. Charikar, D. Raz, Approximating min-sum k-clustering in metric spaces, in: Proc. of the 33rd Annual ACM Symposium on Theory of Computing, 2001, pp. 11-20.
[2] N. Guttman-Beck, R. Hassin, Approximation algorithms for min-sum p-clustering, Discrete Applied Mathematics 89 (1998) 125-142.
[3] C. Buckley, A.F. Lewitt, Optimization of inverted vector searches, in: Proc. of ACM SIGIR Conf. on Research and Development in Information Retrieval, 1985, pp. 97-110.
[4] F.Y.Y. Choi, P. Wiemer-Hastings, J. Moore, Latent semantic analysis for text segmentation, in: Proc. of the Conf. on Empirical Methods in Natural Language Processing, 2001, pp. 109-117.
[5] Classic Text Database, <ftp://ftp.cs.cornell.edu/pub/smart/>.
[6] D.R. Cutting, D.R. Karger, J.O. Pedersen, J.W. Tukey, Scatter/gather: a cluster-based approach to browsing large document collections, in: Proc. of ACM SIGIR Conf. on Research and Development in Information Retrieval, 1992, pp. 318-329.
[7] S. Deerwester, S.T. Dumais, G.W. Furnas, T.K. Landauer, R. Harshman, Indexing by latent semantic analysis, Journal of the American Society for Information Science 41 (6) (1990) 391-407.
[8] M.E. Dyer, A.M. Frieze, A simple heuristic for the p-center problem, Operations Research Letters 3 (1985) 285-288.
[9] L. Ertöz, M. Steinbach, V. Kumar, Finding topics in collections of documents: a shared nearest neighbor approach, in: W. Wu, H. Xiong, S. Shekhar (Eds.), Clustering and Information Retrieval, Kluwer Academic Publishers, 2004, pp. 83-104.
[10] M. Ester, H. Kriegel, J. Sander, X. Xu, A density-based algorithm for discovering clusters in large spatial databases with noise, in: Proc. of the Second Int'l Conf. on Knowledge Discovery and Data Mining, 1996, pp. 226-231.
[11] A.K. Jain, R.C. Dubes, Algorithms for Clustering Data, Prentice Hall, Englewood Cliffs, 1988.
[12] M.R. Garey, D.S. Johnson, H.S. Witsenhausen, Complexity of the generalized Lloyd-max problem, IEEE Transactions on Information Theory 28 (2) (1982) 256-257.
[13] K.C. Gowda, G. Krishna, Agglomerative clustering using the concept of mutual nearest neighborhood, Pattern Recognition 10 (2) (1978) 105-112.
[14] S. Guha, R. Rastogi, K. Shim, ROCK: a robust clustering algorithm for categorical attributes, Information Systems 25 (5) (2000) 345-366.
[15] L. Hamers, Y. Hemeryck, G. Herweyers, M. Janssen, H. Keters, R. Rousseau, A. Vanhoutte, Similarity measures in scientometric research: the Jaccard index versus Salton's cosine formula, Information Processing and Management 25 (3) (1989) 315-318.
[16] High Accuracy Retrieval from Documents (HARD) Track of Text Retrieval Conference, 2004, <http://handle.dtic.mil/100.2/ADA455426>.
[17] J.A. Hartigan, Clustering Algorithms, John Wiley and Sons, 1975.
[18] D.S. Hochbaum, D.B. Shmoys, A best possible approximation algorithm for the k-center problem, Mathematics of Operations Research 10 (2) (1985) 180-184.
[19] J.D. Holt, S.M. Chung, Y. Li, Usage of mined word associations for text retrieval, in: Proc. of IEEE Int'l Conf. on Tools with Artificial Intelligence (ICTAI-2007), vol. 2, 2007, pp. 45-49.
[20] A. Hotho, S. Staab, A. Mädche, Ontology-based text clustering, in: Proc. of the Workshop on Text Learning: Beyond Supervision, in Conjunction with IJCAI-2001, 2001.
[21] A. Hotho, S. Staab, G. Stumme, Ontologies improve text document clustering, in: Proc. of IEEE Int'l Conf. on Data Mining, 2003, pp. 541-544.
[22] R.A. Jarvis, E.A. Patrick, Clustering using a similarity measure based on shared near neighbors, IEEE Transactions on Computers C-22 (11) (1973) 1025-1034.
[23] L. Kaufman, P.J. Rousseeuw, Finding Groups in Data: An Introduction to Cluster Analysis, John Wiley & Sons, 1990.
[24] B. Larsen, C. Aone, Fast and effective text mining using linear-time document clustering, in: Proc. of ACM SIGKDD Int'l Conf. on Knowledge Discovery and Data Mining, 1999, pp. 16-22.
[25] The Lemur Toolkit for Language Modeling and Information Retrieval, <http://lemurproject.org>.
[26] Y. Li, S.M. Chung, Parallel bisecting k-means with prediction clustering algorithm, The Journal of Supercomputing 39 (1) (2007) 19-37.
[27] Y. Li, S.M. Chung, J.D. Holt, Text document clustering based on frequent word meaning sequences, Data and Knowledge Engineering 64 (1) (2008) 381-404.
[28] Y. Li, C. Luo, S.M. Chung, Text clustering with feature selection by using statistical data, IEEE Transactions on Knowledge and Data Engineering 20 (5) (2008) 641-652.
[29] C. Ordonez, E. Omiecinski, Efficient disk-based k-means clustering for relational databases, IEEE Transactions on Knowledge and Data Engineering 16 (8) (2004) 909-921.
[30] Reuters-21578 Distribution 1.0, <http://www.daviddlewis.com/resources/testcollections/reuters21578>.
[31] C.J. van Rijsbergen, Information Retrieval, second ed., Butterworths, London, 1979.
[32] H. Schütze, C. Silverstein, Projections for efficient document clustering, in: Proc. of ACM SIGIR Conf. on Research and Development in Information Retrieval, 1997, pp. 74-81.
[33] W. Song, S.C. Park, A novel document clustering model based on latent semantic analysis, in: Proc. of the Third Int'l Conf. on Semantics, Knowledge and Grid, 2007, pp. 539-542.
[34] M. Steinbach, G. Karypis, V. Kumar, A comparison of document clustering techniques, in: KDD Workshop on Text Mining, 2000.
[35] B. Tang, M. Shepherd, E. Milios, M.I. Heywood, Comparing and combining dimension reduction techniques for efficient text clustering, in: Proc. of the Workshop on Feature Selection for Data Mining: Interfacing Machine Learning and Statistics, in Conjunction with the SIAM Int'l Conf. on Data Mining, 2005.
[36] Y. Zhao, G. Karypis, Empirical and theoretical comparisons of selected criterion functions for document clustering, Machine Learning 55 (3) (2004) 311-331.

Congnan Luo received the B.E. degree in Computer Science from Tsinghua University, P.R. China, in 1997, the M.S. degree in Computer Science from the Institute of Software, Chinese Academy of Sciences, Beijing, P.R. China, in 2000, and the Ph.D. degree in Computer Science and Engineering from Wright State University, Dayton, Ohio, in 2006. Currently, he is a member of the technical staff at Teradata Corporation in San Diego, CA, and his research interests include data mining, machine learning, and databases.


Yanjun Li received the B.S. degree in Economics from the University of International Business and Economics, Beijing, P.R. China, in 1993, the B.S. degree in Computer Science from Franklin University, Columbus, Ohio, in 2001, the M.S. degree in Computer Science and the Ph.D. degree in Computer Science and Engineering from Wright State University, Dayton, Ohio, in 2003 and 2007, respectively. She is currently an assistant professor in the department of Computer and Information Science at Fordham University, Bronx, New York. Her research interests include data mining and knowledge discovery, text mining, ontology, information retrieval, bioinformatics, and parallel and distributed computing.

Soon M. Chung received the B.S. degree in Electronic Engineering from Seoul National University, Korea, in 1979, the M.S. degree in Electrical Engineering from Korea Advanced Institute of Science and Technology, Korea, in 1981, and the Ph.D. degree in Computer Engineering from Syracuse University, Syracuse, New York, in 1990. He is currently a professor in the department of Computer Science and Engineering at Wright State University, Dayton, Ohio. His research interests include database, data mining, Grid computing, text mining, XML, and parallel and distributed computing.
