IV&V Australia

The independent software testing specialists

Software Unit Testing

Rodney Parkin, IV&V Australia

This paper is an overview of software unit testing. It defines unit testing, and discusses many of the issues which must be addressed when planning for unit testing. It also makes suggestions for appropriate levels of formality and thoroughness of unit testing on typical development projects.

What is “Unit Testing”?

The software literature (notably the military standards) defines a unit along the lines of the smallest collection of code which can be [usefully] tested. Typically this would be a source file, a package (as in Ada), or a non-trivial object class. A hardware development analog might be a PC board.

Unit testing is just one of the levels of testing which go together to make the “big picture” of testing a system. It complements integration and system level testing. It should also complement (rather than compete with) code reviews and walkthroughs.

Unit testing is generally seen as a “white box” test class. That is, it is biased to looking at and evaluating the code as implemented, rather than evaluating conformance to some set of requirements.

Why is it important?

For any system of more than trivial complexity, it is highly inefficient and ineffective to test the system solely as a “big black box”. Any attempt to do so quickly gets lost in a mire of assumptions and potential interactions. The only viable approach is to perform a hierarchy of tests, with higher level tests assuming “reasonable and consistent behaviour” by the lower level components, and separate lower level tests to demonstrate these assumptions.

It would be infeasible to test a space shuttle as a system if you had to simultaneously question the design of every electrical component. It is similarly infeasible to test a large software system as a whole if you have to simultaneously question whether every line of code, every “if statement”, was correctly written.

Boris Beizer has defined a progression of levels of sophistication in software testing. At the lowest level, testing is considered no different to debugging. At the higher levels, testing becomes a mindset which aims to maximise the system reliability. His approach stresses that you should “test” in the way which returns the greatest reliability improvement for resources spent rather than mindlessly performing some “theoretically neat” collection of tests.

Experience has shown that unit-level testing (and reviewing) is very cost effective. It provides a much greater reliability improvement for resources expended than system level testing. In particular, it tends to reveal bugs which are otherwise insidious and are often catastrophic, like the strange system crashes that occur in the field when something unusual happens.

What should it cover?

Just as a system needs to be designed before it can be effectively implemented, so too must the system test strategy be designed before it is implemented. At the same time as the system concepts are emerging and an architecture is being worked out, a “test strategy” must also be developed.

The test strategy should identify the totality of testing which will be applied to the system: what types of testing will be performed, and how they will contribute to the overall quality and reliability of the product. A good test strategy will clearly scope each class of test and assign responsibility for it. Typically an organisation will have some standard conventions to follow, but each project must identify aspects of the system which are critical or problematical, and clearly identify how these will be tested and by whom. Ultimately the Project Plan (or some form of Master Test Plan) for each project will define what needs to be covered by unit testing on that project. This type of information works well presented in a checklist.

Usually unit testing is primarily focused on the implementation: Does the code implement what the designer intended? For each conditional statement, is the condition correct? Do all the special cases work correctly? Are error cases correctly detected?

However, many systems have some high-level requirements which are difficult to adequately test at a system level, and it is common to identify these as additional test obligations at the unit-test level. An example is detailed signal processing algorithms. These may be fully specified at the system functional requirements level, but it may be most efficient to test the details of the processing at the unit-test level, with system-level testing being confined to testing the gross flow of data through the system.

Who should do it?

Because unit testing is primarily focused on the implementation, and requires an understanding of the design intent, it is much more efficiently done by the designers rather than by independent testers.

There are some theoretical arguments that it is better for testing to be done independently. However, in this case, the lost efficiency in having an independent person understand the code and understand the design issues strongly outweighs any advantages. Beizer’s principle of applying available resources in the most efficient way applies. The benefits to be gained by independence are achieved more easily in a review or walkthrough forum.

What level of formality is required?

When considering the level of formality required for unit testing, the sort of questions which arise are: Do unit tests need a “pre-approved” test protocol, or is it sufficient for them to be worked out “as you go”? Is a formal report required? Do QA need to be involved? Are all results reviewed?

The level of formality required for unit testing depends on your “customer” needs. Where development is being done under a contract with an external customer, or there are regulatory requirements to be met, these may impose specific standards on the project.

Where there are no specific requirements imposed on the project, it becomes essentially a tradeoff between project cost and risk. In fact, the project may choose to keep the level of testing in some areas quite informal, while others are more formal.

These questions should be answered as part of the test planning, and need to be documented in the project test plan. In most cases there is little advantage in requiring any more formality than is required to ensure that adequate attention is being applied to the task. This may need nothing more than regular liaison and one-on-one review with the tester’s team leader.

What type of documentation is required?

Like the required level of formality, the appropriate level of documentation for unit testing varies from project to project, and even within a project. There may be minimum standards imposed by outside agencies, but generally there are not.

The minimum requirements for the documentation are:

• It must be reviewable. That is, the records must be sufficient for others to review the adequacy of the testing.

• It must be sufficient for the tests to be repeatable. This is important for regression testing: unless you are sure you can repeat a test, you can never be sure if you have fixed the cause of a test failure. Repeatability is also important for analysing failures, both failures during the initial testing and subsequent failures. Knowing exactly what was and was not tested, and exactly what passed and what failed during testing, is an invaluable aid in isolating difficult-to-reproduce field failures. Repeatability not only implies the need to record in reasonable detail how the test is run and what data is used, but also implies identification of the version of code under test.

• The records must be archivable. That is, they must be sufficiently well kept and identified that they can be found if required at a later time (perhaps years later when analysing a field failure).

For many organisations, separate unit test documents are not produced. Typically unit testing will be recorded in controlled lab-books, or collected into project journals.

One approach which works well for software unit testing is to use a source code listing with hand annotations for the recording of tests. Test cases and data are identified on the listing, with markups showing which sections of code are covered by which tests. Typically this listing will be attached to a review sheet and a checklist of unit testing requirements, and filed with the project records.

The documentation method chosen may vary depending on the criticality, complexity, or risk associated with the unit. For example, in a security-critical system, one or more units associated with the secure interface may be required to have formally documented unit tests, while the (non-security critical) bulk of the system is much less formally documented. These decisions need to be made early as part of the initial project test planning and appropriately recorded.

How “thorough” does it need to be?

In general terms, unit testing should provide confidence that a unit does not have unpredictable or inconsistent behaviour, and that it conforms to all the “design assumptions” that have been made about it. If this is achieved, then higher-level testing can concentrate on macroscopic properties of the system, rather than having to iterate over numerous possibilities for interaction at the lowest levels. In choosing tests, the tester should consider whether the unit behaves in the way the design assumes, whether it does this over the full range of operational possibilities, and whether there are any “special cases” in its behaviour which are not visible at a higher level. For each line of code, the tester should ask “does it achieve what it was put here to do”?

Because unit testing is primarily implementation driven, its thoroughness is usually measured by code coverage. Tools are available which will evaluate code coverage while tests are being run, but generally someone familiar with the code, while focused on a particular unit, will find it quite easy to determine the coverage of a particular set of tests. Various “measures” of coverage can be defined, such as “statement coverage” (each statement executed at least once), “decision coverage” (each conditional statement executed at least once each way), and so on.

Like documentation, the level of thoroughness required for unit testing may depend on the criticality, complexity, or risk associated with the unit. For example, safety or security-critical units may be subjected to much more extensive unit testing than non-critical screen-formatting code. Some projects use metrics such as McCabe Cyclomatic Complexity to pre-determine the appropriate level: units with a high complexity are required to have a greater degree of testing. Again a policy on test rigour needs to be determined as part of the early project test planning.

For typical projects, the usual standard is to aim for “decision coverage”. That is, unit testing must demonstrate correct operation over a range of cases which require every statement to be executed at least once, and every conditional statement to go each way. In addition, all “boundary cases” must be exercised. In actual practice, 100% coverage can be surprisingly difficult to achieve for well-written code. This is because there will be code to protect against “should not occur” scenarios, which can be very awkward to exercise. A code coverage standard may concede coverage of these cases so long as they are adequately desk-reviewed.

What “test environment” should be used?

As a general rule of thumb “the rest of the system is the best test harness” for unit testing. Performing unit tests in a system environment maximises your likelihood of identifying problems. On the other hand, the tester should not allow this “rule” to limit or hinder their testing. They should use the rest of the system to generate and analyse test scenarios, but should not feel constrained from intruding into the system with debuggers, special test code, or other aids.

Some people feel that for testing to be valid, it must be performed on exactly the code to be delivered, running exactly in its final environment. Although this is appropriate for final acceptance testing at the system level, it can actually be counter-productive at the lower levels. At the unit test level it is far preferable to “put in some debug statements” to help perform a particular test, than to avoid the test altogether in a mistaken attempt to ensure fidelity.

It is often easy to make the system an almost ideal test harness. For example, removing restrictions on selectable system parameters when in a “system test mode” may make it trivial to force otherwise difficult “should not occur” special cases. Providing a capability to inject arbitrary byte sequences for internal messages may be trivial to implement but extremely useful for testing. When considered early in the design process, these sorts of capabilities are often trivial to provide.

Conclusions

Software unit testing is an integral part of an efficient and effective strategy for testing systems. It is best performed by the designer of the code under test.

The appropriate level of formality and thoroughness of the testing will vary from project to project, and even within a project depending on the criticality, complexity, and risk associated with the unit. The policy in this regard should be decided early in test planning, and documented, usually in the Project Plan or separate Master Test Plan.
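As an illustration only, a policy of this kind could be written down as a small lookup that takes a unit's criticality and complexity and returns the rigour expected of its unit testing. Everything in the sketch below is an assumption made for the example: the `UnitProfile` fields, the threshold of 10 on the complexity figure, and the wording of the levels would all be set by the project's own test plan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitProfile:
    """Hypothetical description of a unit, as a test plan might record it."""
    name: str
    safety_or_security_critical: bool
    cyclomatic_complexity: int  # figure supplied by whatever metrics tool is used


def required_rigour(unit, complexity_threshold=10):
    """Return (coverage requirement, documentation level) for a unit.

    The threshold and both sets of level names are illustrative assumptions,
    not values taken from the paper.
    """
    if unit.safety_or_security_critical:
        return ("decision coverage and all boundary cases, no concessions",
                "formal unit test document")
    if unit.cyclomatic_complexity > complexity_threshold:
        return ("decision coverage and all boundary cases",
                "annotated listing with review sheet")
    return ("decision coverage, desk review accepted for unreachable cases",
            "lab-book entry")


if __name__ == "__main__":
    units = [
        UnitProfile("secure_interface", True, 7),
        UnitProfile("signal_filter", False, 18),
        UnitProfile("screen_format", False, 4),
    ]
    for unit in units:
        coverage, documentation = required_rigour(unit)
        print(f"{unit.name}: {coverage}; {documentation}")
```

Writing the rule down this explicitly, in code or simply as a table in the test plan, makes it easy to review and hard to apply inconsistently between units.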

In most cases it is acceptable to adopt an approach which requires little documentation overhead. However, there are some basic requirements which should always be met. In particular, the documentation must be reviewable, repeatable, and archivable. Commonly, unit testing will be recorded in lab-books, or in hand-written notes on code listings stored in the project journal, with guidance provided by a checklist that identifies the required unit testing activities.
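To make the three requirements concrete, the sketch below shows one possible shape for a single test record. It is not a format prescribed by this paper: the `UnitTestRecord` class, its field names, and the sample values are all hypothetical, and a lab-book entry or annotated listing can carry exactly the same information. The point is that each record names the version of the code under test and the data used, so that the test can be reviewed, repeated, and found again later.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class UnitTestRecord:
    """One hypothetical unit test record (all field names are illustrative)."""
    unit: str          # the unit under test, e.g. a source file
    code_version: str  # identifies exactly which version was tested
    test_id: str       # lets a reviewer refer to this particular test
    inputs: dict       # the data needed to repeat the test
    expected: str
    actual: str
    tester: str
    date: str          # ISO date, so archived records can be sorted and searched

    @property
    def passed(self):
        return self.expected == self.actual


def archive(records):
    """Serialise records to JSON text that can be filed with the project records."""
    rows = [dict(asdict(record), passed=record.passed) for record in records]
    return json.dumps(rows, indent=2, sort_keys=True)


if __name__ == "__main__":
    # Sample values are invented for the example.
    record = UnitTestRecord(
        unit="filter.c",
        code_version="rev 1.4",
        test_id="UT-01",
        inputs={"samples": [0, 1, 2]},
        expected="3",
        actual="3",
        tester="designer",
        date="1997-01-01",
    )
    print(archive([record]))
```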

Some issues which should be considered when evaluating a unit testing strategy are:

• Has a policy with regard to formality, documentation, and coverage been determined early enough in the project?

• Does it relate to other levels of testing to give an efficient and effective overall strategy?

• Have the needs of units which are particularly critical, complex, or risky been considered?

• Will the documentation be reviewable, repeatable, and archivable?

Questions which should be considered when evaluating unit testing for adequacy include:

• Have all statements been exercised by at least one test?

• Has each conditional statement been exercised at least once each way by the tests?

• Have all boundary cases been exercised?

• Were any design assumptions made about the operation of this unit? Have the tests demonstrated these assumptions?

• Have the tests exercised the unit over the full range of operational conditions it is expected to address?
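The difference between the first three questions can be seen on a very small unit. The sketch below is illustrative only: the function `clamp_speed`, its default limit, and the test values are hypothetical. A single test with an over-limit input executes every statement, yet never takes the `if` the “false” way. Decision coverage needs a second case, and the boundary cases need tests of their own.

```python
def clamp_speed(requested, limit=100):
    """Hypothetical unit: restrict a requested speed to an upper limit."""
    result = requested
    if requested > limit:  # the only decision in this unit
        result = limit
    return result


# Each test case is (description, input, expected output).
STATEMENT_ONLY = [
    ("above limit, condition true", 150, 100),
]

DECISION = STATEMENT_ONLY + [
    ("below limit, condition false", 40, 40),
]

BOUNDARY = DECISION + [
    ("exactly at the limit", 100, 100),
    ("one above the limit", 101, 100),
    ("one below the limit", 99, 99),
]


def run(cases, unit=clamp_speed):
    """Run the cases against a unit and return descriptions of any failures."""
    failures = []
    for description, value, expected in cases:
        if unit(value) != expected:
            failures.append(description)
    return failures


if __name__ == "__main__":
    for name, cases in [("statement", STATEMENT_ONLY),
                        ("decision", DECISION),
                        ("boundary", BOUNDARY)]:
        print(name, "coverage set:", len(cases), "cases, failures:", run(cases))
```

If the condition were mistyped as `requested > limit + 1`, the statement and decision sets would still pass. Only the “one above the limit” case would fail, which is why boundary cases are listed as a separate question.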


IV&V Australia Pty Ltd, 1997

rparkin@ivvaust.com.au

Potrebbero piacerti anche

- 03 System TestingDocumento17 pagine03 System TestingramNessuna valutazione finora

- Defect Tracking SystemDocumento21 pagineDefect Tracking Systemmughees1050% (2)

- Code Review Document: Virtual Museum ExplorerDocumento13 pagineCode Review Document: Virtual Museum ExplorerSimran Radheshyam SoniNessuna valutazione finora

- Seven Principles of Software TestingDocumento3 pagineSeven Principles of Software TestingAkhil sNessuna valutazione finora

- Unit Testing Template Ver1.1Documento15 pagineUnit Testing Template Ver1.1Mohammed IbraheemNessuna valutazione finora

- Software Test Documentation IEEE 829Documento10 pagineSoftware Test Documentation IEEE 829Walaa HalabiNessuna valutazione finora

- Ieee Guide For SVVPDocumento92 pagineIeee Guide For SVVPwanya64Nessuna valutazione finora

- Test Design Specification TemplateDocumento4 pagineTest Design Specification TemplateRifQi TaqiyuddinNessuna valutazione finora

- SOA Test ApproachDocumento4 pagineSOA Test ApproachVijayanthy KothintiNessuna valutazione finora

- Introduction To Software Testing ToolsDocumento3 pagineIntroduction To Software Testing ToolsShekar BhandariNessuna valutazione finora

- Smoke Vs SanityDocumento7 pagineSmoke Vs SanityAmodNessuna valutazione finora

- Software Assessment FormDocumento4 pagineSoftware Assessment FormCathleen Ancheta NavarroNessuna valutazione finora

- Differences Between The Different Levels of Tests: Unit/component TestingDocumento47 pagineDifferences Between The Different Levels of Tests: Unit/component TestingNidhi SharmaNessuna valutazione finora

- Software Quality EnginneringDocumento26 pagineSoftware Quality EnginneringVinay PrakashNessuna valutazione finora

- IEEE829 Test Plan TemplateDocumento1 paginaIEEE829 Test Plan TemplatePuneet ShivakeriNessuna valutazione finora

- Overview of Software TestingDocumento12 pagineOverview of Software TestingBhagyashree SahooNessuna valutazione finora

- SQA Test PlanDocumento5 pagineSQA Test PlangopikrishnaNessuna valutazione finora

- Use Cases - NathDocumento1 paginaUse Cases - Nathapi-3801122Nessuna valutazione finora

- ERP Implementation at HCC Project ReportDocumento85 pagineERP Implementation at HCC Project ReportTrader RedNessuna valutazione finora

- Documents' Software TestingDocumento8 pagineDocuments' Software TestingTmtv HeroNessuna valutazione finora

- SE20192 - Lesson 2 - Introduction To SEDocumento33 pagineSE20192 - Lesson 2 - Introduction To SENguyễn Lưu Hoàng MinhNessuna valutazione finora

- Cyreath Test Strategy TemplateDocumento11 pagineCyreath Test Strategy TemplateshygokNessuna valutazione finora

- Software & Software EngineeringDocumento10 pagineSoftware & Software Engineeringm_saki6665803Nessuna valutazione finora

- Test PlanDocumento7 pagineTest PlanSarang DigheNessuna valutazione finora

- CS435: Introduction To Software EngineeringDocumento43 pagineCS435: Introduction To Software Engineeringsafrian arbiNessuna valutazione finora

- Software Testing MetricsDocumento20 pagineSoftware Testing MetricsBharathidevi ManamNessuna valutazione finora

- Software TestingDocumento5 pagineSoftware TestingHamdy ElasawyNessuna valutazione finora

- Design Verification White PaperDocumento12 pagineDesign Verification White PaperAmit PanditaNessuna valutazione finora

- System TestingDocumento3 pagineSystem TestinganilNessuna valutazione finora

- Bug tracking system Complete Self-Assessment GuideDa EverandBug tracking system Complete Self-Assessment GuideNessuna valutazione finora

- System Architecture TemplateDocumento8 pagineSystem Architecture TemplateOpticalBoyNessuna valutazione finora

- C Sharp Coding Guidelines and Best Practices 2Documento49 pagineC Sharp Coding Guidelines and Best Practices 2radiazmtzNessuna valutazione finora

- Water Cooler SQA PlanDocumento62 pagineWater Cooler SQA Planbububear01Nessuna valutazione finora

- Testing FundamentalsDocumento17 pagineTesting FundamentalsMallikarjun RaoNessuna valutazione finora

- Test Strategy - Wikipedia, ...Documento4 pagineTest Strategy - Wikipedia, ...ruwaghmareNessuna valutazione finora

- Software Quality Assurance: - Sanat MisraDocumento32 pagineSoftware Quality Assurance: - Sanat MisraSanat MisraNessuna valutazione finora

- Bsi MD MDR Best Practice Documentation SubmissionsDocumento29 pagineBsi MD MDR Best Practice Documentation SubmissionsMichelle Kozmik JirakNessuna valutazione finora

- Defect Tracking FlowchartDocumento5 pagineDefect Tracking FlowchartJohn MrGrimm DobsonNessuna valutazione finora

- Software Process: Hoang Huu HanhDocumento53 pagineSoftware Process: Hoang Huu HanhNguyễn Minh QuânNessuna valutazione finora

- Sachins Testing World: SachinDocumento31 pagineSachins Testing World: Sachinsachinsahu100% (1)

- SVVPLANDocumento17 pagineSVVPLANJohan JanssensNessuna valutazione finora

- Software Selection A Complete Guide - 2021 EditionDa EverandSoftware Selection A Complete Guide - 2021 EditionNessuna valutazione finora

- Software Quality StandardsDocumento13 pagineSoftware Quality Standards20024402pNessuna valutazione finora

- Understanding Software TestingDocumento37 pagineUnderstanding Software Testingemmarvelous11Nessuna valutazione finora

- Testing FundasDocumento44 pagineTesting FundasbeghinboseNessuna valutazione finora

- Software Testing LifecycleDocumento4 pagineSoftware Testing LifecycleDhiraj TaleleNessuna valutazione finora

- Software Development Life Cycle by Raymond LewallenDocumento6 pagineSoftware Development Life Cycle by Raymond Lewallenh.a.jafri6853100% (7)

- How To Create Test PlanDocumento7 pagineHow To Create Test PlanajayNessuna valutazione finora

- Client-Server Application Testing Plan: EDISON Software Development CentreDocumento7 pagineClient-Server Application Testing Plan: EDISON Software Development CentreEDISON Software Development Centre100% (1)

- Software Testing Life CycleDocumento14 pagineSoftware Testing Life CycleKarthik Tantri100% (2)

- SDP TemplateDocumento90 pagineSDP TemplateOttoman NagiNessuna valutazione finora

- Design Document Template - ChaptersDocumento17 pagineDesign Document Template - ChaptersSuresh SaiNessuna valutazione finora

- Data Anonymization A Complete Guide - 2020 EditionDa EverandData Anonymization A Complete Guide - 2020 EditionNessuna valutazione finora

- Requirements traceability A Complete Guide - 2019 EditionDa EverandRequirements traceability A Complete Guide - 2019 EditionNessuna valutazione finora

- Automated Software Testing A Complete Guide - 2020 EditionDa EverandAutomated Software Testing A Complete Guide - 2020 EditionNessuna valutazione finora

- Agile Software Development Quality Assurance A Complete Guide - 2020 EditionDa EverandAgile Software Development Quality Assurance A Complete Guide - 2020 EditionNessuna valutazione finora

- Change PasswordDocumento1 paginaChange Passwordapi-3709875Nessuna valutazione finora

- QA & TestingDocumento43 pagineQA & Testingapi-3738458Nessuna valutazione finora

- Software Testing and Development Life CycleDocumento6 pagineSoftware Testing and Development Life Cycleapi-3709875100% (1)

- Software TestingDocumento1 paginaSoftware Testingapi-3709875Nessuna valutazione finora

- Software EngineeringDocumento2 pagineSoftware Engineeringapi-3709875Nessuna valutazione finora

- Keyboard Shortcuts 1000+Documento6 pagineKeyboard Shortcuts 1000+api-3709875Nessuna valutazione finora

- Pioneer XDP - 30R ManualDocumento213 paginePioneer XDP - 30R Manualmugurel_stanescuNessuna valutazione finora

- Internship Report May 2016Documento11 pagineInternship Report May 2016Rupini RagaviahNessuna valutazione finora

- ISO 9001:2015 Questions Answered: Suppliers CertificationDocumento3 pagineISO 9001:2015 Questions Answered: Suppliers CertificationCentauri Business Group Inc.100% (1)

- Delhi Public School Bangalore North ACADEMIC SESSION 2021-2022 Ut2 Revision Work Sheet TOPIC: Sorting Materials Into Group Answer KeyDocumento6 pagineDelhi Public School Bangalore North ACADEMIC SESSION 2021-2022 Ut2 Revision Work Sheet TOPIC: Sorting Materials Into Group Answer KeySumukh MullangiNessuna valutazione finora

- Ball Mill SizingDocumento10 pagineBall Mill Sizingvvananth100% (1)

- IBM System Storage DS8000 - A QuickDocumento10 pagineIBM System Storage DS8000 - A Quickmuruggan_aNessuna valutazione finora

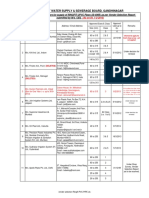

- GWSSB Vendor List 19.11.2013Documento18 pagineGWSSB Vendor List 19.11.2013sivesh_rathiNessuna valutazione finora

- Restoration and Adaptive Re-Use of Queen Mary's High School: Phase-1Documento4 pagineRestoration and Adaptive Re-Use of Queen Mary's High School: Phase-1Sonali GurungNessuna valutazione finora

- U042en PDFDocumento12 pagineU042en PDFTatiya TatiyasoponNessuna valutazione finora

- Feasibility Study of Solar Photovoltaic (PV) Energy Systems For Rural Villages of Ethiopian Somali Region (A Case Study of Jigjiga Zone)Documento7 pagineFeasibility Study of Solar Photovoltaic (PV) Energy Systems For Rural Villages of Ethiopian Somali Region (A Case Study of Jigjiga Zone)ollata kalanoNessuna valutazione finora

- Korantin PPDocumento4 pagineKorantin PPteddy garfieldNessuna valutazione finora

- EMOC 208 Installation of VITT For N2 Cylinder FillingDocumento12 pagineEMOC 208 Installation of VITT For N2 Cylinder Fillingtejcd1234Nessuna valutazione finora

- Tube Well Design Project SolutionDocumento5 pagineTube Well Design Project SolutionEng Ahmed abdilahi IsmailNessuna valutazione finora

- 20 Site SummaryDocumento2 pagine20 Site SummaryMuzammil WepukuluNessuna valutazione finora

- Quality ControlDocumento10 pagineQuality ControlSabbir AhmedNessuna valutazione finora

- WEISER Locks and HardwareDocumento24 pagineWEISER Locks and HardwareMaritime Door & WindowNessuna valutazione finora

- Good Practices in Government Resource Planning, Developed Vs Developing CountriesDocumento11 pagineGood Practices in Government Resource Planning, Developed Vs Developing CountriesFreeBalanceGRPNessuna valutazione finora

- Receiving Material Procedure (Done) (Sudah Direvisi)Documento8 pagineReceiving Material Procedure (Done) (Sudah Direvisi)Hardika SambilangNessuna valutazione finora

- Safety Data Sheet 84989 41 3 enDocumento4 pagineSafety Data Sheet 84989 41 3 enAdhiatma Arfian FauziNessuna valutazione finora

- TDS Sadechaf UVACRYL 2151 - v9Documento5 pagineTDS Sadechaf UVACRYL 2151 - v9Alex MacabuNessuna valutazione finora

- Fast, Accurate Data Management Across The Enterprise: Fact Sheet: File-Aid / MvsDocumento4 pagineFast, Accurate Data Management Across The Enterprise: Fact Sheet: File-Aid / MvsLuis RamirezNessuna valutazione finora

- Jamesbury Polymer and Elastomer Selection GuideDocumento20 pagineJamesbury Polymer and Elastomer Selection Guidesheldon1jay100% (1)

- A Sample of Wet Soil Has A Volume of 0Documento8 pagineA Sample of Wet Soil Has A Volume of 0eph0% (1)

- Simatic EKB Install 2012-03-08Documento2 pagineSimatic EKB Install 2012-03-08Oton SilvaNessuna valutazione finora

- Kubernetes CommandsDocumento36 pagineKubernetes CommandsOvigz Hero100% (2)

- 21-3971-CLA - DisenŞo Preliminar Cimentacion - Normal SoilDocumento4 pagine21-3971-CLA - DisenŞo Preliminar Cimentacion - Normal SoilJose ManzanarezNessuna valutazione finora

- ED ProcessDocumento9 pagineED ProcesskhanasifalamNessuna valutazione finora

- Heat Transfer - A Basic Approach - OzisikDocumento760 pagineHeat Transfer - A Basic Approach - OzisikMaraParesque91% (33)