Batch Processing Is the Execution of a Series of Programs (Jobs) on a Computer Without Manual Intervention

Uploaded by Shiba Shis

Batch Processing System

In a batch processing system, an application program processes a batch of items or records (the processing of each record corresponding to a transaction). The batch of records has been collected over a period and is usually presorted for sequential processing at a predetermined time. Compare this with transaction processing, in which an application program processes a single item on demand, for example the receipt of an order. A major difference between transaction processing and batch processing is that the transaction processing end user has online access while the application program is running, whereas batch processing is usually completely independent of end users. Batch processing lacks the immediacy of transaction processing, but it is an economical way to computerize clerical work and is ideally suited to applications such as payroll, where immediacy is not important. Batch processing makes very efficient use of computer resources, especially processing power. A batch system provides efficient processing of applications that have the following characteristics: no online access, minimal sharing requirements, and a throughput objective of maximum data processed in minimum elapsed time.
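
To make the contrast concrete, here is a minimal Python sketch under stated assumptions: an in-memory dict `balances` stands in for the data store, and the names `Order`, `apply_order`, `process_batch` and `process_transaction` are hypothetical. The batch function presorts the collected orders and processes them in one sequential run; the transaction function handles one order on demand and returns the result to the waiting user.

```python
from dataclasses import dataclass


@dataclass
class Order:
    customer_id: str
    amount: float


def apply_order(order: Order, balances: dict) -> float:
    """Post one order to the (hypothetical) customer balance store."""
    balances[order.customer_id] = balances.get(order.customer_id, 0.0) + order.amount
    return balances[order.customer_id]


def process_batch(orders: list, balances: dict) -> list:
    """Batch style: orders collected over a period are presorted by key and
    processed sequentially in one run, with no end user waiting on each item."""
    results = []
    for order in sorted(orders, key=lambda o: o.customer_id):
        results.append((order.customer_id, apply_order(order, balances)))
    return results


def process_transaction(order: Order, balances: dict) -> float:
    """Transaction style: a single order is processed on demand and the
    outcome is returned immediately to the end user who submitted it."""
    return apply_order(order, balances)
```

Both styles share the same posting logic; what differs is when it runs and whether anyone is waiting for each result.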

In a batch system, the unit of scheduling is a job, which consists of one or more programs that are executed serially. A job usually corresponds to an application, but a complex application can consist of several jobs. Depending on data volumes and processor power, a job can take up to several hours of processing time, during which most of the required resources are dedicated to the job.
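
A job made of serially executed programs can be sketched as an ordered list of steps. In this minimal Python sketch each step is a hypothetical function returning an exit code (0 for success), and the runner stops at the first failure on the assumption that later steps depend on the output of earlier ones.

```python
from __future__ import annotations

from typing import Callable

# A hypothetical program step: returns an exit code, where 0 means success.
Step = Callable[[], int]


def run_job(steps: list[tuple[str, Step]]) -> list[tuple[str, int]]:
    """Run the programs of one job serially, in the order given.

    Execution stops at the first step that returns a non-zero code, on the
    assumption that later steps depend on the output of earlier ones.
    Returns the (step name, exit code) pairs of the steps that actually ran.
    """
    log = []
    for name, step in steps:
        rc = step()
        log.append((name, rc))
        if rc != 0:
            break
    return log
```

For example, a payroll job might chain hypothetical `extract`, `sort`, `update` and `report` steps; if `update` fails, `report` is never run.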

A batch system uses processing power economically because it receives input data in a presorted batch that enables sequential processing of the data store. The batching of data to produce a continuous stream means that a batch system tends to monopolize use of the data store. A batch system fails to meet requirements when end users want to process single items in unpredictable volumes and sequence, for example when sales staff query a customer's credit limit before making a sale. Transaction processing is then the solution.

Examples of batch applications include the printing of monthly credit card statements and the month-end closing of the general ledger. The example of batch processing given later is an order entry system, chosen so that it can be compared with a transaction processing solution for the same application.

Batch processing is the execution of a series of programs (jobs) on a computer without manual intervention. Batch jobs are set up so that they can run to completion without manual intervention, so all input data is preselected through scripts or command-line parameters. This is in contrast to "online" or interactive programs, which prompt the user for such input. A program takes a set of data files as input, processes the data, and produces a set of output data files. This operating environment is termed "batch processing" because the input data are collected into batches of files and are processed in batches by the program.

History

Batch processing has been associated with mainframe computers since the earliest days of electronic computing in the 1950s. There were a variety of reasons why batch processing dominated early computing. One reason is that the most urgent business problems, for reasons of profitability and competitiveness, were primarily accounting problems such as billing. Billing is inherently a batch-oriented business process, and practically every business must bill, reliably and on time. Also, every computing resource was expensive, so sequential submission of batch jobs matched the resource constraints and technology evolution of the time. Later, interactive sessions with either text-based computer terminal interfaces or graphical user interfaces became more common. However, computers initially were not even capable of having multiple programs loaded into main memory.

Batch processing is still pervasive in mainframe computing, but practically all types of computers are now capable of at least some batch processing, even if only for "housekeeping" tasks. That includes UNIX-based computers, Microsoft Windows, Mac OS X, and, increasingly, even smartphones. Virus scanning is a form of batch processing, and so are scheduled jobs that periodically delete temporary files that are no longer required. E-mail systems frequently have batch jobs that periodically archive and compress old messages. As computing in general becomes more pervasive in society and in the world, so too will batch processing.

Modern systems

Despite their long history, batch applications are still critical in most organizations, in large part because many core business processes are inherently batch-oriented and probably always will be. (Billing is a notable example that nearly every business requires to function.) While online systems can also function when manual intervention is not desired, they are not typically optimized to perform high-volume, repetitive tasks. Therefore, even new systems usually contain one or more batch applications for updating information at the end of the day, generating reports, printing documents, and other non-interactive tasks that must complete reliably within certain business deadlines.

Modern batch applications make use of batch frameworks such as Spring Batch, which is written for Java, and other frameworks for other programming languages, to provide the fault tolerance and scalability required for high-volume processing.
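
One common way such frameworks provide fault tolerance is chunk-oriented processing with restartable checkpoints. The Python sketch below illustrates that general idea only; it is not Spring Batch's API, and `run_chunked`, `process`, `commit` and the dict-based `checkpoint` are hypothetical. Items are processed in fixed-size chunks, each chunk is committed as a unit, and the number of committed items is recorded so that a rerun after a failure resumes after the last successful commit instead of starting over.

```python
def run_chunked(items, process, commit, checkpoint, chunk_size=100):
    """Process `items` in chunks of `chunk_size`, restartable via `checkpoint`.

    process    -- function applied to each item (the per-record business logic)
    commit     -- function that writes one processed chunk as a unit
    checkpoint -- dict holding the count of committed items; in a real system
                  this would be persisted together with each commit
    Returns the total number of committed items.
    """
    start = checkpoint.setdefault("committed", 0)  # resume point after a failure
    for i in range(start, len(items), chunk_size):
        chunk = [process(item) for item in items[i:i + chunk_size]]
        commit(chunk)
        # Advance the checkpoint only after the chunk has been committed.
        checkpoint["committed"] = i + len(chunk)
    return checkpoint["committed"]
```

If a commit fails partway through, calling `run_chunked` again with the same `checkpoint` reprocesses only the uncommitted items. The chunk size trades per-commit overhead against the amount of work that must be redone after a failure.
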

In order to ensure high-speed processing, batch applications are often integrated with grid computing solutions to partition a batch job over a large number of processors, although there are significant programming challenges in doing so. High-volume batch processing also places particularly heavy demands on system and application architectures. Architectures that feature strong input/output performance and vertical scalability, including modern mainframe computers, tend to provide better batch performance than alternatives. Scripting languages became popular as they evolved along with batch processing.

Common batch processing usage

Data processing

A typical batch processing schedule includes end-of-day (EOD) reporting. Historically, many systems had a batch window in which online subsystems were turned off and the system capacity was used to run jobs common to all data (accounts, users, or customers) on a system. In a bank, for example, EOD jobs include interest calculation, generation of reports and data sets for other systems, printing (statements), and payment processing. Many businesses have moved to concurrent online and batch architectures in order to support globalization, the Internet, and other relatively newer business demands. Such architectures place unique stresses on system design, programming techniques, availability engineering, and IT service delivery.

Printing

A popular computerized batch processing procedure is printing. This normally involves the operator selecting the documents they need printed and indicating to the batch printing software when and where they should be output and the priority of the print job. The job is then sent to the print queue, from which the printing daemon sends it to the printer.

Databases

Batch processing is also used for efficient bulk database updates and automated transaction processing, as contrasted with interactive online transaction processing (OLTP) applications. The extract, transform, load (ETL) step in populating data warehouses is inherently a batch process in most implementations.
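
As a minimal illustration of a bulk database update, the sketch below posts a whole batch of end-of-day amounts with one `executemany` call inside a single transaction, using Python's built-in `sqlite3` module. The `account` table and its `balance_cents` column are hypothetical. If any row in the batch fails, the whole batch is rolled back, so the table is never left half-updated.

```python
import sqlite3


def post_batch(conn: sqlite3.Connection, postings: list) -> None:
    """Apply a batch of (account_id, amount_cents) postings in one transaction.

    Using the connection as a context manager commits once at the end, or
    rolls back every posting in the batch if any statement raises.
    """
    with conn:
        conn.executemany(
            "UPDATE account SET balance_cents = balance_cents + ? WHERE id = ?",
            [(amount, account_id) for account_id, amount in postings],
        )
```

Committing once per batch rather than once per row is a large part of what makes bulk updates cheaper than the equivalent sequence of interactive transactions.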

Images

Batch processing is often used to perform various operations on digital images. There are computer programs that let one resize, convert, watermark, or otherwise edit image files in batches.

Example of batch processing

The batch program (figure) processes the order data set sequentially, updating the customer accounts data set, the sales ledger, and the stock data set, and raising dispatch notes for each order. Orders can be rejected due to the state of a customer's account, or held over due to lack of stock. During the day, sales staff prepare the data for each order and place it in a batch of data for later (in this example, probably overnight) processing. Compare this with the transaction processing solution, where, instead of preparing batch data records, the end users process each order as it is received and can immediately confirm acceptance or rejection.
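
The order entry batch program can be sketched as a single sequential pass over the day's orders. This is a minimal Python sketch under loudly stated assumptions: `OrderRecord`, `run_order_batch` and the dict layouts are hypothetical, and the specific rejection rules (unknown customer, blocked account, credit limit exceeded) and the choice to check the account before stock are illustrative guesses, not rules taken from the text.

```python
from dataclasses import dataclass


@dataclass
class OrderRecord:
    order_id: int
    customer: str
    item: str
    qty: int
    value: float


def run_order_batch(orders, accounts, stock, ledger):
    """Process a day's batch of orders sequentially (hypothetical data layout).

    accounts -- customer -> {"balance": float, "limit": float, "blocked": bool}
    stock    -- item -> quantity on hand
    ledger   -- list that receives (order_id, customer, value) sales entries
    Returns (dispatch_notes, rejected_ids, held_ids).
    """
    dispatch_notes, rejected, held = [], [], []
    for order in orders:
        account = accounts.get(order.customer)
        # Reject on the state of the customer's account (assumed rules).
        if (account is None or account["blocked"]
                or account["balance"] + order.value > account["limit"]):
            rejected.append(order.order_id)
            continue
        # Hold over for a later run when there is not enough stock.
        if stock.get(order.item, 0) < order.qty:
            held.append(order.order_id)
            continue
        stock[order.item] -= order.qty               # update the stock data set
        account["balance"] += order.value            # update the customer account
        ledger.append((order.order_id, order.customer, order.value))  # sales ledger
        dispatch_notes.append(
            f"DISPATCH {order.order_id}: {order.qty} x {order.item} to {order.customer}"
        )
    return dispatch_notes, rejected, held
```

Held orders would simply be resubmitted in a later batch; nobody is waiting online for the outcome of any single order.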

Batch processing usually involves the following activities:

- Gathering source documents originated by business transactions, such as sales orders and invoices, into groups called batches.
- Recording transaction data on an input medium, such as magnetic disks or magnetic tapes.
- Sorting the transactions in a transaction file into the same sequence as the records in a sequential master file.
- Processing transaction data and creating an updated master file and a variety of documents (such as customer invoices or pay cheques) and reports.
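
Sorting the transactions into master-file sequence is what allows the last activity to be done in one forward pass over both files. A minimal Python sketch of that sequential master-file update follows; lists of `(key, amount)` tuples stand in for the sequential files, `update_master` is a hypothetical name, and reporting unmatched transactions as exceptions (rather than creating new master records) is an assumption.

```python
def update_master(master, transactions):
    """Sequentially update a master file with a transaction file.

    Both inputs are lists of (key, amount) tuples already sorted by key,
    standing in for sequential files. Each master record is read once and
    written once; all transactions with a matching key are applied to it.
    Returns (new_master, unmatched), where unmatched holds transactions
    whose key has no master record (reported here as exceptions).
    """
    new_master, unmatched = [], []
    t = 0
    for key, balance in master:
        # Transactions that sort before this master key have no master record.
        while t < len(transactions) and transactions[t][0] < key:
            unmatched.append(transactions[t])
            t += 1
        # Apply every transaction for the current master key.
        while t < len(transactions) and transactions[t][0] == key:
            balance += transactions[t][1]
            t += 1
        new_master.append((key, balance))
    unmatched.extend(transactions[t:])  # keys beyond the last master record
    return new_master, unmatched
```

Because neither file is ever rewound, the work grows linearly with the sizes of the two files, which is why the sort step matters.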

Types of Information Systems Requiring Batch Processing

Transaction Processing System (TPS): processing cycles can be on-demand, daily, weekly, monthly, bi-monthly, and quarterly; a TPS will very seldom require semi-annual or annual processing.

Management Information System (MIS): processing cycles can be on-demand, daily, weekly, bi-weekly, monthly, bi-monthly, quarterly, semi-annual, and annual.

Decision Support System (DSS): typically on demand, but other processing cycles may be used.

Executive Information System (EIS): processing cycles can be monthly, bi-monthly, quarterly, semi-annual, or annual. It is not unusual to use historical information from one, two, and up to five years in the past for planning and forecasting one, two, and up to five years into the future.

Batch Processing Strategies

To help analyze, design, and implement batch systems, the systems analyst and designer should be provided with a framework for building the batch system. The framework is a Standard Batch Architecture consisting of basic batch application building blocks and programming design patterns with system flowcharts.

Programmers should be provided with structure descriptions (process flows, use cases, and/or structure charts) and module code shells.

When starting to design a batch job, the business logic should be decomposed into a series of steps that can be implemented using the following batch processing building blocks.

Basic Components or Building Blocks

Conversion

For each type of file from an external system, a conversion component will need to be developed to convert the input records into the standard-format output records required by the next processing steps. This type of component can consist partly or entirely of conversion utilities.
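
A conversion component can be sketched as a function that maps one external record layout onto the standard format. In this minimal Python sketch both formats are hypothetical: the external file is assumed to be fixed-width (account number, `YYYYMMDD` date, amount in cents), and the standard format is assumed to be CSV with an ISO date and a two-decimal amount.

```python
import csv
import io
from decimal import Decimal

# Hypothetical fixed-width layout of one external system's file:
# (field name, start column, end column), columns counted from 0.
LAYOUT = [("account", 0, 8), ("date", 8, 16), ("amount_cents", 16, 27)]


def convert(lines):
    """Convert fixed-width input records into the standard CSV format
    (account, ISO date, amount with two decimals) assumed by later steps."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["account", "date", "amount"])
    for line in lines:
        fields = {name: line[start:end].strip() for name, start, end in LAYOUT}
        d = fields["date"]  # YYYYMMDD
        amount = Decimal(int(fields["amount_cents"])) / 100  # exact decimal arithmetic
        writer.writerow([fields["account"], f"{d[:4]}-{d[4:6]}-{d[6:8]}", f"{amount:.2f}"])
    return out.getvalue()
```

Keeping the layout in a table rather than in the code makes it easy to write one such converter per external file type, as the text suggests.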

Validation

Validation components ensure that all input and output records are correct and consistent. They use routines, often called "validation rules" or "check routines", that check the correctness, meaningfulness, and security of the data used by the system. The rules may be implemented through the automated facilities of a database or by including explicit validation logic.

Update

The update component performs processing on input transactions from an extract or a validation component.
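
The validation component described above can be sketched as a list of check routines applied to every record. In this minimal Python sketch the rule helpers `required` and `positive`, the `validate` function and the record layout are all hypothetical; records that pass every rule are the ones that would be handed to the update component, and rejects keep their error messages for reporting.

```python
from __future__ import annotations

from typing import Callable, Optional

# A check routine returns an error message, or None when the record passes.
Rule = Callable[[dict], Optional[str]]


def required(field: str) -> Rule:
    """Hypothetical rule: the field must be present and non-empty."""
    return lambda rec: None if rec.get(field) not in (None, "") else f"{field} is missing"


def positive(field: str) -> Rule:
    """Hypothetical rule: the field must be a number greater than zero."""
    def check(rec: dict) -> Optional[str]:
        value = rec.get(field)
        if isinstance(value, (int, float)) and value > 0:
            return None
        return f"{field} must be a positive number"
    return check


def validate(records: list, rules: list) -> tuple:
    """Split records into valid ones (to be passed to the update component)
    and rejects, each reject paired with the messages of the rules it failed."""
    valid, rejects = [], []
    for rec in records:
        errors = [msg for msg in (rule(rec) for rule in rules) if msg is not None]
        if errors:
            rejects.append((rec, errors))
        else:
            valid.append(rec)
    return valid, rejects
```

Running every rule, instead of stopping at the first failure, gives the error report the full list of problems for each rejected record.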

The update component involves reading a master/reference record to obtain the data required for processing the input record, and potentially updating the master/reference record.

Extract

An application component that reads a set of records from a database or input file, selects records based on predefined rules, and writes the records to an output file for transform, print, and/or load processing.

Update and Extract

The update and extract functions are combined in a single component.

Print

An application component that reads an input file, restructures data from each record according to a standard reporting format, and produces reports directly to a printer or to a spool file. If a spool file is used, a spool print utility is used to produce printouts.
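
The Print component described above can be sketched as a function that restructures each input record into a fixed-width report line and writes the finished report to a spool file. The report layout, the field names and `print_report` are hypothetical; any writable text file object can serve as the spool, and fields wider than their columns are not truncated in this sketch.

```python
def print_report(records, title, spool):
    """Hypothetical Print component: restructure each input record into a
    fixed-width report line and write the finished report to `spool`, any
    writable text file (for example a spool file read later by a print utility).
    Returns the number of detail lines written."""
    lines = [title, f"{'ACCOUNT':<10}{'NAME':<12}{'BALANCE':>10}"]
    total_cents = 0
    for rec in records:
        total_cents += rec["balance_cents"]
        lines.append(
            f"{rec['account']:<10}{rec['name']:<12}{rec['balance_cents'] / 100:>10.2f}"
        )
    lines.append(f"{'TOTAL':<22}{total_cents / 100:>10.2f}")
    spool.write("\n".join(lines) + "\n")
    return len(records)
```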

Extract, Transform, Load (ETL)

Extract, Transform, and Load (ETL) is a process in data warehousing that involves extracting data from outside sources, transforming it to fit business needs (which can include quality levels), and ultimately loading it into the end target, i.e. the data warehouse.
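
The three ETL steps can be sketched end to end in a few lines of Python. This is a minimal illustration only: the CSV text stands in for an outside source, an in-memory SQLite table named `customer_dim` (hypothetical) stands in for the data warehouse, and the two transformation rules are invented examples of business needs.

```python
import csv
import io
import sqlite3


def extract(source_text):
    """Extract: read records from an outside source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(source_text)))


def transform(records, rules):
    """Transform: apply each rule function, in order, to a copy of every record."""
    out = []
    for rec in records:
        rec = dict(rec)
        for rule in rules:
            rec = rule(rec)
        out.append(rec)
    return out


def load(conn: sqlite3.Connection, records):
    """Load: insert the transformed records into the target table."""
    with conn:
        conn.executemany(
            "INSERT INTO customer_dim (code, name, country) VALUES (:code, :name, :country)",
            records,
        )
    return len(records)


# Example rules (hypothetical business needs): tidy names, standardise country codes.
def strip_name(rec):
    rec["name"] = rec["name"].strip()
    return rec


def upper_country(rec):
    rec["country"] = rec["country"].upper()
    return rec
```

An empty rule list passes records through unchanged, which matches sources that need little or no manipulation.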

ETL is important, as it is the way data actually gets loaded into the data warehouse. ETL can also be used for integration with legacy systems. ETL implementations usually store an audit trail of positive and negative process runs.

Note: This manual will not expound concepts related to the ETL components, but some design patterns, structure descriptions, and code shells can be used to analyze, design, and code ETL functionality.

Transform

The transform component applies a series of rules or functions to the data in the Extract output file to derive the data to be used by the Load and/or Print components. Some data sources will require very little or even no manipulation of data. In other cases, one or more transformation routines may be required to meet the business and technical needs of the Load and Print components. Conversion, Validation, Print, and Load components are special types of Transform components.

Load

The Load component loads the data into the end target, usually the data warehouse (DW). Depending on the requirements of the organization, this process varies widely. Some data warehouses might overwrite existing information weekly with cumulative, updated data, while other DWs (or even other parts of the same DW) might add new data in detailed form, e.g. hourly. The timing and scope of replacing or appending are strategic design choices that depend on the time available and the business needs. More complex systems can maintain a history and audit trail of all changes to the data loaded in the DW.
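
The replace-or-append choice for the Load component can be sketched as a mode switch. In this minimal Python sketch, `load_sales`, the `sales_fact` table and its columns are hypothetical, and an in-memory SQLite database stands in for the warehouse. Wrapping the delete and the inserts in one transaction means a failed "replace" run leaves the previous data intact.

```python
import sqlite3


def load_sales(conn: sqlite3.Connection, rows: list, mode: str) -> None:
    """Load (day, store, amount) rows into a hypothetical sales_fact table.

    mode "replace": overwrite the table with a cumulative, updated data set.
    mode "append":  add new detailed rows to the data already loaded.
    """
    if mode not in ("replace", "append"):
        raise ValueError(f"unknown load mode: {mode!r}")
    with conn:  # the delete and the inserts commit together, or not at all
        if mode == "replace":
            conn.execute("DELETE FROM sales_fact")
        conn.executemany(
            "INSERT INTO sales_fact (day, store, amount) VALUES (?, ?, ?)", rows
        )
```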

As the load phase interacts with a database, the constraints defined in the database schema as well as in triggers activated upon data load apply (e.g. uniqueness, referential integrity, mandatory fields), which also contribute to the overall data quality performance of the Extract-Transform-Load process.
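
How schema constraints take part in the load can be sketched with SQLite, which reports violations as `sqlite3.IntegrityError`. The `region` and `customer` tables and `load_with_constraints` are hypothetical; the sketch loads rows one at a time so that a rejected row does not undo the others, and it records each rejection with the database's message as a simple audit trail. Triggers are not shown.

```python
import sqlite3

# Hypothetical target schema showing the kinds of constraints named above.
SCHEMA = """
CREATE TABLE region (id INTEGER PRIMARY KEY);
CREATE TABLE customer (
    id        INTEGER PRIMARY KEY,                    -- uniqueness
    name      TEXT NOT NULL,                          -- mandatory field
    region_id INTEGER NOT NULL REFERENCES region(id)  -- referential integrity
);
"""


def open_target() -> sqlite3.Connection:
    """Create an in-memory target database with the schema above."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    return conn


def load_with_constraints(conn: sqlite3.Connection, rows: list) -> dict:
    """Insert (id, name, region_id) rows one at a time. Rows rejected by a
    schema constraint are recorded with the database's error message,
    giving a simple audit trail of positive and negative outcomes."""
    audit = {"loaded": 0, "rejected": []}
    for row in rows:
        try:
            with conn:  # each row in its own transaction
                conn.execute(
                    "INSERT INTO customer (id, name, region_id) VALUES (?, ?, ?)", row
                )
            audit["loaded"] += 1
        except sqlite3.IntegrityError as exc:
            audit["rejected"].append((row, str(exc)))
    return audit
```

Row-at-a-time loading is slower than a single bulk insert; it is chosen here only so that each constraint violation can be attributed to one row.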

Benefits

Batch processing has these benefits:

- It allows sharing of computer resources among many users and programs.
- It shifts the time of job processing to when the computing resources are less busy.
- It avoids idling the computing resources with minute-by-minute manual intervention and supervision.
- By keeping a high overall rate of utilization, it better amortizes the cost of a computer, especially an expensive one.
- It can increase overall throughput.

Advantages and Disadvantages

Batch processing is economical when a large volume of data must be processed. It is suitable for applications such as payroll and the preparation of client, patient, or customer bills. However, it requires sorting, reduces timeliness in some cases, and requires sequential file organization. Since master files are updated only periodically, there is a potential for master files to become quite out of date if transactions are not processed frequently.

[Figure: Batch processing in a finance department. Labels: master files (magnetic tape) holding existing finance records as they were the previous month; new input (unsorted punch cards): new savings, automatic deductions, pay increases; central processing unit, which incorporates the new input as a group; updated master file.]

The figure shows batch processing in a finance department. The computer periodically reads the master file and makes changes reflecting new transactions; old master files are updated as a group.

Potrebbero piacerti anche

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- PDFDocumento10 paginePDFerbariumNessuna valutazione finora

- Hans Belting - The End of The History of Art (1982)Documento126 pagineHans Belting - The End of The History of Art (1982)Ross Wolfe100% (7)

- Book 1518450482Documento14 pagineBook 1518450482rajer13Nessuna valutazione finora

- Jackson V AEGLive - May 10 Transcripts, of Karen Faye-Michael Jackson - Make-up/HairDocumento65 pagineJackson V AEGLive - May 10 Transcripts, of Karen Faye-Michael Jackson - Make-up/HairTeamMichael100% (2)

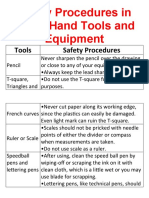

- Safety Procedures in Using Hand Tools and EquipmentDocumento12 pagineSafety Procedures in Using Hand Tools and EquipmentJan IcejimenezNessuna valutazione finora

- Kyle Pape - Between Queer Theory and Native Studies, A Potential For CollaborationDocumento16 pagineKyle Pape - Between Queer Theory and Native Studies, A Potential For CollaborationRafael Alarcón Vidal100% (1)

- Friction: Ultiple Hoice UestionsDocumento5 pagineFriction: Ultiple Hoice Uestionspk2varmaNessuna valutazione finora

- Drive LinesDocumento30 pagineDrive LinesRITESH ROHILLANessuna valutazione finora

- DBMS Lab ManualDocumento57 pagineDBMS Lab ManualNarendh SubramanianNessuna valutazione finora

- FuzzingBluetooth Paul ShenDocumento8 pagineFuzzingBluetooth Paul Shen许昆Nessuna valutazione finora

- Fundamentals of Public Health ManagementDocumento3 pagineFundamentals of Public Health ManagementHPMA globalNessuna valutazione finora

- (Jones) GoodwinDocumento164 pagine(Jones) Goodwinmount2011Nessuna valutazione finora

- Julia Dito ResumeDocumento3 pagineJulia Dito Resumeapi-253713289Nessuna valutazione finora

- Health Post - Exploring The Intersection of Work and Well-Being - A Guide To Occupational Health PsychologyDocumento3 pagineHealth Post - Exploring The Intersection of Work and Well-Being - A Guide To Occupational Health PsychologyihealthmailboxNessuna valutazione finora

- Government College of Nursing Jodhpur: Practice Teaching On-Probability Sampling TechniqueDocumento11 pagineGovernment College of Nursing Jodhpur: Practice Teaching On-Probability Sampling TechniquepriyankaNessuna valutazione finora

- Teaching Profession - Educational PhilosophyDocumento23 pagineTeaching Profession - Educational PhilosophyRon louise PereyraNessuna valutazione finora

- Ccoli: Bra Ica Ol A LDocumento3 pagineCcoli: Bra Ica Ol A LsychaitanyaNessuna valutazione finora

- Chapter 13 (Automatic Transmission)Documento26 pagineChapter 13 (Automatic Transmission)ZIBA KHADIBINessuna valutazione finora

- Cooperative Learning: Complied By: ANGELICA T. ORDINEZADocumento16 pagineCooperative Learning: Complied By: ANGELICA T. ORDINEZAAlexis Kaye GullaNessuna valutazione finora

- Design of Combinational Circuit For Code ConversionDocumento5 pagineDesign of Combinational Circuit For Code ConversionMani BharathiNessuna valutazione finora

- Hướng Dẫn Chấm: Ngày thi: 27 tháng 7 năm 2019 Thời gian làm bài: 180 phút (không kể thời gian giao đề) HDC gồm có 4 trangDocumento4 pagineHướng Dẫn Chấm: Ngày thi: 27 tháng 7 năm 2019 Thời gian làm bài: 180 phút (không kể thời gian giao đề) HDC gồm có 4 trangHưng Quân VõNessuna valutazione finora

- LP For EarthquakeDocumento6 pagineLP For Earthquakejelena jorgeoNessuna valutazione finora

- Topic 3Documento21 pagineTopic 3Ivan SimonNessuna valutazione finora

- Pidsdps 2106Documento174 paginePidsdps 2106Steven Claude TanangunanNessuna valutazione finora

- QuexBook TutorialDocumento14 pagineQuexBook TutorialJeffrey FarillasNessuna valutazione finora

- Z-Purlins: Technical DocumentationDocumento11 pagineZ-Purlins: Technical Documentationardit bedhiaNessuna valutazione finora

- How To Block HTTP DDoS Attack With Cisco ASA FirewallDocumento4 pagineHow To Block HTTP DDoS Attack With Cisco ASA Firewallabdel taibNessuna valutazione finora

- Siemens Make Motor Manual PDFDocumento10 pagineSiemens Make Motor Manual PDFArindam SamantaNessuna valutazione finora

- Fast Track Design and Construction of Bridges in IndiaDocumento10 pagineFast Track Design and Construction of Bridges in IndiaSa ReddiNessuna valutazione finora

- Toeic: Check Your English Vocabulary ForDocumento41 pagineToeic: Check Your English Vocabulary ForEva Ibáñez RamosNessuna valutazione finora