
Netapp Tips

Uploaded by jnvu3902


http://webcache.googleusercontent.com/search?q=cache:TC-gUgUS4XcJ:datastoragesolutions.us/migration-using-snapmirror/+snapmirror+old+new+vol&cd=3&hl=en&ct=clnk&gl=us&client=firefox-a&source=www.google.com

Migration using snapmirror

On , in Uncategorized, by StorageSolutions

Volume migration using SnapMirror

Ontap SnapMirror is designed to be a simple, reliable and cheap tool to facilitate disaster recovery for business-critical applications. It ships with Ontap by default, but it must be licensed before it can be used. Apart from DR, SnapMirror is extremely useful in situations like:

1. Aggregates or volumes have reached their maximum size limit.
2. A volume needs to change disk type (tiering).

I ran into exactly this kind of situation last week. My filer runs Ontap 7.2.3 (which is due for an upgrade). The maximum aggregate size on this version of Ontap is 12TB usable. A couple of the volumes hosted on aggregate aggr1 were quickly running out of space because nightly database dumps were chewing it up. So far I had survived by oversubscribing the volumes. Although I have spare disks in the filer, I cannot expand the aggregate because it has reached its size limit.

The plan is to create a new aggregate (aggr_new) and migrate these volumes onto it. I used SnapMirror for this and it worked like a charm. Listed below is what one needs to do.

Prep work

Build a new aggregate from free disks

1. List the spares in the system

# vol status -s

Spare disks

RAID Disk  Device  HA  SHELF  BAY  CHAN  Pool  Type  RPM    Used (MB/blks)    Phys (MB/blks)
---------  ------  --  -----  ---  ----  ----  ----  -----  ----------------  -----------------
Spare disks for block or zoned checksum traditional volumes or aggregates
spare      7a.18   7a  1      2    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.19   7a  1      3    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.20   7a  1      4    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.21   7a  1      5    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.22   7a  1      6    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.23   7a  1      7    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.24   7a  1      8    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.25   7a  1      9    FC:B        FCAL  10000  372000/761856000  560879/1148681096
spare      7a.26   7a  1      10   FC:B        FCAL  10000  372000/761856000  560879/1148681096

2. Create new aggregate
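If you have many spares, one way to turn the spare listing above into the disk list for this step is a small parser. The sketch below is hypothetical: the helper names spare_disks and aggr_create_command are mine, the sample rows are copied from the listing, and it assumes the 7-mode form of the create command, which takes an explicit disk list after -d. It only builds the command string; nothing is sent to the filer.

```python
def spare_disks(status_output: str, disk_type: str = "FCAL", rpm: str = "10000") -> list[str]:
    """Return the device names of spare disks of the given type and RPM.

    Reads rows shaped like the `vol status -s` listing, e.g.
    "spare 7a.18 7a 1 2 FC:B FCAL 10000 372000/761856000 560879/1148681096".
    Type and RPM are read from the end of the row (Type, RPM, Used, Phys),
    so an optional Pool value in the middle does not shift them.
    """
    disks = []
    for line in status_output.splitlines():
        fields = line.split()
        # Spare rows start with the literal "spare"; header lines and the
        # "Spare disks ..." captions (capital S) are skipped.
        if len(fields) < 6 or fields[0] != "spare":
            continue
        if fields[-4] == disk_type and fields[-3] == rpm:
            disks.append(fields[1])
    return disks


def aggr_create_command(aggr: str, disks: list[str]) -> str:
    """Build a 7-mode style `aggr create <name> -d <disk> ...` command line."""
    if not disks:
        raise ValueError("no disks selected")
    return f"aggr create {aggr} -d " + " ".join(disks)


# Three rows copied from the spare listing above.
sample = """\
Spare disks for block or zoned checksum traditional volumes or aggregates
spare 7a.18 7a 1 2 FC:B FCAL 10000 372000/761856000 560879/1148681096
spare 7a.19 7a 1 3 FC:B FCAL 10000 372000/761856000 560879/1148681096
spare 7a.20 7a 1 4 FC:B FCAL 10000 372000/761856000 560879/1148681096
"""
print(aggr_create_command("aggr_new", spare_disks(sample)))
# aggr create aggr_new -d 7a.18 7a.19 7a.20
```

The helper does not check RAID group sizing, so the disk count should still be compared against the complete-RAID-group advice in this step before the command is run.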

Create the aggregate from the new disks. Make sure you use enough disks to build complete RAID groups. Otherwise, when you later add disks to the aggregate, all new writes go to the newly added disks until they fill up to the level of the other disks in the RAID group. This creates a disk bottleneck in the filer, because all writes are then handled by a limited number of spindles.

# aggr create aggr_new -d 7a.18 7a.19 7a.20 7a.21 7a.22 7a.23 7a.24 7a.25 7a.26 7a.27

3. Verify the aggregate is online

# aggr status aggr_new

4. Create a new volume named vol_new, 1500g in size, on aggr_new

# vol create vol_new aggr_new 1500g

5. Verify the volume is online

# vol status vol_new

6. Set up SnapMirror between the old and new volumes

First, restrict the destination volume:

# vol restrict vol_new

a. Start the baseline transfer:

# snapmirror initialize -S filername:volname filername:vol_new

b. Also make an entry in the /etc/snapmirror.conf file for this SnapMirror session:

filername:/vol/volume filername:/vol/vol_new kbs=1000 0 0-23 * *

Note: kbs=1000 throttles SnapMirror transfers to 1000 KB per second. The schedule fields "0 0-23 * *" (minute, hour, day of month, day of week) run an update at the top of every hour.

On the day of cut-over

Update the SnapMirror session:

# snapmirror update vol_new
Transfer started.
Monitor progress with snapmirror status or the snapmirror log.
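To get a feel for what the kbs=1000 throttle in the snapmirror.conf entry means for an update like this, here is a minimal sketch that splits such an entry into its fields and estimates a lower bound on transfer time. The function names are hypothetical, the field layout (source, destination, arguments, then minute hour day-of-month day-of-week) is the one used by the entry in step 6, and the estimate assumes 1 KB = 1024 bytes and a link that always runs at the throttle.

```python
def parse_snapmirror_conf_line(line: str) -> dict:
    """Split a 7-mode /etc/snapmirror.conf entry into its parts.

    Assumed layout: source destination arguments minute hour day-of-month day-of-week
    """
    fields = line.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    source, destination, args, minute, hour, dom, dow = fields
    options = {}
    # A lone "-" means no arguments; otherwise arguments are
    # comma-separated key=value pairs such as kbs=1000.
    if args != "-":
        for item in args.split(","):
            key, _, value = item.partition("=")
            options[key] = value
    return {
        "source": source,
        "destination": destination,
        "options": options,
        "schedule": {"minute": minute, "hour": hour, "day_of_month": dom, "day_of_week": dow},
    }


def transfer_hours(data_gib: float, kbs: int) -> float:
    """Lower bound, in hours, to move data_gib GiB when throttled to kbs KB/s."""
    kib = data_gib * 1024 * 1024  # assumes 1 KB = 1024 bytes
    return kib / kbs / 3600


entry = parse_snapmirror_conf_line("filername:/vol/volume filername:/vol/vol_new kbs=1000 0 0-23 * *")
kbs = int(entry["options"]["kbs"])
print(round(transfer_hours(100, kbs), 1))  # 29.1
```

By this estimate, every 100 GiB of changed data (for example, a large nightly database dump) needs roughly 29 hours to replicate at kbs=1000, so hourly updates could fall well behind. The throttle is probably worth sizing against the real change rate on the source volume.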

# snapmirror status vol_new
Snapmirror is on.
Source                 Destination        State         Lag       Status
filername:volume_name  filername:vol_new  Snapmirrored  00:00:38  Idle

Quiesce the relationship on the destination. This lets any in-progress transfer finish and then halts further updates from the SnapMirror source to the destination.

# snapmirror quiesce vol_new
snapmirror quiesce: in progress

This can be a long-running operation. Use Control C (^C) to interrupt.
snapmirror quiesce: dbdump_pb : Successfully quiesced

Break the relationship. This makes the destination volume writable.

# snapmirror break vol_new
snapmirror break: Destination vol_new is now writable.
Volume size is being retained for potential snapmirror resync. If you would like to grow the volume and do not expect to resync, set vol option fs_size_fixed to off

Enable quotas: quota on volname

Rename volumes

Once the SnapMirror session is terminated, rename the volumes:

# vol rename volume_name volume_name_temp
# vol rename vol_new volume_name

Remember, the shares move with the volume name: if the volume hosting a share is renamed, the share path is updated to match. After these renames, the existing shares therefore point at volume_name_temp, so each old share has to be deleted and recreated with the correct volume name. The file cifsconfig_share.cfg under etc$ lists the commands that were run to create the shares; use it as a reference:

cifs shares -add "test_share$" "/vol/volume_name" -comment "Admin Share Server Admins"
cifs access "test_share$" S-1-5-32-544 "Full Control"

Add -f at the end of the cifs shares -add line to suppress the y/n prompt.

Start quotas on the new volume:

# quota on volume_name

Voila! You are done. The shares and qtrees now refer to the new volume on the new aggregate. Test the shares by mapping them from a Windows host.
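With many shares, the delete-and-recreate step can be scripted from cifsconfig_share.cfg. The sketch below is hypothetical: recreate_share_commands is my own helper, and it assumes the file holds one command per line in the quoted form shown earlier (cifs shares -add <share> <path> ... and cifs access <share> <user> <rights>), and that after the renames the shares point at volume_name_temp. It only prints commands for review; it does not talk to the filer.

```python
import shlex


def _fmt(tokens: list[str]) -> str:
    # Double-quote tokens that contain spaces (e.g. "Full Control"); leave the rest bare.
    return " ".join(f'"{t}"' if (" " in t or not t) else t for t in tokens)


def recreate_share_commands(cfg_lines: list[str], old_vol: str, new_vol: str) -> list[str]:
    """Return delete/re-add commands for shares whose path lies under /vol/<old_vol>.

    Each matching "cifs shares -add" line is re-emitted with its path moved to
    /vol/<new_vol> and "-f" appended. The "cifs access" lines for those shares
    are repeated afterwards, because the ACL belongs to the share being deleted.
    """
    old_prefix, new_prefix = f"/vol/{old_vol}", f"/vol/{new_vol}"
    parsed = [shlex.split(line) for line in cfg_lines]
    commands, moved = [], set()
    for tokens in parsed:
        if tokens[:3] != ["cifs", "shares", "-add"] or len(tokens) < 5:
            continue
        share, path = tokens[3], tokens[4]
        # Match the volume root or a path below it, but not e.g. /vol/<old_vol>2.
        if path != old_prefix and not path.startswith(old_prefix + "/"):
            continue
        new_tokens = tokens[:4] + [new_prefix + path[len(old_prefix):]] + tokens[5:]
        if "-f" not in new_tokens:
            new_tokens.append("-f")  # suppress the y/n prompt
        commands.append(_fmt(["cifs", "shares", "-delete", share]))
        commands.append(_fmt(new_tokens))
        moved.add(share)
    for tokens in parsed:
        if tokens[:2] == ["cifs", "access"] and len(tokens) > 2 and tokens[2] in moved:
            commands.append(_fmt(tokens))
    return commands


cfg = [
    'cifs shares -add "test_share$" "/vol/volume_name_temp" -comment "Admin Share Server Admins"',
    'cifs access "test_share$" S-1-5-32-544 "Full Control"',
]
for cmd in recreate_share_commands(cfg, "volume_name_temp", "volume_name"):
    print(cmd)
# cifs shares -delete test_share$
# cifs shares -add test_share$ /vol/volume_name -comment "Admin Share Server Admins" -f
# cifs access test_share$ S-1-5-32-544 "Full Control"
```

Printing the commands instead of running them keeps a human in the loop, which seems prudent for a step that briefly removes shares users depend on.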

Potrebbero piacerti anche

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (400)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (345)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (74)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- Pcan-Usb Userman EngDocumento29 paginePcan-Usb Userman EngantidoteunrealNessuna valutazione finora

- Javascript CourseworkDocumento6 pagineJavascript Courseworkafjzcgeoylbkku100% (2)

- House Guide TemplateDocumento8 pagineHouse Guide TemplatewandolyNessuna valutazione finora

- Image2CAD AdityaIntwala CVIP2019Documento11 pagineImage2CAD AdityaIntwala CVIP2019christopher dzuwaNessuna valutazione finora

- Installation Procedure For Version 4.2.12.00 - Release 4.2Documento54 pagineInstallation Procedure For Version 4.2.12.00 - Release 4.2Talhaoui ZakariaNessuna valutazione finora

- Cosc - 1436 - Fall 16 - Dalia - Gumeel-CDocumento6 pagineCosc - 1436 - Fall 16 - Dalia - Gumeel-CBenNessuna valutazione finora

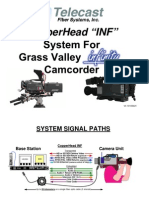

- CopperHead For Infinity Tech Manual V4Documento28 pagineCopperHead For Infinity Tech Manual V4TelejuanNessuna valutazione finora

- Do Teenagers Really Need A Mobile PhoneDocumento2 pagineDo Teenagers Really Need A Mobile Phonetasya azzahraNessuna valutazione finora

- MAC Service ManualDocumento51 pagineMAC Service ManualMirwansyah Tanjung100% (2)

- 23 Construction Schedule Templates in Word & ExcelDocumento7 pagine23 Construction Schedule Templates in Word & ExcelnebiyuNessuna valutazione finora

- Mettler Toledo LinxsDocumento36 pagineMettler Toledo LinxsSandro MunizNessuna valutazione finora

- FM Master Air Control NewDocumento4 pagineFM Master Air Control Newajay singhNessuna valutazione finora

- TK371 Plano ElectricoDocumento2 pagineTK371 Plano ElectricoCarlos IrabedraNessuna valutazione finora

- H1 Bent-Axis Motor: Customer / ApplicationDocumento1 paginaH1 Bent-Axis Motor: Customer / ApplicationRodrigues de OliveiraNessuna valutazione finora

- Flair SeriesDocumento2 pagineFlair SeriesSse ikolaha SubstationNessuna valutazione finora

- ReadmeDocumento26 pagineReadmepaquito sempaiNessuna valutazione finora

- Tnms SNMP Nbi - Operation GuideDocumento90 pagineTnms SNMP Nbi - Operation GuideAdrian FlorensaNessuna valutazione finora

- Chapter 5Documento21 pagineChapter 5Yoomif TubeNessuna valutazione finora

- Priti Kadam Dte ProjectDocumento15 paginePriti Kadam Dte ProjectAk MarathiNessuna valutazione finora

- Katalog MCCB Terasaki S160-SCJ Data SheetDocumento3 pagineKatalog MCCB Terasaki S160-SCJ Data SheetanitaNessuna valutazione finora

- CS-AI 2ND YEAR Final SyllabusDocumento40 pagineCS-AI 2ND YEAR Final Syllabusafgha bNessuna valutazione finora

- Erba LisaScan II User ManualDocumento21 pagineErba LisaScan II User ManualEricj Rodríguez67% (3)

- RMC-90 & RMC-37 Stock Till-18.10.2023Documento3 pagineRMC-90 & RMC-37 Stock Till-18.10.2023Balram kumarNessuna valutazione finora

- LO 2. Use FOS Tools, Equipment, and ParaphernaliaDocumento5 pagineLO 2. Use FOS Tools, Equipment, and ParaphernaliaReymond SumayloNessuna valutazione finora

- Starting and Stopping Procdure of GeneratorDocumento3 pagineStarting and Stopping Procdure of GeneratorSumit SinhaNessuna valutazione finora

- Byjusbusinesscanvasmodel 190201155441Documento5 pagineByjusbusinesscanvasmodel 190201155441Aarsh SoniNessuna valutazione finora

- Vin PlusDocumento20 pagineVin PlusNamrata ShettiNessuna valutazione finora

- CRM in Russia and U.S. - Case Study From American Financial Service IndustryDocumento40 pagineCRM in Russia and U.S. - Case Study From American Financial Service IndustryebabjiNessuna valutazione finora

- PDS S-Series Is Electronic MarshallingDocumento31 paginePDS S-Series Is Electronic Marshallingesakkiraj1590Nessuna valutazione finora

- Low FE & Pick Up Check SheetDocumento2 pagineLow FE & Pick Up Check SheetSandeep SBNessuna valutazione finora