Instrument Calibration Procedure

Internal and External Dial, Vernier and Digital Calipers and Outside Micrometers

GPC CAL001    DATE 10/07    REV. 0

Quality Assurance Manager Approved Date:

SECTION 1 INTRODUCTION AND DESCRIPTION

1.1 This procedure describes the calibration of internal and external dial, vernier and digital calipers and of outside micrometers. The instrument being calibrated is referred to herein as the TI (Test Instrument).

1.2 This procedure includes tests of essential performance parameters only. Any malfunction noticed during calibration, whether specifically tested for or not, should be corrected.

Table 1 Calibration Description

| TI | TI Characteristics | Performance Specification | Test Method |
|---|---|---|---|
| Dial, Vernier and Digital Calipers | Zero indication test | Test point: 0 in.; Tolerance: ±.0005 in. | Determined by sliding the jaws together and reading the TI indication. |
| | Outside accuracy | Range: 0 to 12 in.; Tolerance: see Table II | Comparison to gage blocks placed between the TI jaws. |
| | Inside accuracy | Range: 0 to 12 in.; Tolerance: see Table II | Comparison to gage block dimensions, placing the blocks with attached caliper jaws outside the TI jaws. |
| | Depth gage accuracy | Range: 0 to 12 in. | Comparison to gage block dimensions, placing the TI depth gage on the gage block and reading the TI outside dimension scale. |
| Outside Micrometers | Length and linearity | Range: 0 to 12 in. | Measured by comparing TI indications to gage block dimensions set up to test the basic length and micrometer head linearity. |

SECTION 2 EQUIPMENT REQUIREMENTS

Table 2 Equipment Requirements

| Item | Minimum Use Specifications | Calibration Equipment |
|---|---|---|
| 2.1 Gage block set | Range: .050 to 4 in.; Tolerance: .00005 in. | ESSM #SE0060 |
| 2.2 Low-power magnifier | To aid in reading the TI vernier or barrel scale | |
| 2.3 Micrometer wrench | Adjustment of micrometer | |
| 2.4 Small jeweler's screwdriver | Adjustment of caliper | |
| 2.5 Small clamp | Hold gage blocks for inside measurement comparison | |
| 2.6 Light machine oil or spray | Lubrication of slides or thimble | |
| 2.7 Clean flat surface | Surface plate or steel block | |

SECTION 3 PRELIMINARY OPERATIONS

3.1 Ensure that the work area is clean, well illuminated, free from excessive drafts and excessive humidity, and that the rate of temperature change does not exceed 4 °F per hour.

3.2 Ensure that the gage block set (item 2.1) is clean and that the TI and the gage blocks have been allowed to stabilize at the ambient temperature for a minimum of 2 hours.

3.3 TI INSPECTION

CALIPERS

3.4 Ensure that the TI jaws slide smoothly and freely along the full length of the TI beam; if not, take corrective action.

3.5 Inspect the jaws to ensure that they are free of nicks and burrs, and that the TI is clean and free from damage that would impair its operation.

NOTE
TI test points are normally determined by selecting 4 test points within the first inch of range and 4 to 8 additional test points extending over the remainder of the TI range at approximately equal spacing.

3.6 To minimize the number of gage blocks needed to test calipers with higher ranges, select test points at 25, 50, 75 and 100% of the TI range beyond the first inch, rounded to the nearest inch. Use major vernier graduations or normal electronic digital values as test points, as necessary.
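As a rough illustration of 3.5 and 3.6, the hypothetical Python helper below lists caliper test points: the four first-inch points given in step 4.2.1 plus the 25, 50, 75 and 100% points. It assumes the percentages apply to the full TI range and that a half inch rounds up; the procedure does not spell out either detail, so treat this as a sketch to be checked against local practice.

```python
import math

# First-inch test points from step 4.2.1 (inches).
FIRST_INCH_POINTS = [0.125, 0.300, 0.650, 1.000]

def caliper_test_points(range_in: float) -> list[float]:
    """Test points for a 0-to-range_in caliper (sketch of 3.5/3.6).

    ASSUMPTION: "25, 50, 75 and 100% of the TI range" is read as fractions
    of the full range, rounded half-up to the nearest inch. Points that land
    inside the first inch duplicate or precede 1.000 in. and are dropped.
    """
    points = set(FIRST_INCH_POINTS)
    for pct in (0.25, 0.50, 0.75, 1.00):
        inch = math.floor(pct * range_in + 0.5)  # round half up
        if inch > 1:
            points.add(float(inch))
    return sorted(points)
```

For a 0-6 in. caliper this gives .125, .300, .650 and 1.000 in. followed by 2, 3, 5 and 6 in., so every point beyond the first inch needs only whole-inch blocks.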

OUTSIDE MICROMETERS

3.7 Slowly rotate the TI micrometer thimble and ensure that it operates smoothly over its entire range.

3.8 The TI length measurement test should be performed at approximately 6 points across the range of the TI. For example, a 0 to 1 in. micrometer may have calculated test points at .000, .200, .400, .600, .800 and 1.000 in.

SECTION 4 CALIBRATION PROCESS CALIPERS

NOTES
Unless otherwise specified, verify the results of each test and, whenever the test requirement is not met, take corrective action before proceeding.
Cotton gloves should be worn when handling gage blocks to prevent the transfer of body heat and to protect the gage surfaces.

4.1 ZERO TEST

4.1.1 Slide the TI jaws together, ensuring that no light is visible between the jaw measuring surfaces.

4.1.2 If the TI has inside measurement capability, verify that the dial indicator or vernier indicates zero, as necessary. Adjust the dial bezel, if necessary, and tighten the TI sliding jaw set screw, if applicable.

4.1.3 If the TI has a digital readout, press the zero-set button and verify that the digital indication reads 0.000.

4.1.4 If the TI is a vernier type, verify that the TI zero marks are aligned, as applicable.

4.2 OUTSIDE ACCURACY TEST

4.2.1 Determine the gage blocks required to obtain test points at a minimum of 4 points throughout the first inch of the TI, as follows: .125, .300, .650 and 1.000 in.
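Steps 4.2.1, 4.3.1 and 5.1 leave the choice of gage blocks to the technician. A common shop rule is to clear the rightmost decimal digit first. The sketch below applies that rule under an assumption the procedure does not state: a standard 81-piece inch set (.1001 to .1009, .101 to .149, .050 to .950 and 1 to 4 in. blocks), which is consistent with, but not confirmed by, the ".050 to 4 in." range of item 2.1. The function name `build_stack` is hypothetical, and the heuristic does not guarantee the fewest possible blocks.

```python
from decimal import Decimal

# ASSUMPTION: a standard 81-piece inch gage block set. The procedure only
# gives "Range .050-4, Tolerance .00005" for item 2.1.
# Sizes are integers in units of .0001 in. to avoid rounding error.
UNIT = Decimal("0.0001")
SET_81 = (
    set(range(1001, 1010))          # .1001 to .1009 in., steps of .0001
    | set(range(1010, 1500, 10))    # .101 to .149 in., steps of .001
    | set(range(500, 10000, 500))   # .050 to .950 in., steps of .050
    | {10000, 20000, 30000, 40000}  # 1, 2, 3 and 4 in.
)

def build_stack(target_in: str) -> list[Decimal]:
    """Return block sizes (in.) that wring together to target_in.

    Heuristic: clear the rightmost digit first. Not guaranteed to give
    the fewest blocks; raises ValueError if the set cannot make the size.
    """
    remaining = Decimal(target_in) / UNIT
    if remaining <= 0 or remaining != remaining.to_integral_value():
        raise ValueError(f"{target_in} is not a positive multiple of .0001 in.")
    remaining = int(remaining)
    stack: list[int] = []

    def take(block: int) -> None:
        nonlocal remaining
        if block not in SET_81 or block > remaining:
            raise ValueError(f"cannot build {target_in} in. from this set")
        stack.append(block)
        remaining -= block

    if remaining % 10:            # ten-thousandths digit -> .100X block
        take(1000 + remaining % 10)
    if remaining % 500:           # leave a multiple of .050 -> .1YZ block
        take(1000 + remaining % 500)
    if remaining % 10000:         # fractional inch -> .050 to .950 block
        take(remaining % 10000)
    for block in (40000, 30000, 20000, 10000):  # whole inches, largest first
        if block <= remaining:
            take(block)
    if remaining:
        raise ValueError(f"cannot build {target_in} in. from this set")
    return [Decimal(b) * UNIT for b in stack]
```

With this assumed set, each first-inch point of step 4.2.1 needs a single block, while a size such as 7.2345 in. is built from .1005, .134, 4 and 3 in. blocks. Sizes are passed as strings so that values like "0.300" are not distorted by binary floating point.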

4.2.2 Open the TI beyond the first test point. Insert the gage blocks and close the jaws until they are firmly in contact with the gage blocks. Repeat each reading 3 to 5 times and verify that each indication does not exceed ± the least dial graduation.

4.2.3 Verify that the first-inch test points are within ±.001 in. for 0-6 in. calipers and ±.002 in. for 0-12 in. calipers.

4.2.4 Repeat steps 4.2.2 and 4.2.3 for the remaining test points at 25%, 50%, 75% and full range (100%).

4.3 INSIDE ACCURACY TEST

4.3.1 Determine the gage blocks required to obtain test points at a minimum of 4 points throughout the first inch of the TI, as follows: .125, .300, .650 and 1.000 in.

4.3.2 Use a three-gage-block setup: place the test gage block between two other blocks and secure them with a thumbscrew-type clamp (Figure 1). Open the TI to approximately the first test point. Insert the TI jaws and open them until they are firmly in contact with the inside faces of the gage blocks. Repeat each reading 3 to 5 times and verify that each indication does not exceed ± the least dial graduation.

4.3.3 Verify that the first-inch test points are within ±.001 in. for 0-6 in. calipers and ±.002 in. for 0-12 in. calipers.

4.3.4 Repeat steps 4.3.2 and 4.3.3 for the remaining test points at 25%, 50%, 75% and full range (100%).
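The pass/fail rules of the caliper tests can be expressed as a small check. The sketch below is illustrative only: it takes the ±.0005 in. zero tolerance from Table 1 and the ±.001/±.002 in. accuracy tolerances from steps 4.2.3, 4.3.3 and 4.4.4, but it assumes two things the procedure leaves open: calipers longer than 6 in. (up to 12 in.) use the 0-12 in. tolerance, and "each indication does not exceed ± the least dial graduation" means every repeated reading lies within one least graduation of the average reading. The function names are hypothetical.

```python
from statistics import mean

# Deviations are rounded to this many decimal places before comparison so
# that binary floating-point noise (e.g. 0.651 - 0.650) cannot flip a result.
PLACES = 5
ZERO_TOLERANCE = 0.0005  # Table 1, zero indication test (in.)

def caliper_tolerance(range_in: float) -> float:
    """Accuracy tolerance (in.) used in steps 4.2.3, 4.3.3 and 4.4.4."""
    if range_in <= 6:
        return 0.001
    # ASSUMPTION: any caliper over 6 in., up to 12 in., uses the 0-12 value.
    if range_in <= 12:
        return 0.002
    raise ValueError("procedure covers calipers up to 12 in. only")

def check_zero(reading: float) -> bool:
    """Step 4.1: closed-jaw indication must be within the zero tolerance."""
    return round(abs(reading), PLACES) <= ZERO_TOLERANCE

def check_point(nominal: float, readings: list[float],
                range_in: float, least_grad: float) -> dict:
    """Evaluate the 3 to 5 repeated readings taken at one gage-block size."""
    if not 3 <= len(readings) <= 5:
        raise ValueError("take 3 to 5 readings per test point")
    avg = mean(readings)
    # ASSUMPTION: repeatable = every reading within one least graduation
    # of the average reading.
    repeatable = all(round(abs(r - avg), PLACES) <= least_grad for r in readings)
    # Worst-case deviation of any single reading from the gage-block size.
    error = max(round(abs(r - nominal), PLACES) for r in readings)
    tolerance = caliper_tolerance(range_in)
    return {
        "error": error,
        "tolerance": tolerance,
        "repeatable": repeatable,
        "pass": repeatable and error <= tolerance,
    }
```

Using the worst single reading, rather than the average, is the conservative choice here; a lab that evaluates the mean reading instead would change only the `error` line.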

Figure 1 Inside Measurement Set-up: the test-point gage block is held between two outer gage blocks by a clamp.

4.4 DEPTH ACCURACY

4.4.1 Position the end of the TI beam against a 1.0 in. gage block surface, with the end of the depth rod against the surface plate or steel gage block.

4.4.2 Ensure that the TI measuring surfaces are placed squarely against the surface of the gage block and the surface plate.

4.4.3 Make any necessary final adjustments and note the scale indication.

4.4.4 Verify that the value(s) noted in the preceding step are within ±.001 in. for 0-6 in. calipers and ±.002 in. for 0-12 in. calipers.

4.4.5 Perform steps 4.4.1 through 4.4.4 for each additional inch of depth gage range, changing gage blocks as necessary.

SECTION 5 CALIBRATION PROCESS OUTSIDE MICROMETERS

5.1 Determine the gage blocks required to obtain test points at a minimum of 5 points (lowest TI indication, 20%, 40%, 60%, 80% and full range).

5.1.1 Adjust the spindle several tenths of an inch from zero, based on the TI basic length.

5.1.2 Starting with the gage block equal to the lowest TI range, slide the gage block(s) between the anvil and spindle. Adjust the TI, as applicable, to contact the gage block(s). Repeat each reading 2 to 3 times and verify each indication against Table 3 Micrometer Calibration Tolerance.

5.1.3 Repeat steps 5.1.1 and 5.1.2 through the complete range of the TI.

5.2 If the TI is the interchangeable-anvil type, attach each anvil in turn and verify it as outlined in paragraphs 5.1 through 5.1.3.
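For the micrometer steps, the test points of 5.1 and the tolerances of Table 3 can be combined into a short check. This is a sketch only: the function names are hypothetical, a micrometer is identified by the lower end of its size range (0 for 0-1 in., 3 for 3-4 in., and so on), and deviations are rounded to 5 decimal places before comparison to suppress floating-point noise.

```python
# Percentages of the TI range used as test points in step 5.1.
TEST_PERCENTS = (0, 20, 40, 60, 80, 100)
PLACES = 5  # round deviations before comparing, to suppress float noise

def micrometer_test_points(low_in: int, high_in: int) -> list[float]:
    """Lowest TI indication, then 20/40/60/80/100% of the range (step 5.1)."""
    span = high_in - low_in
    return [round(low_in + span * pct / 100, 4) for pct in TEST_PERCENTS]

def micrometer_tolerance(low_in: int, has_vernier: bool) -> float:
    """Table 3 tolerance (in.), keyed by the lower end of the size range."""
    if low_in == 0:                # 0-1 in.
        return 0.0001 if has_vernier else 0.001
    if 1 <= low_in <= 9:           # 1-2 through 9-10 in.
        return 0.0002 if has_vernier else 0.001
    return 0.0003 if has_vernier else 0.002   # 10-11 in. and greater

def reading_ok(nominal: float, reading: float,
               low_in: int, has_vernier: bool) -> bool:
    """Step 5.1.2: compare one indication with the gage-block size."""
    deviation = round(abs(reading - nominal), PLACES)
    return deviation <= micrometer_tolerance(low_in, has_vernier)
```

For a 0-1 in. micrometer, `micrometer_test_points(0, 1)` returns the same .000 to 1.000 in. points given as the example in 3.8.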

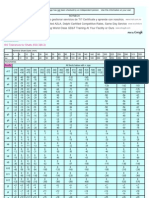

Table 3 Micrometer Calibration Tolerance

| Micrometer Size Range (in.) | Calibration Tolerance w/vernier (in.) | Calibration Tolerance without vernier (in.) |
|---|---|---|
| 0-1 | ±.0001 | ±.001 |
| 1-2 through 9-10 | ±.0002 | ±.001 |
| 10-11 and greater | ±.0003 | ±.002 |

SECTION 6 LABELING AND RECORDS

6.1 Complete a Measuring and Test Calibration Record (QA-4) for each item calibrated. Use additional QA-4 forms as continuation sheets as needed.

6.2 Affix a calibration label to the TI showing the date calibrated, the date of the next calibration and who performed the calibration.

6.2.1 A special calibration label shall be applied to any item that has not been calibrated over its full range of capability.

6.3 All completed calibration records shall be filed and maintained.
