
Advances in Computational Sciences and Technology, ISSN 0973-6107, Volume 3, Number 1 (2010), pp. 1-8, Research India Publications, http://www.ripublication.com/acst.htm

Image Classification by K-means Clustering

P. Jeyanthi¹ and V. Jawahar Senthil Kumar²

¹ Research Scholar, Dept. of Information Technology, Sathyabama University, Old Mahapalipuram Road, Chennai, Tamil Nadu, PIN 600119
² Dept. of Electronics and Communication Engg., Anna University, Chennai

Abstract

In a content-based image retrieval (CBIR) system, target images are sorted by their feature similarity to the query [5]. In this paper, we propose to use K-means clustering to classify the feature set obtained from the histogram refinement method. Histogram refinement provides a set of features for CBIR: it refines the standard histogram by splitting the pixels in a given bucket into several classes [1]. We compute the similarity using both 8-bin and 16-bin histograms. Standard histograms are widely used for content-based image retrieval because they are efficient and insensitive to small changes. Their main disadvantage, however, is that they provide only a coarse characterization of an image, so many images of different appearance can have similar histograms.

Introduction

Color histograms are widely used to retrieve results for image queries. They suit such queries well because they are computationally efficient and insensitive to small changes in camera position [1]. Their main problem is that they give only a coarse characterization of an image, so images with different appearances may produce the same histogram. Color histograms are employed in systems such as QBIC and Chabot, which exploit these advantages. In this paper, we use a modified scheme based on histogram refinement [1]. Histogram refinement splits the pixels within a given bucket into classes based on some local property; the split histograms are then compared bucket by bucket, as in normal histogram matching, except that only pixels in the same bucket with the same local property are compared. This gives better results than normal histogram matching, because the spatial information of the image is incorporated to refine the histogram in addition to its color features.

Introduction to Content-Based Image Retrieval

The size of image databases has increased dramatically in recent years, driven by image-capturing devices such as digital cameras and by the Internet. New techniques and tools are needed for efficiently sorting, browsing, searching, and retrieving images. The text-based approach, which retrieves images through annotations, can be traced back to the 1970s. In the 1980s, content-based image retrieval (CBIR) [4] was introduced to overcome some disadvantages of the text-based approach. CBIR has become an important practical technique for effective searching and browsing of ever larger collections of unstructured images and videos. It uses the visual content (low-level features) of images, such as color, texture, and shape, to represent and index them. These features are described by multi-dimensional vectors, called feature vectors, which are used to retrieve similar images. Extensive experiments on CBIR show that low-level features do not exactly represent high-level semantic concepts and can fail when used to retrieve similar images. To overcome this problem, various approaches combine low-level descriptors with other techniques, and several CBIR systems have been developed, such as SIMPLIcity, CLUE [5], and others. More specifically, the discrepancy between the limited descriptive power of low-level image features and the richness of user semantics is referred to as the semantic gap [4]. To bridge this gap, these approaches combine low-level features with other techniques, such as textual annotations, to create new descriptors that improve image retrieval results.
However, the retrieval process becomes more complex, and no method guarantees absolutely accurate results.

Low-Level Features for Image Retrieval

Different techniques have been proposed for extracting low-level features. Color is one of the most widely used features in image retrieval because it describes image content efficiently, although it is not directly related to high-level semantics. MPEG-7 is an ISO/IEC standard developed by the Moving Picture Experts Group for describing multimedia content. It defines seven color descriptors: color space, color quantization, dominant colors, scalable color, color layout, color structure, and GoF/GoP color. The scalable color descriptor is a color histogram in HSV color space, encoded by a Haar transform; its binary representation is scalable in the number of bins and in bit-representation accuracy over a broad range of data rates. The color layout descriptor represents the spatial distribution of color in visual signals in a very compact form, which allows visual signals to be matched with high retrieval efficiency at very small computational cost. The color structure descriptor captures both color content and information about the structure of that content. To represent each image for retrieval, its low-level features are computed; a searching and indexing algorithm is then applied to retrieve the most similar images.

Existing System

In the existing system, standard histograms are used. Because they are efficient and insensitive to small changes, standard histograms are widely used for content-based image retrieval. Their main disadvantage is that many images of different appearance can have similar histograms [4], because histograms provide only a coarse characterization of an image.
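The disadvantage can be made concrete with a small sketch in Python with NumPy. It builds two hypothetical 4x4 grayscale images with very different layouts (left/right halves versus a checkerboard) whose standard 16-bin histograms are identical; the function name `gray_histogram` is illustrative, not from the paper.

```python
import numpy as np


def gray_histogram(image: np.ndarray, bins: int = 16) -> np.ndarray:
    """Normalized histogram of an 8-bit grayscale image with equal-width buckets."""
    counts, _ = np.histogram(image, bins=bins, range=(0, 256))
    return counts / image.size


# Image 1: left half black, right half white.
halves = np.array([[0, 0, 255, 255]] * 4, dtype=np.uint8)
# Image 2: a checkerboard built from the same two gray levels.
checker = (np.indices((4, 4)).sum(axis=0) % 2 * 255).astype(np.uint8)

print(np.array_equal(gray_histogram(halves), gray_histogram(checker)))  # True
print(np.array_equal(halves, checker))                                  # False
```

Both images put half of their pixels in the darkest bucket and half in the brightest, so the histogram alone cannot tell them apart; only spatial information can.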

Proposed System

In this project, we propose to use K-means clustering to classify the feature set obtained from the histogram refinement method. Histogram refinement provides a set of features for content-based image retrieval (CBIR): it refines the histogram by splitting the pixels in a given bucket into several classes. We then produce a comparison graph for 8-bin (bucket) and 16-bin histograms.
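The paper does not spell out which local property is used to split a bucket; its Conclusion mentions coherency and incoherency, so the Python sketch below assumes a coherence criterion in the style of color coherence vectors. A pixel is taken to be coherent if it lies in a connected region of same-bucket pixels containing at least `tau` pixels. The threshold `tau`, the 4-connected neighborhood, and the name `coherence_histogram` are illustrative assumptions.

```python
from collections import deque

import numpy as np


def coherence_histogram(quantized: np.ndarray, bins: int, tau: int):
    """Split each bucket's pixel count into coherent and incoherent parts.

    `quantized` holds bucket indices in [0, bins). Regions are grown over the
    4-connected neighborhood (an assumption); a region of at least `tau`
    pixels counts as coherent.
    """
    rows, cols = quantized.shape
    seen = np.zeros(quantized.shape, dtype=bool)
    coherent = np.zeros(bins, dtype=int)
    incoherent = np.zeros(bins, dtype=int)
    for r in range(rows):
        for c in range(cols):
            if seen[r, c]:
                continue
            bucket = quantized[r, c]
            # Breadth-first flood fill over the region of this bucket.
            size, queue = 0, deque([(r, c)])
            seen[r, c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny, nx] and quantized[ny, nx] == bucket):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if size >= tau:
                coherent[bucket] += size
            else:
                incoherent[bucket] += size
    return coherent, incoherent


# Two 2-bucket images with identical plain histograms (8 pixels per bucket).
halves = np.array([[0, 0, 1, 1]] * 4)
checker = np.indices((4, 4)).sum(axis=0) % 2
print(coherence_histogram(halves, bins=2, tau=4))   # coherent [8, 8], incoherent [0, 0]
print(coherence_histogram(checker, bins=2, tau=4))  # coherent [0, 0], incoherent [8, 8]
```

The plain histograms of the two images are equal, but the refined (coherent, incoherent) pairs differ, which is the kind of distinction the refinement is meant to add.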

Segmentation & Quantization

In this module, the RGB image is first converted to a grayscale image, also known as an intensity image, which is a single 2-D matrix with values from 0 to 255. After this conversion, we quantize the image to reduce its number of gray levels: the 256 levels are reduced to 16 by uniform quantization [1]. Segmentation is performed using color histograms.

Cluster Analysis

A cluster is a collection of data objects that are similar to one another within the same cluster and dissimilar to the objects in other clusters. Cluster analysis has been widely used in numerous applications, including pattern recognition, data analysis, image processing, and market research. Clustering identifies dense and sparse regions and can therefore reveal overall distribution patterns and interesting correlations among data attributes. As a branch of statistics, cluster analysis has been studied extensively for many years, mainly focusing on distance-based methods. Cluster analysis tools based on k-means, k-medoids, and several other methods are built into many statistical software packages, such as S-Plus, SPSS, and SAS. In machine learning, clustering is an example of unsupervised learning: unlike classification, it does not rely on predefined classes or class-labeled training examples. Clustering is therefore a form of learning by observation rather than learning by examples. In conceptual clustering, a group of objects forms a class only if it can be described by a concept. This differs from conventional clustering, which measures similarity by geometric distance. Conceptual clustering has two components: (1) it discovers the appropriate classes, and (2) it forms a description for each class, as in classification. The guideline of striving for high intraclass similarity and low interclass similarity still applies.
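The grayscale conversion and uniform quantization of the Segmentation & Quantization module can be sketched in Python with NumPy as follows. The ITU-R BT.601 luma weights are an assumption, since the paper does not state which conversion it uses; the function names are hypothetical, and `levels` is assumed to divide 256.

```python
import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB image to an 8-bit intensity image (0..255).

    Uses BT.601 luma weights (assumed; not specified in the paper).
    """
    weights = np.array([0.2989, 0.5870, 0.1140])
    gray = rgb.astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def uniform_quantize(gray: np.ndarray, levels: int = 16) -> np.ndarray:
    """Map 256 gray values onto `levels` equal-width bins; returns bin indices."""
    step = 256 // levels  # 16 gray values per bin when levels == 16
    return (gray // step).astype(np.uint8)


rgb = np.array([[[255, 255, 255], [0, 0, 0]],
                [[255, 0, 0], [0, 128, 255]]], dtype=np.uint8)
gray = rgb_to_gray(rgb)
print(gray.tolist())                           # [[255, 0], [76, 104]]
print(uniform_quantize(gray, 16).tolist())     # [[15, 0], [4, 6]]
print(uniform_quantize(gray, 8).tolist())      # [[7, 0], [2, 3]]
```

The same `uniform_quantize` call with `levels=8` or `levels=16` yields the 8-bin and 16-bin bucket indices whose histograms are compared later.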

Categorization Of Major Clustering Methods

Centroid-Based Technique: The k-Means Method

The k-means algorithm takes the input parameter k and partitions a set of n objects into k clusters so that the resulting intracluster similarity is high but the intercluster similarity is low. Cluster similarity is measured with respect to the mean value of the objects in a cluster, which can be viewed as the cluster's center of gravity.

How does the k-means algorithm work? First, it randomly selects k of the objects, each of which initially represents a cluster mean or center. Each remaining object is assigned to the cluster to which it is most similar, based on the distance between the object and the cluster mean. The algorithm then computes the new mean of each cluster. This process iterates until the criterion function converges. Typically, the squared-error criterion is used, defined as

$$E = \sum_{i=1}^{k} \sum_{p \in c_i} \lvert p - m_i \rvert^2,$$

where E is the sum of the squared error for all objects in the database, $p$ is the point in space representing a given object, and $m_i$ is the mean of cluster $c_i$ (both $p$ and $m_i$ are multidimensional). This criterion tries to make the resulting k clusters as compact and as separate as possible. The algorithm attempts to determine the k partitions that minimize the squared-error function. It works well when the clusters are compact clouds that are rather well separated from one another. The method is relatively scalable and efficient in processing large data sets because its computational complexity is O(nkt), where n is the total number of objects, k is the number of clusters, and t is the number of iterations; normally, k << n and t << n. The method often terminates at a local optimum. However, k-means can be applied only when the mean of a cluster is defined, which may not be the case in some applications, such as when data with categorical attributes are involved.
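The squared-error criterion E can be evaluated directly from a cluster assignment. The following minimal NumPy sketch uses four hypothetical 2-D points split into two clusters; the names `squared_error`, `labels`, and `means` are illustrative.

```python
import numpy as np


def squared_error(points: np.ndarray, labels: np.ndarray, means: np.ndarray) -> float:
    """E = sum over clusters c_i of sum over p in c_i of |p - m_i|^2."""
    diffs = points - means[labels]  # each point minus the mean of its own cluster
    return float(np.sum(diffs ** 2))


points = np.array([[1.0, 1.0], [1.0, 3.0], [8.0, 8.0], [10.0, 8.0]])
labels = np.array([0, 0, 1, 1])
means = np.array([points[labels == i].mean(axis=0) for i in range(2)])
print(means.tolist())                        # [[1.0, 2.0], [9.0, 8.0]]
print(squared_error(points, labels, means))  # 4.0
```

Each of the four points lies at distance 1 from its cluster mean, so E = 4 × 1² = 4.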
The need for users to specify k, the number of clusters, in advance can be seen as a disadvantage. The k-means method is not suitable for discovering clusters with nonconvex shapes or clusters of very different sizes. Moreover, it is sensitive to noise and outliers, since a small number of such data points can substantially influence the mean value.

Suppose that there is a set of objects located in space, and let k = 2; that is, the user would like to cluster the objects into two clusters. Following the algorithm, we arbitrarily choose two objects as the two initial cluster centers, marked by a +. Each object is assigned to the cluster whose center is nearest. This distribution forms silhouettes encircled by dotted curves, as shown in Fig. 1(a). The grouping then updates the cluster centers: the mean value of each cluster is recalculated from the objects in the cluster. Relative to these new centers, the objects are redistributed to the cluster whose center is nearest.


Such a redistribution forms new silhouettes encircled by dashed curves, as shown in Fig. 1(b). Eventually, no object changes cluster, and the process terminates. The resulting clusters are returned by the clustering process.

The k-means algorithm

Algorithm: k-means, for partitioning based on the mean value of the objects in each cluster.
Input: the number of clusters k and a database containing n objects.
Output: a set of k clusters that minimizes the squared-error criterion.
Method:
(1) arbitrarily choose k objects as the initial cluster centers;
(2) repeat
(3) (re)assign each object to the cluster to which it is most similar, based on the mean value of the objects in the cluster;
(4) update the cluster means, i.e., calculate the mean value of the objects in each cluster;
(5) until no change.
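Steps (1) to (5) map onto a short NumPy sketch. It assumes squared Euclidean distance as the meaning of "most similar", a seeded random choice for the arbitrary initial centers, and an iteration cap; none of these details come from the paper, and in this sketch an empty cluster simply keeps its previous center.

```python
import numpy as np


def kmeans(points: np.ndarray, k: int, seed: int = 0, max_iter: int = 100):
    """Partition `points` (n x d) into k clusters; returns (labels, centers)."""
    rng = np.random.default_rng(seed)
    # (1) Arbitrarily choose k distinct objects as the initial cluster centers.
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    labels = None
    for _ in range(max_iter):  # (2) repeat
        # (3) Assign each object to the nearest center (squared Euclidean distance).
        dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = dists.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break  # (5) until no change
        labels = new_labels
        # (4) Update each cluster mean from its current members.
        for i in range(k):
            members = points[labels == i]
            if len(members) > 0:
                centers[i] = members.mean(axis=0)
    return labels, centers


points = np.array([[1.0, 1.0], [1.0, 3.0], [2.0, 2.0],
                   [8.0, 8.0], [10.0, 8.0], [9.0, 9.0]])
labels, centers = kmeans(points, k=2)
print(labels, centers)
```

With two well-separated groups, the first three and the last three points end up in different clusters; which cluster gets index 0 depends on the random initialization.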

[Figure: Block diagram. Query Image, Database Image; Compute Similarity for 8-bin; Compute Similarity for 16-bin; Retrieve Images; Calculate Precision Value; Calculate Recall Value; Plot a Graph.]

[Figure: DFD for 8 Bin and DFD for 16 Bin. Query Image, Database Image; Find Histogram; Quantize to 8-Bin / 16-Bin; Segmentation & Clustering; Find Difference; Similarity Matrix; Quadratic distance; Display Images.]


The purpose of K-means clustering here is to classify the data. We selected K-means clustering because it is suitable for clustering large amounts of data. Unlike hierarchical clustering methods, which build a tree structure, K-means creates a single level of clusters. Each observation in the data is treated as an object with a location in space, and a partition is found in which objects within each cluster are as close to each other as possible and as far from objects in other clusters as possible. Selecting the distance measure is an important step in clustering. The distance measure determines the similarity of two elements and strongly influences the shape of the clusters, since some elements may be close to one another under one distance and farther apart under another. We chose the quadratic distance measure, which takes into account the similarity between different feature bins. We calculated the distance between all row vectors of the feature set obtained in the previous section, thereby finding the similarity between every pair of objects in the data set; the result is a distance matrix. Next, we used the member objects and the centroid to define each cluster. The centroid of each cluster is the point that minimizes the sum of distances from all objects in that cluster. The distance information generated above is used to determine the proximity of objects to each other, and the objects are grouped into K clusters using the distance between the centroids of two groups. Let $O_p$ be the number of objects in cluster p and $O_q$ the number of objects in cluster q, and let $d_{pi}$ be the i-th object in cluster p and $d_{qj}$ the j-th object in cluster q. The centroid distance between the two clusters p and q is given as:

$$d(p, q) = \left\lVert \bar{d}_p - \bar{d}_q \right\rVert_2,$$

where

$$\bar{d}_p = \frac{1}{O_p} \sum_{i=1}^{O_p} d_{pi}, \qquad \bar{d}_q = \frac{1}{O_q} \sum_{j=1}^{O_q} d_{qj}.$$
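The centroid distance between two clusters follows directly from the definitions of $O_p$, $O_q$, $d_{pi}$, and $d_{qj}$. A minimal NumPy sketch, assuming each cluster is stored as an array with one object per row (the function name and the sample clusters are hypothetical):

```python
import numpy as np


def centroid_distance(cluster_p: np.ndarray, cluster_q: np.ndarray) -> float:
    """Euclidean distance between the centroids of clusters p and q.

    cluster_p has O_p rows (objects d_pi); cluster_q has O_q rows (objects d_qj).
    """
    centroid_p = cluster_p.mean(axis=0)  # (1/O_p) * sum_i d_pi
    centroid_q = cluster_q.mean(axis=0)  # (1/O_q) * sum_j d_qj
    return float(np.linalg.norm(centroid_p - centroid_q))


p = np.array([[0.0, -1.0], [2.0, -1.0]])            # O_p = 2, centroid (1, -1)
q = np.array([[4.0, 4.0], [4.0, 2.0], [4.0, 3.0]])  # O_q = 3, centroid (4, 3)
print(centroid_distance(p, q))  # 5.0
```

The centroids differ by (3, 4), so the distance is 5; the clusters may contain different numbers of objects.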

Display the Images

In this module, the retrieved images are displayed. The steps are: find the difference between the histograms of the query image and each database image; find the similarity matrix of the query image and the database image; calculate the quadratic distance; and retrieve the relevant images.
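These steps can be sketched with the quadratic-form distance d(h, g) = sqrt((h - g)^T A (h - g)), where h - g is the histogram difference and A is a bin-similarity matrix. The paper does not define its similarity matrix, so this NumPy sketch assumes the common choice a_ij = 1 - |i - j| / (N - 1) for N gray-level bins; all function names and the one-hot sample histograms are hypothetical.

```python
import numpy as np


def bin_similarity_matrix(bins: int) -> np.ndarray:
    """a_ij = 1 - |i - j| / (bins - 1): neighboring bins count as partly similar."""
    idx = np.arange(bins)
    return 1.0 - np.abs(idx[:, None] - idx[None, :]) / (bins - 1)


def quadratic_distance(h: np.ndarray, g: np.ndarray, a: np.ndarray) -> float:
    """Quadratic-form distance sqrt((h - g)^T A (h - g)) between two histograms."""
    diff = h - g  # bin-by-bin histogram difference
    return float(np.sqrt(max(diff @ a @ diff, 0.0)))  # clamp tiny negative round-off


def retrieve(query_hist, database_hists, top_n=3):
    """Rank database images by quadratic distance to the query, nearest first."""
    a = bin_similarity_matrix(len(query_hist))
    dists = [quadratic_distance(query_hist, g, a) for g in database_hists]
    order = np.argsort(dists, kind="stable")[:top_n]
    return [(int(i), dists[i]) for i in order]


hists = np.eye(8)  # hypothetical 8-bin histograms, each concentrated in one bin
query = hists[0]
database = [hists[0], hists[1], hists[7]]
print(retrieve(query, database))
```

Unlike a bin-by-bin comparison, which would rate bins 1 and 7 as equally far from bin 0, the quadratic form ranks the neighboring bin (distance sqrt(2/7)) ahead of the distant one (distance sqrt(2)).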

[Figure: DFD for plotting the graph. Retrieval images using 8-bin and using 16-bin; Calculate Precision; Calculate Recall Rate; Plot a Graph.]

[Figure: Retrieval for 8-Bin; Retrieval for 16-Bin.]

Plotting a Graph

Find the precision rate and the recall rate for the images retrieved using 8-bin and 16-bin histograms:

Precision rate = number of relevant images retrieved / number of images returned
Recall rate = number of relevant images retrieved / total number of relevant images in the database
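Both rates can be computed from the set of returned images and the set of relevant images. A minimal Python sketch; the image identifiers and counts below are hypothetical, not results from the paper.

```python
def precision_recall(retrieved, relevant):
    """Precision = relevant retrieved / returned; recall = relevant retrieved / all relevant."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical query: 10 images returned, 6 of them relevant,
# and 8 relevant images in the whole database.
retrieved = ["img%02d" % i for i in range(10)]
relevant = ["img%02d" % i for i in (0, 1, 2, 3, 4, 5, 20, 21)]
print(precision_recall(retrieved, relevant))  # (0.6, 0.75)
```

Running this once with the 8-bin results and once with the 16-bin results gives the pairs of values that the graphs compare.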

[Figure: Graph for recall rate]

Conclusion

This project proposes using K-means clustering on the feature set obtained with the histogram refinement method, which is based on the concept of coherency and incoherency. Feature selection is based on the number, color, and shape of the objects present in the image; the grayscale value difference, mean, and size of the objects are considered appropriate features for retrieval. For indexing images, we proposed K-means clustering, and we have shown that K-means clustering is quite useful for relevant-image retrieval queries.

Future Enhancement

The classification of the feature set can be extended to heterogeneous features (shape, texture) to obtain more accurate results. It can also be extended by merging heterogeneous features and by using neural networks. The schemes proposed in this work could be further improved by introducing fuzzy logic concepts into the clustering process.

References

[1] Youngeun An, Junguk Baek, et al., "Classification of Feature Set Using K-means Clustering from Histogram Refinement Method," IEEE, 2008.
[2] Donn Morrison, Stéphane Marchand-Maillet, Eric Bruno, "Semantic clustering of images using patterns of relevance feedback," IEEE, 2008.
[3] Bink Wang, Xin Zhang, XiaoYan Zhao, Zhi-De Zhang, Hong-Xia Zhang, "A semantic description for content-based image retrieval," IEEE, 2008.
[4] Raquel E. Patino-Escarcina and Jose Alfredo Ferreira Costa, "The semantic clustering of images and its relation with low level color features," IEEE, 2008.
[5] Yixin Chen, James Z. Wang, Krovetz, "CLUE: cluster-based retrieval of images by unsupervised learning," IEEE Transactions on Image Processing, vol. 14, no. 8, August 2005.
[6] Xiaoxin Yin, Mingjing Li, Lei Zhang, Hongjiang Zhang, "Semantic image clustering using relevance feedback," IEEE, 2003.
[7] Gholamhosein Sheikholeslami et al., "SemQuery: Semantic Clustering and Querying on Heterogeneous Features for Visual Data," IEEE Transactions on Knowledge and Data Engineering, vol. 14, no. 5, September/October 2002.
[8] J. Z. Wang, J. Li, and G. Wiederhold, "SIMPLIcity: Semantics-sensitive integrated matching for picture libraries," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 9, pp. 947-963, 2001.

Potrebbero piacerti anche

- SAP Workflow in Plain English: Applies ToDocumento12 pagineSAP Workflow in Plain English: Applies Tosap100912Nessuna valutazione finora

- Link List ProgramDocumento3 pagineLink List ProgramChhanda SarkarNessuna valutazione finora

- Wipro Placement Paper 2010:-Aptitude TestDocumento7 pagineWipro Placement Paper 2010:-Aptitude TestChhanda SarkarNessuna valutazione finora

- Full FormsDocumento1 paginaFull FormsChhanda SarkarNessuna valutazione finora

- AI QsDocumento2 pagineAI QsChhanda SarkarNessuna valutazione finora

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5783)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (890)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (399)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (72)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (344)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (119)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- Amul ReportDocumento48 pagineAmul ReportUjwal JaiswalNessuna valutazione finora

- Childrens Ideas Science0Documento7 pagineChildrens Ideas Science0Kurtis HarperNessuna valutazione finora

- Individual Assignment ScribdDocumento4 pagineIndividual Assignment ScribdDharna KachrooNessuna valutazione finora

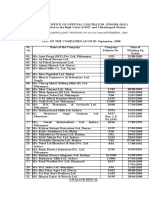

- Statement of Compulsory Winding Up As On 30 SEPTEMBER, 2008Documento4 pagineStatement of Compulsory Winding Up As On 30 SEPTEMBER, 2008abchavhan20Nessuna valutazione finora

- Active and Passive Voice of Future Continuous Tense - Passive Voice Tips-1Documento5 pagineActive and Passive Voice of Future Continuous Tense - Passive Voice Tips-1Kamal deep singh SinghNessuna valutazione finora

- Vidura College Marketing AnalysisDocumento24 pagineVidura College Marketing Analysiskingcoconut kingcoconutNessuna valutazione finora

- IP68 Rating ExplainedDocumento12 pagineIP68 Rating ExplainedAdhi ErlanggaNessuna valutazione finora

- Waves and Thermodynamics, PDFDocumento464 pagineWaves and Thermodynamics, PDFamitNessuna valutazione finora

- School Quality Improvement System PowerpointDocumento95 pagineSchool Quality Improvement System PowerpointLong Beach PostNessuna valutazione finora

- MMW FinalsDocumento4 pagineMMW FinalsAsh LiwanagNessuna valutazione finora

- Impact of Bap and Iaa in Various Media Concentrations and Growth Analysis of Eucalyptus CamaldulensisDocumento5 pagineImpact of Bap and Iaa in Various Media Concentrations and Growth Analysis of Eucalyptus CamaldulensisInternational Journal of Innovative Science and Research TechnologyNessuna valutazione finora

- Embryo If Embryonic Period PDFDocumento12 pagineEmbryo If Embryonic Period PDFRyna Miguel MasaNessuna valutazione finora

- Supreme Court rules stabilization fees not trust fundsDocumento8 pagineSupreme Court rules stabilization fees not trust fundsNadzlah BandilaNessuna valutazione finora

- Reservoir Rock TypingDocumento56 pagineReservoir Rock TypingAffan HasanNessuna valutazione finora

- Trading Course DetailsDocumento9 pagineTrading Course DetailsAnonymous O6q0dCOW6Nessuna valutazione finora

- Urodynamics Griffiths ICS 2014Documento198 pagineUrodynamics Griffiths ICS 2014nadalNessuna valutazione finora

- Introduction To OpmDocumento30 pagineIntroduction To OpmNaeem Ul HassanNessuna valutazione finora

- Course Tutorial ASP - Net TrainingDocumento67 pagineCourse Tutorial ASP - Net Traininglanka.rkNessuna valutazione finora

- Modul-Document Control Training - Agus F - 12 Juli 2023 Rev1Documento34 pagineModul-Document Control Training - Agus F - 12 Juli 2023 Rev1vanesaNessuna valutazione finora

- SEO Design ExamplesDocumento10 pagineSEO Design ExamplesAnonymous YDwBCtsNessuna valutazione finora

- New Brunswick CDS - 2020-2021Documento31 pagineNew Brunswick CDS - 2020-2021sonukakandhe007Nessuna valutazione finora

- Boiler Check ListDocumento4 pagineBoiler Check ListFrancis VinoNessuna valutazione finora

- 2019-10 Best Practices For Ovirt Backup and Recovery PDFDocumento33 pagine2019-10 Best Practices For Ovirt Backup and Recovery PDFAntonius SonyNessuna valutazione finora

- JD - Software Developer - Thesqua - Re GroupDocumento2 pagineJD - Software Developer - Thesqua - Re GroupPrateek GahlanNessuna valutazione finora

- Human Resouse Accounting Nature and Its ApplicationsDocumento12 pagineHuman Resouse Accounting Nature and Its ApplicationsParas JainNessuna valutazione finora

- Master of Commerce: 1 YearDocumento8 pagineMaster of Commerce: 1 YearAston Rahul PintoNessuna valutazione finora

- Numerical Methods: Jeffrey R. ChasnovDocumento60 pagineNumerical Methods: Jeffrey R. Chasnov2120 sanika GaikwadNessuna valutazione finora

- Report Daftar Penerima Kuota Telkomsel Dan Indosat 2021 FSEIDocumento26 pagineReport Daftar Penerima Kuota Telkomsel Dan Indosat 2021 FSEIHafizh ZuhdaNessuna valutazione finora

- Emergency Room Delivery RecordDocumento7 pagineEmergency Room Delivery RecordMariel VillamorNessuna valutazione finora

- Problems of Teaching English As A Foreign Language in YemenDocumento13 pagineProblems of Teaching English As A Foreign Language in YemenSabriThabetNessuna valutazione finora