
Optimization Methods

Uploaded by crisna

Outline

Advanced Mathematics

Linear Algebra

Reference books: 《工程最优化设计》 (Engineering Optimization Design)

mathematical modeling identifying the objective,variables,constraints

an region enclosed by multiple constraint

Domain

boundaries

construction of a appropirate model

Feasible Region ⼦主题 1

Basic Steps

an optimization algorithm can be used to find the

once the model is formulated

solution

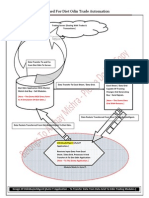

optimization is the process of minimizing or

maximzing of a function subject to constraints on

its variables

linear problems vortex(漩涡) conversion simplex method

golden section search method(GSM)

Fibonacci search method (FSM)

one-dimensional

Quadratic interpolation method

Cubic interpolation method

Steepest descent method

unconstrained optimization

Newton method

optimization is also called "linear programming" Use info of derivatives(派⽣物)

Chapter 1 nonlinear problems Quasi-Newton Method

Conjugate Gradient method

⼦主题 4

Don't use info Powell method

solve directly Feasible direction method

constrained optimization Penalty fuchtion method

solve indirectly

SQP method

Graphical method

1. Determine the domains of the variables.
2. Identify the feasible region of the solutions.
3. Plot a few contours of the objective function to find the descent direction of the function.
4. Find the optimal solutions of the problem.
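One rough numerical stand-in for reading contours off a plot is to sample a grid, discard the infeasible points, and keep the lowest objective value. This is a minimal sketch under several assumptions. The problem is hypothetical (minimize $(x_1-3)^2 + (x_2-2)^2$ subject to $x_1 + x_2 \le 4$, $x_1, x_2 \ge 0$). Constraints are written as $g(X) \le 0$. The helper `grid_minimize` is an illustrative name that does not come from the notes.

```python
def grid_minimize(f, constraints, lo, hi, step):
    """Illustrative (hypothetical) helper: best feasible point on a grid over [lo, hi]^2.

    Each constraint g is satisfied when g(x) <= 0."""
    n = int(round((hi - lo) / step))
    best_x, best_f = None, float("inf")
    for i in range(n + 1):
        for j in range(n + 1):
            x = (lo + i * step, lo + j * step)
            if all(g(x) <= 0 for g in constraints):   # keep only the feasible region
                fx = f(x)
                if fx < best_f:                         # lowest contour reached so far
                    best_x, best_f = x, fx
    return best_x, best_f


# hypothetical example problem
f = lambda x: (x[0] - 3) ** 2 + (x[1] - 2) ** 2
constraints = [
    lambda x: x[0] + x[1] - 4,   # x1 + x2 <= 4
    lambda x: -x[0],             # x1 >= 0
    lambda x: -x[1],             # x2 >= 0
]
print(grid_minimize(f, constraints, 0.0, 5.0, 0.5))  # ((2.5, 1.5), 0.5)
```

The grid spacing limits the accuracy. In this example the true optimum happens to fall on a grid node.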

Iteration methods (descent methods)

1. Choose a search direction $S_0$.
2. Determine the optimal step length $\alpha$ that minimizes the function value along $S_0$.
3. Obtain the new iteration point $X_1 = X_0 + \alpha S_0$.
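For a quadratic objective the optimal step length has a closed form. Assume $f(X) = \tfrac12 X^T A X - b^T X$ with $A$ symmetric positive definite. Setting $d f(X_0 + \alpha S_0)/d\alpha = 0$ then gives $\alpha = -g_0^T S_0 / (S_0^T A S_0)$, where $g_0 = A X_0 - b$. The sketch below carries out one iteration. The quadratic form, the example data and the helper names are assumptions made for illustration.

```python
def mat_vec(A, x):
    """Matrix-vector product for nested lists."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def exact_step(A, b, x0, s0):
    """Hypothetical helper: optimal alpha and X1 for f = 0.5 X^T A X - b^T X along s0."""
    g = [ax - bi for ax, bi in zip(mat_vec(A, x0), b)]   # gradient g0 = A x0 - b
    alpha = -dot(g, s0) / dot(s0, mat_vec(A, s0))        # from df/dalpha = 0
    x1 = [xi + alpha * si for xi, si in zip(x0, s0)]     # X1 = X0 + alpha * S0
    return alpha, x1


A = [[2.0, 0.0], [0.0, 4.0]]   # f = x1^2 + 2 x2^2 (example only)
b = [0.0, 0.0]
alpha, x1 = exact_step(A, b, [2.0, 1.0], [-4.0, -4.0])   # S0 = -g0 at (2, 1)
print(alpha, x1)
```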

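Gradient

The gradient is a vector composed of all the first-order partial derivatives at a point. It describes the local variation of the function value at that point.

The gradient direction is the direction in which the function value increases most rapidly. The negative gradient direction is the direction in which it decreases most rapidly.

The gradient is a column vector.

Directional derivative: mind the transpose. The direction vector is also a column vector, so the directional derivative along a unit direction $S$ is $\nabla f(X)^{T} S$.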
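A finite-difference sketch makes the column-vector convention concrete. The gradient is built one component at a time, and the directional derivative is the product $\nabla f^T S$ with $S$ normalized to unit length. The central-difference step `h`, the test function $x_1^2 + 2x_2^2$ and the helper names are assumptions made for illustration.

```python
import math


def gradient(f, x, h=1e-6):
    """Illustrative central-difference gradient; the list plays the role of a column vector."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2 * h))
    return g


def directional_derivative(f, x, s):
    """Hypothetical helper: grad f(x)^T u with u = s / |s| (the transpose makes it a row)."""
    norm = math.sqrt(sum(si * si for si in s))
    return sum(gi * si / norm for gi, si in zip(gradient(f, x), s))


f = lambda x: x[0] ** 2 + 2 * x[1] ** 2
print(gradient(f, [2.0, 1.0]))                               # close to [4.0, 4.0]
print(directional_derivative(f, [2.0, 1.0], [-1.0, -1.0]))   # close to -8 / sqrt(2)
```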

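Taylor expansion

One variable.

Multiple variables: the first-order expansion is a linear approximation. The second-order expansion is a quadratic approximation that involves the Hessian matrix, which is significant in optimization.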
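The standard expansions about $X_0$ are written out below. The notation follows the text above: the gradient $\nabla f$ is a column vector, $\nabla^2 f$ is the Hessian, and $\Delta X = X - X_0$.

```latex
\begin{aligned}
f(x) &\approx f(x_0) + f'(x_0)\,(x - x_0) + \tfrac{1}{2} f''(x_0)\,(x - x_0)^2 \\
f(X) &\approx f(X_0) + \nabla f(X_0)^{T} \Delta X + \tfrac{1}{2}\, \Delta X^{T}\, \nabla^2 f(X_0)\, \Delta X,
\qquad \left[\nabla^2 f\right]_{ij} = \frac{\partial^2 f}{\partial x_i \, \partial x_j}
\end{aligned}
```

Dropping the last term gives the first-order (linear) approximation. Keeping it gives the quadratic approximation on which Newton's method is built.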

Types of (symmetric) matrices: positive definite, positive semi-definite, negative definite, negative semi-definite, indefinite.
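One way to tell these types apart without computing eigenvalues is Sylvester's criterion on principal minors. The sketch below assumes a small symmetric matrix given as nested lists; cofactor expansion is only sensible for small sizes. `classify` and the other helper names are hypothetical.

```python
from itertools import combinations


def det(M):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j in range(len(M)):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total


def principal_minor(M, idx):
    """Determinant of the submatrix that keeps the rows and columns listed in idx."""
    idx = list(idx)
    return det([[M[i][j] for j in idx] for i in idx])


def classify(M, tol=1e-12):
    """Hypothetical helper: definiteness of a symmetric matrix via principal minors."""
    n = len(M)
    leading = [principal_minor(M, range(k)) for k in range(1, n + 1)]
    if all(d > tol for d in leading):
        return "positive definite"
    if all((-1) ** k * d > tol for k, d in enumerate(leading, start=1)):
        return "negative definite"
    # semi-definiteness needs all principal minors, not only the leading ones
    minors = [(k, principal_minor(M, idx))
              for k in range(1, n + 1) for idx in combinations(range(n), k)]
    if all(d >= -tol for _, d in minors):
        return "positive semi-definite"
    if all((-1) ** k * d >= -tol for k, d in minors):
        return "negative semi-definite"
    return "indefinite"


for M in ([[2, 0], [0, 4]], [[-2, 0], [0, -4]], [[1, 1], [1, 1]],
          [[-1, 0], [0, 0]], [[1, 0], [0, -1]]):
    print(M, classify(M))
```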

Fundamentals of Optimization

Positive Definite Quadratic Function (PDQF)

Characteristics:

1. The contours of a PDQF are a set of concentric ellipses. The common center of this set is the minimum point of the PDQF.
2. For functions that are not positive definite quadratic functions (general nonlinear functions), the contours near the minimum point are approximately ellipses. Away from the minimum point the contours become irregular.
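The common center of the ellipses can be computed by solving $\nabla f = A X - b = 0$. The sketch below handles the 2×2 case and assumes the form $f(X) = \tfrac12 X^T A X - b^T X + c$. The sample function $x_1^2 + x_2^2 - x_1x_2 - 10x_1 - 4x_2 + 60$ is a common textbook exercise, used here only as an illustration. `pdqf_center` is a hypothetical helper.

```python
def pdqf_center(A, b):
    """Hypothetical helper: minimum point of 0.5 X^T A X - b^T X + c for a 2x2 symmetric A.

    Solves grad f = A X - b = 0 with Cramer's rule after checking positive definiteness."""
    (a11, a12), (a21, a22) = A
    d = a11 * a22 - a12 * a21
    if a11 <= 0 or d <= 0:
        raise ValueError("A is not positive definite")
    return [(b[0] * a22 - a12 * b[1]) / d, (a11 * b[1] - a21 * b[0]) / d]


f = lambda x: x[0] ** 2 + x[1] ** 2 - x[0] * x[1] - 10 * x[0] - 4 * x[1] + 60
A = [[2.0, -1.0], [-1.0, 2.0]]   # Hessian of f
b = [10.0, 4.0]
x_star = pdqf_center(A, b)
print(x_star, f(x_star))  # [8.0, 6.0] 8.0
```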

Convexity

Convex set: a set $S$ is convex if the straight line segment connecting any two points in $S$ lies entirely inside $S$.

Convex function: for any two points on the graph, the straight line segment connecting them lies on or above the graph of the function.
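The chord definition can be probed numerically by sampling pairs of points and checking $f(\lambda x + (1-\lambda) y) \le \lambda f(x) + (1-\lambda) f(y)$. The sketch below assumes a sampling box of $[-5, 5]^n$, a fixed random seed and hypothetical helper names. Such a test can only disprove convexity; it can never prove it.

```python
import random


def chord_violated(f, x, y, steps=10, tol=1e-9):
    """True if some point of the segment has f above the chord between x and y."""
    for k in range(steps + 1):
        lam = k / steps
        z = [lam * xi + (1 - lam) * yi for xi, yi in zip(x, y)]
        if f(z) > lam * f(x) + (1 - lam) * f(y) + tol:
            return True
    return False


def looks_convex(f, dim, trials=200, seed=0):
    """Hypothetical helper: random chord test over [-5, 5]^dim (can only disprove convexity)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(dim)]
        y = [rng.uniform(-5, 5) for _ in range(dim)]
        if chord_violated(f, x, y):
            return False
    return True


print(looks_convex(lambda v: v[0] ** 2 + 2 * v[1] ** 2, 2))   # convex quadratic
print(looks_convex(lambda v: v[0] ** 2 - v[1] ** 2, 2))       # saddle: not convex
```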

Minimizer: global minimizer, local minimizer, strong minimizer.

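Descent direction: judged by the angle between the direction and the gradient. $S$ is a descent direction at $X$ when $\nabla f(X)^{T} S < 0$, that is, when the angle exceeds 90°.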
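The following sketch implements the angle test and assumes the gradient is already known. The example uses the gradient $(4, 4)$ of $x_1^2 + 2x_2^2$ at $(2, 1)$. Helper names are hypothetical.

```python
import math


def is_descent_direction(g, s):
    """S is a descent direction when g^T S < 0."""
    return sum(gi * si for gi, si in zip(g, s)) < 0


def angle_deg(g, s):
    """Hypothetical helper: angle between the gradient g and the direction s, in degrees."""
    dot = sum(gi * si for gi, si in zip(g, s))
    ng = math.sqrt(sum(gi * gi for gi in g))
    ns = math.sqrt(sum(si * si for si in s))
    cos_t = max(-1.0, min(1.0, dot / (ng * ns)))   # clamp rounding noise before acos
    return math.degrees(math.acos(cos_t))


g = [4.0, 4.0]   # example gradient of x1^2 + 2*x2^2 at (2, 1)
for s in ([-1.0, 0.0], [1.0, -2.0], [1.0, 1.0]):
    print(s, round(angle_deg(g, s), 1), is_descent_direction(g, s))
```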

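Extremum conditions

First order: with only one variable, $f'(x^*) = 0$; with multiple variables, $\nabla f(X^*) = 0$.

Second order: with one variable, $f''(x^*) > 0$; with multiple variables, the Hessian $\nabla^2 f(X^*)$ is positive definite (sufficient condition for a minimum).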


One-dimensional search method

Applies to unimodal functions, which have a single minimum in the interval of interest.

Basic steps:

STEP1: determine an initial interval that contains the minimum point.

STEP2: insert two points inside the current known interval [a, b] and compare the function values at these two points. The length of the interval is reduced after every iteration until the minimum point is found. Once the given convergence accuracy is reached, any point inside the interval can be approximately considered the minimum point along this search direction.

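Determining the initial interval: follows a few basic steps.

Linear search methods are characterized by three things: the ratio of interval reduction, the principle used to determine the two interior points, and the principle used to determine convergence.

GSM: the interval reduction ratio is 0.618.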
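The notes do not spell out the steps for finding the initial interval. A common choice is a forward/backward search with step doubling, and that is what this sketch assumes. Golden section search follows: each iteration keeps 0.618 of the interval and reuses one interior point. The test function $(x-2)^2 + 1$, the tolerances and the helper names are illustrative assumptions.

```python
import math


def bracket(f, x0=0.0, h=0.1, max_iter=60):
    """Hypothetical helper: forward/backward step-doubling search for [a, c] holding a minimum."""
    a, b = x0, x0 + h
    if f(b) > f(a):              # uphill: search in the opposite direction
        a, b, h = b, a, -h
    c = b + h
    for _ in range(max_iter):
        if f(c) >= f(b):         # f(a) >= f(b) <= f(c): the minimum is bracketed
            return (a, c) if a < c else (c, a)
        h *= 2                   # keep going downhill with a doubled step
        a, b, c = b, c, c + h
    raise RuntimeError("no bracket found")


def golden_section(f, a, b, eps=1e-6):
    """Hypothetical helper: golden section search; each iteration keeps 0.618 of [a, b]."""
    r = (math.sqrt(5) - 1) / 2               # 0.618...
    x1, x2 = b - r * (b - a), a + r * (b - a)
    f1, f2 = f(x1), f(x2)
    while b - a > eps:
        if f1 > f2:                          # minimum lies in [x1, b]
            a, x1, f1 = x1, x2, f2           # old x2 is reused as the new x1
            x2 = a + r * (b - a)
            f2 = f(x2)
        else:                                # minimum lies in [a, x2]
            b, x2, f2 = x2, x1, f1           # old x1 is reused as the new x2
            x1 = b - r * (b - a)
            f1 = f(x1)
    return (a + b) / 2


f = lambda x: (x - 2) ** 2 + 1
a, b = bracket(f)
x_min = golden_section(f, a, b)
print(a, b, x_min)
```

Reusing one interior point is what makes the constant ratio 0.618 work: it satisfies $r^2 = 1 - r$, so the retained point lands exactly where the next iteration needs it.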

FSM: the Fibonacci numbers $F_n$ increase (1, 1, 2, 3, 5, 8, 13, 21, ...), while the interval lengths $L_n$ decrease.
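Here is a sketch of Fibonacci search under the convention $F_0 = F_1 = 1$. The interior points sit at the fractions $F_{n-2}/F_n$ and $F_{n-1}/F_n$ of the interval. After $n$ function evaluations the interval shrinks to $2L_0/F_n$. The helper name and the example are hypothetical. The last step places both points at the midpoint; practical versions usually perturb one of them by a small $\varepsilon$.

```python
def fibonacci_search(f, a, b, n):
    """Hypothetical helper: Fibonacci search using n function evaluations (F0 = F1 = 1)."""
    if n < 3:
        raise ValueError("n must be at least 3")
    F = [1, 1]
    while len(F) <= n:
        F.append(F[-1] + F[-2])              # F_n increases: 1, 1, 2, 3, 5, 8, ...
    x1 = a + F[n - 2] / F[n] * (b - a)
    x2 = a + F[n - 1] / F[n] * (b - a)
    f1, f2 = f(x1), f(x2)
    for k in range(n - 1, 1, -1):            # the interval length shrinks by F_k / F_(k+1)
        if f1 > f2:                          # minimum in [x1, b]; old x2 becomes new x1
            a, x1, f1 = x1, x2, f2
            x2 = a + F[k - 1] / F[k] * (b - a)
            f2 = f(x2)
        else:                                # minimum in [a, x2]; old x1 becomes new x2
            b, x2, f2 = x2, x1, f1
            x1 = a + F[k - 2] / F[k] * (b - a)
            f1 = f(x1)
    return (a + b) / 2


print(fibonacci_search(lambda x: (x - 2) ** 2 + 1, 0.0, 5.0, 20))   # close to 2
```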

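Powell's quadratic interpolation method (QIM): the interpolating parabola is found in a way similar to the method of undetermined coefficients. Its minimum point $X_p$ is determined by two conditions, and the opening direction of the parabola must be checked.

Convergence criterion of QIM: $|X_2 - X_p| \le \varepsilon$.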
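One common form of quadratic interpolation keeps three points $x_1 < x_2 < x_3$, with $f(x_2)$ the lowest. It fits $p(x) = c_0 + c_1 x + c_2 x^2$ through them and moves to the vertex $X_p = -c_1 / (2 c_2)$. The parabola must open upward ($c_2 > 0$). This three-point update rule is an assumption and may differ from Powell's exact bookkeeping. `qim` is a hypothetical name, and the stopping test follows the criterion above.

```python
import math


def qim(f, x1, x2, x3, eps=1e-6, max_iter=100):
    """Hypothetical helper: quadratic interpolation from a bracket x1 < x2 < x3
    with f(x2) <= f(x1) and f(x2) <= f(x3)."""
    f1, f2, f3 = f(x1), f(x2), f(x3)
    for _ in range(max_iter):
        # coefficients of p(x) = c0 + c1 x + c2 x^2 through the three points
        c2 = ((f1 - f3) / (x1 - x3) - (f2 - f3) / (x2 - x3)) / (x1 - x2)
        if c2 <= 0:
            raise ValueError("parabola does not open upward")
        c1 = (f1 - f3) / (x1 - x3) - c2 * (x1 + x3)
        xp = -c1 / (2 * c2)                  # vertex X_p of the parabola
        if abs(x2 - xp) <= eps:              # convergence criterion |X2 - Xp| <= eps
            return xp
        fp = f(xp)
        # keep three points that still bracket the minimum, with the best point in the middle
        if xp < x2:
            if fp < f2:
                x3, f3, x2, f2 = x2, f2, xp, fp
            else:
                x1, f1 = xp, fp
        else:
            if fp < f2:
                x1, f1, x2, f2 = x2, f2, xp, fp
            else:
                x3, f3 = xp, fp
    return x2


print(qim(lambda x: (x - 2) ** 2 + 1, 0.0, 1.0, 4.0))   # 2.0 (exact for a quadratic)
print(qim(lambda x: math.cosh(x - 1), 0.0, 0.5, 3.0))   # close to 1
```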

Davidon's cubic interpolation method (CIM)

Chapter 4: Unconstrained Optimization Methods

Steepest Descent Method

Search direction: the negative gradient direction at the iteration point, $S_k = -g_k = -\nabla f(X_k)$.

New point: $X_{k+1} = X_k + \alpha S_k$, where the step length $\alpha$ is obtained from $df/d\alpha = 0$.

Convergence judgement: stop when the convergence criterion is met, for example $\|g_k\| \le \varepsilon$.

Fault: needs a lot of iterations.

Newton Method

Uses the negative gradient and the Hessian matrix of the function, based on the second-order Taylor expansion.

Newton's direction: $S_k = -[\nabla^2 f(X_k)]^{-1} g_k$; the step length $\alpha$ is 1.

Fault: if the Hessians are not positive definite, the method is not applicable.
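This sketch illustrates the fault noted for steepest descent. It runs steepest descent with exact line search on the positive definite quadratic $f(X) = \tfrac12 X^T A X - b^T X$. It then compares the result with one Newton step ($\alpha = 1$), which lands on the minimum of a quadratic immediately. The matrix $A$, the vector $b$, the tolerances and the helper names are assumptions made for illustration.

```python
import math


def steepest_descent(A, b, x, eps=1e-8, max_iter=10_000):
    """Hypothetical helper: steepest descent with exact line search on 0.5 X^T A X - b^T X."""
    for k in range(max_iter):
        g = [sum(aij * xj for aij, xj in zip(row, x)) - bi for row, bi in zip(A, b)]
        if math.sqrt(sum(gi * gi for gi in g)) <= eps:    # convergence judgement
            return x, k
        s = [-gi for gi in g]                              # S_k = -g_k
        As = [sum(aij * sj for aij, sj in zip(row, s)) for row in A]
        alpha = sum(gi * gi for gi in g) / sum(si * asi for si, asi in zip(s, As))  # df/dalpha = 0
        x = [xi + alpha * si for xi, si in zip(x, s)]
    return x, max_iter


def newton_step_2x2(A, b, x):
    """Hypothetical helper: one Newton step with alpha = 1; here the Hessian is the constant A."""
    g = [A[0][0] * x[0] + A[0][1] * x[1] - b[0],
         A[1][0] * x[0] + A[1][1] * x[1] - b[1]]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    # Newton direction S = -A^{-1} g, using the explicit inverse of a 2x2 matrix
    s = [-(A[1][1] * g[0] - A[0][1] * g[1]) / det,
         -(-A[1][0] * g[0] + A[0][0] * g[1]) / det]
    return [x[0] + s[0], x[1] + s[1]]


A = [[2.0, -1.0], [-1.0, 2.0]]
b = [10.0, 4.0]
x_sd, iters = steepest_descent(A, b, [0.0, 0.0])
x_newton = newton_step_2x2(A, b, [0.0, 0.0])
print(x_sd, iters)   # needs many iterations
print(x_newton)      # [8.0, 6.0] after one step
```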

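Quasi-Newton Method

Uses a positive definite matrix to approximate the matrix of second-order partial derivatives of f (the Hessian) or its inverse.

There are two methods to obtain the correction (update) matrix:

DFP: through the iterations, $H$ approaches the inverse of the Hessian matrix.

BFGS (better): through the iterations, $B$ approaches the Hessian matrix itself, with $B = H^{-1}$.

Convergence: superlinear, with order $1 < \beta < 2$.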
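Here is a sketch of BFGS in the inverse form. The matrix $H_k$ (with $H_k = B_k^{-1}$) is updated from the step $s_k = X_{k+1} - X_k$ and the gradient change $y_k = g_{k+1} - g_k$. The sketch makes three assumptions. A simple backtracking line search replaces the exact one-dimensional search. The update is skipped when $s_k^T y_k$ is not safely positive. The helper names and the test function are hypothetical.

```python
import math


def bfgs(f, grad, x, tol=1e-6, max_iter=200):
    """Hypothetical helper: BFGS in inverse form, H_k ~ inverse Hessian (H = B^{-1}), H_0 = I."""
    n = len(x)
    H = [[float(i == j) for j in range(n)] for i in range(n)]
    g = grad(x)
    for k in range(max_iter):
        if math.sqrt(sum(gi * gi for gi in g)) <= tol:
            return x, k
        d = [-sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]   # S_k = -H_k g_k
        # backtracking (Armijo) line search: an assumption replacing the exact 1-D search
        alpha, fx = 1.0, f(x)
        slope = sum(gi * di for gi, di in zip(g, d))
        while alpha > 1e-12 and f([xi + alpha * di for xi, di in zip(x, d)]) > fx + 1e-4 * alpha * slope:
            alpha *= 0.5
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [p - q for p, q in zip(x_new, x)]
        y = [p - q for p, q in zip(g_new, g)]
        sy = sum(p * q for p, q in zip(s, y))
        if sy > 1e-12:                       # curvature condition keeps H positive definite
            Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
            yHy = sum(p * q for p, q in zip(y, Hy))
            for i in range(n):
                for j in range(n):
                    H[i][j] += (sy + yHy) * s[i] * s[j] / sy ** 2 - (Hy[i] * s[j] + s[i] * Hy[j]) / sy
        x, g = x_new, g_new
    return x, max_iter


f = lambda x: x[0] ** 2 + x[1] ** 2 - x[0] * x[1] - 10 * x[0] - 4 * x[1] + 60
grad = lambda x: [2 * x[0] - x[1] - 10, 2 * x[1] - x[0] - 4]
x_min, iters = bfgs(f, grad, [0.0, 0.0])
print(x_min, iters)
```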

Conjugate Gradient Method: two variants, FR (Fletcher-Reeves) and PRP (Polak-Ribière-Polyak).
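The sketch below applies both update rules to a quadratic with exact line search, assuming $f(X) = \tfrac12 X^T A X - b^T X$. On an $n$-dimensional positive definite quadratic the method should terminate in at most $n$ steps, up to rounding. The helper names and the data are illustrative.

```python
def conjugate_gradient(A, b, x, variant="FR", tol=1e-10, max_iter=100):
    """Hypothetical helper: CG on f = 0.5 X^T A X - b^T X with exact line search.

    FR:  beta = g_{k+1}^T g_{k+1} / (g_k^T g_k)
    PRP: beta = g_{k+1}^T (g_{k+1} - g_k) / (g_k^T g_k)"""
    def mv(v):
        return [sum(aij * vj for aij, vj in zip(row, v)) for row in A]

    def dot(u, v):
        return sum(p * q for p, q in zip(u, v))

    g = [ax - bi for ax, bi in zip(mv(x), b)]
    s = [-gi for gi in g]                        # first direction: steepest descent
    for k in range(max_iter):
        if dot(g, g) ** 0.5 <= tol:
            return x, k
        alpha = -dot(g, s) / dot(s, mv(s))       # exact step length on a quadratic
        x = [xi + alpha * si for xi, si in zip(x, s)]
        g_new = [ax - bi for ax, bi in zip(mv(x), b)]
        if variant == "FR":
            beta = dot(g_new, g_new) / dot(g, g)
        else:  # "PRP"
            beta = dot(g_new, [gn - go for gn, go in zip(g_new, g)]) / dot(g, g)
        s = [-gn + beta * si for gn, si in zip(g_new, s)]   # new conjugate direction
        g = g_new
    return x, max_iter


A = [[2.0, -1.0], [-1.0, 2.0]]
b = [10.0, 4.0]
print(conjugate_gradient(A, b, [0.0, 0.0], "FR"))
print(conjugate_gradient(A, b, [0.0, 0.0], "PRP"))
```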

Main approach of these methods: use the information of the first-order and second-order derivatives of the objective function.

Linear Programming

Constraints

Constraint equations ought to be equalities. If inequalities exist, bring in slack variables to turn them into equalities.

Nonnegativity constraints: all variables are nonnegative.

Solutions

Basic solution: satisfies the constraint equations only; it may violate the nonnegativity constraints.

Basic feasible solution: satisfies both the constraint equations and the nonnegativity constraints on the variables.

Optimal solution: the feasible solution that achieves the optimal value.

Basic and non-basic variables

With n variables and m equations (n > m), set n - m variables to zero; these are the non-basic variables. The remaining m variables are the basic variables.

Finding an initial basic feasible solution

If all the constraints are inequalities: introduce slack variables and take them as the basic variables. The slack variables have coefficient 0 in the objective function, and the other coefficients are those of the required objective expression.

If the constraint equations are equalities: introduce artificial variables and minimize an auxiliary objective function equal to the sum of all the artificial variables. When the value of this objective function is zero, a solution has been found. The basic feasible solution is the part without the artificial variables.
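The definitions of basic and basic feasible solutions can be checked by brute force on a tiny problem. Choose $m$ basic variables, set the other $n - m$ to zero, solve the $m \times m$ system, and test nonnegativity. The sketch uses a hypothetical LP: maximize $2x_1 + 3x_2$ subject to $x_1 + x_2 \le 4$, $x_1 + 3x_2 \le 6$, $x \ge 0$, with slack variables $x_3, x_4$. Enumeration grows combinatorially, which is why the simplex method visits only a sequence of adjacent basic feasible solutions.

```python
from fractions import Fraction
from itertools import combinations


def solve_2x2(M, rhs):
    """Cramer's rule for a 2x2 system; returns None if the matrix is singular."""
    (m11, m12), (m21, m22) = M
    det = m11 * m22 - m12 * m21
    if det == 0:
        return None
    return [(rhs[0] * m22 - m12 * rhs[1]) / det, (m11 * rhs[1] - m21 * rhs[0]) / det]


# hypothetical LP in equality form: x1 + x2 + x3 = 4, x1 + 3 x2 + x4 = 6, all x >= 0
A = [[Fraction(1), Fraction(1), Fraction(1), Fraction(0)],
     [Fraction(1), Fraction(3), Fraction(0), Fraction(1)]]
b = [Fraction(4), Fraction(6)]
c = [2, 3, 0, 0]          # objective 2 x1 + 3 x2 (slack coefficients are 0)
n, m = 4, 2

solutions = []
for basis in combinations(range(n), m):           # choose the m basic variables
    xb = solve_2x2([[A[i][j] for j in basis] for i in range(m)], b)
    if xb is None:
        continue
    x = [Fraction(0)] * n                         # the n - m non-basic variables are zero
    for j, value in zip(basis, xb):
        x[j] = value
    feasible = all(v >= 0 for v in x)             # basic feasible solution?
    z = sum(ci * xi for ci, xi in zip(c, x))
    solutions.append((basis, x, feasible, z))
    print(basis, [str(v) for v in x], "feasible" if feasible else "infeasible", z)

best = max((s for s in solutions if s[2]), key=lambda s: s[3])
print("optimal basis:", best[0], "z =", best[3])
```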

The simplex method

Pivot column: the column $k$ with the smallest judgement number.

Pivot row: the row with the smallest quotient $b_i / a_{ik}$, taken over the entries of column $k$ with $a_{ik} > 0$.

The judgement number of a basic variable is always zero. The judgement number of a non-basic variable is computed from a formula.
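The tableau sketch below applies these rules to a minimization problem $\min c^T x$ with $Ax \le b$ and $b \ge 0$, so the slack variables give the initial basis. It uses the judgement number convention $\sigma_j = c_j - c_B^T B^{-1} a_j$, under which a column with $\sigma_j < 0$ can still improve the objective. The notes do not give the formula, so this convention is an assumption. There is no anti-cycling rule, and exact `Fraction` arithmetic avoids rounding. The example is a hypothetical LP: maximize $2x_1 + 3x_2$ subject to $x_1 + x_2 \le 4$ and $x_1 + 3x_2 \le 6$, written as minimizing $-2x_1 - 3x_2$.

```python
from fractions import Fraction


def simplex_min(c, A, b):
    """Hypothetical helper: tableau simplex for min c^T x s.t. A x <= b, x >= 0, b >= 0.

    Judgement number (assumed convention): sigma_j = c_j - c_B^T B^{-1} a_j."""
    m, n = len(A), len(c)
    # tableau rows [A | I | b]; the identity columns are the slack variables
    T = [[Fraction(v) for v in A[i]] + [Fraction(int(i == k)) for k in range(m)] + [Fraction(b[i])]
         for i in range(m)]
    cost = [Fraction(v) for v in c] + [Fraction(0)] * m
    basis = list(range(n, n + m))
    while True:
        # judgement numbers (always zero for basic variables)
        sigma = [cost[j] - sum(cost[basis[i]] * T[i][j] for i in range(m)) for j in range(n + m)]
        k = min(range(n + m), key=lambda j: sigma[j])      # pivot column: smallest judgement number
        if sigma[k] >= 0:
            break                                           # no improving column: optimal
        rows = [i for i in range(m) if T[i][k] > 0]
        if not rows:
            raise ValueError("problem is unbounded")
        r = min(rows, key=lambda i: T[i][-1] / T[i][k])     # pivot row: smallest b/a with a > 0
        piv = T[r][k]
        T[r] = [v / piv for v in T[r]]
        for i in range(m):
            if i != r and T[i][k] != 0:
                factor = T[i][k]
                T[i] = [vi - factor * vr for vi, vr in zip(T[i], T[r])]
        basis[r] = k
    x = [Fraction(0)] * (n + m)
    for i, j in enumerate(basis):
        x[j] = T[i][-1]
    return x[:n], sum(cv * xv for cv, xv in zip(cost, x))


x, value = simplex_min([-2, -3], [[1, 1], [1, 3]], [4, 6])
print([str(v) for v in x], str(value))   # ['3', '1'] -9
```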

Constrained Optimization Methods

Direct methods: the iteration points are always confined to the feasible region, with all the constraints taken into account (Feasible Direction Method).

Indirect methods: the constraints are put into the objective function so that constrained problems are converted to unconstrained problems (Penalty Function Method). Alternatively, nonlinear problems are converted to relatively simple quadratic programming problems (SQP Method, Sequential Quadratic Programming).
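This is a sketch of an exterior penalty function method. It assumes the quadratic penalty $P(X, r) = f(X) + r\,[\max(0, g(X))]^2$ with an increasing sequence of penalty factors $r$. It is applied to a hypothetical problem: minimize $(x_1-3)^2 + (x_2-2)^2$ subject to $g(X) = x_1 + x_2 - 4 \le 0$, whose solution is $(2.5, 1.5)$. Because this $P$ is piecewise quadratic, Newton's method with its exact Hessian serves as the unconstrained solver; a general implementation could plug in any unconstrained method. The helper name is illustrative. The iterates approach the optimum from outside the feasible region.

```python
def penalty_method(r_values=(1, 10, 100, 1000), x=(0.0, 0.0)):
    """Hypothetical helper: exterior penalty for min (x1-3)^2 + (x2-2)^2 s.t. x1 + x2 - 4 <= 0.

    P(x, r) = f(x) + r * max(0, g(x))^2 is minimized for each r with Newton's method."""
    x1, x2 = x
    path = []
    for r in r_values:
        for _ in range(20):
            v = max(0.0, x1 + x2 - 4)          # constraint violation
            a = 1.0 if v > 0 else 0.0          # is the penalty term active?
            g1 = 2 * (x1 - 3) + 2 * r * v      # gradient of P
            g2 = 2 * (x2 - 2) + 2 * r * v
            h11 = h22 = 2 + 2 * r * a          # Hessian of P
            h12 = 2 * r * a
            det = h11 * h22 - h12 * h12
            d1 = (h22 * g1 - h12 * g2) / det   # Newton step H^{-1} g
            d2 = (h11 * g2 - h12 * g1) / det
            x1, x2 = x1 - d1, x2 - d2
            if abs(d1) + abs(d2) < 1e-12:
                break
        path.append((r, x1, x2))               # warm start for the next, larger r
    return path


for r, x1, x2 in penalty_method():
    print(r, round(x1, 5), round(x2, 5), "violation:", round(x1 + x2 - 4, 5))
```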

Kuhn-Tucker Condition

Extremum conditions (ECs) for equality-constrained problems.

ECs for inequality-constrained problems: obtained by introducing slack variables.

Effective and ineffective constraints: a constraint is effective or ineffective depending on whether the investigated point is on the constraint boundary or not.
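For a single effective (active) inequality constraint $g(X^*) = 0$, the Kuhn-Tucker condition requires $\nabla f(X^*) + \lambda \nabla g(X^*) = 0$ with $\lambda \ge 0$. The sketch below solves for $\lambda$ in the least-squares sense and checks both requirements. It uses a hypothetical problem (minimize $(x_1-3)^2 + (x_2-2)^2$ subject to $x_1 + x_2 - 4 \le 0$) at the candidate point $(2.5, 1.5)$. The helper name is illustrative.

```python
import math


def kkt_single_active(grad_f, grad_g, tol=1e-9):
    """Hypothetical helper: check grad f + lam * grad g = 0 with lam >= 0 (one active constraint)."""
    lam = -sum(a * b for a, b in zip(grad_f, grad_g)) / sum(b * b for b in grad_g)
    residual = math.sqrt(sum((a + lam * b) ** 2 for a, b in zip(grad_f, grad_g)))
    return lam, residual <= tol and lam >= -tol


x = (2.5, 1.5)                               # candidate on the boundary x1 + x2 = 4
grad_f = (2 * (x[0] - 3), 2 * (x[1] - 2))    # (-1, -1)
grad_g = (1.0, 1.0)                          # gradient of g = x1 + x2 - 4
print(kkt_single_active(grad_f, grad_g))     # (1.0, True)
```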

