
Prac Res 2nd Quater Notes

Uploaded by JynRama


RESEARCH 2.6.

2.7.

Projective devices

Stoichiometric devices

2.2. Structured

2.3. Semi-structured

1. Focus observation

2. Concealment

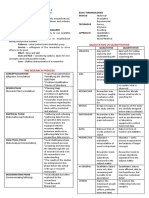

INSTRUMENT Most Frequently Used Data Strengths

3. Duration

Instrument Collection Techniques 1. Good for measuring attitude Types of Observation

-gen. term for researches 1. Documentary analysis & most other content of 1. Structured

-use for measuring device (survey, 2. Interview Schedule interest -uses checklist (data collection

test, questionnaire, etc.) 3. Observation 2. Allow probing by the tool)

-to help distinguish between 4. Physiological Measurement interview -specifies behaviors of

instrumentation, consider that the 5. Psychological Test 3. Can provide in-depth info interest

instrument is the device & 6. Questionnaire 4. Allow good interpretative

instrumentation is the course of validity 2. Unstructured

action (the process of dev., testing 1. Documentary Analysis 5. Very quickly turnaround for -observes things as they happen

& using the device) Use too analyze primary & telephone interview -conducts experiment w/out

-researchers (chooses which type secondary sources 6. Moderately high preconceived ideas about what

of instrument/s to use based on the @ times, data are not measurement validity for will be observed

research question) available/ difficult to locate well-constructed & well-

2 Broad Categories Available mostly: tested interview protocols. 4. Physiological Measures

1. Researcher-Completed (1)churches, (2)schools, collection of physiological

Instrument (3)public/private offices, Weaknesses data from subjects

1.1. Rating Scales (4)hospitals/ community 1. In-person int. are expensive more accurate & objective

1.2. Interview Information gathered tend & time-consuming that any other data-

Schedule/guides to be incomplete & not 2. Perceived anonymity by collection methods

1.3. Tally Sheets definite respondents possible low

1.4. flow chart 3. Data analysis sometimes 5. Psychological Test:

1.5. Performance checklist 2. Interview Schedule time consuming for open- 1. personality inventories

1.6. time-&-motion logs skill of interviewer ended items. -self reported measurements that

1.7. Observation forms determines if the assess the differences of

interviewee is able to 3. Observation personality traits/values of people

2. Subject-Completed express his thoughts clearly observation guide/checklist -gathers info form a person thru

Instrument conducted w/ single person (instrument used) questions/statements that requires

2.1. Questionnaires Focus group interview must be done quietly & responses/reactions

2.2. Self-checklist (conducted w/ gr. of inconspicuous manner so as -EX.: Minnesota Multiphasic

2.3. attitude scales people) to get realistic data Personality Inventory (MMP) & the

2.4. Personality inventories Edwards Personal Preference

2.5. Achievement/aptitude Types of Interview Schedule the ff. should be taken into Schedule (EPPs)

tests 2.1. Unstructured consideration:

2. Projective techniques
- subject is presented w/ a stimulus designed to be ambiguous/vague in meaning
- ex.: Rorschach Inkblot Test & Thematic Apperception Test

6. Questionnaire (Q)
- most commonly used instrument in research
- list of questions about a topic, w/ spaces provided for the response, & intended to be answered by a number of persons
- less expensive
- yields more honest responses
- guarantees confidentiality
- minimizes biases based on question-phrasing mode
- researcher must make sure the Q is valid, reliable & unambiguous (critical point in designing)

3 Types of Questionnaires
1. Closed-ended/structured
2. Open-ended/unstructured
3. Mixture of closed & open

Relationship of RRL to the Questionnaire
- RRL (review of related literature) & studies must have sufficient info & data to enable the researcher to thoroughly understand the variables being investigated in the study
- descriptive info gathered from diff. sources are called indicators for the specific variable & they are used in making sure that the content of the Q is valid
- valid indicator: supported by previous studies done by experts

Types of Question
1. Yes/No Type
- ex.: Do you have the right to refuse the call? Yes/No
2. Recognition Type
- alternative responses are provided & respondents simply choose among the choices
3. Completion Type
- respondents are asked to fill in the blanks w/ the necessary info
4. Coding Type
- numbers are assigned to names, dates & other pertinent data
5. Subjective Type
- respondents are free to give opinions about an issue of concern
6. Combination Type
- the Q is a combination of 2 or more types of questions

VALIDITY & RELIABILITY

Validity
- accuracy of inferences drawn from an assessment
- degree to which the assessment measures what it is intended to measure (does it measure what it is supposed to measure?)

Types of Validity
1. Construct Validity
2. Face Validity
3. Criterion-Related Validity

I. Construct Validity
- the assessment actually measures what it is designed to measure (A is actually A)
- the construct refers to what you're trying to measure (e.g. an IQ test is intended to measure intelligence)
- ex.: you are trying to measure students' knowledge of the solar system. You don't want to be measuring their ability to read complex informational text.
- ex.: this is most commonly an issue with regard to the breadth of standards addressed by an assessment. For example, if you administer an assessment that covers 3 standards, you CANNOT infer from those results whether or not a student is proficient in 7th grade mathematics (because that is NOT what you've assessed).

Types of Construct Validity
1. Convergent Validity
2. Discriminant Validity
3. Known-Group Validity
4. Factorial Validity
5. Hypothesis-Testing Validity

1. Convergent Validity
- there is evidence that the same concept measured in diff. ways yields similar results
- includes 2 diff. tests
- diff. measures of the same concept yield similar results
- ex.: researchers use self-report vs. observation (diff. measures)

2. Discriminant Validity
- there is evidence that 1 concept is diff. from other closely related concepts

3. Known-Group (KG) Validity
- a group w/ an established attribute of the outcome of the construct is compared w/ a group in whom the attribute is not yet established
- ex.: in a survey that used a Questionnaire (Q) to explore depression among 2 groups of patients, those w/ a clinical diagnosis of depression & those w/out, it is expected in KG that the construct of depression in the Q will be scored higher among the patients w/ clinically diagnosed depression than among those w/out the diagnosis

4. Factorial Validity
- empirical extension of content validity
- it validates the contents of the construct employing the statistical model called factor analysis

5. Hypothesis-Testing Validity
- evidence that a research hypothesis about the relationship between the measured concept (variable) & other concepts (variables), derived from a theory, is supported

II. Face Validity
- when an individual (and/or researcher) who is an expert on the research subject reviews the questionnaire (instrument) & concludes that it measures the characteristic/trait of interest

III. Criterion-Related Validity
- assessed if interested in determining the relationship of scores on a test to a specific criterion
- a measure of how well a questionnaire's findings stack up against another instrument/predictor

Types of Criterion-Related Validity
1. Predictive
2. Concurrent

1. Predictive Validity (A predicts B)
- the assessment predicts performance on a future assessment

2. Concurrent Validity (A correlates w/ B)
- the assessment correlates w/ other assessments that measure the same constructs

Valid Inferences
- "validity" is closely tied to the purpose/use of an assessment
- DON'T ASK: "Is this assessment valid?"
- ASK: "Are the inferences I'm making based on this assessment valid for my purpose?"

Evidence-Centered Design (ECD)
- validity is about providing evidence
- ECD boosts validity:
1. What do you want to know?
2. How would you know?
3. What should the assessment look like?

Reliability
- how consistent a measure is of a particular element over a period of time, & between diff. participants
- is synonymous w/ the consistency of a test, survey, observation, or measuring device

4 General Estimators
1. Inter-Rater/Observer Reliability
2. Test-Retest Reliability
3. Parallel-Forms Reliability
4. Internal Consistency Reliability

Inter-Rater/Observer Reliability
- used to assess the degree to which diff. judges/raters agree in their assessment decisions
- useful because human observers will not necessarily interpret answers the same way
- raters may disagree as to how well certain responses/material demonstrate knowledge of the construct/skill being assessed
- uses 2 individuals to mark/rate the scores of a psychometric test; if their scores/ratings are comparable, then inter-rater reliability is confirmed

Test-Retest Reliability
- obtained by administering the same test twice over a period of time to a group of individuals
- the scores from time 1 & time 2 can be correlated in order to evaluate the test for stability over time
- this is the final sub-type & is achieved by giving the same test out at 2 diff. times & gaining the same result each time
- ex.: a test designed to assess student learning in psychology could be given to a group of students twice, w/ the second administration perhaps coming a week after the 1st. The obtained correlation coefficient would indicate the stability of the scores.
- it is measured by having the same respondents complete a survey at 2 different points in time to see how stable the responses are. In general, correlation coefficient (r) values are considered good if r > 0.70

Parallel-Forms Reliability
- obtained by administering diff. versions of an assessment tool (both versions must contain items that probe the same construct, skill, knowledge base, etc.) to the same group of individuals
- the scores from the 2 versions can be correlated in order to evaluate the consistency of results across alternate versions
- ex.: to evaluate the reliability of a critical thinking assessment, you might create a large set of items that all pertain to critical thinking & then randomly split the questions up into 2 sets, which would represent the parallel forms

Internal Consistency Reliability
- used to evaluate the degree to which diff. test items that probe the same construct produce similar results
- this looks at the items w/in the test, to assess the internal reliability between items
- ex.: a personality test may seem to have 2 or more questions that are asking the same thing. If the participants answer these similarly, then the internal consistency reliability is assumed correct.

2 Types of Internal Consistency Reliability
1. Average inter-item correlation
2. Split-half reliability

Average inter-item correlation
- obtained by taking all the items on the test that probe the same construct (e.g. reading comprehension), determining the correlation coefficient for each pair of items, & finally taking the average of all these correlation coefficients
- this final step yields the average inter-item correlation

Split-half reliability
- process: "splitting in half" all the items of a test that are intended to probe the same area of knowledge (e.g. WW II) in order to form 2 "sets" of items
- the entire test is administered to a group of individuals, the total score for each "set" is computed, & finally the split-half reliability is obtained by determining the correlation between the 2 total "set" scores
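The correlation-based estimators described in these notes (test-retest, average inter-item correlation, split-half) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names and all scores below are hypothetical, Pearson's r is assumed as the correlation coefficient, and the split-half version correlates the two half totals directly, exactly as the notes describe, without any further correction.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_inter_item(scores):
    """Average of r over every pair of items; scores[respondent][item]."""
    items = list(zip(*scores))            # one tuple of scores per item
    pairs = list(combinations(items, 2))  # every pair of items
    return sum(pearson_r(a, b) for a, b in pairs) / len(pairs)


def split_half(scores):
    """Correlate each respondent's total on the two halves of the test."""
    half_a = [sum(row[0::2]) for row in scores]  # items 1, 3, 5, ...
    half_b = [sum(row[1::2]) for row in scores]  # items 2, 4, 6, ...
    return pearson_r(half_a, half_b)


# Hypothetical test-retest data: the same 5 students, one week apart.
time_1 = [10, 12, 15, 18, 20]
time_2 = [11, 13, 14, 19, 21]
test_retest_r = pearson_r(time_1, time_2)

# Hypothetical item scores: 5 respondents x 4 items probing one construct.
item_scores = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 3, 4],
]

print("test-retest r:", round(test_retest_r, 3))
print("average inter-item r:", round(average_inter_item(item_scores), 3))
print("split-half r:", round(split_half(item_scores), 3))
```

Under the notes' rule of thumb, a test-retest value above 0.70 would be read as acceptable stability.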

POPULATION, PARAMETER & SAMPLE

Population
- composed of persons/objects that possess some common characteristics that are of interest to the researcher

Parameter
- numeric/other measurable characteristic forming 1 of a set that defines a system
- sets the condition of its operation

Sample
- subset of the entire population or a group of individuals
- represents the population
- serves as the respondents of the study

Methodology
- is the systematic, theoretical analysis of the methods applied to a field of study

Validity
- the degree to which the research instrument gauges what it is supposed to measure

Reliability
- extent to which the measurement tool provides consistent outcomes if the measurement is repeatedly performed

Threats to Internal Validity
1. History
2. Maturation
3. Testing
4. Instrumentation
5. Regression towards the mean
6. Selection
7. Mortality

History
- is there something about the experience & history of 1 group that could lead to differences?

Maturation
- does natural development influence the association?

Testing
- does actual participation in the study change the behavior/responses?

Instrumentation
- what happens if the instruments used change during the course of a study?
- what if different groups of participants interpret your questions differently?

Regression towards the mean
- if you start w/ extreme groups, they are likely to be less extreme at another time point

Selection
- are participants in your study somehow diff. from those who are not?

Mortality
- those who drop out of your study could be qualitatively different from those who remain

PARTICIPANTS OF THE STUDY

Ways to Determine Sample Size

Factors to consider in determining sample sizes:
1. Homogeneity of the population
- the higher the degree of homogeneity (i.e. the lower the variation) w/in the population, the smaller the sample size that can be utilized
2. Degree of precision desired by the researcher
- a larger sample size will result in greater precision/accuracy of results
3. Types of sampling procedure
- probability sampling (by chance) utilizes smaller sample sizes than non-probability sampling (by choice)
4. The use of a formula
a. Slovin's Formula

$$n = \frac{N}{1 + Ne^{2}}$$

b. Calmorin's Formula

$$Ss = \frac{NV + \left[(Se)^{2} \times (1 - p)\right]}{NSe + \left[V^{2} \times p(1 - p)\right]}$$

Sampling
- a way of getting a representative portion of the target population
- needed regardless of the research design for practical reasons, especially when the population is large
- a TOTAL population of 100 or less may not need to be sampled
- a population greater than 100 needs sampling for desirable effectiveness, efficiency & economy in data gathering

Research Design
1. Descriptive
2. Experimental

2 Strengths (Sampling)
1. Time, money & effort are minimized
- the number of respondents, subjects/items to be studied becomes small yet they represent the population
- data collection, analysis & interpretation are lessened
2. Sampling gives more comprehensive information
- w/ a small sample representing a big population, a thorough study yields results that give more comprehensive info that allows generalization & conclusion

5 Weaknesses (Sampling)
1. Due to the limited number of data sources, detailed sub-classification must be prepared w/ utmost care
2. An incorrect sampling design/incorrectly following the sampling plan will obtain results that are misleading
3. Sampling requires an expert to conduct the study in an area, otherwise the results obtained will be erroneous
4. Characteristics to be observed may occur rarely in the population (ex.: teachers w/ over 30 yrs. of teaching experience)
5. Complicated sampling plans are laborious to prepare

2 General Types of Sampling
1. Probability Sampling/Scientific Sampling
- gives every member of the population an equal chance to be selected as part of the study
2. Non-Probability Sampling/Non-Scientific Sampling
- opposite of probability sampling
- the researcher's judgment determines the choice of sample

5 Types of Probability Sampling/Scientific Sampling
1. Simple Random Sampling
2. Systematic Sampling
3. Stratified Random Sampling
4. Cluster Sampling
5. Multi-stage Sampling

Simple Random Sampling
- all members of the population are given a chance to be selected
- selection is done by drawing lots/the use of a table of random numbers

Get a list of the total population, then:
1. Cut pieces of paper to small sizes (1x1 in) that can be rolled
2. Put a number on each piece of paper corresponding to each number of the total population
3. Roll each numbered paper and put them in a box
4. Shake the box well to give an equal chance for every number to be chosen as a sample
5. Pick out 1 rolled paper at a time & unfold it
6. Record the number of the unrolled paper
7. Repeat picking out until the desired number of samples is completed

Systematic Random Sampling
- chooses every nth name of a population as the sample
- it entails using a list of the population & deciding how the nth name is chosen
- ex.: the population of students in 1 school is listed alphabetically & numbered consecutively. From the list, the sample to be taken is the name that falls every nth in the list until the desired number of samples is completed

Sampling Scheme
- used when there is a ready list of the total population
- the steps:
o get the list of the total population
o divide the total population by the desired sample size to get the sampling interval

Stratified Random Sampling
- dividing the population into strata & drawing the sample at random from each division

Used when there is a ready list of the population whose members are categorized, e.g. as students, farmers, and fishermen. The steps to follow are:
1. Get the list of the total population
2. Decide on the sampling size/the actual percentage of the population to be considered as sample
3. Get an equal proportion of a sample from each group

Example:
500 pupils x .20 = 100
200 teachers x .20 = 40
150 parents x .20 = 30
Total Sample = 170
*get the 170 respondents by simple random sampling/systematic sampling

Cluster Sampling
- uses a group as sample rather than an individual
- ex.: the population may be the parents in 1 school district. The parents may be grouped by barangay w/in the district or by those in the east, west, north, & south of the district.
- differs from stratified sampling, which includes all the strata in the sampling process

Used when the population is HOMOGENEOUS but scattered geographically in all parts of the country & there is no need to include all in the sampling. Steps in the SCHEME:
1. Decide on the sample size. Select the geographical area that will serve as the cluster
2. Select the sample w/in the area or cluster by systematic/stratified sampling depending on the availability of the info

Multistage Sampling
- done by stages: 2, 3, 4 as the case may be, depending on the number of stages in which sampling is made
- the population is grouped by hierarchy, from which sampling is done in each stage
- ex.: the population to be studied consists of the personnel in the public elementary schools in the country. Samples have to be taken from the national, regional, provincial, district, & school levels.

Steps followed in this scheme:
1. Decide on the level of analysis to be studied, such as national, regional, provincial, district, barangay, etc.
2. Select the sample at the next level. ex.: if the survey is provincial, the survey should start at the municipal level, then district, then barangay, etc.

Example:
1st level. 3 municipalities/province
2nd level. 2 districts/municipality
3rd level. 4 barangays/district
4th level. 100 respondents/barangay
*use any of the sampling techniques given earlier in arriving at the desired sample number

3 Types of Non-Probability Sampling/Non-Scientific Sampling
1. Purposive Sampling
2. Incidental/Accidental Sampling
3. Quota Sampling

Purposive Sampling
- samples are chosen based on the judgment of the researcher, who determines an individual as a sample for possessing special characteristics of some sort

Incidental/Accidental Sampling
- this design is used to take samples who may be available/the nearest at the time of data gathering

Quota Sampling
- popular for opinion research
- made by looking for individuals that possess the required characteristics/prescribed criteria of the research

FINDING THE SAMPLE SIZE

Slovin's Formula
- used to calculate the sample size (n) given the population size (N) & the margin of error (e)
- random sampling technique formula to estimate the sampling size

$$n = \frac{N}{1 + Ne^{2}}$$

Example:
The population is 8,000 at a 2% margin of error (98% accuracy).

$$n = \frac{N}{1 + Ne^{2}} = \frac{8{,}000}{1 + 8{,}000(0.02)^{2}}$$

$$= \frac{8{,}000}{1 + 8{,}000(0.0004)}$$

$$= \frac{8{,}000}{1 + 3.2}$$

$$= \frac{8{,}000}{4.2}$$

$$\approx 1{,}905$$
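As a rough illustration of how the sample-size step and the selection step fit together, the sketch below computes n with Slovin's formula, draws that many names by systematic sampling using the sampling interval (total population divided by desired sample size), and applies a fixed percentage to each stratum. The function names, the roster of made-up student IDs, and the choice to round the Slovin result up to a whole respondent are assumptions for illustration, not part of the notes.

```python
import math
import random


def slovin_sample_size(population_size, margin_of_error):
    """Slovin's formula n = N / (1 + N * e^2), rounded up (an assumption)."""
    n = population_size / (1 + population_size * margin_of_error ** 2)
    return math.ceil(n)


def systematic_sample(population, sample_size, rng=random):
    """Take every k-th member after a random start, where k = N // n."""
    if not 0 < sample_size <= len(population):
        raise ValueError("sample_size must be between 1 and the population size")
    interval = len(population) // sample_size  # sampling interval k
    start = rng.randrange(interval)            # random start inside the first interval
    return population[start::interval][:sample_size]


def proportional_allocation(strata_sizes, fraction):
    """Take the same fraction of every stratum (e.g. 20% of each group)."""
    return {name: round(size * fraction) for name, size in strata_sizes.items()}


# Slovin's formula with N = 8,000 and e = 0.02, as in the worked example.
n = slovin_sample_size(8000, 0.02)

# Hypothetical numbered roster standing in for the alphabetical list.
roster = [f"student_{i:04d}" for i in range(1, 8001)]
sample = systematic_sample(roster, n, random.Random(0))

# Stratified example: the same 20% taken from each group.
allocation = proportional_allocation(
    {"pupils": 500, "teachers": 200, "parents": 150}, 0.20
)

print("Slovin sample size:", n)
print("names drawn:", len(sample))
print("per-stratum sample:", allocation)
```

Because the interval is rounded down to a whole number, the sketch stops once n names have been drawn rather than walking the whole list.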

Calmorin's Formula
- for a population of more than 100, sampling is a must
- follows the formula for scientific sampling, illustrations & examples (Calmorin & Calmorin, 1995)

$$Ss = \frac{NV + \left[(Se)^{2} \times (1 - p)\right]}{NSe + \left[V^{2} \times p(1 - p)\right]}$$

Where:
Ss = sample size
N = total no. of population
V = standard value (2.58) at the 1% level of probability w/ 0.99 reliability
Se = sampling error
p = largest possible proportion (0.50)

Example:
The total population is 500, w/ a standard value of 2.58 at the 1% level of probability & 99% reliability. The sampling error is 1% (0.01) & the proportion of the target population is 50% (0.50).

Given:
N = 500
V = 2.58
Se = 0.01
p = 0.50

$$Ss = \frac{NV + \left[(Se)^{2} \times (1 - p)\right]}{NSe + \left[V^{2} \times p(1 - p)\right]}$$

$$Ss = \frac{500(2.58) + \left[(0.01)^{2} \times (1 - 0.5)\right]}{500(0.01) + \left[(2.58)^{2} \times 0.5(1 - 0.5)\right]}$$

$$Ss = \frac{1290.00005}{6.6641} \approx 193.57$$

Rounded up, this gives a sample of about 194 respondents.
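The same arithmetic can be checked with a short Python sketch of Calmorin's formula. The function name and the default arguments (taken from the worked example) are illustrative assumptions, as is rounding the result up to a whole respondent.

```python
import math


def calmorin_sample_size(N, V=2.58, Se=0.01, p=0.50):
    """Ss = (N*V + Se^2 * (1 - p)) / (N*Se + V^2 * p * (1 - p))."""
    numerator = N * V + (Se ** 2) * (1 - p)
    denominator = N * Se + (V ** 2) * p * (1 - p)
    return numerator / denominator


ss = calmorin_sample_size(500)  # N = 500 with the example's V, Se and p
respondents = math.ceil(ss)     # rounding up is an assumption of this sketch

print("Ss:", round(ss, 2))
print("respondents:", respondents)
```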

Potrebbero piacerti anche

- Psychological Assessment NotesDocumento8 paginePsychological Assessment NotesArtemis VousmevoyezNessuna valutazione finora

- What To Do Following A Workplace AccidentDocumento5 pagineWhat To Do Following A Workplace AccidentMona DeldarNessuna valutazione finora

- Prelims Practical Research Grade 12Documento11 paginePrelims Practical Research Grade 12Jomes Aunzo100% (1)

- Cemco T80Documento140 pagineCemco T80Eduardo Ariel Bernal100% (3)

- DLL PR 2 Week 3Documento3 pagineDLL PR 2 Week 3RJ Fernandez75% (4)

- Study Guide for Practical Statistics for EducatorsDa EverandStudy Guide for Practical Statistics for EducatorsValutazione: 4 su 5 stelle4/5 (1)

- Geology Harn v1 2Documento17 pagineGeology Harn v1 2vze100% (1)

- Iso 9227Documento13 pagineIso 9227Raj Kumar100% (6)

- Criminological Research 1Documento3 pagineCriminological Research 1AJ Layug83% (6)

- Prof. Madhavan - Ancient Wisdom of HealthDocumento25 pagineProf. Madhavan - Ancient Wisdom of HealthProf. Madhavan100% (2)

- Assignment On Tools For Data Collection by Kamini23Documento6 pagineAssignment On Tools For Data Collection by Kamini23kamini Choudhary100% (1)

- PPC Production PlantDocumento106 paginePPC Production PlantAljay Neeson Imperial100% (1)

- PR2 HeDocumento12 paginePR2 HeJay-r MatibagNessuna valutazione finora

- Department of Education Tnchs - Senior High SchoolDocumento9 pagineDepartment of Education Tnchs - Senior High SchoolCatherine Sosa MoralesNessuna valutazione finora

- Me N Mine Science X Ist TermDocumento101 pagineMe N Mine Science X Ist Termneelanshujain68% (19)

- DLL PR 2 Week 3Documento3 pagineDLL PR 2 Week 3RJ Fernandez100% (1)

- Steps in Research ProcessDocumento8 pagineSteps in Research ProcessSkye M. PetersNessuna valutazione finora

- Research II NotesDocumento14 pagineResearch II NotesCarlaNessuna valutazione finora

- Respondent (Random) Respondent (Nonrandom) : Systematically Collect DataDocumento3 pagineRespondent (Random) Respondent (Nonrandom) : Systematically Collect DataJasmine Joy LopezNessuna valutazione finora

- Lesson 11Documento4 pagineLesson 11Rein JoshuaNessuna valutazione finora

- PR2 ReviewerDocumento3 paginePR2 ReviewerJhon Vincent Draug PosadasNessuna valutazione finora

- Research Instruments: LessonDocumento4 pagineResearch Instruments: LessonROSE ANN SAGUROTNessuna valutazione finora

- SYLLAB - 01management Accounting ResearchDocumento5 pagineSYLLAB - 01management Accounting ResearchEfrenNessuna valutazione finora

- Practical Research ReviewerDocumento4 paginePractical Research ReviewerGenne kayeNessuna valutazione finora

- FIELD METHODS Midterm ReviewerDocumento3 pagineFIELD METHODS Midterm ReviewerVallada, FebroseNessuna valutazione finora

- Overview-Psy AssDocumento7 pagineOverview-Psy AssPanjeline V. MarianoNessuna valutazione finora

- Unit 2 ObservationDocumento10 pagineUnit 2 ObservationDhruv ShahNessuna valutazione finora

- Lesson-2 ResearchDocumento3 pagineLesson-2 ResearchMary Carmielyne PelicanoNessuna valutazione finora

- Collects Data Using Appropriately Instrument 20231202 123405 0000Documento9 pagineCollects Data Using Appropriately Instrument 20231202 123405 0000Jennelyn JacintoNessuna valutazione finora

- Reviewer PR2 Part 1Documento3 pagineReviewer PR2 Part 1Maureen Dayo100% (1)

- Qualitative Vs QuantitativeDocumento3 pagineQualitative Vs QuantitativePrincess Anne RoldaNessuna valutazione finora

- Writing The Research Methodolo GyDocumento17 pagineWriting The Research Methodolo GyAibie AlmoradoNessuna valutazione finora

- Practical Research 1Documento4 paginePractical Research 1nicole corpuzNessuna valutazione finora

- Nursing Research NotesDocumento12 pagineNursing Research NotesIris MambuayNessuna valutazione finora

- Semifinal ResearchDocumento8 pagineSemifinal Researchvaldez.adler.lNessuna valutazione finora

- University of Cebu - College of Nursing Nursing Research 1 (NCM 111)Documento6 pagineUniversity of Cebu - College of Nursing Nursing Research 1 (NCM 111)Katriona IntingNessuna valutazione finora

- DLL Week 2Documento3 pagineDLL Week 2Hazel VelosoNessuna valutazione finora

- Grade 8 - Pasteur: Sigmund Adrian E. Alaba & Karl TumulakDocumento2 pagineGrade 8 - Pasteur: Sigmund Adrian E. Alaba & Karl TumulakMalote Elimanco AlabaNessuna valutazione finora

- UNIT III Collecting Data July 1Documento13 pagineUNIT III Collecting Data July 1michael sto domingoNessuna valutazione finora

- Practical Research (G11 STEM) (Qualitative)Documento5 paginePractical Research (G11 STEM) (Qualitative)Zakari SatoNessuna valutazione finora

- 1 Periodic ReviewerDocumento10 pagine1 Periodic ReviewerBatnoy FransyyyNessuna valutazione finora

- Practical Research 1 ReviewerDocumento13 paginePractical Research 1 Reviewerjamifatie21Nessuna valutazione finora

- Writing The Research Paperchapter 3Documento26 pagineWriting The Research Paperchapter 3angel annNessuna valutazione finora

- Nursing Research Data CollectionDocumento220 pagineNursing Research Data Collectiondr.anu Rk100% (2)

- Research Instrument: Dr. Eunice B. Custodio PhilippinesDocumento14 pagineResearch Instrument: Dr. Eunice B. Custodio PhilippinesJona MempinNessuna valutazione finora

- BACC3 BR Business Research 1 Module Six 6Documento10 pagineBACC3 BR Business Research 1 Module Six 6Blackwolf SocietyNessuna valutazione finora

- Eapp Reviewer Q2 Melcs5 7Documento4 pagineEapp Reviewer Q2 Melcs5 7LUJILLE MANANSALANessuna valutazione finora

- Practical Research Module 1 3 Melvin R. Meneses 12 STEM SystematicDocumento29 paginePractical Research Module 1 3 Melvin R. Meneses 12 STEM SystematicJasmine CorpuzNessuna valutazione finora

- AGRICDocumento3 pagineAGRIClayla landNessuna valutazione finora

- A. Probability Sampling MethodsDocumento6 pagineA. Probability Sampling MethodsYmon TuallaNessuna valutazione finora

- Untitled DocumentDocumento6 pagineUntitled DocumentElyza Chloe AlamagNessuna valutazione finora

- K To 12 Senior High School - Inquiries, Investigations and ImmersionDocumento2 pagineK To 12 Senior High School - Inquiries, Investigations and ImmersionMariel CarabuenaNessuna valutazione finora

- NOTES S1Q1 Practical Research 2Documento5 pagineNOTES S1Q1 Practical Research 2Stephen Carl EsguerraNessuna valutazione finora

- Scientific Method On ResearchDocumento2 pagineScientific Method On Researchsophia chuaNessuna valutazione finora

- Psy Ass Midterm ReviewerDocumento9 paginePsy Ass Midterm ReviewerMARIANO, AIRA MAE A.Nessuna valutazione finora

- Psy Ass Midterm ReviewerrDocumento9 paginePsy Ass Midterm ReviewerrMARIANO, AIRA MAE A.Nessuna valutazione finora

- Q1 PR2 Notes 2Documento14 pagineQ1 PR2 Notes 2ericka khimNessuna valutazione finora

- Reviewer in PR2Documento1 paginaReviewer in PR2Pangilinan FaithNessuna valutazione finora

- See Pages 4 and 5 For Broader Description, and ExamplesDocumento5 pagineSee Pages 4 and 5 For Broader Description, and ExamplesDnnlyn CstllNessuna valutazione finora

- Technological Institute of The PhilippinesDocumento3 pagineTechnological Institute of The PhilippinesBrent FabialaNessuna valutazione finora

- Reviewer EDUC 7Documento4 pagineReviewer EDUC 7Albert EuclidNessuna valutazione finora

- PR1 Q4 WK3 4 WorktextDocumento3 paginePR1 Q4 WK3 4 WorktextMONIQUE LEMENTENessuna valutazione finora

- Lesson 3 Assessing The Curriculum Tools To Assess CurriculumDocumento2 pagineLesson 3 Assessing The Curriculum Tools To Assess Curriculumcrisselda chavezNessuna valutazione finora

- Validated MatrixDocumento8 pagineValidated MatrixAndres MatawaranNessuna valutazione finora

- Daily Lesson Log For 2nd Qtr. Practical ResearchDocumento10 pagineDaily Lesson Log For 2nd Qtr. Practical Researchrosalvie danteNessuna valutazione finora

- PR 1 (Take Notes)Documento4 paginePR 1 (Take Notes)Sorry ha Ganito lang aqNessuna valutazione finora

- AERO241 Example 10Documento4 pagineAERO241 Example 10Eunice CameroNessuna valutazione finora

- Geography - Development (Rural - Urban Settlement)Documento32 pagineGeography - Development (Rural - Urban Settlement)jasmine le rouxNessuna valutazione finora

- Distress Manual PDFDocumento51 pagineDistress Manual PDFEIRINI ZIGKIRIADOUNessuna valutazione finora

- NCR RepairDocumento4 pagineNCR RepairPanruti S SathiyavendhanNessuna valutazione finora

- CFPB Discount Points Guidence PDFDocumento3 pagineCFPB Discount Points Guidence PDFdzabranNessuna valutazione finora

- Lesson 49Documento2 pagineLesson 49Андрій ХомишакNessuna valutazione finora

- Me3391-Engineering Thermodynamics-805217166-Important Question For Engineering ThermodynamicsDocumento10 pagineMe3391-Engineering Thermodynamics-805217166-Important Question For Engineering ThermodynamicsRamakrishnan NNessuna valutazione finora

- OKRA Standards For UKDocumento8 pagineOKRA Standards For UKabc111007100% (2)

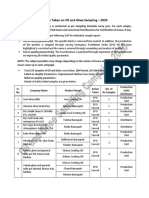

- Action Taken On Oil and Ghee Sampling - 2020Documento2 pagineAction Taken On Oil and Ghee Sampling - 2020Khalil BhattiNessuna valutazione finora

- L04-課文單片填空 (題目) (Day of the Dead)Documento3 pagineL04-課文單片填空 (題目) (Day of the Dead)1020239korrnellNessuna valutazione finora

- Hydrolysis and Fermentation of Sweetpotatoes For Production of Fermentable Sugars and EthanolDocumento11 pagineHydrolysis and Fermentation of Sweetpotatoes For Production of Fermentable Sugars and Ethanolkelly betancurNessuna valutazione finora

- Butt Weld Cap Dimension - Penn MachineDocumento1 paginaButt Weld Cap Dimension - Penn MachineEHT pipeNessuna valutazione finora

- Pyq of KTGDocumento8 paginePyq of KTG18A Kashish PatelNessuna valutazione finora

- Variance AnalysisDocumento22 pagineVariance AnalysisFrederick GbliNessuna valutazione finora

- Assignment On Inservice Education Sub: Community Health NursingDocumento17 pagineAssignment On Inservice Education Sub: Community Health NursingPrity DeviNessuna valutazione finora

- Aplikasi Metode Geomagnet Dalam Eksplorasi Panas BumiDocumento10 pagineAplikasi Metode Geomagnet Dalam Eksplorasi Panas Bumijalu sri nugrahaNessuna valutazione finora

- Dissertation Topics Forensic BiologyDocumento7 pagineDissertation Topics Forensic BiologyHelpMeWriteMyPaperPortSaintLucie100% (1)

- An Energy Saving Guide For Plastic Injection Molding MachinesDocumento16 pagineAn Energy Saving Guide For Plastic Injection Molding MachinesStefania LadinoNessuna valutazione finora

- Carolyn Green Release FinalDocumento3 pagineCarolyn Green Release FinalAlex MilesNessuna valutazione finora

- Nodular Goiter Concept MapDocumento5 pagineNodular Goiter Concept MapAllene PaderangaNessuna valutazione finora

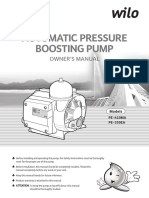

- Wilo Water PumpDocumento16 pagineWilo Water PumpThit SarNessuna valutazione finora

- Remote Control Unit Manual BookDocumento21 pagineRemote Control Unit Manual BookIgor Ungur100% (1)

- Tri-Partite Agreement AssociationDocumento9 pagineTri-Partite Agreement AssociationThiyagarjanNessuna valutazione finora