EMC INTERNAL USE ONLY

PERFORMANCE GUIDE

EMC RecoverPoint 5.0

Advanced Performance Guide

P/N 302-003-894

REV 01

April 2017

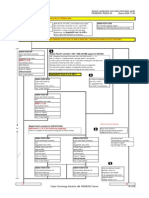

This document contains information on these topics:

Revision History

Introduction

About this document

Definitions

Related documentation

RecoverPoint Performance Fundamentals

Test Environment

Configuration

I/O Patterns

Response time

Workload

Communications

Communications medium and protocol

Bandwidth

Latency and packet loss

Journal

Best practices in provisioning journals

Additional best practices for journals on Symmetrix

Journal compression

Security level

Multi-cluster and multi-copy configuration

RecoverPoint connectivity to the storage array

RPA to storage array multipathing

Splitter to RPA multipathing

VMAX V2 splitter port considerations

vRPA

vRPA resources

Scaling considerations

Deployment considerations

Best practice for synchronous replication

Snap-based replication

Added response time

Snap sizes

Journal size and protection window

RPO

Max IOPS

Frequency of RecoverPoint bookmarks

Additional considerations and best practices

SCSI UNMAP command in VPLEX

MetroPoint

IOPS and throughput

Added response time

Deployment considerations

Performance Test Results

VPLEX splitter test

Configuration

Performance results

VMAX splitter test

Configuration

Performance results

Unity splitter test

Configuration

Performance results

VNX splitter test with physical RPAs

Configuration

VNX splitter test with virtual RPAs

Configuration

Performance results

Performance Tools

Copyright © 2017 Dell Inc. or its subsidiaries. All rights reserved.

Published April 2017.

Dell believes the information in this publication is accurate as of its publication

date. The information is subject to change without notice.

The information in this publication is provided “as is”. Dell makes no

representations or warranties of any kind with respect to the information in this

publication, and specifically disclaims implied warranties of merchantability or

fitness for a particular purpose. Use, copying, and distribution of any Dell

software described in this publication requires an applicable software license.

Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries.

Other trademarks may be the property of their respective owners.

Published in the USA.

Revision History

The following table presents the revision history of this document:

| Revision | Date | Description |
|---|---|---|
| 01 | April 2017 | First publication. |

Introduction

About this document

This document provides extensive information on the performance capabilities of

RecoverPoint, and performance considerations for building and configuring a

RecoverPoint system.

It assumes that you are familiar with the RecoverPoint product, and have a basic

knowledge of storage technologies and their respective performance

characteristics.

In addition, before using this document, you should be familiar with basic

RecoverPoint performance capabilities, as described in the EMC RecoverPoint

5.0 Performance Guide. If you are not responsible for the detailed specification

of your RecoverPoint system, that document may provide all the information that

you need.

This guide focuses on major use cases and common questions; however, given

the complexity of real-world environments, it cannot cover all of the possible

configurations and scenarios. If, after studying this guide, you still need additional

information, consult RPSPEED.

Definitions

Throughput — volume of incoming writes, normally expressed in megabytes per

second (MB/s).

IOPS — number of incoming writes, in I/Os per second.

Sustained performance — maximum replication rate that can be sustained over

an extended time, and which maintains the required RPO and RTO without

entering a highload state.

Distribution — process by which the replicated data in the copy journal is written

to the copy storage. This process is CPU-intensive and I/O-intensive.

Protection window — how far in time the copy image can be rolled back.

Primary RPA — the preferred RPA for replicating a given consistency group (CG)

Highload — a system state that occurs during replication when RPA resources at

the production cluster are insufficient.

Related documentation

The following related documents are available for download from EMC Online

Support:

EMC RecoverPoint 5.0 Performance Guide

EMC RecoverPoint 5.0 Release Notes

EMC RecoverPoint 5.0 Administrator’s Guide

EMC RecoverPoint vRPA Technical Notes

EMC RecoverPoint Detecting Bottlenecks Technical Notes

EMC RecoverPoint Deploying with Symmetrix Arrays and Splitter Technical

Notes

EMC RecoverPoint Provisioning and Sizing Technical Notes

EMC RecoverPoint Deploying with VPLEX Technical Notes

EMC RecoverPoint 5.0 Security Configuration Guide

RecoverPoint Performance Fundamentals

Test Environment

Configuration

The following configurations apply to all the tests that are described in this

document, unless otherwise specified:

RPAs are running RecoverPoint version rel5.0_d.205.

All available ports on the RPA are connected to the storage.

Physical RPAs are all Gen6.

The link between RPA clusters at the two sites is 1 Gb Ethernet, 10 Gb Ethernet,

8 Gb FC, or 16 Gb FC, all with a round trip time of 0 ms.

The values obtained after moving to three-phase distribution are considered

to be the maximum sustainable values. For more information on three-phase

distribution, refer to the EMC RecoverPoint 5.0 Product Guide.

I/Os are performed to all of the available replication volumes, with one

concurrent outstanding I/O per device.

Tests were conducted with the RPA communication security level set to

“Accessible”.

I/O Patterns

IOPS are measured with 4K I/O blocks.

Throughput is measured with 64K I/O blocks.

Except in the application pattern tests, the I/O pattern that is generated is

100% random write cache hit.

The data is generated with a compressibility ratio of 2; that is, the RPA can

compress the data to half of its initial size if compression is enabled.

Response time

All host I/Os pass through the splitter: read I/Os are immediately passed to the

designated devices, while write I/Os are intercepted by the splitter. From the

splitter, a write I/O is sent first to the primary RPA. Once it is acknowledged by the

RPA, it is passed to the designated device, and only then is it acknowledged to

the host.

In asynchronous replication, the primary RPA acknowledges write I/Os

immediately upon receiving them in its memory. However, even in this case

there may be an added response time to every write I/O, due to any of the

following factors:

Primary RPA hardware — stronger RPAs may respond faster.

Load on primary RPA for the production copy — higher load may induce

longer response time.

Communications protocol between splitter and RPA (FC or iSCSI), and size of

the I/O — these determine the number of round trips needed for the I/O to

pass from the splitter to the RPA.

Splitter type

In synchronous replication, the primary RPA acknowledges write I/Os only after

they are received and acknowledged by its peer RPA at the remote cluster. The

remote RPA acknowledges a write immediately when it reaches its memory.

Hence, in synchronous replication, response time depends on all of the factors

listed for asynchronous replication, together with the following factors:

Remote peer RPA hardware — stronger RPAs may respond faster.

Load on the RPA for the remote copy — higher load may induce longer

response time.

Communications protocol between the RPA clusters at the two sites (FC or

IP), and size of the I/O — these determine the number of round trips needed

for the I/O to pass between the clusters.

Because added response time depends on all of these factors, it is presented in the

“Performance Test Results” section for several example environments.

RecoverPoint added response time for distributed consistency groups is the same

as for regular consistency groups.

RecoverPoint added response time for write I/Os is typically higher for

synchronous replication than asynchronous replication. However, for a multi-user

application, although each user’s transaction experiences a delay, the overall

impact on performance is minor.

Dynamic sync mode replication can be assigned to a group that generally

requires synchronous replication, but which can be switched to asynchronous

replication at peak times to avoid excessive application delay. For this

replication mode, the user defines thresholds for the latency and/or the

throughput between RPA clusters. When any threshold is reached, replication

over the link automatically switches to asynchronous mode. When values are

once again below the thresholds, replication automatically switches back to

synchronous mode.
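The threshold behavior described above can be sketched as follows. This is a minimal illustration, not RecoverPoint's implementation: the class name, field names, and the use of a single switch-over value per metric are assumptions made for the example, and the real product may evaluate thresholds differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DynamicSyncThresholds:
    """Hypothetical container for the user-defined limits (None = not set)."""
    max_latency_ms: Optional[float] = None
    max_throughput_mbps: Optional[float] = None


def select_mode(latency_ms: float, throughput_mbps: float,
                thresholds: DynamicSyncThresholds) -> str:
    """Return 'async' when any defined threshold is reached, else 'sync'."""
    latency_hit = (thresholds.max_latency_ms is not None
                   and latency_ms >= thresholds.max_latency_ms)
    throughput_hit = (thresholds.max_throughput_mbps is not None
                      and throughput_mbps >= thresholds.max_throughput_mbps)
    return "async" if (latency_hit or throughput_hit) else "sync"


limits = DynamicSyncThresholds(max_latency_ms=5.0, max_throughput_mbps=100.0)
for sample in [(2.0, 50.0), (6.0, 50.0), (2.0, 120.0), (2.0, 40.0)]:
    print(sample, select_mode(*sample, limits))
```

Re-evaluating the same function on every sample means replication returns to synchronous mode as soon as both values fall back below their thresholds, which mirrors the description in the text.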

It is RecoverPoint best practice to define synchronous and asynchronous

consistency groups on different RPAs.

Workload

RecoverPoint replicates only write I/Os because only these I/Os change the state

of the device. Thus, the added response time due to read I/Os is negligible

relative to write I/Os. Real-world applications have a complex I/O pattern which

is composed of both reads and writes of various sizes.

The following benchmarks are commonly used to simulate common application

patterns:

OLTP1 — mail applications

OLTP2 — small Oracle applications

OLTP2HW — large Oracle applications

DSS2 — data warehouse applications

The sustained write throughput that can be replicated by a single RPA was

described in the EMC RecoverPoint 5.0 Performance Guide. With complex

application patterns, however, many factors besides the RPA can affect the

performance, including the splitter type, the communications channel

properties, port connectivity, and remote-site storage type and configuration.

The results for throughput, IOPS, and added response time are provided for

several example environments in the “Performance Test Results” section.

Communications

The nature of the communications link between RPA clusters has a major impact

on RecoverPoint performance.

Communications medium and protocol

RecoverPoint supports both Fibre Channel and IP communication between RPA

clusters.

With virtual appliances, only IP communication is supported. For vRPAs,

10 Gb Ethernet can be used if it is available on the ESX host.

For Gen5 physical RPAs, when IP communication is used, there is a hard limit

of 110 MB/s per RPA due to the 1 Gb Ethernet port that is used for WAN

communication. This is not a hard limit on the incoming throughput, but

rather, on the communication between sites.

Gen6 physical RPAs support 8 Gb FC, 16 Gb FC, 1 Gb Ethernet, or 10 Gb

Ethernet communications between sites, depending on the I/O modules

(SLICs) that are used.

Bandwidth

The bandwidth between RPA clusters may become a bottleneck that limits the

replication throughput. Compression and deduplication WAN optimizations can

be enabled to allow greater throughput over the link between these RPA

clusters. WAN optimizations, however, are CPU-intensive, and may reduce RPA

performance if CPU is the performance bottleneck, as it may be, for example,

with weak vRPAs.

In synchronous replication, WAN optimizations are disabled, since they tend to

increase RecoverPoint added response time.

The default compression level is low compression, because it gives the most

benefit across all RPA and vRPA configurations.

When the data is compressible and deduplicable, enabling the compression and

deduplication optimizations does not degrade maximum IOPS and throughput when

replicating with a physical RPA. For example, when the workload has a

compressibility ratio of 2 (that is, it can be compressed to half of its initial size)

and a deduplication ratio of 2 (that is, deduplication can save half the WAN

bandwidth), a single physical RPA can replicate up to 300 MB/s.

Latency and packet loss

Line latency between RPA clusters at site A and site B is the time it takes an I/O to

pass from site A to site B. Round trip time (RTT) is the time it takes an I/O to pass

from site A to site B and back.

In Fibre Channel networks, line latency is directly related to the distance

between sites, where 100 km corresponds to a latency of 0.5 ms.

In IP communications, RecoverPoint scales well over distance. The data is passed

to the RPA cluster at the remote site in a single round trip. As shown in Table 1, for

synchronous replication, additional latency over the WAN will increase the

added response time of RecoverPoint by the same amount, regardless of the

volume of I/Os.

Table 1. Impact of RTT between sites on response time in synchronous replication over WAN (in milliseconds)

| Outstanding I/Os (4K) | RTT 0 ms | RTT 1 ms | RTT 2 ms | RTT 4 ms | RTT 8 ms |
|---|---|---|---|---|---|
| 2 | 1.9 | 3 | 4.1 | 6.2 | 10.3 |
| 16 | 1.9 | 3.2 | 4.2 | 6.3 | 10.5 |
| 32 | 2 | 3.2 | 4.5 | 6.3 | 11.1 |
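The statement that WAN latency adds to the response time "by the same amount" can be checked against the Table 1 values with a small sketch. The additive model and the function names below are illustrative assumptions, not a formula taken from the product documentation; the measured values are copied from Table 1.

```python
# Measured added response time (ms) from Table 1:
# outstanding I/Os -> {round trip time in ms: response time in ms}
MEASURED_MS = {
    2: {0: 1.9, 1: 3.0, 2: 4.1, 4: 6.2, 8: 10.3},
    16: {0: 1.9, 1: 3.2, 2: 4.2, 4: 6.3, 8: 10.5},
    32: {0: 2.0, 1: 3.2, 2: 4.5, 4: 6.3, 8: 11.1},
}


def estimate_sync_response_ms(baseline_ms: float, rtt_ms: float) -> float:
    """Additive model: response time at 0 ms RTT plus the WAN round trip."""
    return baseline_ms + rtt_ms


def worst_error_ms() -> float:
    """Largest gap between the additive model and the Table 1 measurements."""
    return max(
        abs(estimate_sync_response_ms(row[0], rtt) - measured)
        for row in MEASURED_MS.values()
        for rtt, measured in row.items()
    )


print(f"worst deviation: {worst_error_ms():.1f} ms")
```

For the Table 1 data, the additive estimate stays within about 1.1 ms of every measurement, with the largest gap at 32 outstanding I/Os and 8 ms RTT.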

The greater the latency and packet loss, the lower the throughput that

RecoverPoint can replicate.

Table 2. Impact of RTT on throughput of single RPA in asynchronous replication

| Round trip time (ms) | 0 | 25 | 50 | 100 | 150 | 200 |
|---|---|---|---|---|---|---|
| Throughput (MB/s) | 111 | 111 | 93 | 47 | 32 | 25 |

The maximum supported round trip time for asynchronous replication is 200 ms

with up to 1% packet loss.

The maximum supported round trip time for synchronous replication is 4 ms over

FC (distance of 200 km) or 10 ms over WAN.

Communications problems often cause highloads. Ways to detect these

problems are presented in the “Performance Tools” section.

External WAN accelerators can be used to improve performance during

asynchronous replication. It is best practice to disable RecoverPoint WAN

optimizations (compression and deduplication) if WAN optimization is performed

by a WAN accelerator.

Journal

To replicate a production write, while maintaining the undo data that is needed

if you want to roll back the target copy image, five-phase distribution mode is

applied. This mode produces five I/Os at the target copy. Of these, two I/Os are

directed to the replication volumes and three I/Os are directed to the journal.

Thus, the throughput requirement of the journal at the target copy is three times

that of the production, and 1.5 times that of the replication volumes. For that

reason it is very important to configure the journal correctly. Misconfiguration

may result in a decrease in sustained throughput, an increase in journal lag, and

highloads.

Journal I/Os are typically large and sequential as opposed to the target copy

I/Os that depend on application write I/O patterns, which may be random. For

performance reasons, the I/O chunk size that RecoverPoint sends depends on

the array type, including:

VNX/Unity: 1 MB

VMAX: 256 KB

VPLEX: 256 KB for reads and 128 KB for writes (starting with GeoSynchrony 5.2,

the write size is 1 MB).

Example:

The application generates throughput of 50 MB/s and 6400 write IOPS. What is the

required performance of the journal at a remote copy?

The throughput requirement of the journal would be (50 MB/s × 3) = 150 MB/s.

The average I/O size in this example is (50 MB/s / 6400 IOPS) = 8 KB. Thus, many

I/Os (16–64, depending on the array type) would be aggregated into a single

I/O to the journal. The IOPS requirement from the journal would be between

(150 MB/s / 512 KB) = 300 and (150 MB/s / 128 KB) = 1200.
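The arithmetic in this example can be reproduced with a short sketch. The function and parameter names are hypothetical; the factor of three comes from the five-phase distribution description above, and the 128 KB and 512 KB aggregation bounds are the ones used in the example (1 MB is taken as 1024 KB, which is what the example's figures imply).

```python
KB_PER_MB = 1024          # the example's figures imply binary units
JOURNAL_IO_FACTOR = 3     # three journal I/Os per production write


def journal_requirements(throughput_mbps: float, write_iops: float,
                         min_chunk_kb: int = 128, max_chunk_kb: int = 512) -> dict:
    """Estimate copy-journal throughput and IOPS for a production workload."""
    journal_mbps = throughput_mbps * JOURNAL_IO_FACTOR
    avg_io_kb = throughput_mbps * KB_PER_MB / write_iops
    return {
        "journal_mbps": journal_mbps,
        "avg_io_kb": avg_io_kb,
        # Larger aggregated chunks mean fewer journal I/Os, and vice versa.
        "min_journal_iops": journal_mbps * KB_PER_MB / max_chunk_kb,
        "max_journal_iops": journal_mbps * KB_PER_MB / min_chunk_kb,
    }


print(journal_requirements(throughput_mbps=50, write_iops=6400))
```

With the inputs from the example (50 MB/s and 6400 write IOPS), this yields 150 MB/s of journal throughput, an 8 KB average I/O size, and a journal IOPS range of 300 to 1200.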

Production journals, as opposed to copy journals, do not have strict performance

requirements since they are used for writing only small amounts of metadata

during replication. In the case of failover, however, these production journals

become copy journals that have major effect on performance. This should be

taken into account when configuring the system.

Best practices in provisioning journals

The following are best practices with regard to journal provisioning:

It is a good practice to allocate the journal volumes on a dedicated storage

pool or RAID group. Most importantly, do not allocate any volume that

receives random I/Os on the same storage pool or RAID group with the

journal volumes. Hence it is a good practice not to put volumes of a local or

remote copy on the same storage pool or RAID group as the journal volumes

for that copy.

If high performance is critical, do not use RecoverPoint auto journal

provisioning; rather, use RecoverPoint manual provisioning or provision the

volumes manually on the array.

It is not cost-effective to place journals on EFDs (Enterprise Flash Drives).

Hence, it is not recommended to configure journal volumes on storage where

FAST VP (Fully Automated Storage Tiering for Virtual Pools) is enabled and

which contains an EFD tier.

Note: Journal volumes on flash drives may improve performance for virtual

image access.

Bind to the fastest SAS or Fibre Channel drives, if possible, and avoid EFDs and

SATA drives.

If FAST VP is enabled, pin the journal thin devices to SAS/FC drives to avoid

the impact of FAST VP sub-LUN movements.

RAID 10 is the best option for journal performance; however, it also requires

the most disk space. RAID 5 (3+1) is also a good option for performance.

RAID 1 and RAID 6 will provide some extra reliability at the expense of

performance.

RAID groups are preferred over storage pools.

On VNX, thick LUNs are preferred over thin LUNs.

Configuring a consistency group with multiple journal volumes from separate

storage groups or RAID groups may improve its throughput performance.

It is still recommended to configure multiple journal volumes for a CG even if

you cannot allocate them from different storage pools or RAID groups.

When adding journal volumes to an existing consistency group, it is advisable

to add groups of volumes of the same size. This is because RecoverPoint

performs striping only for journal volumes of the same size. Striping improves

the performance of the journal, especially if the devices come from different

RAID groups.

It is better to include all of the journal volumes when you create the CG than

to add journal volumes later.

It is not recommended to enable FAST Cache for journal volumes on VNX.

Traffic on production journals is light; however, if using non-virtually

provisioned journal LUNs, or if FAST VP is disabled, the journals should be fast

enough to function as replica journals in case of failover. If using virtually

provisioned journal LUNs with FAST VP, they should be bound to a pool with

the slowest drives, provided faster drives are available in the same pool in

case of failover.

Additional best practices for journals on Symmetrix

The following are additional best practices when provisioning journals on

Symmetrix storage:

Use fully pre-allocated TDEVs for journals.

Journal volumes should be striped over many physical devices to prevent the

physical disks from becoming a bottleneck.

TDATs should be configured as RAID5 or, if possible, even as two-way

mirroring. The journal device should be configured as striped meta.

When replicating in both directions (or if the local copy is on the same

storage array as production), each storage array contains both production

LUNs and journals, and target copy LUNs and journals. In this situation, there is

a risk that the target copy journals will consume more resources than

production LUNs on the same array. It may therefore be beneficial to

separate the production workload from the target copy workload. Separate

production LUNs and production journal LUNs from target copy LUNs and

target copy journal LUNs, by placing them on separate FA ports and physical

disks. If using virtually provisioned storage, FAST VP may still be enabled within

each pool.

When using a Symmetrix splitter at the production side, if a replicating volume

is exposed through a specific port to a host, then it must also be mapped

through the same port to the RPA. Journal volumes don’t have this limitation.

A common strategy for achieving stable and predictable journal performance

that does not depend on host I/Os is to map the journals to the RPA through

dedicated directors.

Journal compression

RecoverPoint can compress the data that is written to the journal in order to

decrease the required journal capacity and increase the protection window.

However, this compression is CPU-intensive and usually reduces the overall

throughput of an RPA and CG. In addition, it significantly reduces image access

performance. Hence, it is recommended to enable journal compression only

when there is not enough journal storage.

Table 3 presents the effect of journal compression on throughput in

asynchronous replication over WAN with Gen6 RPAs.

Table 3. Impact of journal compression on throughput (asynchronous)

| Journal compression | Throughput (MB/s) |
|---|---|
| Medium | 70 |
| High | 40 |

Note that multiple CGs running on the same RPA may show better performance

for journal compression.

The compression ratio depends on the I/O pattern generated from the

application. In the absence of additional information, as a rule of thumb, medium journal

compression doubles the protection window, and high journal compression

triples it.
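As a rough planning aid, the rule of thumb can be written as a lookup. The names below are hypothetical, and the factors are only the rule-of-thumb values stated above; the actual ratio depends on the application's I/O pattern.

```python
# Rule-of-thumb multipliers for the protection window (not measured values).
PROTECTION_WINDOW_FACTOR = {"none": 1, "medium": 2, "high": 3}


def estimated_protection_window_hours(uncompressed_hours: float,
                                      compression_level: str) -> float:
    """Scale an uncompressed protection window by the rule-of-thumb factor."""
    return uncompressed_hours * PROTECTION_WINDOW_FACTOR[compression_level]


for level in ("none", "medium", "high"):
    print(level, estimated_protection_window_hours(12, level))
```

Any gain in protection window should be weighed against the throughput reduction that journal compression causes.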

Security level

The following security levels can be configured for IP communication between

all RPA clusters in a RecoverPoint system:

Not authenticated, not encrypted

Authenticated and encrypted

For more details, refer to the EMC RecoverPoint 5.0 Security Configuration Guide.

If maximum security is required, it is recommended that you use the

“Authenticated and encrypted” security level.

If the security provided by authentication and encryption is not required, you

can achieve a small gain in performance by using the “Not authenticated, not

encrypted” security level, especially when the WAN quality is low (i.e., high

latency and/or packet loss) or when sync replication is needed.

Note that when the security level is set higher than “Not authenticated, not

encrypted”, the response time for synchronous replication over WAN may be as

much as doubled.

During an upgrade, if both product versions support the same security levels,

then the existing security level is maintained. If not, ensure that, following

upgrade, the security level is set to your desired level.

Multi-cluster and multi-copy configuration

A RecoverPoint multi-cluster system comprises between two and five

connected RPA clusters. A consistency group can have up to five copies at up

to five clusters where, in a fan-out configuration, a single production copy is

replicated to four remote copies. Alternatively, the CG data can be replicated

to a local copy and up to three remote copies. Only one of the links to a remote RPA

cluster can be configured for synchronous replication. Each cluster may hold up

to two copies for a CG; that is, a cluster that supports a production copy can

also support a local copy, while a cluster without a production copy can support

two remote copies.

Table 4 and Table 5 summarize RecoverPoint performance in a multi-cluster,

multi-copy environment using Gen6 RPAs, and replicating asynchronously over

FC and over IP.

Table 4. Asynchronous replication over FC or 10 Gb IP, using Gen6 RPAs

| Configuration | Regular CG IOPS | Regular CG throughput (MB/s) | Distributed CG IOPS | Distributed CG throughput (MB/s) |
|---|---|---|---|---|
| 2 clusters: 1 local copy and 1 remote copy | 35,000 | 140 | 35,000 | 350 |
| 3 clusters: 2 async remote copies | 35,000 | 200 | 35,000 | 460 |
| 3 clusters: 1 async and 1 sync remote copies | 20,000 | 200 | 21,000 | 350 |

Table 5. Asynchronous replication over 1 Gb IP, using Gen6 RPAs

| Configuration | Regular CG IOPS | Regular CG throughput (MB/s) | Distributed CG IOPS | Distributed CG throughput (MB/s) |
|---|---|---|---|---|
| 2 clusters: 1 local copy and 1 remote copy | 20,000 | 110 | 27,000 | 350 |
| 3 clusters: 2 async remote copies | 13,000 | 55 | 27,000 | 220 |
| 3 clusters: 1 async and 1 sync remote copies | 13,000 | 55 | 16,000 | 88 |

The write I/Os that are received at production are duplicated once for every

local and remote copy. Only then are WAN compression and deduplication

applied according to the link configuration. Note that the splitter to RPA

communication does not depend on the number of copies.

Example:

An application generates writes at 80 MB/s, and is being replicated by

RecoverPoint to two remote copies and one local copy. The data that the

application generates can be compressed by half. What is the required WAN

bandwidth?

The local copy doesn’t require WAN bandwidth, but for every remote copy, the

80 MB/s throughput is duplicated. Therefore, without compression, the required

bandwidth is 160 MB/s. With compression enabled, however, the required

bandwidth is only 80 MB/s.
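The same calculation can be expressed as a small helper. The function name and parameters are illustrative assumptions; the sketch ignores deduplication and assumes that the same compression ratio applies on every remote link.

```python
def required_wan_bandwidth_mbps(write_mbps: float, remote_copies: int,
                                compression_ratio: float = 1.0) -> float:
    """WAN bandwidth needed when writes are duplicated once per remote copy.

    Local copies are excluded because they do not use the WAN.
    A compression_ratio of 2 means the data shrinks to half its size.
    """
    return write_mbps * remote_copies / compression_ratio


print(required_wan_bandwidth_mbps(80, remote_copies=2))
print(required_wan_bandwidth_mbps(80, remote_copies=2, compression_ratio=2))
```

For the example above, the two calls give 160 MB/s without compression and 80 MB/s with a compression ratio of 2.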

Compression is CPU-intensive. Consider configuring a CG with high throughput to

multiple remote copies as a distributed CG. This will allow spreading of the CPU

load over multiple RPAs.

Note: Replication always takes place between RPAs that have the same roles in

their respective clusters (for example, RPA 1 in one cluster replicates to RPA 1 of

another cluster). “Diagonal replication” (that is, replication between

unmatched RPAs) is not supported. As a consequence, adding an RPA to one

RPA cluster without balancing the number of RPAs at the other clusters will not

help to increase replication performance between these clusters.

RecoverPoint connectivity to the storage array

There are also performance considerations with regard to the RPA connection to

the storage array.

RPA to storage array multipathing

When the RPA is performing I/Os to the storage array it spreads the load over

multiple paths to the device. The subset of paths over which the

I/Os are sent depends on the storage array type. For example, if the storage

array is VMAX, the I/Os are spread equally on all its directors. This accelerates the

distribution process and reduces the overall load on the array and its ports, and

on the RPA ports.

You should expose the volumes (production copy, local and remote copy,

journal, and repository) to the RPA through as many storage array

controllers/directors, storage array ports, and RPA ports as possible, provided you

stay within the maximum limit (200,000) for the number of paths. If full-mesh

connectivity is above the scale limit, you can reduce the number of paths by

removing paths through different ports of the same controller/director.

Example:

The storage array has 4 directors with 4 ports each. My RPA has 2 ports. I need to

mask 7,000 devices to the RPA. What is the preferred way to do this?

Full mesh connectivity to all the devices would create (4 × 4 × 2 × 7000) = 224,000

paths. This is above the RPA’s path scaling limitation. Mapping the volumes

through only 2 ports of each director will reduce the number of paths to (4 × 2 ×

2 × 7000) = 112,000 paths. This connectivity allows spreading the load over all the

directors and all the RPA ports, while keeping the scale below the limit.
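The path arithmetic in this example can be checked with a short script. This is a minimal sketch only: the function names are hypothetical, and it assumes that every device is exposed through every listed array port and RPA port.

```python
MAX_PATHS = 200_000  # path limit quoted in the text above


def path_count(directors: int, ports_per_director: int,
               rpa_ports: int, devices: int) -> int:
    """Paths created when every device is exposed through every listed port."""
    return directors * ports_per_director * rpa_ports * devices


def max_ports_per_director(directors: int, ports_per_director: int,
                           rpa_ports: int, devices: int,
                           limit: int = MAX_PATHS) -> int:
    """Largest ports-per-director count that stays within the limit (0 if none)."""
    for ports in range(ports_per_director, 0, -1):
        if path_count(directors, ports, rpa_ports, devices) <= limit:
            return ports
    return 0


full_mesh = path_count(4, 4, 2, 7000)
reduced = path_count(4, 2, 2, 7000)
best = max_ports_per_director(4, 4, 2, 7000)

print(full_mesh, full_mesh <= MAX_PATHS)  # 224000 False
print(reduced, reduced <= MAX_PATHS)      # 112000 True
print(best)                               # 3
```

Under these assumptions, 3 ports per director (168,000 paths) would also fit within the limit; the example above uses 2 ports per director, which leaves more headroom.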

Splitter to RPA multipathing

In VNX, starting with R32 MR1 SP1 and R33, and in VPLEX, starting with VPLEX 5.4,

the splitter performs load balancing of the I/Os to the RPA through different

paths. Hence, there is an advantage to having as many paths as possible

between the storage array and the RPA. Adding paths helps to reduce the load

on the RPA ports and increase concurrency between the splitter and the RPA.

VMAX V2 splitter port considerations

Table 6 presents RecoverPoint performance as a function of the number of

VMAX V2 engines and ports and the number of RPAs in asynchronous replication

over FC.

Table 6. Improving performance by adding VMAX engines and ports and RPAs

VMAX V2 engines   Ports per engine   RPAs   IOPS     Throughput (MB/s)
1                 2                  1      21,736   166
1                 4                  1      23,598   172
1                 8                  1      25,002   185
2                 8                  1      25,126   188
2                 8                  2      34,480   359
2                 8                  4      48,860   570

Four VMAX V2 ports that are spread over two directors can sustain the maximum

IOPS and throughput of a single RPA.

To support more IOPS and throughput, you can add RPAs and VMAX ports while

keeping a ratio of at least 4 VMAX ports per RPA. The ports should be spread as

equally as possible over the directors and engines.
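The port ratio and the even spread described above can be sketched as follows. The helper names are hypothetical, and the number of directors is taken as an input rather than derived from the array model.

```python
from typing import List

PORTS_PER_RPA = 4  # minimum ratio recommended in the text above


def min_vmax_ports(rpas: int) -> int:
    """Minimum number of VMAX ports for the given number of RPAs."""
    return PORTS_PER_RPA * rpas


def spread_ports(total_ports: int, directors: int) -> List[int]:
    """Distribute ports over directors as equally as possible."""
    base, extra = divmod(total_ports, directors)
    return [base + 1 if i < extra else base for i in range(directors)]


ports_for_four_rpas = min_vmax_ports(4)
print(ports_for_four_rpas)                    # 16
print(spread_ports(ports_for_four_rpas, 4))   # [4, 4, 4, 4]
print(spread_ports(10, 4))                    # [3, 3, 2, 2]
```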

vRPA

vRPA resources

vRPAs can be deployed in the following predefined virtual machine

configurations:

8 vCPUs, 8 GB RAM

4 vCPUs, 4 GB RAM

2 vCPUs, 4 GB RAM

Refer to EMC RecoverPoint 5.0 Performance Guide for the capabilities of these

vRPA configurations.

When designating the configuration of the production side vRPAs, consider the

following:

If synchronous replication is needed, use the “8 vCPUs, 8 GB RAM” or

“4 vCPUs, 4 GB RAM” vRPA configuration.

If deduplication is needed, use the “8 vCPUs, 8 GB RAM” vRPA configuration.

Stronger vRPAs will be able to sustain higher write IOPS and throughput

generated by the application.

Stronger vRPAs will be able to handle longer and stronger peaks of write I/Os.

Note: In virtual RecoverPoint configurations, distributed CGs are not supported.

Using a stronger vRPA is the only way to increase the maximum IOPS and

throughput of a single CG.

A vRPA communicates with the storage array using iSCSI protocol. Only VNX

arrays with iSCSI modules currently support vRPAs. In VNX, the following types of

iSCSI modules are available:

1 Gb port

10 Gb port

The performance limits of a 1 Gb VNX port are 80 MB/s and 10K IOPS for read

I/Os, with similar limits for write I/Os. Since it is full duplex, these limits can be

reached at the same time. Use of a single 1 Gb port at the remote cluster may

cause a bottleneck.

Example:

With a VNX array at the remote copy with four 1 Gb iSCSI ports, and when using

vRPAs for replication, what is the maximum sustainable write throughput and

IOPS for which undisturbed replication can be supported?

At the remote RPA cluster, the vRPA is running the distribution process. Every

production write spawns 5 I/Os at the remote copy—3 writes and 2 reads—which

are aggregated by the journal.

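With four 1 Gb ports at 80 MB/s each, the theoretical write limit of the VNX is

(4 × 80 MB/s) = 320 MB/s. Because every production write results in 3 writes at

the remote copy, the theoretical write throughput limit for the application is

(320 MB/s / 3) ≈ 106 MB/s.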
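The calculation can be sketched in a few lines. This is an illustrative model, not a sizing tool: it assumes that the per-port limits apply independently to reads and writes (full duplex) and that the 3-writes and 2-reads amplification applies uniformly. Applying the same reasoning to IOPS is an extrapolation that the example above does not state, and journal aggregation may change the real figure.

```python
PORT_MB_S = 80                    # per-port limit in each direction (from the text)
PORT_IOPS = 10_000                # per-port limit in each direction (from the text)
WRITES_PER_PRODUCTION_WRITE = 3   # remote writes spawned by one production write
READS_PER_PRODUCTION_WRITE = 2    # remote reads spawned by one production write


def app_write_limit(ports: int, per_port_limit: float) -> float:
    """Application write limit imposed by the remote array's iSCSI ports."""
    array_limit = ports * per_port_limit
    write_bound = array_limit / WRITES_PER_PRODUCTION_WRITE
    read_bound = array_limit / READS_PER_PRODUCTION_WRITE
    # Reads and writes use separate directions, so the tighter bound wins.
    return min(write_bound, read_bound)


throughput_limit = app_write_limit(4, PORT_MB_S)
iops_limit = app_write_limit(4, PORT_IOPS)
print(f"{throughput_limit:.1f} MB/s")
print(f"{iops_limit:.0f} IOPS (extrapolated, see assumptions)")
```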

Scaling considerations

As in the physical case, RecoverPoint scales linearly as vRPAs are added. This is

especially important because it is very easy to add vRPAs (up to 8) to the RPA

cluster (by cloning an existing vRPA or deploying a new vRPA using OVA) without

adding additional physical hardware.

Example:

Four applications generate throughput of 100 MB/s each. How many vRPAs

should be deployed?

Each “8 vCPUs, 8 GB RAM” vRPA can replicate incoming write throughput of

about 100 MB/s over WAN. To allow a total throughput of 400 MB/s, 4 vRPAs are

required. However, an additional vRPA should be provisioned in order to allow the

system to continue replication non-disruptively in case of a vRPA failure. Weaker

vRPA hardware cannot be used unless the application data is divided into

several CGs that are grouped in a “group set” with parallel bookmarks.
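The sizing rule in this example (enough vRPAs for the total load, plus one spare) can be written as a short sketch. The function name and parameters are hypothetical; the 100 MB/s per-vRPA figure is the approximate value quoted above for the “8 vCPUs, 8 GB RAM” configuration over WAN.

```python
import math
from typing import Sequence


def vrpas_required(app_throughputs_mb_s: Sequence[float],
                   per_vrpa_mb_s: float = 100.0,
                   spares: int = 1) -> int:
    """vRPAs needed to carry the total write throughput, plus spares for failover."""
    total = sum(app_throughputs_mb_s)
    return math.ceil(total / per_vrpa_mb_s) + spares


apps = [100, 100, 100, 100]  # four applications at 100 MB/s each
print(vrpas_required(apps, spares=0))  # 4
print(vrpas_required(apps))            # 5
```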

Example:

Two applications generate writes at 5,000 IOPS. Which vRPA hardware should be

provided?

Putting aside redundancy considerations, 5,000 IOPS can be handled by a single

“8 vCPUs, 8 GB RAM” vRPA or by two “2 vCPUs, 4 GB RAM” vRPAs. The hardware

requirement of the latter, however, is lower than that of the former. Hence, in this

case, two weaker vRPAs will utilize host resources better than one strong vRPA.

Deployment considerations

When deploying a vRPA cluster consider the following recommendations:

Memory — It is highly recommended to reserve on the ESX all memory

required for the vRPAs. Note that when deploying vRPAs from an OVF,

whether it reserves all of the memory depends on the exact version.

CPU — It is recommended to reserve CPU. When deploying a vRPA from an

OVF, about 4000 MHz of CPU is reserved.

Total CPU and memory available on an ESX host must be at least the sum of

CPU and memory required by each of the individual vRPAs on it.

Network bandwidth of the ESX host must be sufficient to handle the I/O load

of all vRPAs that run on it.

If production VMs run on the same ESX host as the vRPA, you must also consider

their CPU, memory, and network requirements in the sizing. In addition, you

should consider the VM management services (such as vMotion) network

requirements.

It is recommended to deploy vRPAs of the same cluster on different ESX hosts, to

allow spreading the CPU load and avoiding networking congestion on the ESX

ports. Nonetheless, if you deploy more than one vRPA from an RPA cluster on a

single ESX, consider the following:

Never put vRPA roles 1 and 2 on the same ESX, in order to prevent a single

ESX failure causing the failure of an entire RPA cluster.

If that ESX fails, all the CGs running on the vRPAs that run on it will switch to

other vRPAs running on other ESXs. Plan accordingly to ensure that the other

vRPAs and ESXs will be able to handle this extra load.

It is best practice to enable VMware HA (high availability) on the ESX server

hosting the vRPAs. In case of an ESX failure, the vRPAs that ran on it will restart

on another ESX. However, note that the CG switch to another vRPA is likely to

happen before the vRPA starts running on another ESX.

It is best practice to run the vRPAs on a different ESX from the application that

they are replicating. This prevents the vRPA and the application from competing

for ESX resources, especially at peak times. Note that it does not mean that

vRPAs cannot share ESX resources with production VMs.

Example:

Four applications generate throughput of 100 MB/s each. How many ESX

machines are required to run the vRPAs, assuming that each ESX machine has

10 Gb WAN?

Best practice is to deploy five “8 vCPUs, 8 GB RAM” vRPAs on five different ESX

machines. However, it is possible for RecoverPoint to replicate this load using only

two ESX machines with 40 GB RAM and enough vCPUs since each one of them

can hold 5 replicating “8 vCPUs, 8 GB RAM” vRPAs. In any case, you must ensure

that vRPA role 1 and role 2 are deployed on different ESXs and that HA is

enabled for all vRPAs. In case of an ESX failure, all the vRPAs on that ESX will

restart on the other ESX; however, until then, there might be a period when

replication will be temporarily disrupted due to overloading of the remaining

vRPAs. This situation will correct itself automatically once the vRPAs boot up.

Example:

As in the previous example, four applications generate throughput of 100 MB/s

each. How many ESX machines are required to run the vRPAs, assuming that

each ESX machine has only 1 Gb WAN?

Assuming that there are no additional requirements from production VMs or VM

management services, five ESX machines are needed because every such ESX

can handle the network traffic of a single “8 vCPUs, 8 GB RAM” vRPA, and an

additional ESX machine should be provisioned for redundancy in case of an ESX

failure.
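The minimum host counts in the two preceding examples can be reproduced with a simple network-only model. This is a sketch under stated assumptions: it converts the WAN link to MB/s at raw line rate (1 Gb/s = 125 MB/s), ignores protocol overhead and compression, and assumes that CPU and memory on each ESX host are sufficient. The function name is hypothetical.

```python
import math


def esx_hosts_required(active_vrpas: int, vrpa_mb_s: float,
                       wan_gbit_s: float, spare_hosts: int = 1) -> int:
    """Minimum ESX hosts when the per-host WAN link is the only constraint."""
    wan_mb_s = wan_gbit_s * 1000 / 8              # raw line rate in MB/s
    vrpas_per_host = int(wan_mb_s // vrpa_mb_s)   # vRPAs one host's link can carry
    if vrpas_per_host < 1:
        raise ValueError("one host link cannot carry a single vRPA's traffic")
    hosts_for_load = math.ceil(active_vrpas / vrpas_per_host)
    return hosts_for_load + spare_hosts


hosts_10gb = esx_hosts_required(active_vrpas=4, vrpa_mb_s=100, wan_gbit_s=10)
hosts_1gb = esx_hosts_required(active_vrpas=4, vrpa_mb_s=100, wan_gbit_s=1)
print(hosts_10gb)  # 2
print(hosts_1gb)   # 5
```

The result for 10 Gb is the minimum only; the best practice stated above remains one vRPA per ESX host, with vRPA roles 1 and 2 always placed on different hosts.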

For additional vRPA considerations and best practices, refer to the EMC

RecoverPoint vRPA Technical Notes.

Best practice for synchronous replication

When using vRPA for synchronous replication, it is strongly recommended to

configure the number of replication streams to 2 (rather than the default, which

is 5) using the set_num_of_streams CLI command. This avoids a known issue in

which a peak of host I/Os causes a delay that can lead to temporary disruption of

replication followed by a short synchronization period. Note, however, that

reducing the number of streams is a system-wide operation that may degrade

performance for asynchronous replication over connections with poor WAN

quality.

Snap-based replication

Snap-based replication provides an alternative to RecoverPoint traditional

continuous replication, which, despite its many advantages, may in the event of

high I/O load cause an extended out-of-sync mode (that is, highload), with

associated high RPO.

Snap-based replication is supported only with VNX storage systems running VNX

OE for Block 05.32.000.5.215 and later, or VNX OE for Block 05.33.000.5.038 and

later.

In order to allow consistent snaps across all replication sets, all volumes in a

consistency group must reside on the same array, so that a snap taken on the

array is applied on all the volumes in the consistency group at exactly the same

time.

To enable snap-based replication, one of the following shipping modes must be

set for the Snap-based Configuration parameter (in the Link Policy tab for the

consistency group):

On Highload — a single snap will be taken on the production array after a

highload event.

Periodic — the system will create snaps on the production array according to

a specified interval.

I/O flow during snap-based replication is as follows:

Production copy

During snap-based replication, the VNX splitter splits the I/Os to the RPA;

however, the RPA uses only the metadata to mark the dirty regions. This is

similar to RPA behavior when a consistency group is in a pause state. As a

result, the IOPS, throughput, and added response time of snap-based replication

are similar to those of a group that is paused.

Replica copy

Replicated snaps are written to the journal at the remote storage array and

distributed using the regular distribution process to the replica volume. A

RecoverPoint bookmark is taken after a snap is replicated successfully.

For additional information about snap-based replication for VNX storage systems,

including configuration and limitations, refer to the EMC RecoverPoint 5.0

Product Guide.

The following sections present the indicators used to measure performance for

snap-based replication, and the factors that affect those indicators.

Added response time

The following factors affect host response time when snap-based replication is

enabled:

Snapshots that are taken on the production LUNs in VNX increase the

response time, even without RecoverPoint.

Splitting data to the RPA, where it is used for marking the dirty regions, adds

response time to the application. Table 7 shows the added response time of

RecoverPoint due to this factor. These measurements were done on thin

devices. In both the “With RecoverPoint” and “Without RecoverPoint” cases, a

snapshot of the user volumes exists on the array.

Table 7. Snap-based replication added response time at very low IOPS on VNX array

I/O size (KB)   IOPS   Response time without   Response time with              Added response time
                       RecoverPoint (ms)       RecoverPoint replicating (ms)   by RecoverPoint (ms)
4               150    0.99                    1.3                             0.31
8               150    1.28                    1.8                             0.52
64              150    1.09                    1.31                            0.22
128             75     1.5                     1.96                            0.46
1024            15     3.82                    5.65                            1.83

Snap sizes

Snap sizes depend on the frequency at which snaps are replicated and on the

application write I/O rate, I/O pattern, and hot spots.

The RPA replicates only the dirty regions that were changed since the last snap.

As a consequence, the amount of data to be transferred may be smaller than

the amount of data that was written by the application, due to write-folding. If,

for example, two consecutive I/Os were written to the same offset and length,

only the second I/O will be replicated. This is significant when the application I/O

pattern consists of many hot spots. In addition, the lower the snapshot frequency,

the higher the expected folding factor.
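Write-folding can be illustrated with a small model that tracks dirty regions as fixed-size blocks. This is a sketch only: the 4 KB block granularity and the function name are assumptions made for illustration and do not describe how the RPA actually tracks dirty regions.

```python
from typing import Iterable, Tuple


def folding_factor(writes: Iterable[Tuple[int, int]], block_size: int = 4096) -> float:
    """Ratio of bytes written by the application to bytes dirty at snap time."""
    dirty_blocks = set()
    bytes_written = 0
    for offset, length in writes:
        bytes_written += length
        first = offset // block_size
        last = (offset + length - 1) // block_size
        dirty_blocks.update(range(first, last + 1))  # rewrites hit the same blocks
    if not dirty_blocks:
        return 1.0
    return bytes_written / (len(dirty_blocks) * block_size)


# Two writes to the same offset and length, plus one write elsewhere.
writes = [(0, 4096), (0, 4096), (8192, 4096)]
factor = folding_factor(writes)
saving = 1 - 1 / factor  # fraction of written data that need not be transferred
print(factor)            # 1.5
print(round(saving, 3))  # 0.333
```

In this model, a longer interval between snaps gives repeated writes more time to land on already-dirty blocks, which is why the folding factor tends to grow as the snapshot frequency drops.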

The maximum size of a snap is the sum of the capacities of all the production

volumes in the consistency group. In this extreme case, in which all the

production volumes are changed in a single snap, the journal will need to be

larger than the sum of all the production volumes. If not, the snap can be

replicated only through long resync, in which case you will lose all previous points

in time.

Journal size and protection window

The protection window is determined by the oldest point in time that can be

used for image access. When snaps are transferred, they are written to the

journal of the remote copy. Hence, the protection window depends on the

journal volume size, and can be calculated using essentially the same formula as

that used for continuous replication, which is presented in the EMC RecoverPoint

Provisioning and Sizing Technical Notes.

The only difference is that, due to the large I/O folding factor in snap-based

replication, the change rate of the data is smaller than the incoming write rate,

and therefore a larger protection window is expected for a given journal size.

RPO

The RPO is the amount of data that has reached the production copy but is not

yet available for image access at the copy in case of production disaster.

The RPO for periodic snap-based replication is the interval you set plus the time it

takes to create a snap on the production array and transfer it to the remote side.

Example:

Periodic snap replication is configured on the link between two clusters with a

1-hour interval. What is the RPO, assuming that it takes 10 minutes to create a

snap on the production array, and 20 minutes to transfer it to the remote copy?

The RPO is 1 hour and 30 minutes. That is because right before a snap is created

at the remote copy, the latest available bookmark for image access contains

the snap that was taken 1 hour and 30 minutes ago.
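The RPO rule for periodic snap-based replication is a simple sum, sketched below with the figures from this example. The function name is hypothetical.

```python
from datetime import timedelta


def periodic_snap_rpo(interval: timedelta, snap_creation: timedelta,
                      transfer: timedelta) -> timedelta:
    """Worst-case RPO: configured interval plus snap creation and transfer time."""
    return interval + snap_creation + transfer


rpo = periodic_snap_rpo(
    interval=timedelta(hours=1),
    snap_creation=timedelta(minutes=10),
    transfer=timedelta(minutes=20),
)
print(rpo)  # 1:30:00
```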

Max IOPS

In snap-based replication, just as for consistency groups that are in pause state,

the maximum IOPS that RecoverPoint can sustain depends on its hardware. For

example, on Gen6 RPAs the maximum IOPS is 50,000. Spreading the IOPS load over

more RPAs linearly increases the total IOPS that the RecoverPoint system can

handle, up to the VNX array limit.
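The linear scaling with an array ceiling can be expressed as a one-line model. This is a sketch: the array limit used below is a hypothetical placeholder, since no VNX limit is quoted here, and the function name is an assumption.

```python
GEN6_MAX_IOPS = 50_000  # per-RPA maximum quoted in the text for Gen6 RPAs


def max_sustained_iops(rpas: int, array_limit_iops: int,
                       per_rpa_iops: int = GEN6_MAX_IOPS) -> int:
    """Total IOPS: linear in the number of RPAs, capped by the array limit."""
    return min(rpas * per_rpa_iops, array_limit_iops)


ARRAY_LIMIT = 120_000  # hypothetical array limit, for illustration only
print(max_sustained_iops(2, ARRAY_LIMIT))  # 100000
print(max_sustained_iops(3, ARRAY_LIMIT))  # 120000
```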

Frequency of RecoverPoint bookmarks

Frequency of RecoverPoint bookmarks determines the average time between

two consecutive points in time that can be used for image access. This

frequency depends on many factors, of which the main ones are as follows:

The period that is configured for periodic snap-based replication.

The period that is set defines the minimum time between consecutive

bookmarks. If the configured period is insufficient, the next snap will be

created with a small delay after the previous snap was replicated

successfully.

WAN bandwidth and RPA compression

Limited WAN bandwidth may slow the transfer of a snap. You can use compression

and deduplication to increase WAN throughput. Using a distributed CG or group

set may also help, depending on the configuration and location of the

bottleneck.

Production array load

When the application significantly loads the production array, it slows down

the rate at which the RPA can read from it. This slows down the replication

of a snap.

Number of volumes in a CG

Simultaneous snap operations on many volumes put a heavy load on the

array and may take a lot of time. For example, if a single consistency group

has 64 volumes, it may take several minutes to complete a snap cycle

(create, expose, detach from SMP and delete).

Number of links configured for snap-based replication

The more links that are configured for snap-based replication, the greater

the number of snaps that need to be taken. Moreover, there is a limitation

that snaps are taken on the array sequentially and not in parallel. This means

that links can interfere with each other and reduce the frequency of

bookmarks.

Example:

An application is performing OLTP2HW pattern at maximum rate on 5 volumes in

a single CG. Would it be better to use continuous replication or snap-based

replication to protect it?

It depends on the bookmark granularity you would like to have. If you need

granularity of any point-in-time or on the order of seconds or a few minutes, use

continuous replication. Otherwise, use snap-based replication.

OLTP2HW writes its data to hot spots. This reduces the change rate of the data

due to the large folding factor. As a consequence, in CRR replication,

snap-based replication would dramatically reduce the bandwidth usage between

clusters when compared to continuous replication. The larger the interval you

choose, the more bandwidth is saved. For example, for a 10-minute interval, it

may be possible to achieve up to a 95% saving in bandwidth.

In addition, snap-based replication would improve host performance by about

30% compared to continuous replication, since RP added response time in

snap-based replication is much lower, especially when the write volume is high.

Example:

The application generates constant write throughput of 300 MB/s to several

volumes that are protected by a single CG. What period should I configure for

snap-based replication?

The answer depends on the change rate of the data.

Each physical RPA can replicate snapshots at an average rate of 150-200 MB/s.

If the folding factor is 2, then the change rate would be 150 MB/s, which is less

than or equal to the replication rate. This means that snapshot sizes won’t

increase over time and you can configure a short period between snapshots.

However, best practice is to choose an interval that is not less than 1 hour.

If the folding factor is 1, then the change rate would be 300 MB/s, which is faster

than the replication rate. This means that regardless of the period you choose,

the sizes of snapshots will increase over time. This will continue until the size of

the snapshot equals the size of all the user volumes.
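The two cases can be compared with a simple iterative model of consecutive snap cycles. This is a sketch under stated assumptions: each snap contains the changes accumulated since the previous snap was taken, the next snap is taken as soon as the previous transfer completes if the configured period has already elapsed, and the cap at the total size of the user volumes is ignored. The function name is hypothetical.

```python
from typing import List


def snap_transfer_times(write_mb_s: float, folding: float, repl_mb_s: float,
                        period_s: float, cycles: int) -> List[float]:
    """Transfer time in seconds of each consecutive snap."""
    change_mb_s = write_mb_s / folding        # effective change rate after folding
    elapsed = period_s                        # time covered by the first snap
    times = []
    for _ in range(cycles):
        snap_mb = change_mb_s * elapsed       # data accumulated since the last snap
        transfer = snap_mb / repl_mb_s        # time needed to replicate it
        times.append(transfer)
        elapsed = max(period_s, transfer)     # next snap waits for this transfer
    return times


# Folding factor 2: change rate 150 MB/s matches a 150 MB/s replication rate.
stable = snap_transfer_times(300, 2, 150, 3600, 3)
# Folding factor 1: change rate 300 MB/s exceeds even a 200 MB/s replication rate.
growing = snap_transfer_times(300, 1, 200, 3600, 3)
print(stable)   # [3600.0, 3600.0, 3600.0]
print(growing)  # [5400.0, 8100.0, 12150.0]
```

In the first case each snap takes the same time to transfer, so snap sizes stay constant. In the second case each transfer takes 1.5 times longer than the previous one, which matches the unbounded growth described above.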

Additional considerations and best practices

As in continuous asynchronous replication, snap-based replication

performance scales linearly with the number of RPAs. Hence, if consistency is

not required across all devices, the best approach to increase performance

is to split a consistency group into smaller groups, and run them on different RPAs. If

the group cannot be split, configure it as a distributed CG to allow several

RPAs to replicate snaps simultaneously.

RecoverPoint can affect application performance indirectly by producing

load on the production array during the read of the regions that need to be

synchronized.

To moderate this effect, run the config_io_throttling CLI command to

limit the read throughput that the RPAs produce during

replication.

Adopt snap-based replication gradually. It is not advisable to convert all links

from continuous replication to snap-based replication at once.

For “on highload” snap-based replication, start by choosing links that suffer

from recurring long highloads. It is not advisable to select this mode for more

than 32 links from any production array.

For “periodic” snap-based replication, start by choosing a few links on which

you will get the most benefit. Add more links only after several snap

replication periods have passed successfully, and the results are satisfactory.

SCSI UNMAP command in VPLEX

vStorage APIs for Array Integration (VAAI) define a set of storage primitives that

enable a host to offload certain storage operations to the storage array. This

reduces resource overhead on the host, and can significantly improve

performance for storage-intensive operations. One of these VAAI storage

primitives, the SCSI UNMAP command, enables a host to inform the storage array

that space that previously had been occupied can be reclaimed.

An ESXi host can issue a SCSI UNMAP command in the following scenarios:

Storage vMotion migrations

Virtual Machine snapshot consolidation

VMDK or VM deletion

Usually, UNMAP commands are addressed to a relatively large region of storage,

which is larger than a typical region that would be modified by a standard read

or write command. For example, VMware ESXi hosts may issue a single UNMAP

command on 32 MB of storage. In some scenarios, UNMAP commands may also be

sent by non-VMware hosts.

Starting with VPLEX version 5.5 SP1, the UNMAP command is supported by VPLEX

with XtremIO back-end storage. An UNMAP command that is sent to the front-

end VPLEX device is forwarded to the back-end XtremIO device at the storage

array. RecoverPoint, however, does not replicate the UNMAP command itself.

Rather, for a device protected by RecoverPoint, the splitter that resides on the

VPLEX array intercepts the UNMAP command, and sends write IOs filled with

zeros to the RPA to be replicated to all the copies. The size and number of such

write IOs depend on the capacity that was unmapped by the host.

Depending on the type of array that hosts the replica copy, the writes of zeros

may be translated back to an UNMAP command when applied to the replica.

For example, if a VPLEX volume is replicated to a VNX, a VPLEX UNMAP

command that was sent to RecoverPoint as a write of zeros is applied to the VNX

replica using an UNMAP command. While RecoverPoint supports replication of

UNMAP commands from a VPLEX array at production, it does not yet support

issuing UNMAP commands to a VPLEX array serving as replica.

When UNMAP commands are sent to devices protected by RecoverPoint, the

translation of the commands to zeros may cause the following performance

problems:

Highloads — the amount of data that is being unmapped is the amount of

data that needs to be replicated. If the UNMAP command addresses a large

storage region, it causes high IO load on the relevant CG and RPA. That load

may cause highload even on other CGs running on the same RPA.

Increase in UNMAP command response time (latency) — As in every write IO,

the acknowledgement of the UNMAP IO is sent to the host only after the data

is sent to the RPA. When the UNMAP command is addressed to a large region

of storage, multiple write IOs filled with zeroes are sent to the RPA. That may

cause a significant increase in latency of UNMAP commands sent to devices

that are protected by RecoverPoint. In synchronous replication, that increase

may be even larger because the writes must be sent to and acknowledged

by the replica RPA.

Incompatibility of provisioned storage capacity between production and

remote — Since RecoverPoint does not replicate the original UNMAP

command, the replica receives write IOs filled with zeroes instead. Thus,

capacity that has been de-allocated at production remains allocated at the

replica. This is the case wherever RecoverPoint does not support the issuing of

UNMAP commands to the replica storage (VPLEX included).

Note that the load on communication between sites is expected to be very

minor as long as compression is enabled, because write IOs that are pure zeroes

can be significantly and easily compressed.

Note also that UNMAP commands are not frequently sent by hosts. In addition,

the system recovers automatically from highloads. Thus, in most cases, highloads

are not considered very severe as long as they don’t happen too often.

In case of severe problems due to UNMAP commands, it is possible to configure

the ESXi host not to use UNMAP commands, as described in the VMware

documentation. Note, however, that this will disable UNMAP commands to all

datastores and devices, including those that are not protected by RecoverPoint,

thereby degrading their performance.

MetroPoint

The MetroPoint solution allows full RecoverPoint protection of the VPLEX Metro

configuration, maintaining replication even when one Metro site is down.

I/O flow during MetroPoint replication is as follows:

The VPLEX splitter is installed on all VPLEX directors on all sites. The splitter is

located beneath the VPLEX cache. When a host sends a write I/O to a VPLEX

volume, the I/O is intercepted by the splitter on both Metro sites. Each

splitter that receives the I/O sends it to the RPA that is connected and runs

the consistency group that protects this volume. Only when it is

acknowledged by the RPA is it sent to the backend storage array. After the

I/O to the backend storage array on both Metro sites is complete, the host is

acknowledged.

In this flow, two RPAs receive the I/O, one RPA on each side of the Metro.

Only the RPA that runs the active production replicates the I/O to the remote

copy. The RPA that runs the standby production only marks the regions of

the I/O as dirty, as if the group were in pause state.

For additional information about MetroPoint, see the EMC RecoverPoint

Deploying with VPLEX Technical Notes and the EMC RecoverPoint 5.0

Administrator’s Guide.

The following sections present the indicators used to measure performance for

MetroPoint replication, and the factors that affect those indicators.

IOPS and throughput

Performance tests indicate that MetroPoint maximum IOPS and throughput for

sync and async are only 2%-4% lower than RP replication performance of only

one side of the VPLEX Metro (that is, without a standby production copy). The

only observed exception is a 10% degradation in the IOPS test of sync replication.

Added response time

Due to the fact that the I/O needs to be sent by two splitters to two RPAs before

it is sent to the backend storage array, RecoverPoint added response time could

be expected to be higher than in regular replication flow. Since, however, this is

done in parallel on both splitters, it does not increase the response time.

In async replication, RecoverPoint added response time is 20% higher than VPLEX

replication in a non-MetroPoint configuration. In sync replication they are equal,

since in sync replication the splitter at the active production receives an ACK

from the RPA only when the I/O reaches the remote site. In most cases by that

time the splitter at the standby production has already received an ACK from

the RPA, since it is only marking the data and not replicating it.

Deployment considerations

As in regular replication, load balancing of the consistency groups over RPAs

can greatly affect RecoverPoint overall performance. Hence, in MetroPoint, it is

advisable to balance the active and standby copies of the consistency groups

between the two production RecoverPoint clusters.

Example:

There are four MetroPoint CGs with throughput of 50 MB/s each. All of the RPA

clusters have 2 RPAs each. How should the CGs be configured to balance the

load?

Put two CGs on each RPA role. In each RPA role define one of the CGs as active

on one of the production RP clusters and the other CG as active on the second

production RP cluster.

In this way, each RPA at production will need to handle an incoming throughput

of 100 MB/s but replicate only 50 MB/s, and each RPA at the remote site will

need to distribute 100 MB/s.

Example:

Two MetroPoint CGs have throughput of 50 MB/s each. All RPA clusters have 2

RPAs each. How should the CGs be configured to balance the load?

Put one CG on each RPA role. Unless you have WAN restrictions between one of

the production sites and the remote site, it doesn’t matter performance-wise

which copy is active and which is standby.

It would be incorrect to put the two CGs on RPA 1 but define one of the CGs as

active on one production site and the other CG as active on the second

production site, since in that configuration, RPA 1 on the remote site will need to

distribute 100 MB/s while RPA 2 will be idle.
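The load figures in the two preceding examples can be reproduced with a small model of the MetroPoint I/O flow. This is a sketch: the cluster labels "A" and "B" and the function name are hypothetical, and the model assumes that both production RPAs of a role receive every write, that only the active side replicates it, and that the remote RPA with the same role distributes it.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

PRODUCTION_CLUSTERS = ("A", "B")  # hypothetical labels for the two Metro sites


def metropoint_load(cgs: List[Tuple[int, str, float]]):
    """cgs: (rpa_role, active_cluster, throughput_mb_s) for each MetroPoint CG."""
    incoming: Dict[Tuple[str, int], float] = defaultdict(float)
    replicated: Dict[Tuple[str, int], float] = defaultdict(float)
    distributed: Dict[int, float] = defaultdict(float)
    for role, active_cluster, mb_s in cgs:
        for cluster in PRODUCTION_CLUSTERS:
            incoming[(cluster, role)] += mb_s      # both Metro sides receive the I/O
        replicated[(active_cluster, role)] += mb_s  # only the active side replicates
        distributed[role] += mb_s                   # same role at the remote site
    return dict(incoming), dict(replicated), dict(distributed)


# Four CGs of 50 MB/s: two per RPA role, one active on each production cluster.
balanced = [(1, "A", 50), (1, "B", 50), (2, "A", 50), (2, "B", 50)]
inc, rep, dist = metropoint_load(balanced)
print(inc)   # every production RPA receives 100 MB/s
print(rep)   # every production RPA replicates 50 MB/s
print(dist)  # every remote RPA distributes 100 MB/s

# Two CGs of 50 MB/s both placed on RPA 1: remote RPA 2 stays idle.
_, _, skewed = metropoint_load([(1, "A", 50), (1, "B", 50)])
print(skewed.get(1, 0), skewed.get(2, 0))  # 100.0 0
```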

Performance Test Results

Performance tests were conducted on an example environment for each of the

following RecoverPoint write splitters:

VPLEX

VMAX

Unity

VNX

Physical RPAs

Virtual RPAs

It is important to note that the results depend on many parameters and can vary

significantly even if only some of the environment parameters are changed. It is

recommended, therefore, that you not compare the results of different

environments, since they are different from each other in so many parameters.

When assessing the expected performance of your environment, refer to the

performance results of the environment that most closely resembles yours. The

given performance results provide only an estimate of the performance that

you can expect.

In order to be able to see the effect of RecoverPoint on performance, each

table contains the results with and without RecoverPoint. The results without

RecoverPoint can be considered as a baseline, or as the performance

characteristic of the example environment.

VPLEX splitter test

Configuration

Hosts:

2 Cisco UCS C200 E5620:

2 Intel® Xeon® Processors E5630, 2133 MHz (4 cores and 8 virtual CPUs

each)

24 GB RAM

FC connectivity to the storage array using QLogic QLE2564 HBA

PowerPath v 6.0.0.2.0-3 multipath software was used

OS Solaris 11.3

Production storage:

VPLEX Medium (2 engines):

Software version: INT_D35-30-0.0.08 (Acropolis 5.5)

8 backend FC ports, 8 frontend FC ports connected

4 VPLEX directors

1:1 volume encapsulation

VNX backend storage (8000):

Flare version: 05.33.009.3.101

8 frontend FC ports connected

96 SAS disks of 820GB each, in 12 RAID groups (RAID 1/0, 3282GB each)

used for 512 production volumes

48 SAS disks of 820GB each, in 6 RAID groups (RAID 1/0, 3282GB each)

used for 24 journal volumes

Replica storage:

VNX 8000:

Flare version: 05.33.006.5.102

4 frontend FC ports connected

96 SAS disks of 820GB each, in 12 RAID groups (RAID 1/0, 3282GB each)

used for 512 production volumes

40 SAS disks of 820GB each, in 5 RAID groups (RAID 1/0, 3282GB each)

used for 24 journal volumes

Communication between RPA clusters:

IP bandwidth of 10Gb per RPA

Performance results

Table 8. Async replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 1.23 | 1.60 | 0.37 |
| 8 | 150 | 1.25 | 1.60 | 0.25 |
| 64 | 150 | 1.49 | 2.04 | 0.55 |
| 128 | 75 | 1.71 | 2.34 | 0.63 |
| 512 | 35 | 3.08 | 4.19 | 1.11 |
| 1024 | 15 | 4.81 | 6.83 | 2.02 |
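When reusing these figures, it can help to confirm that each published added response time equals the difference between the two measured columns. The sketch below does that for Table 8; the helper name and the rounding tolerance are assumptions made for illustration. With the values as published, only the 8 KB row is flagged (1.60 - 1.25 = 0.35, whereas the table lists 0.25); the table alone does not show which of the three values is off.

```python
# Rows of Table 8: (I/O size in KB, without RecoverPoint ms,
#                   with RecoverPoint replicating ms, published added ms).
TABLE_8 = [
    (4, 1.23, 1.60, 0.37),
    (8, 1.25, 1.60, 0.25),
    (64, 1.49, 2.04, 0.55),
    (128, 1.71, 2.34, 0.63),
    (512, 3.08, 4.19, 1.11),
    (1024, 4.81, 6.83, 2.02),
]

# Assumption: values are rounded to two decimals, so allow ~0.01 ms of slack.
TOLERANCE_MS = 0.011


def inconsistent_rows(rows, tolerance_ms=TOLERANCE_MS):
    """Return the I/O sizes whose published added time differs from with - without."""
    flagged = []
    for io_size_kb, without_ms, with_ms, published_added_ms in rows:
        computed_added_ms = with_ms - without_ms
        if abs(computed_added_ms - published_added_ms) > tolerance_ms:
            flagged.append(io_size_kb)
    return flagged


flagged = inconsistent_rows(TABLE_8)
print("Rows to double-check (I/O size in KB):", flagged)
```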

Table 9. Sync replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 1.23 | 2.74 | 1.51 |
| 8 | 150 | 1.25 | 3.06 | 1.81 |
| 64 | 150 | 1.49 | 3.54 | 2.05 |
| 128 | 75 | 1.71 | 4.96 | 3.25 |
| 512 | 35 | 3.08 | 10.08 | 7.00 |
| 1024 | 15 | 4.81 | 18.15 | 13.34 |

Table 10. Application pattern performance in async replication

| Application pattern | IOPS without RecoverPoint | Throughput without RecoverPoint (MB/s) | IOPS with RecoverPoint replicating | Throughput with RecoverPoint replicating (MB/s) |
|---|---|---|---|---|
| OLTP1 | 70,935 | 277 | 69,213 | 261 |
| OLTP2 | 30,879 | 578 | 28,602 | 536 |
| OLTP2HW | 38,466 | 722 | 22,340 | 419 |
| DSS2 | 32,680 | 1,659 | 33,375 | 1,695 |
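One way to read the application-pattern tables is as the fraction of baseline IOPS that is retained while RecoverPoint is replicating. The following sketch computes that fraction from the Table 10 figures; the dictionary layout and the function name are illustrative assumptions, not something defined by this guide.

```python
# IOPS from Table 10: pattern -> (without RecoverPoint, with RecoverPoint replicating).
TABLE_10_IOPS = {
    "OLTP1": (70_935, 69_213),
    "OLTP2": (30_879, 28_602),
    "OLTP2HW": (38_466, 22_340),
    "DSS2": (32_680, 33_375),
}


def iops_retained(without_rp: int, with_rp: int) -> float:
    """Fraction of baseline IOPS achieved while RecoverPoint is replicating."""
    return with_rp / without_rp


retained = {
    pattern: iops_retained(without_rp, with_rp)
    for pattern, (without_rp, with_rp) in TABLE_10_IOPS.items()
}

for pattern, fraction in retained.items():
    print(f"{pattern}: {fraction:.1%} of baseline IOPS")
```

A ratio slightly above 1.0, as for DSS2, means the measured IOPS with replication was marginally higher than the baseline measurement in that run.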

Table 11. Async replication added response time for application patterns, at 60% max IOPS

| Application pattern | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|
| OLTP1 | 1.77 | 2.23 | 0.46 |
| OLTP2 | 3.05 | 3.4 | 0.35 |
| OLTP2HW | 1.4 | 1.5 | 0.1 |
| DSS2 | 5.24 | 5.29 | 0.05 |

VMAX splitter test

Configuration

Hosts:

2 Cisco UCS C200 E5620:

2 Intel® Xeon® Processors E5630, 2133 MHz (4 cores and 8 virtual CPUs each)

24 GB RAM

FC connectivity to the storage array using QLogic QLE2564 HBA

PowerPath v 6.0.0.2.0-3 multipath software was used

OS Solaris 11.3

Production storage:

VMAX2 40K:

Enginuity 5876.251.161

32 frontend FC ports connected

64 FC disks of 5GB each, in RAID 5 for 512 production volumes

38 FC disks of 50GB each, in RAID 5 for 24 journal volumes

Replica storage:

VPLEX Medium (2 engines):

Software version: INT_D35-30-0.0.08 (Acropolis 5.5)

8 backend FC ports, 8 frontend FC ports connected

4 VPLEX directors

1:1 volume encapsulation

VNX backend storage (8000):

Flare version: 05.33.009.3.101

8 frontend FC ports connected

96 SAS disks of 820GB each, in 12 RAID groups (RAID 1/0, 3282GB each) used for 512 production volumes

48 SAS disks of 820GB each, in 6 RAID groups (RAID 1/0, 3282GB each) used for 24 journal volumes

Communication between RPA clusters:

IP bandwidth of 10Gb per RPA

Performance results

Table 12. Async replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 0.24 | 1.12 | 0.88 |
| 8 | 150 | 0.27 | 1.12 | 0.85 |
| 64 | 150 | 0.54 | 1.73 | 1.19 |
| 128 | 75 | 0.97 | 2.9 | 1.93 |
| 512 | 35 | 3.68 | 10.7 | 7.02 |
| 1024 | 15 | 7.28 | 20.65 | 13.37 |

Table 13. Sync replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 0.24 | 2.48 | 2.24 |
| 8 | 150 | 0.27 | 2.72 | 2.45 |
| 64 | 150 | 0.54 | 3.34 | 2.8 |
| 128 | 75 | 0.97 | 7.03 | 6.06 |
| 512 | 35 | 3.68 | 27.1 | 23.42 |
| 1024 | 15 | 7.28 | 54.14 | 46.86 |
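The async and sync results for the same environment can be compared per I/O size. The sketch below divides the sync added response time (Table 13) by the async added response time (Table 12) for the VMAX splitter test; the variable names are illustrative assumptions, and the ratio itself is not a metric defined in this guide.

```python
# Added response time by RecoverPoint (ms), keyed by I/O size in KB.
ASYNC_ADDED_MS = {4: 0.88, 8: 0.85, 64: 1.19, 128: 1.93, 512: 7.02, 1024: 13.37}  # Table 12
SYNC_ADDED_MS = {4: 2.24, 8: 2.45, 64: 2.80, 128: 6.06, 512: 23.42, 1024: 46.86}  # Table 13

# Ratio of sync to async added response time for each I/O size.
sync_to_async = {
    size_kb: SYNC_ADDED_MS[size_kb] / ASYNC_ADDED_MS[size_kb]
    for size_kb in ASYNC_ADDED_MS
}

for size_kb, ratio in sync_to_async.items():
    print(f"{size_kb:>5} KB: sync adds {ratio:.1f}x the async added response time")
```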

Table 14. Application pattern performance in async replication

| Application pattern | IOPS without RecoverPoint | Throughput without RecoverPoint (MB/s) | IOPS with RecoverPoint replicating | Throughput with RecoverPoint replicating (MB/s) |
|---|---|---|---|---|
| OLTP1 | 147,560 | 576 | 79,955 | 312 |
| OLTP2 | 78,090 | 1,463 | 32,551 | 609 |
| OLTP2HW | 56,868 | 1,068 | 19,536 | 366 |
| DSS2 | 69,286 | 3,518 | 39,735 | 2,017 |

Table 15. Async replication added response time for application patterns, at 60% max IOPS

| Application pattern | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|
| OLTP1 | 2.18 | 4.13 | 1.95 |
| OLTP2 | 2.2 | 4.38 | 2.18 |
| OLTP2HW | 1.72 | 2.23 | 0.51 |
| DSS2 | 1.58 | 2.43 | 0.85 |

Unity splitter test

Configuration

Hosts:

PowerEdge R210:

Intel(R) Core(TM) i3 CPU 540 @ 3.07GHz

16 GB RAM

ISP2532-based 8Gb Fibre Channel to PCI Express HBA

PowerPath v 5.5 P 02 (build 12) multipath software was used

Production storage:

Unity 500:

Software version: 4.0.0.733913

32 frontend FC ports connected

88 FC disks of 20GB each, in RAID 1/0 for 512 production volumes

24 FC disks of 50GB each, in RAID 1/0 for 128 journal volumes

Replica storage:

Unity 500:

Software version: 4.0.0.733913

8 FC ports connected

88 FC disks of 20GB each, in RAID 1/0 for 512 production volumes

24 FC disks of 50GB each, in RAID 1/0 for 128 journal volumes

Communication between RPA clusters:

FC bandwidth of 8 Gb per RPA FC port

Performance results

Table 16. Async replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 1.02 | 1.52 | 0.5 |
| 8 | 150 | 1.2 | 1.73 | 0.53 |
| 64 | 150 | 1.18 | 1.91 | 0.73 |
| 128 | 75 | 1.33 | 2.32 | 0.99 |
| 512 | 35 | 3 | 5.51 | 2.51 |
| 1024 | 15 | 4.64 | 7.92 | 3.28 |

Table 17. Sync replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 1.02 | 2.89 | 1.87 |
| 8 | 150 | 1.2 | 3.22 | 2.02 |
| 64 | 150 | 1.18 | 3.88 | 2.7 |
| 128 | 75 | 1.33 | 4.37 | 3.04 |
| 512 | 35 | 3 | 10.81 | 7.81 |
| 1024 | 15 | 4.64 | 14.07 | 9.43 |

Table 18. Application pattern performance in async replication

| Application pattern | IOPS without RecoverPoint | Throughput without RecoverPoint (MB/s) | IOPS with RecoverPoint replicating | Throughput with RecoverPoint replicating (MB/s) |
|---|---|---|---|---|
| OLTP1 | 111,119 | 434 | 65,417 | 255 |
| OLTP2 | 45,405 | 851 | 15,110 | 283 |
| OLTP2HW | 37,170 | 698 | 9,416 | 176 |
| DSS2 | 24,352 | 1,237 | 18,712 | 950 |

Table 19. Async replication added response time for application patterns, at 60% max IOPS

| Application pattern | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|
| OLTP1 | 2.30 | 4.65 | 2.35 |
| OLTP2 | 3.78 | 6.25 | 2.48 |
| OLTP2HW | 2.49 | 3.02 | 0.53 |
| DSS2 | 1.26 | 2.26 | 1.00 |

VNX splitter test with physical RPAs

Configuration

Hosts:

2 Cisco UCS C200 E5620:

2 Intel® Xeon® Processors E5630, 2133 MHz (4 cores and 8 virtual CPUs each)

24 GB RAM

FC connectivity to the storage array using QLogic QLE2564 HBA

PowerPath v 6.0.0.2.0-3 multipath software was used

OS Solaris 11.3

Production storage:

VNX 8000:

Flare version: 05.33.006.5.102

4 frontend FC ports connected

96 SAS disks of 820GB each, in 12 RAID groups (RAID 1/0, 3282GB each) used for 512 production volumes

40 SAS disks of 820GB each, in 5 RAID groups (RAID 1/0, 3282GB each) used for 24 journal volumes

Replica storage:

VPLEX Medium (2 engines):

Software version: INT_D35-30-0.0.08 (Acropolis 5.5)

8 backend FC ports, 8 frontend FC ports connected

4 VPLEX directors

1:1 volume encapsulation

VNX backend storage (8000):

Flare version: 05.33.009.3.101

8 frontend FC ports connected

96 SAS disks of 820GB each, in 12 RAID groups (RAID 1/0, 3282GB each) used for 512 user volumes

48 SAS disks of 820GB each, in 6 RAID groups (RAID 1/0, 3282GB each) used for 24 journal volumes

Communication between RPA clusters:

FC bandwidth of 8 Gb per RPA FC port

Table 20. Async replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 0.51 | 0.84 | 0.33 |
| 8 | 150 | 0.52 | 0.85 | 0.33 |
| 64 | 150 | 0.67 | 1.06 | 0.39 |
| 128 | 75 | 0.775 | 1.238 | 0.463 |
| 512 | 35 | 1.452 | 2.496 | 1.044 |
| 1024 | 15 | 4.246 | 6.205 | 1.959 |

Table 21. Sync replication added response time at very low IOPS

| I/O size (KB) | IOPS | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|---|
| 4 | 150 | 0.51 | 1.94 | 1.43 |
| 8 | 150 | 0.52 | 2.07 | 1.55 |
| 64 | 150 | 0.67 | 2.42 | 1.75 |
| 128 | 75 | 0.78 | 2.79 | 2.017 |
| 512 | 35 | 1.45 | 4.53 | 3.073 |
| 1024 | 15 | 4.25 | 8.28 | 4.031 |

Table 22. Application pattern performance in async replication

| Application pattern | IOPS without RecoverPoint | Throughput without RecoverPoint (MB/s) | IOPS with RecoverPoint replicating | Throughput with RecoverPoint replicating (MB/s) |
|---|---|---|---|---|
| OLTP1 | 54,183 | 211 | 42,634 | 166 |
| OLTP2 | 25,647 | 480 | 25,094 | 468 |
| OLTP2HW | 22,982 | 431 | 20,216 | 379 |
| DSS2 | 17,059 | 866 | 16,872 | 858 |

Table 23. Async replication added response time for application patterns, at 60% max IOPS

| Application pattern | Response time without RecoverPoint (ms) | Response time with RecoverPoint replicating (ms) | Added response time by RecoverPoint (ms) |
|---|---|---|---|
| OLTP1 | 0.96 | 1.21 | 0.25 |
| OLTP2 | 1.95 | 2.89 | 0.94 |
| OLTP2HW | 0.81 | 1.11 | 0.3 |
| DSS2 | 2.64 | 3.86 | 1.22 |

VNX splitter test with virtual RPAs

Configuration

Host:

Dell R210:

Dual Intel® Xeon® Processor E5630 @ 2.13GHz

Memory: 24 GB

PowerPath v 5.5 (build 589) multipath software was used