
Hadoop

Uploaded by ayazahmadpat


Edureka

Commodity hardware

Mahout:-

Hive :-

JobTracker - TaskTracker

Cloudera, Hortonworks

Master Slave

Within IBM InfoSphere DataStage, the user modifies a configuration file to define multiple processing nodes. These nodes work concurrently to complete each job quickly and efficiently. ... Parallel processing environments are categorized as symmetric multiprocessing (SMP) or massively parallel processing (MPP) systems.
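The idea of splitting one job's data across several processing nodes that work concurrently can be sketched in Python. This is a minimal illustration, not DataStage's partitioner or configuration-file format: it assumes rows are dicts, routes each row to a node by hashing a key column, and uses threads to stand in for nodes. NUM_NODES, the field names and the per-node work are hypothetical.

```python
# Minimal sketch: hash-partition rows across N "processing nodes" and let each
# node process its own partition concurrently. NUM_NODES and the row layout
# are hypothetical; threads stand in for real nodes.
from concurrent.futures import ThreadPoolExecutor
from zlib import crc32

NUM_NODES = 4  # hypothetical: plays the role of the nodes in the config file


def partition_for(key: str, num_nodes: int = NUM_NODES) -> int:
    # crc32 is deterministic across runs, unlike Python's salted str hash()
    return crc32(key.encode("utf-8")) % num_nodes


def hash_partition(rows, key, num_nodes=NUM_NODES):
    partitions = [[] for _ in range(num_nodes)]
    for row in rows:
        partitions[partition_for(row[key], num_nodes)].append(row)
    return partitions


def node_work(partition):
    # Per-node work: total the amount for each customer in this partition
    totals = {}
    for row in partition:
        totals[row["customer"]] = totals.get(row["customer"], 0) + row["amount"]
    return totals


def run_parallel(rows, key="customer", num_nodes=NUM_NODES):
    partitions = hash_partition(rows, key, num_nodes)
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        results = list(pool.map(node_work, partitions))
    merged = {}
    for partial in results:
        merged.update(partial)  # no conflicts: a given key lives on one node only
    return merged
```

For example, `run_parallel([{"customer": "a", "amount": 10}, {"customer": "b", "amount": 5}, {"customer": "a", "amount": 7}])` gives `{"a": 17, "b": 5}`. Threads in one process loosely resemble SMP (shared memory); in an MPP system each node owns its partition and shares nothing, which is why routing the same key to the same node matters.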

===============

Data lake systems tend to employ extract, load and transform (ELT) methods for collecting and integrating data, instead of the extract, transform and load (ETL) approaches typically used in data warehouses. Data can be extracted and processed outside of HDFS using MapReduce, Spark and other data processing frameworks.

Hadoop data lakes have come to hold both raw and curated data.
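The difference between ETL and ELT is mainly where the transform step happens. A minimal Python sketch, assuming plain dicts stand in for the warehouse and the lake and a hypothetical `clean()` rule as the transformation:

```python
# Minimal sketch contrasting ETL and ELT on in-memory "targets".
# The record shape and the clean() rule are hypothetical.

raw_records = [
    {"id": "1", "name": " Alice ", "amount": "10.5"},
    {"id": "2", "name": "BOB", "amount": "n/a"},
]


def clean(record):
    """Transform step: trim/normalise names and parse amounts (None if invalid)."""
    try:
        amount = float(record["amount"])
    except ValueError:
        amount = None
    return {"id": int(record["id"]), "name": record["name"].strip().title(), "amount": amount}


def etl(records, warehouse):
    # ETL: transform first, then load only the curated result
    warehouse["sales"] = [clean(r) for r in records]
    return warehouse


def elt(records, lake):
    # ELT: load the raw data as-is, then transform inside the target
    lake["raw_sales"] = list(records)
    lake["curated_sales"] = [clean(r) for r in lake["raw_sales"]]
    return lake
```

With ELT the lake ends up holding both `raw_sales` and `curated_sales`, which matches the observation that Hadoop data lakes hold both raw and curated data; the ETL target only ever sees the cleaned rows.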

https://www.ibm.com/blogs/insights-on-business/sap-consulting/enterprise-analytics-reference-architecture/

http://www.ibmbigdatahub.com/blog/ingesting-data-data-value-chain

http://www.datavirtualizationblog.com/logical-architectures-big-data-analytics/

https://www.xenonstack.com/blog/data-engineering/ingestion-processing-data-for-big-data-iot-solutions

Prescriptive vs Predictive

ETL tools like Talend/Pentaho?

Data storage format (like Parquet/Avro)

Warehouse:- enterprise data, structured data

Data Lake:- ingests data of any shape and any type, not only structured data

Strategic reporting: done in the warehouse
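The warehouse/lake contrast above is often described as schema-on-write versus schema-on-read. A minimal Python sketch, assuming a hypothetical two-field `SCHEMA`: the warehouse rejects records that do not fit the schema when they are written, while the lake stores any JSON payload and applies the schema only when reading.

```python
# Sketch: schema-on-write (warehouse) vs schema-on-read (data lake).
# SCHEMA and the field names are hypothetical.
import json

SCHEMA = {"order_id": int, "amount": float}


class Warehouse:
    def __init__(self):
        self.rows = []

    def write(self, record: dict):
        # Validate structure up front; reject anything that does not fit
        for field, ftype in SCHEMA.items():
            if not isinstance(record.get(field), ftype):
                raise ValueError(f"rejected: {field} must be {ftype.__name__}")
        self.rows.append({f: record[f] for f in SCHEMA})


class DataLake:
    def __init__(self):
        self.blobs = []

    def write(self, payload: str):
        self.blobs.append(payload)  # any shape, stored as-is

    def read(self, schema=SCHEMA):
        # Apply the schema at read time; non-matching documents are skipped
        for blob in self.blobs:
            doc = json.loads(blob)
            if all(isinstance(doc.get(f), t) for f, t in schema.items()):
                yield {f: doc[f] for f in schema}
```

The lake keeps the sensor reading that does not match the order schema; a different reader could later apply a different schema to the same raw data.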

==================================Types of NoSQL DB=====

Key Value Store

Columnar Store

Graph Database

Document Database
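To make the four models concrete, here is an illustrative sketch of how the same customer/order data might be shaped in each, using plain Python structures. No real database or client API is involved, and the names and values are made up.

```python
# Illustrative only: one customer's data shaped for each NoSQL model.
# All names and values are hypothetical sample data.

# Key-value store: an opaque value looked up by a single key
kv_store = {"customer:42": '{"name": "Asha", "city": "Pune"}'}

# Columnar / wide-column store: row key -> column family -> columns
wide_column = {
    "customer:42": {
        "profile": {"name": "Asha", "city": "Pune"},
        "orders": {"2019-11-01": 250.0, "2019-11-13": 99.5},
    }
}

# Document database: a self-describing, nested document
document = {"_id": 42, "name": "Asha", "city": "Pune",
            "orders": [{"date": "2019-11-01", "amount": 250.0}]}

# Graph database: nodes plus typed edges (relationships)
nodes = {"c42": {"label": "Customer"}, "p7": {"label": "Product"}}
edges = [("c42", "BOUGHT", "p7")]


def neighbours(node_id, rel):
    """Follow outgoing edges of one relationship type, as a graph query would."""
    return [dst for src, r, dst in edges if src == node_id and r == rel]
```

The key-value store can only fetch the whole value by key, the wide-column layout lets a reader pull one column family, the document keeps related data nested together, and the graph makes relationships first-class.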

=======================

Time-series DB: used for IoT and industrial data
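A typical time-series query on IoT data is downsampling raw readings into fixed time buckets. A minimal Python sketch, assuming readings arrive as `(epoch_seconds, value)` pairs; the one-minute bucket size is an arbitrary choice.

```python
# Sketch: downsample raw sensor readings into fixed buckets (mean per bucket),
# the kind of aggregation a time-series DB is optimised for.
from collections import defaultdict


def downsample(readings, bucket_seconds=60):
    """readings: iterable of (epoch_seconds, value) -> {bucket_start: mean}"""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, value in readings:
        bucket = ts - ts % bucket_seconds  # align to the start of the bucket
        sums[bucket] += value
        counts[bucket] += 1
    return {b: sums[b] / counts[b] for b in sorted(sums)}
```

For example, readings at 0 s and 30 s (20.0 and 22.0) collapse into a single point `{0: 21.0}`.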

FastLoad vs MultiLoad - Teradata Community

FastLoad has two phases: the acquisition phase and the application phase. MultiLoad (mload) has five phases (note, however, that there is no acquisition phase for an mload DELETE task).

mload phases: Preliminary, DML Transaction, Acquisition, Application, Cleanup.

As the name says, FastLoad is designed for fast loading.

MultiLoad (mload):

LOGTABLE: identifies the table used to checkpoint the information required for a safe, automatic restart of Teradata MultiLoad when the client or the Teradata Database system fails.
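The restart idea behind a log table can be sketched in Python. This is not MultiLoad's actual log-table format or restart protocol; it only assumes that the loader records the last committed row number after each batch, so a rerun resumes from that checkpoint. `BATCH_SIZE`, the in-memory "tables" and the simulated failure are hypothetical.

```python
# Sketch of checkpoint/restart: after each batch the loader records the last
# committed row in a log table, so a restart resumes instead of reloading.
# BATCH_SIZE, the in-memory tables and the failure switch are hypothetical.

BATCH_SIZE = 2


def load(rows, target, log_table, fail_after_batches=None):
    start = log_table.get("last_committed", 0)  # resume point after a restart
    batches_done = 0
    for i in range(start, len(rows), BATCH_SIZE):
        if fail_after_batches is not None and batches_done == fail_after_batches:
            raise RuntimeError("simulated client/database failure")
        target.extend(rows[i:i + BATCH_SIZE])                          # apply the batch
        log_table["last_committed"] = min(i + BATCH_SIZE, len(rows))  # checkpoint
        batches_done += 1
    return target
```

In a real loader the batch write and the checkpoint update must commit together; otherwise a failure between the two would duplicate or skip rows on restart.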

===============

Teradata WebSphere MQ Access Module

Teradata load/unload

Checkpoint on data

==================TPump (Teradata Parallel Data Pump) for real-time data

TPT (Teradata Parallel Transporter)

===================

Cloud provider Schema Conversion Tool (SCT): source and target

Informatica load script

========================

Data ingestion in cloud:-

Spark, Storm, Amazon Kinesis; Lambda for small, event-based chunks of data.
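Event-based ingestion with Lambda usually means a small handler invoked with a batch of records. A minimal Python sketch of a Lambda handler reading Amazon Kinesis records: Lambda delivers each record's payload base64-encoded under `record["kinesis"]["data"]`. The JSON payload shape (a `value` field) and the count/sum processing are assumptions for illustration.

```python
# Sketch of an event-driven AWS Lambda handler for a small batch of Kinesis
# records. The payload format ({"value": ...}) and processing are hypothetical.
import base64
import json


def lambda_handler(event, context):
    readings = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])  # raw bytes
        readings.append(json.loads(payload))
    # Hypothetical processing step: count events and sum a "value" field
    return {"count": len(readings), "total": sum(r.get("value", 0) for r in readings)}
```

Each invocation handles only the records in one small batch, which is why this style suits small, event-based chunks rather than bulk loads.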

====================

Hive:- Apache Hive is the SQL-on-Hadoop technology.

Parquet file format: columnar
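Why a columnar format helps analytics can be shown with a conceptual Python model of the same table stored row-wise and column-wise. This is not the Parquet file format itself (no row groups, pages, encodings or compression); the table contents are made up.

```python
# Conceptual sketch: the same table stored row-wise vs column-wise.
# Not the Parquet format; sample data is hypothetical.

rows = [
    {"id": 1, "country": "IN", "amount": 120.0},
    {"id": 2, "country": "US", "amount": 80.0},
    {"id": 3, "country": "IN", "amount": 50.0},
]


def to_columns(rows):
    """Pivot a list of records into one list per column."""
    columns = {name: [] for name in rows[0]}
    for row in rows:
        for name, value in row.items():
            columns[name].append(value)
    return columns


columns = to_columns(rows)

# SELECT SUM(amount): the columnar layout scans one contiguous list...
total_columnar = sum(columns["amount"])
# ...while the row layout has to touch every full record.
total_rowwise = sum(r["amount"] for r in rows)
```

Storing each column's values together also makes them compress well (for example, the repeated "IN" values), which is part of why Parquet is popular for analytical queries that read only a few columns.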

=================

A data lake is one of the popular approaches to building a next-generation enterprise analytics platform.

Informatica PowerCenter

These areas include:

B2B exchange

Data governance

Data migration

Data warehousing

Data replication and synchronization

Integration Competency Centers (ICC)

Master Data Management (MDM)

Service-oriented architectures (SOA) and more.

Informatica PowerCenter is an enterprise data integration platform working as a unit.

Real time ingestion tool

=======================Parquet file format: columnar================

=========================== Compression for Parquet Files: for most CDH components, by default Parquet data files are not compressed.

Compression for Parquet Files

Using Parquet Files in HBase

Using Parquet Tables in Hive

Using Parquet Tables in Impala

Using Parquet Files in MapReduce

Using Parquet Files in Pig

Using Parquet Files in Spark

Parquet File Interoperability

Parquet File Structure

Examples of Java Programs to Read and Write Parquet Files

Denormalized:- reporting layer; best suited for read-only access

Normalized:-

Default join:- inner join
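An inner join keeps only rows whose keys match on both sides, while a left outer join keeps every left-side row. A minimal Python sketch with made-up fact and dimension rows; joining them also shows the denormalized shape a read-only reporting layer typically uses.

```python
# Sketch: inner join vs left outer join of a fact table with a dimension.
# The fact/dimension rows are hypothetical sample data.

sales = [  # fact rows
    {"product_id": 1, "qty": 3},
    {"product_id": 2, "qty": 1},
    {"product_id": 9, "qty": 5},  # no matching dimension row
]
products = {1: {"name": "Pen"}, 2: {"name": "Book"}}  # dimension keyed by id


def inner_join(facts, dim):
    # Rows without a match are dropped
    return [{**f, **dim[f["product_id"]]} for f in facts if f["product_id"] in dim]


def left_join(facts, dim):
    # Every fact row survives; missing dimension attributes become None
    return [{**f, **dim.get(f["product_id"], {"name": None})} for f in facts]
```

Here the inner join silently drops the sale for product 9, which is worth remembering when the default join type is inner.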

NoSQL: typically distributed databases

1. Key-value

2. Columnar

3. Graph

4. Document

Cassandra™: A scalable multi-master database with no single points of failure. Type: wide-column (columnar) store, with rows looked up by key.

ACID vs CAP (Consistency, Availability, Partition tolerance)

NoSQL

As the term says, NoSQL means a non-relational ("non-SQL" or "not only SQL") database, e.g. HBase, Cassandra, MongoDB, Riak, CouchDB. It is not based on tables, which is why SQL is generally not the primary way to access the data. A traditional relational database deals with structured data stored in tables of rows and columns, while NoSQL deals with unstructured, unpredictable kinds of data according to the system requirements.

Algorithms for Machine Learning

Other more well-known libraries that exist in this space which can be "easily" leveraged are Apache Mahout, Spark MLlib, FlinkML, Apache SAMOA, H2O, and TensorFlow. Not all of these are interoperable, but Mahout can run on Spark and Flink, SAMOA runs on Flink, and H2O runs on Spark. Cross-platform compatibility is becoming an important topic in this space, so look for more cross-pollination.

Apache Spark is a popular choice for streaming applications.

MapR Streams with the Kafka 0.9 API has shown that it can handle 18 million events per second on a five-node cluster with each message being 200 bytes in size. Leveraging this capability in a scalable platform with decoupled communications is amazing. The cost to scale this platform is very low.
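The quoted benchmark figures translate into aggregate throughput with simple arithmetic. This assumes decimal units (1 GB = 10^9 bytes), an even spread of load across the five nodes, and payload bytes only (no protocol overhead or replication).

```python
# Back-of-envelope throughput from the quoted figures:
# 18 million events/s, 200-byte messages, five nodes.
EVENTS_PER_SEC = 18_000_000
MESSAGE_BYTES = 200
NODES = 5

cluster_bytes_per_sec = EVENTS_PER_SEC * MESSAGE_BYTES  # 3.6e9 B/s = 3.6 GB/s
per_node_events = EVENTS_PER_SEC // NODES               # 3.6 million events/s
per_node_bytes = cluster_bytes_per_sec // NODES         # 720 MB/s per node
```

So each node sustains roughly 3.6 million events and 720 MB of payload per second under these assumptions.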

=================

Elasticsearch

S3

Cassandra

==================

MySQL - Database sharding vs partitioning - Stack Overflow
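The distinction can be sketched in Python: partitioning splits one table into pieces inside a single database server (here by year range), while sharding spreads rows across separate database servers (here by hashing the customer id). The server names, the partition naming and the 2018 cut-off are hypothetical.

```python
# Sketch: sharding (rows spread across servers) vs partitioning (one table
# split inside a single server). Server names and the scheme are hypothetical.
from zlib import crc32

SHARDS = ["db-server-0", "db-server-1", "db-server-2"]


def shard_for(customer_id: int) -> str:
    # Hash-based routing: each customer always lives on exactly one server
    return SHARDS[crc32(str(customer_id).encode()) % len(SHARDS)]


def partition_for(order_year: int) -> str:
    # Range partitioning of one server's "orders" table
    if order_year < 2018:
        return "orders_p_old"
    return f"orders_p{order_year}"
```

With sharding the application (or a router) must know which server to query; with partitioning the database engine picks the partition, and queries that filter on the partition key can skip the others.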

Popular ETL Tools:

DataStage - IBM Product

SSIS - Microsoft product (SSAS for creating cubes (facts/dimensions for the reporting layer), SSRS for reporting)

ODI - Oracle (GoldenGate for real-time data push)

Informatica - Informatica product

Ab Initio

Open Source ETL:

Pentaho

Talend

========================

Cloud Migration

AWS Cloud

======================

Oracle vs DB2

Primary key and primary index

ELT vs ETL

LookUp optimization

Strategic Reporting

Facts and Dimension

In-memory: the cache is huge

In-memory database for mission critical OLTP application
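A common lookup optimization in ETL is to read the reference table into an in-memory cache once instead of querying it for every incoming row. A minimal Python sketch; `query_dimension()` is a hypothetical stand-in for a database round trip, and the call counter shows how many trips each approach makes.

```python
# Sketch: per-row lookups vs a cached lookup. query_dimension() is a
# hypothetical stand-in for one database round trip.

DIMENSION_TABLE = {"IN": "India", "US": "United States"}
calls = {"db": 0}


def query_dimension(code):
    calls["db"] += 1  # one simulated round trip per call
    return DIMENSION_TABLE.get(code)


def enrich_uncached(rows):
    return [dict(r, country=query_dimension(r["code"])) for r in rows]


def enrich_cached(rows):
    cache = dict(DIMENSION_TABLE)  # one bulk read builds the lookup cache
    calls["db"] += 1
    return [dict(r, country=cache.get(r["code"])) for r in rows]
```

The trade-off is memory: the whole reference table must fit in the cache, which is why a large in-memory cache matters for lookup-heavy jobs.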

An Enterprise Data Lake provides a unified view of all the data required to meet the organization's analytical reporting needs.

Developed multiple MapReduce jobs in Java for data cleaning and preprocessing.

Imported and exported data into and out of HDFS and Hive using Sqoop.

=================

Teradata:- maximum parallelism, much more than other databases. Query-intensive workloads are supported through the primary index.
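How a primary index spreads rows over parallel units (AMPs in Teradata) can be sketched conceptually: hash the primary-index value and map the hash to a unit. Teradata uses its own row-hash algorithm and hash maps; `crc32` and `NUM_AMPS` here are stand-in assumptions.

```python
# Conceptual sketch: primary-index value -> hash -> parallel unit (AMP).
# crc32 and NUM_AMPS are stand-ins, not Teradata's hashing.
from collections import Counter
from zlib import crc32

NUM_AMPS = 4


def amp_for(pi_value) -> int:
    return crc32(str(pi_value).encode()) % NUM_AMPS


def distribution(pi_values):
    """How many rows each AMP would own for the given PI values."""
    return Counter(amp_for(v) for v in pi_values)
```

A high-cardinality primary index (such as a unique id) spreads rows across all units so they work in parallel, while a low-cardinality one (such as a two-value gender code) can occupy at most two units, leaving the rest idle. A lookup by primary-index value goes straight to a single unit.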

===========================

Pages/Blocks--->Extents--->Segments (smallest to largest storage unit)

=========================MapReduce============

Context class: provides various mechanisms for the mapper and reducer to communicate with the Hadoop framework.
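The MapReduce flow can be sketched in Python as a word count with a cleaning step: `mapper()` emits key/value pairs through a context object, the framework groups them by key (shuffle and sort), and `reducer()` emits one result per key. The `Context` class here only mimics the role of the Java Context's `write()` method; it is not Hadoop's API.

```python
# Python sketch of the MapReduce flow. Context only mimics the role of
# Hadoop's Java Context (write()); it is not the real API.
from collections import defaultdict


class Context:
    def __init__(self):
        self.output = []

    def write(self, key, value):
        self.output.append((key, value))


def mapper(line, context):
    # Data cleaning: lower-case and split on whitespace (drops empty tokens)
    for word in line.lower().split():
        context.write(word, 1)


def reducer(key, values, context):
    context.write(key, sum(values))


def run_job(lines):
    map_ctx = Context()
    for line in lines:
        mapper(line, map_ctx)
    groups = defaultdict(list)       # shuffle: group values by key
    for key, value in map_ctx.output:
        groups[key].append(value)
    reduce_ctx = Context()
    for key in sorted(groups):       # keys reach reducers in sorted order
        reducer(key, groups[key], reduce_ctx)
    return dict(reduce_ctx.output)
```

For example, `run_job(["Hadoop is fast", "hadoop IS big"])` gives `{"big": 1, "fast": 1, "hadoop": 2, "is": 2}`.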

=======================

Hadoop uses its own types in order to handle objects the Hadoop way. For example, Hadoop uses Text instead of Java's String. The Text class in Hadoop is similar to a Java String; however, Text implements interfaces like Comparable, Writable and WritableComparable.

===========================

The Writable and WritableComparable interfaces

If you browse the Hadoop API for the org.apache.hadoop.io package, you'll see some familiar classes such as Text and IntWritable along with others with the Writable suffix.
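The Writable contract can be illustrated with a Python analogue: `write()` serializes the fields to a binary stream and `readFields()` restores them in the same order. Hadoop's IntWritable writes its value with `DataOutput.writeInt`, i.e. 4 bytes big-endian, which `struct` format `">i"` reproduces. The class and method names mirror Hadoop's for readability only; this is not Hadoop code.

```python
# Python analogue of Hadoop's Writable/WritableComparable contract.
# Names mirror Hadoop's IntWritable for clarity; this is not Hadoop code.
import io
import struct


class IntWritable:
    def __init__(self, value=0):
        self.value = value  # set at construction...

    def set(self, value):
        self.value = value  # ...or via a setter

    def get(self):
        return self.value

    def write(self, out):
        # Serialize as a 4-byte big-endian signed int
        out.write(struct.pack(">i", self.value))

    def readFields(self, inp):
        # Deserialize in the same order and format it was written
        (self.value,) = struct.unpack(">i", inp.read(4))

    def compareTo(self, other):
        # The "Comparable" part: lets keys be sorted during the shuffle
        return (self.value > other.value) - (self.value < other.value)
```

Because objects are reused and refilled via `readFields()`, the framework can stream millions of records without allocating a new object per record.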

==================

Primitive wrapper classes

These classes are conceptually similar to the primitive wrapper classes, such as Integer and Long found in java.lang. They hold a single primitive value that can be set either at construction or via a setter method.

BooleanWritable

ByteWritable

DoubleWritable

FloatWritable

IntWritable

LongWritable

VIntWritable – a variable length integer type

VLongWritable – a variable length long type
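Variable-length types save space because small values take fewer bytes. The Python sketch below is modelled on the scheme used by Hadoop's `WritableUtils.writeVLong`/`readVLong` as we understand it (values from -112 to 127 in one byte; otherwise a marker byte encoding sign and length, followed by big-endian bytes). Check the Hadoop source before relying on byte-for-byte compatibility.

```python
# Sketch of a Hadoop-style variable-length long encoding (assumed to match
# WritableUtils.writeVLong/readVLong; verify against the Hadoop source).
import struct


def write_vlong(i: int) -> bytes:
    if -112 <= i <= 127:
        return struct.pack(">b", i)  # small values: a single byte
    length = -112
    if i < 0:
        i = ~i                       # one's complement for negatives
        length = -120
    tmp = i
    while tmp != 0:                  # count the payload bytes needed
        tmp >>= 8
        length -= 1
    out = bytearray(struct.pack(">b", length))  # marker: sign + length
    nbytes = -(length + 120) if length < -120 else -(length + 112)
    for idx in range(nbytes, 0, -1):            # big-endian payload
        out.append((i >> ((idx - 1) * 8)) & 0xFF)
    return bytes(out)


def read_vlong(data: bytes) -> int:
    first = struct.unpack(">b", data[:1])[0]
    if first >= -112:
        return first
    negative = first < -120
    nbytes = -(first + 120) if negative else -(first + 112)
    value = 0
    for b in data[1:1 + nbytes]:
        value = (value << 8) | b
    return ~value if negative else value
```

For example, 127 encodes to one byte while 128 needs two, so a column of mostly small counts costs far less than the fixed 4 or 8 bytes of IntWritable/LongWritable.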

==================

https://www.hakkalabs.co/articles/cassandra-data-modeling-guide

Potrebbero piacerti anche

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (399)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (73)

- Threat Intelligence in PracticeDocumento62 pagineThreat Intelligence in Practicejakpyke100% (1)

- Minal KhandareDocumento4 pagineMinal KhandareayazahmadpatNessuna valutazione finora

- Resume Vidya PMPDocumento5 pagineResume Vidya PMPayazahmadpatNessuna valutazione finora

- CV New As of 11132019Documento3 pagineCV New As of 11132019ayazahmadpatNessuna valutazione finora

- Ramesh ResumeDocumento4 pagineRamesh ResumeayazahmadpatNessuna valutazione finora

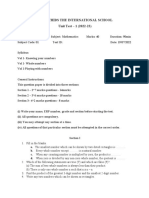

- Volume1 Test1 - Grade6Documento2 pagineVolume1 Test1 - Grade6ayazahmadpatNessuna valutazione finora

- Java MQ PDFDocumento188 pagineJava MQ PDFayazahmadpatNessuna valutazione finora

- CV SampleDocumento1 paginaCV SampleayazahmadpatNessuna valutazione finora

- TicketVoucher IBFA11309222000004Documento3 pagineTicketVoucher IBFA11309222000004ayazahmadpatNessuna valutazione finora

- WS Message Broker BasicsDocumento368 pagineWS Message Broker BasicsyousufbadarNessuna valutazione finora

- Jitender Kumar KhattarDocumento2 pagineJitender Kumar KhattarayazahmadpatNessuna valutazione finora

- Amqzao05 PDFDocumento379 pagineAmqzao05 PDFayazahmadpatNessuna valutazione finora

- First FloorDocumento1 paginaFirst FloorayazahmadpatNessuna valutazione finora

- Message-Driven-Bean Performance Using Websphere MQ V5.3 and Websphere Application Server V5.0Documento11 pagineMessage-Driven-Bean Performance Using Websphere MQ V5.3 and Websphere Application Server V5.0ayazahmadpatNessuna valutazione finora

- Websphere MQ As JMS Provider For WAS 6.xDocumento24 pagineWebsphere MQ As JMS Provider For WAS 6.xayazahmadpatNessuna valutazione finora

- MQ Server ConfDocumento19 pagineMQ Server ConfayazahmadpatNessuna valutazione finora

- Why Use A Service Integration Bus?: Message Exchange Through A Websphere MQ LinkDocumento9 pagineWhy Use A Service Integration Bus?: Message Exchange Through A Websphere MQ LinkayazahmadpatNessuna valutazione finora

- HADOOP TRAINING - List of Project Data Sets - Set1: WWW - Techdatasolution.co - in 1 Info@techdatasolution - Co.inDocumento2 pagineHADOOP TRAINING - List of Project Data Sets - Set1: WWW - Techdatasolution.co - in 1 Info@techdatasolution - Co.inayazahmadpatNessuna valutazione finora

- MQ DeploymentDocumento24 pagineMQ DeploymentayazahmadpatNessuna valutazione finora

- Spring BootDocumento1 paginaSpring BootayazahmadpatNessuna valutazione finora

- Aws ErrorDocumento5 pagineAws ErrorayazahmadpatNessuna valutazione finora

- Amqzao05 PDFDocumento379 pagineAmqzao05 PDFayazahmadpatNessuna valutazione finora

- Recipt For DriverDocumento1 paginaRecipt For DriverayazahmadpatNessuna valutazione finora

- Sap PMDocumento3 pagineSap PMayazahmadpatNessuna valutazione finora

- Pre-SOA (Monolithic and Tiered Architectures) Vs SOA Vs Microservices ViewDocumento1 paginaPre-SOA (Monolithic and Tiered Architectures) Vs SOA Vs Microservices ViewayazahmadpatNessuna valutazione finora

- Hadoop SkillsDocumento5 pagineHadoop SkillsayazahmadpatNessuna valutazione finora

- InformaticaDocumento18 pagineInformaticaayazahmadpatNessuna valutazione finora

- Hadoop SkillsDocumento5 pagineHadoop SkillsayazahmadpatNessuna valutazione finora

- EOI-Expression of InterestDocumento3 pagineEOI-Expression of InterestayazahmadpatNessuna valutazione finora

- Two PhaseDocumento3 pagineTwo PhaseayazahmadpatNessuna valutazione finora

- Bba Project ReferenceDocumento4 pagineBba Project ReferenceTejasviNessuna valutazione finora

- Operational Procedures For Imports Under The Asean-India Free Trade Area (Aifta) Trade in Goods (Tig) AgreementDocumento4 pagineOperational Procedures For Imports Under The Asean-India Free Trade Area (Aifta) Trade in Goods (Tig) AgreementDio MaulanaNessuna valutazione finora

- Ib Business Management - 5 5d Activity - Production Planning ActivityDocumento2 pagineIb Business Management - 5 5d Activity - Production Planning ActivityKANAK KOKARENessuna valutazione finora

- (ICSSR Research Surveys and Explorations) Jayati Ghosh (Ed.) - India and the International Economy (Economics, Volume 2)-Oxford University Press (2015)Documento509 pagine(ICSSR Research Surveys and Explorations) Jayati Ghosh (Ed.) - India and the International Economy (Economics, Volume 2)-Oxford University Press (2015)Sarthak RajNessuna valutazione finora

- Kuratko South Asian Perspective - EntrepreneshipKuratko - Ch04Documento35 pagineKuratko South Asian Perspective - EntrepreneshipKuratko - Ch04Rakshitha RNessuna valutazione finora

- CIR v. British Overseas AirwaysDocumento21 pagineCIR v. British Overseas Airwaysevelyn b t.Nessuna valutazione finora

- Kyambogo University: Faculty of Arts and Social SciencesDocumento13 pagineKyambogo University: Faculty of Arts and Social SciencesTumukunde JimmyNessuna valutazione finora

- Business PlanDocumento16 pagineBusiness PlanKwaku frimpongNessuna valutazione finora

- C09 Krugman425789 10e GEDocumento57 pagineC09 Krugman425789 10e GEVu Van CuongNessuna valutazione finora

- 4B Plant Layout PDFDocumento35 pagine4B Plant Layout PDFVipin Gupta100% (3)

- Comparative Financial Analysis of Hero Motocorp & Bajaj AutoDocumento97 pagineComparative Financial Analysis of Hero Motocorp & Bajaj Autoakshay gangwaniNessuna valutazione finora

- SSC Maths Questions SlidesDocumento44 pagineSSC Maths Questions Slidesasha jalanNessuna valutazione finora

- Find Local BusinessesDocumento1 paginaFind Local Businessesgs2020Nessuna valutazione finora

- Ip7 Warrnty TelestraDocumento1 paginaIp7 Warrnty TelestraBill DanyonNessuna valutazione finora

- Journal Entry Requirements: Accounting Services Guide Journal EntriesDocumento6 pagineJournal Entry Requirements: Accounting Services Guide Journal EntriesSudershan ThaibaNessuna valutazione finora

- Stefanus Miguel Jacob Lie - C1H021039 - Resume Chapter 3Documento5 pagineStefanus Miguel Jacob Lie - C1H021039 - Resume Chapter 3Almas DelianNessuna valutazione finora

- Training and Certification Matrix For Projects ContractorsDocumento1 paginaTraining and Certification Matrix For Projects ContractorsshafieNessuna valutazione finora

- Internship Report Sample 4Documento40 pagineInternship Report Sample 4KhanNessuna valutazione finora

- Mcdonalds in India and Promotion Strategy of Mcdonalds in IndiaDocumento7 pagineMcdonalds in India and Promotion Strategy of Mcdonalds in IndiaKrishna JhaNessuna valutazione finora

- Nº Nemotécnico Razón Social Isin: Instrumentos Listados en MILA - PERÚDocumento7 pagineNº Nemotécnico Razón Social Isin: Instrumentos Listados en MILA - PERÚWilson DiazNessuna valutazione finora

- Makerere University Agricultural Production Course OutlineDocumento3 pagineMakerere University Agricultural Production Course OutlineWaidembe YusufuNessuna valutazione finora

- Notice 610 Submission of Statistics and ReturnsDocumento3 pagineNotice 610 Submission of Statistics and ReturnsAbhimanyun MandhyanNessuna valutazione finora

- Country Specific EffectsDocumento46 pagineCountry Specific EffectsNur AlamNessuna valutazione finora

- Sieve Shaker Octagon 200Cl and Sieveware Evaluation SoftwareDocumento2 pagineSieve Shaker Octagon 200Cl and Sieveware Evaluation SoftwareRamirez FrancisNessuna valutazione finora

- Financial Management-Financial Statements-Chapter 2Documento37 pagineFinancial Management-Financial Statements-Chapter 2Bir kişi100% (1)

- SRI5151 Operations Management PORT1 202122 V1Documento15 pagineSRI5151 Operations Management PORT1 202122 V1Tharindu HashanNessuna valutazione finora

- Module I - Forms of Business Organisations and Corporate PersonalityDocumento280 pagineModule I - Forms of Business Organisations and Corporate PersonalityAthisaya cgNessuna valutazione finora

- FAQ List For VOLKSWAGEN CATIA Additional ApplicationsDocumento37 pagineFAQ List For VOLKSWAGEN CATIA Additional ApplicationszarasettNessuna valutazione finora

- Updated DepEd Guidelines on Grant of Vacation Service CreditsDocumento4 pagineUpdated DepEd Guidelines on Grant of Vacation Service CreditsGuilbert AtilloNessuna valutazione finora