Uploaded by Musiur Raza Abidi

Description: digital communication tutorial sheet

Original title: III Tutorial Sheet

Copyright: © All Rights Reserved

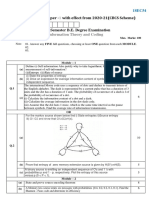

III Tutorial Sheet (2018 – 19)

EL-342

1. Consider two information sources with alphabets X and Y. The joint probabilities p(x, y) of symbols from these sources are given in the following table:

| p(x, y) | y1 | y2 | y3 |
|---|---|---|---|
| x1 | 0.1 | 0.08 | 0.13 |
| x2 | 0.05 | 0.03 | 0.09 |
| x3 | 0.05 | 0.12 | 0.14 |
| x4 | 0.11 | 0.04 | 0.06 |

Find H(X), H(Y) and H(X|Y).
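The quantities in Problem 1 can be checked numerically. The sketch below (standard-library Python) forms the marginals as row and column sums of the table, then applies the chain rule H(X|Y) = H(X, Y) - H(Y). The helper name `entropy` is illustrative, not part of the sheet.

```python
import math

# Joint probabilities p(x, y) from the table: rows x1..x4, columns y1..y3.
P_XY = [
    [0.10, 0.08, 0.13],
    [0.05, 0.03, 0.09],
    [0.05, 0.12, 0.14],
    [0.11, 0.04, 0.06],
]

def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

p_x = [sum(row) for row in P_XY]          # marginal of X (row sums)
p_y = [sum(col) for col in zip(*P_XY)]    # marginal of Y (column sums)

H_X = entropy(p_x)
H_Y = entropy(p_y)
H_XY = entropy([p for row in P_XY for p in row])
H_X_given_Y = H_XY - H_Y                  # chain rule

print(f"H(X)   = {H_X:.4f} bits")
print(f"H(Y)   = {H_Y:.4f} bits")
print(f"H(X|Y) = {H_X_given_Y:.4f} bits")
```

With these table values the script gives roughly H(X) ≈ 1.955, H(Y) ≈ 1.559 and H(X|Y) ≈ 1.887 bits; the last being smaller than H(X) is the expected "conditioning reduces entropy" behaviour.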

2. A discrete memoryless source (DMS) has a symbol alphabet of size |X| = 10. Find the upper and lower bounds on its entropy.
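For Problem 2 the standard bounds are 0 ≤ H(X) ≤ log2 |X|: the lower bound is reached when one symbol is certain, the upper bound when all symbols are equally likely. A quick numerical check of both extremes:

```python
import math

def entropy(probs):
    """Shannon entropy in bits."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

M = 10                                     # alphabet size |X|
upper = entropy([1 / M] * M)               # uniform source attains log2(M)
lower = entropy([1.0] + [0.0] * (M - 1))   # one certain symbol: zero entropy

print(f"{lower:.1f} <= H(X) <= {upper:.4f} bits")
```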

3. A source X has a countably infinite set of outputs, the i-th of which occurs with probability $2^{-i}$, i = 1, 2, 3, …. What is the entropy of the source?
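For Problem 3, p_i = 2^{-i} gives log2(1/p_i) = i, so H(X) = Σ i·2^{-i}, a series that sums to 2 bits (using Σ i x^i = x/(1 - x)^2 at x = 1/2). Truncating the sum confirms this numerically; the cut-off `N_TERMS = 60` is an arbitrary choice that leaves a tail far below double-precision resolution.

```python
import math

N_TERMS = 60  # truncation point; the neglected tail is about 62 * 2**-60

probs = [2.0 ** -i for i in range(1, N_TERMS + 1)]
H = sum(p * math.log2(1 / p) for p in probs)   # each term equals i * 2**-i

print(f"sum of probabilities = {sum(probs):.15f}")
print(f"H(X) = {H:.12f} bits")
```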

4. A source transmits two symbols, a and b, with p(a) = 1/16 and p(b) = 15/16. Design a code that provides an efficiency of around (i) 50% and (ii) 70%.
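One common approach to Problem 4 (not necessarily the one intended by the sheet) is to Huffman-code the n-th extension of the source, i.e. blocks of n symbols. The efficiency is then η = n·H(X) / L̄_n, where L̄_n is the average Huffman codeword length per block. The sketch below computes η for n = 1, 2, 3; the function names are illustrative.

```python
import heapq
import itertools
import math

P = {"a": 1 / 16, "b": 15 / 16}   # source statistics from Problem 4

def huffman_lengths(probs):
    """Binary Huffman codeword length for each probability in `probs`."""
    if len(probs) == 1:
        return [1]
    counter = itertools.count()           # tie-breaker so heap tuples always compare
    heap = [(p, next(counter), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for i in s1 + s2:                 # each merge adds one bit to its members
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, next(counter), s1 + s2))
    return lengths

def extension_efficiency(n):
    """Efficiency of a Huffman code for blocks of n source symbols."""
    probs = [math.prod(block) for block in itertools.product(P.values(), repeat=n)]
    lengths = huffman_lengths(probs)
    avg_len = sum(p * length for p, length in zip(probs, lengths))
    H = sum(p * math.log2(1 / p) for p in P.values())
    return n * H / avg_len

for n in (1, 2, 3):
    print(f"n = {n}: efficiency = {extension_efficiency(n):.1%}")
```

With these statistics the script prints about 33.7%, 57.0% and 73.6% for n = 1, 2 and 3, so coding pairs and triples of symbols lands near the two targets. Ties inside Huffman's merge order can change individual codeword lengths, but not the average length, so the efficiencies do not depend on the tie-breaking.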

5. Consider the channels A and B and the cascaded channel AB shown in Fig-1.

Fig – 1

(i) Find the channel capacity of channels A, B, and AB.

(ii) Explain the relationship among the capacities obtained in Part (i).
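Fig-1 is not reproduced here, so the sketch below substitutes two hypothetical binary symmetric channels (crossover probabilities 0.1 and 0.2, chosen only for illustration) for A and B. It estimates each capacity with the Blahut-Arimoto algorithm and forms the cascade's transition matrix as the product P_AB = P_A·P_B. The result illustrates the data-processing inequality, C_AB ≤ min(C_A, C_B), which is the relationship Part (ii) is after; replace the matrices with those from Fig-1 to get the actual numbers.

```python
import math

def mat_mul(A, B):
    """Product of two row-stochastic matrices given as nested lists."""
    return [[sum(A[i][m] * B[m][j] for m in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def capacity(P, iters=5000, tol=1e-12):
    """Blahut-Arimoto estimate of max I(X;Y) in bits/use; P[x][y] = p(y|x)."""
    nx, ny = len(P), len(P[0])
    r = [1.0 / nx] * nx                          # start from a uniform input

    def divergences(r):
        q = [sum(r[x] * P[x][y] for x in range(nx)) for y in range(ny)]  # output dist
        return [sum(P[x][y] * math.log2(P[x][y] / q[y]) for y in range(ny) if P[x][y] > 0)
                for x in range(nx)]

    for _ in range(iters):
        D = divergences(r)                       # D[x] = KL(p(.|x) || q) in bits
        w = [r[x] * 2.0 ** D[x] for x in range(nx)]
        total = sum(w)
        new_r = [wx / total for wx in w]
        converged = max(abs(a - b) for a, b in zip(new_r, r)) < tol
        r = new_r
        if converged:
            break
    D = divergences(r)
    return sum(r[x] * D[x] for x in range(nx))   # I(X;Y) at the final input

def bsc(eps):
    """Binary symmetric channel with crossover probability eps."""
    return [[1 - eps, eps], [eps, 1 - eps]]

P_A = bsc(0.1)                # hypothetical stand-in for channel A
P_B = bsc(0.2)                # hypothetical stand-in for channel B
P_AB = mat_mul(P_A, P_B)      # cascade: A's output drives B's input

C_A, C_B, C_AB = capacity(P_A), capacity(P_B), capacity(P_AB)
print(f"C_A = {C_A:.4f}, C_B = {C_B:.4f}, C_AB = {C_AB:.4f} bits/use")
```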

6. Calculate the amount of information needed to open a lock whose combination consists of three integers, each ranging from 00 to 99.
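Assuming every combination is equally likely (the problem does not say otherwise), the lock has 100^3 = 10^6 possible settings, so the information needed is log2(10^6) = 6·log2 10 bits:

```python
import math

VALUES_PER_INTEGER = 100   # 00 .. 99
NUM_INTEGERS = 3

combinations = VALUES_PER_INTEGER ** NUM_INTEGERS
info_bits = math.log2(combinations)   # valid for equiprobable combinations

print(f"{combinations} combinations -> {info_bits:.2f} bits")
```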

7. A signal bandlimited to 5 kHz is sampled at a rate of 10 000 samples/s and quantized to 4 levels, so that the samples take the values 0, 1, 2 and 3 with probabilities $p$, $p$, $0.5 - p$ and $0.5 - p$, respectively. Find the capacity of the channel that has the following channel transition probability matrix:

$$
p(Y|X) = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0.5 & 0.5 \\ 0 & 0 & 0.5 & 0.5 \end{bmatrix}
$$
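The sketch below assumes the second row of the transition matrix is 0 1 0 0 (as extracted it reads 1 1 0 0, which cannot be a row of a transition matrix because it sums to 2). Symbols 0 and 1 then pass through noiselessly while 2 and 3 are confused with each other. It evaluates I(X;Y) = H(Y) - H(Y|X) over the input family (p, p, 0.5 - p, 0.5 - p) given in the problem and grid-searches p; the maximum falls at p = 1/3 with I = log2 3 bits/symbol, which multiplied by 10 000 samples/s gives the capacity in bits/s.

```python
import math

SAMPLE_RATE = 10_000  # samples/s (Nyquist rate for a 5 kHz band)

# Channel transition matrix p(y|x); row 2 assumed to be [0, 1, 0, 0].
P_Y_GIVEN_X = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.5, 0.5],
]

def entropy(probs):
    """Shannon entropy in bits."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def mutual_information(p_x):
    """I(X;Y) = H(Y) - H(Y|X) for the channel above, in bits/symbol."""
    p_y = [sum(px * row[y] for px, row in zip(p_x, P_Y_GIVEN_X)) for y in range(4)]
    h_y_given_x = sum(px * entropy(row) for px, row in zip(p_x, P_Y_GIVEN_X))
    return entropy(p_y) - h_y_given_x

def family(p):
    """Input distribution (p, p, 0.5 - p, 0.5 - p) from the problem statement."""
    return [p, p, 0.5 - p, 0.5 - p]

grid = [k / 3000 for k in range(1, 1500)]          # 0 < p < 0.5
best_p = max(grid, key=lambda p: mutual_information(family(p)))
C_symbol = mutual_information(family(best_p))

print(f"best p = {best_p:.4f}, C = {C_symbol:.4f} bits/symbol")
print(f"C = {C_symbol * SAMPLE_RATE:.0f} bits/s")
```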

8. Consider a (127, 92) linear block code capable of correcting up to three errors.

(i) What is the probability of message error for an uncoded block of 92 bits if the channel symbol error probability is 0.001?

(ii) What is the probability of message error when the (127, 92) block code is used over the same channel?
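Both parts reduce to binomial probabilities, assuming independent bit errors with probability p = 0.001 (for part (ii) the problem specifies the same channel, so the same p is applied to all 127 coded bits). An uncoded 92-bit block fails if any bit is wrong; the coded block is counted as failed whenever more than t = 3 of its bits are wrong, which is the usual simplifying assumption for a t-error-correcting decoder:

```python
from math import comb

p = 0.001            # channel symbol (bit) error probability
k, n, t = 92, 127, 3

# (i) Uncoded: message error if at least one of the k bits is wrong.
P_uncoded = 1 - (1 - p) ** k

# (ii) Coded: message error if more than t of the n coded bits are wrong.
P_coded = sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(t + 1, n + 1))

print(f"uncoded: {P_uncoded:.4e}")
print(f"coded:   {P_coded:.4e}")
```

The j = 4 term dominates the coded sum, which is why the coded message-error probability comes out around four orders of magnitude below the uncoded one.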

9. Consider the rate-1/2 convolutional encoder of Fig-2. Find the encoder output produced by the message sequence 10111….

Fig – 2
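Fig-2 is not reproduced here, so its generator connections are unknown. Purely as an illustration, the sketch below assumes a constraint-length-3 encoder with hypothetical generator sequences g1 = 111 and g2 = 101 (a common textbook example, not necessarily the encoder of Fig-2); substitute the taps from the figure to obtain the actual answer. The encoder starts in the all-zero state and emits two output bits per input bit.

```python
# Hypothetical generators (NOT taken from Fig-2): g1 = 111, g2 = 101.
G1 = (1, 1, 1)
G2 = (1, 0, 1)

def conv_encode(bits, generators=(G1, G2)):
    """Feedforward convolutional encoder over GF(2), one output per generator.

    The register holds the current input followed by the previous K-1 inputs
    and starts in the all-zero state; no flushing bits are appended.
    """
    K = len(generators[0])
    register = [0] * K
    output = []
    for b in bits:
        register = [b] + register[:-1]          # shift the new bit in
        output.append(tuple(sum(g * r for g, r in zip(gen, register)) % 2
                            for gen in generators))
    return output

message = [1, 0, 1, 1, 1]                       # the prefix 10111 from the problem
print(" ".join(f"{a}{b}" for a, b in conv_encode(message)))
```

With these assumed taps the script prints 11 10 00 01 10.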

10. Consider the following generator matrix over GF(2):

$$
G = \begin{bmatrix} 1 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 0 \end{bmatrix}
$$

(i) Generate all possible codewords.

(ii) Find the check matrix H.

(iii) Find the generator matrix of an equivalent systematic code.

(iv) What is the minimum distance of the code?

(v) How many errors can this code detect? Write the set of error patterns that this code can detect.

(vi) How many errors can this code correct?

(vii) Is it a linear code?

(viii) What is the probability of symbol error with this encoding? Compare it with that of an uncoded system.
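Parts (i) to (iv) of Problem 10 can be checked by brute force. The sketch below reads G row by row as 10100, 10011, 01010 (an interpretation of the extracted matrix), lists all 2^3 codewords, takes the smallest nonzero weight as d_min (valid because the code is linear), row-reduces G over GF(2) to the systematic form [I | P], and builds H = [P^T | I]. Function names are illustrative.

```python
from itertools import product

# Problem 10 generator matrix, read row by row from the extracted text.
G = [
    [1, 0, 1, 0, 0],
    [1, 0, 0, 1, 1],
    [0, 1, 0, 1, 0],
]

def encode(msg, G):
    """Codeword c = m G over GF(2)."""
    return tuple(sum(m * row[j] for m, row in zip(msg, G)) % 2 for j in range(len(G[0])))

def all_codewords(G):
    return sorted({encode(msg, G) for msg in product((0, 1), repeat=len(G))})

def min_distance(codewords):
    """For a linear code, d_min is the smallest weight of a nonzero codeword."""
    return min(sum(c) for c in codewords if any(c))

def systematic_form(G):
    """Gauss-Jordan elimination over GF(2) using only row operations.

    Assumes a pivot exists in each of the first k columns (true for this G),
    so no column swaps are needed and the code itself is unchanged.
    """
    rows = [row[:] for row in G]
    k = len(rows)
    for col in range(k):
        pivot = next(i for i in range(col, k) if rows[i][col] == 1)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for i in range(k):
            if i != col and rows[i][col] == 1:
                rows[i] = [(a + b) % 2 for a, b in zip(rows[i], rows[col])]
    return rows

def parity_check(Gs):
    """H = [P^T | I_(n-k)] for a systematic generator Gs = [I_k | P]."""
    k, n = len(Gs), len(Gs[0])
    return [[Gs[i][k + j] for i in range(k)] + [int(t == j) for t in range(n - k)]
            for j in range(n - k)]

codewords = all_codewords(G)
Gs = systematic_form(G)
H = parity_check(Gs)
d_min = min_distance(codewords)

for c in codewords:
    print("".join(map(str, c)), "weight", sum(c))
print("systematic G:", Gs)
print("H:", H)
print("d_min =", d_min, "| detects", d_min - 1, "| corrects", (d_min - 1) // 2)
```

Under this reading of G the script finds eight codewords with d_min = 2, so the code detects single errors but corrects none. Because only row operations are used, the systematic generator spans exactly the same code, not merely an equivalent one.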

11. A few codewords of a linear block code are 11000, 01111, 11110 and 01010. List all the codewords of this code.
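Because the code is linear, the modulo-2 sum of any codewords is again a codeword, so the smallest linear code containing the listed words is their GF(2) span. The sketch below represents each 5-bit word as an integer and closes the set under XOR; the four given words turn out to be linearly independent, so the span has 2^4 = 16 codewords.

```python
given = ["11000", "01111", "11110", "01010"]   # codewords listed in Problem 11

def span_gf2(words):
    """All GF(2) linear combinations (XOR sums) of the given binary strings."""
    code = {0}                                  # the all-zero word is always present
    for w in words:
        v = int(w, 2)
        code |= {c ^ v for c in code}           # add v to every word found so far
    return code

n = len(given[0])
code = sorted(span_gf2(given))
print(len(code), "codewords:")
print(" ".join(format(c, f"0{n}b") for c in code))
```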

12. Prove that if the sum of two error patterns is a valid codeword, then the two error patterns have the same syndrome.
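A short derivation, assuming the usual syndrome definition $\mathbf{s} = \mathbf{e}\mathbf{H}^{T}$ for an error pattern $\mathbf{e}$ and parity-check matrix $\mathbf{H}$, with every codeword $\mathbf{c}$ satisfying $\mathbf{c}\mathbf{H}^{T} = \mathbf{0}$:

$$
\begin{aligned}
(\mathbf{e}_1 + \mathbf{e}_2)\,\mathbf{H}^{T} &= \mathbf{0} && \text{since } \mathbf{e}_1 + \mathbf{e}_2 \text{ is a codeword} \\
\mathbf{e}_1\mathbf{H}^{T} + \mathbf{e}_2\mathbf{H}^{T} &= \mathbf{0} && \text{by linearity of matrix multiplication} \\
\mathbf{s}_1 + \mathbf{s}_2 &= \mathbf{0} \\
\mathbf{s}_1 &= \mathbf{s}_2 && \text{since } -\mathbf{s}_2 = \mathbf{s}_2 \text{ over GF(2)}
\end{aligned}
$$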

13. Consider the (8, 4) extended Hamming code with parity check matrix

Determine the number of errors in each of the following received words: 10111011, 00101101, 11011010, 10000101.
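The parity-check matrix for Problem 13 is missing from this copy, so the syndrome cannot be computed here. One part of the decision does not depend on it: every codeword of an extended Hamming code has even weight, which is what the extra overall-parity bit enforces. Assuming at most two errors, an odd-weight received word contains a single error, while an even-weight word contains either no error (zero syndrome) or a detectable double error (nonzero syndrome). The sketch applies only this overall-parity test; the helper name is illustrative.

```python
received = ["10111011", "00101101", "11011010", "10000101"]   # from Problem 13

def overall_parity_odd(word):
    """True if the received word has odd weight (odd number of errors)."""
    return word.count("1") % 2 == 1

for word in received:
    if overall_parity_odd(word):
        verdict = "odd weight: single error (assuming at most two errors)"
    else:
        verdict = "even weight: no error or a double error; check the syndrome"
    print(word, word.count("1"), verdict)
```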
