BITS Pilani: Machine Learning (IS ZC464)

Uploaded by TabithaDsouza

BITS Pilani

Machine Learning (IS ZC464) Session 4: Classification Algorithms

Classification Algorithms

• K-Nearest Neighbors

• Naïve Bayes’ classifier

• Decision tree classifier

February 10, 2019 IS ZC464 (Machine Learning) 2

K-Nearest Neighbors

• Training instances are stored, but no generalization is done before a test instance arrives.

• No hypothesis is constructed from the training input-output pairs.

• A new instance is compared against all training instances (observations) and is then assigned its target value.

Lazy Learning

• These methods are sometimes referred to as “lazy” learning methods because they delay processing until a new instance must be classified.

• The target function is not estimated once for the entire input (instance) space.

• The target label or class of a new instance is computed locally, and possibly differently for each instance, when it arrives.

What is meant by lazy learning?

• Instance-based methods keep the training data in memory and use it directly, without learning any knowledge from the data beforehand.

• Every new instance requires the entire training data to be processed. Classification is therefore delayed, which is why this is called lazy learning.

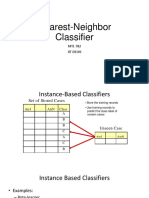

Instance based methods

• K-Nearest Neighbor Learning

• Locally Weighted Regression


k-nearest Neighbor Learning

• This algorithm assumes all instances correspond to points in the n-dimensional space $\mathbb{R}^n$.

• The nearest neighbors are defined in terms of the Euclidean distance.

• Let an arbitrary instance x be described by the feature vector $(a_1(x), a_2(x), \ldots, a_n(x))$, where $a_r(x)$ is the value of the r-th attribute of instance x.

Distance measure

• The distance between two instances $x_i$ and $x_j$ is defined by $d(x_i, x_j)$, where

$$d(x_i, x_j) = \sqrt{\sum_{r=1}^{n} \left(a_r(x_i) - a_r(x_j)\right)^2}$$

• The target function in nearest neighbor learning may be either discrete valued or real valued.
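The Euclidean distance above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides; the function name `euclidean_distance` and the sample points are assumptions chosen for the example.

```python
import math

def euclidean_distance(xi, xj):
    """Return d(xi, xj) for two feature vectors of equal length."""
    if len(xi) != len(xj):
        raise ValueError("feature vectors must have the same length")
    # Sum the squared attribute differences, then take the square root.
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xi, xj)))

# Hypothetical 2-D points: the differences are 3 and 4, so the distance is 5.
d = euclidean_distance((1.0, 2.0), (4.0, 6.0))
print(d)  # 5.0
```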

K-Nearest Neighbor Algorithm

• Consider the following two-dimensional points belonging to two different classes, represented by two colors.

• The points represent training instances through their corresponding feature vectors.

• The feature vector of each point is its (x, y) coordinate pair.

K-Nearest Neighbor Algorithm

• Suppose a new test instance arrives (shown as a blue dot) and is plotted on the same coordinate plane.

• The k-nearest neighbor algorithm requires a value of k to be chosen.

• For example, if k = 3, we compute the distances from the new instance to all training instances and mark the three closest points.

K-Nearest Neighbor Algorithm

The three closest neighbors are marked as double-bordered circles, with their respective colors as class labels.

Since two of the three closest neighbors of the new test instance (blue) are yellow, we assign the class labeled yellow to the test instance.

Classification as mapping

• Define $f: \mathbb{R}^n \to V$

• where $V = \{v_1, v_2, \ldots, v_s\}$ is the set of class labels

• and $\mathbb{R}^n$ is the n-dimensional space.

• The value $f(x_q)$ is the class label to which the test sample $x_q$ belongs.

Which class should be assigned to the testing sample $x_q$?

• Define a function $\delta$ as follows:

$$\delta(a, b) = \begin{cases} 1 & \text{if } a = b \\ 0 & \text{otherwise} \end{cases}$$

• $\delta(v, f(x_i))$ takes the value 1 if the class of training instance $x_i$ is v. This value contributes to the classification.

• The class of the test instance $x_q$ is given by

$$f(x_q) = \arg\max_{v \in V} \sum_{i=1}^{k} \delta(v, f(x_i))$$

where $x_1, \ldots, x_k$ are the k training instances nearest to $x_q$.
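The voting rule above can be sketched as follows. This is only an illustration under stated assumptions: the function name `knn_classify` and the training points are hypothetical (the coordinates in the slide figures are not recoverable), and ties are broken in favor of the label met first among the nearest neighbors, which is a choice of this sketch rather than something the slides specify.

```python
import math
from collections import Counter

def knn_classify(training, xq, k):
    """training: list of (feature_vector, label) pairs; xq: query vector."""
    # Rank all training instances by Euclidean distance to xq, keep the k nearest.
    nearest = sorted(training, key=lambda pair: math.dist(pair[0], xq))[:k]
    # Each neighbor casts one vote for its own label (the delta function).
    votes = Counter(label for _, label in nearest)
    # arg max over labels of the vote count.
    return votes.most_common(1)[0][0]

# Hypothetical 2-D training points, not taken from the slides.
training = [
    ((1.0, 1.0), "red"),
    ((1.5, 2.0), "red"),
    ((5.0, 5.0), "yellow"),
    ((5.5, 4.5), "yellow"),
    ((6.0, 5.0), "yellow"),
]

print(knn_classify(training, (5.2, 4.8), k=3))  # yellow
```

Note that every call scans the whole training set, which is exactly the lazy-learning cost described earlier.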

1-Nearest Neighbor Learning

• This method sets k = 1 and assigns to the test instance the class of the training instance that is nearest to it.

Of the samples around the test sample, the nearest one is identified and its class is assigned to the test sample.

Classification accuracy may change if k changes

• 1-NN assigns the class label 1 (red)

• 3-NN assigns the class label 2 (yellow)

• 4-NN has 2 yellow and 2 red nearest samples, so the vote is tied (confusion)

• 5-NN assigns class label 1 (red)
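The effect of k can be reproduced with a short sketch. The neighbor ordering below is an assumption: it is one nearest-first sequence of labels that is consistent with the four outcomes listed on the slide, since the actual figure is not available. The helper `vote` is hypothetical and returns `None` when the top two labels are tied.

```python
from collections import Counter

# Assumed labels of the five nearest neighbors, ordered nearest first.
neighbor_labels = ["red", "yellow", "yellow", "red", "red"]

def vote(labels, k):
    """Return the majority label among the first k labels, or None on a tie."""
    counts = Counter(labels[:k]).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # tie between the two most frequent labels
    return counts[0][0]

for k in (1, 3, 4, 5):
    print(k, vote(neighbor_labels, k))
# 1 red
# 3 yellow
# 4 None
# 5 red
```

Choosing an odd k avoids ties when there are only two classes, as the k = 4 case shows.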

Distance Weighted Nearest Neighbor Algorithm

• The contribution of each of the k neighbors is weighted according to its distance from the given test data point.

• Among the k nearest points, the closest training points are given more weight, while points at a farther distance are given less weight.

Weight

• The weight $w_i$ of the i-th training point at distance $d(x_i, x_q)$ is the inverse of the square of $d(x_i, x_q)$:

$$w_i = \frac{1}{d(x_i, x_q)^2}$$

Which class should be assigned based on weights?

• Define a function $\delta$ as follows:

$$\delta(a, b) = \begin{cases} 1 & \text{if } a = b \\ 0 & \text{otherwise} \end{cases}$$

$$f(x_q) = \arg\max_{v \in V} \sum_{i=1}^{k} w_i \, \delta(v, f(x_i))$$

Example

• 5-NN

• Let the distances from the test instance be 4, 6 and 2 for the training instances of class 1, and 2 and 3 for those of class 2.

• Compute the class label for the test instance.

• For class 1: $1/4^2$, $1/6^2$, $1/2^2$, which are 0.0625, 0.0278 and 0.25, with sum 0.3403.

• For class 2: $1/2^2$, $1/3^2$, which are 0.25 and 0.1111 respectively, with sum 0.3611.

• Since 0.3611 > 0.3403, class 2 is assigned to the test sample.
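The arithmetic of this example can be checked with a small sketch of the distance-weighted vote. The function name `weighted_vote` is an assumption for illustration; the distances and class labels are those of the example.

```python
from collections import defaultdict

def weighted_vote(neighbors):
    """neighbors: list of (distance, label) pairs for the k nearest points."""
    scores = defaultdict(float)
    for distance, label in neighbors:
        # Each neighbor votes for its label with weight 1 / d^2.
        scores[label] += 1.0 / distance ** 2
    best = max(scores, key=scores.get)
    return best, dict(scores)

# The 5-NN example: class 1 at distances 4, 6, 2 and class 2 at distances 2, 3.
neighbors = [(4, 1), (6, 1), (2, 1), (2, 2), (3, 2)]
label, scores = weighted_vote(neighbors)
print(label, {c: round(s, 4) for c, s in scores.items()})
# 2 {1: 0.3403, 2: 0.3611}
```

An unweighted 5-NN vote would pick class 1 here (three votes to two), so the weighting changes the outcome. The sketch does not handle a distance of zero, which would need a special case (for instance, returning that neighbor's label directly).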

Naïve Bayes’ Classifier

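• This classifier uses the posterior probabilities computed using Bayes’ theorem.

• Bayes’ theorem:

$$P(Y \mid X) = \frac{P(X \mid Y)\, P(Y)}{P(X)}$$

• where $P(Y \mid X)$ is the posterior probability of class Y given the observed attributes X, $P(X \mid Y)$ is the likelihood of the observation given the class, $P(Y)$ is the prior probability of the class, and $P(X)$ is the probability of the observation.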

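An example: Training with 14 observations

Training data: the weather data, with counts and probabilities

Counts:

| Outlook (X1) | yes | no | Temperature (X2) | yes | no | Humidity (X3) | yes | no | Windy (X4) | yes | no | Play (Y) yes | Play (Y) no |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sunny | 2 | 3 | hot | 2 | 2 | high | 3 | 4 | false | 6 | 2 | 9 | 5 |
| overcast | 4 | 0 | mild | 4 | 2 | normal | 6 | 1 | true | 3 | 3 | | |
| rainy | 3 | 2 | cool | 3 | 1 | | | | | | | | |

Probabilities:

| Outlook (X1) | yes | no | Temperature (X2) | yes | no | Humidity (X3) | yes | no | Windy (X4) | yes | no | Play (Y) yes | Play (Y) no |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sunny | 2/9 | 3/5 | hot | 2/9 | 2/5 | high | 3/9 | 4/5 | false | 6/9 | 2/5 | 9/14 | 5/14 |
| overcast | 4/9 | 0/5 | mild | 4/9 | 2/5 | normal | 6/9 | 1/5 | true | 3/9 | 3/5 | | |
| rainy | 3/9 | 2/5 | cool | 3/9 | 1/5 | | | | | | | | |

Testing data

| outlook | temperature | humidity | windy | play |
|---|---|---|---|---|
| sunny | cool | high | true | ? |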
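Each probability in the training table is a count divided by the total for its class (9 for yes, 5 for no), and the class priors are the class totals divided by 14. A minimal sketch for the Outlook attribute, using exact fractions, might look like this; the names `class_totals`, `outlook_counts` and `conditional` are assumptions for illustration.

```python
from fractions import Fraction

# Class totals and Outlook counts, copied from the training table.
class_totals = {"yes": 9, "no": 5}
outlook_counts = {
    "sunny": {"yes": 2, "no": 3},
    "overcast": {"yes": 4, "no": 0},
    "rainy": {"yes": 3, "no": 2},
}

def conditional(counts, totals):
    """Turn per-class counts into P(attribute value | class)."""
    return {
        value: {c: Fraction(n, totals[c]) for c, n in per_class.items()}
        for value, per_class in counts.items()
    }

outlook_probs = conditional(outlook_counts, class_totals)
# Priors P(Y): class total divided by the number of observations (14).
prior = {c: Fraction(n, sum(class_totals.values())) for c, n in class_totals.items()}

print(outlook_probs["sunny"]["yes"], prior["yes"])  # 2/9 9/14
```

`Fraction` reduces automatically, so an entry such as 3/9 would be printed as 1/3.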

Highlight testing variables for Y = yes

For the test instance (outlook = sunny, temperature = cool, humidity = high, windy = true), the relevant entries in the "yes" columns of the training table are:

• P(X1=sunny | Y=yes) = 2/9

• P(X2=cool | Y=yes) = 3/9

• P(X3=high | Y=yes) = 3/9

• P(X4=true | Y=yes) = 3/9

• P(Y=yes) = 9/14

Testing for Y=yes

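For Y = yes (plays), treating the attributes as conditionally independent given Y (the "naive" assumption):

$$P(X_1=\text{sunny}, X_2=\text{cool}, X_3=\text{high}, X_4=\text{true} \mid Y=\text{yes})\, P(Y=\text{yes}) = \frac{2}{9} \cdot \frac{3}{9} \cdot \frac{3}{9} \cdot \frac{3}{9} \cdot \frac{9}{14} \approx 0.0053$$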

Highlight testing variables for Y = no

For the test instance (outlook = sunny, temperature = cool, humidity = high, windy = true), the relevant entries in the "no" columns of the training table are:

• P(X1=sunny | Y=no) = 3/5

• P(X2=cool | Y=no) = 1/5

• P(X3=high | Y=no) = 4/5

• P(X4=true | Y=no) = 3/5

• P(Y=no) = 5/14

Testing for Y=no

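For Y = no, treating the attributes as conditionally independent given Y (the "naive" assumption):

$$P(X_1=\text{sunny}, X_2=\text{cool}, X_3=\text{high}, X_4=\text{true} \mid Y=\text{no})\, P(Y=\text{no}) = \frac{3}{5} \cdot \frac{1}{5} \cdot \frac{4}{5} \cdot \frac{3}{5} \cdot \frac{5}{14} \approx 0.0206$$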

Prediction

Testing data

| outlook | temperature | humidity | windy | play |
|---|---|---|---|---|
| sunny | cool | high | true | ? |

• P(Y=yes | X1=sunny, X2=cool, X3=high, X4=true) = 0.0053/α

• P(Y=no | X1=sunny, X2=cool, X3=high, X4=true) = 0.0206/α

• where α = P(X1, X2, X3, X4) is the same constant for both classes.

• Since 0.0206/α is greater than 0.0053/α, the predicted class for the given test data is no.
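The whole prediction can be reproduced with a short sketch that multiplies the prior by the per-attribute conditional probabilities for each class. The dictionary layout and the name `score` are assumptions for illustration; the probabilities are those of the weather table. Here α is obtained by normalizing the two class scores so that the posteriors sum to 1, which is what the naive Bayes model implies.

```python
from fractions import Fraction as F

# Priors and the conditional probabilities needed for the test instance
# (sunny, cool, high, true), copied from the training table.
prior = {"yes": F(9, 14), "no": F(5, 14)}
likelihood = {
    "yes": {"sunny": F(2, 9), "cool": F(3, 9), "high": F(3, 9), "true": F(3, 9)},
    "no": {"sunny": F(3, 5), "cool": F(1, 5), "high": F(4, 5), "true": F(3, 5)},
}

def score(label):
    """P(X1, X2, X3, X4 | Y=label) * P(Y=label) under the naive assumption."""
    result = prior[label]
    for p in likelihood[label].values():
        result *= p
    return result

scores = {label: score(label) for label in prior}
alpha = sum(scores.values())  # normalizing constant
posterior = {label: s / alpha for label, s in scores.items()}
prediction = max(scores, key=scores.get)

print({k: round(float(v), 4) for k, v in scores.items()})     # {'yes': 0.0053, 'no': 0.0206}
print({k: round(float(v), 3) for k, v in posterior.items()})  # {'yes': 0.205, 'no': 0.795}
print(prediction)  # no
```

Because α is shared by both classes, comparing the unnormalized scores is enough to pick the class. The normalization only matters when the actual posterior probabilities are wanted.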
