Validity and Reliability

Uploaded by Amelia Galactic

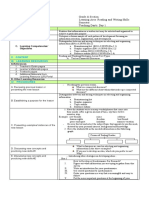

ITEM ANALYSIS

1.) Item Validity – the degree to which a test or other measuring device truly measures what we purport it to measure.

Content Validity – whether the items adequately cover the topics and processes that make up what you are measuring (are these the right types of items?)

Criterion-Related Validity – how well a test corresponds with a particular criterion

(a) Concurrent Validity – test scores are correlated with a criterion measured now, at about the same time as the test;

(b) Predictive Validity – test scores are correlated with a criterion measured in the future (ex: college entrance exams -> academic performance)

Construct Validity – how well a test measures the construct it is meant to measure (does this test relate to other tests as the construct predicts?)

- A construct is an informed scientific idea developed or hypothesized to describe or explain a behavior; something built by mental synthesis.

- Constructs are made up or constructed by us in our attempts to organize and make sense of behavior and other psychological processes.

(a) Convergent Validity – the test correlates well with other tests that measure the same construct.

(b) Divergent/Discriminant Validity – the validity coefficient shows little or no relationship between the newly created test and an existing test that measures a different construct.
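The criterion-related and construct validity checks above all rest on a correlation between two sets of scores. A minimal sketch in Python, assuming the Pearson correlation is used as the validity coefficient; all score lists and variable names are hypothetical and made up for illustration only:

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six examinees (not real data).
new_test = [12, 15, 9, 20, 17, 11]
same_construct_test = [14, 16, 10, 21, 18, 12]     # existing test, same construct
different_construct_test = [7, 9, 8, 8, 7, 9]      # existing test, unrelated construct
later_criterion = [2.8, 3.1, 2.5, 3.9, 3.4, 2.6]   # criterion measured later

# Convergent validity: expect a high correlation.
convergent_r = pearson_r(new_test, same_construct_test)
# Discriminant validity: expect a correlation near zero.
discriminant_r = pearson_r(new_test, different_construct_test)
# Predictive validity: correlation with a future criterion.
predictive_r = pearson_r(new_test, later_criterion)

print(round(convergent_r, 2), round(discriminant_r, 2), round(predictive_r, 2))
```

With these invented numbers the convergent and predictive coefficients are high and the discriminant coefficient is close to zero, which is the pattern the definitions describe. Concurrent validity would use the same calculation with a criterion measured at the time of testing.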

2.) Item Reliability – indicates the consistency of the scores produced by a test, survey, observation or other measuring device.

Sources of Error:

Time Sampling Error – error due to testing occasions

Test-retest Reliability – the same test is given to the same group of subjects on at least two separate occasions, and the scores are correlated

Domain Sampling Error – error due to the particular test items chosen

Internal Consistency Error – error due to the items testing multiple traits

o Split-half method

o Kuder-Richardson formula

o Cronbach’s alpha – a measure used to assess the internal consistency of a set of scale or test items.
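The reliability estimates listed above can be sketched in Python. This is an illustrative sketch, not a definitive implementation: it assumes population variances, an odd/even item split with the Spearman-Brown correction for the split-half method, and KR-20 as the Kuder-Richardson formula. The response matrix and retest totals are hypothetical.

```python
from math import sqrt
from statistics import pvariance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def cronbach_alpha(rows):
    """rows: one list of item scores per examinee."""
    k = len(rows[0])
    item_vars = [pvariance([row[i] for row in rows]) for i in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def kr20(rows):
    """Kuder-Richardson formula 20 for items scored 0 or 1."""
    k, n = len(rows[0]), len(rows)
    p = [sum(row[i] for row in rows) / n for i in range(k)]  # proportion correct
    total_var = pvariance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(pi * (1 - pi) for pi in p) / total_var)


def split_half(rows):
    """Correlate odd-item and even-item half scores, then apply Spearman-Brown."""
    odd = [sum(row[0::2]) for row in rows]
    even = [sum(row[1::2]) for row in rows]
    r = pearson_r(odd, even)
    return 2 * r / (1 + r)


# Hypothetical right/wrong (1/0) responses: six examinees, four items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]
retest_totals = [4, 3, 2, 2, 1, 3]  # hypothetical totals on a second occasion

alpha = cronbach_alpha(responses)
kr20_value = kr20(responses)
split_half_value = split_half(responses)
test_retest_r = pearson_r([sum(row) for row in responses], retest_totals)

print(round(alpha, 3), round(kr20_value, 3),
      round(split_half_value, 3), round(test_retest_r, 3))
```

For items scored 0 or 1, KR-20 and Cronbach's alpha give the same value under this variance convention, which is why alpha is often described as the generalization of KR-20 to items with more than two score levels.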
