Department of Mathematics Indian Institute of Technology Guwahati Problem Sheet 10

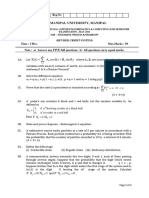

DEPARTMENT OF MATHEMATICS

INDIAN INSTITUTE OF TECHNOLOGY GUWAHATI

MA225 Probability Theory and Random Processes July - November 2017

Problem Sheet 10 NS

1. Consider the experiment of tossing a coin repeatedly. Let $0 < p < 1$ be the probability of a head in a single trial. Check whether the following processes are Markov processes or not. If they are, then find the state transition probabilities and draw the state transition probability diagram. Also, find the initial distribution. Find the communicating classes and classify the states according to transient and recurrent states.

(a) For all $n \in \mathbb{N}$, let $X_n = 1$ if the $n$th trial gives a head, $X_n = 0$ otherwise.

(b) For all $n \in \mathbb{N}$, let $Y_n$ be the number of heads in the first $n$ trials.

(c) For all $n \in \mathbb{N}$, let $Z_n = 1$ if both the $(n-1)$th and $n$th trials result in heads, $Z_n = 0$ otherwise.

(d) For all $n \in \mathbb{N}$, let $U_n$ be the number of heads minus the number of tails in the first $n$ trials.

(e) For all $n \in \mathbb{N}$, let $V_n$ be the number of tails which occurred after the last head in the first $n$ trials.
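To experiment with the five processes in Problem 1, it can help to compute them from one fixed toss sequence. The sketch below is only an illustration: the function name `derived_processes` is hypothetical, and two conventions are assumptions not stated in the sheet, namely that $Z_1 = 0$ (there is no trial 0) and that $V_n$ counts all tails so far when no head has occurred yet.

```python
def derived_processes(tosses):
    """Compute X, Y, Z, U, V of Problem 1 from a list of tosses.

    tosses[k] is 1 for a head and 0 for a tail on trial k + 1.
    Assumptions (not fixed by the problem sheet): Z_1 = 0, and V_n counts
    every tail so far if no head has appeared in the first n trials.
    """
    X, Y, Z, U, V = [], [], [], [], []
    heads = 0
    tails_since_head = 0
    prev = 0  # stands in for the nonexistent trial 0, so that Z_1 = 0
    for n, t in enumerate(tosses, start=1):
        heads += t
        tails_since_head = 0 if t == 1 else tails_since_head + 1
        X.append(t)                                # outcome of the nth trial
        Y.append(heads)                            # heads in the first n trials
        Z.append(1 if prev == 1 and t == 1 else 0) # two heads in a row
        U.append(heads - (n - heads))              # heads minus tails
        V.append(tails_since_head)                 # tails after the last head
        prev = t
    return {"X": X, "Y": Y, "Z": Z, "U": U, "V": V}


paths = derived_processes([1, 1, 0, 0, 1])
for name, values in paths.items():
    print(name, values)
```

Feeding in long random toss sequences and tabulating how the next value depends on the current and previous values is one way to form a conjecture about which processes are Markov before proving it.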

2. Suppose that $\{X_n, n \ge 0\}$ is a stochastic process on $S$ (state space) which is of the form $X_n = f(X_{n-1}, Y_n)$, $n \ge 1$, where $f : S \times S' \to S$ and $Y_1, Y_2, \ldots$ are IID random variables with values in $S'$ that are independent of $X_0$. Then show that $\{X_n\}$ is a Markov chain with transition probabilities $p_{ij} = P\{f(i, Y_1) = j\}$.
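The formula $p_{ij} = P\{f(i, Y_1) = j\}$ in Problem 2 can be evaluated directly when $Y_1$ takes finitely many values. The following is a minimal sketch under that assumption; `transition_matrix`, the example map `f`, and the example distribution are hypothetical illustrations, not part of the sheet.

```python
from collections import defaultdict
from fractions import Fraction


def transition_matrix(states, f, y_dist):
    """Return p[i][j] = P{f(i, Y_1) = j} for a finitely supported Y_1.

    y_dist maps each value y of Y_1 to its probability.
    """
    p = {i: defaultdict(Fraction) for i in states}
    for i in states:
        for y, prob in y_dist.items():
            # Every value y of Y_1 sends state i to state f(i, y).
            p[i][f(i, y)] += prob
    return {i: dict(row) for i, row in p.items()}


# Hypothetical example: a walk on {0, 1, 2} that stays or steps forward mod 3.
half = Fraction(1, 2)
P = transition_matrix([0, 1, 2], lambda i, y: (i + y) % 3, {0: half, 1: half})
print(P)
```

The row for state $i$ depends only on $i$ and the law of $Y_1$, which is the observation behind the Markov property asked for in the problem.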

3. A commodity is stocked in a warehouse to satisfy continuing demands and the demands $D_1, D_2, \ldots$ in time periods $1, 2, \ldots$ are IID non-negative integer-valued random variables. The inventory is controlled by the following $(s, S)$ inventory-control policy, where $s < S$ are pre-determined integers. At the end of period $n - 1$, the inventory level $X_{n-1}$ is observed and one of the following actions is taken: (a) Replenish the stock (instantaneously) up to the level $S$ if $X_{n-1} \le s$. (b) Do not replenish the stock if $X_{n-1} > s$. Assume $X_0 \le S$ for simplicity and that $X_0$ is independent of the $D_n$'s. Write down the recursion relation for the $X_n$'s, show that $\{X_n\}$ is a Markov chain, and determine the transition probabilities if the $D_n$'s follow a Poisson distribution with parameter $\lambda$.
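One possible reading of the $(s, S)$ policy in Problem 3 is sketched below. It assumes that unmet demand is backlogged, so that $X_n$ may become negative; the sheet does not say whether inventory is instead truncated at zero, and the transition probabilities change under that alternative. The function names are hypothetical.

```python
import math


def poisson_pmf(k, lam):
    """P{D = k} for D ~ Poisson(lam); zero for negative k."""
    if k < 0:
        return 0.0
    return math.exp(-lam) * lam**k / math.factorial(k)


def next_inventory(x_prev, demand, s, S):
    """One step of the recursion, assuming backlogged (possibly negative) stock."""
    start = S if x_prev <= s else x_prev  # replenish up to S only when at or below s
    return start - demand


def p_ij(i, j, s, S, lam):
    """Transition probability P{X_n = j | X_{n-1} = i} under the same assumption."""
    start = S if i <= s else i
    return poisson_pmf(start - j, lam)  # the demand must equal start - j


print(next_inventory(1, 3, s=2, S=5))   # replenished to 5, then demand 3
print(p_ij(4, 3, s=2, S=5, lam=1.0))    # no replenishment, demand 1
```

Under this reading the chain has the form $X_n = f(X_{n-1}, D_n)$, so the Markov property follows from the general result in Problem 2.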

4. Consider the simple random walk and assume that the process starts at the origin. Determine $p_{ij}^{(n)}$.
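For Problem 4, assuming the walk moves $+1$ with probability $p$ and $-1$ with probability $1 - p$ (the sheet does not restate the step law), reaching $j$ from $i$ in $n$ steps requires exactly $(n + j - i)/2$ upward steps, which gives a binomial expression. The sketch below evaluates it; `n_step_prob` is a hypothetical name.

```python
import math


def n_step_prob(i, j, n, p):
    """n-step transition probability of a simple random walk on the integers.

    Assumes steps of +1 with probability p and -1 with probability 1 - p.
    """
    k = j - i
    # j is unreachable if it is too far away or has the wrong parity.
    if abs(k) > n or (n + k) % 2 != 0:
        return 0.0
    ups = (n + k) // 2  # number of +1 steps needed
    return math.comb(n, ups) * p**ups * (1 - p) ** (n - ups)


print(n_step_prob(0, 0, 2, 0.5))
print(n_step_prob(0, 1, 2, 0.5))
```

Summing `n_step_prob(i, j, n, p)` over all reachable `j` should return 1, which is a quick sanity check on any closed form derived by hand.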

5. Consider the Markov chain $\{X_n\}$ with state space $S = \{0, 1, 2, 3\}$ and transition probability matrix

$$P = \begin{pmatrix} 0.8 & 0 & 0.2 & 0 \\ 0 & 0 & 1 & 0 \\ 1 & 0 & 0 & 0 \\ 0.3 & 0.4 & 0 & 0.3 \end{pmatrix}.$$

Find the communicating classes and classify the states according to transient and recurrent states.
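A hand classification for Problem 5 can be checked mechanically. The sketch below computes which states reach which others, groups mutually reachable states into classes, and uses the fact that in a finite chain a communicating class is recurrent exactly when it is closed. The function name `communicating_classes` is hypothetical.

```python
def communicating_classes(P):
    """Return a list of (class, label) pairs for a finite transition matrix P."""
    n = len(P)
    # reach[i][j] is True if j is reachable from i in zero or more steps.
    reach = [[i == j or P[i][j] > 0 for j in range(n)] for i in range(n)]
    for k in range(n):  # Warshall's transitive closure
        for i in range(n):
            for j in range(n):
                reach[i][j] = reach[i][j] or (reach[i][k] and reach[k][j])
    classes, seen = [], set()
    for i in range(n):
        if i in seen:
            continue
        cls = [j for j in range(n) if reach[i][j] and reach[j][i]]
        seen.update(cls)
        # A class is closed if nothing outside it is reachable from inside it.
        closed = all(b in cls for a in cls for b in range(n) if reach[a][b])
        classes.append((cls, "recurrent" if closed else "transient"))
    return classes


P5 = [[0.8, 0.0, 0.2, 0.0],
      [0.0, 0.0, 1.0, 0.0],
      [1.0, 0.0, 0.0, 0.0],
      [0.3, 0.4, 0.0, 0.3]]
for cls, label in communicating_classes(P5):
    print(cls, label)
```

The closed-implies-recurrent shortcut is valid only for finite state spaces; it would not settle the classification for the infinite-state processes in Problem 1.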

6. Let $\{X_n, n \ge 0\}$ be a Markov chain with three states 0, 1, 2, with transition probability matrix

$$P = \begin{pmatrix} 1/2 & 1/2 & 0 \\ 1/3 & 1/3 & 1/3 \\ 0 & 1/2 & 1/2 \end{pmatrix}$$

and the initial distribution $P(X_0 = i) = \frac{1}{3}$, $i = 0, 1, 2$. Compute $P\{X_2 = 2, X_0 = 1\}$, $P\{X_1 \neq X_0\}$, $P\{X_3 = 1 \mid X_1 = 0\}$, $P\{X_2 = 2, X_1 = 1 \mid X_0 = 2\}$, $P\{X_2 = 1\}$ and $P\{X_0 = 1 \mid X_1 = 1\}$. Now, draw the state transition diagram and find the communicating classes. Is the chain reducible, recurrent (null or non-null)? What are the periods of the states? Determine the stationary distribution. Is this the same as the limiting distribution? If so, why?
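The probabilities in Problem 6 all reduce to entries of $P$ and $P^2$ weighted by the initial distribution, so they can be checked with exact rational arithmetic. The sketch below is one way to do that; the variable names are illustrative, and `candidate` is a hand-derived guess for the stationary distribution that the code only verifies against $\pi P = \pi$.

```python
from fractions import Fraction as F

P = [[F(1, 2), F(1, 2), F(0)],
     [F(1, 3), F(1, 3), F(1, 3)],
     [F(0), F(1, 2), F(1, 2)]]
init = [F(1, 3)] * 3  # P(X_0 = i) = 1/3


def matmul(A, B):
    """Plain matrix product for small lists of Fractions."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


P2 = matmul(P, P)  # two-step transition probabilities

joint_x2_2_x0_1 = init[1] * P2[1][2]                      # P{X2 = 2, X0 = 1}
prob_x1_ne_x0 = 1 - sum(init[i] * P[i][i] for i in range(3))
cond_x3_1_given_x1_0 = P2[0][1]                           # time-homogeneity
path_2_to_1_to_2 = P[2][1] * P[1][2]                      # P{X2 = 2, X1 = 1 | X0 = 2}
marg_x2_1 = sum(init[i] * P2[i][1] for i in range(3))     # P{X2 = 1}
marg_x1_1 = sum(init[i] * P[i][1] for i in range(3))      # P{X1 = 1}
bayes_x0_1_given_x1_1 = init[1] * P[1][1] / marg_x1_1     # Bayes' rule

# Hand-derived candidate; the check below only confirms pi P = pi and sum 1.
candidate = [F(2, 7), F(3, 7), F(2, 7)]
is_stationary = sum(candidate) == 1 and all(
    sum(candidate[i] * P[i][j] for i in range(3)) == candidate[j]
    for j in range(3))

print(joint_x2_2_x0_1, prob_x1_ne_x0, cond_x3_1_given_x1_0)
print(path_2_to_1_to_2, marg_x2_1, bayes_x0_1_given_x1_1, is_stationary)
```

Using `Fraction` instead of floats keeps every intermediate value exact, so the printed results can be compared directly with fractions obtained by hand.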
