
Volume 3, Issue 4, April – 2018 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Gesture-Based Character Recognition using SVM Classifier

Rama. S, Vikas. S, Ram Kumar. P, Raagul. L

Department of Computer Science and Engineering

SRM University

Chennai, India

Abstract:- Virtual Reality (VR) is a popular segment that has developed rapidly in recent years, driven by advances in the compact and cost-effective GPU technologies required to render such intensive tasks in real time. Communication in VR is usually done either through voice or through text entered by a point-and-click method using motion controllers. Moving and pointing towards a specific character to type each word is tedious and also breaks the illusion of VR. In this paper we propose a method to counter these issues by applying Machine Learning to accelerometer and gyroscope data in order to recognize gestures made by hand movements. The system integrates easily into the existing platform of motion controllers, as they already include all the required sensors. A prototype is also showcased using an Arduino controller and an MPU-6050 (accelerometer and gyroscope sensor), with data transmission through Bluetooth. This paper also includes a performance analysis of the method in comparison with existing methods.

Keywords:- Virtual Reality; Gestures; Arduino; SVM Classifier; Sensors; Augmented Reality; Mixed Reality.

I. INTRODUCTION

VR is a field that is rapidly gaining popularity with advancements in motion detection, graphics processing and comparatively low-cost HMDs (Head Mounted Displays). VR has the potential to encompass everything we do with a computer. With the introduction of Mixed Reality, which combines virtual reality with real life, the prospect of a more connected world is closer than ever. VR has applications in fields ranging from medicine, the armed forces and staff training to personal recreation, and even to the point of leading entire virtual lives, provided the level of immersion is right. In VR, texting or chatting is usually done through either voice or text chat. Text chat can be tricky or even tedious depending on the method used. The method proposed in this paper allows the user to draw characters in the air, while in the virtual environment it appears as if they are drawing on a board or even on paper. These characters are recognized through Machine Learning using the accelerometer and gyroscope sensors that are already built into most mainstream motion controllers used with VR, such as the Oculus Touch controller and the HTC Vive controller.

II. EXISTING METHODOLOGY

The most popular way to type in VR to date is to point at a character on a keyboard in the virtual environment and press a button to select that character. The motion controller is used to point at the virtual object. The location and angle of pointing are tracked using the inbuilt sensors and the optional tracking cameras surrounding the setup area, which are used together to accurately locate the controller in the virtual space. The accuracy of this method therefore depends on the accuracy of the tracking system itself. The drawback of this method is that it tends to break the illusion of VR.

Another common method for typing in VR is voice typing, using a service such as the Google Speech to Text Keyboard. The accuracy of Google’s service is around 95%, which is also the threshold for speech prediction in humans. This method is also a lot faster and more convenient than the previously described method. Its drawback is that it too breaks the illusion of VR when a specific type of action, involving a drawing or typing motion, is to be elicited from the user for complete immersion. Another drawback is the availability of the preferred language: Google Voice typing, even though it has an ever-expanding library of languages, does not support every language that we can currently type in.

Fig 1:- Typing in Google Street View in VR

IJISRT18AP178 www.ijisrt.com 419


Fig 2:- Typing in Steam VR Keyboard

Previously, hand gestures have been observed through accelerometer and gyroscope sensors for tasks such as controlling robots. They have been used to detect sign language and even certain specific words and phrases based on their correlation with a certain action. Hand gestures have also been observed through image processing and feature extraction to the same effect.

In the paper by Bhuyan et al. [3], a gyro-accelerometer is used to control a robotic arm, with an average accuracy of around 97%. Any hand motion detected is reported by the dual sensors, and the resulting change in data conveys the direction of the shift relative to the default location. Using this, the detected path is sent as a command to the servo motors of the robot.

In the paper by Pławiak et al. [4], a DG5 VHand glove, which contains five flexion sensors (one for each finger), an accelerometer and a gyroscope, is used to collect the data for gesture recognition. A specific combination of shift, rotation and movement of specific fingers is used to detect a specific gesture. The system was designed to detect 22 specific, commonly used gestures.

Fig 4:- Prototype Overview

III. PROPOSED SYSTEM

The proposed system uses the existing inbuilt sensors, namely the accelerometer and gyroscope, to predict characters through machine learning. An SVM classifier is used for this purpose. The raw X, Y and Z axis data from both sensors are used to model each character individually. The initial model is built after training multiple times with various angles and styles of each character, so as to keep the model as accurate as possible.

The accuracy of detection increases as the number of training passes increases. Also, as the system is used, the model keeps improving as more predictions that lie above a specific certainty factor are made. The proposed system would integrate easily with existing VR systems because the required sensors are already present in the existing hardware, and would hence also be cost-effective.
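The idea of improving the model with predictions above a certainty factor can be sketched as follows. The paper gives neither the threshold value nor the update procedure, so this is only an illustration under stated assumptions: `CERTAINTY_THRESHOLD`, the `Prediction` record and `grow_training_set` are hypothetical names, and the certainty is assumed to be a score between 0 and 1 reported by the classifier.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Assumed value for illustration; the paper does not state its certainty factor.
CERTAINTY_THRESHOLD = 0.9

Sample = Tuple[Tuple[float, ...], str]  # (feature vector, character label)


@dataclass
class Prediction:
    """One classifier output for a gesture drawn during normal use."""
    features: Tuple[float, ...]
    label: str
    certainty: float  # assumed to lie in [0, 1]


def grow_training_set(
    training_set: Sequence[Sample],
    predictions: Sequence[Prediction],
    threshold: float = CERTAINTY_THRESHOLD,
) -> List[Sample]:
    """Return the training set extended with confidently predicted gestures.

    Predictions below the threshold are discarded so that uncertain guesses
    do not feed back into the model.
    """
    accepted = [(p.features, p.label) for p in predictions if p.certainty >= threshold]
    return list(training_set) + accepted


training = [((0.1, 0.2), "a")]
new_predictions = [
    Prediction((0.3, 0.4), "b", 0.95),  # kept: above the threshold
    Prediction((0.5, 0.6), "h", 0.55),  # dropped: too uncertain
]
grown = grow_training_set(training, new_predictions)
```

The classifier would then be refit on the grown set at some interval; how often to do so is a design choice the paper leaves open.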

IV. IMPLEMENTATION

Fig 3:- Data flow

A proof-of-concept prototype of the proposed method is built using an Arduino controller. It is connected to an HC-05 Bluetooth module for wireless connectivity and uses an MPU-6050 accelerometer and gyroscope sensor to provide the data required for modelling and detection. Figure 4 shows an overview of the prototype and Figure 3 shows the data flow across it. When Button 1 is pressed, transmission is initiated and the sensor data is sent through the Bluetooth COM port. The classification model is built using Scikit-learn’s SVM classifier, which classifies the raw sensor data as characters and distinguishes between the different characters.
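A minimal sketch of how six-axis gesture recordings might be fed to Scikit-learn's SVM classifier is given below. It is not the authors' code: the data is synthetic, and the fixed length `N_SAMPLES`, the resampling step in `to_feature_vector`, the feature scaling and the RBF kernel are all assumptions made for illustration. Only `SVC`, `StandardScaler`, `make_pipeline` and the NumPy calls are real library APIs.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

N_SAMPLES = 50  # assumed number of readings kept per gesture
N_AXES = 6      # accelerometer x, y, z plus gyroscope x, y, z


def to_feature_vector(gesture):
    """Resample a (T, 6) recording to N_SAMPLES rows and flatten it."""
    gesture = np.asarray(gesture, dtype=float)
    old_t = np.linspace(0.0, 1.0, num=len(gesture))
    new_t = np.linspace(0.0, 1.0, num=N_SAMPLES)
    columns = [np.interp(new_t, old_t, gesture[:, axis]) for axis in range(N_AXES)]
    return np.stack(columns, axis=1).ravel()


def synthetic_gesture(label, rng):
    """Stand-in for a recorded gesture: a per-label waveform plus noise."""
    length = int(rng.integers(40, 80))  # recordings vary in duration
    t = np.linspace(0.0, 1.0, num=length)
    freq = 1.0 if label == "g" else 3.0
    base = np.sin(2 * np.pi * freq * t)
    clean = np.stack([base * (axis + 1) for axis in range(N_AXES)], axis=1)
    return clean + rng.normal(0.0, 0.1, size=(length, N_AXES))


rng = np.random.default_rng(0)
labels = ["g", "f"] * 40
X = np.array([to_feature_vector(synthetic_gesture(lbl, rng)) for lbl in labels])
y = np.array(labels)

# Train on the first 60 gestures, hold out the last 20 for evaluation.
model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
model.fit(X[:60], y[:60])

# Accuracy as correctly predicted characters over total characters provided.
accuracy = float(np.mean(model.predict(X[60:]) == y[60:]))
print(f"held-out accuracy: {accuracy:.2f}")
```

Resampling every recording to the same length gives the SVM a fixed-size input regardless of how long the gesture took to draw. Real sensor data would be far less separable than these synthetic waveforms.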


Fig 5:- Circuit diagram of Prototype

Figure 5 shows the circuit diagram of the prototype. Figures 6 and 7 show the raw sensor data obtained while training the letters g and f, respectively. They also include the characteristics of the parameters, as stored in the model, required for classifying a gesture as the character g or f during prediction. The x-axis is normalized, and the y-axis shows the prediction certainty at each part of the gesture for the number of samples provided.

Depending on the number of training passes per character, the accuracy of prediction varies from 87% to 92%. Accuracy is measured as the average number of characters predicted correctly divided by the total number of characters provided. The complexity of a character and the similarity between two characters can create issues at times. Characters like b and h had notably lower accuracy than other characters and required more training for accurate recognition. For these characters, more well-defined training samples were required to bring the accuracy up to the level of the rest of the characters. The average time to train a specific character was around 120 seconds, over a total of 40 training trials.

Fig 6:- Sensor Data for the character ‘g’

Fig 7:- Sensor Data for the character ‘f’

V. CONCLUSION AND FUTURE WORK

VR is inevitable and may one day encompass the existing field of computing. With decreasing hardware costs and a steady increase in the development time allocated to VR applications, VR could become the next revolution for the masses. The method proposed in this paper could be very useful in VR applications that require text input, especially when they contextually require the user to perform certain hand gestures or writing actions. The method could also be used to help ALS patients, or patients with any other condition that prevents them from using their voice or a standard physical keyboard.

REFERENCES

[1]. G. Sziládi, T. Ujbányi and J. Katona, "Cost-effective hand gesture computer control interface," in 7th IEEE International Conference on Cognitive Infocommunications, Wrocław, 2016.

[2]. J. Yang, Y. Wang, Z. Lv, N. Jiang and A. Steed, "Interaction with Three-Dimensional Gesture and Character Input in Virtual Reality: Recognizing Gestures in Different Directions and Improving User Input," IEEE Consumer Electronics Magazine, vol. 7, no. 2, pp. 64-72, 2018.

[3]. A. I. Bhuyan and T. C. Mallick, "Gyro-Accelerometer based control of a robotic Arm using AVR Microcontroller," in The 9th International Forum on Strategic Technology, Cox’s Bazar, Bangladesh, 2014.

[4]. P. Pławiak, T. Sośnicki, M. Niedźwiecki, Z. Tabor and K. Rzecki, "Hand Body Language Gesture Recognition Based on Signals From Specialized Glove and Machine Learning Algorithms," IEEE Transactions on Industrial Informatics, vol. 12, no. 3, pp. 1104-1113, 2016.

[5]. J. Wang, Y. Xu, H. Liang, K. Li, H. Chen and J. Zhou, "Virtual Reality and Motion Sensing Conjoint Applications Based on Stereoscopic Display," in Progress In Electromagnetic Research Symposium, Shanghai, 2016.

[6]. H. Lee et al., "Select-and-Point: A Novel Interface for Multi-Device Connection and Control Based on Simple Hand Gestures," CHI, pp. 5-10, 2008.


- Chemical Farming, Emerging Issues of Chemical FarmingDocumento7 pagineChemical Farming, Emerging Issues of Chemical FarmingInternational Journal of Innovative Science and Research TechnologyNessuna valutazione finora

- Work-Life Balance: Women with at Least One Sick or Disabled ChildDocumento10 pagineWork-Life Balance: Women with at Least One Sick or Disabled ChildInternational Journal of Innovative Science and Research TechnologyNessuna valutazione finora

- Harnessing Deep Learning Methods for Detecting Different Retinal Diseases: A Multi-Categorical Classification MethodologyDocumento11 pagineHarnessing Deep Learning Methods for Detecting Different Retinal Diseases: A Multi-Categorical Classification MethodologyInternational Journal of Innovative Science and Research TechnologyNessuna valutazione finora

- The Structure of A Schematic Report - English For Today #1 Online Language Class in NigeriaDocumento3 pagineThe Structure of A Schematic Report - English For Today #1 Online Language Class in NigeriaDavid NyanguNessuna valutazione finora

- PascalDocumento3 paginePascalsenthilgnmitNessuna valutazione finora

- Cure Matters - Epo-TekDocumento4 pagineCure Matters - Epo-TekCelia ThomasNessuna valutazione finora

- Turbo Charger by ERIDocumento35 pagineTurbo Charger by ERIRamzi HamiciNessuna valutazione finora

- Template For Lesson Plan: Title of Lesson: Name: Date: Time: LocationDocumento3 pagineTemplate For Lesson Plan: Title of Lesson: Name: Date: Time: Locationapi-307404330Nessuna valutazione finora

- Solar CarsDocumento30 pagineSolar Carsnaallamahesh0% (1)

- En Cement Industry Open Cast MiningDocumento8 pagineEn Cement Industry Open Cast MiningArnaldo Macchi MillanNessuna valutazione finora

- FDM Case StudyDocumento2 pagineFDM Case StudyAnonymous itBHhEuNessuna valutazione finora

- Chilled Water Design SpecificationsDocumento45 pagineChilled Water Design SpecificationsAthirahNessuna valutazione finora

- Powered By: SpecificationDocumento2 paginePowered By: SpecificationStevenNessuna valutazione finora

- Why I Did It by Nathuram Godse-Court StatementDocumento2 pagineWhy I Did It by Nathuram Godse-Court StatementmanatdeathrowNessuna valutazione finora

- Indian Institute of Technology Kharagpur Kharagpur, West Bengal 721302Documento28 pagineIndian Institute of Technology Kharagpur Kharagpur, West Bengal 721302Bipradip BiswasNessuna valutazione finora

- Conveyor design analysis reportDocumento10 pagineConveyor design analysis reportRafael FerreiraNessuna valutazione finora

- Reynolds Tubing Parts List 2014Documento9 pagineReynolds Tubing Parts List 2014Pato Patiño MuñozNessuna valutazione finora

- Talent EXP CalculatorDocumento27 pagineTalent EXP CalculatorWahyu KunNessuna valutazione finora

- Filter Separator SizingDocumento3 pagineFilter Separator SizingChem.EnggNessuna valutazione finora

- Solid Waste Pollution in Ho Chi Minh CityDocumento33 pagineSolid Waste Pollution in Ho Chi Minh CityLamTraMy13Nessuna valutazione finora

- Oracle SQL PL SQL A Brief IntroductionDocumento112 pagineOracle SQL PL SQL A Brief IntroductionSadat Mohammad Akash100% (2)

- Transistor Equivalent Book 2sc2238Documento31 pagineTransistor Equivalent Book 2sc2238rsmy88% (32)

- C252 Service Manual: Ricoh Group CompaniesDocumento164 pagineC252 Service Manual: Ricoh Group CompaniesThambi DuraiNessuna valutazione finora

- MGMT 405 Chapters 7, 8 & 9 AnnotationsDocumento15 pagineMGMT 405 Chapters 7, 8 & 9 AnnotationskevingpinoyNessuna valutazione finora

- HRM NotesDocumento2 pagineHRM NotesPrakash MahatoNessuna valutazione finora

- Technical Data: NPN Power Silicon TransistorDocumento2 pagineTechnical Data: NPN Power Silicon TransistorJuan RamírezNessuna valutazione finora

- Servo2008 10Documento84 pagineServo2008 10Karina Cristina Parente100% (1)

- Hardware GT2560 RevA+Documento2 pagineHardware GT2560 RevA+Jonatan PisuNessuna valutazione finora

- OTC119101 OptiX OSN 9800 Hardware Description ISSUE1.11Documento76 pagineOTC119101 OptiX OSN 9800 Hardware Description ISSUE1.11EliValdiviaZúnigaNessuna valutazione finora

- Gujarat Technological UniversityDocumento2 pagineGujarat Technological UniversityRishabh TalujaNessuna valutazione finora

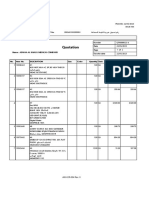

- QuotationDocumento2 pagineQuotationMahmoud Sadaka SafiaNessuna valutazione finora

- Unit-7 Verification and ValidationDocumento28 pagineUnit-7 Verification and ValidationSandeep V GowdaNessuna valutazione finora