
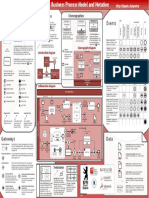

Software Testing at A Glance or Two

Uploaded by Rajarajan


The purpose of test:

Provide information to assure the quality of

- the product (finding faults)
- decisions (quantifying risks)
- process (finding root causes)

Test is comparing what is to what should be.

From mistake to correction: Mistake / Error → Defect / Fault → Failure → Incident report → Fault correction. Finding the root causes of mistakes drives process improvement.

Life cycle: Start → Business goals → Vision, ideas, needs and expectations → Business requirements → development and test → Deployment → Maintenance testing → Disposal. In operation: tool support for monitoring and continuous analysis of usage.

Organizational management:

Test policy
- definition of test
- test process to use
- test evaluation
- quality level
- approach to improvement

Project management: project plan (be prepared), customer satisfaction.

Test management: overall test strategy, phase-specific test strategies and test plans.
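
"Test is comparing what is to what should be" can be combined with the test case outline from the test specification (test items, prerequisites, inputs, expected outcomes, inter-case dependencies). The Python sketch below shows one way to do this. It is a minimal illustration under assumptions. The `SpecifiedTestCase` class, its field names, the `execute` runner and the `add` function under test are all hypothetical. Skipping a case whose dependency did not pass is one possible policy, not a rule from the overview.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class SpecifiedTestCase:
    """One test case; the field names are illustrative, modelled on the outline."""
    case_id: str
    test_items: str                 # what is tested, and why (rationale)
    prerequisites: list[str]        # conditions that must hold before execution
    inputs: dict[str, Any]          # keyword arguments for the system under test
    expected: Any                   # expected outcome: what should be
    depends_on: list[str] = field(default_factory=list)  # inter-case dependencies


def execute(cases: list[SpecifiedTestCase],
            system_under_test: Callable[..., Any]) -> dict[str, str]:
    """Run the cases in order and return a verdict per case id.

    A case whose dependencies did not all pass is skipped rather than run.
    """
    verdicts: dict[str, str] = {}
    for case in cases:
        if any(verdicts.get(dep) != "pass" for dep in case.depends_on):
            verdicts[case.case_id] = "skipped"
            continue
        actual = system_under_test(**case.inputs)  # what is
        verdicts[case.case_id] = "pass" if actual == case.expected else "fail"
    return verdicts


if __name__ == "__main__":
    def add(a: int, b: int) -> int:  # hypothetical system under test
        return a + b

    cases = [
        SpecifiedTestCase("TC1", "add: small positives", [], {"a": 2, "b": 3}, 5),
        SpecifiedTestCase("TC2", "add: deliberately wrong expectation", ["TC1 passed"],
                          {"a": 2, "b": 2}, 5, depends_on=["TC1"]),
        SpecifiedTestCase("TC3", "add: relies on TC2", ["TC2 passed"],
                          {"a": 0, "b": 0}, 0, depends_on=["TC2"]),
    ]
    print(execute(cases, add))  # {'TC1': 'pass', 'TC2': 'fail', 'TC3': 'skipped'}
```

Recording the verdict per case id lets a later case consult earlier results, which is how the inter-case dependencies are honoured.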

Overall test strategy:
1. Entry & exit criteria
2. Test approach
3. Techniques to use
4. Completion criteria
5. Independence
6. Standards to use
7. Environment
8. Test automation
9. Degree of reuse
10. Retest and regression testing
11. Test process
12. Measures
13. Incident management

Test plan (overall and for each test phase):
1. Introduction
2. Test item(s)
3. Features to be tested
4. Features not to be tested
5. Approach
6. Item pass/fail criteria
7. Suspension criteria
8. Test deliverables
9. Testing tasks
10. Environmental needs
11. Responsibilities
12. Staffing and training
13. Schedule
14. Risks

A plan must be SMART: Specific, Measurable, Agreed, Relevant, Time specific.

Requirements tower:
- Project requirements: cost, resources, time
- Environment requirements: platform, hardware, standards
- Product quality requirements:
  - functional requirements
  - non-functional requirements: performance, usability, availability, maintainability

Test phases (V-model: development on the left, test on the right). Each phase has its own test plan, test specification and phase test report:
- Acceptance testing (against the user requirements specification): user, operational (OAT), contractual (FAT), alpha & beta
- System integration testing
- System testing (against the system requirements): functional, non-functional
- Component integration testing (against the architectural design)
- Component testing (against the component requirements and the detailed design)

Legend: S = static analysis by tools, D = dynamic analysis by tools, T = dynamic test manually or by tools.

Test process for all test phases: test planning → test specification → test execution → test recording → checking for test completion, with monitoring and control throughout. Work products: test plan, test specification, test logs, test report.

From test basis to test cases: derive test conditions / test requirements from the test basis; create test cases using test case design techniques; organize the test cases into test procedures / test scripts.

Test coverage: % of the coverage items touched by a test. Coverage items:
- SW: statements, decisions, conditions
- interfaces
- user requirements, use cases
- business processes, business goals

Tracing between requirements (e.g. Req. 146, Req. 147, Req. 148) and test cases:
- prevents omissions
- prevents gold-plating
- shows the rationale for test cases

Figure: The price of a fault correction depends on when the fault is found. It shows cost in € on a logarithmic scale (1, 10, 100, 1000) over the phases Requirement, Design, Coding, Test and Production. Source: Grove Consultants, UK.

Tester skills: soft skills, test skills, IT skills and domain knowledge. The required mix differs between requirements, development and test work.

Test case design techniques:
- Specification based: equivalence class partitioning, boundary value analysis, decision tables, state transitions, use cases
- Experience based: error guessing, check lists, exploratory
- Structure based: statements, decisions, conditions

Test specification:
1. Test Design: 1.1 Features to be tested, 1.2 Approach refinement, 1.3 Associated test case, 1.4 Feature pass/fail criteria
2. Test Cases: 2.1 Test Case 1 (2.1.1 Test items (rationale), 2.1.2 Prerequisites, 2.1.3 Inputs, 2.1.4 Expected outcomes, 2.1.5 Inter-case dependencies), up to 2.N Test Case n
3. Test Procedure: 1. Purpose, 2. Prerequisites, 3. Procedure steps

Tool support (a fool with a tool is still a fool):
- for requirements management
- for static testing: static analysis, incl. requirements testing and models
- for test specification: test design, test input data preparation, test oracles
- for test execution and logging: test running, test script generation, test harness and drivers, coverage measurement, comparators, simulators, security testing
- for dynamic analysis
- for non-functional test: performance, load, stress
- for management (of everything): test planning and monitoring, incident management, configuration management

Figure: The white-box insect. It names the parts of code structure: basic block, statement, decision (branch point), condition, decision outcome, branch (in) and branches (out).

Test roles: test leader (manager), test analyst / designer, test executor, reviewer / inspector, test environment responsible, (test) tool responsible.

Responsibility matrix: tasks against people, with each cell marked R (Responsible), P (Performing) or C (Consulted).

The best (test) team is based on knowledge of Belbin types:
- Action oriented: shaper, implementer, completer/finisher
- People oriented: coordinator, team-worker, resource investigator
- Cerebral: plant, monitor/evaluator, specialist

Communication is: this is what I mean → this is what I say → this is what I hear → this is what I think is meant.

Development models:
- Sequential: waterfall and V-models
- Iterative: RAD, RUP or Agile

Activities: conceive, design, implement, test + correction, maintenance. Test may be planned at project start, during development or at test start. Each is followed by development, test and delivery.

Quality assurance for all work products: definition of quality criteria, validation, verification. Good code and good test give known quality; poor code and poor test give unknown quality.

Review types: informal review, walkthrough, technical review, management review, inspection, audit.

Incident management: Recognition → Investigation → Action → Disposition. At each step the incident is reported with supporting data, classification and impact.

Configuration management of selected work products, product components and products: identification, storage, change management, reporting.

Test environment: hardware, software, network, other systems, tools, data, room, peripherals, stationery, chairs, phones, food, drink, candy.

Test report:
1. Summary
2. Variances
3. Comprehensiveness
4. Summary of results
5. Evaluation
6. Summary of activities

Test targets:
- New product: functional, non-functional, structure/architecture
- Change related: regression testing, retesting

Triangle of test quality (time, resources, quality): change one aspect, and at least two change.

Management (of everything): test planning and monitoring, covering product risk analysis, estimation, scheduling, and monitoring and control activities.

Risk management:
- Risk exposure = probability × effect
- Risks hit in many places: product and project
- Test could be ruled by product risks, depending on background and willingness to run risks
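
Test coverage is defined here as the % of the coverage items touched by a test. Once the items, and the items each test touched, are known, this is simple arithmetic. A minimal Python sketch follows; the function names and the decision-outcome labels (`D1:true` and so on) are hypothetical.

```python
from collections.abc import Iterable, Mapping


def coverage_percent(items: Iterable[str],
                     touched_by_test: Mapping[str, Iterable[str]]) -> float:
    """Test coverage: percentage of the coverage items touched by at least one test.

    `items` may be statements, decision outcomes, interfaces, requirements,
    use cases or business processes; `touched_by_test` maps a test id to the
    items that test touched. Touched items outside `items` are ignored.
    """
    item_set = set(items)
    if not item_set:
        raise ValueError("no coverage items defined")
    touched = set().union(*touched_by_test.values())
    return 100.0 * len(item_set & touched) / len(item_set)


def untouched(items: Iterable[str],
              touched_by_test: Mapping[str, Iterable[str]]) -> set[str]:
    """Coverage items that no test has touched yet: candidates for new tests."""
    return set(items) - set().union(*touched_by_test.values())


if __name__ == "__main__":
    outcomes = ["D1:true", "D1:false", "D2:true", "D2:false"]
    runs = {"TC1": ["D1:true", "D2:false"], "TC2": ["D1:true"]}
    print(coverage_percent(outcomes, runs))   # 50.0
    print(sorted(untouched(outcomes, runs)))  # ['D1:false', 'D2:true']
```

The same two functions work for any kind of coverage item; only the labels change.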
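
Tracing between requirements and test cases can be checked mechanically. Requirements with no test case are omissions. Test cases that trace to no requirement suggest gold-plating. The Python sketch below reuses the requirement ids Req. 146 to Req. 148 from the overview; the test ids and the functions `trace_check` and `rationale` are hypothetical.

```python
def trace_check(requirements: set[str],
                tests_to_reqs: dict[str, set[str]]) -> tuple[set[str], set[str]]:
    """Return (omissions, gold_plating) for a requirement-to-test trace.

    omissions:    requirements that no test case traces to
    gold_plating: test cases that trace to none of the requirements
    """
    traced = set().union(*tests_to_reqs.values())
    omissions = requirements - traced
    gold_plating = {tc for tc, reqs in tests_to_reqs.items()
                    if not (reqs & requirements)}
    return omissions, gold_plating


def rationale(test_id: str, tests_to_reqs: dict[str, set[str]]) -> list[str]:
    """Why does this test case exist? The requirements it traces to."""
    return sorted(tests_to_reqs.get(test_id, set()))


if __name__ == "__main__":
    reqs = {"Req. 146", "Req. 147", "Req. 148"}
    trace = {
        "TC1": {"Req. 146"},
        "TC2": {"Req. 146", "Req. 148"},
        "TC3": set(),  # tests something no requirement asks for
    }
    omissions, gold = trace_check(reqs, trace)
    print(sorted(omissions))        # ['Req. 147']
    print(sorted(gold))             # ['TC3']
    print(rationale("TC2", trace))  # ['Req. 146', 'Req. 148']
```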
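
Equivalence class partitioning is one of the specification-based techniques. It picks one representative value per class of inputs that the specification treats alike. The Python sketch below rests on several assumptions: a hypothetical rule that ages 18 to 65 are accepted, and an input domain of 0 to 150. Choosing the midpoint as the representative is an arbitrary choice; any value in the class would do.

```python
# Hypothetical specification, assumed for illustration: an applicant is accepted
# if the age (an integer from 0 to 150) is between 18 and 65 inclusive.
PARTITIONS = [
    # (name, lowest value, highest value, should the system accept it?)
    ("too young", 0, 17, False),
    ("accepted", 18, 65, True),
    ("too old", 66, 150, False),
]


def accepts(age: int) -> bool:
    """Hypothetical system under test."""
    return 18 <= age <= 65


def representatives(partitions):
    """One test value per equivalence class (here its midpoint), on the
    assumption that every value in a class is treated the same way."""
    return [(name, (low + high) // 2, valid) for name, low, high, valid in partitions]


if __name__ == "__main__":
    for name, value, valid in representatives(PARTITIONS):
        verdict = "pass" if accepts(value) == valid else "fail"
        print(f"{name:>9}: age {value:>3} -> {verdict}")
```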
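
Boundary value analysis tests at the edges of each class. The Python sketch below uses the two-value variant: each boundary plus its nearest neighbour outside the valid range. It applies this to a hypothetical valid integer range of 18 to 65. A three-value variant would also test the neighbour just inside each boundary.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Two-value boundary value analysis for a valid integer range [low, high]:
    both boundaries plus their nearest neighbours outside the range."""
    if low > high:
        raise ValueError("empty range")
    return sorted({low - 1, low, high, high + 1})  # a set removes duplicates when low == high


def expected_valid(value: int, low: int, high: int) -> bool:
    """The expected outcome for a test value: inside the range or not."""
    return low <= value <= high


if __name__ == "__main__":
    cases = [(v, expected_valid(v, 18, 65)) for v in boundary_values(18, 65)]
    print(cases)  # [(17, False), (18, True), (65, True), (66, False)]
```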
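
A decision table lists every combination of conditions together with the expected action, and each rule (column) becomes a test case. The Python sketch below uses a hypothetical credit rule with two conditions. The rule, the condition names and the helper functions are assumptions for illustration.

```python
from itertools import product

CONDITIONS = ("employed", "has_payment_remarks")

# Hypothetical credit rule written as a decision table (assumed for illustration):
# each key is one rule, i.e. one combination of condition values, mapped to its action.
RULES: dict[tuple[bool, ...], str] = {
    (True, False): "grant",
    (True, True): "manual review",
    (False, False): "reject",
    (False, True): "reject",
}


def missing_rules(rules: dict[tuple[bool, ...], str],
                  n_conditions: int) -> list[tuple[bool, ...]]:
    """Combinations of condition values the table does not cover (should be none)."""
    return [combo for combo in product((True, False), repeat=n_conditions)
            if combo not in rules]


def derive_cases(rules: dict[tuple[bool, ...], str]) -> list[dict]:
    """One test case per rule of the table: the inputs plus the expected action."""
    return [dict(zip(CONDITIONS, combo), expected=action)
            for combo, action in rules.items()]


if __name__ == "__main__":
    print(missing_rules(RULES, len(CONDITIONS)))  # []
    for case in derive_cases(RULES):
        print(case)
```

Checking the table for missing combinations before deriving tests catches incomplete specifications early.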
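
State transition testing can be illustrated on the incident management steps (Recognition, Investigation, Action, Disposition). The transition model below, its event names and the `Incident` class are assumptions made for this example; they are not part of the overview. The sketch derives one test per valid transition (all-transitions coverage) plus one negative test per forbidden state/event pair.

```python
# Hypothetical state model of an incident's life cycle (assumed for illustration).
STATES = ("Recognition", "Investigation", "Action", "Disposition")
TRANSITIONS = {
    ("Recognition", "investigate"): "Investigation",
    ("Investigation", "plan action"): "Action",
    ("Investigation", "close without action"): "Disposition",
    ("Action", "close"): "Disposition",
}
EVENTS = sorted({event for _, event in TRANSITIONS})


class Incident:
    """Hypothetical system under test: a tiny incident workflow."""

    def __init__(self, state: str = "Recognition") -> None:
        self.state = state

    def fire(self, event: str) -> str:
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"{event!r} is not allowed in state {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


def valid_transitions() -> list[tuple[str, str, str]]:
    """All-transitions coverage: every valid transition exercised once."""
    return [(src, event, dst) for (src, event), dst in TRANSITIONS.items()]


def invalid_transitions() -> list[tuple[str, str]]:
    """Every state/event pair the model forbids; each should be rejected."""
    return [(s, e) for s in STATES for e in EVENTS if (s, e) not in TRANSITIONS]


def run_state_tests() -> list[str]:
    """Return a description of every failed check (empty means all passed)."""
    failures = []
    for src, event, dst in valid_transitions():
        if Incident(src).fire(event) != dst:
            failures.append(f"{src} --{event}--> expected {dst}")
    for src, event in invalid_transitions():
        try:
            Incident(src).fire(event)
        except ValueError:
            continue  # correctly rejected
        failures.append(f"{src} accepted forbidden event {event!r}")
    return failures


if __name__ == "__main__":
    print(len(valid_transitions()), len(invalid_transitions()))  # 4 12
    print(run_state_tests())  # []
```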
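
Among the structure-based techniques, statement coverage and decision coverage differ in strength. The Python sketch below uses a hypothetical, hand-instrumented function `price_after_discount` with made-up labels S1 to S3 for statements and D1 for the decision. It shows that one test can reach 100 % statement coverage but only 50 % decision coverage. In practice a coverage measurement tool would replace the hand instrumentation.

```python
STATEMENTS = {"S1", "S2", "S3"}
DECISION_OUTCOMES = {"D1:true", "D1:false"}


def price_after_discount(total: float, is_member: bool, hits: set[str]) -> float:
    """Hypothetical unit under test, instrumented by hand: `hits` collects the
    statements and decision outcomes a call exercises."""
    hits.add("S1")
    discount = 0.0
    if is_member:             # decision D1
        hits.add("D1:true")
        hits.add("S2")
        discount = 0.1 * total
    else:                     # the false outcome has no statement of its own
        hits.add("D1:false")
    hits.add("S3")
    return total - discount


def hits_for(tests: list[tuple[float, bool]]) -> set[str]:
    """Run a set of test inputs and collect everything they exercised."""
    hits: set[str] = set()
    for total, is_member in tests:
        price_after_discount(total, is_member, hits)
    return hits


def percent(items: set[str], hits: set[str]) -> float:
    return 100.0 * len(items & hits) / len(items)


if __name__ == "__main__":
    one_test = hits_for([(200.0, True)])
    print(percent(STATEMENTS, one_test))           # 100.0
    print(percent(DECISION_OUTCOMES, one_test))    # 50.0
    two_tests = hits_for([(200.0, True), (200.0, False)])
    print(percent(DECISION_OUTCOMES, two_tests))   # 100.0
```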
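
The overview states that risk exposure = probability × effect and that test could be ruled by product risks. The Python sketch below ranks product risks by exposure so that the highest-exposure risks are tested first. It assumes a 1 to 5 scale for both factors; the scale, the `Risk` class and the example risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A product risk item; the 1-5 scales are an illustrative assumption."""
    name: str
    probability: int  # likelihood the risk materialises, 1 (low) to 5 (high)
    effect: int       # damage if it does, 1 (low) to 5 (high)

    @property
    def exposure(self) -> int:
        # Risk exposure = probability x effect
        return self.probability * self.effect


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Highest exposure first; Python's sort is stable, so ties keep input order."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


if __name__ == "__main__":
    risks = [
        Risk("report layout", probability=4, effect=1),
        Risk("payment rounding", probability=2, effect=5),
        Risk("login lockout", probability=3, effect=4),
    ]
    for r in prioritise(risks):
        print(f"{r.exposure:>2}  {r.name}")
    # 12  login lockout
    # 10  payment rounding
    #  4  report layout
```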

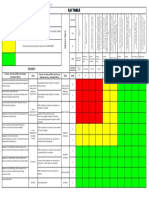

Process improvement:

Business goals: higher productivity and quality, lower risk.

Improvement cycle (IDEAL): Initiating → Diagnosing (assessment) → Establishing → Acting (plan and write procedures, methods and techniques; adjustment; deployment) → Learning.

Why do we work? Motives and incentives. Cooperation matrix.

General process improvement models (maturity levels 1 to 5):

CMM levels: 1 Initial, 2 Repeatable, 3 Described, 4 Controlled, 5 Optimising.

CMMI process areas, grouped into process management, project management, engineering and support:
- Level 2: Requirements Management; Project Planning; Project Monitoring and Control; Supplier Agreement Management; Measurement and Analysis; Process and Product Quality Assurance; Configuration Management
- Level 3: Requirements Development; Technical Solution; Product Integration; Verification; Validation; Organizational Process Focus; Organizational Process Definition; Organizational Training; Integrated Project Management for IPPD; Risk Management; Integrated Teaming; Integrated Supplier Management; Decision Analysis and Resolution; Organizational Environment for Integration
- Level 4: Organizational Process Performance; Quantitative Project Management
- Level 5: Organizational Innovation and Deployment; Causal Analysis and Resolution

ISO 15504 result profile (example for six processes; each process attribute rated N, P, L or F, i.e. not, partially, largely or fully achieved):
- PA 5.2: N N N N N N
- PA 5.1: N N N N N N
- PA 4.2: N N N N N N
- PA 4.1: N N N N N N
- PA 3.2: L L P F L P
- PA 3.1: L L P F L P
- PA 2.2: L L P F L L
- PA 2.1: F F L F F P
- PA 1.1: L F P F L P

Test process improvement models:

TMM levels:
- Level 5: Optimization, Defect Prevention and Quality Control (test process optimisation; quality control; application of process data for defect prevention)
- Level 4: Management and Measurement (software quality evaluation; establish a test measurement program; establish an organisation-wide review program)
- Level 3: Integration (control and monitor the test process; integrate testing into the software lifecycle; establish a technical training program; establish a software test organisation)
- Level 2: Phase Definition (institutionalise basic testing techniques and methods; initiate a test planning process; develop testing and debugging goals)
- Level 1: Initial

TPI® matrix, key areas and their levels:
- Test strategy: A B C D
- Life-cycle model: A B
- Moment of involvement: A B C D
- Estimating and planning: A B
- Test specification techniques: A B
- Static test techniques: A B
- Metrics: A B C D
- Test tools: A B C
- Test environment: A B C
- Office environment: A
- Commitment and motivation: A B C
- Test functions and training: A B C
- Scope of methodology: A B C
- Communication: A B C
- Reporting: A B C D
- Defect management: A B C
- Testware management: A B C D
- Test process management: A B C
- Evaluating: A B
- Low-level testing: A B C
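
The ISO 15504 profile rates each process attribute N, P, L or F. The Python sketch below implements that rating scale and derives a capability level from a profile. It follows our reading of ISO/IEC 15504-2:

- N means up to 15 % achievement.
- P means above 15 % up to 50 %.
- L means above 50 % up to 85 %.
- F means above 85 %.

A level is reached when its attributes are at least L and all lower-level attributes are F. The profile in the example is hypothetical; check the thresholds against the standard before relying on them.

```python
LEVEL_ATTRIBUTES = {
    1: ("PA 1.1",),
    2: ("PA 2.1", "PA 2.2"),
    3: ("PA 3.1", "PA 3.2"),
    4: ("PA 4.1", "PA 4.2"),
    5: ("PA 5.1", "PA 5.2"),
}


def rating(percent_achieved: float) -> str:
    """N/P/L/F rating of one process attribute from its degree of achievement."""
    if not 0 <= percent_achieved <= 100:
        raise ValueError("achievement must be between 0 and 100 %")
    if percent_achieved <= 15:
        return "N"  # not achieved
    if percent_achieved <= 50:
        return "P"  # partially achieved
    if percent_achieved <= 85:
        return "L"  # largely achieved
    return "F"      # fully achieved


def capability_level(profile: dict[str, str]) -> int:
    """Highest level whose attributes are at least L while every lower-level
    attribute is F; attributes missing from the profile count as N."""
    level = 0
    for k in range(1, 6):
        this_level_ok = all(profile.get(pa, "N") in ("L", "F")
                            for pa in LEVEL_ATTRIBUTES[k])
        lower_fully = all(profile.get(pa, "N") == "F"
                          for j in range(1, k) for pa in LEVEL_ATTRIBUTES[j])
        if not (this_level_ok and lower_fully):
            break
        level = k
    return level


if __name__ == "__main__":
    profile = {"PA 1.1": "F", "PA 2.1": "F", "PA 2.2": "L", "PA 3.1": "L", "PA 3.2": "P"}
    print(capability_level(profile))  # 2
```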

Process template: input and entry criteria → procedure (activity 1, activity 2, up to activity n) → output and exit criteria.

Degree of independence (producer vs. tester):
1. Producer tests own product
2. Tests are designed by other team members
3. Tests are designed by independent testers
4. Tests are designed by external testers

Test approaches:
- Analytical (risk)
- Model-based (statistical)
- Methodical (check-lists)
- Heuristic (exploratory)
- Consultative (domain experts)
- Standard compliant
- Regression-averse (reuse and automation)

Standards:
- Quality assurance (you shall test): ISO 9000:2001
- Industry specific (you shall test this much): aviation, medical devices, military equipment
- Testing standards (you shall test like this): BS-7925, IEEE 829, IEEE 1028
- Other related standards: IEEE 1044, ISO 9126, IEEE/ISO 12207, IEEE/ISO 15288

The golden rule of testing: Always test so that whenever you have to stop, you have done the best possible test.

"If you don't measure, you're left with only one reason to believe you're in control: hysterical optimism." (Tom DeMarco)
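
The process template (input and entry criteria, a procedure of activities, output and exit criteria) maps naturally onto a small data structure. The Python sketch below assumes several hypothetical pieces: the `Process` class, the `execute_cases` activity and the 90 % pass-rate exit criterion.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Criterion = Callable[[dict[str, Any]], bool]
Activity = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class Process:
    """Input + entry criteria -> activities -> output + exit criteria."""
    name: str
    entry_criteria: list[Criterion] = field(default_factory=list)
    activities: list[Activity] = field(default_factory=list)
    exit_criteria: list[Criterion] = field(default_factory=list)

    def run(self, inputs: dict[str, Any]) -> dict[str, Any]:
        if not all(check(inputs) for check in self.entry_criteria):
            raise RuntimeError(f"{self.name}: entry criteria not met")
        work = inputs
        for activity in self.activities:  # activity 1, activity 2, ..., activity n
            work = activity(work)
        if not all(check(work) for check in self.exit_criteria):
            raise RuntimeError(f"{self.name}: exit criteria not met")
        return work  # the output


def execute_cases(work: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical activity: run each test case (a no-argument callable returning pass/fail)."""
    results = [case() for case in work["cases"]]
    return {**work, "run": len(results), "passed": sum(results)}


execution_process = Process(
    name="test execution (hypothetical criteria)",
    entry_criteria=[lambda w: w["specification_reviewed"]],
    activities=[execute_cases],
    exit_criteria=[lambda w: w["run"] > 0 and w["passed"] / w["run"] >= 0.9],
)

if __name__ == "__main__":
    out = execution_process.run({"specification_reviewed": True,
                                 "cases": [lambda: True] * 9 + [lambda: False]})
    print(out["passed"], "of", out["run"], "passed")  # 9 of 10 passed
```

Making the criteria explicit callables means a process cannot silently start without its inputs, or finish without meeting its exit criteria.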

© DELTA, WWW.DELTA.DK - Anne Mette Jonassen Hass, AMJ@DELTA.DK. Based on the ISEB Software Testing Practitioner Syllabus, BS-7925-1:1998, IEEE Std. 610-1990, IEEE Std. 829-1998, ISTQB testing terms 2005, and presentations at EuroSTAR conferences by Lee Copeland, Stuart Reid, Martin Pol, Paul Gerrard, Grove Consultants and many others. Graphic advice: EBB-CONSULT, Denmark. Screen Beans® Clip Art, USA. V. T-UK-o-0603

Potrebbero piacerti anche

- New Freed BrochureDocumento20 pagineNew Freed BrochureHendra JayalifNessuna valutazione finora

- Toki Wo Kakeru Shoujo (The Girl Who Leapt Through Time) - Daylife PDFDocumento1 paginaToki Wo Kakeru Shoujo (The Girl Who Leapt Through Time) - Daylife PDFtakumi75Nessuna valutazione finora

- Customer-Partners - WINDOW-POC GUIDE Harmony EndPoint EPM R81.10 - Step by Step Version FinalDocumento39 pagineCustomer-Partners - WINDOW-POC GUIDE Harmony EndPoint EPM R81.10 - Step by Step Version Finalgarytj210% (1)

- Elitmus Previous PaperDocumento15 pagineElitmus Previous PaperKumar Gaurav100% (1)

- Mount Hood National Forest Map of Closed and Open RoadsDocumento1 paginaMount Hood National Forest Map of Closed and Open RoadsStatesman JournalNessuna valutazione finora

- Still and Quiet MomentsDocumento3 pagineStill and Quiet MomentsRicardo Suarez G100% (1)

- Service in The AI Era: Science, Logic, and Architecture PerspectivesDocumento31 pagineService in The AI Era: Science, Logic, and Architecture PerspectivesCharlene KronstedtNessuna valutazione finora

- L1 in L2 NormDocumento11 pagineL1 in L2 NormzabigNessuna valutazione finora

- اللغة الانجليزية أساسي-7545Documento8 pagineاللغة الانجليزية أساسي-7545Ahmed JahaNessuna valutazione finora

- Projmatic Case StudyDocumento1 paginaProjmatic Case Studyk4gs62x9vdNessuna valutazione finora

- (Exemplo) Matriz de Treinamento GEDocumento1 pagina(Exemplo) Matriz de Treinamento GEpauloNessuna valutazione finora

- Safety Integrity Level (SIL)Documento1 paginaSafety Integrity Level (SIL)UKNessuna valutazione finora

- Deloitte Wireless Industry Value MapDocumento1 paginaDeloitte Wireless Industry Value MapDiogo Pimenta Barreiros0% (1)

- Collections Credit Cards Workflow PDFDocumento1 paginaCollections Credit Cards Workflow PDFBea LadaoNessuna valutazione finora

- AWS Periodic TableDocumento1 paginaAWS Periodic Tabledouglas.dvferreiraNessuna valutazione finora

- Dmaic 12873122766122 Phpapp01Documento1 paginaDmaic 12873122766122 Phpapp01quycoctuNessuna valutazione finora

- Dmaic 12873122766122 Phpapp01Documento1 paginaDmaic 12873122766122 Phpapp01quycoctuNessuna valutazione finora

- Reliability Web 5 Sources of Defects SecureDocumento1 paginaReliability Web 5 Sources of Defects SecureHaitham YoussefNessuna valutazione finora

- Large Campaigns: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioDocumento1 paginaLarge Campaigns: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioImran ChowdhuryNessuna valutazione finora

- Online Insurance Sales WorkflowDocumento1 paginaOnline Insurance Sales WorkflowFaisal AzizNessuna valutazione finora

- Value-Map TM DeloitteDocumento1 paginaValue-Map TM DeloitteHugo SalazarNessuna valutazione finora

- Vendor Compliance: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioDocumento1 paginaVendor Compliance: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioMrito ManobNessuna valutazione finora

- Tool Selector V2Documento1 paginaTool Selector V2Mohammed MuzakkirNessuna valutazione finora

- Order Management Ecommerce WorkflowDocumento1 paginaOrder Management Ecommerce WorkflowElena EnacheNessuna valutazione finora

- Materials Management: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioDocumento1 paginaMaterials Management: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioJyotsana VyasNessuna valutazione finora

- Customer Service Collections Processing WorkflowDocumento1 paginaCustomer Service Collections Processing WorkflowJancy KattaNessuna valutazione finora

- Reference Case Management Current Version Security Access ConfigurationDocumento2 pagineReference Case Management Current Version Security Access ConfigurationSreenathNessuna valutazione finora

- SmallgroupresultsreportDocumento1 paginaSmallgroupresultsreportapi-392364749Nessuna valutazione finora

- Codigos Especiales BMWDocumento37 pagineCodigos Especiales BMWAlberto GonzalezNessuna valutazione finora

- Von Willebrand Factor Activity - 0020004700: in Vitro Diagnostic Medical Device In-Vitro DiagnostikumDocumento3 pagineVon Willebrand Factor Activity - 0020004700: in Vitro Diagnostic Medical Device In-Vitro Diagnostikum28850Nessuna valutazione finora

- Activities Events: Conversations ChoreographiesDocumento1 paginaActivities Events: Conversations ChoreographiesMoataz BelkhairNessuna valutazione finora

- 1 WSF Risk Management PlanDocumento7 pagine1 WSF Risk Management PlanYong Kim100% (1)

- TrakCare Overview 09012015Documento5 pagineTrakCare Overview 09012015keziajessNessuna valutazione finora

- Past defect history details root cause analysis formatDocumento10 paginePast defect history details root cause analysis formatRahulNessuna valutazione finora

- S, D Table: Zone Actions To Be InitiatedDocumento1 paginaS, D Table: Zone Actions To Be InitiatedkrishnakumarNessuna valutazione finora

- Acep Report Attendance Form Final FormDocumento3 pagineAcep Report Attendance Form Final FormTerence VeronaNessuna valutazione finora

- ROKS KPI Canvas - ExcelDocumento2 pagineROKS KPI Canvas - ExcelwhauckNessuna valutazione finora

- Vendor & Customer Setup: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioDocumento1 paginaVendor & Customer Setup: Login To Opsdog To Purchase The Full Workflow Template (Available in PDF, VisioLIGAYA SILVESTRENessuna valutazione finora

- Performance Manage MenDocumento1 paginaPerformance Manage MenmariaaltammamNessuna valutazione finora

- Adjustment Item ListDocumento1 paginaAdjustment Item ListKaren SantacruzNessuna valutazione finora

- Ccte Counseling Closing-The-Gap Results ReportDocumento2 pagineCcte Counseling Closing-The-Gap Results ReportCheryl McfaddenNessuna valutazione finora

- RACI TemplateDocumento8 pagineRACI TemplateVanitha raoNessuna valutazione finora

- FMEA Download Datei Formblatt Vorlagen DFMEA PFMEA FMEA MSR Approved EnglDocumento12 pagineFMEA Download Datei Formblatt Vorlagen DFMEA PFMEA FMEA MSR Approved EnglJosé Antonio Guerra HernándezNessuna valutazione finora

- DFMEA Examples 29JUN2020 7.2.19Documento24 pagineDFMEA Examples 29JUN2020 7.2.19Mani Rathinam RajamaniNessuna valutazione finora

- BBS and HSE Scorecard RecordsDocumento1 paginaBBS and HSE Scorecard RecordsAl - AminNessuna valutazione finora

- Sccurriculumresultsreport 2Documento1 paginaSccurriculumresultsreport 2api-392364749Nessuna valutazione finora

- A380 Family Maintenance ConceptDocumento1 paginaA380 Family Maintenance Conceptyazan999100% (1)

- Embedded Project WorksheetDocumento4 pagineEmbedded Project WorksheetcammanderNessuna valutazione finora

- Order Cash 1Documento14 pagineOrder Cash 1Alecia ChenNessuna valutazione finora

- Ai Tools! - AI ToolsDocumento3 pagineAi Tools! - AI ToolsHenk Van DamNessuna valutazione finora

- Ai Tools!Documento2 pagineAi Tools!ahmed smailNessuna valutazione finora

- PFMEA - MachiningDocumento14 paginePFMEA - Machiningmani317100% (1)

- Module 1 AssessmentDocumento4 pagineModule 1 AssessmentSheela AliNessuna valutazione finora

- Projective Geometric Algebra: Norms Binary Operations Transformation GroupsDocumento1 paginaProjective Geometric Algebra: Norms Binary Operations Transformation GroupsKrutarth PatelNessuna valutazione finora

- Securite ADV Nivellement Rails Tournées PN Autres VOIE Permis Joints Docum Entatio N Encadrem EntDocumento19 pagineSecurite ADV Nivellement Rails Tournées PN Autres VOIE Permis Joints Docum Entatio N Encadrem EntOuass AkilNessuna valutazione finora

- Segregation of Duties Matrix SampleDocumento3 pagineSegregation of Duties Matrix SampleNijith p.nNessuna valutazione finora

- VIOFO A119 ManualeditedenlargedDocumento1 paginaVIOFO A119 Manualeditedenlargedhi.i.am noneNessuna valutazione finora

- FinalDocumento6 pagineFinalKenneth EnriquezNessuna valutazione finora

- Triaxial Test CoggleDocumento1 paginaTriaxial Test CoggleAndika PerbawaNessuna valutazione finora

- IT4IT Reference Card4Documento1 paginaIT4IT Reference Card4GuillermoVillalonNessuna valutazione finora

- World Trade Organization and It's Millennium Development GoalsDocumento16 pagineWorld Trade Organization and It's Millennium Development GoalsRajarajanNessuna valutazione finora

- Ebook PMO EnglishDocumento68 pagineEbook PMO EnglishsudhirNessuna valutazione finora

- Ten Years of The WTO Agreement On Agriculture: Problems and ProspectsDocumento6 pagineTen Years of The WTO Agreement On Agriculture: Problems and ProspectsRajarajanNessuna valutazione finora

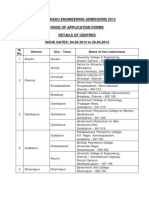

- TNEA 2013 Information BrochureDocumento38 pagineTNEA 2013 Information BrochureRajarajanNessuna valutazione finora

- Initiative v3.0Documento39 pagineInitiative v3.0RajarajanNessuna valutazione finora

- IBPS ProblemDocumento51 pagineIBPS ProblemRajarajanNessuna valutazione finora

- Pak LOC AttacksDocumento41 paginePak LOC AttacksRajarajanNessuna valutazione finora

- Tamilnadu 12th Five Year Plan - ApproachDocumento80 pagineTamilnadu 12th Five Year Plan - ApproachRajarajanNessuna valutazione finora

- National Food Security BillDocumento3 pagineNational Food Security BillRajarajanNessuna valutazione finora

- Tamilnadu Census 2011 Draft ReportDocumento2 pagineTamilnadu Census 2011 Draft ReportRajarajanNessuna valutazione finora

- World Press Freedom Index 2012Documento6 pagineWorld Press Freedom Index 2012RajarajanNessuna valutazione finora

- Colleges Issuing Counselling Form 2013 PDFDocumento4 pagineColleges Issuing Counselling Form 2013 PDFAnu ShibinNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Spain Player StatisticsDocumento15 pagineNetherlands-Spain Final FIFA 2010 Spain Player StatisticsRajarajan100% (1)

- Netherlands-Spain Final FIFA 2010 Start ListDocumento1 paginaNetherlands-Spain Final FIFA 2010 Start ListRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Netherlands Tracking StatisticsDocumento1 paginaNetherlands-Spain Final FIFA 2010 Netherlands Tracking StatisticsRajarajanNessuna valutazione finora

- Uruguay-Germany Third Place Match FIFA 2010 Start ListDocumento1 paginaUruguay-Germany Third Place Match FIFA 2010 Start ListRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Spain Tracking StatisticsDocumento1 paginaNetherlands-Spain Final FIFA 2010 Spain Tracking StatisticsRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Players Heat MapDocumento1 paginaNetherlands-Spain Final FIFA 2010 Players Heat MapRajarajanNessuna valutazione finora

- Uruguay-Germany Third Place Match FIFA 2010 Match ReportDocumento2 pagineUruguay-Germany Third Place Match FIFA 2010 Match ReportRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Tactical LineupDocumento1 paginaNetherlands-Spain Final FIFA 2010 Tactical LineupRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Netherlands Player StatisticsDocumento15 pagineNetherlands-Spain Final FIFA 2010 Netherlands Player StatisticsRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Passing DistributionDocumento1 paginaNetherlands-Spain Final FIFA 2010 Passing DistributionRajarajanNessuna valutazione finora

- Netherlands-Spain Final FIFA 2010 Match ReportDocumento2 pagineNetherlands-Spain Final FIFA 2010 Match ReportRajarajanNessuna valutazione finora

- Uruguay-Germany Third Place Match FIFA 2010 Tactical Line UpDocumento1 paginaUruguay-Germany Third Place Match FIFA 2010 Tactical Line UpRajarajanNessuna valutazione finora

- Uruguay-Germany Third Place Match FIFA 2010 Uruguay Player StatisticsDocumento14 pagineUruguay-Germany Third Place Match FIFA 2010 Uruguay Player StatisticsRajarajanNessuna valutazione finora

- Oil Reaches Louisiana ShoresDocumento36 pagineOil Reaches Louisiana ShoresRajarajanNessuna valutazione finora

- Uruguay-Germany Third Place Match FIFA 2010 Uruguay Player StatisticsDocumento14 pagineUruguay-Germany Third Place Match FIFA 2010 Uruguay Player StatisticsRajarajanNessuna valutazione finora

- CLONING OF Candida Antarctica LIPASE A GENE IN Kluveromyces Lactis EXPRESSION SYSTEMDocumento53 pagineCLONING OF Candida Antarctica LIPASE A GENE IN Kluveromyces Lactis EXPRESSION SYSTEMRajarajanNessuna valutazione finora

- Stories in Photograph - Carnival 2010Documento41 pagineStories in Photograph - Carnival 2010RajarajanNessuna valutazione finora

- LG Mini Split ManualDocumento38 pagineLG Mini Split ManualMark ChaplinNessuna valutazione finora

- Presentasi AkmenDocumento18 paginePresentasi AkmenAnonymous uNgaASNessuna valutazione finora

- Service Manual Aire Central Lg. Ln-C0602sa0 PDFDocumento31 pagineService Manual Aire Central Lg. Ln-C0602sa0 PDFFreddy Enrique Luna MirabalNessuna valutazione finora

- Gram-Charlier para Aproximar DensidadesDocumento10 pagineGram-Charlier para Aproximar DensidadesAlejandro LopezNessuna valutazione finora

- Alignment Cooling Water Pump 4A: Halaman: 1 Dari 1 HalamanDocumento3 pagineAlignment Cooling Water Pump 4A: Halaman: 1 Dari 1 Halamanpemeliharaan.turbin03Nessuna valutazione finora

- Presentacion ISA Graphic Febrero 2015Documento28 paginePresentacion ISA Graphic Febrero 2015Ileana ContrerasNessuna valutazione finora

- Wills and Succession ReviewerDocumento85 pagineWills and Succession ReviewerYoshimata Maki100% (1)

- Converting WSFU To GPMDocumento6 pagineConverting WSFU To GPMDjoko SuprabowoNessuna valutazione finora

- Project ProposalDocumento6 pagineProject Proposalapi-386094460Nessuna valutazione finora

- m100 Stopswitch ManualDocumento12 paginem100 Stopswitch ManualPatrick TiongsonNessuna valutazione finora

- GostDocumento29 pagineGostMoldoveanu Teodor80% (5)

- List of Yale University GraduatesDocumento158 pagineList of Yale University GraduatesWilliam Litynski100% (1)

- 3G Ardzyka Raka R 1910631030065 Assignment 8Documento3 pagine3G Ardzyka Raka R 1910631030065 Assignment 8Raka RamadhanNessuna valutazione finora

- Princeton Review GRE ScheduleDocumento1 paginaPrinceton Review GRE ScheduleNishant PanigrahiNessuna valutazione finora

- PM and Presidential Gov'ts Differ Due to Formal Powers and AppointmentDocumento3 paginePM and Presidential Gov'ts Differ Due to Formal Powers and AppointmentNikeyNessuna valutazione finora

- Introduction To Circuit LabDocumento8 pagineIntroduction To Circuit LabDaudKhanNessuna valutazione finora

- Daily Timecard Entry in HoursDocumento20 pagineDaily Timecard Entry in HoursadnanykhanNessuna valutazione finora

- Ty Btech syllabusIT Revision 2015 19 14june17 - withHSMC - 1 PDFDocumento85 pagineTy Btech syllabusIT Revision 2015 19 14june17 - withHSMC - 1 PDFMusadiqNessuna valutazione finora

- 365) - The Income Tax Rate Is 40%. Additional Expenses Are Estimated As FollowsDocumento3 pagine365) - The Income Tax Rate Is 40%. Additional Expenses Are Estimated As FollowsMihir HareetNessuna valutazione finora

- SG LO2 Apply Fertilizer (GoldTown)Documento2 pagineSG LO2 Apply Fertilizer (GoldTown)Mayiendlesslove WhiteNessuna valutazione finora

- This Study Resource Was: Artur Vartanyan Supply Chain and Operations Management MGMT25000D Tesla Motors, IncDocumento9 pagineThis Study Resource Was: Artur Vartanyan Supply Chain and Operations Management MGMT25000D Tesla Motors, IncNguyễn Như QuỳnhNessuna valutazione finora

- BS Iec 61643-32-2017 - (2020-05-04 - 04-32-37 Am)Documento46 pagineBS Iec 61643-32-2017 - (2020-05-04 - 04-32-37 Am)Shaiful ShazwanNessuna valutazione finora

- Intra Cell HODocumento10 pagineIntra Cell HOMostafa Mohammed EladawyNessuna valutazione finora

- Self Improvement Books To ReadDocumento13 pagineSelf Improvement Books To ReadAnonymous oTtlhP67% (3)

- Lecture 3 - Evolution of Labour Laws in IndiaDocumento13 pagineLecture 3 - Evolution of Labour Laws in IndiaGourav SharmaNessuna valutazione finora

- Tucker Northlake SLUPsDocumento182 pagineTucker Northlake SLUPsZachary HansenNessuna valutazione finora

- Visual Basic Is Initiated by Using The Programs OptionDocumento141 pagineVisual Basic Is Initiated by Using The Programs Optionsaroj786Nessuna valutazione finora

- We BradDocumento528 pagineWe BradBudi SutomoNessuna valutazione finora

- Ecovadis Survey Full 3 07 2019Documento31 pagineEcovadis Survey Full 3 07 2019ruthvikNessuna valutazione finora

- Secure Password-Driven Fingerprint Biometrics AuthenticationDocumento5 pagineSecure Password-Driven Fingerprint Biometrics AuthenticationDorothy ManriqueNessuna valutazione finora