NVIDIA System Information 11-27-2016 16-30-1688

Uploaded by Sai Ruthwick Madas

Nvidia history, how it formed, and its series of graphics cards


This article is about the Nvidia brand name. For other uses, see G force (disambiguation).

GeForce graphics processor

Manufacturer: Nvidia

Introduced: August 31, 1999 (17 years ago)

Type: Consumer graphics cards

GeForce is a brand of graphics processing units (GPUs) designed by Nvidia. As of 2016, there have been thirteen iterations of the design. The first GeForce products were discrete GPUs designed for add-on graphics boards, intended for the high-margin PC gaming market, and later diversification of the product line covered all tiers of the PC graphics market, ranging from cost-sensitive[1] GPUs integrated on motherboards, to mainstream add-in retail boards. Most recently, GeForce technology has been introduced into Nvidia's line of embedded application processors, designed for electronic handhelds and mobile handsets.

With respect to discrete GPUs, found in add-in graphics boards, Nvidia's GeForce and AMD's Radeon GPUs are the only remaining competitors in the high-end market. Along with its nearest competitor, the AMD Radeon, the GeForce architecture is moving toward general-purpose computing on graphics processing units (GPGPU). GPGPU is expected to expand GPU functionality beyond the traditional rasterization of 3D graphics, turning the GPU into a high-performance computing device able to execute arbitrary programming code in the same way a CPU does, but with different strengths (highly parallel execution of straightforward calculations) and weaknesses (worse performance for complex decision-making code).

Contents

1 Name origin

2 License

3 Graphics processor generations

3.1 GeForce 256

3.2 GeForce 2 series

3.3 GeForce 3 series

3.4 GeForce 4 series

3.5 GeForce FX series

3.6 GeForce 6 series

3.7 GeForce 7 series

3.8 GeForce 8 series

3.9 GeForce 9 series and 100 series

3.10 GeForce 200 series and 300 series

3.11 GeForce 400 series and 500 series

3.12 GeForce 600 series, 700 series and 800M series

3.13 GeForce 900 series

3.14 GeForce 10 series

4 Variants

4.1 Mobile GPUs

4.2 Small form factor GPUs

4.3 Integrated desktop motherboard GPUs

5 Nomenclature

6 Graphics device drivers

6.1 Proprietary

6.2 Free and open-source

7 References

8 External links

Name origin

The "GeForce" name originated from a contest held by Nvidia in early 1999 called "Name That Chip". The company called out to the public to name the successor to the RIVA TNT2 line of graphics boards. Over 12,000 entries were received, and 7 winners each received a RIVA TNT2 Ultra graphics card as a reward.[2][3]

License

The license has common terms against reverse engineering, copying and sub-licensing, and it disclaims warranties and liability.[4]

Starting in 2016 the GeForce license says Nvidia "collects... personally identifiable information about Customer and CUSTOMER SYSTEM as well as configures CUSTOMER SYSTEM in order to ... (d) deliver marketing communications."[4] The privacy notice goes on to say, "We are not able to respond to 'Do Not Track' signals set by a browser at this time. We also permit third party online advertising networks and social media companies to collect information... We may combine personal information that we collect about you with the browsing and tracking information collected by these [cookies and beacons] technologies."[5]

The software configures the user's system to optimize its use, and the license says, "NVIDIA will have no responsibility for any damage or loss to CUSTOMER SYSTEM (including loss of data or access) arising from or relating to (y) any changes to the configuration, application settings, environment variables, registry, drivers, BIOS, or other attributes of CUSTOMER SYSTEM (or any part of CUSTOMER SYSTEM) initiated through the SOFTWARE".[4]

Graphics processor generations

GeForce chip integrated on a laptop motherboard.

GeForce 256

Main article: GeForce 256

Launched on August 31, 1999, the GeForce 256 (NV10) was the first consumer-level PC graphics chip with hardware transform, lighting, and shading although 3D games utilizing this feature did not appear until later. Initial GeForce 256 boards shipped with SDR SDRAM memory, and later boards shipped with faster DDR SDRAM memory.

GeForce 2 series

Main article: GeForce 2 series

Launched in April 2000, the first GeForce2 (NV15) was another high-performance graphics chip. Nvidia moved to a twin texture processor per pipeline (4x2) design, doubling texture fillrate per clock compared to GeForce 256. Later, Nvidia released the GeForce2 MX (NV11), which offered performance similar to the GeForce 256 but at a fraction of the cost. The MX was a compelling value in the low/mid-range market segments and was popular with OEM PC manufacturers and users alike. The GeForce 2 Ultra was the high-end model in this series.

GeForce 3 series

Main article: GeForce 3 series

Launched in February 2001, the GeForce3 (NV20) introduced programmable vertex and pixel shaders to the GeForce family and to consumer-level graphics accelerators. It had good overall performance and shader support, making it popular with enthusiasts although it never hit the midrange price point. The NV2A developed for the Microsoft Xbox game console is a derivative of the GeForce 3.

GeForce 4 series

Main article: GeForce 4 series

Launched in February 2002, the then-high-end GeForce4 Ti (NV25) was mostly a refinement to the GeForce3. The biggest advancements included enhancements to anti-aliasing capabilities, an improved memory controller, a second vertex shader, and a manufacturing process size reduction to increase clock speeds. Another member of the GeForce 4 family, the budget GeForce4 MX, was based on the GeForce2, with the addition of some features from the GeForce4 Ti. It targeted the value segment of the market and lacked pixel shaders. Most of these models used the AGP 4× interface, but a few began the transition to AGP 8×.

GeForce FX series

Main article: GeForce FX series

Launched in 2003, the GeForce FX (NV30) was a huge change in architecture compared to its predecessors. The GPU was designed not only to support the new Shader Model 2 specification but also to perform well on older titles. However, initial models like the GeForce FX 5800 Ultra suffered from weak floating point shader performance and excessive heat which required infamously noisy two-slot cooling solutions. Products in this series carry the 5000 model number, as it is the fifth generation of the GeForce, though Nvidia marketed the cards as GeForce FX instead of GeForce 5 to show off "the dawn of cinematic rendering".

GeForce 6 series

Main article: GeForce 6 series

Launched in April 2004, the GeForce 6 (NV40) added Shader Model 3.0 support to the GeForce family, while correcting the weak floating point shader performance of its predecessor. It also implemented high dynamic range imaging and introduced SLI (Scalable Link Interface) and PureVideo capability (integrated partial hardware MPEG-2, VC-1, Windows Media Video, and H.264 decoding and fully accelerated video post-processing).

GeForce 7 series

Main article: GeForce 7 series

The seventh generation GeForce (G70/NV47) was launched in June 2005 and was the last Nvidia video card series that could support the AGP bus. The design was a refined version of GeForce 6, with the major improvements being a widened pipeline and an increase in clock speed. The GeForce 7 also offers new transparency supersampling and transparency multisampling anti-aliasing modes (TSAA and TMAA). These new anti-aliasing modes were later enabled for the GeForce 6 series as well. The GeForce 7950GT featured the highest performance GPU with an AGP interface in the Nvidia line. This era began the transition to the PCI-Express interface.

A 128-bit, 8 ROP variant of the 7950 GT, called the RSX 'Reality Synthesizer', is used as the main GPU in the Sony PlayStation 3.

GeForce 8 series

Main article: GeForce 8 series

Released on November 8, 2006, the eighth-generation GeForce (originally called G80) was the first ever GPU to fully support Direct3D 10. Manufactured using a 90 nm process and built around the new Tesla microarchitecture, it implemented the unified shader model. Initially just the 8800GTX model was launched, while the GTS variant was released months into the product line's life, and it took nearly six months for mid-range and OEM/mainstream cards to be integrated into the 8 series. The die shrink down to 65 nm and a revision to the G80 design, codenamed G92, were implemented into the 8 series with the 8800GS, 8800GT and 8800GTS-512, first released on October 29, 2007, almost one whole year after the initial G80 release.

GeForce 9 series and 100 series

Main articles: GeForce 9 series and GeForce 100 series

The first product was released on February 21, 2008,[6] less than four months after the initial G92 release. All 9-series designs are simply revisions to existing late 8-series products. The 9800GX2 uses two G92 GPUs, as used in later 8800 cards, in a dual PCB configuration while still only requiring a single PCI-Express 16x slot. The 9800GX2 utilizes two separate 256-bit memory buses, one for each GPU and its respective 512 MB of memory, which equates to an overall of 1 GB of memory on the card (although the SLI configuration of the chips necessitates mirroring the frame buffer between the two chips, thus effectively halving the memory performance of a 256-bit/512MB configuration). The later 9800GTX features a single G92 GPU, 256-bit data bus, and 512 MB of GDDR3 memory.[7]
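The capacity arithmetic for the 9800GX2 can be sketched in a few lines of Python. This is only an illustration of the figures quoted above (two GPUs with 512 MB each). The function name is hypothetical, and the rule that mirroring leaves one GPU's worth of usable memory is an assumption about how a mirrored frame buffer is usually counted, not a statement from Nvidia.

```python
def dual_gpu_memory(per_gpu_mb: int, gpu_count: int, mirrored: bool) -> dict:
    """Return installed and usable memory (in MB) for a multi-GPU card.

    Assumption: when the frame buffer is mirrored, every GPU keeps its own
    copy of the same data, so the usable pool equals one GPU's memory.
    """
    installed = per_gpu_mb * gpu_count
    usable = per_gpu_mb if mirrored else installed
    return {"installed_mb": installed, "usable_mb": usable}


# Figures from the text: two G92 GPUs, 512 MB each, mirrored in SLI.
card = dual_gpu_memory(per_gpu_mb=512, gpu_count=2, mirrored=True)
print(card)
```

Under these assumptions the card carries 1024 MB in total, while an application sees a pool the size of a single GPU's 512 MB.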

Prior to the release, little concrete information was known: officials claimed that the next-generation products would have close to 1 TFLOPS of processing power with the GPU cores still being manufactured on the 65 nm process, and reports indicated that Nvidia was downplaying the significance of Direct3D 10.1.[8] In March 2009, several sources reported that Nvidia had quietly launched a new series of GeForce products, namely the GeForce 100 Series, which consists of rebadged 9 Series parts.[9][10][11] GeForce 100 series products were not available for individual purchase.[1]

GeForce 200 series and 300 series

Main articles: GeForce 200 series and GeForce 300 series

Based on the GT200 graphics processor consisting of 1.4 billion transistors, codenamed Tesla, the 200 series was launched on June 16, 2008.[12] This generation takes the card-naming scheme in a new direction, replacing the series number (such as 8800 for 8-series cards) with the GTX or GTS suffix (which used to go at the end of card names, denoting their 'rank' among other similar models), and then adding model numbers such as 260 and 280 after that. The series features the new GT200 core on a 65 nm die.[13] The first products were the GeForce GTX 260 and the more expensive GeForce GTX 280.[14] The GeForce 310, a rebrand of the GeForce 210, was released on November 27, 2009.[15][16] The 300 series cards are rebranded DirectX 10.1 compatible GPUs from the 200 series, which were not available for individual purchase.

GeForce 400 series and 500 series

Main articles: GeForce 400 series and GeForce 500 series

On April 7, 2010, Nvidia released[17] the GeForce GTX 470 and GTX 480, the first cards based on the new Fermi architecture, codenamed GF100; they were the first Nvidia GPUs to utilize 1 GB or more of GDDR5 memory. The GTX 470 and GTX 480 were heavily criticized due to high power use, high temperatures, and very loud noise that were not balanced by the performance offered, even though the GTX 480 was the fastest DirectX 11 card as of its introduction.

In November 2010, Nvidia released a new flagship GPU based on an enhanced GF100 architecture (GF110), called the GTX 580, that featured higher performance, less power utilization, less heat, and less noise than the GTX 480. This GPU received much better reviews than the GTX 480. Nvidia later also released the GTX 590, which packs two GF110 GPUs on a single card.

GeForce 600 series, 700 series and 800M series

Main articles: GeForce 600 series, GeForce 700 series, and GeForce 800M series

Asus Nvidia GeForce GTX 650 Ti, a PCI Express 3.0 ×16 graphics card

In September 2010, Nvidia announced that the successor to the Fermi microarchitecture would be the Kepler microarchitecture, manufactured with the TSMC 28 nm fabrication process. Earlier, Nvidia had been contracted to supply their top-end GK110 cores for use in Oak Ridge National Laboratory's "Titan" supercomputer, leading to a shortage of GK110 cores. After AMD launched their own annual refresh in early 2012, the Radeon HD 7000 series, Nvidia began the release of the GeForce 600 series in March 2012. The GK104 core, originally intended for the mid-range segment of their lineup, became the flagship GTX 680. It introduced significant improvements in performance, heat, and power efficiency compared to the Fermi architecture and closely matched AMD's flagship Radeon HD 7970. It was quickly followed by the dual-GK104 GTX 690 and the GTX 670, which featured only a slightly cut-down GK104 core and was very close in performance to the GTX 680.

With the GTX TITAN, Nvidia also released GPU Boost 2.0, which would allow the GPU clock speed to increase indefinitely until a user-set temperature limit was reached without passing a user-specified maximum fan speed. The final GeForce 600 series release was the GTX 650 Ti BOOST based on the GK106 core, in response to AMD's Radeon HD 7790 release. At the end of May 2013, Nvidia announced the 700 series, which was still based on the Kepler architecture; however, it featured a GK110-based card at the top of the lineup. The GTX 780 was a slightly cut-down TITAN that achieved nearly the same performance for two-thirds of the price. It featured the same advanced reference cooler design, but did not have the unlocked double-precision cores and was equipped with 3 GB of memory.

At the same time, Nvidia announced ShadowPlay, a screen capture solution that used an integrated H.264 encoder built into the Kepler architecture that Nvidia had not revealed previously. It could be used to record gameplay without a capture card, and with negligible performance decrease compared to software recording solutions, and was available even on the previous generation GeForce 600 series cards. The software beta for ShadowPlay, however, experienced multiple delays and would not be released until the end of October 2013. A week after the release of the GTX 780, Nvidia announced the GTX 770 to be a rebrand of the GTX 680. It was followed by the GTX 760 shortly after, which was also based on the GK104 core and similar to the GTX 660 Ti. No more 700 series cards were set for release in 2013, although Nvidia announced G-Sync, another feature of the Kepler architecture that Nvidia had left unmentioned, which allowed the GPU to dynamically control the refresh rate of G-Sync-compatible monitors which would release in 2014, to combat tearing and judder. However, in October, AMD released the R9 290X, which came in at $100 less than the GTX 780. In response, Nvidia slashed the price of the GTX 780 by $150 and released the GTX 780 Ti, which featured a full 2880-core GK110 core even more powerful than the GTX TITAN, along with enhancements to the power delivery system which improved overclocking, and managed to pull ahead of AMD's new release.

The GeForce 800M series consists of rebranded 700M series parts based on the Kepler architecture and some lower-end parts based on the newer Maxwell architecture.

GeForce 900 series

Main article: GeForce 900 series

In March 2013, Nvidia announced that the successor to Kepler would be the Maxwell microarchitecture.[18] It was released in 2014.

GeForce 10 series

Main article: GeForce 10 series

In March 2014, Nvidia announced that the successor to Maxwell would be the Pascal microarchitecture. The GeForce 10 series was announced on May 6, 2016 and released on May 27, 2016. Architectural improvements include the following:[19][20]

Streaming multiprocessors: In Pascal, an SM (streaming multiprocessor) consists of 64 CUDA cores, a number identical to AMD's GCN CU (compute unit). Maxwell packed 128, Kepler 192, Fermi 32 and Tesla only 8 CUDA cores into an SM. The GP100 SM is partitioned into two processing blocks, each having 32 single-precision CUDA cores, an instruction buffer, a warp scheduler, 2 texture mapping units and 2 dispatch units.

GDDR5X: A new memory standard supporting 10 Gbit/s data rates and an updated memory controller. Only the Nvidia Titan X and GTX 1080 support GDDR5X. The GTX 1070, GTX 1060, GTX 1050 Ti, and GTX 1050 use GDDR5.[21]

Unified memory: A memory architecture where the CPU and GPU can access both main system memory and memory on the graphics card with the help of a technology called "Page Migration Engine".

NVLink: A high-bandwidth bus between the CPU and GPU, and between multiple GPUs. It allows much higher transfer speeds than those achievable by using PCI Express; estimated to provide between 80 and 200 GB/s.[22][23]

Floating-point rates: 16-bit (FP16) floating-point operations can be executed at twice the rate of 32-bit floating-point operations ("single precision"),[24] and 64-bit floating-point operations ("double precision") are executed at half the rate of 32-bit floating-point operations (Maxwell: 1/32 rate).[25]
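The per-SM core counts and the precision ratios listed above lend themselves to a small worked example. The sketch below uses only figures quoted in this article; the dictionary and function names are illustrative, and the ratios are taken as stated here, so they may not hold for every chip of a given architecture.

```python
# CUDA cores per streaming multiprocessor (SM), as listed in the text.
CORES_PER_SM = {
    "Tesla": 8,
    "Fermi": 32,
    "Kepler": 192,
    "Maxwell": 128,
    "Pascal": 64,
}

# Throughput relative to FP32. Only ratios stated in the text are included.
RATE_VS_FP32 = {
    ("Pascal", "fp16"): 2.0,
    ("Pascal", "fp64"): 0.5,
    ("Maxwell", "fp64"): 1 / 32,
}


def sm_count(total_cores: int, architecture: str) -> int:
    """Number of SMs implied by a total CUDA core count."""
    per_sm = CORES_PER_SM[architecture]
    if total_cores % per_sm != 0:
        raise ValueError("core count is not a multiple of the cores per SM")
    return total_cores // per_sm


def relative_throughput(fp32_rate: float, architecture: str, precision: str) -> float:
    """Scale an FP32 rate (any unit) to another precision."""
    if precision == "fp32":
        return fp32_rate
    return fp32_rate * RATE_VS_FP32[(architecture, precision)]


# The full 2880-core GK110 mentioned for the GTX 780 Ti is a Kepler part.
print(sm_count(2880, "Kepler"))
print(relative_throughput(10.0, "Pascal", "fp64"))
```

With 192 cores per Kepler SM, the 2880-core GK110 works out to 15 SMs, and a hypothetical Pascal FP32 rate of 10 units corresponds to 5 units of FP64 under the stated half-rate ratio.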

Variants

Mobile GPUs

Since the GeForce2, Nvidia has produced a number of graphics chipsets for notebook computers under the GeForce Go branding. Most of the features present in the desktop counterparts are present in the mobile ones. These GPUs are generally optimized for lower power consumption and less heat output in order to be used in notebook PCs and small desktops.

Beginning with the GeForce 8 series, the GeForce Go brand was discontinued and the mobile GPUs were integrated with the main line of GeForce GPUs, but with their names suffixed with an M. This ended in 2016 with the launch of the laptop GeForce 10 series: Nvidia dropped the M suffix, opting to unify the branding between their desktop and laptop GPU offerings, as notebook Pascal GPUs are almost as powerful as their desktop counterparts (something Nvidia tested with their "desktop-class" notebook GTX 980 GPU back in 2015).[26]

Small form factor GPUs

Similar to the mobile GPUs, Nvidia also released a few GPUs in "small form factor" format, for use in all-in-one desktops. These GPUs are suffixed with an S, similar to the M used for mobile products.[27]

Integrated desktop motherboard GPUs

Beginning with the nForce 4, Nvidia started including onboard graphics solutions in their motherboard chipsets. These onboard graphics solutions were called mGPUs (motherboard GPUs).[28] Nvidia discontinued the nForce range, including these mGPUs, in 2009.[citation needed]

After the nForce range was discontinued, Nvidia released their Ion line in 2009, which consisted of an Intel Atom CPU partnered with a low-end GeForce 9 series GPU, fixed on the motherboard. Nvidia released an upgraded Ion 2 in 2010, this time containing a low-end GeForce 300 series GPU.

Nomenclature


From the GeForce 4 series until the GeForce 9 series, the naming scheme below is used.

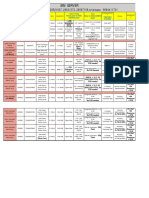

| Category | Number range | Suffix[a] | Price range[b] (USD) | Shader amount[c] | Memory type | Memory bus width | Memory size | Example products |
|---|---|---|---|---|---|---|---|---|
| Entry-level graphics cards | 000–550 | SE, LE, No suffix, GS, GT, Ultra | <$100 | <25% | DDR, DDR2 | 25–50% | ~25% | GeForce 9400GT, GeForce 9500GT |
| Mid-range graphics cards | 600–750 | VE, LE, XT, No suffix, GS, GSO, GT, GTS, Ultra | $100–175 | 25–50% | DDR2, GDDR3 | 50–75% | 50–75% | GeForce 9600GT, GeForce 9600GSO |
| High-end graphics cards | 800–950 | VE, LE, ZT, XT, No suffix, GS, GSO, GT, GTO, GTS, GTX, GTX+, Ultra, Ultra Extreme, GX2 | >$175 | 50–100% | GDDR3 | 75–100% | 50–100% | GeForce 9800GT, GeForce 9800GTX |
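The number ranges in the table above can be read as a simple classification rule. The following sketch assumes that the "number range" refers to the last three digits of the four-digit model number (so 9600 falls in the 600–750 band); that reading and the function name are assumptions for illustration, not Nvidia's definition.

```python
def classify_legacy_model(model_number: int) -> str:
    """Map a GeForce 4 to GeForce 9 era model number to a market tier.

    Assumption: the tier is decided by the last three digits of the number.
    Values that fall between the listed ranges are reported as unclassified.
    """
    last_three = model_number % 1000
    if 0 <= last_three <= 550:
        return "entry-level"
    if 600 <= last_three <= 750:
        return "mid-range"
    if 800 <= last_three <= 950:
        return "high-end"
    return "unclassified"


# Example products named in the table.
for number in (9400, 9500, 9600, 9800):
    print(number, classify_legacy_model(number))
```

The suffix (GT, GTX, and so on) further orders cards within a tier and is deliberately left out of this sketch.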

Since the release of the GeForce 100 series of GPUs, Nvidia changed their product naming scheme to the one below.[1]

| Category | Prefix | Number range (last 2 digits) | Price range[b] (USD) | Shader amount[c] | Memory type | Memory bus width | Memory size | Example products |
|---|---|---|---|---|---|---|---|---|
| Entry-level graphics cards | No prefix, G, GT | 00–45 | <$100 | <25% | DDR2, GDDR3, GDDR5 | 25–50% | ~25% | GeForce G100, GeForce GT 610 |
| Mid-range graphics cards | GTS, GTX | 50–65 | $100–300 | 25–50% | GDDR3, GDDR5 | 50–75% | 50–100% | GeForce GTS 450, GeForce GTX 660 |
| High-end graphics cards | GTX | 70–95 | >$300 | 50–100% | GDDR5, GDDR5X | 75–100% | 75–100% | GeForce GTX 970, GeForce GTX 980 Ti, GeForce GTX 1080, GeForce GTX Titan X (Pascal) |

[a] Suffixes indicate the performance layer, and those listed are in order from weakest to most powerful. Suffixes from lesser categories can still be used on higher performance cards, for example: GeForce 8800 GT.

[b] Price range only applies to the most recent generation and is a generalization based on pricing patterns.

[c] Shader amount compares the number of shader pipelines or units in that particular model range to the highest model possible in the generation.

Earlier cards such as the GeForce4 follow a similar pattern.

cf. Nvidia's Performance Graph here.
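For the naming scheme used since the GeForce 100 series, the tier follows from the last two digits of the model number. A minimal sketch, assuming the product name contains a three- or four-digit model number; the function name and the parsing approach are illustrative only.

```python
import re


def classify_model(name: str) -> str:
    """Map a post-GeForce-100 product name to a market tier.

    The tier is decided by the last two digits of the model number,
    following the ranges in the table (00-45, 50-65, 70-95).
    Names without a model number (for example a Titan) are unclassified.
    """
    match = re.search(r"\d{3,4}", name)
    if match is None:
        return "unclassified"
    last_two = int(match.group(0)) % 100
    if 0 <= last_two <= 45:
        return "entry-level"
    if 50 <= last_two <= 65:
        return "mid-range"
    if 70 <= last_two <= 95:
        return "high-end"
    return "unclassified"


# Example products named in the table.
for product in ("GeForce G100", "GeForce GT 610", "GeForce GTS 450",
                "GeForce GTX 660", "GeForce GTX 980 Ti", "GeForce GTX 1080"):
    print(product, "->", classify_model(product))
```

Flagship parts without a number, such as the GeForce GTX Titan X, sit outside this rule and are returned as unclassified rather than guessed.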

Graphics device drivers

Proprietary

Nvidia develops and publishes GeForce drivers for Windows XP x86/x86-64 and later, Linux x86/x86-64/ARMv7-A, OS X 10.5 and later, Solaris x86/x86-64 and FreeBSD x86/x86-64. A current version can be downloaded from Nvidia and most Linux distributions contain it in their own repositories. Nvidia GeForce driver 340.24 from 8 July 2014 supports the EGL interface enabling support for Wayland in conjunction with this driver.[29][30] This may be different for the Nvidia Quadro brand, which is based on identical hardware but features OpenGL-certified graphics device drivers.

Basic support for the DRM mode-setting interface in the form of a new kernel module named nvidia-modeset.ko has been available since version 358.09 beta.[31] Support for Nvidia's display controller on the supported GPUs is centralized in nvidia-modeset.ko. Traditional display interactions (X11 modesets, OpenGL SwapBuffers, VDPAU presentation, SLI, stereo, framelock, G-Sync, etc.) initiate from the various user-mode driver components and flow to nvidia-modeset.ko.[32]

On the same day the Vulkan graphics API was publicly released, Nvidia released drivers that fully supported it.[33]

Legacy drivers:[34]

GeForce driver 71.x provides support for RIVA TNT, RIVA TNT2, GeForce 256 and GeForce 2 series

GeForce driver 96.x provides support for GeForce 2 series, GeForce 3 series and GeForce 4 series

GeForce driver 173.x provides support for GeForce FX series

GeForce driver 304.x provides support for GeForce 6 series and GeForce 7 series

GeForce driver 340.x provides support for Tesla 1 and 2-based GPUs, i.e. GeForce 8 series to GeForce 300 series

Usually a legacy driver does feature support for newer GPUs as well, but since newer GPUs are supported by newer GeForce driver numbers which regularly provide more features and better support, the end-user is encouraged to always use the highest possible driver number.

Current driver:

The latest GeForce driver provides support for Fermi-, Kepler-, Maxwell- and Pascal-based GPUs, though Vulkan support has not been made available for Fermi.
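The legacy driver list above amounts to a lookup from GPU series to driver branch, combined with the advice to prefer the highest branch number that still supports the card. A minimal sketch of that lookup follows. The data structure and function names are hypothetical, and expanding "GeForce 8 series to GeForce 300 series" into the 8, 9, 100, 200 and 300 series is this sketch's reading of that range.

```python
from typing import Optional

# Legacy driver branches and the series each supports, as listed in the text.
LEGACY_BRANCHES = {
    "71.x": ["RIVA TNT", "RIVA TNT2", "GeForce 256", "GeForce 2"],
    "96.x": ["GeForce 2", "GeForce 3", "GeForce 4"],
    "173.x": ["GeForce FX"],
    "304.x": ["GeForce 6", "GeForce 7"],
    # Assumed expansion of "GeForce 8 series to GeForce 300 series".
    "340.x": ["GeForce 8", "GeForce 9", "GeForce 100",
              "GeForce 200", "GeForce 300"],
}


def branch_major(branch: str) -> int:
    """Numeric part of a branch label such as '340.x'."""
    return int(branch.split(".")[0])


def best_legacy_branch(series: str) -> Optional[str]:
    """Highest legacy branch that lists the series, or None if not listed."""
    candidates = [branch for branch, supported in LEGACY_BRANCHES.items()
                  if series in supported]
    if not candidates:
        return None
    return max(candidates, key=branch_major)


for name in ("GeForce 2", "GeForce FX", "GeForce 10"):
    print(name, "->", best_legacy_branch(name))
```

The GeForce 2 series appears in both the 71.x and 96.x lists, so the lookup returns 96.x. A series absent from every legacy list returns None, which here simply means the legacy table does not cover it.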

Free and open-source

Main article: Free and open-source "Nouveau" graphics device driver

Community-created, free and open-source drivers exist as an alternative to the drivers released by Nvidia. Open-source drivers are developed primarily for Linux; however, there may be ports to other operating systems. The most prominent alternative driver is the reverse-engineered free and open-source nouveau graphics device driver. Nvidia has publicly announced that it will not provide any support for such additional device drivers for their products,[35] although Nvidia has contributed code to the Nouveau driver.[36]

Free and open-source drivers support a large portion (but not all) of the features available in GeForce-branded cards. For example, as of January 2014 nouveau driver lacks support for the GPU and memory clock frequency adjustments, and for associated dynamic power management.[37] Also, Nvidia's proprietary drivers consistently perform better than nouveau in various benchmarks.[38] However, as of August 2014 and version 3.16 of the Linux kernel mainline, contributions by Nvidia allowed partial support for GPU and memory clock frequency adjustments to be implemented.[citation needed]

References

[1] "GeForce Graphics Cards". Nvidia. Retrieved July 7, 2012.

[2] "Winners of the Nvidia Naming Contest". Nvidia. 1999. Archived from the original on June 8, 2000. Retrieved May 28, 2007.

[3] Taken, Femme (April 17, 1999). "Nvidia "Name that chip" contest". Tweakers.net. Retrieved May 28, 2007.

[4] Empty citation.

[5] "NVIDIA Privacy Policy/Your California Privacy Rights". 2016-06-15.

[6] Brian Caulfield (January 7, 2008). "Shoot to Kill". Forbes.com. Retrieved December 26, 2007.

[7] "NVIDIA GeForce 9800 GTX". Retrieved May 31, 2008.

[8] DailyTech report: Crytek, Microsoft and Nvidia downplay Direct3D 10.1, retrieved December 4, 2007.

[9] "Nvidia quietly launches GeForce 100-series GPUs". April 6, 2009.

[10] "nVidia Launches GeForce 100 Series Cards". March 10, 2009.

[11] "Nvidia quietly launches GeForce 100-series GPUs". March 24, 2009.

[12] "NVIDIA GeForce GTX 280 Video Card Review". Benchmark Reviews. June 16, 2008. Retrieved June 16, 2008.

[13] "GeForce GTX 280 to launch on June 18th". Fudzilla.com. Archived from the original on May 17, 2008. Retrieved May 18, 2008.

[14] "Detailed GeForce GTX 280 Pictures". VR-Zone. June 3, 2008. Retrieved June 3, 2008.

[15] "News :: NVIDIA kicks off GeForce 300-series range with GeForce 310 : Page 1/1". Hexus.net. 2009-11-27. Retrieved 2013-06-30.

[16] "Every PC needs good graphics". Nvidia. Retrieved 2013-06-30.

[17] "Update: NVIDIA's GeForce GTX 400 Series Shows Up Early AnandTech :: Your Source for Hardware Analysis and News". Anandtech.com. Retrieved 2013-06-30.

[18] Smith, Ryan (March 19, 2013). "NVIDIA Updates GPU Roadmap; Announces Volta Family For Beyond 2014". AnandTech. Retrieved March 19, 2013.

[19] Gupta, Sumit (2014-03-21). "NVIDIA Updates GPU Roadmap; Announces Pascal". Blogs.nvidia.com. Retrieved 2014-03-25.

[20] "Parallel Forall". NVIDIA Developer Zone. Devblogs.nvidia.com. Retrieved 2014-03-25.

[21] http://www.geforce.com/hardware/10series

[22] "Inside Pascal: NVIDIA's Newest Computing Platform". 2016-04-05.

[23] Denis Foley (2014-03-25). "NVLink, Pascal and Stacked Memory: Feeding the Appetite for Big Data". nvidia.com. Retrieved 2014-07-07.

[24] "NVIDIA's Next-Gen Pascal GPU Architecture to Provide upto 10X Speedup for Deep Learning Apps". The Official NVIDIA Blog. Retrieved 23 March 2015.

[25] Smith, Ryan (2015-03-17). "The NVIDIA GeForce GTX Titan X Review". AnandTech. p. 2. Retrieved 2016-04-22. "...puny native FP64 rate of just 1/32"

[26] "GeForce GTX 10-Series Notebooks". Retrieved 2016-10-23.

[27] "NVIDIA Small Form Factor". Nvidia. Retrieved 2014-02-03.

[28] "NVIDIA Motherboard GPUs". Nvidia. Retrieved 2010-03-22.

[29] "Support for EGL". 2014-07-08. Retrieved 2014-07-08.

[30] "lib32-nvidia-utils 340.24-1 File List". 2014-07-15.

[31] "Linux, Solaris, and FreeBSD driver 358.09 (beta)". 2015-12-10.

[32] "NVIDIA 364.12 release: Vulkan, GLVND, DRM KMS, and EGLStreams". 2016-03-21.

[33] "Nvidia: Vulkan support in Windows driver version 356.39 and Linux driver version 355.00.26". 2016-02-16.

[34] "What's a legacy driver?". Nvidia.

[35] "Nvidia's Response To Recent Nouveau Work". Phoronix. 2009-12-14.

[36] Larabel, Michael (2014-07-11). "NVIDIA Contributes Re-Clocking Code To Nouveau For The GK20A". Phoronix. Retrieved 2014-09-09.

[37] "Nouveau 3.14 Gets New Acceleration, Still Lacking PM". Phoronix. 2014-01-23. Retrieved 2014-07-25.

[38] "Benchmarking Nouveau and Nvidia's proprietary GeForce driver on Linux". Phoronix. 2014-07-28.

External links

GeForce product page on Nvidia's website

GeForce powered games on Nvidia's website

techPowerUp! GPU Database


This page was last modified on 27 February 2017, at 09:10.

Text is available under the Creative Commons Attribution-ShareAlike License; additional terms may apply. By using this site, you agree to the Terms of Use and Privacy Policy. Wikipedia is a registered trademark of t


- Graphics Card Definition and FunctionDocumento3 pagineGraphics Card Definition and FunctionAlex DedalusNessuna valutazione finora

- CUDA WikipediaDocumento10 pagineCUDA WikipediaMuktikanta SahuNessuna valutazione finora

- Mingpu: A Minimum Gpu Library For Computer Vision: Pavel Babenko and Mubarak ShahDocumento30 pagineMingpu: A Minimum Gpu Library For Computer Vision: Pavel Babenko and Mubarak ShahEsubalew Tamirat BekeleNessuna valutazione finora

- Gainward Geforce GTX 970 Phantom 4Gb: Next Generation Nvidia MaxwellDocumento2 pagineGainward Geforce GTX 970 Phantom 4Gb: Next Generation Nvidia MaxwellGiuseppe OspinaNessuna valutazione finora

- GTX295 PS Us 106Documento2 pagineGTX295 PS Us 106Juan BarehandsNessuna valutazione finora

- gtgpu1Documento15 paginegtgpu1Raunak JhaNessuna valutazione finora

- MSI Afterburner v2.3.1Documento16 pagineMSI Afterburner v2.3.1scribd3991Nessuna valutazione finora

- Gpu IEEE PaperDocumento14 pagineGpu IEEE PaperRahul SharmaNessuna valutazione finora

- p00454 Datasheet 11584d2e9694dad60Documento2 paginep00454 Datasheet 11584d2e9694dad60_bartek_Nessuna valutazione finora

- Nvidia Geforce GTX 460Documento3 pagineNvidia Geforce GTX 460kanamasterNessuna valutazione finora

- Pitching TEMDocumento14 paginePitching TEMnumerianocanabeNessuna valutazione finora

- GPU Seminar Report on Graphics Processing UnitDocumento25 pagineGPU Seminar Report on Graphics Processing UnitPrince PatelNessuna valutazione finora

- MSI GE65 Raider (Intel® 9th Gen) (GeForce® GTX 1660 Ti)Documento4 pagineMSI GE65 Raider (Intel® 9th Gen) (GeForce® GTX 1660 Ti)Daniel CamposNessuna valutazione finora

- p00935 Datasheet 1721538440f6b97c9Documento1 paginap00935 Datasheet 1721538440f6b97c9BudiNessuna valutazione finora

- Centro de Soporte Técnico - Blackmagic DesignDocumento2 pagineCentro de Soporte Técnico - Blackmagic DesignluzdeblueNessuna valutazione finora

- List of Nvidia Graphics Processing Units - WikipedDocumento53 pagineList of Nvidia Graphics Processing Units - WikipedIman Teguh PNessuna valutazione finora

- GTX 580 1536mb gddr5 Brochure PDFDocumento2 pagineGTX 580 1536mb gddr5 Brochure PDFKristian MarkočNessuna valutazione finora

- A TiDocumento19 pagineA TiMuhammad Hafiz SalehudinNessuna valutazione finora

- OptiX Release Notes 3.7.0Documento4 pagineOptiX Release Notes 3.7.0bigwhaledorkNessuna valutazione finora

- p00410 Datasheet 4914c89ea80b9f65Documento2 paginep00410 Datasheet 4914c89ea80b9f65Dusan RadosavljevicNessuna valutazione finora

- Whitepaper NVIDIA's Next Generation CUDA Compute ArchitectureDocumento21 pagineWhitepaper NVIDIA's Next Generation CUDA Compute ArchitectureCentrale3DNessuna valutazione finora

- NV Po Gef7 Mar06Documento2 pagineNV Po Gef7 Mar06Lê Nguyên TríNessuna valutazione finora

- Gainward 9500GT 1024MB TV DVIDocumento2 pagineGainward 9500GT 1024MB TV DVICodrut ChiritaNessuna valutazione finora

- NVIDIA Quadro Vs GeForce Graphic CardsDocumento18 pagineNVIDIA Quadro Vs GeForce Graphic CardsHectorVasquezLara100% (1)

- HP Z Workstations - Family Data SheetDocumento7 pagineHP Z Workstations - Family Data SheetahmedNessuna valutazione finora

- IdeaPad Gaming 3 15ACH6 SpecDocumento8 pagineIdeaPad Gaming 3 15ACH6 SpecaashnathfamilyNessuna valutazione finora

- HP Z Workstations: Quick Reference GuideDocumento7 pagineHP Z Workstations: Quick Reference Guidemksamy2021Nessuna valutazione finora

- HGG 26 LDocumento1 paginaHGG 26 Lxisto9296Nessuna valutazione finora

- CpumagDocumento88 pagineCpumagTerranceNessuna valutazione finora

- Good Studies About How To Get Not To Get Even ThoseDocumento7 pagineGood Studies About How To Get Not To Get Even ThoseAicha OudNessuna valutazione finora

- Read MeDocumento35 pagineRead MeuserNessuna valutazione finora

- Service Source: Updated: 18 November 2003Documento118 pagineService Source: Updated: 18 November 2003Don HutchinsonNessuna valutazione finora

- RTT System Requirements DG DP DTDocumento1 paginaRTT System Requirements DG DP DTwwwengNessuna valutazione finora

- Galaxy GeForce GTS 450 Super OCDocumento13 pagineGalaxy GeForce GTS 450 Super OCsarthaksahniNessuna valutazione finora

- Apple Manual For Powermac g5Documento114 pagineApple Manual For Powermac g5tingo99Nessuna valutazione finora

- Wii Architecture: Architecture of Consoles: A Practical Analysis, #11Da EverandWii Architecture: Architecture of Consoles: A Practical Analysis, #11Nessuna valutazione finora

- Dreamcast Architecture: Architecture of Consoles: A Practical Analysis, #9Da EverandDreamcast Architecture: Architecture of Consoles: A Practical Analysis, #9Nessuna valutazione finora

- Wii U Architecture: Architecture of Consoles: A Practical Analysis, #21Da EverandWii U Architecture: Architecture of Consoles: A Practical Analysis, #21Nessuna valutazione finora

- Mega Drive Architecture: Architecture of Consoles: A Practical Analysis, #3Da EverandMega Drive Architecture: Architecture of Consoles: A Practical Analysis, #3Nessuna valutazione finora

- Master System Architecture: Architecture of Consoles: A Practical Analysis, #15Da EverandMaster System Architecture: Architecture of Consoles: A Practical Analysis, #15Nessuna valutazione finora

- Salary CertificateDocumento1 paginaSalary CertificateSai Ruthwick MadasNessuna valutazione finora

- ORDocumento174 pagineORrockstaraliNessuna valutazione finora

- 3 2 MECH R13 SyllabusDocumento12 pagine3 2 MECH R13 SyllabusKarthikKaruNessuna valutazione finora

- NVIDIA System Information 08-18-2014 18-05-03Documento1 paginaNVIDIA System Information 08-18-2014 18-05-03Shubhankar BiswasNessuna valutazione finora

- Mace - A Blueprint For Modern Infrastructure DeliveryDocumento17 pagineMace - A Blueprint For Modern Infrastructure DeliveryPurna Chowdary VootlaNessuna valutazione finora

- List DevicesDocumento33 pagineList DevicesAdam RobinsonNessuna valutazione finora

- X Plane 12 Book 1 1 - FinalDocumento200 pagineX Plane 12 Book 1 1 - FinalrobertNessuna valutazione finora

- NVDA Investor PresentationDocumento39 pagineNVDA Investor PresentationZerohedgeNessuna valutazione finora

- Video CardsDocumento17 pagineVideo CardsAlejo SpenaNessuna valutazione finora

- GPU Accelerator Capabilities : Release 19.2Documento3 pagineGPU Accelerator Capabilities : Release 19.2Paul MuresanNessuna valutazione finora

- ServerDocumento8 pagineServerSubramanyam IbsNessuna valutazione finora

- Daftar Harga VGA Card Di JakartaDocumento40 pagineDaftar Harga VGA Card Di Jakartalisar.yoeNessuna valutazione finora

- Lastexception 63738573352Documento5 pagineLastexception 63738573352Jennifer GonzálezNessuna valutazione finora

- Gigapixel AI For Video Help DocumentDocumento6 pagineGigapixel AI For Video Help DocumentEmmanuel TovarNessuna valutazione finora

- AI Boom Has Turbocharged Nvidias Fortunes Can It Hold Its PositionDocumento3 pagineAI Boom Has Turbocharged Nvidias Fortunes Can It Hold Its PositionCleo XueNessuna valutazione finora

- Amazon EC2 FAQsDocumento89 pagineAmazon EC2 FAQsasassaNessuna valutazione finora

- HistoryDocumento36 pagineHistoryLucas SousaNessuna valutazione finora

- Blitz-Logs 20230528092056Documento56 pagineBlitz-Logs 20230528092056Tiberiu IulianNessuna valutazione finora

- Lastexception 63823292936Documento13 pagineLastexception 63823292936Swag GamesNessuna valutazione finora

- File list for Win81 Driver CDDocumento45 pagineFile list for Win81 Driver CDGeorgiana Cîmpan0% (1)

- Cuda PDFDocumento18 pagineCuda PDFXemenNessuna valutazione finora

- 2016-07-08 01.00.20 GraphicsDocumento3 pagine2016-07-08 01.00.20 GraphicsgrodeslinNessuna valutazione finora

- Eyal Enav - Metropolis - Smater Cities With Vision AIDocumento33 pagineEyal Enav - Metropolis - Smater Cities With Vision AIatif_aman123Nessuna valutazione finora

- How To Build A Computer DocumentDocumento30 pagineHow To Build A Computer Documentapi-253599832Nessuna valutazione finora

- PCWorld - December 2021Documento122 paginePCWorld - December 2021BushavaAzbukaNessuna valutazione finora

- Grid Vgpu Release Notes Red Hat El KVMDocumento41 pagineGrid Vgpu Release Notes Red Hat El KVMRainaNessuna valutazione finora

- Pny Geforce RTX 4090 24gb TF BDocumento1 paginaPny Geforce RTX 4090 24gb TF BAshu GuptaNessuna valutazione finora

- UE3 Auto Report Ini Dump 0001Documento256 pagineUE3 Auto Report Ini Dump 0001Dito ArdantoNessuna valutazione finora

- Agisoft PhotoScan User ManualDocumento103 pagineAgisoft PhotoScan User ManualjuanNessuna valutazione finora

- List DevicesDocumento33 pagineList DevicesDaniel Aparecido Lopes BonfimNessuna valutazione finora

- Proviz Print Nvidia T600 Datasheet Us Nvidia 1670029 r5 WebDocumento1 paginaProviz Print Nvidia T600 Datasheet Us Nvidia 1670029 r5 WebLohRyderNessuna valutazione finora