Documenti di Didattica

Documenti di Professioni

Documenti di Cultura

Future Generation Computer Systems: Humphrey Waita Njogu Luo Jiawei Jane Nduta Kiere Damien Hanyurwimfura

Caricato da

Agus SevtianaTitolo originale

Copyright

Formati disponibili

Condividi questo documento

Condividi o incorpora il documento

Hai trovato utile questo documento?

Questo contenuto è inappropriato?

Segnala questo documentoCopyright:

Formati disponibili

Future Generation Computer Systems: Humphrey Waita Njogu Luo Jiawei Jane Nduta Kiere Damien Hanyurwimfura

Caricato da

Agus SevtianaCopyright:

Formati disponibili

Future Generation Computer Systems 29 (2013) 2745

Contents lists available at SciVerse ScienceDirect

Future Generation Computer Systems

journal homepage: www.elsevier.com/locate/fgcs

A comprehensive vulnerability based alert management approach for

large networks

Humphrey Waita Njogu , Luo Jiawei , Jane Nduta Kiere, Damien Hanyurwimfura

College of Information Science and Engineering, Hunan University, Changsha, Hunan, China

article

info

Article history:

Received 1 April 2011

Received in revised form

30 March 2012

Accepted 7 April 2012

Available online 25 April 2012

Keywords:

Alert management

Alert verification

Vulnerability database

Alert correlation

Intrusion detection system

abstract

Traditional Intrusion Detection Systems (IDSs) are known for generating large volumes of alerts despite all

the progress made over the last few years. The analysis of a huge number of raw alerts from large networks

is often time consuming and labour intensive because the relevant alerts are usually buried under heaps of

irrelevant alerts. Vulnerability based alert management approaches have received considerable attention

and appear extremely promising in improving the quality of alerts. They filter out any alert that does not

have a corresponding vulnerability hence enabling the analysts to focus on the important alerts. However,

the existing vulnerability based approaches are still at the preliminary stage and there are some research

gaps that need to be addressed. The act of validating alerts may not guarantee alerts of high quality

because the validated alerts may contain huge volumes of redundant and isolated alerts. The validated

alerts too lack additional information needed to enhance their meaning and semantic. In addition, the

use of outdated vulnerability data may lead to poor alert verification. In this paper, we propose a fast and

efficient vulnerability based approach that addresses the above issues. The proposed approach combines

several known techniques in a comprehensive alert management framework in order to offer a novel

solution. Our approach is effective and yields superior results in terms of improving the quality of alerts.

2012 Elsevier B.V. All rights reserved.

1. Introduction

Management of security in large networks is a challenging task

because new threats and flaws are being discovered every day. In

fact, the number of exploited vulnerabilities continues to rise as

computer networks grow. Intrusion Detection Systems (IDSs) are

used to manage network security in many organisations. They provide an extra layer of defence by gathering and analysing information in a network in order to identify possible security breaches [1].

There are two broad types of IDSs: signature based and anomaly

based. The former uses a database of known attack signatures for

detection while the latter uses a model of normative system behaviour and observes deviations for detection [2]. If an intrusion is

detected, an IDS generates a warning known as alert or alarm.

IDSs are designed with a goal of delivering alerts of high quality

to analysts. However, the traditional IDSs have not lived up to this

promise. They trigger an overwhelming number of unnecessary

alerts that are primarily false positives resulting from non existing

intrusions. Analysing the alerts of this nature is a challenging task

and therefore the alerts are prone to be misinterpreted, ignored or

Corresponding authors.

E-mail addresses: hnjogu@yahoo.com (H.W. Njogu), luojiawei@hnu.edu.cn

(L. Jiawei), jayri505@yahoo.com (J.N. Kiere), hadamfr@yahoo.fr

(D. Hanyurwimfura).

0167-739X/$ see front matter 2012 Elsevier B.V. All rights reserved.

doi:10.1016/j.future.2012.04.001

delayed. The important alerts are often buried and hidden among

thousands of other unverified, irrelevant and low priority alerts.

There are several reasons that lead IDSs to generate huge numbers

of alerts such as IDS systems being unaware of the network context

they are protecting [3,4]. Signature based IDSs are often run with

a default set of signatures. Therefore, alerts are generated for most

of the attack attempts irrespective of success or failure to exploit

vulnerability in the network under consideration. In fact, signature

based IDSs usually do not check the effectiveness of an attack to

the local network context thus contributing to a high number of

false positive alerts [2]. Further, most of the traditional IDSs have

limited observation abilities in terms of network space as well as

the kind of attacks they can deal with [5]. Attack evidences against

network resources can be scattered over several hosts. In fact, it is

a challenging issue to have an IDS with properly deployed sensors

able to detect the attacker traces at different spots in the network

and be able to find dependencies among them.

Research has shown that most of the damages result from vulnerabilities existing on application, services, ports and protocols of

hosts and networks [6]. Fixing all the known vulnerabilities before

damaging intrusions take place in order to reduce the number of

alerts [7,8] may not be effective especially in large networks because of the following limitations:

Time gap between vulnerability disclosure and the software

patch released by software developers.

28

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

Updating hosts from different vendors in a large network takes

Construction of comprehensive and dynamic threat profile

longer thus exposing the hosts to intruders.

Some vulnerabilities are protocol based thus an immediate

patch may not be available.

known as Enhanced Vulnerability Assessment (EVA) data. EVA

data represents all vulnerabilities present in a network. EVA

data is queried to assert information about alerts and the

context in which they occur thereby improving the accuracy of

alerts.

Introduction of new metrics such as alert relevance, severity,

frequency and source confidence thus improving the semantics

of alerts in order to offer better discriminative ability than the

ordinary alert attributes when evaluating the alerts.

Maintenance of history of alerts that contain the recent and

frequent meta alerts. Generally, IDSs produce alerts that may

have similar patterns manifested by features such as frequent

IP addresses and ports. Therefore, the history of alerts assists

in handling the incoming related alerts thus improving the

processing speed.

Application of fuzzy based reasoning to determine the interestingness of the validated alerts based on their metric values. This

helps to identify the most important alerts.

It is therefore demanding to apply vulnerability analysis to

improve network security.

The vulnerability based alert management approaches are

popular and extremely promising in delivering quality alerts

[2,9,10]. These approaches are widely accepted and used by many

researchers to improve the quality of alerts especially when

processing a huge number of alerts from signature based IDSs.

These approaches improve the quality of alerts by eliminating

alerts with low relevance in relation to the local context of a

given network. They correlate network vulnerabilities with IDS

alerts and filter out the alerts that do not have a corresponding

vulnerability. Therefore, the vulnerability based approaches are

able to remove the need for a complicated attack step library and

reduce irrelevant alerts (irrelevant alerts correspond to attacks that

target a nonexistent service) [11].

Most of the vulnerability based approaches are able to produce

alerts that are useful in the context of the network. However, these

approaches are still at the preliminary stage and there are some

research gaps that need to be addressed in order to produce better

results. So far there is little attention given to the following key

issues. The act of validating alerts may not guarantee alerts of high

quality because the validated alerts may contain huge volumes of

redundant alerts as evidently seen in several vulnerability based

works such as [2,4,7,9,1216]. Generally, the analysts who review

the validated alerts may take a longer time to understand the

complete security incident because it would involve evaluating

each redundant alert. Consequently, the analysts may not only

encounter difficulties when taking the correct decision but would

also take a longer time to respond against the intrusions. In fact,

it is common for attacks to produce thousands of similar alerts

hence it is more useful to reduce the redundancy in the validated

alerts. There is no practical use in retaining all the redundant

alerts. In reference to several vulnerability based approaches such

as [2,4,1216], the validated alerts may also contain a massive

number of isolated alerts that are very difficult to deal with. The

presence of isolated alerts may hinder the potential of discovering

the causal relationship in the validated alerts. Another challenging

issue is that numerous vulnerability based approaches such as

[12,13] depend on outdated vulnerability data to verify alerts

hence likely to contribute to poor alert verification. New attacks

and vulnerabilities in networks are discovered everyday hence the

need to update the vulnerability data accordingly. In addition, the

information found in the validated alerts is basic and insufficient

and may not enhance the meaning and semantics of the validated

alerts. In fact, the obvious alert features may not adequately

describe alerts in terms of their relevance, severity, frequency and

the confidence levels of their sources. Therefore, there is need

to supplement the information found on the alerts to further

understand the validated alerts in order to reduce the overall

amount of unnecessary alerts.

The primary focus of this paper is to address the above

issues in order to improve the effectiveness of vulnerability

based approaches. We developed a fast and efficient approach

based on several known techniques (such as alert correlation and

prioritisation) in a comprehensive alert management framework

in order to offer a novel solution. The contributions of this work

are summarised as follows:

Development of an alert correlation engine to reduce the huge

volumes of redundant and isolated alerts contained in the

validated alerts. Redundant alerts are often generated from

the same intrusion event or intrusions carried out in different

stages. Thus, the correlation engine helps the analysts to quickly

understand the complete security incident and take the correct

decision.

The following terminologies are used in this paper:

Vulnerability: A flaw or weakness in a system which could be

exploited by intruders.

Attack: Any malicious attempt to exploit vulnerability. An

attack may be successful or not in the network under

consideration.

Relevant attack: An attack which successfully exploits a

vulnerability.

Non relevant attack: An attack which fails to successfully

exploit vulnerabilities in a network.

Attack severity: The degree of damage associated with an attack

in a network.

Alert: A warning generated by IDS on a malicious activity.

An alert may be interesting or non interesting. An interesting

alert represents a relevant attack. While non interesting alert

represents an unsuccessful attack attempt or any other alert

considered not important.

Meta alert: Summarised information of related alerts.

The rest of the paper is organised as follows: Section 2 describes

the related work. Section 3 describes the proposed approach.

Section 4 discusses the experiments and performance of the

proposed approach. Finally, a conclusion and suggestions for future

work are given in Section 5.

2. Related work

Over the last few years, the research in intrusion detection has

focused on the post processing of alerts in order to manage huge

volumes of alerts generated by IDSs. In this section, we analyse

several vulnerability based correlation approaches.

The traditional IDSs tend to generate too general alerts that

are not network specific because of being unaware of the network

context they monitor. IDSs are often run with a default set

of signatures hence generate alerts for most of the intrusions

irrespective of success or failure to exploit vulnerabilities in

the network under consideration. According to Morin et al. [9],

many alerts (especially false positives) involve actors which are

inside the monitored information system and whose properties

are consequently also observable. The use of vulnerability data is

advocated as an important tool to reduce the noise in the alerts in

order to improve the quality of alerts [9,10,13]. The vulnerability

data helps to differentiate between successful and failed intrusion

attempts. Identifying and eliminating failed intrusion attempts

improves the quality of final alerts.

Gula [12] illustrates how vulnerability data elicits high quality

alerts from a huge number of alerts that are primarily false

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

positives. The author states that a particular true intrusion targets

a particular vulnerability and therefore correlating the alert with

vulnerability could reveal whether the vulnerability is exploited

or not. The author further describes how alerts can be correlated

with vulnerability data. Another similar approach to verify alerts

is proposed by Kruegel and Robertson [13] in order to improve

the false positive rate of IDSs. The focus of the work is to

provide a model for alert analysis and generation of prioritised

alert reports for security analysts. Despite some merits with the

aforementioned approaches, they are preliminary and have not

been integrated in the overall comprehensive correlation process.

They also lack useful additional information on host architecture,

software and hardware to support better alert verification. These

approaches rely on information about the security configuration

of the protected network that was collected at an earlier time

using vulnerability scanning tools, and do not support dynamic

mechanisms for alert verification. In addition, these approaches do

not reduce the redundant and isolated alerts after alert verification.

Eschelbeck and Krieger [14] propose an effective noise reduction for intrusion detection systems. This technique eliminates the

unqualified IDS alerts by correlating them with environmental intelligence about the network and systems. This work provides an

overview of correlation requirements with a proposed architecture

and solution for the correlation and classification of IDS alerts in

real time. The implementation of this scheme has demonstrated a

significant reduction of false alerts but it does not reduce the redundant and isolated alerts after alert verification.

A vulnerability based alert filtering approach is proposed by

Porras et al. [15]. It takes into account of the impact of alerts on

the overall mission that a network infrastructure supports. This

approach uses the knowledge of the network architecture and

vulnerability requirements of different incident types to tag alerts

with a relevance metric and then prioritises them accordingly.

Alerts representing attacks against non-existent vulnerabilities are

discarded. The work does not have a comprehensive vulnerability

data model to fully integrate the vulnerability data in the network

context. This work processes alerts from different sources such as

IDS, firewalls and other devices while ours deals with IDS alerts

only.

Morin et al. [16] present M2D2 model which relies on a

formal description (on information such as network context data

and vulnerabilities) of sensor capabilities in terms of scope and

positioning to determine if an alert is a false positive. The model

is used to verify if all sensors that could have been able to

detect an attack agreed during the detection process, making the

assumption that inconsistent detections denote the presence of a

false alert. Although this approach benefits from a sound formal

basis, it suffers from the limitation that false alerts can only be

detected for those cases in which multiple sensors are able to

detect the same attack and can participate in the voting process.

In fact, many real-world IDSs do not provide enough detection

redundancy to make this approach applicable. An extension of the

M2D2 model, known as the M4D4 data model [9] is proposed

to provide reasoning about the security alerts as well as the

relevant context in a cooperative manner. The extended model is a

reliable and formal foundation for reasoning about complementary

evidences providing the means to validate reported alerts by IDSs.

Although the aforementioned approaches look promising, they

have only been evaluated mathematically but not tested with real

datasets. The M4D4 model suffers from some limitations of M2D2.

In addition, the two approaches do not reduce the redundant and

isolated alerts after alert verification.

Valeur et al. [17] propose a general correlation model that

includes a comprehensive set of components such as alert fusion,

multi-step correlation and alert prioritisation. The authors observe

that reduction of alerts is an important task of alert management

29

and note that when an alert management approach receives false

positives as input, the quality of the results can be degraded

significantly. Failure to exclude the alerts that refer to failed attacks

may lead to false positive alerts being misinterpreted or given

undue attention. Our approach is inspired by the logical framework

proposed by the authors. We verify alerts prior to classification

and correlation processes because efforts to improve the quality

of an alert should start in the early stages of alert management.

We use different correlation schema to handle alerts from different

attacks. In addition, our approach is able to handle alerts from

unknown attacks.

Another interesting work is proposed by Yu et al. [18]. The authors propose a collaborative architecture (TRINETR) for multiple

IDSs to work together to detect real-time network intrusions. The

architecture is composed of three parts: collaborative alert aggregation, knowledge-based alert evaluation and alert correlation to

cluster and merge alerts from multiple IDS products to achieve

an indirect collaboration among them. The approach reduces false

positives by integrating network and host system information into

the evaluation process. However, the approach has a major shortcoming because it has not implemented the alert correlation part

which is very crucial in generating condensed alert views.

Chyssler et al. [19] propose a framework for correlating

syslog information with alerts generated by host based intrusion

detection systems (HIDS) and network intrusion Detection systems

(NIDS). The process of correlation begins by eliminating alerts

from non effective attacks. This involves matching both HIDS and

NIDS events in order to determine the success of attack attempt.

This approach has some merits, however correlating alerts from

HIDS and NIDS is difficult because response times of HIDS and

NIDS are different. In addition, the approach does not consider the

relevance of attack in the context of network and does not reduce

the redundant and isolated alerts after alert verification.

To reduce the number of false alerts generated by traditional

IDSs, Xiao and Xiao [20] present an alert verification based

system. The system distinguishes the false positives from true

positives or confirms the confidence of the alert by integrating

context information of protected network with alerts. The

proposed system has three core componentspre-processing,

alert verification and alert correlation. The scheme looks promising

but has several shortcomings. It does not give details on how alerts

are verified and correlated. Moreover, the work is not accompanied

with experiments hence it is difficult to assess its effectiveness.

Liu et al. [4] propose a collaborative and systematic framework

to correlate alerts from multiple IDSs by integrating vulnerability

information. The approach applies contextual information to

distinguish between successful and failed intrusion attempts. After

the verification process, the alerts are assigned confidence and

the corresponding actions are triggered based on that confidence.

The confidence values are: 0 for a false alert while 1 is for a

true alert. Although the approach has some merits, it has several

shortcomings. The scheme does not give details on the procedure

used to validate the alerts and does not include details of how alerts

are transformed into meta alerts. In addition, the scheme does

not differentiate different levels of alert relevance. Further, the

scheme only handles alerts with reference numbers thus lowering

detection rates.

Bolzoni et al. [21] present an architecture for alert verification in

order to reduce false positives. The technique is based on anomaly

based analysis of the system output which provides useful context

information regarding the network services. The assumption of

this approach is that there should be an anomalous behaviour seen

in the reverse channel in vicinity of alert generation time. If these

two are correlated a good guess about the attack corresponding

to alert can be made. The effectiveness of this approach depends

on the accurate identification of an output anomaly by the output

30

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

anomaly detector engine, for alerts generated by the IDS. For every

attack there may not be a corresponding output anomaly and this

could result in false negatives. Further, the time window used

to look for the correlation is very critical for correctness of the

scheme; a very small time window may lead to missing of attacks

while a large time window may result in increase of false positive

alerts. More so, this approach does not reduce the redundant and

isolated alerts after verification.

In an effort to reduce the amount of false positives, Colajanni

et al. [7] present a scheme to filter innocuous attacks by taking

advantage of the correlation between the IDS alerts and detailed

information concerning the protected information systems. Some

of the core units of the scheme are filtering and ranking units.

The authors extended this work by proposing a distributed

architecture [22] to provide security analysts with selective and

early warnings. One of its core components is the alert ranking

unit that correlates alerts with vulnerability assessment data.

According to this approach, alerts are ranked based on match

or mismatch of alerts between the alert and the vulnerability

assessment data. We considered this type of ranking to be limiting

because of two reasons: First, it relies on match or mismatch of

only one feature (software or application) to make the decision

whether an alert is critical or non critical. Secondly, it does not

show the degree of relevance of alerts hence it does not offer much

help to the analyst. In our approach, alerts are ranked in different

levels according to their degree of interestingness. Further the

two aforementioned approaches do not reduce the redundant and

isolated alerts contained in the validated alerts.

Massicotte et al. [23] propose a possible correlation approach

based on integration of Snort, Nessus and Bugtraq databases. The

approach uses reference numbers (identifiers) found on Snort

alerts. The reference numbers refer to Common Vulnerability

Exposure (CVE), Bugtraq and Nessus scripts. The authors show a

possible correlation based on the reference numbers. However, it

is not effective because not all alerts have reference numbers. In

addition, there is no guaranty that the lists provided by CVE and

Bugtraq contain complete listing of vulnerabilities.

Neelakantan and Rao [24] propose an alert correlation approach

comprising of three stages: (i) finding vulnerabilities in the network under consideration, (ii) creating a database of all vulnerabilities having reference numbers (CVE or Bugtraq) and (iii) selecting

signatures that correspond to the identified vulnerabilities. This

approach requires reconfiguration of the signatures when there is a

network change (such as hosts being added or removed) leading to

downtime of the IDS engine. Moreover, it only handles alerts with

reference numbers thus lowering detection rates due to incompleteness of vulnerability reference numbers. The approach does

not reduce the redundant and isolated alerts contained in the validated alerts.

There have been efforts to evaluate alerts using some metrics

based on vulnerability data but have not been used all together as

proposed in our paper. For example, Bakar and Belaton [25] propose an Intrusion Alert Quality Framework (IAQF) for improving

alert quality. Central to this approach is the use of vulnerability information that helps to compute alert metrics (such as accuracy,

reliability, correctness and sensitivity) in order to prepare them for

higher level reasoning. The framework improves the alert quality

before alert verification. However, the approach does not reduce

the redundant and isolated alerts after alert verification. A similar

work is presented by Chandrasekaran et al. [26]. The authors propose an aggregated vulnerability assessment and response against

zero-day exploits. The approach uses metrics such as deviation

in number of alerts, relevance of the alerts and variety of alerts

generated in order to prepare the final alerts. The work is limited

to zero day attacks while our work handles different types of attacks. In addition, the authors have not given details on how the

threat profile is constructed and how the alerts are verified. Alsubhi

et al. [27] present an alert management engine known as FuzMet

which employs several metrics (such as severity, applicability, importance and sensor placement) and a fuzzy logic based approach

for scoring and prioritising alerts. Although some of the metrics

are similar to the ones proposed in our work, there are several

key differences. The scheme tag metrics on the unverified alerts

(raw alerts) containing unnecessary alerts while in our work, only

the validated alerts are tagged with metrics i.e. metrics are only

tagged to the necessary alerts that show a certain degree of relevance to the network context in order to improve the accuracy of

the final alerts. In addition, our work employs fewer metrics yet

it is very powerful to improve the performance of alert management. Further, we use a simpler procedure to compute for the alert

metrics.

Njogu and Jiawei [28] propose a clustering approach to reduce

the unnecessary alerts. The approach uses vulnerability data to

compute for alert metrics. It determines the similarity of alerts

based on the alert metrics and the alerts that show similarity

are grouped together in one cluster. We have extended this

previous work by implementing a better architecture of building

a dynamic vulnerability data that draws information from three

sources i.e. network resource data, known vulnerability database

and scan reports from multiple vulnerability scanners. In addition,

our new work correlates alerts based on both alert features and

alert metrics. We have also introduced the concepts of alert

classification and prioritisation based on alert metrics.

A more recent and interesting work which is closer to our work

is presented by Hubballi et al. [2]. The authors propose a false

positive alert filter to reduce false alerts without manipulating

the default signatures of IDSs. Central to this approach is the

creation of a threat profile of the network which forms the basis

of alert correlation. The method correlates the IDS alerts with

network specific threats and filters out false positive alerts. This

involves two steps: (i) alerts are correlated with vulnerabilities to

generate correlation binary vector generation and (ii) classification

of correlation binary vectors using the neural networks. The idea of

correlating alerts with vulnerabilities in the work is promising in

delivering accurate alerts. However, this approach does not reduce

the number of redundant and isolated alerts after alert verification.

Further comparisons are presented in Section 3.1.4.

Al-Mamory and Zhang [29] note that most of the general

alert correlation approaches such as Julisch [30], Valdes and

Skinner [31], Debar and Wespi [32], Al-Mamory and Zhang [33],

Sourour et al. [34], Jan et al. [35], Lee et al. [36] and Perdisci

et al. [37] do not make full use of the information that is available

on the network under consideration. The general alert correlation

approaches usually rely on the information on the alerts which

may not be comprehensive and reliable to understand the nature

of the attack and may lead to poor correlation results. In fact,

correlating alerts that refer to failed attacks can easily result in

the detection of whole attack scenarios that are nonexistent. Thus,

it is useful to integrate the network context information in alert

correlation in order to identify the exact level of threat that the

protected systems are facing.

With respect to the related work, we noted that most

vulnerability based approaches proposed in the literature are able

to validate alerts successfully. However, validating alerts may

not guarantee alerts of high quality. For example, the validated

alerts may contain massive number of redundant and isolated

alerts from the same intrusion event and those carried out in

different stages. Such issues have received little attention in the

research field. In order to address the shortcomings of vulnerability

based approaches, our work has synergised several techniques in

a comprehensive alert management framework in order to build a

novel solution.

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

31

the alert is regarded as a suspicious alert and forwarded to the

Alert-EVA data verifier.

(iv) The Alert-EVA data verifier uses EVA data to: validate the

alerts, eliminates the obvious non interesting alerts and

computes alert metrics. Alerts are tagged with alert metrics

(transformed alerts) and forwarded to stage 2. The verifier has

6 sub verifiers to process the suspicious alerts.

Stage 2

(v) Using a corresponding alert sub classifier, the transformed

alerts (with alert metrics) are classified into one of the classes

according to their alert metrics. The alert classifier has 6

sub classifiers. Alerts contained in these classes are further

classified into 2 super classes (alert classes for ideal interesting

alerts and alert classes for partial interesting alerts). These

alert classes are forwarded to the alert correlator component.

Stage 3

(vi) The alert correlator component reduces the redundant and

isolated alerts and finds the causal relationships in alerts. The

correlator has sub correlators dedicated to each of the alert

classes for every group of attack. The correlated alerts are

finally presented in form of meta alerts. The frequent meta

alerts are forwarded to the meta alert history for two reasons:

correlates the future related alerts and assists in modifying the

IDS signatures.

(vii) The analyst receives the meta alerts and can view alerts in

terms of their priority and the nature of attack.

3.1. Stage 1

Stage 1 has 5 sub components that are discussed in the next sub

sections:

3.1.1. Alert receiver

Generally, IDSs do not produce alerts in an orderly manner. The

interesting alerts are buried under heaps of redundant, irrelevant

and low priority alerts. The alert receiver unit receives and

prepares the raw alerts appropriately. The important alert features

such as IP addresses and port numbers are extracted and stored in

a relational database for further analysis.

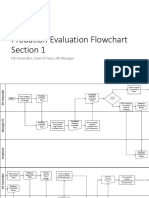

Fig. 1. Proposed alert management framework.

3. Proposed alert management approach

In this section, we describe a fast and efficient 3-stage alert

management approach. This approach has three stages: Stage 1

involves alert collection, correlation of alerts against the meta alert

history and verification of alerts against EVA data; Stage 2 involves

classification of alerts based on the alert metrics; and Stage 3

involves correlation of alerts in order to reduce the redundant and

isolated alerts. The 3 stages are illustrated below (Refer to Fig. 1):

Stage 1

(i) IDS Sensor generates alerts on malicious activities.

(ii) Raw alerts are received and pre-processed by alert receiver

unit.

(iii) The pre-processed alerts are compared with meta alerts (meta

alert history) using the Alert-Meta alert history correlator. If

the alert under consideration matches with any of the meta

alerts then the alert is considered successful and forwarded to

the alert correlator component. But if there is no match, then

3.1.2. Meta alert history

IDSs produce alerts that may have similar patterns

[30,35,38,39]. Similar patterns are manifested by frequent IP addresses, ports and triggered signatures within a period of time.

Some of the alert patterns may appear frequent and can last for

a relatively longer period of time. Julisch [30] states that: Large

group of alerts have a common root cause. Most of the alerts are

triggered by only a few signatures and if a signature has triggered

many alerts over longer periods of time, it is also likely to do so in

the near future generating many similar alerts with the same features. Vaarandi [38] adds that more than 85% of alerts are produced

by the most prolific signatures.

The meta alert history keeps the recent and frequent alerts

(ideal interesting and partial interesting meta alerts) for two main

reasons:

To assist in handling the incoming related alerts as illustrated

by the following logic: If an incoming alert with signature S

originates from A to B AND there was previously an meta alert M

with signature S originating from A to B AND M was classified as an

ideal interesting (meta) alert THEN the incoming alert is an ideal

interesting alert. The incoming alert is compared against meta

alerts by Alert-Meta alert history correlator.

To help the analysts in specifying the root causes behind the

alerts. The analysts are able to identify signatures or vulnerabilities that need to be modified or fixed thus improving

32

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

the future quality of alerts. A root cause (vulnerability) in the

network can instigate an IDS to trigger alerts with similar

features.

In this work, we focus on the first role of the meta alert history

because it is easier to implement and gives better results. Thus, by

identifying the frequent patterns of alerts, it is easier to predict and

know how to handle any incoming related alert hence improving

the efficiency of alert management. The second role may not yield

successful results if it is implemented poorly because more false

negatives could be introduced if it is not carefully implemented.

The task of modifying signatures is very complex in terms of

skills and time. Moreover, some vulnerabilities cannot be fixed

immediately as noted earlier and therefore the second role is

considered as our next future task.

The content of the meta alert history includes: meta alert type

(either ideal interesting or partial interesting), name of alert, class

of attack, source address (IP and port), destination address (IP and

port), meta alert class and age of meta alert.

To improve the efficiency and scalability of this sub component,

we eliminate meta alerts that appear old (aged), have not been

referenced to or have not been matched with alerts for a relatively

longer time (time value to be determined by the analyst). The

new meta alerts are forwarded by the meta alert correlator and

appended in the meta alert history. Old meta alerts are replaced

by the new ones if they are similar. The details of the formation of

meta alerts and how they are forwarded to the meta alert history

are discussed later in this section.

3.1.3. Alert-Meta alert history correlator

The Alert-Meta alert history correlator uses the following

procedure:

Input: Pre-processed alerts, meta alerts from meta alert history.

Output: Successful alerts (with inherited properties), Suspicious

alerts.

Step 1: Compare features of pre-processed alert with meta alert

(in terms of IP addresses, ports, names).

Step 2: Search for potential ideal interesting meta alerts from

meta alert history. Get IP destination of alert (Alert.IPdest)

to extract the potential meta alerts (MetaAlert.IP) i.e.

MetaAlert.IP that match Alert.IPdest.

Step 3: Choose the meta alert that best represents the alert. The

alert is matched with each of the potential ideal interesting

meta alerts and the one showing a perfect match in terms

of IP address, port and name is chosen.

Step 4: If a perfect match is established in step 3, the successful

alert inherits the properties of the parent ideal interesting

meta alert such as the name of class and is forwarded to

the alert correlator in stage 3.

Step 5: If a perfect match is not established in step 3, the

pre-processed alert repeats step 2 in order to search

for potential partial interesting meta alerts. The alert

is forwarded to step 3 in order to choose the partial

interesting meta alert that best represents the alert. If a

perfect match is established, the successful alert inherits

the properties of the parent partial interesting meta alert

and is forwarded to meta alert correlator in stage 3. If a

perfect match is not established, then the suspicious alert

is forwarded to the Alert-EVA data verifier.

Fig. 2. Construction of EVA data.

priority, IP address, port, protocol, class, time and applications. The

main purpose of EVA data is to validate alerts and enhance the

semantics of alerts to a sufficient level in order to deliver quality

alerts.

Fig. 2 illustrates how the EVA data is constructed. The network

specific vulnerability generator is the engine that constructs EVA

data. It does this by establishing a relationship in all the elements

of vulnerabilities drawn from three sources: known vulnerability

database, scan network reports and network context information.

The generator uses an entity relationship (ER) model shown in

Fig. 3 to capture and build EVA data. The elements of the ER model

include: hosts, ports, applications, port threats, application threats,

exploits, vulnerabilities and attack information. We established

relationships in all elements as follows: The relation between host

and applications is one to many as one host can run more than one

application. One host has multiple ports hence the relation is one

to many. The vulnerability entity represents known vulnerabilities

from various sources such as CVE (Common Vulnerability and

Exposure) [40] and Bugtraq [41] to provide complete details of

vulnerabilities. One vulnerability can lead to multiple exploits

hence the relation is one to many. The attribute age refers the age

of the vulnerability. The attack information entity contains attack

information that is associated with vulnerabilities. The threats are

represented in two entities. The port threat entity contains all

threats associated with ports while the application threat entity

contains all threats associated with applications.

Different vulnerability scanners such as Nessus and Protectorplus can help to populate information for some of the entities.

Nessus can generate exploits while Protector-plus can generate

a list of vulnerabilities for different applications in a network in

order to build a comprehensive EVA data. The scanners look for

potential loopholes such as missing patches that are associated to

particular applications, versions, service packs. The scanners are

able to determine the vulnerable software, applications, services,

ports and protocols. Different scanners use different techniques to

detect vulnerabilities and can run periodically to have a consistent

and recent threat view of the network and immediately forward

them to the network specific vulnerability generator. Scripting

languages such as Perl scripts can easily process the reports from

different scanners. The vulnerabilities are dynamic in nature hence

the need to install agents in the hosts to track changes in network

resources. The agents forward the necessary information to the

generator so that the EVA data is updated accordingly.

The architecture of building the threat profile in Ref. [2] is

almost similar to what we have proposed as shown in Fig. 2.

Specifically, we made the following contributions:

Threat profile of Ref. [2] is drawn from two major sources

3.1.4. EVA data

EVA data is a dynamic threat profile of a network. It represents

vulnerabilities of the network that are likely to be exploited by

attackers. It lists all the vulnerabilities by their reference id, name,

i.e. known vulnerability database and scan reports. Besides

these two sources, our work has introduced a database for

network resources in order to improve the management of

network resources.

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

33

Fig. 3. Entity relationship diagram.

Our approach has incorporated the concept of age of vulnera-

bilities to eliminate vulnerabilities which appear outdated, old

and have not been used for validation in a relatively longer time

(time varies with different network environment) hence making the approach more efficient and scalable.

Ref. [2] uses one verifier to process alerts of different attacks

while in our approach, we use 6 sub verifiers tailored to validate

alerts from specific group of attacks. Therefore, our approach is

able to improve the performance of alert verification process.

It is difficult to maintain a dynamic threat profile in Ref. [2]

because updating the threat profile is done offline. In our

approach, we use host agents to track changes in a network

and later relay the necessary information to the network

specific vulnerability generator so that the EVA data is updated

accordingly.

In Ref. [2], correlation binary vectors are generated by neural

network based engine to represent the match and mismatch

of the corresponding features between alert and vulnerability.

An example of the binary vector is (1000001001), where

1 represents a match while 0 represents a mismatch. Our

approach uses Alert-EVA data verifier engine to validate alerts

and compute the alert metrics as illustrated in the next sub

section.

The quality of alert verification is highly dependent on

various elements of information from IDS alerts and their

corresponding vulnerabilities. The threat profile and an IDS

product may use different attack details such as reference ids

when referring to the same attack. In fact, there are no unique

(standard) attack details such as reference ids to reference all

types of attacks. In order to have a comprehensive EVA data,

we have introduced additional attack reference information

from IDS product into EVA data. We use this information to

play a complementary role when determining the relevance of

alerts. This helps to ensure the completeness of attack details

in the EVA data because if attack details are missed then the

computation of alert score is affected.

3.1.5. Alert-EVA data verifier

Threats are due to vulnerabilities in a network [42,43]. It is

believed that each attack has a goal to exploit vulnerability on

particular application, service, port or protocol. As mentioned

earlier, traditional IDSs run with their default signature databases

and do not check the relevance of an intrusion to the local network

context. Thus, IDSs generate huge volumes of raw alerts majority

of which are non relevant alerts and not useful in the context of the

network. In addition, the information provided by the alerts is basic

and inadequate. Relying solely on this information may increase

cases of important alerts being misinterpreted, ignored or delayed.

Because of the above reasons, we introduce the concept of AlertEVA data verifier to validate alerts and compute the alert metrics.

The proposed approach is designed to handle 5 different groups

of attacks (DoS, Telnet, FTP, Mysql and Sql). Thus the verifier has

6 sub verifiers tailored to handle alerts from 5 different groups

of attacks. The sub verifiers are DoS verifier, Telnet verifier, FTP

verifier, Mysql verifier, Sql verifier and Undefined attack verifier.

For example, DoS sub verifier handles all alerts reporting DoS

attacks and so is FTP, Telnet, Mysql and Sql sub verifiers. While

the undefined attack sub verifier handles any alerts reporting new

attacks that have not been defined during the design of the alert

verification component.

The verifier validates the suspicious alerts by measuring

similarity with their corresponding vulnerabilities contained in

EVA data. Both alert and vulnerabilities have comparable features

and hence are easy to measure the similarity. The validation

process helps to determine the seriousness of alerts with respect

to the network under consideration.

In summary, the procedure of validating alerts and computing

their alert metrics is illustrated as follows. The sub verifier uses an

IP address of a given alert to search for the potential vulnerabilities

in EVA data and chooses the vulnerability that best represents the

alert (with highest alert score). The sub verifier uses Table 1 to

compute for the alert metrics and forwards the transformed alerts

to the alert classification component (stage 2). The procedure to

validate alerts is further illustrated here below.

Input: Suspicious alerts, vulnerabilities in EVA data.

Output: Transformed alerts (with alert metrics).

Step 1: To determine which sub verifier handles a given alert, the

alert verifier component makes this decision based on the

attack identifier (class) field in the alert and forwards the

alert to the corresponding sub verifier. For example an

alert reporting a DoS attack is forwarded to the DoS sub

verifier.

Step 2: The sub verifier compares the features of pre-processed

alert with the corresponding vulnerabilities in EVA data in

this order:

34

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

Table 1

Alert metrics.

Alert metric

Attributes and their sources

Metric validation rules

Scale

Alert relevance

(importance of an alert)

Reference id, name, priority, IP

address, port, protocol, class, time and

applications From alert and EVA data

Derived from alert score (step 4 in Section 3.1.5)

09

Alert severity

(criticality of an alert)

Reference id, priority and timestamp

If Alerts priority (1) matches with EVA datas severity (High) then Alert Severity = 1

13

From alert and EVA data

Else if Alerts priority (2) matches with EVA datas severity (Medium) then Alert

Severity = 2

Else if Alerts priority (3) matches EVA datas severity (Low) then the alert

severity = 3.

NB: cases where there are no matches e.g. if Alert priority (1 or 2) and EVA

data = Low or Medium then refer to the severity of EVA data

Alert frequency

(rate of alert occurrence)

Reference id, name, priority, IP

address, port, protocol, class, time and

applications From alert

Alert (sharing common features) belonging to a particular attack whose count

exceeds a certain threshold number of alerts with a certain time is regarded as

frequent alert while an alert belonging a particular attack whose count is below a

certain threshold is regarded as non frequent alert within a specified period of time

(time and threshold number of alerts in this case are dynamic)

01

Alert source confidence

(the reliability of sensor)

Sensor Id and timestamp from both

alert and sensor data

The value of this metric is stored in the alert source data. It is easier to extract the

confidence value of sensors because each alert has sensorId field.

06

IP => ReferenceId => Port => Time => Application

=> Protocol => Class => Name => Priority.

Step 3: The sub verifier searches for potential vulnerabilities from

EVA data i.e. get IP destination of alert (Alert.IPdest) to

extract the potential vulnerabilities i.e. EVAdata.IP that

match (Alert.IPdest).

Step 4: The sub verifier chooses the vulnerability that best

represents the alert. The alert is matched with each of

the potential vulnerabilities and the vulnerability with the

highest alert score is chosen. The process of matching

alerts and potential vulnerabilities is described below.

If Alert.IPdest matches EVA data.IP Then IP_similarity = 1

Else IP_similarity = 0

If Alert.ReferenceId matches EVA data. ReferenceId Then

ReferenceId_similarity = 1 Else ReferenceId_ similarity = 0

If Alert.Portdest matches EVA data.Port Then Port_ similarity = 1 Else Port_similarity = 0

If Alert.Time is greater or equal to EVA data.Time Then

Time_similarity = 1 Else Time_similarity = 0

If Alert.Application matches EVA data.Application Then

Application_similarity = 1 Else Application_similarity = 0

If Alert. Protocol matches EVA data.Protocol Then Protocol_

similarity = 1 Else Protocol_similarity = 0

If Alert.Class matches EVA data.Class Then Class_ similarity = 1 Else Class_similarity = 0

If Alert.Name matches EVA data.Name Then Name_similarity

= 1 Else Name_similarity = 0

If Alert.Priority matches EVA data.Priority Then Priority _

similarity = 1 Else Priority_similarity = 0.

Alert score = IP_similarity + ReferenceId_similarity +

Port_similarity + Time_similarity + Application_similarity

+ Protocol_similarity + Class_similarity + Name_similarity

+ Priority_similarity.

Step 5: The sub verifier computes for alert metrics (Alert Relevance, Severity, Frequency, Alert Source Confidence) and

tag the metrics to the alert. Refer to Table 1.

Step 6: The sub verifier forwards the transformed alerts to the

alert classification component while the most obvious non

interesting alerts (alerts with no similarity with EVA data)

are eliminated.

It is important to note that for simplicity reason, any match is

awarded a value of 1 while a mismatch is awarded a value of 0.

Alert Metrics

Although some of the alert metrics have been individually

used in previous works [15,26,27,44], they have not been used

altogether as proposed in this paper. The originality of our

approach is the use of dynamic threat profile (EVA data) to

provide a reliable computation ground thus ensuring the alert

metrics are accurate enough to represent the alerts in terms of

their relevance, severity, frequency and alert source confidence.

We used a simple method that does not consume unnecessary

resources. The metrics have a better discriminative ability than

the ordinary alert features. We compute metrics in this order

(Alert Relevance => Alert Severity => Alert Frequency =>

Alert Source Confidence). The details of alert metrics are as follows

(Refer to Table 1):

(i) Alert relevance: It indicates the importance of an alert in

reference to the vulnerabilities existing in a network. It

involves measuring the degree of similarity between alert

and EVA data because they contain comparable data types

(reference id, name, priority, IP address, port, protocol, class,

time and applications). We use the alert score to determine

the relevance of alerts. We performed a series of verifications

using different alert scores to search for the best threshold that

best represent the alerts in terms of relevance. An alert with a

higher score indicates that the number of matching fields are

high hence more relevant than alert with a lower score.

(ii) Alert severity: It indicates the degree of severity (undesirable

effect) associated with an attack reported by alert. The verifier

compares alert and EVA data in terms of their priority

(severity). Alert severity can be high, medium or low. An alert

with high alert severity causes a higher degree of damage.

While medium alert severity causes a medium degree of

damage and low severity has the least degree of damage

(trivial). Alert severity is represented in range of 13. An alert

with a lower score closer to 1 indicates the attack is very

severe.

(iii) Alert frequency: It reflects the occurrence of related alerts

(with common features) that are triggered by particular

source(s) or attack within a given period of time. An IDS

may generate similar alerts that are manifested by frequent

features such as IP addresses and ports within a particular

time window. For example, the probe based attack may trigger

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

35

Table 2

Pre-processed alert snapshot.

Reference Id

Name

SensorId IPdest

Portdest IPsrc

Portsrc

Priority

(severity)

Protocol Class

CVE-1999-0001

CVE-1999-0527

Teardrop

Telnet

resolv host

conf.

1

2

1238

1026

1238

1026

High

Medium

TCP

TCP

192.168.1.3

192.168.1.2

192.168.1.1

192.168.1.1

Time

Application

DoS

9:9:2011:10:12:05 Windows

U2R

9:9:2011:10:12:06 Telnet,

(Telnet)

Windows

Key * used in alert verification.

Table 3

EVA data snapshot.

Reference Id

Name

IP address

Port

Priority (severity)

Protocol

Class

Time

Application

CVE-1999-0001

CVE-1999-0527

Teardrop

Telnet resolv host conf.

192.168.1.3

192.168.1.2

1238

1026

High

Medium

TCP

TCP

DoS

U2R (Telnet)

8:9:2011:14:16:02

8:9:2011:14:16:02

Windows

Telnet, Windows

numerous repetitive alerts as the network is being intruded.

The verifier has a counter to determine the frequency of alerts.

An alert from a particular source (or attack) whose count

exceeds a certain threshold within a specified time is regarded

as a frequent alert while an alert belonging to a particular

source (or attack) whose count is below a certain threshold is

regarded as a non frequent alert. An alert above the threshold

is assigned value 1 while the one below the threshold is

assigned 0. A value closer to 1 is considered very frequent

while the one closer to 0 is considered less frequent.

(iv) Alert source confidence: It indicates the value an organisation

places on an IDS sensor. It is based on sensors past performance and reflects the ability of a given sensor to effectively

identify an attack hence making it possible to predict how a

sensor performs in future. The performance of any sensor is

influenced by factors such as accuracy and detection rates,

version of signatures and frequency of updates. To further understand the performance of sensors, consider the following

aspects:

In large networks, it is very difficult to ensure all sensors are

well tuned and updated. Therefore some sensors are likely

to be frequently updated and tuned while others are not.

Difference in versions and frequency of updates may lead to

different accuracy and detection rates of IDS sensors [25].

IDS sensors are placed in different locations facing varied

risks and exposure in a large network. Some locations are

more prone to threats than others. For example, the sensors

installed facing the Internet direct or being at the front-line

of internet connections are exposed to a higher degree of

threats than those located within the network. Some sensors are placed in environments with emergency routes carrying network traffic of third parties hence exposed to more

threats.

A consistent number of alerts over a long period of time

indicates the likelihood of the IDS sensor being stable and

with the ability to produce reliable results. While an inconsistent number of alerts indicates the likelihood of the

IDS sensor being unstable and may lead to unreliable results [26].

Consistent ratio of interesting alerts to non interesting

alerts over a period of time indicates the likelihood of IDS

sensor being stable and able to produce reliable results.

While an inconsistent ratio indicates the likelihood of IDS

sensor being unstable and may lead to unreliable results.

An acceptable false alert rate indicates the ability of an IDS

sensor to produce reliable alerts. While unacceptable false

alert rate indicates the likelihood of a sensor to output unreliable alerts. A false alert is caused by legitimate traffic

which may lead to a high false rate. The true danger of a high

false alert rate lies in the fact that it may cause analysts to

Table 4

Alert sensor data snapshot.

Sensor Id

Sensor score

1

2

5

2

ignore legitimate alerts. The goal of any sensor is to have a

lower false alert rate. According to several researches, it is

indicated that false alerts rate is between 60% and 90% [35].

However, it is possible to have a false alert rate of below 60%

under normal conditions. Generally, false alert rate can vary

depending on the level of tuning, technology of sensor and

the type of traffic on a network. Other works illustrating this

concept are contained in [45,46].

The analysts who review the alerts regularly are in a better

position to identify and tell the sources (sensors) that are

well known for generating relatively high numbers of true

alerts as well as sources (sensors) known for generating relatively high numbers of false alerts depending on their location and their threat levels [38].

Alerts are usually linked to their sensors by the sensorId field

and therefore it is easier to extract the value of alert source

confidence from the sensor data. The range of source confidence

score is 06. A sensor with a score closer to 6 is considered to

have higher confidence than the one with a score closer to 0. The

alert source confidence parameter is very useful in networks with

multiple IDS sensors and where analysts have some knowledge on

evaluating the performance of sensors.

To illustrate how the Alert-EVA data verifier works, consider

the following example. Table 2 shows a snapshot of pre-processed

alerts. Table 3 shows a section of EVA data while Table 4

shows a section of alert sensor data. In Table 2, the first

alert reports teardrop attack targeting a host with IPdest 192.168.1.3

on Portdest 1238. Using the alert IPdest 192.168.1.3, the DoS sub

verifier extracts all potential vulnerabilities in EVA data matching the IP address. In this case, EVA data has only one vulnerability matching this particular alert IPdest . The sub verifier then

matches other features (reference id, port, severity, protocol, class,

time, name and application) of alert against the said vulnerability.

The alert score is computed as follows: IP_similarity = 1 because

IP addresses are matching and so is the rest of other features; ReferenceId_similarity = 1; Port_similarity = 1; Time_similarity = 1;

Application_similarity = 1; Protocol_similarity = 1; Class_similarity

= 1;Name_similarity = 1; Priority_similarity = 1. Therefore, the alert

score (total number of matches) is 9. The sub verifier tags the alert

with the following: Relevance score of 9, Alert severity of 1, Alert

frequency of 0 and Source confidence of 5. The validated alert is

forwarded to the DoS sub classifier component in this form -DoS

(9,1,0,5).

36

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

Generally, the vulnerability based alert management approaches are not able to deal with unknown vulnerabilities and

intrusions [12]. In this work, we designed a policy to reduce the influence of the unknown vulnerabilities and intrusions during alert

verification. This policy minimises cases such as: relevant alerts being ignored or considered as irrelevant alerts simply because they

fail to match the vulnerabilities in EVA data when they are being

validated. This policy addresses the following four cases:

Table 5

Fuzzy rule base.

Rule

Relevance

Severity

Frequency

Source confidence

Class

1

2

3

...

N

High

High

High

...

Low

High

High

High

...

Low

High

High

Low

...

Low

High

Low

High

...

Low

Class 1

Class 2

Class 3

...

Class k

Case 1: IDS issues an alert on non relevant attack but EVA

data contains outdated vulnerabilities. As expected the verifier

identifies the corresponding vulnerability (that is outdated)

in EVA data that matches the alert (and yet the network is

not vulnerable). This issue is difficult to address but can be

minimised by frequently updating both IDS and EVA data as

well removing outdated vulnerabilities. Alerts in this case are

eliminated.

Case 2: IDS issues an alert on non relevant attack and EVA

data does not contain a corresponding vulnerability because

the network is not vulnerable to the non relevant attack. For

example, an IDS issues an alert in response to an attack that

exploits a well-known vulnerability of a Windows operating

system but the system that the IDS is monitoring is a Linux

operating system. This is a desired case hence alerts are

eliminated.

Case 3: IDS does not produce alert (false negative) on a

relevant attack and EVA data has not registered a vulnerability

corresponding to the relevant attack. This case is very difficult to

address because IDS and EVA data have no idea of the relevant

attack. However, this is minimised by frequently updating both

IDS and EVA data.

Case 4: IDS issues an alert on relevant attacks but EVA data

has not registered a vulnerability corresponding to the relevant

attack. This is a worst case scenario and such alerts are

eliminated. This is minimised by frequently updating EVA data.

3.2. Stage 2

3.2.1. Alert classification

Fuzzy logic based system reasons about the data by using a

collection of fuzzy membership functions and rules. Fuzzy logic

is applied to make better and clearer conclusions from imprecise

information. Fuzzy logic differs from classical logic in that it does

not require a deep understanding of the system, exact equations or

precise numeric values. With fuzzy logic, it is possible to express

qualitative knowledge using phrases like very low, low, medium,

high and very high. These phrases can be mapped to exact numeric

range. How to determine the interestingness of alerts and later

classify the alerts is a typical problem that can be handled by

fuzzy logic. We chose fuzzy logic because it is conceptually easy to

understand since it is based on natural language, easy to use and

flexible. Fuzzy inference is the process of formulating the mapping

from a given input to an output. The mapping then provides a

basis from which decisions can be made. The process of fuzzy

inference involves the following 5 steps: (1) fuzzification of the

input variables, (2) application of the fuzzy operators such as

AND to the antecedent, (3) implication from the antecedent to the

consequents, (4) aggregation of the consequents across the rules,

and (5) defuzzification.

Our classifier has 6 sub classifiers tailored to handle alerts

from 6 different groups of attacks: DoS, Telnet, FTP, Mysql,Sql

and Undefined attack groups. For example, the DoS sub classifier

handles all alerts reporting DoS attacks, and so do the FTP, Telnet,

Mysql and Sql sub classifiers. While the Undefined attack sub

classifier handles alerts reporting new attacks that have not been

defined during the design of the alert classification component.

The results from the alert verifier (alerts with four metrics)

are used as input to the fuzzy logic inference engine in order to

determine the interestingness of alerts i.e. we consider the four

alert metrics of an alert as the input metrics. The input metrics

are fuzzified to determine the degree to which they belong to

each of the appropriate fuzzy sets via membership functions.

Membership functions are curves that define how each point in

the input space is mapped to a degree of membership between 0

and 1. The membership functions of all input metrics are defined

in this step. The summary of membership functions in each input

metric is: RelevanceLow or High; SeverityLow, Medium or

High; FrequencyLow or High and; Source confidenceLow or

High. In this work, we used a Guassian distribution to define the

membership functions.

We used domain experts to define a set of IF THEN rules as

illustrated in Table 5. In total, we established 11 classes that

represent different levels of alert interestingness for every group

of attacks. These rules represent all possible and desired cases

in order to improve the efficiency of the inference engine. We

eliminated rules that were contradicting and meaningless. Each

rule consists of two parts: antecedent or premise (between IF and

THEN) and a consequent block (following THEN). The antecedent

is associated with input while the consequent is associated with

output. The inputs are combined logically with operators such as

AND to produce output values for all expected inputs. The general

form of the classification rule that takes alert metrics as input and

class as output is illustrated below:

Rule: IF feature A is high AND feature B is high AND feature C is

high AND feature D is high THEN Class = class 1. From Table 5, we

can extract a fuzzy rule as follows:

R1:IF Relevance is High AND Severity is High AND Frequency is High

AND Source Confidence is High THEN class = Class 1.

From the above rule, relevance, severity, frequency and source

confidence represent the input variables while class is an output

variable.

In brief, we used the fuzzy logic to determine the interestingness of different alerts contained in the 11 classes for each group

of attacks. As illustrated in Fig. 4, the fuzzy inference system takes

the input values from the four metrics.

The input values take this form DoS (9,1,0,5) where DoS

represents the group of attack, 9 for the relevance score, 1 for

severity, 0 for frequency and 5 for Source confidence . As previously

illustrated in Table 1, the ranges of metrics are: relevance score is

09 (least is 0 and highest is 9), severity is 13 (1 is most severe

and least severe is 3), frequency is 01 (least is 0 and highest is 1)

and source confidence is 06 (least is 0 and highest is 6). The input

metrics are fuzzified using the defined membership functions in

order to establish the degree of membership. The inference uses

the set of rules to process the input metrics. All the outputs are

combined and provided in a single fuzzy set. The fuzzy set is then

defuzzified to give a value that represents the interestingness of an

alert. The alert is then put into the right class.

Unlike other classification schemes that label alerts as either

true positive or false positive, our classifier determines the

interestingness of alerts using several sub classifiers. In addition,

most of the existing classification schemes require a lot of

H.W. Njogu et al. / Future Generation Computer Systems 29 (2013) 2745

37

training and human expertise and experience. We apply fuzzy

based reasoning to determine the interestingness of validated

alerts based on their metric values. This brings in the benefit

of flexibility and ease when determining the interestingness of

alerts. In addition, we use 6 sub classifiers tailored to classify alerts

from specific groups of attacks. Therefore, our approach is able to

improve the performance of the alert classification process.

3.2.2. Classifying ideal interesting alerts and partial interesting alerts

Alerts contained in the above classes are further sorted out and

classified into two super classes based on their interestingness

score. The score is 010. The ideal interesting alert super class

has interestingness score of 7 and above while the score of partial

interesting alert super class is below 7. Alerts are retained in their

respective classes and forwarded to the correlation component.

3.3. Stage 3

3.3.1. Alert correlation component

In this sub section, we discuss the details of the alert correlation

component. As previously noted the validated alerts of the existing

vulnerability based approaches may contain a huge number of

redundant alerts that need to be reduced. In the recent years, the

trend of multi-step attacks is on the rise leading to unmanageable

levels of redundant and isolated alerts. A single attack such as

a port scan is enough to generate a huge number of redundant

alerts. Analysing each redundant alert after alert verification, may

not be practically possible especially in large networks because

the analysts are likely to take longer time to understand the

complete security incident. Consequently, the analysts would not

only encounter difficulties when taking the correct decision but

would also take a longer time to respond to the intrusions.

As discussed in the previous sub section, the alert classification

component puts alerts into alert classes based on the alert metrics.

The alerts in the alert classes may be isolated and redundant.

The goal of the alert correlation component is to reduce the huge

number of redundant and isolated alerts and establish the logical

relationships of the classified alerts. The individual redundant

alerts representing every step of attack are correlated in order to

have a big picture of an attack. The alert correlation component has

6 correlators for 6 groups of attacks (DoS, FTP, Telnet, Mysql, Sql

and Undefined attacks). Each alert correlator has 11 sub correlators

to handle the classified alerts from 11 different classes in each

group of attack as illustrated in Fig. 5. Each class forwards alerts

to the corresponding sub correlator. For example, alerts in class 1

(reporting DoS attacks) are forwarded to sub correlator 1 of DoS

correlator.

The procedure of correlating alerts can be illustrated as follows.

The sub correlator uses the IP address(es) of a classified alert

to identify the potential meta alerts. To identify the best meta

alert representing the alert under consideration, the sub correlator

measures the similarity of alert and the existing meta alerts. If a

corresponding meta alert (both meta alert and alert have a perfect

match in terms of IP, port, time) exists then its details are updated

i.e. number of alerts in meta alert is incremented, meta alert update

time is updated and meta alert non update time is reset to 0. Only

one meta alert can be chosen from potential meta alerts. However,

if a corresponding meta alert does not exist, a new meta alert is

created. Details of the alert under consideration form the new meta

alert i.e. number of alert is set to 1 and timestamp of the alert

becomes the meta alert create time. To determine how long the

existing meta alert remains active, we used the rules in steps 6

and 7 (illustrated later in this sub section). Meta alerts are later

forwarded to the analyst for action.

The sub correlator uses exploit cycle time to determine the

extent of an attack. It generates meta alerts regarding a particular

Fig. 4. Fuzzy inference process.

Fig. 5. DoS correlation schema.

attack within an exploit cycle time. Generally, a multi-step attack

is likely to generate several meta alerts in a given exploit cycle

time while a single step attack is likely to generate one meta alert

within a given exploit cycle time. However, one meta alert could

also represent multi-step attack especially if the intruder knows

the network resources to exploit. Before going into details of how

alerts are correlated, we define some variables of each meta alert

M as follows:

Meta alert attack class (M.Class)indicates the specific alert

class where the alert is drawn from.

Meta alert create time (M.createtime )defines the create time

of meta alert. It is derived from the stamptime of the first alert

that formed the meta alert.

Number of alerts (M.NbreAlerts )defines the number of alerts

contained in the meta alert. Each time a new alert is fused to a

meta alert, this number is incremented.

Meta alert update time (M.updatetime )defines the time of the

last update in meta alert. This variable represents the time of

the most recent alert fused to the meta alert.

Meta alert non update time (M.non-updatetime )counts the

time since the last update made on the meta alert. This time is

reset to 0 each time an update of new alert is fused to the meta

alert.