Design Verification and Test Vector Minimization Using Heuristic Method of A Ripple Carry Adder

International Journal on Cybernetics & Informatics (IJCI) Vol. 5, No. 4, August 2016

DESIGN VERIFICATION AND TEST VECTOR MINIMIZATION USING HEURISTIC METHOD OF A RIPPLE CARRY ADDER

K.L.V. Ramana Kumari 1, M. Asha Rani 2 and N. Balaji 3

1 Department of ECE, VNRVJIET, Hyderabad, India

2 Department of ECE, JNTUH, Hyderabad, India

3 Department of ECE, JNTUK, Vizianagaram, India

ABSTRACT

As feature sizes shrink, the probability that a manufacturing defect in the IC results in a faulty chip increases. A very small defect can easily result in a faulty transistor or interconnecting wire when the feature size is small. Testing is required to guarantee fault-free products, regardless of whether the product is a VLSI device or an electronic system. Simulation is used to verify the correctness of the design. Exhaustively testing an n-input circuit requires 2^n test vectors. As the number of inputs grows, the required number of vectors grows exponentially and testing the circuit takes too long. Testing methods that reduce the number of test vectors are therefore necessary. This paper presents a heuristic approach to testing a ripple carry adder. ModelSim and Xilinx tools are used to verify and synthesize the design.

KEYWORDS

Ripple carry Adder, Test vectors, Modelsim Simulator

1. INTRODUCTION

The complexity of VLSI technology has reached the point where we are trying to put 100 million transistors on a single chip and to increase the on-chip clock frequency to 1 GHz. Transistor feature sizes on a VLSI chip shrink by roughly 10.5% per year, resulting in a transistor density increase of roughly 22.1% every year. An almost equal amount of increase is provided by wafer and chip size increases and by circuit design and process innovations. This is evident from the 44% yearly increase in transistors on microprocessor chips, which amounts to a little over doubling every two years [3]. The doubling of transistors on an integrated circuit every 18 to 24 months has been known as Moore's law since the mid-1970s. Although many have predicted its end, it continues to hold, which leads to several results [1].

The reduction in feature size increases the probability that a manufacturing defect in the IC will result in a faulty chip [2]. A very small defect can easily result in a faulty transistor or interconnecting wire when the feature size is less than 100 nm. Furthermore, it takes only one faulty transistor or wire to make the entire chip fail to function properly or at the required operating frequency. Defects created during the manufacturing process are unavoidable, and, as a result, some number of ICs is expected to be faulty. Therefore, testing is required to guarantee fault-free products, regardless of whether the product is a VLSI device or an electronic system composed of many VLSI devices. It is also necessary to test components at various stages during the manufacturing process. For example, in order to produce an electronic system, we must produce ICs, use these ICs to assemble printed circuit boards (PCBs), and then use the PCBs to assemble the system. There is general agreement with the rule of ten, which says that the cost of detecting a faulty IC increases by an order of magnitude as we move through each stage of manufacturing, from device level to board level to system level and finally to system operation in the field. Electronic testing includes IC testing, PCB testing, and system testing at the various manufacturing stages and, in some cases, during system operation. Testing is used not only to find the fault-free devices, PCBs, and systems but also to improve production yield at the various stages of manufacturing by analyzing the cause of defects when faults are encountered. In some systems, periodic testing is performed to ensure fault-free system operation and to initiate repair procedures when faults are detected. Hence, VLSI testing is important to designers, product engineers, test engineers, managers, manufacturers, and end-users [1].

DOI: 10.5121/ijci.2016.5433

Fig. 1 explains the basic principle of digital testing. Binary patterns (or test vectors) are applied to

the inputs of the circuit. The response of the circuit is compared with the expected response [4, 5].

The circuit is considered good if the responses match. Otherwise the circuit is faulty. Obviously,

the quality of the tested circuit will depend upon the thoroughness of the test vectors. Generation

and evaluation of test vectors is one of the main objectives of this paper. VLSI chip testing is an

important part of the manufacturing process.

Fig.1. Principle of Testing
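The compare-against-expected principle described above can be sketched in a few lines of Python. This is only an illustration: the reference model `full_adder`, the defective device `faulty_full_adder` (input b stuck at 1), and the four vectors are hypothetical choices made for this example and are not taken from the paper.

```python
def full_adder(a, b, cin):
    """Fault-free reference model: returns (sum, carry_out)."""
    return a ^ b ^ cin, (a & b) | (b & cin) | (a & cin)


def faulty_full_adder(a, b, cin):
    """Hypothetical defective device: input b is stuck at 1."""
    return full_adder(a, 1, cin)


def run_test(device, vectors):
    """Apply each vector and compare the response with the expected one."""
    failures = []
    for vec in vectors:
        expected = full_adder(*vec)   # expected response from the reference model
        response = device(*vec)       # actual response of the device under test
        if response != expected:
            failures.append((vec, expected, response))
    return failures


# Hypothetical test set; each vector is (a, b, cin).
vectors = [(0, 0, 0), (1, 0, 0), (0, 1, 1), (1, 1, 1)]
good = run_test(full_adder, vectors)
bad = run_test(faulty_full_adder, vectors)
print("good device failures:", good)
print("faulty device failures:", bad)
```

Only the vectors with b = 0 expose this particular fault, which illustrates why the quality of the test depends on the thoroughness of the vector set.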

2. PROPOSED SYSTEM

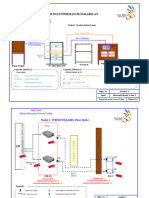

Simulation serves two distinct purposes in electronic design. First, it is used to verify the

correctness of the design and second, it verifies the tests. The simulation process is illustrated in

Figure 2. The process of realizing an electronic system begins with its specification, which

describes the input/output electrical behaviour (logical, analog, and timing) and other characteristics (physical, environmental, etc.).

The specification is the starting point for the design activity. The process of synthesis produces an

interconnection of components called a net list. The design is verified by a true-value simulator.

True-value means that the simulator will compute the response for given input stimuli without

injecting any faults in the design. The input stimuli are also based on the specification.

Typically, these stimuli correspond to those input and output specifications that are either critical

or considered risky by the synthesis procedures. A frequently used strategy is to exercise all

functions with only critical data patterns. This is because the simulation of the exhaustive set of data patterns can be too expensive. However, the definition of "critical" often depends on the designer's heuristics.

The true-value simulator in Figure 2 computes the responses that a circuit would have produced

if the given input stimuli were applied. In a typical design verification scenario, the computed

responses are analyzed (either automatically, or interactively, or manually) to verify that the

designed net list performs according to the specification. If errors are found, suitable changes are

made, until responses to all stimuli match the specification.

Fig. 2. Block diagram for Design Verification

2.1. Test vector Minimization method

A combinational logic circuit is designed to add two 4-bit binary numbers. This circuit has 9 binary inputs (two 4-bit operands and a carry-in) and 5 outputs. Exhaustive verification of an n-input circuit requires 2^n input vectors, i.e., 2^9 = 512 here: to completely verify the correctness of the implemented logic, we must simulate all 512 input vectors and check that each produces the correct sum output.
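As a rough illustration of exhaustive verification, the following Python sketch models a ripple carry adder as a chain of full adders and checks every input vector against ordinary integer addition. The function names and the software model are assumptions made for this example; the paper itself uses ModelSim simulation of the hardware design.

```python
from itertools import product

WIDTH = 4  # 4-bit operands, as in the text


def full_adder(a, b, cin):
    """Returns (sum, carry_out) for one-bit inputs."""
    return a ^ b ^ cin, (a & b) | (b & cin) | (a & cin)


def ripple_carry_add(a, b, cin, width=WIDTH):
    """Chain `width` full adders; returns (sum bits as an int, carry_out)."""
    total, carry = 0, cin
    for i in range(width):
        s, carry = full_adder((a >> i) & 1, (b >> i) & 1, carry)
        total |= s << i
    return total, carry


def exhaustive_check(width=WIDTH):
    """Simulate every input vector and compare against integer addition."""
    count = 0
    operands = range(1 << width)
    for a, b, cin in product(operands, operands, (0, 1)):
        total, carry = ripple_carry_add(a, b, cin, width)
        assert (carry << width) | total == a + b + cin
        count += 1
    return count


print(exhaustive_check())  # number of vectors simulated: 2**(2*WIDTH + 1)
```

For a width of w bits the loop runs 2^(2w+1) times, so each additional operand bit multiplies the simulation effort by four.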

This is manageable for a 4-bit adder, but it quickly becomes impractical as the word width grows. So, the designer must simulate some selected vectors. For example, one may add pairs of integers where both are non-zero, one is zero, and both are zero, as shown in Fig. 3, and then add a large number of randomly generated integer pairs. Such heuristics, though they seem arbitrary, can effectively find many possible errors in the designed logic. The next example illustrates a rather simple heuristic.


Fig.3. Binary values applied to the FAs

Design verification heuristic for a ripple-carry adder: Fig. 4 shows the logic design of an adder circuit. The basic building block in this design is a full adder that adds two data bits and one carry bit to produce sum and carry outputs. For logic verification, one possible strategy is to select a set of vectors that will apply all possible inputs to each full adder block. For example, we can fix the carry input and apply all four combinations of the two data bits, as shown in Fig. 3.

Fig.4. Heuristic method for test minimization
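One way to realize the strategy of applying all possible inputs to each full adder block is sketched below in Python. The eight vectors built by `heuristic_vectors` are one possible construction chosen for this example (six constant patterns plus two alternating patterns); they are an assumption and not necessarily the exact vectors used in the paper. The helper `covers_all_stages` checks that every full adder in the chain sees all eight (a, b, carry-in) combinations.

```python
def full_adder(a, b, cin):
    """Returns (sum, carry_out) for one-bit inputs."""
    return a ^ b ^ cin, (a & b) | (b & cin) | (a & cin)


def heuristic_vectors(width):
    """Eight (a, b, cin) vectors with a regular pattern (illustrative choice)."""
    ones = (1 << width) - 1
    even = sum(1 << i for i in range(0, width, 2))  # ...0101
    odd = ones ^ even                               # ...1010
    return [
        (0, 0, 0), (0, ones, 0), (ones, 0, 0),           # stages see 000, 010, 100
        (0, ones, 1), (ones, 0, 1), (ones, ones, 1),     # stages see 011, 101, 111
        (odd, odd, 1), (even, even, 0),                  # stages alternate 001 / 110
    ]


def stage_inputs(a, b, cin, width):
    """Inputs (a_i, b_i, c_i) seen by each full adder for one vector."""
    seen, carry = [], cin
    for i in range(width):
        ai, bi = (a >> i) & 1, (b >> i) & 1
        seen.append((ai, bi, carry))
        _, carry = full_adder(ai, bi, carry)
    return seen


def covers_all_stages(width):
    """True if every full adder receives all 8 input combinations."""
    per_stage = [set() for _ in range(width)]
    for a, b, cin in heuristic_vectors(width):
        for i, combo in enumerate(stage_inputs(a, b, cin, width)):
            per_stage[i].add(combo)
    return all(len(s) == 8 for s in per_stage)


for width in (4, 16, 64):
    print(width, len(heuristic_vectors(width)), covers_all_stages(width))
```

In this construction the six constant patterns keep the carry chain in a steady state, while the two alternating patterns supply the remaining two combinations at every stage, so the vector count stays at eight for any width.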

Test vectors are generated from the logic block. A stuck-at-1 (SA1) or stuck-at-0 (SA0) fault is injected at one input of a full adder through a 2:1 multiplexer. If the multiplexer select input is 0, the true bit is selected; if it is 1, the faulty value is applied to the input of the full adder. The full adder generates the associated carry, and the fault-free and faulty outputs are compared. If the outputs differ, the fault is detected. Depending on the input, the carry propagates and therefore also affects the next adder stage. 8 test vectors are sufficient to test the circuit. One significant advantage of simulation is that all internal signals of the circuit can be examined. This reduces the complexity of verification. The regular pattern in each vector allows us to expand the width of the vector to an adder of arbitrarily large size without increasing the number of vectors.
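The multiplexer-based fault injection can be approximated in software as follows. This is a minimal sketch under stated assumptions: the fault is placed on the `a` input of one full adder, `mux2` is a hypothetical model of the 2:1 multiplexer, and `VECTORS` is an illustrative set of eight regular-pattern vectors for a 4-bit adder rather than the paper's own test set.

```python
def full_adder(a, b, cin):
    """Returns (sum, carry_out) for one-bit inputs."""
    return a ^ b ^ cin, (a & b) | (b & cin) | (a & cin)


def mux2(select, true_bit, fault_bit):
    """2:1 multiplexer: select=0 passes the true bit, select=1 the faulty value."""
    return fault_bit if select else true_bit


def ripple_carry_add(a, b, cin, width, fault=None):
    """fault=(stage, stuck_value) forces input a of that stage; None is fault-free."""
    total, carry = 0, cin
    for i in range(width):
        ai, bi = (a >> i) & 1, (b >> i) & 1
        if fault is not None:
            stage, stuck = fault
            ai = mux2(i == stage, ai, stuck)
        s, carry = full_adder(ai, bi, carry)
        total |= s << i
    return total, carry


def detecting_vectors(vectors, width, fault):
    """Vectors whose faulty response differs from the fault-free response."""
    return [v for v in vectors
            if ripple_carry_add(*v, width) != ripple_carry_add(*v, width, fault)]


# Illustrative 8-vector set for a 4-bit adder; each entry is (a, b, cin).
VECTORS = [(0, 0, 0), (0, 15, 0), (15, 0, 0), (0, 15, 1),
           (15, 0, 1), (15, 15, 1), (10, 10, 1), (5, 5, 0)]

# SA1 on input a of the 3rd full adder (stage index 2).
print(detecting_vectors(VECTORS, 4, (2, 1)))
```

Because the sum output of a full adder is the XOR of its three inputs, any vector that drives the faulty input to the opposite value changes the sum bit of that stage, so the comparison flags the fault.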

3. RESULTS AND DISCUSSIONS

An SA1 fault is injected into the 3rd full adder of the circuit, and the fault-free and faulty outputs are observed. If the two differ, the fault is identified; it is marked in the diagrams shown in Fig. 5 and Fig. 6.

Fig. 5. Fault is detected at the output of the full adder.

Fig. 6. Fault is detected at the output of the full adder.

The output is analyzed at the output of the full adder; the fault propagates to the next full adder, with a final carry-out of 0.

3.1. Register transfer level (RTL) diagram generated by the tool, shown in Fig. 7

Fig. 7. Register transfer level diagram of a Ripple Carry Adder

The dynamic power, leakage power, and switching power given by the tool are shown in the report.

Dynamic power, leakage power, and switching power report.

4. CONCLUSIONS

A test vector minimization method is presented in this paper. A heuristic method is used to reduce the test vectors of a ripple carry adder, and testing of the ripple carry adder is performed. This method reduces the number of test vectors needed to detect the faults. The regular pattern allows us to expand the width of the vector to an adder of arbitrarily large size without increasing the number of vectors. Design Compiler, ModelSim and Xilinx tools are used to analyze the method.


REFERENCES

[1] M. L. Bushnell, Vishwani D. Agrawal, Essentials of Electronic Testing for Digital, Memory & Mixed-Signal VLSI Circuits: Springer.

[2] L.-T. Wang, C.-W. Wu, VLSI Test Principles and Architectures: Design for Testability. San Francisco, CA: Morgan Kaufmann Publishers, 2006.

[3] K.T. Cheng, S. Dey, M. Rodgers, and K. Roy, Test Challenges for Deep Sub-micron Technologies, in Proc. 37th Design Automation Conf., June 2000, pp. 142-149.

[4] SM. Thamarai, K. Kuppusamy, T. Meyyappan, A New Algorithm for Fault based Test Minimization in Combinational Circuits, International Journal of Computing and Applications, ISSN 0973-5704, Vol. 5, No. 1, pp. 77-88, June 2010.

[5] B. Pratyusha, J.L.V. Ramana Kumari, Dr. M. Asha Rani, FPGA Implementation of High Speed Test Pattern Generator For BIST. International Journal of Engineering Research and Technology, ISSN: 2278-0181, Vol. 2, Issue 11, Nov-2013.
