Agent in ODI

Uploaded by Manohar Reddy

40 visualizzazioni4 paginehi

Copyright

© © All Rights Reserved

Formati disponibili

DOCX, PDF, TXT o leggi online da Scribd

Condividi questo documento

Condividi o incorpora il documento

Hai trovato utile questo documento?

Questo contenuto è inappropriato?

Segnala questo documentohi

Copyright:

© All Rights Reserved

Formati disponibili

Scarica in formato DOCX, PDF, TXT o leggi online su Scribd

0 valutazioniIl 0% ha trovato utile questo documento (0 voti)

40 visualizzazioni4 pagineAgent in ODI

Caricato da

Manohar Reddyhi

Copyright:

© All Rights Reserved

Formati disponibili

Scarica in formato DOCX, PDF, TXT o leggi online su Scribd

Sei sulla pagina 1di 4

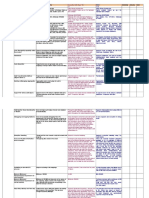

Agent in ODI

1 - If I have an agent running as a "scheduler" agent, do I also have to set up a separate "listener" agent, or will the scheduler do both tasks?

2 - When setting up the ODIPARAMS.bat file for use in scheduling, you have to define a work repository. What happens if you have multiple work repositories (i.e. for dev, test and prod)? Or does it not make any difference which work repository it is using (in which case, what is the point of specifying a work repository)?

1) A "scheduler" agent has all the functionality of a "listener" agent as well, so you don't need two.

2) Because you tie a scheduler agent to a specific repository, you should create a separate scheduler agent for each of your repositories. (Although it is advised against, a scheduler agent can act as a listener agent for tasks in any repository, because the repository information is passed to the agent as part of the scenario invocation parameters.)

There are three modes to distinguish:

- "listener" type agent, which can "work for anybody": you pass it all parameters at invocation time.

- "scheduler" type agent, which takes its parameters from the odiparams file in order to connect to the repository and read its schedules.

- "command line", where you invoke a scenario from the command line (startscen). In this case it also reads the odiparams file for the repository info, so you don't have to pass all of it on the command line.

If you want multiple scheduler agents, you set up an additional odiparams.bat/sh file for each one, together with a corresponding scheduleragent.bat/sh that calls the appropriate odiparams file.
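The one-launcher-per-repository layout described above can be pictured with a small sketch. Everything named here is a hypothetical illustration: the install directory, the `_dev`/`_test`/`_prod` file suffixes and the `WORKREP_*` repository names are assumptions, not ODI defaults. The code only models which launcher script pairs with which parameter file; it does not start an agent.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class SchedulerAgent:
    """One scheduler agent bound to one work repository (all names hypothetical)."""
    work_repository: str
    params_file: Path   # environment-specific copy of the odiparams file
    launcher: Path      # copy of the scheduler script that calls params_file


def agents_for(environments, bin_dir):
    """Build one agent description per environment."""
    agents = {}
    for env in environments:
        agents[env] = SchedulerAgent(
            work_repository=f"WORKREP_{env.upper()}",
            params_file=bin_dir / f"odiparams_{env}.sh",
            launcher=bin_dir / f"scheduleragent_{env}.sh",
        )
    return agents


# Hypothetical install directory; adjust to the real one.
agents = agents_for(["dev", "test", "prod"], Path("/opt/odi/bin"))
for env, agent in agents.items():
    print(env, agent.launcher.name, "->", agent.params_file.name, agent.work_repository)
```

Keeping the pairing explicit makes it harder to start, say, the production scheduler against the development repository by accident.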

Faster and simpler development and maintenance: The declarative rules driven approach to data integration greatly reduces the learning curve of the product and increases developer productivity while facilitating ongoing maintenance. This approach separates the definition of the processes from their actual implementation, and separates the declarative rules (the "what") from the data flows (the "how").

Data quality firewall: Oracle Data Integrator ensures that faulty data is automatically detected and recycled before insertion in the target application. This is performed without the need for programming, following the data integrity rules and constraints defined both on the target application and in Oracle Data Integrator.

Better execution performance: Traditional data integration software (ETL) is based on proprietary engines that perform data transformations row by row, thus limiting performance. By implementing an E-LT architecture, based on your existing RDBMS engines and SQL, you are capable of executing data transformations on the target server at a set-based level, giving you much higher performance.

Simpler and more efficient architecture: The E-LT architecture removes the need for an ETL Server sitting between the sources and the target server. It utilizes the source and target servers to perform complex transformations, most of which happen in batch mode when the server is not busy processing end-user queries.

Platform Independence: Oracle Data Integrator supports all platforms, hardware and OSs with the same software.

Data Connectivity: Oracle Data Integrator supports all RDBMSs, including all leading Data Warehousing platforms such as Oracle, Exadata, Teradata, IBM DB2, Netezza and Sybase IQ, and numerous other technologies such as flat files, ERPs, LDAP and XML.

Cost-savings: The elimination of the ETL Server and ETL engine reduces both the initial hardware and software acquisition and maintenance costs. The reduced learning curve and increased developer productivity significantly reduce the overall labor costs of the project, as well as the cost of ongoing enhancements.
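To make the "set-based" and "data quality firewall" points above concrete, here is a minimal sketch using Python's built-in `sqlite3` as a stand-in for the target RDBMS. The table names (`src_orders`, `tgt_orders`, `err_orders`) and the rule being checked are assumptions for illustration. This is not the SQL that Oracle Data Integrator generates; it only shows the general idea of letting the database divert faulty rows and transform the valid ones in single statements instead of looping row by row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (id INTEGER, amount_cents INTEGER);
    CREATE TABLE tgt_orders (id INTEGER PRIMARY KEY, amount REAL NOT NULL);
    CREATE TABLE err_orders (id INTEGER, amount_cents INTEGER, reason TEXT);
    INSERT INTO src_orders VALUES (1, 1250), (2, NULL), (3, 990);
""")

# Data quality step: divert rows that would violate the target's NOT NULL rule.
conn.execute("""
    INSERT INTO err_orders (id, amount_cents, reason)
    SELECT id, amount_cents, 'amount is null'
    FROM src_orders
    WHERE amount_cents IS NULL
""")

# Set-based load: one statement transforms and inserts every valid row.
conn.execute("""
    INSERT INTO tgt_orders (id, amount)
    SELECT id, amount_cents / 100.0
    FROM src_orders
    WHERE amount_cents IS NOT NULL
""")
conn.commit()

loaded = conn.execute("SELECT id, amount FROM tgt_orders ORDER BY id").fetchall()
rejected = conn.execute("SELECT id, reason FROM err_orders").fetchall()
print(loaded)
print(rejected)
```

Both statements run inside the database engine, so no separate transformation server has to pull the rows out and push them back.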

Q) How many work repositories can be created in a project?

You can create multiple work repositories for a single master repository, so there is no specific limit. All work repositories use the information stored in the master repository (topology, agents, etc.). Projects are stored in a work repository, so you cannot access a project stored in work repository A from work repository B. You need to export the project and import it into the other work repository.

Q) What is a remote file in ODI, and what is its purpose?

A remote file is a file that resides on another system and that you access remotely from your local system. If ODI is installed on your local system and you want to process those remote files, you have to use an agent running on the remote system.

I have 10 interfaces in a package. If I get an error at the 6th interface, how can I roll back the previous 5 interfaces?

I have 10 interfaces in a package. If I get an error at the 6th interface, how can I restart from the 6th interface?

For the first one: you have to perform the DML operations of all interfaces in one transaction and commit only at the end, that is, after the 10th interface.
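The single-transaction idea can be sketched outside ODI with Python's built-in `sqlite3`. This is an illustration under assumptions: the `target` table and the `make_step` and `run_package` helpers are hypothetical, and each "interface" is reduced to one INSERT. The point is only that nothing is committed until the last step succeeds, so a failure at step 6 undoes steps 1 to 5 as well.

```python
import sqlite3


def run_package(conn, steps):
    """Run every step on one connection; commit only after the last one."""
    try:
        for step in steps:
            step(conn)
        conn.commit()
        return True
    except Exception:
        conn.rollback()  # undoes the work of all earlier steps too
        return False


def make_step(n, fail=False):
    """Hypothetical interface: inserts one row, or raises to simulate an error."""
    def step(conn):
        if fail:
            raise RuntimeError(f"interface {n} failed")
        conn.execute("INSERT INTO target (interface_no) VALUES (?)", (n,))
    return step


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE target (interface_no INTEGER)")
conn.commit()

steps = [make_step(n, fail=(n == 6)) for n in range(1, 11)]
ok = run_package(conn, steps)
rows = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
print(ok, rows)
```

After the simulated failure the target table is empty, even though five inserts had already run.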

For the second one: you have to delete the drop temp table step from the LKM, CKM and IKM (it is probably the last step of each knowledge module; check this on your side), so that whenever you restart the session all records are still available in your temp tables. At the same time, you have to arrange the transaction for the INSERT ROWS step (i.e. I$ to target table) so that this transaction will not be executed.
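The restart behaviour asked about above can be illustrated with a small, ODI-independent sketch. The `run_package` and `make_interface` helpers and the `state` flag are hypothetical, and the staged data that the answer says must survive in the temp tables is not modelled here. The sketch only shows the control flow: the run stops at the failing step, and the restart resumes from that step without repeating the ones that already completed.

```python
def run_package(steps, start_at=1):
    """Run steps in order from start_at; return the failed step number, or None."""
    for number in range(start_at, len(steps) + 1):
        try:
            steps[number - 1]()
        except Exception:
            return number
    return None


executed = []             # records which interfaces actually ran
state = {"fixed": False}  # flips to True once the cause of the error is corrected


def make_interface(n):
    """Hypothetical interface: number 6 fails until the problem is fixed."""
    def interface():
        if n == 6 and not state["fixed"]:
            raise RuntimeError("interface 6 failed")
        executed.append(n)
    return interface


steps = [make_interface(n) for n in range(1, 11)]

failed_at = run_package(steps)                    # stops at interface 6
state["fixed"] = True                             # correct the cause of the error
result = run_package(steps, start_at=failed_at)   # resume from the failed step
print(failed_at, result, executed)
```

Each interface appears exactly once in `executed`, which is why the data staged by the earlier steps has to still be there when the restart happens.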
