Globecom 2012 - Wireless Networking Symposium

Distributed Mobility Management Scheme with Mobility Routing Function at the Gateways

Petro P. Ernest¹, H. Anthony Chan², Olabisi E. Falowo¹

¹Department of Electrical Engineering, University of Cape Town, Rondebosch 7701, South Africa

²Futurewei Technologies, Plano, Texas, USA

Emails: prugambwa@yahoo.com; h.a.chan@ieee.org; bisi@crg.ee.uct.ac.za

Abstract: The current IP mobility management solutions standardized by the IETF rely on a centralized and static mobility anchor point to manage mobility signaling and forward data traffic. This approach requires all data traffic sent to a Mobile Node (MN) to travel via the central anchor point regardless of where the MN is currently attached in the Internet. As a result, packet delivery latency increases because packets follow a non-optimal path via the mobility anchor point in the MN's home network. This paper develops a distributed mobility management scheme that relieves the burden on the central mobility anchor by distributing the mobility routing function to the gateway of each sub-network. The scheme optimizes the data path for an MN that has moved to a visited network and hence reduces the packet delivery latency. The paper presents in detail the scheme design, operational mechanism, and performance evaluation through simulation. The simulation results show improved packet delivery latency compared with the non-optimized scheme under both lightly loaded and heavily loaded network conditions.

Index Terms: IP mobility management, distributed mobility management

I. INTRODUCTION

Wireless networks are being challenged by a rapidly increasing volume of data traffic, driven by the growing number of mobile subscribers accessing wireless data services and by the spread of smartphones and other mobile-broadband-capable devices. To meet the demands of the increasing number of mobile users and the growing data traffic volume, a distributed IP mobility management solution is required [1].

The current IP mobility management solutions make use of a centralized and static mobility anchor point, such as the Home Agent (HA) in Mobile IPv6 [2] and the Local Mobility Anchor (LMA) in PMIPv6 [3], to manage both mobility signaling and data traffic forwarding. The centralized mobility anchor point is typically located in the MN's home network. All data traffic destined to the MN's home address (HoA) is intercepted by the central mobility anchor and forwarded to the MN's current point of attachment. Routing the data traffic via the central mobility anchor in the MN's home network to an MN in a visited network that is far away from the home network and closer to the correspondent node (CN) may lead to a sub-optimal routing path and longer packet delivery latency. Distributed Mobility Management (DMM) is one of the approaches to address these problems [1]. Enhancing the current IP mobility management solutions with a distributed mobility management approach can alleviate these problems [4], [5], [6], [7].

In this paper, we present in detail our Distributed Mobility

Management (DMM) scheme and evaluate its performance

through simulation. The scheme decomposes the mobility

management functions of the mobility anchor into location

management function and mobility routing function. Moreover,

the scheme co-locates the mobility routing function in the

gateway of each network or sub-network. The routing function

of the data-plane traffic of the MN is served by the local

mobility routing function at the gateway of the network.

The scheme maintains the MN location information in a distributed database consisting of multiple servers in multiple subnets, each in charge of a range of HoAs. The distributed route information database enables each visiting MN to remain reachable at its HoA and enables optimization of the packet routing path.

In summary, the contributions of this paper are as follows: (i) a detailed explanation of the design and functional operation of the proposed scheme, which co-locates the mobility routing function at the gateways; and (ii) a comprehensive simulation study of the performance benefit of the proposed scheme under different network traffic loads and increasing distance from the MN's home network to the visited network.

The rest of this paper is organized as follows. Section II

discusses the related work. Section III presents in detail the

DMM scheme. Section IV describes the simulation setup and

results obtained from the simulation. Finally, Section V

concludes the paper.

II. RELATED WORK

Distributed mobility management is currently under active discussion in the IETF [8] as a way to address the limitations of the current IP mobility management solutions. The distributed mobility management approach distributes the mobility management functions of the central mobility anchor (HA/LMA) to different networks, bringing them closer to the users at the edge of the networks. The requirements for a distributed mobility management solution are discussed in [9].


978-1-4673-0921-9/12/$31.00 2012 IEEE


In [7], a mobility management scheme for a flat architecture is proposed. The scheme distributes the mobility management functions to the access part of the network, at the access router level. Implementation results under unloaded network conditions showed that the scheme outperforms MIPv6 [10]. However, the scheme employs static traffic anchoring, in which traffic flows remain anchored at the point of initial establishment regardless of where the MN has moved. Hence, when an MN with a long-lasting traffic flow moves far away from the traffic anchoring point, the resulting routing path can become longer.

The authors of [11] proposed two network-based distributed mobility management schemes: (1) fully distributed mobility management, which unifies the mobility functions of the LMA and the Mobile Access Gateway (MAG) in PMIPv6 at the access part of the network, and (2) partially distributed mobility management, which separates the data plane of PMIPv6 from the control plane and distributes the data plane at the access part of the network. Nevertheless, static traffic anchoring remains an unsolved problem, which can result in a long routing path.

III. OUR DMM SCHEME APPROACH

In this section we propose a DMM scheme that decomposes the mobility function of the mobility anchor (HA or LMA) into three tasks: (a) allocation of a home network prefix (HNP), or HoA, to an MN that registers with the network; (b) the internetwork location management (LM) function: managing and keeping track of the internetwork location of an MN, which includes a mapping of the HNP (or HoA) to the mobility anchoring point that the MN is anchored to; and (c) the mobility routing (MR) function: intercepting packets to/from an MN's HoA and forwarding them, based on the internetwork location information, either to the destination or to some other network element that knows how to forward them to the destination.

Fig. 1 illustrates the DMM functional architecture, which

consists of: (1) the gateway routers (GWs) where the mobility

routing (MR) function is co-located, (2) the distributed

databases (LM) that maintain the mobility routing information

of the visiting MN, and (3) the Mobile Access Gateways

(MAGs) at the access router level that detect the MN

attachment to the access network and track its movements.

The gateway routers keep the binding information between

the MN Home Network Prefix (MN-HNP) and the MAG to

which the MN attaches. The routing function of the data-plane

traffic of the MN is served by the local MR function at the

gateway of the network that the MN attaches to. When the MN

moves to a visited network, the MR function of the visited

network keeps track of how to route packets to the mobile node

in order to ensure that the MN is reachable in the visited

network.

Fig. 1. DMM architecture for network-based mobility management with

MR co-located at the gateways.

The LM function is a distributed database with multiple servers. The servers may individually be co-located with the HNP (or HoA) allocation function in each network. The servers in each network or sub-network keep the mapping of an MN's HNP to its care-of address (CoA) in the MN's visited network, which is the IP address of the gateway of the visited network. For example, when the MN is visiting the GW2 network, LM1 keeps the mapping between the MN-HNP and the GW2 IP address. Together, the routing information held in all networks forms the distributed routing information of all the visiting MNs. The gateways (GWs) get the location information of an MN in a visited network from the respective distributed database server.
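The LM lookup described above can be illustrated with a minimal Python sketch. It assumes that each LM server is responsible for one contiguous IPv6 prefix range and stores a mapping from MN-HNP to the visited gateway's address. The class name `LocationManager`, the helper `find_server`, and the documentation prefixes under `2001:db8::/32` are hypothetical and are not taken from the paper.

```python
import ipaddress


class LocationManager:
    """Toy LM server in charge of one range of home network prefixes (HNPs)."""

    def __init__(self, name, served_range):
        self.name = name
        self.served_range = ipaddress.ip_network(served_range)
        self.bindings = {}  # MN-HNP -> IP address of the visited gateway

    def serves(self, hnp):
        # An LM server is responsible for an HNP that falls inside its range.
        return ipaddress.ip_network(hnp).subnet_of(self.served_range)

    def update(self, hnp, gateway_addr):
        if not self.serves(hnp):
            raise ValueError(f"{hnp} is outside the range served by {self.name}")
        self.bindings[ipaddress.ip_network(hnp)] = ipaddress.ip_address(gateway_addr)

    def lookup(self, hnp):
        # Returns None when the MN has no binding, i.e. it is not visiting elsewhere.
        return self.bindings.get(ipaddress.ip_network(hnp))


def find_server(servers, hnp):
    """Pick the LM server whose range covers the given HNP (None if no server does)."""
    for server in servers:
        if server.serves(hnp):
            return server
    return None


# Assumed example: LM1 serves the GW1 network, the MN is visiting the GW2 network.
lm1 = LocationManager("LM1", "2001:db8:1::/48")
lm2 = LocationManager("LM2", "2001:db8:2::/48")
mn_hnp = "2001:db8:1:a::/64"
find_server([lm1, lm2], mn_hnp).update(mn_hnp, "2001:db8:2::1")
print(lm1.lookup(mn_hnp))
```

Keying the database on the prefix range means that a gateway only needs the MN-HNP to decide which server to query, which matches the assumption in the text that each server is in charge of a range of HoAs.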

The MAG tracks the MN movement to and from the access

network and performs the registration on behalf of the MN.

In the following subsections, we describe the MN registration procedure, the data flow, and the handover mechanism of our scheme.

A. MN Registration

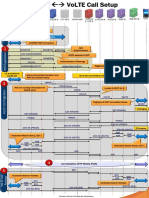

Fig. 2 illustrates the MN registration procedure in the DMM domain of the proposed scheme. When an MN enters a domain, the first MAG to which it attaches (i.e., MAG11) registers the MN with the gateway router, GW1, using the PMIPv6 [3] approach. MAG11 sends a proxy binding update (PBU) message to GW1. GW1, in consultation with LM1, then allocates the MN-HNP, caches the binding between the MN-HNP and MAG11, and returns a proxy binding acknowledgement (PBA) message to MAG11. On receiving the PBA message, MAG11 sends a router advertisement (RA) to the MN. Finally, the MN configures its HoA. It is important to note that the first network the MN attaches to assigns the home IP address (HoA) to the MN and hence assumes the role of the MN's home network. For example, if the MN first attaches to the GW1 network, the GW1 network is the home network for the MN.
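The registration exchange above can be sketched in Python under simplifying assumptions: messages are plain dictionaries, the HNP allocation that GW1 performs in consultation with LM1 is folded into the gateway object, and each MN receives the next free /64 from an assumed /48 pool. The names `Gateway`, `handle_pbu`, and `configure_hoa` are illustrative only.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Gateway:
    name: str
    pool: ipaddress.IPv6Network                   # range the network allocates HNPs from
    bindings: dict = field(default_factory=dict)  # MN ID -> (MN-HNP, MAG)
    allocated: int = 0                            # number of /64 prefixes handed out

    def handle_pbu(self, mn_id, mag):
        """Process a PBU from a local MAG and return the contents of the PBA."""
        if mn_id in self.bindings:
            hnp, _ = self.bindings[mn_id]         # MN already registered: keep its HNP
        else:
            # Stand-in for the allocation done with LM1: take the next /64 in the pool.
            # Pool exhaustion is not checked in this sketch.
            base = int(self.pool.network_address)
            hnp = ipaddress.IPv6Network((base + (self.allocated << 64), 64))
            self.allocated += 1
        self.bindings[mn_id] = (hnp, mag)         # cache the MN-HNP to MAG binding
        return {"type": "PBA", "mn_id": mn_id, "hnp": hnp}


def configure_hoa(hnp, interface_id):
    """MN side: build the HoA from the prefix advertised in the RA."""
    return hnp[interface_id]


gw1 = Gateway("GW1", ipaddress.IPv6Network("2001:db8:1::/48"))
pba = gw1.handle_pbu("mn-1", "MAG11")         # MAG11 sends the PBU, GW1 answers with a PBA
hoa = configure_hoa(pba["hnp"], 0x1)          # MAG11 relays the prefix in an RA
print(pba["hnp"], hoa)
```

Because the HNP is allocated by the first network the MN attaches to, that network implicitly becomes the home network, which is the property the later handover procedure relies on.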


Fig. 2. MN registration in DMM domain.

B. Data flow after the MN registration

Fig. 3 illustrates the packet flow from the CN to the MN when the MN is in its home network (GW1 network), before the MN performs a handover. When the CN sends a packet destined to the MN's HoA (MN-HoA), the packet arrives at MAG31, which forwards it to GW3. On receiving the packet, GW3 checks its cache for MN-HoA binding information. If the binding information is not found, GW3 uses its routing table to route the packet to the MN's home network (GW1) based on the MN-HoA prefix. If the binding information is found, GW3 uses the cached information to tunnel the packet to the destination network.

When the packet arrives at GW1, GW1 checks its cache for the MN-HoA and recognizes that the MN is still in its network because the MN has not moved to the visited network (GW2 network). GW1 then tunnels the packet to MAG11, which delivers it to the MN.

Fig. 3. Data flows when the MN is in home network.

C. MN handover to visited network

Fig. 4 shows the signaling flow when the MN hands over from the home network to the visited network. When the MN moves to the GW2 network (visited network), it attaches to MAG21. MAG21 then acquires the MN's ID and HNP and presents them to GW2 by sending a PBU message. On receiving the PBU message, GW2 caches the binding between the MN-HNP and MAG21 and checks whether the MN-HNP belongs to its network by comparing its own prefix(es) with the MN-HNP. Because the MN is visiting this network, GW2 finds that the MN-HNP does not belong to its network prefix(es); hence, GW2 derives the MN's home network from the MN-HNP. GW2 then sends a notification to the MN's home network as a PBU message. The notification includes the MN's ID and the MN-HNP. It is assumed that each GW network manages a unique set of HNP prefixes and that each network knows which HNP prefix belongs to which network.

When the MN's home network receives the PBU message, it caches the binding between the MN-HNP and GW2 in LM1 and returns a PBA message with the MN-HNP. On receiving the PBA message, GW2 sends a PBA message to MAG21 that includes the HNP assigned in the GW1 network. This allows the MN to maintain the same IP address as it moves across the proposed DMM domain. When MAG21 receives the PBA message, it sends an RA with the IP prefix that the MN configured in its home network. To the MN, it therefore appears as if it is still in its home network.

Fig. 4. Signaling flow diagram when an MN moves to visited network. [Diagram entities: MN, MAG21, GW2, GW1, LM1. Steps: MN attaches; MAG21 gets MN-ID and MN-HNP; PBU (MN-ID, MN-HNP) from MAG21 to GW2; GW2 derives the MN home network using the HNP and caches the MAG21:HNP binding; PBU (MN-ID, MN-HNP) from GW2 to GW1; MN binding (MN-HNP: GW2) cached in LM1; PBA (MN-ID, MN-HNP) from GW1 to GW2; PBA (MN-ID, MN-HNP) from GW2 to MAG21; RA to the MN.]
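The handover signaling of Section III-C can be sketched as follows. This is a simplified model under stated assumptions: a shared `prefix_table` dictionary stands in for the assumption that every network knows which HNP range belongs to which gateway, the `lm` dictionary stands in for the LM server of each network, and the method names `pbu_from_mag` and `pbu_from_gateway` are hypothetical.

```python
import ipaddress


class Gateway:
    def __init__(self, name, own_prefix, prefix_table):
        self.name = name
        self.own_prefix = ipaddress.ip_network(own_prefix)
        self.prefix_table = prefix_table   # shared: network prefix -> Gateway
        self.mag_bindings = {}             # MN-HNP -> local MAG serving the MN
        self.lm = {}                       # MN-HNP -> name of the visited gateway
        prefix_table[self.own_prefix] = self

    def find_home(self, hnp):
        """Derive the MN's home gateway from its HNP."""
        for prefix, gateway in self.prefix_table.items():
            if hnp.subnet_of(prefix):
                return gateway
        raise LookupError(f"no network owns {hnp}")

    def pbu_from_mag(self, mn_id, hnp, mag):
        """PBU from a local MAG; returns the PBA that is sent back to the MAG."""
        hnp = ipaddress.ip_network(hnp)
        self.mag_bindings[hnp] = mag
        if hnp.subnet_of(self.own_prefix):
            return {"type": "PBA", "mn_id": mn_id, "hnp": hnp}  # MN is at home
        # Visiting MN: notify the home network, then relay its PBA to the MAG.
        return self.find_home(hnp).pbu_from_gateway(mn_id, hnp, self)

    def pbu_from_gateway(self, mn_id, hnp, visited_gw):
        """PBU from a visited gateway; the home network records the new location."""
        self.lm[hnp] = visited_gw.name
        return {"type": "PBA", "mn_id": mn_id, "hnp": hnp}


table = {}
gw1 = Gateway("GW1", "2001:db8:1::/48", table)
gw2 = Gateway("GW2", "2001:db8:2::/48", table)
mn_hnp = ipaddress.ip_network("2001:db8:1:a::/64")   # assigned earlier by GW1

pba = gw2.pbu_from_mag("mn-1", mn_hnp, "MAG21")      # MN attaches to MAG21
print(gw1.lm[mn_hnp], pba["hnp"])
```

The PBA returned to MAG21 carries the prefix assigned in the home network, so the RA that follows advertises the same prefix the MN already uses.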

D. Data flow from the CN to the MN after handover to visited

network and route optimization process

Fig. 5 shows the data flow from the CN to the MN when the MN has moved to the GW2 network with ongoing communication. When a packet arrives at GW1 and GW1 finds that the MN has moved to the GW2 network, GW1 gets the MN's current location information from LM1 and tunnels the packet to GW2, which in turn delivers the packet to the MN. Simultaneously, GW1 sends the location binding update information, PBU (GW2, MN-HNP), to GW3 based on the source IP address found in the received packet. On receiving the location binding information that contains the MN's current location, GW3 caches this information and sets up the tunnel end point to GW2. GW3 then tunnels subsequent packets directly to GW2. When GW2 receives a packet, it caches the GW3 address from the source IP address of the outer header of the encapsulated packet, decapsulates the packet, and tunnels it to MAG21. Finally, MAG21 decapsulates the packet and delivers it to the MN. The cached information is deleted after a timeout when there is no more packet flow.

The MN's location information sent to GW3 by GW1 enables optimization of the routing path. After the location update at GW3, the packet routing path is therefore CN-GW3-GW2-MN. However, a few packets still go through GW1 during the interval between the arrival at GW1 of the first packet after the MN's handover and the moment GW3 receives the update information about the MN's visited network. This is the interval during which the routing path is being optimized.
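The route optimization step can be illustrated with a small Python sketch that tracks only the sequence of gateways a packet traverses. It makes two simplifying assumptions that differ from the scheme as described: the location update from GW1 reaches GW3 instantly, so exactly one packet takes the detour, and the cache timeout is not modeled. The function name `forward` and the dictionary-based cache and LM are hypothetical.

```python
def forward(hnp, cn_gw_cache, lm, cn_gw="GW3", home_gw="GW1"):
    """Return the gateways traversed by one CN-to-MN packet.

    cn_gw_cache: binding cache of the CN-side gateway, MN-HNP -> gateway name.
    lm: location database of the home network, MN-HNP -> visited gateway name.
    """
    # Binding found: the CN-side gateway tunnels straight to the cached gateway.
    if hnp in cn_gw_cache:
        return [cn_gw, cn_gw_cache[hnp]]

    # No binding: route towards the home network based on the prefix.
    path = [cn_gw, home_gw]
    visited = lm.get(hnp)
    if visited is not None and visited != home_gw:
        path.append(visited)          # home gateway tunnels the packet onward
        cn_gw_cache[hnp] = visited    # and sends PBU (visited GW, MN-HNP) to the CN side
    return path


lm1 = {"2001:db8:1:a::/64": "GW2"}    # MN has moved to the GW2 network
gw3_cache = {}

first = forward("2001:db8:1:a::/64", gw3_cache, lm1)
second = forward("2001:db8:1:a::/64", gw3_cache, lm1)
print(first, second)
```

In the actual scheme the update takes a finite time to reach GW3, so a few packets rather than one follow the longer path before the direct tunnel is used.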

Fig. 5. Data flows from CN to MN before route optimization and after route optimization.

IV. DMM SCHEME PERFORMANCE EVALUATION

In this section, we evaluate the performance of the proposed scheme using the NS-2.29 network simulator with the NIST PMIPv6 package [12], [13]. We extended the simulator to support the distributed mobility scenarios.

In the Internet, packet delivery latency is a significant parameter affecting users' satisfaction with a service. Therefore, packet delivery latency is used as the main performance evaluation metric. We define the packet delivery latency as the length of time a packet takes to travel from the source node to the destination node, for example, the latency a packet incurs when travelling from the CN to the MN.

We consider a scenario where the MN is far away from its home network and is in a visited network that is closer to the CN's network. We therefore consider two routing paths that a packet may follow when travelling from the CN to the MN, named the optimal path and the non-optimal (sub-optimal) path. On the non-optimal path, a packet sent to the MN while it is away from its home network travels via the MN's home network (GW1 network) and is then tunneled to the network the MN is currently visiting (GW2 network), as shown in Fig. 5. On the optimal path, the packet travels directly from the CN's network to the MN's currently visited network. The optimal path is obtained by optimizing the route soon after the MN hands over to the visited network, as presented in Section III and illustrated in Fig. 5. Both paths are simulated under identical traffic loads, and their packet delivery latency is compared.

Fig. 6. Simulation network topology in NS-2.

A. Simulation configuration

Fig. 6 shows the simulated network topology in NS-2. In the simulation, the LM and the MR were co-located at GW1, and the MR function was distributed to all other gateways. The GW1 network represents the MN's home network, the GW2 network is the MN's visited network, and the GW3 network is the network where the CN resides.

To reflect the fact that the MN's home network is far away from the MN's visited network, intermediate routers (R0, R1 and R2) were placed in the path to the MN's home network, as illustrated in Fig. 6. To bring the simulation closer to real Internet behavior, four nodes were connected to the inputs of each intermediate router (on both input sides). The nodes p11 to p14 through p61 to p64 generate background traffic with an exponential distribution to congest the buffer at the router output toward the link, by sending traffic to the sink (null) station connected to the next router. Under the heavily loaded network condition, each node generates a traffic load of 18.75 Mbps, so that a total load of 75 Mbps (75% of the transmission capacity) is generated at the buffer of each intermediate router on both output sides toward the link. For example, the sink station sink_p11 absorbs the background traffic generated by nodes p11, p12, p13 and p14.

All wired links were configured with a bandwidth of 100 Mbps. The delay of the wired links between intermediate routers was set to 0.25 ms, and the delay of all other wired links was set to 0.1 ms. The CN sends UDP CBR traffic to the MN with a packet size of 1000 bytes every 0.01 s. The MN was configured to move at a speed of 30 m/s from its home network to the visited network.
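As a quick check of the traffic figures in this configuration, the following Python snippet recomputes the background load per router output, the resulting link utilization, the rate of the CN's CBR flow, and the transmission time of one packet on a 100 Mbps link. It assumes that the 1000-byte packet size is the size sent on the link, ignoring header overhead; the constant names are illustrative.

```python
LINK_CAPACITY_MBPS = 100.0     # bandwidth of every wired link
NODES_PER_ROUTER_SIDE = 4      # background nodes feeding one router output
NODE_LOAD_MBPS = 18.75         # load per background node (heavily loaded case)
PACKET_SIZE_BYTES = 1000       # CBR packet size (assumed to be the size on the link)
PACKET_INTERVAL_S = 0.01       # one CBR packet every 10 ms

# Aggregate background load and utilization at one router output.
background_mbps = NODES_PER_ROUTER_SIDE * NODE_LOAD_MBPS
utilization = background_mbps / LINK_CAPACITY_MBPS

# Rate of the CN-to-MN flow and the time needed to serialize one packet.
cbr_rate_mbps = PACKET_SIZE_BYTES * 8 / PACKET_INTERVAL_S / 1e6
tx_delay_ms = PACKET_SIZE_BYTES * 8 / (LINK_CAPACITY_MBPS * 1e6) * 1e3

print(f"background load: {background_mbps:.2f} Mbps ({utilization:.0%} of capacity)")
print(f"CBR flow rate:   {cbr_rate_mbps:.2f} Mbps")
print(f"per-link transmission delay: {tx_delay_ms:.3f} ms")
```

The measured flow is small compared with the background load (about 0.8 Mbps against 75 Mbps), so the queuing it experiences is dominated by the background traffic.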

The topological distance from the MN's home network to the MN's visited network was varied by increasing the number of intermediate routers in the path to the MN's home network. Three and six intermediate routers were used, respectively, to emulate the increase in topological distance between the MN's visited network and its home network. For each setting, the number of background traffic nodes was increased accordingly, and the nodes were configured in the same manner to generate congestion at each intermediate router.

B. Simulation results

Fig. 7 illustrates the effect of background traffic load (congestion) on packet delivery latency for both the non-optimal path and the optimal path. The packet delivery latency for packets travelling through the non-optimal path increases as the background traffic load increases. Furthermore, the packet delivery latency on the non-optimal path shows increased randomness. The reason is that a packet travelling through the non-optimal path encounters many intermediate nodes and a longer path. Both contribute to the transmission delay at each intermediate node and the propagation delay of the links between the nodes. In addition, the increased background traffic load results in additional delay due to processing and queuing at the intermediate nodes, which further increases the packet delivery latency experienced on the non-optimal path.
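The delay components named above can be combined into the usual additive per-link model. The notation below is an assumption introduced for illustration and does not appear in the paper: $N$ is the number of links on the path, $L$ the packet size in bits, $R_i$ the bandwidth of link $i$, and $d_i^{\mathrm{prop}}$, $d_i^{\mathrm{proc}}$, $d_i^{\mathrm{queue}}$ the propagation, processing, and queuing delays at hop $i$.

```latex
D_{\mathrm{path}} \;=\; \sum_{i=1}^{N} \left( \frac{L}{R_i} + d_i^{\mathrm{prop}} + d_i^{\mathrm{proc}} + d_i^{\mathrm{queue}} \right)

% Worked value for one link between intermediate routers, using the
% simulation parameters (1000-byte packet, 100 Mbps, 0.25 ms link delay):
\frac{L}{R_i} + d_i^{\mathrm{prop}}
  \;=\; \frac{8000\ \text{bits}}{100 \times 10^{6}\ \text{bits/s}} + 0.25\ \text{ms}
  \;=\; 0.08\ \text{ms} + 0.25\ \text{ms}
  \;=\; 0.33\ \text{ms}
```

Under this model, every additional link between intermediate routers adds at least 0.33 ms before any processing or queuing delay. Only the queuing term depends on the background load, which is consistent with the load-dependent latency and randomness reported for the longer path.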

The randomness is caused by the queuing delay variation at each intermediate node along the path to the MN's home network as the background traffic load changes. This affects the QoS of real-time streaming applications such as voice and video, because a packet that is delayed past its playout time is effectively lost. Optimizing the path is therefore necessary to alleviate this problem.

In contrast, the packet delivery latency for packets that followed the optimal path is influenced little or not at all by the background traffic load. This is because the path followed by these packets has fewer intermediate nodes and hence lower transmission and propagation latency and reduced queuing effects.

Fig. 7. Comparison of packet delivery latency between optimal and non-optimal paths with change in background traffic load.

Fig. 8 shows the impact of increased topological distance between the MN's home network and the MN's visited network on the packet delivery latency. The result was obtained by varying the number of intermediate routers in the path to the MN's home network from three to six under identical background traffic loads. As the MN moves farther away from its home network to the visited network, the packet delivery latency increases if the path is not optimized. This is seen in the increase in packet delivery latency for packets that travelled through the non-optimal path after the MN moved to the visited network. The reason is that as the topological distance increases, a packet encounters a larger number of intermediate nodes and the path becomes longer. Both contribute to the transmission delay at each node and the propagation delay of the links between the nodes. We also observe increased randomness in the packet delivery latency. This effect is due to queuing delay variation at each intermediate node when the background traffic load of 75 Mbps (75% of transmission capacity) is used.

Fig. 8. The influence of increased distance between the MN's home network and the visited network on the optimal and non-optimal paths under the same network background traffic load.

It was also observed that packets on the optimal path were not influenced by the increased distance because they do not follow the route through the MN's home network, which involves many intermediate nodes. This result confirms that a packet routed through the MN's home network when the MN is far away from its home network and closer to the CN network experiences large packet delivery latency. In the real Internet, a packet would likewise encounter many intermediate nodes if routed via the MN's home network while the MN is far away from its home network and in a visited network closer to the CN network, which would result in increased packet delivery latency.

Fig. 9 illustrates the effectiveness and speed of path optimization in our proposed scheme after the MN performs a handover. The figure shows the packet delivery latency variation when the MN is in the home network and in the visited network for both the optimal and the non-optimal path. The MN moves from its home network to the visited network with ongoing communication from the CN. After the MN has performed the handover, a few packets experience longer latency under our proposed path optimization. The reason is that, after handover, our scheme uses the first packet that travels to the MN's home network to trigger the route optimization process. Therefore, a few packets travel via the MN's home network before the CN network is updated about the network the MN is visiting. However, Fig. 9 shows that only a few packets travel through the non-optimal path after the handover, which confirms that our path optimization scheme is fast and effective.

Fig. 9. Comparison of packet delivery latency before and after the MN's handover to the visited network between the optimal path and the non-optimal path. [Plot: end-to-end latency (ms) versus simulation time (s) for the suboptimal path and the optimal path at 75% load, with the phases "MN in home network", "MN handover from home network to visited network", and "MN in visited network" marked.]

We also observed that after handover completion, the packet delivery latency of the optimal path becomes lower than the latency before handover, whereas the non-optimal path experiences increased packet delivery latency. The reason is that the CN network and the network the MN is visiting are closer; hence, after the MN hands over, the route optimization process is performed and packets then follow a route with fewer intermediate nodes to the visited network without going through the MN's home network. In contrast, on the non-optimal path after the handover, packets continue travelling through the MN's home network before being delivered to the network the MN is currently visiting. This adds the delay from the CN network to the MN's home network and the delay from the MN's home network to the visited network.

V. CONCLUSION

In this paper, we have presented in detail our DMM scheme, which co-locates the mobility routing function at the gateway router, and its packet routing path optimization mechanism. The performance of the scheme has been investigated through simulation considering network load and latency. The packet delivery latency results show the benefits of optimizing the route under varying network load conditions and increasing distance from the MN's home network to the visited network.

REFERENCES

[1] H. A. Chan, Editor, "Problem statement for distributed and dynamic mobility management," draft-chan-distributed-mobility-ps-05 (work in progress), October 2011.

[2] D. Johnson, C. Perkins, and J. Arkko, "Mobility Support in IPv6," IETF RFC 6275, July 2011.

[3] S. Gundavelli, K. Leung, V. Devarapalli, K. Chowdhury, and B. Patil, "Proxy Mobile IPv6," IETF RFC 5213, August 2008.

[4] H. Yokota, et al., "Use case scenarios for Distributed Mobility Management," IETF draft-yokota-dmm-scenario-00 (work in progress), October 2010.

[5] P. Bertin, S. Bonjour, and J. Bonnin, "Distributed or Centralized Mobility," Proceedings of the 28th IEEE Conference on Global Telecommunications, 2009.

[6] H. A. Chan, H. Yokota, J. Xie, P. Seite, and D. Liu, "Distributed and Dynamic Mobility Management in Mobile Internet: Current Approaches and Issues," Journal of Communications, vol. 6, no. 1, pp. 4-15, Feb. 2011.

[7] P. Bertin, S. Bonjour, and J. Bonnin, "A Distributed Dynamic Mobility Management Scheme Designed for Flat IP Architectures," Proceedings of the 3rd International Conference on New Technology, Mobility and Security (NTMS 2008), April 2008.

[8] Distributed Mobility Management, http://datatracker.ietf.org/wg/dmm/

[9] H. Chan, Editor, "Requirements of distributed mobility management," draft-ietf-dmm-requirements-01 (work in progress), July 2012.

[10] P. Bertin, S. Bonjour, and J. Bonnin, "An Evaluation of Dynamic Mobility Anchoring," VTC'09: IEEE 70th Vehicular Technology Conference, September 2009.

[11] F. Giust, et al., "A Network-based Localized Mobility Solution for Distributed Mobility Management," WPMC 2011, International Workshop on Mobility for Flat Networks (MMFN 2011), October 2011.

[12] PMIPv6 for NS-2 patch and required packages, http://commani.net/pmip6ns/download.html

[13] NS-2 Network Simulator, http://www.isi.edu/nsnam/ns.

Potrebbero piacerti anche

- q4 2015 Results AnnouncementDocumento56 pagineq4 2015 Results AnnouncementPoojaCambsNessuna valutazione finora

- Xmap Hmipv6Documento6 pagineXmap Hmipv6PoojaCambsNessuna valutazione finora

- Qoe-Driven Cache Management For HTTP Adaptive Bit Rate (Abr) Streaming Over Wireless NetworksDocumento6 pagineQoe-Driven Cache Management For HTTP Adaptive Bit Rate (Abr) Streaming Over Wireless NetworksPoojaCambsNessuna valutazione finora

- Applied Optimization With MatlabDocumento208 pagineApplied Optimization With MatlabIvan StojanovNessuna valutazione finora

- 2015 Giust Commag DMMDocumento8 pagine2015 Giust Commag DMMPoojaCambsNessuna valutazione finora

- 04 Restructuring Code - Lab SolutionsDocumento3 pagine04 Restructuring Code - Lab SolutionsPoojaCambsNessuna valutazione finora

- 02 Cheesman Daniels - SolutionsDocumento5 pagine02 Cheesman Daniels - SolutionsPoojaCambsNessuna valutazione finora

- 02 Basic GUI Programming - LabDocumento2 pagine02 Basic GUI Programming - LabPoojaCambsNessuna valutazione finora

- 01 Simple Architectures - SolutionsDocumento6 pagine01 Simple Architectures - SolutionsPoojaCambsNessuna valutazione finora

- Hindu Ast.Documento418 pagineHindu Ast.ANANTHPADMANABHAN100% (1)

- 49 Section 7.5 - Dynamic Traffic Assignment With Non Separable Link Cost Functions and Queue Spillovers (Cascetta2009)Documento10 pagine49 Section 7.5 - Dynamic Traffic Assignment With Non Separable Link Cost Functions and Queue Spillovers (Cascetta2009)PoojaCambsNessuna valutazione finora

- Install NotesDocumento1 paginaInstall NotesPoojaCambsNessuna valutazione finora

- A Cross-Layer-Based Routing With Qos-Aware Scheduling For Wireless Sensor NetworksDocumento8 pagineA Cross-Layer-Based Routing With Qos-Aware Scheduling For Wireless Sensor NetworksPoojaCambsNessuna valutazione finora

- PWC Annual Report 2013Documento74 paginePWC Annual Report 2013ekwonnaNessuna valutazione finora

- ControllerDocumento3 pagineControllerPoojaCambsNessuna valutazione finora

- 2012 Sixth International Conference On Innovative Mobile and Internet Services in Ubiquitous ComputingDocumento6 pagine2012 Sixth International Conference On Innovative Mobile and Internet Services in Ubiquitous ComputingPoojaCambsNessuna valutazione finora

- 02 Cheesman Daniels - SolutionsDocumento5 pagine02 Cheesman Daniels - SolutionsPoojaCambsNessuna valutazione finora

- Hindu Ast.Documento418 pagineHindu Ast.ANANTHPADMANABHAN100% (1)

- Happy April Fool Day, Ifians!! WE GOT YA!!! - 3970987 - Rules & Announcements ForumDocumento9 pagineHappy April Fool Day, Ifians!! WE GOT YA!!! - 3970987 - Rules & Announcements ForumPoojaCambsNessuna valutazione finora

- Hindu Ast.Documento418 pagineHindu Ast.ANANTHPADMANABHAN100% (1)

- Happy April Fool Day, Ifians!! WE GOT YA!!! - 3970987 - Rules & Announcements ForumDocumento9 pagineHappy April Fool Day, Ifians!! WE GOT YA!!! - 3970987 - Rules & Announcements ForumPoojaCambsNessuna valutazione finora

- Sachitra Jyotish Shiksha 4 Varshfhal KhandDocumento287 pagineSachitra Jyotish Shiksha 4 Varshfhal KhandPoojaCambs100% (1)

- Sachitra Jyotish Shiksha 6 Muharat KhandDocumento271 pagineSachitra Jyotish Shiksha 6 Muharat KhandPoojaCambsNessuna valutazione finora

- Nitro PDF Professional 6x User GuideDocumento127 pagineNitro PDF Professional 6x User GuidezoombylikNessuna valutazione finora

- Escalations DeveloperDocumento2 pagineEscalations DeveloperPoojaCambsNessuna valutazione finora

- Nitro PDF Professional 6x User GuideDocumento127 pagineNitro PDF Professional 6x User GuidezoombylikNessuna valutazione finora

- FT Analyst Graduate Non FsaDocumento2 pagineFT Analyst Graduate Non FsaPoojaCambsNessuna valutazione finora

- TableDocumento2 pagineTablePoojaCambsNessuna valutazione finora
