
Uploaded by swamishailu

Web image Re-ranking

Web Image Re-Ranking Using Query-Specific Semantic Signatures

1. Introduction:

Advances in Internet and digital technologies have created a need for systems that organize
the abundance of available digital images for easy categorization and retrieval. The need for
a versatile, general-purpose image retrieval (IR) system for very large image databases has
attracted the attention of researchers at major information technology companies and leading
academic institutions. IR techniques span diverse areas, including image segmentation, image
feature extraction, representation, mapping of features to semantics, storage and indexing,
and image similarity-distance measurement and retrieval, which makes IR system development a
challenging task. Visual information retrieval requires a large variety of knowledge. The
clues that must be pieced together when retrieving images from a database include not only
elements such as color, texture and shape but also the relation of the image contents to
alphanumeric information, and the higher-level concept of the meaning of objects in the scene.

Image re-ranking, as an effective way to improve the results of web-based image search, has
been adopted by current commercial search engines. Given a query keyword, a pool of images is
first retrieved by the search engine based on textual information. The user is then asked to
select a query image from the pool, and the remaining images are re-ranked based on their
visual similarities with the query image. A major challenge is that similarities of visual
features do not correlate well with the images' semantic meanings, which interpret users'
search intention. On the other hand, learning a universal visual semantic space to
characterize highly diverse images from the web is difficult and inefficient. In this paper,
we propose a novel image re-ranking framework, which automatically learns, offline, different
visual semantic spaces for different query keywords through keyword expansions. The visual
features of images are projected into their related visual semantic spaces to get semantic
signatures. At the online stage, images are re-ranked by comparing their semantic signatures
obtained from the visual semantic space specified by the query keyword. The new approach
significantly improves both the accuracy and efficiency of image re-ranking. The original
visual features of thousands of dimensions can be projected to semantic signatures as short
as 25 dimensions. Experimental results show that a 20%-35% relative improvement in re-ranking
precision has been achieved compared with state-of-the-art methods.

info@ocularsystems.in
Mobile No : 7385350430

2. Literature Survey:

1. E. Bart and S. Ullman. Single-example learning of novel classes using representation
by similarity. In Proc. BMVC, 2005.

Summary: The authors develop an object classification method that can learn a novel class
from a single training example. In this method, experience with already learned classes is
used to facilitate the learning of novel classes. The classification scheme employs features
that discriminate between class and non-class images. For a novel class, new features are
derived by selecting features that proved useful for already learned classification tasks and
adapting these features to the new classification task. This adaptation is performed by
replacing the features from already learned classes with similar features taken from the
novel class. A single example of a novel class is sufficient to perform feature adaptation
and achieve useful classification performance. Experiments demonstrate that the proposed
algorithm can learn a novel class from a single training example, using 10 additional
familiar classes. The performance is significantly improved compared to using no feature
adaptation. The robustness of the proposed feature adaptation concept is demonstrated by
similar performance gains across 107 widely varying object categories.

2. C. Lampert, H. Nickisch, and S. Harmeling. Learning to detect unseen object classes by
between-class attribute transfer. In Proc. CVPR, 2009.

Summary: The authors tackle the problem of detecting object classes for which no training
images are available by introducing attribute-based classification. It performs object
detection based on a human-specified high-level description of the target objects instead of
training images. The description consists of arbitrary semantic attributes, like shape, color
or even geographic information. Because such properties transcend the specific learning task
at hand, they can be pre-learned, e.g. from image datasets unrelated to the current task.
Afterwards, new classes can be detected based on their attribute representation, without the
need for a new training phase. To evaluate the method and to facilitate research in this
area, the authors assembled a new large-scale dataset, Animals with Attributes, of over
30,000 animal images that match the 50 classes in Osherson's classic table of how strongly
humans associate 85 semantic attributes with animal classes.
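The attribute-based idea summarized above can be illustrated with a small sketch. It is not the cited authors' implementation: it assumes that some pre-learned attribute detectors have already produced a probability per attribute for a test image, and that each class is described by a binary attribute signature. The function name `classify_by_attributes`, the three example attributes and the two example classes are all hypothetical.

```python
import math

def classify_by_attributes(attribute_probs, class_signatures, eps=1e-6):
    """Pick the class whose binary attribute signature best explains
    the predicted attribute probabilities (attributes treated as independent)."""
    best_class, best_score = None, -math.inf
    for name, signature in class_signatures.items():
        score = 0.0
        for p, present in zip(attribute_probs, signature):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            score += math.log(p if present else 1.0 - p)
        if score > best_score:
            best_class, best_score = name, score
    return best_class

# Hypothetical attributes: striped, four-legged, lives in water.
class_signatures = {"zebra": [1, 1, 0], "whale": [0, 0, 1]}

# Attribute probabilities that a pre-learned detector might output for one image.
print(classify_by_attributes([0.9, 0.8, 0.1], class_signatures))  # prints zebra
```

No training image of either class is needed at this step; only the attribute descriptions are used, which is the point the summary makes.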

3. G. Cauwenberghs and T. Poggio. Incremental and decremental support vector
machine learning. In Proc. NIPS, 2001.

4. J. Cui, F. Wen, and X. Tang. Intentsearch: Interactive on-line image
search re-ranking. In Proc. ACM Multimedia. ACM, 2008.

5. N. Dalal and B. Triggs. Histograms of oriented gradients for human detection.
In Proc. CVPR, 2005.

3. Problem Statement:

We propose a novel image re-ranking framework that automatically learns, offline, different
visual semantic spaces for different query keywords through keyword expansions. The framework
is extended with personalized image search using agglomerative clustering.

4. Objective:

- To design the front end and store the cumulative results in the back end.
- To develop and design code for semantic signatures of images.
- To test the system and implement the algorithm.

5. Methodology:

Discovery of Reference Classes :

- Keyword Expansion

- Image retrieval

- Remove outlier images

- Remove redundant references
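The keyword expansion step listed above could be sketched as follows. The source does not specify how expansions are found, so this sketch assumes a simple co-occurrence count over the text surrounding the retrieved images. The function name `expand_keyword`, the stop-word list and the sample captions are hypothetical.

```python
from collections import Counter

# Hypothetical stop list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "the", "of", "and", "in", "on", "for", "with"}

def expand_keyword(query, captions, top_k=3):
    """Return the query combined with the words that co-occur with it most often."""
    query = query.lower()
    counts = Counter()
    for caption in captions:
        words = set(caption.lower().split())  # count each word once per caption
        if query in words:
            counts.update(w for w in words if w != query and w not in STOPWORDS)
    # Sort by descending count, then alphabetically so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [f"{query} {word}" for word, _ in ranked[:top_k]]

captions = [
    "red apple fruit",
    "apple iphone review",
    "apple fruit tree",
    "apple iphone case",
    "green apple fruit",
]
print(expand_keyword("apple", captions, top_k=2))  # prints ['apple fruit', 'apple iphone']
```

Each expanded keyword would then be used to retrieve images for one candidate reference class, before outlier images and redundant classes are removed.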

Query specific reference classes :

Classifiers of reference classes :

Mining the keywords associated with Image :

Creating Semantic Signatures :

Text Based Image Search :

Re-Ranking Based on Semantic Signatures :

Agglomerative Clustering for personalized image search :
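The signature creation and re-ranking steps listed above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's implementation: it assumes one linear classifier per reference class, a softmax over the classifier scores as the semantic signature, and L1 distance between signatures for ranking. The names `semantic_signature`, `l1_distance` and `rerank`, and the toy data, are hypothetical.

```python
import math

def semantic_signature(features, classifiers):
    """Project a visual feature vector onto the reference classes.

    `classifiers` holds one (weights, bias) pair per reference class; the
    signature is the softmax of the linear scores, so its entries sum to 1.
    """
    scores = [sum(w * x for w, x in zip(weights, features)) + bias
              for weights, bias in classifiers]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def l1_distance(a, b):
    """Sum of absolute differences between two signatures."""
    return sum(abs(x - y) for x, y in zip(a, b))

def rerank(query_id, signatures):
    """Return the other image ids, closest signature to the query image first."""
    query_sig = signatures[query_id]
    others = [image_id for image_id in signatures if image_id != query_id]
    return sorted(others, key=lambda image_id: l1_distance(signatures[image_id], query_sig))

# Two hypothetical reference classes over 2-dimensional toy features.
classifiers = [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0)]
features = {"a": [2.0, 0.0], "b": [1.5, 0.2], "c": [0.0, 2.0]}

# Offline stage: compute and store one signature per image.
signatures = {image_id: semantic_signature(f, classifiers) for image_id, f in features.items()}

# Online stage: the user picks image "a" as the query image.
print(rerank("a", signatures))  # prints ['b', 'c']
```

Because the signature length equals the number of reference classes, the online comparison works on short vectors regardless of how many dimensions the original visual features have.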

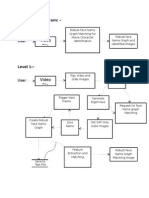

6. System Design and Architecture:

The diagram of the proposed approach is shown below.

3

info@ocularsystems.in

Mobile No : 7385350430

Web image Re-ranking

info@ocularsystems.in

Mobile No : 7385350430

Web image Re-ranking

7. Theoretical result:

The images for testing the performance of re-ranking and the images of reference classes can
be collected at different times and from different search engines. Given a query keyword,
1000 images are retrieved from the whole web using a certain search engine. As summarized in
Table 1, we create three data sets to evaluate the performance of our approach in different
scenarios. In data set I, 120,000 testing images for re-ranking were collected from Bing
Image Search using 120 query keywords in July 2010. These query keywords cover diverse
topics including animal, plant, food, place, people, event, object, scene, etc. The images of
reference classes were also collected from Bing Image Search around the same time. Data
set II uses the same testing images for re-ranking as data set I. However, its images of
reference classes were collected from Google Image Search, also in July 2010.

8. Future Work / Own Contributions:

We propose a new algorithm for personalized image search using agglomerative clustering.
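As a rough illustration of the clustering step, the sketch below groups images bottom-up by the distance between their signature vectors. The source does not state the linkage rule, the distance, or how clusters feed into personalization, so average linkage and L1 distance are assumptions here, and the function names and toy vectors are hypothetical.

```python
def l1_distance(a, b):
    """Sum of absolute differences between two vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

def average_linkage(c1, c2, vectors):
    """Mean pairwise distance between the members of two clusters."""
    total = sum(l1_distance(vectors[i], vectors[j]) for i in c1 for j in c2)
    return total / (len(c1) * len(c2))

def agglomerative_clusters(vectors, num_clusters):
    """Start with one cluster per item and merge the two closest
    clusters until only `num_clusters` remain."""
    num_clusters = max(1, num_clusters)
    clusters = [[i] for i in range(len(vectors))]
    while len(clusters) > num_clusters:
        best = None  # (distance, index of first cluster, index of second cluster)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = average_linkage(clusters[a], clusters[b], vectors)
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters

# Toy 2-dimensional signatures for four images.
vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
print(agglomerative_clusters(vectors, 2))  # prints [[0, 1], [2, 3]]
```

One plausible use, again an assumption, is to cluster the images a user has selected in the past and prefer results that fall near those clusters.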

9. References:

1. E. Bart and S. Ullman. Single-example learning of novel classes using representation
by similarity. In Proc. BMVC, 2005.

2. Y. Cao, C. Wang, Z. Li, L. Zhang, and L. Zhang. Spatial-bag-of-features.
In Proc. CVPR, 2010.

3. G. Cauwenberghs and T. Poggio. Incremental and decremental support vector
machine learning. In Proc. NIPS, 2001.

4. J. Cui, F. Wen, and X. Tang. Intentsearch: Interactive on-line image
search re-ranking. In Proc. ACM Multimedia. ACM, 2008.

5. N. Dalal and B. Triggs. Histograms of oriented gradients for human detection.
In Proc. CVPR, 2005.
