2010:112

MASTER'S THESIS

Behavioral Detection of Cheating in Online Examination

Matus Korman

Luleå University of Technology

D Master thesis

Computer and Systems Sciences

Department of Business Administration and Social Sciences

Division of Information Systems Sciences

2010:112 - ISSN: 1402-1552 - ISRN: LTU-DUPP--10/112--SE

Acknowledgements

I would like to thank everyone who contributed to, opposed, assisted with, or otherwise helped me carry out the study and write this thesis as a result of the study.

My thanks go to Dan Harnesk, PhD (supervisor), Soren Samuelsson, PhD, and John Lindstrom, PhD, for the valuable advice and research guidance I was given; to Hugo Quisbert, PhD, Artjom Vassiljev and Viola Veiderpass for constructive opposition; to Lars Furberg for the ideas that helped me navigate to the chosen research problem and for the interesting discussions we had; to Neil Costigan, PhD, for his inspiring work and presentations; to Professor Ann Hagerfors for managing issues also related to my study; and to my family for their mental support and advice. My further thanks go to Amir Molavi, Onur Yirmibesoglu, Marko Niemimaa, Elina Laaksonen, Nebojsa Mihajlovski, Vladimir Kichatov, Ali Fakhr, Darya Plankina, Anna Selischeva, Sana Rouis, Svante Edzen, Peter Anttu, and others who contributed to my thought flow through discussions, or supported me in different other ways.

Special thanks go to Behaviometrics AB and the people whose efforts relate to the study.

Thanks also to the contributions of all of you, the study has been done the way it has, and I feel I have learned valuable knowledge and gained practice that will be of use in the future.

Abstract

This thesis studies possibilities of detecting online examination cheating through measures of human-computer interaction dynamics.

The need for and use of online or computer-based examination seems to be growing, while this form of examination gives students a broader spectrum of opportunities, including those for cheating, compared to non-computerized ways of examination. Times are changing: there are many different reasons for examination dishonesty, many ways of performing it, and many ways of coping with it. Given an equilibrium at this level, new ways of violation deserve new ways of prevention, or at least detection.

The study focuses on a method of computer-based examination cheating detection based on measures of behavior and machine learning, and tries to link it to a broadly taken concept of academic dishonesty. The detection potential of this method is mainly indicated by cue leakage theory, the subjects of which can be handled using pattern recognition and anomaly detection theory, all through a behavioral biometrics approach.

Contents

1 Introduction
1.1 Topic
1.2 Research goals and delimitation
1.3 Significance of the study
1.4 Document structure
2 Background
2.1 Examination cheating
2.1.1 What's wrong with cheating?
2.1.2 Why do students cheat?
2.1.3 The mission: preventing cheating
2.1.4 How do students cheat?
2.1.5 Detecting cheating as a means of prevention
2.1.6 Cheating review summary
2.2 Specifics of distance operation
3 Conceptual framework
3.1 Cue leakage theory
3.2 Pattern recognition theory
3.3 Anomaly detection
3.4 Behaviometrics
3.4.1 Biometrics in general
3.4.2 Specifics of behaviometrics
3.4.3 Keystroke dynamics
3.4.4 Mouse dynamics
3.4.5 Linguistic dynamics
3.4.6 Special purpose behaviometrics
3.5 Vision of a behavioral cheating detection approach
3.5.1 The angle of attack
3.5.2 Behavioral characteristics as the cheating detection unifier
3.5.3 The detection mechanism
4 Methodology
4.1 My setting and the research method
4.2 Validity of a research design
4.3 Reliability and validity of a measure
4.4 Research design and research process
4.4.1 Empirical inputs
4.4.2 Observations
4.4.3 Questionnaire
4.4.4 Analysis
5 Analysis and observations
5.1 Analysis
5.1.1 Quantitative molecular level
5.1.2 Qualitative molecular level
5.1.3 Qualitative molar level
5.2 Observations
5.3 Observation 1
5.3.1 General highlights
5.3.2 Session-specific highlights
5.4 Observation 2
5.4.1 General highlights
5.4.2 Session-specific highlights
5.5 Observation 3
5.5.1 General highlights
5.5.2 Session-specific highlights
5.6 Triangulative analysis remarks
6 Results and findings
6.1 Behavioral anomaly indication
6.2 Indicating cheating
6.3 Indication difficulties
7 Conclusion and discussions
7.1 Conclusion
7.2 Cheating detection and prevention approach discussion
7.2.1 Behaviometric aspects
7.2.2 Cheating aspects
7.2.3 Psychological aspects
7.3 Research approach discussion
7.4 Outlooks for further research
Appendices
A Subjects of automated observation
A.1 Basic structure of the analytics
A.2 Keystroke dynamics features
A.3 Mouse dynamics features
A.4 Silence dynamics features
A.5 Linguistic dynamics features
B Subjects of manual observation
C Questionnaire and observation task content
C.1 Questionnaire
C.2 Authentic writing and formulating
C.3 Verbatim copying by reading
C.4 Verbatim copying by listening
C.5 Copying by reading and reformulating

List of Figures

2.1 A cheating-extended model of Ajzen's theory of planned behavior
2.2 Model of student cheating decision based on internal (personal) and external factors
2.3 Model of cheating causation
2.4 Graphical overview of cheating and counter-cheating relations
2.5 Overview of a cheating and counter-cheating process
3.1 A classification example
3.2 Biometric system error rates
3.3 A typical architecture of a biometric system
3.4 Fusion of biometric systems
3.5 The biometric menagerie
3.6 An example process of mouse dynamics analysis
3.7 Deterrence mechanism of cheating detection
3.8 Model of the cheating detection approach
4.1 Research process overview
4.2 The observation design used in the study
4.3 The observation process (including questionnaire)
4.4 Data flow and control relations of the data gathering and analysis processes
7.1 The cheating prevention approach
A.1 Analytics structure
A.2 Context and process of the automated analysis part
C.1 Example free diagram
C.2 Diagram to copy (redraw)

List of Tables

2.1 Factors correlated to plagiarism behavior 1
2.2 Factors correlated to plagiarism behavior 2
3.1 Meta-functions of a computer mediated communication text analysis framework
3.2 Text analysis linguistic features 1
3.3 Text analysis linguistic features 2
4.1 Levels of the predictor variable (PV)
7.1 Biometric properties of the approach
7.2 Discussion of measure validity
A.1 Explanation of terms used in the description of features
A.2 Keystroke dynamics features
A.3 Mouse dynamics features
A.4 Silence dynamics features
A.5 Linguistic dynamics features

Chapter 1

Introduction

Cheating in online examination is an educational problem just as it is in conventional examination. Because of its lower detectability, however, the universal reputation of distance degrees suffers. This study strives to explore and verify possibilities of detecting specific types of cheating based on behavioral measures of computer interaction taken during an online examination. Detecting cheating is seen as a way of preventing it (lowering its extent).

Distance education became an educational field in the 1970s and has since been gaining popularity in diverse parts of the world (Keegan, 1996; Allen & Seaman, 2005, 2007; Howell et al., 2003). According to Allen & Seaman (2008), nearly 22% of all higher education enrollments in the United States in 2007 were online enrollments. The number was around 4 million and the tendency is still growing. Moreover, online education is predominantly perceived as critical to long-term institutional strategy by educational institutions, at least across the United States (Allen & Seaman, 2008). Based on different trends and factors, the interest in distance education is increasing and expected to increase further (Hawkridge, 1995; Irele, 2005; Allen & Seaman, 2003). The trends vary in character: among others, they are motivational (Parker, 2003; Maguire, 2005; Allen & Seaman, 2008), social, political and technological (Bates, 1995; Howell et al., 2003). Relatively high future growth of distance education is expected in developing countries (Koul, 1995).

Distance education is a form of education in which teachers and class audience are separated by physical distance and/or by time (Moore & Kearsley, 1996, chap. 1), as compared to conventional (on-site) education, which is based on face-to-face meetings and time-synchronous physical presence of students, required by the technology predominantly employed (Keegan, 1996, chap. 1, 2). Following Keegan (1996), distance education and conventional education differ at least in physical centralization and time-synchronization (accessibility), economics, market, and also didactics (Reushle & McDonald, 2004; Reushle et al., 1999), administration and evaluation. Nowadays, not only do different universities around the world offer courses and programs for distance studies; there are also whole universities, often called open universities, which are built on the concept of distance education and provide solely that.

As with the process of education, the concept of physical decentralization and time-asynchronization is also applicable to the process of assessment, including examination (Mason, 1995), since the two are often employed at the same time or in a mutually successive manner. Distance examination is used in different forms to validate the level of knowledge, skills or abilities of students/examinees. The most common distance examination method seems to be online examination, which uses a network-enabled computer environment (e.g., the Internet) to set up two-way communication.

Although dependent on the specific environment, while major concerns in distance education compared to on-site education are mostly related to finding, achieving and maintaining effective means of teaching/tutoring, learning, student support and administration (Holmberg, 1995; Keegan, 1996; Bates, 2005; Kim et al., 2008), the problems of fairness assurance and trust often seem to be more challenging in online examination than in traditional/conventional examination (Rowe, 2004). Public trust and fairness in education, including examination, is an important attribute (Rumyantseva, 2005; Heyneman, 2002), yet seemingly tricky to achieve and maintain (Herberling, 2002). The technology that on the one hand enables distributed and asynchronous education opens up, on the other hand, a broad range of cheating possibilities within an examination process. Controlling, or at least perceiving, largely unknown and distant examination environments as a way to detect and prevent examination dishonesty seems to be non-trivial. Partly because of this, distance education is often less accepted than conventional (on-site) education (Columbaro & Monaghan, 2009; Bourne et al., 2005). In a more general context, Allen & Seaman (2003) show that online education is perceived as inferior to conventional education; however, beliefs about the near future (three years later) show an optimistic turn in the balance. Around six years later, Columbaro & Monaghan (2009) show that such beliefs have been, and might tend to be, too optimistic, since according to their study more than 95% of employers would prefer to accept a traditional degree over an online one in several different fields.

Examination cheating and academic dishonesty in general seem to have long been an educational problem (Cizek, 1999). According to UC Berkeley (2009), cheating can be defined as "fraud, deceit, or dishonesty in an academic assignment, or using or attempting to use materials, or assisting others in using materials, that are prohibited or inappropriate in the context of the academic assignment in question" (no page numbering). Students often tend to shortcut achieving their grades and maintaining their sense of personal integrity otherwise than through investing an adequate amount of effort and time (Diekhoff et al., 1996). Academic cheating is prevalent and, at the same time, seems to have a growing tendency (Cizek, 1999; Dick et al., 2003; McCabe et al., 2006; Wehman, 2009; Howell et al., 2009). A study in McCabe et al. (2006) shows that cheating was reported by 56% of business students and 47% of non-business students. An earlier study by McCabe (also mentioned in the paper) shows that 66% of all students reported at least one serious cheating incident in the past year, while among engineering students the number was 72% and business students led with 84%. According to a survey carried out in the United States, around 94% of students reported cheating in any form, around 65% of students reported test cheating and more than 50% of students reported plagiarism. According to Stumber-McEwen et al. (2009), a wealth of studies on the prevalence of cheating is available; however, their quantitative results vary greatly based on the type of survey and specific survey conditions. As with on-site examination, cheating also applies to distance examination (Underwood, 2006; Wehman, 2009).


Different sources perceive the prevalence of cheating among on-site and online examined students differently (Stumber-McEwen et al., 2009; Herberling, 2002; Watson & Sottile, 2010). Assuming that an online examination environment tends to be less cheat-constraining and less perceivable by examiners than an on-site one, students may generally tend to cheat more from a distance, as also believed by Rowe (2004).

Following an information security approach (Whitman & Mattord, 2008), the occurrence of online examination cheating as an undesirable activity is a form of risk, and the higher the cheating severity and probability, the greater the importance of risk control. The ultimate goal of risk control, in this context applied to the educational field, is to effectively reduce risk related to the educational process. Effectively reducing the risk of online examination cheating is a problem.

There are multiple approaches to controlling online examination cheating (Olt, 2002), many of them suitable in one way or another. The primary approach usable with the concerns of this thesis is the "police" approach: monitoring for and reacting to suspicion or detection, along with deterrence-based cheating demotivation. This approach is somewhat analogous to a feedback control system (Åström & Murray, 2008, chap. 1), within which one first needs to perceive the examination environment and detect anomalies in order to be able to take effective control actions. Perceiving a distant online examination environment and effectively detecting cheating is a problem.

1.1 Topic

The topic of this thesis is to explore and verify possibilities of detecting specific types of online examination cheating based on behavioral measures of human-computer interaction. More specifically, the focus lies on utilizing behaviometrics (behavioral biometrics) for the analysis of keystroke, mouse and linguistic dynamics.

The primary motivation for this study is to enable or help faculties both to (1) fight the prevalent and rather invisible online examination cheating, and to (2) indirectly increase the acceptance of online grades.

In terms of content, this study focuses on the use of behaviometrics (work with keystroke, mouse and linguistic dynamics) based on information technology and machine learning (software, pattern recognition, anomaly detection, visualization) for detecting examination cheating (an educational concern).

1.2 Research goals and delimitation

The goal of the research endeavor is not to enable one to tell exactly whether a student cheats or not. Given the character of a probabilistic analysis process and the variety of its input data, such a goal would be extremely difficult for me to achieve in the context and time frame of this thesis. Instead, I consider the following information to be both useful and realistic to indicate based on the measures of human-computer interaction (keystroke and mouse events with their timings, and linguistic features from keystrokes):

1. Histogrammatically displayed extent of behavioral anomaly compared to a behavioral baseline

2. Histogrammatically displayed amount of stress

3. Histogrammatically displayed probability of cheating together with the type of possible cheating activity (e.g. copying by reading, listening, etc.) for each suspicious segment of behavior during the examination session time

Being able to provide the above about the target population (described below) effectively and in a highly automated way is the longer-term research vision. The goal of this study, however, is to approach this vision with a focus on the first and the third point.
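To make the first point more concrete, the following is a minimal sketch of how an extent of behavioral anomaly per session segment could be computed and displayed as a simple text histogram. It is an illustration only and not the analysis used in this study: the function names (anomaly_scores, text_histogram), the choice of inter-key latency as the single feature, the fixed segment size, and the z-score as the anomaly measure are all assumptions made for this example.

```python
from statistics import mean, stdev


def anomaly_scores(baseline_latencies, session_latencies, segment_size=5):
    """Return one anomaly score per segment of the examination session.

    Assumption for this sketch: the score is the absolute z-score of a
    segment's mean inter-key latency (in milliseconds) relative to the
    mean and sample standard deviation of the baseline latencies.
    """
    mu = mean(baseline_latencies)
    sigma = stdev(baseline_latencies)
    if sigma == 0:
        raise ValueError("baseline latencies show no variation")
    scores = []
    for start in range(0, len(session_latencies), segment_size):
        segment = session_latencies[start:start + segment_size]
        scores.append(abs(mean(segment) - mu) / sigma)
    return scores


def text_histogram(scores, scale=4):
    """Render each score as a bar of '#' characters (hypothetical display)."""
    return ["#" * round(score * scale) for score in scores]


if __name__ == "__main__":
    # Made-up latencies, for illustration only.
    baseline = [100, 110, 90, 105, 95]
    session = [100, 100, 100, 100, 100, 200, 200, 200, 200, 200]
    for index, bar in enumerate(text_histogram(anomaly_scores(baseline, session))):
        print(f"segment {index}: {bar}")
```

In this sketch a segment whose typing rhythm matches the baseline produces an empty bar, while a segment with markedly slower typing produces a long one. A realistic detector would combine many keystroke, mouse and linguistic features rather than a single latency value.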

The target population to which the research goal relates is distance students, a great part of whom might be employed adults (Paulsen & Rekkedal, 2001), mostly aged between 25 and 40 years. The rest of the target group might be graduate students aged mostly between 20 and 30 years. The age ranges used are assumptions and constitute a part of the study's delimitation.

The following are the research questions, answering which I expect to contribute to achieving the research goal:

RQ-1 What are the behavioral signs of tasks carried out when cheating that manifest themselves in the keystroke, mouse and linguistic dynamics of the user's computer interaction during a computer-based examination?

RQ-2 How distinct is normal behavior from cheating behavior, and how distinct are different types of cheating behavior from each other?

The following are delimitation statements for this study: (1) A small number of

participants of online examination simulations (observations) are selected based on

convenience, instead of careful alignment to the target population. (2) No special

equipment such as skin humidity, body temperature or heartbeat sensors is used

within the study. (3) Automation of the whole cheating detection process, from gathering inputs to seeing indications of cheating type and amount, is not a part of the study.

1.3 Significance of the study

The contribution the study aims at is to identify and prototype a new approach to detecting computer-based examination cheating using behaviometric techniques whose operation does not depend on the availability of any student-uncommon hardware.¹ No previous work known to the author had been done within this topic at the time of writing.

Being able to reliably detect and perceive examination cheating could help assure examination fairness, promote academic integrity, and hence be a step towards shifting the motivation of many students from cheating to seemingly more strategically valuable personal efforts.

¹ Assuming that in order to carry out an online examination, at least a computer with keyboard and mouse is available to the student.

1.4 Document structure

After having introduced the topic and drawn the research goal, questions and delimitation statements in the introduction chapter, the document describes the problem background and parts of the state of the art in the background chapter. The conceptual framework chapter describes the core theories and concepts applied in the study. The research method and its details are described next in the methodology chapter. Observations useful to know before the analysis are described in the chapter named accordingly. Findings of the study are summarized in the results and findings chapter. Finally, the whole research is summarized and concluded in the conclusion chapter, and different questions are additionally discussed from the author's points of view.


Chapter 2

Background

This chapter summarizes some cheating-related background and the state of the art

in relation to the research problem and the approaches chosen to solve it.

2.1 Examination cheating

This section tries to outline cheating from different perspectives and to answer a couple of questions that could arise with regard to cheating. Firstly, cheating is described and some reasons for its negative consideration are given. Subsequently, this section looks at how cheating is done, how one can detect it, why students cheat and how one can prevent it. Finally, what has been stated about cheating so far is summarized according to my understanding, and a description of what distance operation can change regarding cheating is drawn.

As described earlier, examination cheating is a highly prevalent matter. Wehman (2009) provides an extensive summary of self-reported academic dishonesty identified by a number of scholars over a period of sixteen years from 1992 to 2008.

Before going deeper into consequences, forms, reasons, and ways of detecting and preventing cheating, let us attempt to define or at least characterize what cheating is. Based on the fact that the threshold of what is considered cheating depends on course-specific context and is therefore variable, Dick et al. (2003) try to define cheating in a way that overcomes this problem:

"A behavior may be defined as cheating if [at least] one of the two following questions can be answered in the positive:
• Does the behavior violate the rules that have been set for the assessment task?
• Does the behavior violate the accepted standard of student behavior at the institution?"
(Dick et al., 2003, p. 172)

Although the second question asked by Dick et al. uses the term "accepted standard of student behavior", the practical image of which looks rather informal and fuzzy, the definition on the other hand seems to reflect the perception of cheating pretty well in general, and also in its fuzziness. As said afterwards regarding the previous, "in both cases, this assumes that the accepted rules and standards have been clearly laid out for students." (Dick et al., 2003, p. 172)

Facing reality, this might not be the case in many academic environments, though. Another problem with the definition is that technically breaking the rules or such a standard might also be inadvertent (unintentional) or too trivial, so that it is perceived as poor learning behavior rather than cheating.

Severity is an important parameter of cheating, especially in responding to cheating or handling it otherwise. Dick et al. (2003) propose a number of factors to consider regarding cheating severity (seriousness):
• The presence of deception (deceptive intention) in achieving an unfair advantage.
• The presence of direct harm to some other person by the cheating behavior.
• Course-relative value of the assessment task on which the cheating was present.
• Width of the cheating scope (1-2 students, or more?).
• The presence of criminal behavior within the cheating behavior.
• The cheater's learning outcome achievement (as inversely related).

Dick et al. (2003) use the term management of cheating for an organizational process with three stages as follows:

1. Cheating preemption stage: trying to reduce cheating incidence within courses by e.g. design of academic integrity policy and programs, culture, examination environment, assessment etc.

2. Cheating detection stage: trying to detect student cheating by e.g. examining turned in assignments and student behavior

3. Cheating response stage: trying to reactively respond to detected student cheating

2.1.1 What's wrong with cheating?

Although the answer to this question might in its simple form sound pretty obvious, let us try to look for a broader and more explicit answer. Taken very generally, academic dishonesty including examination cheating would perhaps not be a real problem unless it had serious consequences within society.

"Cheating is an important issue that needs to be considered for two main reasons. The first reason is that students who cheat are likely to have not achieved competence in a variety of skills that will be necessary for them to use in their profession. Graduating incompetent professionals is likely to cause:
• Damage to society, as incompetent professionals may produce work that fails or is even dangerous to human life.
• Damage to the profession, as every professional represents the profession to the wider community and any incompetence will reflect badly on it.
• Damage to the reputation of the institution as employers realise that the graduates from an institution are sometimes or are often incompetent.
• Damage to the reputation of the degree for the same reason.

The second reason that cheating is an important issue for academics is the harm it causes to individual students. It
• Harms the educational environment for all students as academics must spend time and energy controlling cheating that could be better utilized on enhancing positive learning
• Harms the cheating student by their loss of learning and leaves them unprepared for their profession when they graduate
• Harms their fellow students who do not cheat as the cheating student gains an unfair advantage over them. In an environment where grades are important for scholarships and future employment, this can have serious consequences."
(Dick et al., 2003, p. 173)

Besides that, cheating can pose a greater risk to those who cheated and were detected:

"The student learns little when the opportunity to learn is ignored, the gratification of creating something that he or she distinctly owns is lost, and if discovered by others, the career of the student could be ruined depending upon the context and seriousness of the offense (Whitley & Keith-Spiegel, 2001)." (Wehman, 2009, p. 12)

Regarding student recommendation of the examination process (presumably influencing course and degree reputation), Shen et al. (2004) carried out a field experiment on 114 students showing that perceived examination fairness is positively correlated to ethical recommendations about the examination process.

Digging a bit into cheating dynamics and following Albert Bandura's social cognitive theory (Bandura, 1991, 2002), especially in terms of social referential comparison, it is not only single acts of cheating that are harmful. There is also the formative effect of making cheating seem more common in the surrounding human environment, which shifts people's thresholds of social acceptability and hence aids the further establishment and spreading of a cheating culture (McCabe et al., 2006; Megehee & Spake, 2008). Within such a culture, cheating even ceases to be perceived as dishonest (Cizek, 1999).

Summarizing and perhaps slightly extending the above, Whitley & Keith-Spiegel (2002) state that faculty should be concerned about academic dishonesty because of the following eight issues: (1) equity, (2) character development, (3) the mission to transfer knowledge, (4) student morale, (5) faculty morale, (6) students' future behavior, (7) reputation of the institution, and (8) public confidence in higher education.

2.1.2 Why do students cheat?

The question of why people cheat seems important to answer in a discussion of detection as a form of cheating prevention. Although a deep and thorough answer to the question is beyond the focus of this thesis, this part provides a more general, near-the-surface answer instead.

Figure 2.1: Model of Ajzen's (1991) Theory of Planned Behavior extended by Stone et al. (2009)

From a pragmatic perspective and according to Ajzen's (1991) Theory of Planned Behavior (TPB) extended by Stone et al. (2009) (outlined in figure 2.1), people intend to cheat and carry it out according to three components: (1) beliefs about cheating and its outcomes, (2) perceived normative acceptability of cheating, and (3) the ability (or difficulty) to cheat and remain undetected (thus unpunished). Although the theory describes an internal cheating control mechanism, it does not explain what the incentives are for considering cheating at all. For the needs of this study, let us simply assume the following:

- Cheating is one of the strategies for achieving the perceived goals of taking an examination, which in most cases could be a score (grade) good enough at a cost low enough; it is certainly not the only strategy, though.

- Within the process of decision making, people (students) choose strategies based on perceived feasibility in terms of costs (e.g. invested time, effort, money or mood, risk undertaken, etc.) and benefits (e.g. receiving a better examination score, receiving more social acceptance from certain peers, etc.).
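The two assumptions above can be read as a simple comparison of perceived net value across strategies. The following is only a minimal sketch of that reading: the class `Strategy`, the function `choose_strategy` and all numbers are hypothetical illustrations introduced here, not part of the cited literature or of this study's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """A way of reaching the examination goal, as perceived by one student."""
    name: str
    perceived_benefit: float  # e.g. expected score gain, arbitrary scale
    perceived_cost: float     # e.g. time, effort, money, mood, risk (same scale)

    @property
    def net_value(self) -> float:
        # Perceived feasibility is modelled here as benefit minus cost.
        return self.perceived_benefit - self.perceived_cost


def choose_strategy(strategies: list[Strategy]) -> Strategy:
    """Return the strategy with the highest perceived net value."""
    if not strategies:
        raise ValueError("at least one strategy is required")
    return max(strategies, key=lambda s: s.net_value)


# Hypothetical numbers, chosen only to illustrate the comparison.
options = [
    Strategy("study thoroughly", perceived_benefit=8.0, perceived_cost=6.0),
    Strategy("study a little", perceived_benefit=4.0, perceived_cost=2.5),
    Strategy("cheat", perceived_benefit=7.0, perceived_cost=4.0),
]
print(choose_strategy(options).name)
```

Under this reading, anything that raises the perceived cost of cheating (for instance a higher perceived risk of detection) or lowers its perceived benefit can change which strategy comes out on top.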

For deeper insight into the more under-the-hood relations between student goals, motivation and expectancy, one can refer to Covington (2000) and Eccles & Wigfield (2002).

From a different perspective, Lawrence Hinman says:

People with integrity not only refrain from cheating, but don't want to cheat. [...] People with integrity have a sense of wholeness, of who they are, that eliminates the desire to pretend, through cheating, through plagiarizing, and the like, that they are someone else. For them, signing their name to something signifies that it is theirs. They would not want to pass something off as their own. (Hinman, 1997, no page numbering)

People with integrity also have a clear vision of what is right and what is wrong. Their world is not the murky world of thoughtless and easygoing relativism, but a world that is sharply illuminated by the light of their vision of goodness. And added to this clarity of vision is the strength of will to act on the basis of that vision. They see what is right, and they stand up for it, even when the personal cost is high. (Hinman, 1997, no page numbering)

Figure 2.2: Model of the student cheating decision with internal (personal) and external factors, based on Dick et al. (2003)

Dick et al. (2003) identified four factors on which a student's decision to cheat may depend: sensitivity as the ability to interpret a moral situation, judgement as the ability to determine whether a certain action is correct or not, [self-]motivation as the influence of internal values, and character as the ability to resist pressures to perform an immoral act.

As an extension to the previous model, Dick et al. provide a model of the student cheating decision based on internal factors (personal domain) and external ones, as shown in figure 2.2. Technology is seen in this context as the enabler of different possibilities, cheating among others. Societal context refers to e.g. the influence of a student's peer group, family, media, role models, culture, etc. Situational context may include e.g. a heavy or irrelevant course load, inadequate teaching, difficult assignments, lack of environment control by the examiners or proctors, some sort of dependence on passing the examination, etc. Demographic factors include age, gender, marital status, socioeconomic status, ethnicity and religiosity (Dick et al., 2003).

Diekhoff et al. (1996), O'Leary (1999) and McCabe et al. (2006) discuss relationships between cheating and cheater properties such as age, gender, and cultural, educational or professional background. For instance, in environments where words are perceived as belonging to society more than to the individual, cheating tends to be perceived as more acceptable and hence is more commonplace (O'Leary, 1999).

The importance of performing well on an examination, and hence the increased fear-based cheating pressure evoked by conditions with a high student population and grading that strongly affects an individual's future career, also tends to result in higher cheating rates among students (Howell et al., 2009). In contrast, dominantly intrinsically motivated students (those with a dominant mastery goal orientation) show less cheating behavior than their dominantly performance-goal-oriented or dominantly neutral peers (Rettinger & Kramer, 2008).

According to Whitley & Keith-Spiegel (2002), there are five student norms under which certain behaviors are usually not perceived by students as academically dishonest: (1) students may study from old tests without explicit permission (as long as the tests are not stolen), (2) taking shortcuts such as reading condensed books, listing unread sources in a bibliography, and faking lab reports is permissible, (3) unauthorized collaboration with others is fine, especially when helping friends, (4) some forms of plagiarism, such as omitting sources and using direct quotations without citation, are acceptable, and (5) conning teachers by faking excuses for missing deadlines and the like is permissible. Such misconceptions incline students towards the respective forms of cheating without realizing their seriousness.

On top of that, Wehman (2009) identified fear of negative teacher evaluations, as well as student morals and habits formed years earlier, as topics related to the cheating problem.

Students often know that they are conducting an immoral activity when cheating. As summarized by Whitley & Keith-Spiegel (2002), and corresponding with TPB, the theory of cognitive dissonance (Aronson, 1969) and neutralization theory (Harris & Dumas, 2009), students' justifications for academic dishonesty (seemingly applicable to any kind of consciously immoral activity in general) can include denial of injury ("it doesn't hurt anyone"), denial of personal responsibility ("I got sick and couldn't read the stuff"), denial of personal risk ("they can't punish me anyhow"), selective morality ("I only cheat to pass the classes", or "friends come first, they needed help"), trivializing, i.e. minimizing seriousness ("the assignment has a little weight in final grade"), a necessary act ("if I don't do well, my parents will kill me"), and dishonesty as a norm ("everyone does it").

Another argument placing cheating in a more acceptable light is that the border between cheating, more specifically plagiarism, and the collaborative spreading of knowledge seems somewhat conflicted and fuzzy:

There is a certain unambiguity about when collaborating in learning community to extend knowledge and understanding stops and submitting only your own work starts. (Le Heron, 2001, p. 3?)

Le Heron (2001) also says the following regarding student expectations:

Student expectation is that their study will qualify them for a high paying job.

Many are mature students re-training and in order to re-join the workforce

quickly they often take more papers than they can cope with. Some students

have the expectation that will pass because they have paid reasonably high fees.

(Le Heron, 2001, p. 245)

Extensively interviewing six rst-year masters students from three dierent pro-

grams at a university, Love & Simmons (1998) identied a set of factors correlated

to plagiarism behavior, which are divided into several groups based on character of

the factors: mediation character (inhibiting vs. contributing), factor type (internal

12

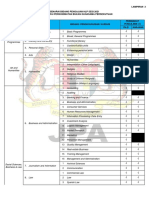

Mediation Type Eect Factor

Inhibiting

Internal

Positive

Personal condence

Positive professional ethics

Fairness to authors

Desire to work or learn

Fairness to others

Negative

Fear of detection consequences

Guilt

External

Professors knowledge

Probability of being caught

Time pressure

Cheating perceived as dangerous

Type of work required

Need for knowledge in the future

Contributing

Internal

Negative personal attitudes

Lack of awareness

Lack of competence

External

{Grade, time, task} pressure

Professor leniency

Table 2.1: Factors correlated to plagiarism behavior according to Love & Simmons (1998)

vs. external), and emotional eect (positive vs. negative). Those are summarized

in table 2.1. The set of factors is further extended by theoretical summary of Olt

(2007) and Megehee & Spake (2008) as summarized on table 2.2 according to the

apprehension of the author of this thesis. Although the authors focus on plagiarism

behavior, the results seem to have partial relevance to cheating in general.

In addition to the tables, Iyer & Eastman (2008) found that students' perceptions of low social desirability are directly correlated with the amount of their cheating behavior.

In the form of an extended application of TPB, figure 2.3 graphically summarizes the causes of cheating, with the expected benefits as one of the groups of cheating factors.

| Mediation | Type | Factor |
|---|---|---|
| Inhibiting | Internal | Academic achievement |
| | | Age |
| Contributing | Internal | Difficulty seeing marks of plagiarism |
| | | Disorganization |
| | | Cryptomnesia |
| | | Fear of failure |
| | | Procrastination and laziness |
| | | Sense of alienation |
| | | Thrill seeking |
| | | Social activities |
| | | Cheating rationalization |
| | | Absenteeism |
| | External | Unrealistic assignments |
| | | Ambivalence of faculty and administration |
| | | Benefits outweigh risks |
| | | Competition (jobs and graduate school) |
| | | Devaluing assignment by the instructor |
| | | Ethical lapses |
| | | Information overload |
| | | Institution's subscriptions to market ideologies |
| | | Instructor bad example |
| | | Prominent bad examples |
| | | Opportunity |
| | | Peer observation |
| | | Social networking |
| | | Instructor's failure to keep pace with tech. advances |
| | | Instructor's failure to rotate curriculum |
| | | Instructor's lenience |
| | | Lack of trust between student and instructor |
| | | Previous cheating experience |
| | Internal | Cultural background |
| | | Gender |
| | | Marital status |
| | | Major |
| | External | Student perception of instructor |
| | | Testing environment |

Table 2.2: My understanding of the factors correlated to plagiarism behavior according to Olt (2007) and Megehee & Spake (2008), listing those additional to table 2.1

Figure 2.3: Model of cheating causation (inspired by Whitley & Keith-Spiegel, 2002)

Drawing an analogy between cheaters in the educational field and attackers in the field of information security: as there are different types of attackers, there might similarly be different types of cheaters. According to Whitman & Mattord (2007), attackers have different motivations to intrude, such as personal and social status, the thrill of doing it, revenge, financial gain, ideology, industrial espionage, etc. By analogy, cheaters might also cheat for different reasons, such as a notion of personal gain (grades or other academic credit, personal or social status), providing oneself with an additional (although forbidden) layer of protection against failure, fitting into a social environment, or simply having a habit of cheating.

Although students are mostly believed to cheat for grades (Cizek, 1999), views and experiences on this may differ slightly, e.g. that cheaters mostly just want to pass a course or an examination (Le Heron, 2001).

To sum up this section, it seems that cheating intentions among students are in no danger of dying out, at least as long as we use the kinds of school systems we use today. That could mean that we'll have to keep combating cheating in one way or another for a very long time. Besides, there are a number of cheating correlates, which might make cheating a clue or a signal pointing toward other educational issues at an institution that need improving.

2.1.3 The mission: preventing cheating

In the history of education and assessment, a number of cheating prevention methods have been used. Each of those can be categorized according to its prevention approach or strategy.

Lawrence Hinman, as cited in Olt (2002), identified three approaches to minimizing cheating:

- The virtues approach, seeking to promote students' intrinsic motivation (self-motivation) to be honest and to learn instead of cheating. It is a promotion-based and deeply positively oriented approach.

- The prevention approach, seeking to eliminate or reduce cheating opportunities and suppress elements of cheating culture. This is a neutrally oriented approach: not promoting, not deterring, just reducing the time and space in which to cheat.

- The police approach, seeking to detect and punish cheating in reaction to it. This approach is based on punishment and deterrence (as described and discussed by e.g. Carlsmith et al., 2002); in other words, the "big brother" style.

Inspired by the risk management terminology of Whitman & Mattord (2008), all of

the approaches can be seen as a form of cheating avoidance, the last one perhaps

also being partially mitigative.

Similarly, Olt (2002) identified four basic strategies for minimizing academic dishonesty in online assessment. For the sake of clarity, I have assigned names to them (in italics):

1. Environment control strategy. This strategy focuses on acknowledging the disadvantages of online assessment and finding ways to overcome them through technical and operational means of perceiving and/or controlling the examination environment.

2. Hardened assessment design strategy. This strategy focuses on effectively designing the online assessment and the assignments (questions) in order to reduce their cheating-proneness.

3. Unique assignment strategy. This strategy focuses on the uniqueness of assignments, or rather of the correct answers to them, by e.g. rotating or modifying the curriculum, so that e.g. sharing graded assignments or exams does not help cheaters much.

4. Integrity policy strategy. This strategy focuses on providing students with an academic integrity policy in order to promote an environment of integrity (free from cheating).

In the field of combating plagiarism, Usick (2004), as cited in Olt (2007), created a plagiarism prevention model called the Three-Rs model, which stands for (1) respect between instructor and student towards each other and the academic discipline, (2) relevancy in linking the course matter with real-world matter in a student's perception, and (3) refreshing of integrity policy awareness.

Within the area of information systems teaching, Le Heron (2001) tried to identify countermeasures to cheating in plain paper-based examination. Those are: an oral explanation of skills in addition to writing a paper, an offline performance consistency test in addition to writing a paper, and an online skills test only. In the context of Le Heron's research, the online skills test proved most effective in terms of cheating detection, provability and verification of student skills, although it is potentially possible for a student to reuse work completed by someone else at an earlier session. Another point is that online testing poses additional administration requirements such as registering and identifying students, marking procedures, and securing the examination process (Le Heron, 2001).

Howell et al. (2009) identified a number of ways used to combat cheating:

- The Honor System, which builds on creating an honest and cheating-resistant atmosphere and culture.

- Banning or controlling electronic devices.

- Photo and/or government identification.

- Physical biometric scanning such as fingerprinting and palm vein scanning.

- Commercial security systems such as web camera or 360-degree camera surveillance systems, behaviometric (behavioral biometric) authentication and identification systems, and systems that ask examinees personal questions gathered from a database.

- Cheat-resistant computers using a highly restrictive computer environment, which allows students to do little more than write the exam using the computer.

- Lawsuits to fight companies and websites providing braindumps (answer sheets in different forms), which is an approach mostly used by large corporations.

- Computer-adaptive testing and randomized testing. Instead of every examinee having the same variant of the test, the rest of the test varies based on how one has answered the questions so far.

- Statistical analysis [-based detection]. This includes different types of statistical analysis and forensic methods, among others behaviometrics used for purposes other than plain authorization or identification.
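As an illustration of the randomized testing item in the list above, the sketch below draws an examinee-specific selection and order of questions from a shared pool. It covers randomization only, not computer-adaptive testing, and the function `draw_exam`, its parameters and the seed format are assumptions made for this example rather than anything described by Howell et al. (2009).

```python
import random


def draw_exam(question_pool: list[str], examinee_id: str, n_questions: int,
              exam_seed: str = "hypothetical-exam-seed") -> list[str]:
    """Draw a reproducible, examinee-specific selection and order of questions."""
    if n_questions > len(question_pool):
        raise ValueError("pool is smaller than the requested exam length")
    # Seeding with a string is deterministic, so the same examinee always
    # receives the same variant and a grader can regenerate it later.
    rng = random.Random(f"{exam_seed}:{examinee_id}")
    # sample() picks without replacement and returns the picks in random order.
    return rng.sample(question_pool, n_questions)


# Hypothetical pool of twenty question identifiers.
pool = [f"Q{i}" for i in range(1, 21)]
print(draw_exam(pool, "student-42", 5))
print(draw_exam(pool, "student-43", 5))
```

Deriving the variant from the examinee identifier, rather than storing it, is just one possible design choice; it keeps the sketch stateless, but anyone who knows the seed can reproduce a variant, so a real deployment would have to keep the seed secret.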

Following Cizek (1999), Rowe (2004) and Deubel (2003), there are a few more ideas for fighting cheating, e.g.:

- Knowing the writing style of students before examining them, to be able to detect diction or writing style anomalies more easily.

- Planning for unexpected matters, which can occur when using information technology, or simply in examination operation in general. For instance, a student's computer may crash, or may be taken down intentionally. Similarly, students may ask to use a bathroom or to have a drink or a snack innocently, or in an attempt to realize fraudulent intentions such as cheating.

- Entrapment, such as planting fake tests in locations where curious people searching for exam questions or answers are likely to find them. It is analogous to honeypots in network security as discussed in Whitman & Mattord (2007). Applied to education, however, this method seems to cross the border of professional ethics.

From a faculty-defensive point of view, Whitley & Keith-Spiegel (2002), as cited in Wehman (2009), list three conditions under which a faculty member can become liable for student harm: if the faculty member (1) makes a malicious false accusation, (2) discusses a cheating case, using a student's name, with individuals not involved in the case resolution, or (3) violates a student's right to due process by ignoring the institution's procedures for resolving accusations of academic dishonesty. Wehman (2009) also identified reasons why faculty personnel do not always take action in response to detecting cheating, listed here from most to least frequent: being aware that nothing would have prevented the faculty from acting, being afraid of inability to prove the case, student denial of the incident, it would be too time consuming to pursue, being afraid of lawsuits, fearing hassle from administration, the student negotiated a good excuse, being lazy, being afraid that management skills would be perceived as lacking, knowing that the student was making decent progress in the course, being afraid of student violence, being afraid of damaging the relationship with the student, and having identified the cheating only after a grade was given to the student. That is to say, there are many hindrances to proceeding from cheating detection to reaction for those who have the interest or responsibility to do so.

Finally, Dick et al. provide a recommendation:

An ounce of prevention is worth pound of cure - deterring cheating is far more effective than detecting and punishing cheating due to the costly nature of formal responses to cheating, so academic should focus their time and energy on pre-empting cheating rather than detecting cheating. (Dick et al., 2003, p. 182)

In conclusion, there seem to be quite a number of different means to fight cheating; however, as seems to be generally the case, there are no silver bullets that simply fix it all alone. According to what was summarized, an educational institution needs to employ a broad range of approaches and methods to be effective in this process. Omitting one or more approaches, e.g. focusing on detection, reaction and deterrence only, while not cheat-proofing the environment and/or building a culture of integrity, might not work very well, especially in the long run. Although this study primarily aims at the police approach, this section was also meant to point out that this approach needs complementary support, since on its own it is too incomplete to rely on.

2.1.4 How do students cheat?

In my view, answering the question of how cheating occurs is required in order for cheating detection methods to be developed. Since a comprehensive list of cheating methods would be vast, yet not directly useful for the study, this section instead tries to categorize the methods by their operational similarity.

First of all, the word examination might mostly be associated with a typical long and extensive individually written examination at the end of a course. There are, however, different types of examination, or assessment in general. Kim et al. (2008) list several from the available literature (described below) and compare their usage in three different programs at a university. According to assessment type, there is formative assessment (assessment of learning experience progress; continuous, ongoing assessment and feedback), and summative assessment (measuring learning at the end of the process; traditional tests). Besides, assessment can be categorized by individuality into individual assessment (e.g. personal assessment, self-assessment) and team assessment (e.g. assessment in collaborative learning).

According to assessment instrument or method, there are: paper or essay (e.g. student papers and reports); exam, quiz or problem set (e.g. conventional tests, proctored testing, midterm and final exams, self-tests); discussion or chat (e.g. online discussion, chat or e-mail); project, simulation or case study (e.g. authentic assessment, collaborative projects, case studies); reflection (e.g. meta-cognitive essay); portfolio (e.g. electronic portfolio, portfolio essay); and peer evaluations. These are different types of examination, presumably each prone to cheating in one way or another, all to a different extent.

Rowe (2004) identifies three main categories of cheating problems: (1) getting assessment answers in advance, (2) unfair retaking of assessments, and (3) getting unauthorized help during assessment. Those can be broken down further for the sake of specificity. Inspired by Airasian (2001), Cizek (1999), Stumber-McEwen et al. (2009), Howell et al. (2009), Rowe (2004), Dick et al. (2003) and Faucher & Caves (2009), the following categories of examination cheating can be identified. Those are, however, still rather general, as chosen for the purpose of this study:

- Using physical resources to cheat. This can occur in the form of reading one's own or others' crib, desk or hand notes, papers, books, pieces of clothing or tissues, looking at other students' work, or using steganographic methods (e.g. ultraviolet light) to reveal notes or other data hidden in that way.

- Using electronic resources to cheat. For example, using resources such as notes, papers, e-books, web sites, old student work or old answer sheets from a computer network, computer, telephone or other electronic medium that is not allowed.

- Using communication which is not allowed. Examples are talking to peers, listening to someone online or over a radio device, or any other exchange of signals with peers or anyone else besides the examiners and/or persons with whom communication is allowed in a specific way. Even asking an examiner for details about a question as if it were unclear, in order to get more information for figuring out the answer to that or some other question, is in fact also cheating.

- Using unauthorized intelligence, such as obtaining answers or examination questions in advance.

- Impersonation, which means using someone else to take part or all of an examination instead of the authentic person.

- Fabrication of facts or measurements, such as misreporting the error of measurement, etc.

- Corrupting examination integrity, such as changing answers when teachers allow students to grade each other's tests, or unauthorized access to the tests between being taken and being graded.

- Process-level tricks, such as using deceptive excuses, or unfair retaking of exams and hence training oneself for a specific type of question instead of adequately learning the study matter.

- Social engineering, such as grade negotiation through exploitation of personal sympathy, etc.

- Organized cheating and faculty personnel corruption, such as bribing examiners or proctors, illegal infiltration of the grading process and other types of serious fraud (this is in fact also a form of corrupting the integrity of the examination or grading process).

- Plagiarism, which means using parts of someone else's work without giving adequate credit.

To sum up, a number of different cheating categories have been identified across the existing literature. Some of the categories cover tens or perhaps hundreds of specific cheating methods. Information about those, together with fairly advanced cheating tactics, can be found in Cizek (1999, chap. 3). For the purpose of this study, however, describing them in detail seems to be of marginal importance, since new technologies are being invented, and cheaters keep modifying the existing ways to cheat and finding new ones all the time.

Methods used to cheat on tests are like snowflakes: There is an infinite number of possibilities. The possibilities are, however, related to the type of testing being considered. (Cizek, 1999, p. 37)

Many forms of exam-time cheating seem to have a common denominator: obtaining information from disallowed sources (by reading, hearing, etc.) to give correct answers without having learned the subject matter, or letting someone else answer instead of the authentic person. The remaining types of cheating seem to require a longer time or conditions other than those of an exam to set up, and hence are of marginal interest for this study.

2.1.5 Detecting cheating as a means of prevention

One of the strategies in the mission of preventing cheating is deterrence through detection and response. As discussed previously, in order to respond, one must detect first. There are a number of approaches to the detection of different kinds of cheating. The following list tries to identify those based on existing literature such as Cizek (1999), Howell et al. (2009) or Rowe (2004):

- Checking identity, i.e. that it is the authentic person who is being examined.

- Checking for forbidden tools such as crib notes, electronic devices, etc.

- Using examination proctors, who manually observe an examination environment. For completeness, those can also be undercover proctors posing as examinees or as uninvolved individuals during an examination.

- Automated surveillance systems, which monitor students in different ways during examination.

- Plagiarism detection systems and Internet searches, which try to detect collusion between students, cut-and-paste plagiarism, and the usage of paper mills (old paper databases) by e.g. searching in those and searching the Internet for similar texts among everything freely accessible and indexed by search engines (such as Google).

- Statistical analysis methods, most of which analyze parameters of student responses to examination assignments or questions and the similarities of those within a group. Besides that, statistical methods can also address different measures of human behavior.

- Possibly also auditing and intra-organizational intelligence¹, which can be used for combating e.g. personnel corruption.
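To make the statistical analysis item in the list above more concrete, the sketch below counts, for each pair of students, how many answers are identical and wrong at the same time. This is a deliberately simple stand-in for the answer-similarity methods discussed in the literature, not a reproduction of any of them; the function names, the threshold and the sample data are hypothetical.

```python
from itertools import combinations


def shared_wrong_answers(responses: dict[str, list[str]],
                         answer_key: list[str]) -> dict[tuple[str, str], int]:
    """Count, for every pair of students, identical answers that are both wrong."""
    counts = {}
    for a, b in combinations(sorted(responses), 2):
        counts[(a, b)] = sum(
            1
            for ans_a, ans_b, correct in zip(responses[a], responses[b], answer_key)
            if ans_a == ans_b and ans_a != correct
        )
    return counts


def flag_pairs(counts: dict[tuple[str, str], int],
               threshold: int) -> list[tuple[str, str]]:
    """Return the pairs whose shared-wrong-answer count reaches the threshold."""
    return [pair for pair, n in counts.items() if n >= threshold]


# Hypothetical five-question multiple-choice exam.
key = list("ABCDA")
answers = {
    "s1": list("ABDDC"),
    "s2": list("ABDDC"),
    "s3": list("ABCDA"),
}
print(flag_pairs(shared_wrong_answers(answers, key), threshold=2))
```

Matching correct answers are ignored because well-prepared students are expected to agree on them. A flagged pair is only an indicator that warrants a closer look, since identical mistakes can also stem from a shared misconception.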

Similarly, Harris (2009) identified some strategies for detecting plagiarism: looking for clues, knowing the possible sources of a suspect paper and/or searching for the paper online, and using a plagiarism detection system, which can automate the previous steps. Regarding the clues, the following examples are mentioned: mixed citation styles, lack of references or quotations, inconsistent formatting, off-topic elements, signs of datedness such as a lack of recent sources from a certain year, anachronisms such as referring to long-past events as if they were current or recent, anomalies and inconsistencies in style (vocabulary usage, rhetorical structure, punctuation, spelling, layout, etc.), and smoking guns such as text ("Thank you for using TermPaperMania"), inconsistently embedded links (URLs) and other forms of direct and apparent plagiarism evidence (Harris, 2009).

¹ This item has been added by the author and is not mentioned in the literature cited herein.

Additionally, University of Alberta Libraries (2009) identify the clue that if a submitted paper exceeds the student's research or writing capabilities, has an anomalous tone (too professional, journalistic or scholarly), or simply somehow largely exceeds expectations of the student, it might signal plagiarism or some other form of cheating.

Within cheating detection based on personal vigilance, Dick et al. identify techniques such as careful scrutiny, eye inspection, hand analysis, observation, and pattern spotting. Three comparisons commonly made are

(1) across the students looking for similarities of submissions, (2) within an

individual assessment looking for changes in style or unusual ideas, (3) with

previous work by the same student looking for dramatic changes in quality.

(Dick et al., 2003, p. 181)

As an important note, also relevant to this study, Cizek (1999) points out the difficulty and pitfalls of taking probabilistic evidence as sufficient to prove cheating. Although the class of statistical cheating detection methods seems to be the most promising regarding power and availability, the methods may function as an indicator and deterrent rather than as a tool providing strong evidence on its own. Another fact is that Cizek focused on statistical methods of analyzing examination answers, which do not take into account any measurements made during the examination process, and thus builds on assumptions such as that the methods cannot detect the use of cheat sheets (crib notes), impersonation, electronic communication, etc. In contrast, this study hopes to show the opposite.

2.1.6 Cheating review summary

This part tries to summarize what was reviewed about examination cheating in this section so far and how it is perceived by the author. It is complemented by figures 2.4 and 2.5.

In the very narrow goal context of attaining an examination pass (or a score high enough), cheating simply emerges as a highly viable strategy. As such, it is probably often going to be chosen by students, and its high effectiveness is probably often going to conflict with the ideals of morality, ethics, and the principles of academic integrity and productivity at both the personal and the societal level. Those seem to be facts one cannot do much about. On the other hand, within cheating prevention in terms of preemption, one can try to change the parameters of some student decision-making processes by e.g.:

Strengthening the ideals of morality and ethics, or the perception of academic

integrity principles so that they outweigh cheating incentives in the process

of cheating consideration. An example way to accomplish this is the use of

academic integrity programs.

• Broadening the perceived goal context, e.g. by making students understand why and how it is beneficial for them to (1) learn the study matter properly, (2) avoid getting caught cheating because of its probable consequences, and (3) not contribute to the spread of the cheating culture. This can also be a goal of an academic integrity program.

• Limiting both the challenges and the possibilities of cheating, e.g. by optimizing the design of courses, assessments and assessment environments. The optimization itself seems to be a non-trivial task, which needs to address a number of different relations between (1) cheating incentives, (2) their factories inside a student's mind and (3) the extrinsic arousal of those.

• Increasing the risk (penalty and probability) of being caught cheating, e.g. by hardening the consequences of being detected cheating and by increasing cheating detection capabilities.

Cheating itself can occur in a number of forms. Thanks in part to the generally desired and deeply valued inventiveness of students, the forms of cheating effectively change over time, which makes it both costly and inefficient to address the detection and prevention of narrow groups of cheating forms one by one. Moreover, doing so leaves the counter-cheaters, at best, a couple of steps behind the cheaters. Regarding cheating detection, there are efforts to develop more effective methods capable of detecting a broader and more general range of cheating forms, i.e. through applying automated statistical analysis to different measures of human behavior.

Last but not least, both the detection and the prevention of cheating face hindrances and limits of different kinds, ranging from misalignment between counter-cheating and administrative concerns, through fear of reporting cheating, up to the political unsuitability of e.g. cheating detection methods.

Figure 2.4: Graphical overview of cheating and counter-cheating relations

Figure 2.5: Overview of a cheating and counter-cheating process

2.2 Specifics of distance operation

There is no doubt about the great accessibility advantages and the freedom in the choice of study tempo that the concept of distance work provides. On the other hand, upon some reflection, the distance mode of operation could affect at least the following aspects compared to the conventional one:

• The study/examination environment and the student's perception of it. A difference between the on-site and the distance study/examination environment seems apparent. On-site students can attend school sessions together with peers in an environment with a strongly academic feel, walk or travel to school, attend lectures where they see peers and lecturers, and often feel part of a student community sharing similar goals with others who are physically near. One can have lunch and talk to peers, study together and cooperate on assignments face to face, etc. Distance students attend school sessions from behind a computer screen, seeing and hearing peers and lecturers through a videoconferencing tool, reading course materials from a remote learning management system and rather seldom having a computer-mediated peer discussion (Paulsen, 2001), perhaps physically alone most of the time. Regardless of whether one is in some ways superior or inferior to the other, there are certainly many differences between how an on-site student and a distance student can perceive and feel about their studies. The difference seems to apply similarly to the examination process. Sitting in a controlled room under adequate surveillance certainly feels different from sitting in one's office or living room with a microphone and a webcam, with a fixed and limited field of view, switched on.

• The possibilities and capacities of communication channels among students and between students and teachers. One can surely e-mail or call a peer or a teacher regardless of whether one is an on-site or a distance student. The difference may arise when one wants to discuss a topic face to face, simply because it could under some circumstances be more effective (Paulsen, 2001; Stumber-McEwen et al., 2009). A question is whether a videoconferencing tool can be a sufficient replacement for a physical meeting (see Media Synchronicity Theory by Dennis & Valacich, 1999) for all types of students, not only in terms of the plain words being said, but also in how they are said and heard, how both communicating parties perceive the atmosphere, how close they feel toward each other as people, and, more generally, how enjoyable such a meeting is overall compared to a physical meeting, not forgetting a range of motivational factors and cheating correlates possibly involved, such as those mentioned earlier in the text (e.g. tables 2.1 and 2.2). Paulsen (2001) presents an empirical study of distance student perspectives in Norway, stating that the usage of electronic discussion forums is weak and that, while communication with teachers is mostly perceived as satisfactory, communication among study peers is mostly seen as lacking.

• The level of examiner perception and control of the examination environment. The ability to control the environment, or at least to perceive and detect different activities of examinees or students, changes from the conventional to the distance environment and mode of operation (Rowe, 2004). In a conventional examination, an examiner can often see parts of the classroom from different angles and also hear what is happening. Although this could be possible in a distance examination as well, it could require rather special surveillance equipment for students, which comes at a cost to obtain and operate. Yet another type of problem is the analytical capacity of such detection systems: does a system just record data (e.g. voice, video, keystrokes, etc.) and leave the actual detection across tens or hundreds of students up to a human, or can it operate automatically?

• The behavioral distance between acceptable operation and cheating. In other words, how much syntagmatic behavioral difference there is between the two.

• The level of cheating possibilities. Provided that an environment is largely uncontrolled and unperceived by the examiners, how and how well can one keep students away from cheating?

• Indirectly, the extent to which employers accept distance degrees. Public trust in, and employer acceptance of, distance degrees seems to be lower than for conventional degrees (Columbaro & Monaghan, 2009; Bourne et al., 2005; Allen & Seaman, 2003). Although it might be tricky to identify the reasons for this mistrust, some of them could presumably be related to different assumptions about the quality limits of distance education, cheating in distance assessment, or simply doubts about a nonstandard and unconventional way of studying.

The intention of these lines is not to mark one of the two environments as superior or inferior to the other. It is to indicate that an environment may have practical advantages while at the same time having practical disadvantages, some of them in the form of threats.

A different and more friendly view of the concept of distance education is that it best suits adults in need of additional or continued education who cannot afford an interruption from their job (Paulsen & Rekkedal, 2001). Moreover, compulsory time-bound sessions have been shown to dramatically reduce the application interest of this type of student (ibid).

Regarding statistics comparing cheating among on-site and distance students, there are a couple of studies showing varied results (Stumber-McEwen et al., 2009; Herberling, 2002; Watson & Sottile, 2010). Some of them state that distance students cheat more, while others state the opposite. Either way, according to the results presented, distance students cheat just as their on-site counterparts do, and that seems to be a good reason to find ways of reducing the problem.

Chapter 3

Conceptual framework

This chapter identifies important theoretical concepts related to cheating detection in the context of this study. These include cue leakage theory, pattern recognition theory, anomaly detection, and behaviometrics. Finally, my vision of a cheating detection approach binds them together by outlining the approach, and the vision is related to a specific goal through the summarized theory on examination cheating.

3.1 Cue leakage theory

Cue leakage theory is a concept based on the fact that when someone performs an activity, the person tends to unconsciously leave cues about the activity being performed. Perhaps the most common example is lying, which leaves different cues, many in the form of muscular activity such as facial gestures (Ekman, 1985). Although the process of leaving cues is to some extent deliberately controllable (ibid), it is questionable whether one can hide all the respective cues when e.g. lying to another person face to face. Generalized, the concept applies to the field of deception detection (DePaulo et al., 2003; Anolli et al., 2001), in which recent research also focuses on the electronic (computer-based) and networked environment, specifically text-based asynchronous computer-mediated communication (TAC) (Zhou et al., 2003, 2004; Zhou, 2005; Adkins et al., 2004; Fuller et al., 2006; Lee et al., 2009). Although deception detection is not directly used in this study, the linguistic features used in the study are inspired by or taken from studies related to deception detection in TAC.
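
To give a rough idea of what linguistic features extracted from text can look like, the following minimal sketch computes a few simple text measures. The function name and the chosen measures (word count, sentence count, average word length, lexical diversity) are illustrative assumptions; they are not claimed to be the features used in this study or in the cited work.

```python
import re


def linguistic_cues(text):
    """Compute a few simple, hypothetical linguistic measures of a text."""
    # Words: runs of letters and apostrophes, lower-cased.
    words = re.findall(r"[A-Za-z']+", text.lower())
    # Sentences: non-empty chunks between ., ! or ? characters.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    return {
        "word_count": n_words,
        "sentence_count": len(sentences),
        "avg_word_length": sum(len(w) for w in words) / n_words if n_words else 0.0,
        # Share of distinct words among all words.
        "lexical_diversity": len(set(words)) / n_words if n_words else 0.0,
    }


cues = linguistic_cues("I did it. I did it alone!")
print(cues)
```

Measures of this kind could be computed per message or per answer and then compared across texts; which measures actually carry cues is an empirical question that the sketch does not address.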