A Brief Introduction to Factor Analysis

1. Introduction

Factor analysis attempts to represent a set of observed variables $X_1, X_2, \ldots, X_n$ in terms of a

number of 'common' factors plus a factor which is unique to each variable. The common

factors (sometimes called latent variables)

are hypothetical variables which explain why a

number of variables are correlated with each other -- it is because they have one or more

factors in common.

A concrete physical example may help. Say we measured the size of various parts of the

body of a random sample of humans: for example, such things as height, leg, arm, finger,

foot and toe lengths and head, chest, waist, arm and leg circumferences, the distance between

eyes, etc. We'd expect that many of the measurements would be correlated, and we'd say that

the explanation for these correlations is that there is a common underlying factor of body

size. It is this kind of common factor that we are looking for with factor analysis, although in

psychology the factors may be less tangible than body size.

To carry the body measurement example further, we probably wouldn't expect body size to

explain all of the variability of the measurements: for example, there might be a lankiness

factor, which would explain some of the variability of the circumference measures and limb

lengths, and perhaps another factor for head size which would have some independence from

body size (what factors emerge is very dependent on what variables are measured). Even

with a number of common factors such as body size, lankiness and head size, we still

wouldn't expect to account for all of the variability in the measures (or explain all of the

correlations), so the factor analysis model includes a unique factor for each variable which

accounts for the variability of that variable which is not due to any of the common factors.

Why carry out factor analyses? If we can summarise a multitude of measurements with a

smaller number of factors without losing too much information, we have achieved some

economy of description, which is one of the goals of scientific investigation. It is also

possible that factor analysis will allow us to test theories involving variables which are hard

to measure directly. Finally, at a more prosaic level, factor analysis can help us establish that

sets of questionnaire items (observed variables) are in fact all measuring the same underlying

factor (perhaps with varying reliability) and so can be combined to form a more reliable

measure of that factor.

There are a number of different varieties of factor analysis: the discussion here is limited to

principal axis factor analysis and factor solutions in which the common factors are

uncorrelated with each other. It is also assumed that the observed variables are standardised

(mean zero, standard deviation of one) and that the factor analysis is based on the correlation

matrix of the observed variables.

2. The Factor Analysis Model

If the observed variables are $X_1, X_2, \ldots, X_n$, the common factors are $F_1, F_2, \ldots, F_m$ and the unique factors are $U_1, U_2, \ldots, U_n$, the variables may be expressed as linear functions of the factors:

$$
\begin{aligned}
X_1 &= a_{11}F_1 + a_{12}F_2 + a_{13}F_3 + \cdots + a_{1m}F_m + a_1U_1\\
X_2 &= a_{21}F_1 + a_{22}F_2 + a_{23}F_3 + \cdots + a_{2m}F_m + a_2U_2\\
&\;\;\vdots\\
X_n &= a_{n1}F_1 + a_{n2}F_2 + a_{n3}F_3 + \cdots + a_{nm}F_m + a_nU_n
\end{aligned}
\tag{1}
$$

Each of these equations is a regression equation; factor analysis seeks to find the coefficients $a_{11}, a_{12}, \ldots, a_{nm}$ which best reproduce the observed variables from the factors. The coefficients $a_{11}, a_{12}, \ldots, a_{nm}$ are weights in the same way as regression coefficients (because the variables are standardised, the constant is zero, and so is not shown). For example, the coefficient $a_{11}$ shows the effect on variable $X_1$ of a one-unit increase in $F_1$. In factor analysis, the coefficients are called loadings (a variable is said to 'load' on a factor) and, when the factors are uncorrelated, they also show the correlation between each variable and a given factor. In the model above, $a_{11}$ is the loading for variable $X_1$ on $F_1$, $a_{23}$ is the loading for variable $X_2$ on $F_3$, etc.

When the coefficients are correlations, i.e., when the factors are uncorrelated, the sum of the squares of the loadings for variable $X_1$, namely $a_{11}^2 + a_{12}^2 + \cdots + a_{1m}^2$, shows the proportion of the variance of variable $X_1$ which is accounted for by the common factors. This is called the communality. The larger the communality for each variable, the more successful a factor analysis solution is.

By the same token, the sum of the squares of the coefficients for a factor -- for $F_1$ it would be $a_{11}^2 + a_{21}^2 + \cdots + a_{n1}^2$ -- shows how much of the total variance of all the variables is accounted for by that factor; dividing this sum by the number of variables, $n$, gives the proportion.

3. The Model for Individual Subjects

Equation (1) above, for variable 2, say, may be written explicitly for one subject $i$ as

$$
X_{2i} = a_{21}F_{1i} + a_{22}F_{2i} + a_{23}F_{3i} + \cdots + a_{2m}F_{mi} + a_2U_{2i}
\tag{2}
$$

This form of the equation makes it clear that there is a value of each factor for each of the subjects in the sample; for example, $F_{2i}$ represents subject $i$'s score on Factor 2. Factor scores are often used in analyses in order to reduce the number of variables which must be dealt with. However, the coefficients $a_{11}, a_{21}, \ldots, a_{nm}$ are the same for all subjects, and it is these coefficients which are estimated in the factor analysis.

4. Extracting Factors and the Rotation of Factors

The mathematical process used to obtain a factor solution from a correlation matrix is such

that each successive factor, each of which is uncorrelated with the other factors, accounts for

as much of the variance of the observed variables as possible. (The amount of variance

accounted for by each factor is shown by a quantity called the eigenvalue, which is equal to

the sum of the squared loadings for a given factor, as will be discussed below). This often

means that all the variables have substantial loadings on the first factor; i.e., that coefficients $a_{11}, a_{21}, \ldots, a_{n1}$ are all greater than some arbitrary value such as .3 or .4. While this initial

solution is consistent with the aim of accounting for as much as possible of the total variance

of the observed variables with as few factors as possible, the initial pattern is often adjusted

so that each individual variable has substantial loadings on as few factors as possible

(preferably only one). This adjustment is called rotation to simple structure, and seeks to

provide a more interpretable outcome. As will be seen in the example which we'll work

through later, rotation to simple structure can be seen graphically as the moving or rotation of

the axes (using the term 'axis' in the same way as it is used in 'x-axis' and 'y-axis') which

represent the factors.

5. Estimating Factor Scores

Given the equations (1) above, which show the variables $X_1, \ldots, X_n$ in terms of the factors $F_1, \ldots, F_m$, it should be possible to solve the equations for the factor scores, so as to obtain a score on each factor for each subject. In other words, equations of the form

$$
\begin{aligned}
F_1 &= b_{11}X_1 + b_{12}X_2 + \cdots + b_{1n}X_n\\
F_2 &= b_{21}X_1 + b_{22}X_2 + \cdots + b_{2n}X_n\\
&\;\;\vdots\\
F_m &= b_{m1}X_1 + b_{m2}X_2 + \cdots + b_{mn}X_n
\end{aligned}
\tag{3}
$$

should be available; however, problems are caused by the unique factors, because when they

are included with the common factors, there are more factors than variables, and no exact

solution for the factors is available. [An aside: The indeterminacy of the factor scores is one

of the reasons why some researchers prefer a variety of factor analysis called principal

component analysis (PCA). The PCA model doesn't contain unique factors: all the variance

of the observed variables is assumed to be attributable to the common factors, so that the

communality for each variable is one. As a consequence, an exact solution for the factors is

available in PCA, as in equations (3) above. The drawback of PCA is that its model is

unrealistic; also, some studies have suggested that factor analysis is better than PCA at recovering known underlying factor structures from observed data (Snook & Gorsuch, 1989; Widaman, 1993)].

Because of the lack of an exact solution for factor scores, various approximations have been

offered, and three of these are available in SPSS. One of these approximations will be

discussed in considering the example with real data. It is worth noting, however, that many

researchers take matters into their own hands and use the coefficients $a_{11}, a_{12}, \ldots, a_{nm}$ from the factor solution as a basis for creating their own factor scores. Grice (2001), who describes and evaluates various methods of calculating factor scores, calls this method a 'coarse' one, and compares it unfavourably with some 'refined' methods and another 'coarse' one based on factor score coefficients.

6. Calculating Correlations from Factors

It was mentioned above that an aim of factor analysis is to 'explain' correlations among

observed variables in terms of a relatively small number of factors. One way of gauging the

success of a factor solution is to attempt to reproduce the original correlation matrix by using

the loadings on the common factors and seeing how large a discrepancy there is between the

original and reproduced correlations -- the greater the discrepancy, the less successful the

factor solution has been in preserving the information in the original correlation matrix. How

are the correlations derived from the factor solution? When the factors are uncorrelated, the

process is simple. The correlation between variables $X_1$ and $X_2$ is obtained by summing the products of the coefficients for the two variables across all common factors; for a three-factor solution, the quantity would be $(a_{11} \times a_{21}) + (a_{12} \times a_{22}) + (a_{13} \times a_{23})$. This process will become clearer in the description of the hypothetical two-factor solution based on five observed variables in the next section.
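Stated generally, and assuming $m$ uncorrelated common factors with loadings $a_{jp}$ as in equations (1), the rule just described can be sketched as a single formula. The hat notation $\hat{r}_{jk}$ for a reproduced correlation is introduced here for illustration and is not the author's.

```latex
\hat{r}_{jk} \;=\; \sum_{p=1}^{m} a_{jp}\,a_{kp}
            \;=\; a_{j1}a_{k1} + a_{j2}a_{k2} + \cdots + a_{jm}a_{km},
\qquad j \neq k .
```

Setting $j = k$ in the same sum gives $a_{j1}^2 + \cdots + a_{jm}^2$, which is the communality of variable $X_j$ rather than a correlation.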

7. A Hypothetical Solution

            Loadings/Correlations                  Reproduced correlations
Variable    Factor 1   Factor 2   Communality      X1     X2     X3     X4     X5
X1             .7         .2         .53           .
X2             .8         .3         .73           .62    .
X3             .9         .4         .97           .71    .84    .
X4             .2         .6         .40           .26    .34    .42    .
X5             .3         .7         .58           .35    .45    .55    .48    .
Sum of squared loadings
              2.07       1.14

7.1 The Coefficients

According to the above solution,

$$
\begin{aligned}
X_1 &= .7F_1 + .2F_2\\
X_2 &= .8F_1 + .3F_2\\
&\;\;\vdots\\
X_5 &= .3F_1 + .7F_2
\end{aligned}
$$

As well as being weights, the coefficients above show that the correlation between $X_1$ and $F_1$ is .70, that between $X_1$ and $F_2$ is .20, and so on.

7.2 Variance Accounted For

The quantities at the bottom of each factor column are the sums of the squared loadings for that factor, and show how much of the total variance of the observed variables is accounted for by that factor. For Factor 1, the quantity is $.7^2 + .8^2 + .9^2 + .2^2 + .3^2 = 2.07$. Because in the factor analyses discussed here the total amount of variance is equal to the number of observed variables (the variables are standardised, so each has a variance of one), the total variation here is five, so that Factor 1 accounts for $(2.07/5) \times 100 = 41.4\%$ of the variance.

The quantities in the communality column show the proportion of the variance of each variable accounted for by the common factors. For $X_1$ this quantity is $.7^2 + .2^2 = .53$, for $X_2$ it is $.8^2 + .3^2 = .73$, and so on.
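The column and row sums described above can be checked with a few lines of NumPy. This is only a sketch: the array and variable names are chosen here for illustration, and the loadings are those of the hypothetical solution in section 7.

```python
import numpy as np

# Loadings from the hypothetical solution: rows X1..X5, columns Factor 1, Factor 2.
loadings = np.array([
    [0.7, 0.2],
    [0.8, 0.3],
    [0.9, 0.4],
    [0.2, 0.6],
    [0.3, 0.7],
])

squared = loadings ** 2

# Column sums: variance accounted for by each factor (about 2.07 and 1.14).
factor_variance = squared.sum(axis=0)

# The total variance equals the number of standardised variables (five here),
# so the percentages are about 41.4 and 22.8.
percent_variance = 100 * factor_variance / loadings.shape[0]

# Row sums: communality of each variable (about .53, .73, .97, .40, .58).
communalities = squared.sum(axis=1)

print(np.round(factor_variance, 2))
print(np.round(percent_variance, 1))
print(np.round(communalities, 2))
```

The same two sums (down a column, across a row) apply unchanged to the SPSS Factor Matrix in the real-data example.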

7.3 Reproducing the Correlations

The correlation between variables $X_1$ and $X_2$ as derived from the factor solution is equal to $(.7 \times .8) + (.2 \times .3) = .62$, while the correlation between variables $X_3$ and $X_5$ is equal to $(.9 \times .3) + (.4 \times .7) = .55$. These values are shown in the right-hand side of the above table.
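All ten reproduced correlations can be obtained at once by multiplying the loading matrix by its own transpose. The sketch below assumes uncorrelated factors, as the text does; the names are illustrative only.

```python
import numpy as np

# Loadings from the hypothetical solution: rows X1..X5, columns Factor 1, Factor 2.
loadings = np.array([
    [0.7, 0.2],
    [0.8, 0.3],
    [0.9, 0.4],
    [0.2, 0.6],
    [0.3, 0.7],
])

# Entry (j, k) is the sum over factors of loading_j * loading_k.
# Off-diagonal entries are reproduced correlations; the diagonal holds
# the communalities rather than correlations.
reproduced = loadings @ loadings.T

r_12 = reproduced[0, 1]  # X1 with X2: (.7 * .8) + (.2 * .3)
r_35 = reproduced[2, 4]  # X3 with X5: (.9 * .3) + (.4 * .7)

print(np.round(reproduced, 2))
```

The lower triangle of the printed matrix should agree with the reproduced correlations in the table of section 7.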

8. How Many Factors?

A factor solution with as many factors as variables would score highly on the amount of

variance accounted for and the accurate reproduction of correlations, but would fail on

economy of description, parsimony and explanatory power. The decision about the number

of common factors to retain, or to use in rotation to simple structure, must steer between the

extremes of losing too much information about the original variables on one hand, and being

left with too many factors on the other. Various criteria have been suggested. The standard

one (but not necessarily the best) is to keep all the factors which have eigenvalues greater

than one in the original solution, and that is used in the example based on workmot.sav,

which is referred to in Exercise 2 in An Introduction to SPSS for Windows. This example is

described in greater detail in the next section.

9. An Example with Real Data

This example is based on variables d6a to d6h of workmot.sav. The items corresponding to

the variables are given in Appendix 1 of An Introduction to SPSS for Windows versions 9 and

10. The root question is "How important are the following factors to getting ahead in your

organisation?" Respondents rate the eight items, such as "Hard work and effort", "Good

luck", "Natural ability" and "Who you know" on a six-point scale. The scale ranges from

"Not important at all" (coded 1) to "Extremely important" (coded 6).

9.1 The correlation matrix

Correlations

                          D6A     D6B     D6C     D6D     D6E     D6F     D6G     D6H
D6A Hard work&effort     1.000   -.320    .658   -.452    .821   -.302    .604    .178
D6B Good luck            -.320   1.000   -.062    .426   -.316    .355   -.078    .114
D6C Natural ability       .658   -.062   1.000   -.313    .709   -.220    .597    .314
D6D Who you know         -.452    .426   -.313   1.000   -.453    .597   -.243    .174
D6E Good performance      .821   -.316    .709   -.453   1.000   -.381    .679    .160
D6F Office politics      -.302    .355   -.220    .597   -.381   1.000   -.082    .200
D6G Adaptability          .604   -.078    .597   -.243    .679   -.082   1.000    .267
D6H Years of service      .178    .114    .314    .174    .160    .200    .267   1.000

Inspection of the correlation matrix suggests that certain kinds of items go together. For

example, responses to "Hard work and effort", "Natural ability", "Good performance" and

"Adaptability" are moderately correlated with each other and less so with other items.

9.2 Carrying out the Factor Analysis

Instructions for carrying out the factor analysis are given on pages 22 to 26 of An

Introduction to SPSS for Windows. Additional commands are described in the following sub-sections.

9.3 The Initial Factor Analysis Solution

The first table shows the initial and final communalities for each variable.

Communalities

                          Initial   Extraction
D6A Hard work&effort       .708       .740
D6B Good luck              .265       .262
D6C Natural ability        .581       .638
D6D Who you know           .477       .649
D6E Good performance       .782       .872
D6F Office politics        .421       .524
D6G Adaptability           .523       .577
D6H Years of service       .216       .275

Extraction Method: Principal Axis Factoring.

The final estimate of the communality, which is given in the second column of the table, is arrived at by an iterative process. To start the ball rolling, an initial estimate is used. By default, this is the squared multiple correlation obtained when each variable is regressed on all the other variables. In other words, the amount of the variance of variable $X_1$ explained by all the other variables is taken as a reasonable first estimate of the amount of $X_1$'s variance accounted for by the common factors.
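The squared multiple correlations can be sketched directly from a correlation matrix, using the standard identity that the squared multiple correlation of variable $i$ with all the others equals $1 - 1/(R^{-1})_{ii}$. The code below applies it to the correlations printed in section 9.1. The results can be compared with the Initial column, but they need not match to the third decimal, since the printed correlations are rounded and it is an assumption that SPSS analysed exactly this matrix.

```python
import numpy as np

labels = ["d6a", "d6b", "d6c", "d6d", "d6e", "d6f", "d6g", "d6h"]

# Observed correlations, copied from the table in section 9.1.
R = np.array([
    [1.000, -.320,  .658, -.452,  .821, -.302,  .604,  .178],
    [-.320, 1.000, -.062,  .426, -.316,  .355, -.078,  .114],
    [ .658, -.062, 1.000, -.313,  .709, -.220,  .597,  .314],
    [-.452,  .426, -.313, 1.000, -.453,  .597, -.243,  .174],
    [ .821, -.316,  .709, -.453, 1.000, -.381,  .679,  .160],
    [-.302,  .355, -.220,  .597, -.381, 1.000, -.082,  .200],
    [ .604, -.078,  .597, -.243,  .679, -.082, 1.000,  .267],
    [ .178,  .114,  .314,  .174,  .160,  .200,  .267, 1.000],
])

# Squared multiple correlation of each variable with all the others:
# SMC_i = 1 - 1 / (R^-1)_ii
R_inv = np.linalg.inv(R)
smc = 1.0 - 1.0 / np.diag(R_inv)

for label, value in zip(labels, smc):
    print(f"{label}: {value:.3f}")
```

Principal axis factoring then places these estimates on the diagonal of the correlation matrix and iterates; that iteration is not shown here.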

Total Variance Explained

          Initial Eigenvalues                       Extraction Sums of Squared Loadings
Factor    Total    % of Variance   Cumulative %     Total    % of Variance   Cumulative %
1         3.634       45.427          45.427        3.317       41.457          41.457
2         1.748       21.847          67.275        1.220       15.253          56.710
3          .766        9.569          76.844
4          .615        7.687          84.530
5          .415        5.188          89.719
6          .364        4.553          94.271
7          .306        3.824          98.096
8          .152        1.904         100.000

Extraction Method: Principal Axis Factoring.

The second table shows the eigenvalues and the amount of variance explained by each

successive factor. The Initial Eigenvalues are for a principal components analysis, in which

the communalities are one. The final communalities are estimated by iteration for the

principal axis factor analysis, as mentioned earlier. As can be seen from the first table, they

are somewhat less than one, and the amount of variance accounted for is reduced, as can be

seen in the second table in the section headed Extraction Sums of Squared Loadings. The rest

of the factor analysis is based on two factors, because two factors have eigenvalues greater

than one. There are other methods which can be used to decide on the number of factors,

some of which may generally be more satisfactory than the rule used here (Fabrigar et al., 1999). As an aside, it has been suggested that over-extraction (retaining more than the true number of factors) leads to less distorted results than under-extraction (retaining too few factors) (Wood, Tataryn & Gorsuch, 1996).
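The eigenvalue-greater-than-one rule, and the percentage columns of the Total Variance Explained table, amount to very little arithmetic. The sketch below uses the initial eigenvalues as printed in that table; the variable names are illustrative, and small differences from the printed percentages are expected because the eigenvalues are rounded to three decimals.

```python
import numpy as np

# Initial eigenvalues as printed in the Total Variance Explained table.
initial_eigenvalues = np.array(
    [3.634, 1.748, 0.766, 0.615, 0.415, 0.364, 0.306, 0.152]
)

# With standardised variables the total variance equals the number of variables.
n_variables = initial_eigenvalues.size

percent = 100 * initial_eigenvalues / n_variables
cumulative = np.cumsum(percent)

# Retain the factors whose eigenvalue exceeds one.
n_retained = int(np.sum(initial_eigenvalues > 1.0))

print(np.round(percent, 2))
print(np.round(cumulative, 2))
print("factors retained:", n_retained)
```

Other criteria mentioned in the conclusion, such as the scree plot or parallel analysis, would replace only the last step.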

Factor Matrix (a)

                          Factor
                          1        2
D6A Hard work&effort      .857     .078
D6B Good luck            -.347     .376
D6C Natural ability       .749     .276
D6D Who you know         -.590     .548
D6E Good performance      .927     .110
D6F Office politics      -.465     .555
D6G Adaptability          .664     .368
D6H Years of service      .187     .490

Extraction Method: Principal Axis Factoring.
a. 2 factors extracted. 9 iterations required.

The table headed Factor Matrix shows the coefficients $a_{11}, a_{12}, \ldots, a_{nm}$ for the factor analysis model (1); for example, the results show that variable d6a $= .857 \times F_1 + .078 \times F_2$, d6b $= -.347 \times F_1 + .376 \times F_2$, and so on.

As pointed out earlier, the sum of the squared loadings over factors for a given variable shows the communality for that variable, which is the proportion of the variance of the variable explained by the common factors; for example, the communality for d6a is $.857^2 + .078^2$, which is equal to .740, the second figure shown for that variable in the Communalities table above. The sum of the squared loadings for a given factor shows the variance accounted for by that factor. The figure for Factor 1, for example, is equal to $.857^2 + (-.347)^2 + .749^2 + \cdots + .187^2 = 3.32$, which is the figure given for the first factor in the second part of the Total Variance Explained table above. Finally, we may reproduce the correlations from the factor-solution coefficients. The correlation between D6A and D6B, for example, is equal to $(.857 \times -.347) + (.078 \times .376) = -.268$, a not unreasonable but not startlingly accurate estimate of the -.32 shown in the table of observed correlations.
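The three calculations in this paragraph can be repeated for every variable and factor at once. The sketch below uses the loadings printed in the Factor Matrix table; the names are illustrative, and agreement with the SPSS tables is only to about three decimals because the loadings are rounded.

```python
import numpy as np

# Unrotated loadings from the Factor Matrix table (rows d6a..d6h).
factor_matrix = np.array([
    [ 0.857, 0.078],  # d6a Hard work & effort
    [-0.347, 0.376],  # d6b Good luck
    [ 0.749, 0.276],  # d6c Natural ability
    [-0.590, 0.548],  # d6d Who you know
    [ 0.927, 0.110],  # d6e Good performance
    [-0.465, 0.555],  # d6f Office politics
    [ 0.664, 0.368],  # d6g Adaptability
    [ 0.187, 0.490],  # d6h Years of service
])

# Row sums of squares: communalities (compare the Extraction column).
communalities = (factor_matrix ** 2).sum(axis=1)

# Column sums of squares: variance accounted for by each factor
# (compare Extraction Sums of Squared Loadings, about 3.32 and 1.22).
ss_loadings = (factor_matrix ** 2).sum(axis=0)

# Reproduced correlations, and the residual for d6a with d6b.
reproduced = factor_matrix @ factor_matrix.T
observed_ab = -0.320
residual_ab = observed_ab - reproduced[0, 1]

print(np.round(communalities, 3))
print(np.round(ss_loadings, 3))
print(round(reproduced[0, 1], 3), round(residual_ab, 3))
```

The residual for d6a and d6b is about -.05, which is the discrepancy the text describes as not startlingly accurate.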

9.4 Plot of the Factor Loadings

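[Figure: Factor Plot of the unrotated solution. Horizontal axis: Factor 1; vertical axis: Factor 2; both axes run from -1.0 to 1.0. One point is plotted for each of the eight items: hard work&effort, good luck, natural ability, who you know, good performance, office politics, adaptability and years of service.]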

When there are two factors, the coefficients shown in the Factor Matrix table may be plotted on a two-dimensional graph, as above (choose Unrotated factor solution in the Display panel of the Factor Analysis: Extraction display). This graph shows the phenomenon mentioned earlier, whereby most variables tend to have substantial loadings on the first factor.

9.5 Rotated Factor Solution

Rotated Factor Matrix (a)

                          Factor
                          1        2
D6A Hard work&effort      .765    -.394
D6B Good luck            -.092     .503
D6C Natural ability       .780    -.169
D6D Who you know         -.204     .779
D6E Good performance      .842    -.405
D6F Office politics      -.094     .718
D6G Adaptability          .758    -.046
D6H Years of service      .421     .313

Extraction Method: Principal Axis Factoring.
Rotation Method: Varimax with Kaiser Normalization.
a. Rotation converged in 3 iterations.

The above table shows the factor loadings/correlations for a rotated factor solution. Comparing the graphs for the rotated and unrotated solutions, it can be seen that the proximity of the points representing the variables to the axes (and the frame) has changed. This change was brought about by rotating the whole frame, including the axes, in a counter-clockwise direction. In this case the Varimax method was used; for each variable, this seeks to maximise the loading on one factor and to minimise the loadings on other factors. As discussed in An Introduction to SPSS for Windows, a clear pattern now emerges, with items having to do with performance and ability loading highest on Factor 1 and those to do with who you know and good luck loading highest on Factor 2. Years of service has lowish loadings on both factors.
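The geometry of the rotation can be illustrated with a plain two-dimensional rotation of the axes. This is not the Varimax algorithm itself: the angle of about 32.45 degrees is an assumption, obtained here by comparing the unrotated and rotated tables, whereas Varimax finds the angle by optimising its own criterion. The sketch only shows that a single counter-clockwise turn of the axes takes one table into the other and leaves the communalities unchanged.

```python
import numpy as np

# Unrotated loadings from the Factor Matrix table (rows d6a..d6h).
unrotated = np.array([
    [ 0.857, 0.078],
    [-0.347, 0.376],
    [ 0.749, 0.276],
    [-0.590, 0.548],
    [ 0.927, 0.110],
    [-0.465, 0.555],
    [ 0.664, 0.368],
    [ 0.187, 0.490],
])

# Assumed rotation angle, inferred by comparing the two SPSS tables.
theta = np.deg2rad(32.45)

# Coordinates of each point relative to axes turned counter-clockwise by theta.
T = np.array([
    [np.cos(theta), -np.sin(theta)],
    [np.sin(theta),  np.cos(theta)],
])
rotated = unrotated @ T

# An orthogonal rotation changes the loadings but not the communalities.
communalities_before = (unrotated ** 2).sum(axis=1)
communalities_after = (rotated ** 2).sum(axis=1)

print(np.round(rotated, 3))
```

The printed values should agree with the Rotated Factor Matrix to within rounding, for example about .765 and -.394 for d6a.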

9.6 Plot of the Rotated Factor Loadings

[Figure: Factor Plot in Rotated Factor Space. Horizontal axis: Factor 1; vertical axis: Factor 2; both axes run from -1.0 to 1.0. One point is plotted for each of the eight items: hard work&effort, good luck, natural ability, who you know, good performance, office politics, adaptability and years of service.]

The plot shows a clear pattern of the loadings, with all items identified with either Factor 1 or

Factor 2, with the exception of Years of Service, which is poised between the two. This result

illustrates the benefits of rotation.

9.7 Factor Scores

In An Introduction to SPSS for Windows, a 'coarse' method (Grice, 2001) was used to create

factor scores. We simply decided which variables were identified with each factor, and

averaged the ratings on the selected items to create a score for each subject on each of the two

factors. Years of service was omitted because it was not clearly identified with one factor.

This method of creating factor scores is equivalent to using the equations

$$
\begin{aligned}
F_1 &= b_{11}X_1 + b_{12}X_2 + b_{13}X_3 + b_{14}X_4 + b_{15}X_5\\
F_2 &= b_{21}X_1 + b_{22}X_2 + b_{23}X_3 + b_{24}X_4 + b_{25}X_5
\end{aligned}
$$

and assigning the value 1 to the b-coefficients for variables with loadings/correlations greater than .4 and zero to the b-coefficients for variables with loadings/correlations equal to or less than .4. Grice (2001) critically evaluates this method. One obvious problem is that factor scores created in this way may be highly correlated even when the factors on which they are based are uncorrelated (orthogonal).
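A minimal sketch of this 'coarse' scoring follows. The loadings are those of the Rotated Factor Matrix; the ratings are made-up random numbers on the six-point scale, generated only so that the code runs, and are not data from workmot.sav. Dropping years of service is done explicitly, as in the text, because its loading of .421 on Factor 1 would otherwise pass the .4 cut-off.

```python
import numpy as np

items = ["d6a", "d6b", "d6c", "d6d", "d6e", "d6f", "d6g", "d6h"]

# Rotated loadings from the Rotated Factor Matrix table.
rotated = np.array([
    [ 0.765, -0.394],
    [-0.092,  0.503],
    [ 0.780, -0.169],
    [-0.204,  0.779],
    [ 0.842, -0.405],
    [-0.094,  0.718],
    [ 0.758, -0.046],
    [ 0.421,  0.313],
])

# b-coefficient is 1 where the loading is greater than .4, otherwise 0.
weights = (rotated > 0.4).astype(float)

# Years of service was omitted because it was not clearly identified with one factor.
weights[items.index("d6h"), :] = 0.0

# Hypothetical ratings (1 to 6) for ten made-up respondents.
rng = np.random.default_rng(0)
ratings = rng.integers(1, 7, size=(10, len(items)))

# Average the selected items to obtain one score per subject per factor.
coarse_scores = (ratings @ weights) / weights.sum(axis=0)

print(weights.T)
print(np.round(coarse_scores, 2))
```

With these weights Factor 1 is the mean of d6a, d6c, d6e and d6g, and Factor 2 is the mean of d6b, d6d and d6f.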

SPSS provides three more sophisticated methods for calculating factor scores. In the present example, the default Regression method was used.

Factor Score Coefficient Matrix

                          Factor
                          1        2
D6A Hard work&effort      .184    -.091
D6B Good luck             .052     .120
D6C Natural ability       .230     .118
D6D Who you know          .094     .472
D6E Good performance      .473    -.186
D6F Office politics       .120     .312
D6G Adaptability          .197     .163
D6H Years of service      .118     .135

Extraction Method: Principal Axis Factoring.
Rotation Method: Varimax with Kaiser Normalization.
Factor Scores Method: Regression.

Factor Score Covariance Matrix

Factor    1        2
1         .882    -.090
2        -.090     .778

Extraction Method: Principal Axis Factoring.
Rotation Method: Varimax with Kaiser Normalization.
Factor Scores Method: Regression.

The first table above, the Factor Score Coefficient Matrix, shows the coefficients used to calculate the factor scores; these coefficients are such that if the observed variables are in standardised form, the factor scores will also have a mean of zero. Their variances, shown on the diagonal of the second table, are .882 and .778, somewhat less than one. Using the coefficients for Factor 1, the factor scores are equal to $.184 \times zD6A + .052 \times zD6B + \cdots + .118 \times zD6H$, where zD6A, etc. are the standardised forms of the original observed variables. The second table above, the Factor Score Covariance Matrix, shows that although theoretically the factor scores should be entirely uncorrelated, the covariance is not zero, which is a consequence of the scores being estimated rather than calculated exactly.
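The link between the two tables can be sketched numerically. For standardised variables with correlation matrix $R$, scores computed as $zB$ have covariance $B^{T}RB$. If the regression-method coefficients satisfy $B = R^{-1}A$, where $A$ holds the rotated loadings (an assumption about how the coefficients were obtained, not something stated in the text), this simplifies to $B^{T}A$. The standardised ratings for the single subject below are made up for illustration.

```python
import numpy as np

# Factor Score Coefficient Matrix (B), rows d6a..d6h.
score_coefficients = np.array([
    [0.184, -0.091],
    [0.052,  0.120],
    [0.230,  0.118],
    [0.094,  0.472],
    [0.473, -0.186],
    [0.120,  0.312],
    [0.197,  0.163],
    [0.118,  0.135],
])

# Rotated Factor Matrix (A), rows d6a..d6h.
rotated_loadings = np.array([
    [ 0.765, -0.394],
    [-0.092,  0.503],
    [ 0.780, -0.169],
    [-0.204,  0.779],
    [ 0.842, -0.405],
    [-0.094,  0.718],
    [ 0.758, -0.046],
    [ 0.421,  0.313],
])

# Factor scores for one hypothetical subject: made-up standardised ratings.
z = np.array([0.5, -1.0, 0.2, -0.3, 1.1, -0.8, 0.4, 0.0])
scores = z @ score_coefficients

# Covariance of the estimated scores under the assumption B = R^-1 A.
score_covariance = score_coefficients.T @ rotated_loadings

print(np.round(scores, 3))
print(np.round(score_covariance, 3))
```

The diagonal of the result is close to .882 and .778 and the off-diagonal entries are close to -.090, matching the Factor Score Covariance Matrix to within rounding.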

10. Conclusion

This has been a very brief introduction to factor analysis, and only some of the many decisions which have to be made by users of the method have been mentioned. Fabrigar et al. (1999) give a thorough review. Some of the decisions they refer to are:

- The number of subjects (see also MacCallum et al., 1999)

- The type of factor analysis (e.g., principal axis factoring, principal components analysis, maximum likelihood)

- The method used to decide on the number of factors (e.g., eigenvalues greater than unity, scree plot, parallel analysis)

- The method and type of rotation

Grice (2001) adds another decision:

- The method used to calculate factor scores

These two articles are a good place to start when considering the use of factor analysis, or when designing a study in which factor analysis will be used.

Alan Taylor

Department of Psychology

5th June 2001

Modifications and additions 4th May 2002

Slight changes 27th May 2003

Additions 20th March 2004

11. References and Further Reading

Cureton, Edward. (1983). Factor analysis: an applied approach. Hillsdale, N.J.: Erlbaum. (QA278.5.C87)

Fabrigar, L., Wegener, D., MacCallum, R., & Strahan, E. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4, 272-299. [Reserve]

Grice, J. (2001). Computing and evaluating factor scores. Psychological Methods, 6, 430-450. [Reserve]

Hair, J.F. (1998). Multivariate data analysis. New Jersey: Prentice Hall. (QA278.M85) (Chapter 3)

Harman, Harry. (1976). Modern factor analysis (3rd Ed., Revised). Chicago: University of Chicago Press. (QA278.5.H38/1976)

Kim, Jae-on & Charles W. Mueller. (1978). Introduction to factor analysis: what it is and how to do it. Beverly Hills, Calif.: Sage Publications. (HA29.Q35/VOL 13)

Kim, Jae-on & Charles W. Mueller. (1978). Factor analysis: statistical methods and practical issues. Beverly Hills, Calif.: Sage Publications. (HA29.Q35/VOL 14)

MacCallum, R.C. et al. (1999). Sample size in factor analysis. Psychological Methods, 4, 84-99.

Snook, S.C. & Gorsuch, R.L. (1989). Component analysis versus common factor analysis. Psychological Bulletin, 106, 148-154.

Widaman, K.F. (1993). Common factor analysis versus principal component analysis: Differential bias in representing model parameters? Multivariate Behavioral Research, 28, 263-311.

Wood, J.M., Tataryn, D.J. & Gorsuch, R.L. (1996). The effects of under- and overextraction on principal axis factor analysis with varimax rotation. Psychological Methods, 1, 354-365.


- A Model of Effective Leadership Styles in IndiaDocumento14 pagineA Model of Effective Leadership Styles in IndiaDunes Basher100% (1)

- Case Studies Solutions CHP 5,8Documento2 pagineCase Studies Solutions CHP 5,8Kaleem MiraniNessuna valutazione finora

- Nomothetic Vs IdiographicDocumento9 pagineNomothetic Vs IdiographicBhupesh ManoharanNessuna valutazione finora

- Brake Design ReportDocumento27 pagineBrake Design ReportK.Kiran KumarNessuna valutazione finora

- Module 6 RM: Advanced Data Analysis TechniquesDocumento23 pagineModule 6 RM: Advanced Data Analysis TechniquesEm JayNessuna valutazione finora

- GooglepreviewDocumento24 pagineGooglepreviewwaldemarclausNessuna valutazione finora

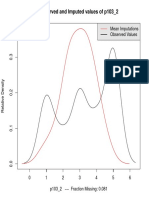
