Specialty Milestones and the Next Accreditation System: An Opportunity for the Simulation Community

Michael S. Beeson, MD, MBA;

John A. Vozenilek, MD

Summary Statement: The Accreditation Council for Graduate Medical Education has developed a new process of accreditation, the Next Accreditation System (NAS), which focuses on outcomes. A key component of the NAS is specialty milestones: specific behaviors, attributes, or outcomes within the general competency domains. Milestones will mark a resident's level of proficiency within a competency domain. Each specialty has developed its own set of milestones, with semiannual reporting to begin in July 2013 for 7 specialties and in July 2014 for the rest.

Milestone assessment must be based on objective data. Each specialty will determine optimal methods of measuring milestones, based on ease, cost, validity, and reliability. The simulation community has focused many graduate medical education efforts on training and formative assessment. Milestone assessment represents an opportunity for simulation modalities to offer summative assessment of milestone proficiencies, adding to the potential methods that residency programs will likely use or adapt. This article discusses the NAS, milestone assessment, and the opportunity for the simulation community to become involved in this next stage of graduate medical education assessment.

(Sim Healthcare 9:184–191, 2014)

Key Words: Milestones, Resident assessment, Competency assessment

The Accreditation Council for Graduate Medical Education (ACGME) and the specialty boards of the American Board of Medical Specialties (ABMS) have cooperated to define and develop resident education milestones for each specialty. These milestones are defined as specific behaviors, attributes, or outcomes in the general competency domains to be demonstrated by residents at a particular point during residency education. The milestones are outgrowths of the Outcome Project,¹ in which 6 core competencies were defined (patient care, medical knowledge, professionalism, interpersonal and communication skills, systems-based practice, and practice-based learning and improvement). The milestones for each medical specialty represent outcome measures (ie, milestones of competency development) to be used as evidence of a residency program's educational effectiveness and as a measure of a resident's level of proficiency within any given milestone subcompetency. Procedural skills, often referred to as technical skills, have been folded into the patient care domain. Milestones are the cornerstone of the ACGME's Next Accreditation System (NAS), in which the focus is on outcomes.

It is unclear which of multiple assessment methods may be most appropriate for specific milestones and their competency domains. Many may be amenable to assessment using simulation and its related modalities, including computer-based clinical environment modeling, standardized patients, task trainers, and various hybrid models. The simulation community may be uniquely positioned to help develop tools for the assessment of specialty milestones.

Special Article

184 Specialty Milestones and Next Accreditation System Simulation in Healthcare

From the Akron General Medical Center (M.S.B.), Northeast Ohio Medical University,

Akron, OH; and Jump Trading Simulation and Education Center (J.A.V.), OSF

Healthcare, University of Illinois College of Medicine, Peoria, IL.

Reprints: Michael S. Beeson, MD, MBA, Akron General Medical Center,

Northeast Ohio Medical University, 400 Wabash Ave, Akron, OH 44307

(e-mail: Michael.beeson@akrongeneral.org).

The authors declare no conflict of interest.

Michael S. Beeson, MD, MBA, is the medical director of simulation at the Simulation

Learning Center of Akron General Medical Center. He has nearly 20 years of

experience as a program director in emergency medicine. He is a past president of

the Council of Emergency Medicine Residency Directors Association (CORD). He is

the vice chair of the ACGME Residency Review Committee for Emergency Medicine.

He chaired the Emergency Medicine Milestone Working Group that developed the

emergency medicine milestones.

John A. Vozenilek, MD, is the director of simulation and chief medical officer

of the Jump Trading Simulation and Education Center. Dr Vozenilek provides

central coordination and oversight for OSF Healthcare's undergraduate, graduate,

interdisciplinary, and continuing medical education programs. Under his direction,

OSF Healthcare and the University of Illinois College of Medicine at Peoria have

created additional organizational capabilities and infrastructure, building resources

for educators who wish to use additional innovative learning technologies for teaching

and assessment. As a faculty member of the university, Dr Vozenilek is actively

involved in the academic programs across traditional departmental boundaries and,

as CMO for Simulation at OSF, in clinical practice at the 8 hospitals and numerous

clinical sites of OSF Healthcare.

Dr Vozenilek has served as medical advisor to the Chicago Clinical Skills Evaluation

Center for the USMLE Clinical Skills Examination and has a leadership role in the

Simulation Academy within the Society for Academic Emergency Medicine. In May of

2008, he cochaired the first Agency for Healthcare Research and Quality–sponsored

national consensus conference on using simulation research to define and develop clinical

expertise. In addition to his role in simulation, Dr Vozenilek serves as faculty with the

Institute for Healthcare Research and its Center for Patient Safety and teaches within

Northwestern University's master's degree program in health care quality and safety.

Copyright © 2014 Society for Simulation in Healthcare

DOI: 10.1097/SIH.0000000000000006

Copyright 2014 by the Society for Simulation in Healthcare. Unauthorized reproduction of this article is prohibited.

Milestone Development Within the NAS

The ACGME accredits allopathic residencies and fellowships. There are 26 main specialties and 109 subspecialties that are accredited by the ACGME. At one time, the focus of individual residency accreditation was on compliance with process, ensuring that individual residencies followed all requirements defined in program requirements. The emphasis on process compliance often ignored whether educational outcomes were satisfactory. The Outcome Project was initiated by the ACGME in 1998, with a focus on defining the 6 core competencies and changing the emphasis from process to educational outcomes. During a 10-year period, the expectation was that each specialty would develop specialty-specific competencies. Residencies were expected to integrate these into their curricula, developing instructional and assessment methods for these competencies. However, it became clear that this integration had not generated a dependable set of assessment tools.² In May 2008, Dr Thomas Nasca,³ the chief executive officer of the ACGME, announced a transition to outcomes-based accreditation, with milestones as the underlying framework.

The framework of the NAS was introduced in 2012.⁴ The NAS is a result of the restructuring of the accreditation system that began in 2009. The NAS will begin implementation in July 2013, with 7 specialties initially participating: emergency medicine, internal medicine, neurologic surgery, orthopedic surgery, pediatrics, diagnostic radiology, and urology. Each of these specialties has developed milestones unique to its specialty⁵⁻¹¹ but similar in categorization within the 6 core competencies. The 3 goals of the NAS are to enhance the peer-review accreditation system to better prepare physicians for practice, focus the accreditation system on educational outcomes, and reduce the burden of the current process-based approach.

To achieve the goal of defining specialty-specific milestones, each specialty formed working groups sponsored by the ACGME and that specialty's certification board.¹² Typically, these working groups had membership derived from the various academic and practice organizations of that specialty and included residents, academic physicians, ACGME Residency Review Committee members, and residency program directors. The charge to each working group was to develop milestones for that specialty. Each milestone would be categorized within a subcompetency under a specific core competency domain. The ACGME formed an expert group for 4 core competencies (practice-based learning, systems-based practice, professionalism, as well as interpersonal and communication skills). This expert group developed model milestones that could be adopted or adapted to a particular specialty's need. This allowed the milestone working groups to focus on patient care and medical knowledge milestones.

Each specialty has different numbers of milestone subcompetencies, varying from 12 in diagnostic radiology to 41 for orthopedic surgery (Table 1). The differences reflect the differences in each specialty. Table 2 demonstrates differences between just 2 specialties, emergency medicine and pediatrics. Although each specialty may have different sets of milestones and subcompetencies, there is commonality in that the 6 core competencies are addressed and each milestone must have measurable attributes or outcomes. Each subcompetency for each specialty will have the individual resident's proficiency level reported to the ACGME semiannually.

Example of a Milestone

Emergency medicine has emergency stabilization as one of its patient care milestone subcompetencies (PC1) (Table 3). This milestone subcompetency, similar to others regardless of specialty, has 5 levels of proficiency, based on the Dreyfus model of skill acquisition.¹³ Level 1 is the level of competence expected of a graduating medical student. Level 4 is the level of proficiency expected of a graduating resident from that specialty. Level 5 is attained primarily through practice experience after residency completion. Levels 2 and 3 represent varying levels of proficiency that occur during residency training. Each of these levels may be attained at different rates of progression, although some specialties may suggest expected specific levels of proficiency based on training year. Each level has markers (milestones) within it that define knowledge, skills, and abilities for that level of proficiency. As an example, one milestone for level 3 of the emergency stabilization emergency medicine milestone subcompetency states, "Discerns relevant data information to formulate a diagnostic impression and plan." This level of proficiency is defined as more advanced than the level 2 markers and less advanced than the level 4 markers.

Common Aspects of Milestones from Different Specialties

The Milestones, regardless of specialty, are rooted in the 6 core competencies. Although each specialty will adapt the expert group's milestone competencies developed for professionalism, interpersonal and communication skills, practice-based learning and improvement, and systems-based practice, the underlying principles remain the same. Specialty differences may exist, but the broad milestone competencies are unlikely to vary widely.

TABLE 1. Milestone Subcompetencies by Specialty

| Core Competency | Emergency Medicine | Internal Medicine | Pediatrics | Diagnostic Radiology | Orthopedic Surgery | Neurologic Surgery | Urology |
|---|---|---|---|---|---|---|---|
| Patient care | 14 | 5 | 5 | 2 | 16 | 8 | 9 |
| Medical knowledge | 1 | 2 | 1 | 2 | 16 | 8 | 1 |
| Professionalism | 2 | 4 | 6 | 1 | 2 | 2 | 6 |
| Interpersonal and communication skills | 2 | 3 | 2 | 2 | 2 | 2 | 5 |
| Systems-based practice | 3 | 4 | 3 | 2 | 3 | 2 | 4 |
| Practice-based learning | 1 | 4 | 4 | 3 | 2 | 2 | 7 |
| Total | 23 | 22 | 21 | 12 | 41 | 24 | 32 |

Vol. 9, Number 3, June 2014 © 2014 Society for Simulation in Healthcare 185


Regardless of specialty, each residency must report to the ACGME the level of proficiency that a resident has within each of the milestone subcompetencies. This reporting will be performed every 6 months beginning with the 2013–2014 academic year. The benefits of this will include the ability to compare performance across residencies and postgraduate year levels.

A Potential Role for Simulation Modalities in the NAS

The ACGME Advisory Committee on Educational Outcome Assessment has made 5 recommendations related to high-quality assessment methods.¹⁴ These recommendations include a core set of specialty-appropriate assessment methods implemented within and across residencies. The advisory committee also recommended that assessment methods be evaluated in terms of quality. The recommended grading of assessment methods relies on a number of standards, including reliability, validity, ease of use, required resources, ease of interpretation, and educational impact. Class 1 represents an assessment method recommended as a core component of the program's evaluation system. Class 2 is an assessment method that can be considered for use as one component of the program's evaluation system. Class 3 is an assessment method that can be provisionally used as a component of the program's evaluation system. As an example, in internal medicine, the Mini-CEX is an assessment method using direct observation with concurrent rating of a real patient encounter. It is graded as class 2. No class 1 methods were identified; that is, no specific assessment method was recommended as a core component of the program's evaluation system. Simulation and its various modalities may be able to play a role in the NAS because no current method of summative assessment is considered class 1. An argument may be made that various simulation modalities can address reliability, validity, ease of use, required resources, ease of interpretation, and educational impact and ultimately be considered class 1 or 2.

Simulation modalities provide a controlled environment for evaluation across predefined and known circumstances, an efficiency of evaluation due to the reduction of system-based interference, and the ability to produce reliable multievaluator inputs. Simulation provides a platform for highly structured assessment and analysis. The variability of clinical circumstances may confound a similar analysis.

However, it is not clear which competency domains will

be best suited for assessment using simulation modalities.

TABLE 2. Comparison of Milestone Subcompetencies Between Emergency Medicine and Pediatrics

Emergency medicine

1. PC1, Emergency stabilization

2. PC2, Performance of focused history and physical examination

3. PC3, Diagnostic studies

4. PC4, Diagnosis

5. PC5, Pharmacotherapy

6. PC6, Observation and reassessment

7. PC7, Disposition

8. PC8, Multitasking (Task switching)

9. PC9, General approach to procedures

10. PC10, Airway management

11. PC11, Anesthesia and acute pain management

12. PC12, Other diagnostic and therapeutic procedures: ultrasound (diagnostic/procedural)

13. PC13, Other diagnostic and therapeutic procedures: wounds management

14. PC14, Other diagnostic and therapeutic procedures: vascular access

15. MK, Medical knowledge

16. PROF1, Professional values

17. PROF2, Accountability

18. ICS1, Patient-centered communication

19. ICS2, Team management

20. PBLI, Practice-based performance improvement

21. SBP1, Patient safety

22. SBP2, Systems-based management

23. SBP3, Technology

Pediatrics

1. PC1, Gather essential and accurate information about the patient

2. PC2, Organize and prioritize responsibilities to provide patient care that is safe, effective, and efficient

3. PC3, Provide transfer of care that ensures seamless transitions

4. PC4, Make informed diagnostic and therapeutic decisions that result in optimal clinical judgment

5. PC5, Develop and carry out management plans

6. MK1, Locate, appraise, and assimilate evidence from scientific studies related to their patients' health problems

7. PBLI1, Identify strengths, deficiencies, and limits in one's knowledge and expertise

8. PBLI2, Identify and perform appropriate learning activities to guide personal and professional development

9. PBLI3, Systematically analyze practice using quality improvement methods, and implement changes with the goal of practice improvement

10. PBLI4, Incorporate formative evaluation feedback into daily practice

11. ICS1, Communicate effectively with patients, families, and the public, as appropriate, across a broad range of socioeconomic and cultural backgrounds

12. ICS2, Demonstrate the insight and understanding into emotion and human response to emotion that allow one to appropriately develop and manage human interactions

13. PROF1, Demonstrate humanism, compassion, integrity, and respect for others; based on the characteristics of an empathetic practitioner

14. PROF2, Professionalization: demonstrate a sense of duty and accountability to patients, society, and the profession

15. PROF3, Demonstrate high professional conduct: high standards of ethical behaviors that include maintaining appropriate professional boundaries

16. PROF4, Develop the ability to use self-awareness of one's own knowledge, skill, and emotional limitations that leads to appropriate help-seeking behaviors

17. PROF5, Demonstrate trustworthiness that makes colleagues feel secure when one is responsible for the care of patients

18. PROF6, Recognize that ambiguity is part of clinical medicine and respond by utilizing appropriate resources in dealing with uncertainty

19. SBP1, Coordinate patient care within the health care system relevant to their clinical specialty

20. SBP2, Advocate for quality patient care and optimal patient care systems

21. SBP3, Work in interprofessional teams to enhance patient safety and improve patient care quality

ICS indicates interpersonal communication skills; MK, medical knowledge; PBLI, practice-based learning and improvement; PC, patient care; PROF, professionalism; SBP, systems-based practice.


Likewise, within competency domains, different proficiency levels may not be as easily assessed. These issues are not unique to the use of simulation to assess milestone competencies. Currently accepted assessment methods for core competencies, such as direct observation and global rating scales, will also need to undergo study.

The Opportunity for the Simulation Community

Within the NAS, proficiency level reporting for each resident will begin for the initial 7 specialties in July 2013. The issue for each residency and teaching hospital will be how to assess each resident to arrive at these individual milestone proficiency levels. This is the opportunity. Each specialty will be looking at developing efficient methods of assessment. The simulation community is in the unique position of being able to develop and offer reproducible assessment methods. It is not clear to any specialty which competency domains are best assessed using different assessment mechanisms. One of the opportunities that the NAS and milestone assessment affords the simulation community is the ability to explore which simulation modalities and assessment methods may be useful in milestone proficiency assessment.

The broad competency domain of patient care also includes procedural components. Most simulation centers include procedural task training equipment. Developing procedural competency training programs with an assessment component may prove to be efficient in the assessment of different procedural-based milestones. In addition, if simulation centers, rather than individual specialty residency programs, develop procedural competency programs, training and assessment in procedures that cross specialties, such as central venous access, may become uniform regardless of specialty.

One of the attractions of using simulation modalities for

teaching and assessment is the reproducible nature of sim-

ulation exercises. This allows for the reliability of assessment

instruments to be studied and known. This is different from

the potential issues related to global rating scales, direct

observation, chart review, and so on, in which the assessment

instrument may not be able to be adequately studied because

of its variability in the application to individual assessments.

Simulation modalities and their assessment tools have been recognized for their validity and reliability in high-stakes assessment.¹⁵,¹⁶ Reliability of standardized patient assessments has long been studied, aided by the reproducible nature of the clinical exercise. Other areas of medical education requiring assessment tools, such as handoffs, have developed assessment tools, although with limited study of their interrater reliabilities.¹⁷,¹⁸ More research needs to be done into the characteristics of assessment tools. This may be one of the chief advantages of using simulation modalities for milestone assessment over more traditional methods of resident evaluation: the ability to develop assessment tools with known reliability given a reproducible clinical scenario.
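As a minimal sketch of what studying interrater reliability can involve, the following Python function computes Cohen's kappa for two raters who score the same set of simulated performances. Cohen's kappa is one common agreement statistic and is an assumption here; the article does not name a specific statistic. The function name `cohens_kappa` and the rating data are hypothetical and for illustration only.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical levels to the same performances."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of performances")
    n = len(rater_a)
    # Observed agreement: share of performances given the same level by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: computed from each rater's own distribution of levels.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical level throughout; treat as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical proficiency levels (1 to 5) given by two faculty raters
# to the same 8 residents in one reproducible scenario.
rater_a = [1, 2, 2, 3, 3, 4, 4, 2]
rater_b = [1, 2, 3, 3, 3, 4, 3, 2]
kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 2))  # 0.66
```

Values near 1 indicate agreement well beyond chance, and values near 0 indicate agreement no better than chance. Because proficiency levels are ordered, a weighted variant that penalizes near misses less than large disagreements may be a better fit in practice.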

Areas of Opportunity

There are multiple areas of opportunity for the simulation community to assist in fulfilling the requirements of the NAS. The NAS involves summative assessment reporting.

TABLE 3. PC1, Emergency Stabilization

Prioritizes critical initial stabilization action and mobilizes hospital support services in the resuscitation of a critically ill or injured patient and reassesses after stabilizing intervention

Level 1 Level 2 Level 3 Level 4 Level 5

Describes a primary assessment on a critically ill or injured patient

Recognizes when a patient is unstable requiring immediate intervention

Discerns relevant data to formulate a diagnostic impression and plan

Manages and prioritizes critically ill or injured patients

Develops policies and protocols for the management and/or transfer of critically ill or injured patients

Recognizes abnormal vital signs

Recognizes in a timely fashion when further clinical intervention is futile

Prioritizes vital critical initial stabilization actions in the resuscitation of a critically ill or injured patient

Reassesses after implementing a stabilizing intervention

Evaluates the validity of a do not resuscitate order

Performs a primary assessment on a critically ill or injured patient

Integrates hospital support services into a management strategy for a problematic stabilization situation

Comments:


Simulation modalities are increasingly accepted for specialty certification and licensure examinations, examples of summative assessment.¹⁹ The American Board of Emergency Medicine has been using a hybrid model of simulation for high-stakes certification assessment since its inception, with excellent interrater reliability.²⁰ Simulation modalities include computer-based clinical environment modeling, standardized patients, task trainers, and various hybrid models. These modalities may be applied to the various specialty milestone competencies to develop reliable assessment instruments. Areas of opportunity include the following:

1. Development of specialty-wide clinical scenario simulations using various modalities that can assess some (but not all) milestone competencies;

2. Development of unique procedural skill competency assessments, unique to each specialty, and incorporated into an overall procedural skill acquisition program for specialty-appropriate procedures;

3. Investigation into other simulation modalities besides mannequin, such as standardized patients, traditional objective standardized clinical examinations, task trainers, computer-based situation scenarios, and so on, which may be useful for specific milestone competency domains.

The development of specialty-wide clinical scenario simulations may use various modalities including computer-based clinical environment modeling, standardized patients, or mannequins. Whereas the simulation community has been developing clinical scenarios for years for teaching and formative assessment, the subtle difference in their use within the NAS is that clinical scenarios must map to milestone competencies and inform their summative assessment. These scenarios in turn must be evaluated for validity evidence in reference to clinical care, and the scoring must be evaluated for reliability. Simulation modalities are ideally suited for reliability studies because of the reproducibility inherent to the scenarios. This is in contradistinction to other assessment modalities within the "Toolbox of Assessment Methods,"²¹,²² including 360-degree evaluations, checklists based on chart review, random direct observation of patients, and global rating scales, in which reproducibility of the clinical scenario does not exist.

Development of procedural skill competency assessments is another opportunity for the simulation community. Rather than focusing on pure assessment, a better longitudinal approach may be to develop, for each specialty, a program of procedural skill acquisition consisting of a stepwise progression to proficiency. There is clear evidence that mastery learning of key procedures using highly defensible assessment standards results in improved clinical outcomes.²³ Task trainers are an integral part of any stepwise approach to procedural competency, as well as for maintenance of skill retention.

Implementation of Milestone Assessment Within a Simulation Center

Many logistical concerns must be addressed, beginning with the need for interdisciplinary relationships and collaboration. These concerns may include the following:

1. Who designs the scenario and evaluation tools for milestone assessment?

2. Can current scenarios be used for milestone assessment?

3. What additional resources and personnel are needed to provide milestone assessment?

4. The use of simulation centers for milestone assessment as a value proposition

Who Designs the Scenario and Evaluation Tools for Milestone Assessment?

The specialty residency leadership team must collaborate with the simulation center leadership and staff (scenario designers, simulation technicians, standardized patient trainers, psychometricians) to design adequate tools to assess milestone competency domains. The simulation center staff will likely be more knowledgeable about issues related to generic assessment tools, such as checklists, specific behaviors, global rating scales, and so on. The residency leadership will initially be more familiar with the specific milestone competencies of its specialty. This partnership between residency and simulation educators can combine that expertise to design assessment instruments that are reliable. The collaboration can result in assessment tools that are specific to milestone competency domains and that inform the residency's clinical competency committee of milestone proficiency level.

Because the NAS is new and beginning in July 2013, very few if any assessment tools have been developed for milestone assessment within a given specialty. One of the goals of the simulation community as a whole could be to develop specialty-specific milestone assessment tools and scenarios where the reliability of the assessment instrument is known, along with the accepted validity of the clinical scenario compared with actual patient care.

Can Current Scenarios Be Used for Milestone Assessment?

Designed scenarios, whether used with standardized patients, high-fidelity simulation mannequins, or hybrid modalities, were likely not designed for summative evaluation. Instead, they were designed for teaching and formative assessment. Most scenarios follow a template and include overall goals of the exercise and specific objectives. The objectives are often linked to one or more of the 6 core competencies. To be useful for milestone assessment, the specific knowledge, skills, and abilities that the scenario focuses on must be mapped to specific milestone subcompetency domains for a given specialty. As an example, a chest pain scenario may require the resident to recognize unstable vital signs and begin stabilization by ordering an electrocardiogram and instituting rhythm and vital sign support. This is clearly a patient care core competency. This scenario's objectives can be related to emergency medicine's initial stabilization milestone subcompetency (PC1)⁵ as well as internal medicine's "gathers and synthesizes essential and accurate information to define each patient's clinical problem(s)" milestone subcompetency (PC1).⁸ To be useful as a milestone assessment exercise, the specific milestone subcompetency and proficiency level must be mapped to the scenario's objectives and behaviors.
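The mapping described above can be sketched as a small data structure. This is an illustrative Python sketch only, not a method proposed by the authors: the class and function names are hypothetical, and the proficiency levels assigned to each behavior are placeholders rather than values taken from any specialty's published milestones.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MilestoneTarget:
    """One milestone subcompetency at one proficiency level."""
    specialty: str
    subcompetency: str  # code such as "PC1"
    level: int          # proficiency level, 1 through 5

    def __post_init__(self):
        if not 1 <= self.level <= 5:
            raise ValueError("proficiency level must be between 1 and 5")


@dataclass
class ScenarioObjective:
    """An observable behavior that the scenario is meant to elicit."""
    behavior: str
    targets: list = field(default_factory=list)


def unmapped(objectives):
    """Behaviors not yet tied to any milestone; these cannot inform reporting."""
    return [o.behavior for o in objectives if not o.targets]


def by_milestone(objectives):
    """Group behaviors under each milestone target they are mapped to."""
    grouped = defaultdict(list)
    for objective in objectives:
        for target in objective.targets:
            grouped[target].append(objective.behavior)
    return dict(grouped)


# Hypothetical chest pain scenario; the level numbers are illustrative only.
chest_pain = [
    ScenarioObjective(
        "Recognizes unstable vital signs",
        [MilestoneTarget("Emergency medicine", "PC1", 2),
         MilestoneTarget("Internal medicine", "PC1", 2)],
    ),
    ScenarioObjective(
        "Orders an electrocardiogram",
        [MilestoneTarget("Emergency medicine", "PC1", 2)],
    ),
    ScenarioObjective("Institutes rhythm and vital sign support"),
]

print("Not yet mapped:", unmapped(chest_pain))
for target, behaviors in by_milestone(chest_pain).items():
    print(target.specialty, target.subcompetency, "level", target.level, behaviors)
```

Listing unmapped behaviors makes explicit which parts of an existing teaching scenario would still need to be tied to a subcompetency and level before the scenario could inform milestone reporting.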


What Additional Resources and Personnel Are Needed to Provide Milestone Assessment?

The simulation center staff is in the best position to evaluate this, in cooperation with the residency leadership team. Multiple evaluators will be needed, and each of these will require uniform assessment training. Additional simulation center staff may be needed, such as actors, confederate nurses, mannequin technicians, standardized patients, as well as support and administrative staff to ensure that a multiple resident evaluation process is run smoothly and efficiently. The degree of additional resources needed is directly dependent on the degree of simulation center integration into milestone competency assessment.

The Use of Simulation Centers for Milestone Assessment as a Value Proposition

The use of simulation modalities to assess milestone proficiency represents a value proposition for teaching hospitals. The cost of using simulation modalities for assessment (simulation center staff, faculty raters, scenario development) is balanced by the need to provide semiannual milestone assessment of residents. Current methods of resident assessment are focused on end-of-rotation global rating scales. The NAS requires objective measures of milestone proficiency. Current methods will need to be altered to provide this objective assessment. As teaching hospitals struggle to develop new applicable methods of assessment, simulation centers are poised to offer efficient solutions.

The ACGME has mandated the NAS, and after the first-year experience with the initial 7 specialties, all specialties will be required to participate. Regardless of whether a simulation center is involved in milestone assessment, there will be additional cost to determine each resident's level of proficiency within the specialty's milestones.

The additional resources needed for resident milestone assessment must be borne by the residency or simulation center. Sources for payment of this cost may include the teaching hospital's graduate medical education office, the teaching hospital's foundation, auxiliary board, and so on. This cost may be prohibitive to a residency desiring to use simulation modalities for milestone proficiency assessment of its residents. The cost factor may also force simulation modalities to be used instead as an audit tool of a specific resident's performance, after concerns are raised by global rating scales or other more subjective methods of assessing resident performance.

Benefits to the Simulation Community

The simulation community can emerge as a contributor to milestone assessment in the NAS. By being able to offer solutions to the very real problem (for individual residencies) of how to assess milestone competencies, simulation programs within teaching hospitals and universities may become forces not only in the formative assessment of residents but also in the high-stakes summative assessment mandated by the NAS. Because summative assessment must answer reliability issues, simulation solutions are poised to potentially deliver assessment programs where the milestone competency reporting of an individual resident may be compared with other residents using the same methodology.

Simulation centers may need additional resources to fully explore and develop assessment mechanisms for the NAS. This comes at a time when it is estimated that larger resources will be needed to provide more definitive answers to the big questions about simulation.²⁴ Milestone assessment within simulation centers may be able to generate revenues for the center, similar to what some centers have done with maintenance of certification required by all ABMS specialties.²⁵ The NAS and its milestones could benchmark professional competencies, providing more objective assessment criteria for maintenance of certification competency requirements.

Widespread acceptance of simulation-based assessment to inform the milestone competencies may facilitate research into translational patient care. This may occur not only at the laboratory level (T1) but also at patient care practices (T2).²⁶

The result of providing summative assessment to inform milestone competency scoring is that the simulation center will become recognized not only as a graduate and undergraduate medical education training center focused on formative assessment but also potentially as a site for high-stakes milestone summative assessment within the teaching hospital and university. It will become the center for "train the trainer" programs, with an accepted understanding of the importance of faculty training in assessment to provide reliable scoring. This in turn will increase the role of the simulation center in faculty development.

Limitations to the Use of Simulation Modalities

Assessment using simulation modalities has been focused on formative assessment, with limited experience in high-stakes summative assessment using standardized patients and OSCEs. Formative assessment is dynamic, allowing the teacher to adjust teaching and learning to the learner. Summative assessment is usually done at different points in time and assesses what a learner knows and does not know. Milestone proficiency reporting is required every 6 months and is a summative high-stakes assessment. If simulation modalities are going to be used for this purpose, the simulation community will need additional expertise to shift its focus toward summative assessment and to develop simulations that are suitable for milestone assessment.

Simulation modalities may not be suitable for all milestone competency domains. As an example, many aspects of professionalism and interpersonal skills (2 of the core competencies) observed in simulation may not translate well to actual patient and staff interactions. A resident may be on his or her best behavior in interacting with a standardized patient, which may not represent the resident's typical behavior, whereas with direct patient care, the resident may have lapses in both professionalism and interpersonal skills. Although some simulation exercises may be designed to assess practice-based learning skills, whether a resident actually applies those skills during real patient care may not be known.

For milestone competencies that are focused on procedural skills, a limited literature is beginning to accumulate demonstrating that procedural simulation training improves skill performance or skill retention. Articles are now beginning to show the positive patient outcomes that can occur with a stringent procedural training process.²⁷,²⁸ Which procedural skills to train, and how best to train and retain them, still needs to be determined and systematized across specialties.

Complex scenarios using mannequins may not be able to adequately give the cues to an examinee, compared with a real clinical scenario. This may result in the overall finding that milestone assessment is most valid at lower levels of proficiency and at more basic levels of performance. This would limit the ability of some simulation modalities to inform proficiency level at higher levels of performance.

The reliability and validity of simulation modalities may actually detract from their value. As an example, the interrater reliability of a scenario may be high because the assessment instrument is not detailed and is therefore unable to provide a meaningful milestone subcompetency proficiency level. Likewise, the validity of a scenario may be increased by adding complexity, but the interrater reliability would then suffer.
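The trade-off between instrument detail and interrater reliability can be illustrated with a small calculation. The following is a minimal sketch, not a method described in this article: it assumes two faculty raters scoring the same 8 residents on a 5-level scale, uses Cohen's kappa as the agreement statistic, and collapses the scale to pass/fail at a hypothetical cut point of level 3. All scores, names, and the cut point are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same examinees."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed proportion of exact agreement.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical proficiency levels (1-5) assigned by two raters to 8 residents.
detailed_a = [1, 2, 2, 3, 3, 4, 4, 5]
detailed_b = [1, 2, 3, 3, 4, 4, 5, 5]


def to_pass_fail(levels, cut=3):
    """Collapse levels to a coarse pass/fail rating (cut point is an assumption)."""
    return ["pass" if level >= cut else "fail" for level in levels]


kappa_detailed = cohens_kappa(detailed_a, detailed_b)
kappa_coarse = cohens_kappa(to_pass_fail(detailed_a), to_pass_fail(detailed_b))

print(f"5-level instrument kappa: {kappa_detailed:.2f}")
print(f"pass/fail instrument kappa: {kappa_coarse:.2f}")
```

With these invented scores, the coarse pass/fail instrument yields the higher kappa, yet it can no longer distinguish a level 3 resident from a level 5 resident, which is the limitation described above.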

Because of cost, simulation modalities may function best as an audit tool for milestone assessment. As an example, if a resident is identified as poorly performing in a specific subcompetency, it could be verified with the use of a well-designed simulation scenario. Likewise, conflicting resident evaluations may be resolved with a simulation scenario. The cost of using simulation modalities may limit their widespread use. Certainly, the use of multiple modalities, their standardization, and support requires substantial human capital and investment in equipment.

Although a given site may be able to ensure a uniform experience and assessment, it is not clear whether these assessments would be entirely meaningful from site to site. Individual competencies as documented at one site may not translate across all sites. As such, their ability to convey confidence in an individual's performance is threatened. A tremendous effort and expense would be required to ensure that all assessments performed were valid across sites, and this could potentially require uniform simulators and a high degree of standardized patient training and support.

Challenges

It is clear that the science regarding performance metrics in clinical domains is, as yet, emerging. Multiple performance standards for procedural skills exist and range from efficiency, accuracy, and motion measurements, through global scales, to physiologic response measurements. Although no single measurement tool has been shown to be superior, this fact should not distract educators from the goal of establishing behaviorally anchored milestones. In fact, progress toward defensible standards requires the adoption of meaningful initial standards. Behavioral scientists, psychometricians, and neurocognitive scientists will no doubt be essential in the long-term goal of refining simulation-based metrics. The absence of perfection today need not impede progress.

CONCLUSIONS

The NAS will present challenges to each teaching hospital and residency to provide objective outcome measures related to milestone subcompetencies on a semiannual basis. Simulation modalities could provide part of this summative assessment. The simulation community as a whole needs to develop strategies to address the very real needs of the NAS, with the development of specialty-specific programs of milestone competency assessment. This may benefit the simulation community by providing more resources to deliver this assessment, allowing for more simulation-based research, and elevating the importance of the simulation center within the medical education community.

REFERENCES

1. Swing S. ACGME launches outcomes assessment project. JAMA

1998;279(18):1492.

2. Lurie SJ. History and practice of competency-based assessment. Med Educ 2012;46(1):49–57.

3. Nasca TJ. The CEO's First Column: the next step in the outcomes-based accreditation project. ACGME Bulletin May 2008;2–4.

4. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system: rationale and benefits. N Engl J Med 2012;366(11):1051–1056.

5. Beeson MS, Carter WA, Christopher TA, et al. Emergency medicine milestones. J Grad Med Educ 2013;5(1 Suppl 1):5–13.

6. Carraccio C, Benson B, Burke A, et al. Pediatrics milestones. J Grad Med Educ 2013;5(1 Suppl 1):59–73.

7. Coburn M, Amling C, Bahnson RR, et al. Urology milestones. J Grad Med Educ 2013;5(1 Suppl 1):79–98.

8. Iobst W, Aagaard E, Bazari H, et al. Internal medicine milestones. J Grad Med Educ 2013;5(1 Suppl 1):14–23.

9. Selden NR, Abosch A, Byrne RW, et al. Neurological surgery milestones. J Grad Med Educ 2013;5(1 Suppl 1):24–35.

10. Stern PJ, Albanese S, Bostrom M, et al. Orthopaedic Surgery Milestones. J Grad Med Educ 2013;5(1 Suppl 1):36–58.

11. Vydareny KH, Amis ES, Becker GJ, et al. Diagnostic radiology milestones. J Grad Med Educ 2013;5(1 Suppl 1):74–78.

12. Swing SR, Beeson MS, Carraccio C, et al. Educational milestone development in the first 7 specialties to enter the Next Accreditation System. J Grad Med Educ 2013;5(1):98–106.

13. Dreyfus HL, Dreyfus SE. Five Steps From Novice to Expert. Mind Over Machine. New York, NY: Free Press; 1988:16–51.

14. Swing SR, Clyman SG, Holmboe ES, Williams RG. Advancing resident assessment in graduate medical education. J Grad Med Educ 2009;1(2):278–286.

15. McBride ME, Waldrop WB, Fehr JJ, Boulet JR, Murray DJ. Simulation in pediatrics: the reliability and validity of a multiscenario assessment. Pediatrics 2011;128(2):335–343.

16. Reid J, Stone K, Brown J, et al. The Simulation Team Assessment Tool (STAT): development, reliability and validation. Resuscitation 2012;83(7):879–886.

17. Apker J, Mallak LA, Applegate EB, et al. Exploring emergency physician-hospitalist handoff interactions: development of the Handoff Communication Assessment. Ann Emerg Med 2010;55(2):161–170.

18. Telem DA, Buch KE, Ellis S, Coakley B, Divino CM. Integration of a formalized handoff system into the surgical curriculum: resident perspectives and early results. Arch Surg 2011;146(1):89–93.

19. Boulet JR. Summative assessment in medicine: the promise of simulation for high-stakes evaluation. Acad Emerg Med 2008;15(11):1017–1024.

20. Bianchi L, Gallagher EJ, Korte R, Ham HP. Interexaminer agreement on the American Board of Emergency Medicine oral certification examination. Ann Emerg Med 2003;41(6):859–864.

21. LaMantia J. The ACGME core competencies: getting ahead of the curve. Acad Emerg Med 2002;9(11):1216–1217.

22. Taylor DK, Buterakos J, Campe J. Doing it well: demonstrating general competencies for resident education utilising the ACGME Toolbox of Assessment Methods as a guide for implementation of an evaluation plan. Med Educ 2002;35(11):1102–1103.


23. Barsuk JH, Cohen ER, Feinglass J, McGaghie WC, Wayne DB. Use of simulation-based education to reduce catheter-related bloodstream infections. Arch Intern Med 2009;169(15):1420–1423.

24. Gaba DM. Where do we come from? What are we? Where are we going? Simul Healthc 2011;6(4):195–196.

25. Levine AI, Flynn BC, Bryson EO, DeMaria S. Simulation-based Maintenance of Certification in Anesthesiology (MOCA) course optimization: use of multi-modality educational activities. J Clin Anesth 2012;24(1):68–74.

26. McGaghie WC, Draycott TJ, Dunn WF, et al. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc 2011;6(7):S42–S47.

27. Smith CC, Huang GC, Newman LR, et al. Simulation training and its effect on long-term resident performance in central venous catheterization. Simul Healthc 2010;5(3):146–151.

28. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg 2001;236:458–464.

Vol. 9, Number 3, June 2014 * 2014 Society for Simulation in Healthcare 191

Copyright 2014 by the Society for Simulation in Healthcare. Unauthorized reproduction of this article is prohibited.

Potrebbero piacerti anche

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5795)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (345)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (74)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- Copies of The Policy of The FollowingDocumento1 paginaCopies of The Policy of The FollowingPAULBENEDICT CATAQUIZNessuna valutazione finora

- ED Volunteer ManualDocumento15 pagineED Volunteer Manualkape1oneNessuna valutazione finora

- DNB CetssDocumento21 pagineDNB CetssRinku RinkuNessuna valutazione finora

- Books ListsDocumento7 pagineBooks ListsMiguel Cadagan100% (1)

- Chapter 1 Overview of Critical Care StudentDocumento40 pagineChapter 1 Overview of Critical Care StudentEmilyNessuna valutazione finora

- CMAM Training PPT 2018 - 0Documento57 pagineCMAM Training PPT 2018 - 0cabdinuux32Nessuna valutazione finora

- B.D.S Third Year Examination Tabulation ChartDocumento22 pagineB.D.S Third Year Examination Tabulation ChartRoshan barikNessuna valutazione finora

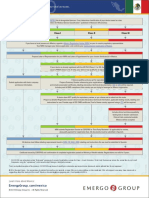

- Low Risk Class I Class II Class IIIDocumento2 pagineLow Risk Class I Class II Class IIImd edaNessuna valutazione finora

- Parliament of India: Rajya SabhaDocumento122 pagineParliament of India: Rajya SabhasurenkumarNessuna valutazione finora

- Daftar Pustaka ManajemenDocumento2 pagineDaftar Pustaka ManajemenAnip WungkulNessuna valutazione finora

- MGN815: Business Models: Ajay ChandelDocumento21 pagineMGN815: Business Models: Ajay ChandelSam RehmanNessuna valutazione finora

- What Really Makes You IllDocumento1.218 pagineWhat Really Makes You Illeu95% (19)

- RRL and RRSDocumento6 pagineRRL and RRSJAY LORRAINE PALACATNessuna valutazione finora

- Module 5 QuizDocumento3 pagineModule 5 Quizapi-329063194Nessuna valutazione finora

- Nurs FPX 4010 Assessment 3 Interdisciplinary Plan ProposalDocumento5 pagineNurs FPX 4010 Assessment 3 Interdisciplinary Plan Proposalfarwaamjad771Nessuna valutazione finora

- UT Health COVID-19 DataDocumento10 pagineUT Health COVID-19 DatakxanwebteamNessuna valutazione finora

- Trach CareDocumento24 pagineTrach CarePATRICIA ANNE CASTILLO. VASQUEZNessuna valutazione finora

- University of Illinois College of Medicine at Rockford Match List - Class of 2019Documento2 pagineUniversity of Illinois College of Medicine at Rockford Match List - Class of 2019Jeff KolkeyNessuna valutazione finora

- Cut and Paste Types of Housing and DesignDocumento2 pagineCut and Paste Types of Housing and Designapi-509516948Nessuna valutazione finora

- Neuro-Rehabilitation: Australian ExperienceDocumento31 pagineNeuro-Rehabilitation: Australian ExperienceLavisha SharmaNessuna valutazione finora

- April 2018 Question 1 C: View RationaleDocumento6 pagineApril 2018 Question 1 C: View RationaleJohannah DianeNessuna valutazione finora

- Infection Prevention and Control in Paediatric Office SettingsDocumento15 pagineInfection Prevention and Control in Paediatric Office SettingsGhalyRizqiMauludinNessuna valutazione finora

- Ebr 1000Documento2.065 pagineEbr 1000saeeddahmosh2015Nessuna valutazione finora

- Artikel Asli Artikel Asli: Volume 3, Nomor 1 Januari - April 2018Documento4 pagineArtikel Asli Artikel Asli: Volume 3, Nomor 1 Januari - April 2018Iska BuanasabahNessuna valutazione finora

- Ich Format For A Clinical Trial ProtocolDocumento4 pagineIch Format For A Clinical Trial Protocolpankz_shrNessuna valutazione finora

- Role Importance of Medical Records: Mahboob Ali Khan Mha, CPHQ Consultant HealthcareDocumento60 pagineRole Importance of Medical Records: Mahboob Ali Khan Mha, CPHQ Consultant HealthcareAKASH DAYALNessuna valutazione finora

- 3rd Sarawak Burn Update FlyerDocumento2 pagine3rd Sarawak Burn Update FlyerCY DingNessuna valutazione finora

- Lor Medicine 2.Documento1 paginaLor Medicine 2.Jitendra Raj KarkiNessuna valutazione finora

- Trust Lap A Simulation Case StudyDocumento11 pagineTrust Lap A Simulation Case StudyApurv GautamNessuna valutazione finora

- Fitrawati Arifuddin - How To Give An Oral Report To An RNDocumento9 pagineFitrawati Arifuddin - How To Give An Oral Report To An RNfitrawatiarifuddinNessuna valutazione finora