5652 IEEE TRANSACTIONS ON INDUSTRIAL ELECTRONICS, VOL. 61, NO. 10, OCTOBER 2014

WCET-Aware Partial Control-Flow Checking for Resource-Constrained Real-Time Embedded Systems

Zonghua Gu, Chao Wang, Ming Zhang, and Zhaohui Wu, Senior Member, IEEE

Abstract: Real-time embedded systems in diverse application domains, such as industrial control, automotive, and aerospace, are often safety-critical systems with stringent timing constraints that place strong demands on reliability and fault tolerance. Since fault-tolerance mechanisms inevitably add performance and/or resource overheads, it is important to guarantee a system's real-time constraints despite these overheads. Control-flow checking (CFC) is an effective technique for improving embedded system reliability and security by online monitoring and checking of software control flow to detect runtime deviations from the control-flow graph (CFG). Software-based CFC has high runtime overhead, and it is generally not applicable to resource-constrained embedded systems with stringent timing constraints. We present techniques for partial CFC (PCFC), which aims to achieve a tradeoff between runtime overhead, measured in terms of increases in worst case execution time (WCET), and fault-detection coverage by selectively instrumenting a subset of basic blocks. Experimental results indicate that PCFC enables significant reductions of the program WCET compared to full CFC at the cost of a reduced fault-detection ratio, thus providing a tunable fault-tolerance technique that can be adapted by the designer to suit the needs of different applications.

Index Terms: Control-flow checking (CFC), fault tolerance, real-time embedded systems.

I. INTRODUCTION AND RELATED WORK

Real-time embedded systems in diverse application domains, such as industrial control, automotive, and aerospace [1], are often safety-critical systems that place strong demands on both real-time performance [2] and reliability [3]. In order to satisfy real-time constraints, two problems need to be addressed: each task should have a bounded Worst Case Execution Time (WCET), and the operating system (OS) should use a real-time scheduling algorithm that is guaranteed to meet all deadlines given the task WCETs. In this paper, we consider the problem of WCET analysis and optimization, and we view real-time scheduling as an orthogonal issue that should be addressed separately. For example, Monot et al. [4] addressed the problem of sequencing multiple elementary software (SW) modules, called runnables, on a limited set of identical cores, assuming that the WCET of each runnable is given as problem input; Zhao et al. [5], [6] addressed real-time mixed-criticality scheduling algorithms and shared-resource synchronization protocols for resource-constrained embedded systems.

Manuscript received April 6, 2013; revised September 30, 2013; accepted November 17, 2013. Date of publication January 21, 2014; date of current version May 2, 2014. This work was supported in part by the National Natural Science Foundation of China (NSFC) under Project 61070002 and Project 61272127.

The authors are with the College of Computer Science, Zhejiang University, Hangzhou 310027, China (e-mail: zgu@zju.edu.cn; superwang1988@zju.edu.cn; editing@zju.edu.cn; wzh@zju.edu.cn).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TIE.2014.2301752

In order to meet hard real-time constraints with limited processing and memory resources, one approach is to use hardware-SW (HW-SW) codesign. For example, Oliveira et al. [7] presented the Advanced Real-time Processor Architecture (ARPA), a customizable, synthesizable, and time-predictable processor model based on field-programmable gate array technology and optimized for multitasking real-time embedded systems, together with the ARPA OS coprocessor designed for HW implementation of the basic real-time OS management functions. They also presented measured WCET values of the kernel internal functions and services, including both SW and HW parts. In this paper, we limit our attention to commercial off-the-shelf (COTS) processors without any customization or extension for fault tolerance or real-time performance enhancement.

HW faults can occur for a number of reasons, including smaller sizes of functional units, aggressive lowering of operating voltages, and single-event upsets (SEUs) caused by harsh operating environments such as the radiation-intensive space environment. We consider transient soft errors, rather than permanent hard errors due to HW failures or SW bugs. Many techniques have been presented to achieve fault tolerance. The traditional approach of HW redundancy and voting is very effective, but it incurs high cost in terms of wasted HW resources. For example, Idirin et al. [3] presented implementation details and safety analysis of a microcontroller-based Safety-Integrity Level-4 SW voter using a fault-tree analysis technique; the system architecture is based on dual-channel redundancy, i.e., 2-out-of-2 voting. This approach is common for aerospace applications, but it is generally not economically feasible in application domains with mass-produced products, e.g., the automotive industry. Due to intense cost-cutting pressure in today's hypercompetitive automotive market, it is important to minimize HW costs by adopting cheaper processors with limited HW (processing and memory) resources [5], [6]. (In contrast, the aerospace industry is much less cost sensitive, since airplanes are not mass-produced.) Instead of HW redundancy, we consider the alternative approach of temporal redundancy, where additional checking code is added to the SW to detect certain faults, without any redundant or specialized HW. In addition, our work focuses on fault detection and its effects on the WCET of a single program, and it is orthogonal to work on fault-tolerant scheduling, which addresses task-level rollback and recovery to achieve fault tolerance.

0278-0046 © 2014 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

GU et al.: WCET-AWARE PCFC FOR RESOURCE-CONSTRAINED REAL-TIME EMBEDDED SYSTEMS 5653

Program control-flow errors (CFEs) are generally more serious than data-flow errors, since they can cause the program to jump to unexpected locations, leading to a program crash or a security breach. Control-flow checking (CFC) is an effective technique for improving embedded system reliability and security by online monitoring and checking of SW control flow to detect runtime deviations from the control-flow graph (CFG) caused either by transient HW errors, such as SEUs, or by malicious attacks, such as buffer-overflow attacks. CFC techniques can be either HW- or SW-based. HW-based techniques usually rely on a watchdog processor, which is a simple coprocessor attached to the main processor via the system bus, to monitor the behavior of programs running on the main processor and compare the runtime control-flow signatures with precomputed and stored values, in order to detect any deviations from the legal control flow as specified in the program CFG. Although HW-based CFC can achieve low runtime overhead, it cannot be applied to COTS processors that do not permit any HW changes.

Many SW-based CFC algorithms have been proposed. They have certain limitations compared to HW-based CFC: they can only detect internode CFEs, not intranode CFEs; they cannot detect faults that affect the checking instructions themselves; and, most severely, they typically incur high runtime overhead due to frequently executed checking instructions inserted at the beginning and/or end of each basic block (BB), which may be only a few instructions long. Even for CFE Detection using Assertions (CEDA) [8], a relatively low-overhead CFC algorithm, the average performance overhead can range from a few percent up to 60%. Other CFC techniques may incur even higher overheads, up to two to three times the original program execution time. This large runtime overhead prevents wide deployment of CFC algorithms in resource-constrained real-time embedded systems, e.g., automotive control systems and low-cost satellites based on smart phones. Although much work has been done on reducing the overhead of fault-tolerance techniques, including CFC techniques, it generally targets average-case performance with representative or average inputs, instead of worst case performance as measured by the program WCET.

Currently, the designer is faced with a difficult choice between having no fault tolerance at all and using a high-overhead fault-tolerance algorithm that causes large increases in system cost and power consumption. In order to enable wide acceptance and deployment of CFC algorithms in resource-constrained embedded systems, we propose partial CFC (PCFC), which trades off fault-detection coverage against real-time performance by instrumenting only a subset of the BBs in the program, making it partially resilient to CFEs while keeping its WCET within a given upper bound. Our approach provides a tunable fault-tolerance technique that can be adapted by the designer to suit the needs of different applications.

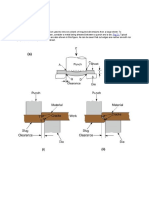

Fig. 1. Illustration of possible CFEs.

This paper is structured as follows. Section II presents background knowledge on CFC and WCET. Section III presents the details of our PCFC algorithm, including an Integer Linear Programming (ILP) formulation and a greedy heuristic algorithm. Section IV presents experimental results, and Section V presents conclusions.

II. BACKGROUND

A. CFC

A program consists of a number of BBs interconnected into a CFG. If the program control flow at runtime deviates from the legal paths specified by the CFG, a CFE has occurred. It has been shown that 33% to 77% of transient HW faults lead to CFEs [8]. In addition to HW faults, malicious attacks, e.g., buffer-overflow attacks, can also cause control flow to jump to unexpected addresses containing malicious code. There are three possible causes of CFEs: branch insertion error, i.e., a non-control-flow instruction is changed into a control-flow instruction; branch deletion error, i.e., a control-flow instruction is changed into a non-control-flow instruction; and branch target modification, i.e., the target address of a control-flow instruction is modified. Fig. 1 illustrates eight possible CFEs in a CFG fragment with three BBs: from the middle of $N_1$ to the entry or middle of its legal successor $N_2$; from the middle of $N_1$ to the entry or middle of some other BB $N_3$ that is not a legal successor of $N_1$; from the exit of $N_1$ to the middle of $N_2$ or $N_3$; from the exit of $N_1$ to the entry of $N_3$; and a jump inside the single BB $N_1$. The last CFE is an intranode CFE, whereas the rest are internode CFEs.

Many SW-based CFC algorithms have been proposed, with different overheads and degrees of fault coverage; representative examples include CFC by SW Signatures (CFCSS) [9] and CEDA [8], among others. Since CEDA has been shown to have better performance and fault coverage than most other techniques [8], we choose CEDA as the CFC technique in this paper, but our approach is general and can be applied to other similar CFC techniques. The basic definitions of CEDA are as follows.

1) Node type: A BB can be either a type-A or a type-X BB.
2) Type-A BB: A BB is of type A if it has multiple predecessors and at least one of its predecessors has multiple successors.
3) Type-X BB: A BB is of type X if it is not of type A.
4) Signature register (S): A global variable that is continuously updated to monitor the execution of the program.
5) Node signature (NS): The expected value of S at any point within the BB on correct execution of the program.
6) Node exit signature (NES): The expected value of S on exiting the BB on correct execution of the program.

TABLE I
INSTRUCTIONS ADDED BASED ON CEDA

Fig. 2. (a) Example program, (b) its CFG, and (c) an instrumented BB.
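The type rule in items 2) and 3) depends only on the predecessor and successor counts in the CFG. A minimal Python sketch of that rule follows; the dictionary-of-sets CFG encoding and the function name `classify_nodes` are illustrative assumptions, not part of CEDA itself.

```python
def classify_nodes(succ: dict[str, set[str]]) -> dict[str, str]:
    """Label each BB 'A' or 'X' following the CEDA node-type definitions.

    succ maps every BB name to the set of its successor BB names.
    """
    # Invert the successor map to obtain each BB's predecessors.
    preds: dict[str, set[str]] = {n: set() for n in succ}
    for m, targets in succ.items():
        for n in targets:
            preds[n].add(m)

    types = {}
    for n in succ:
        has_multiple_preds = len(preds[n]) > 1
        some_pred_branches = any(len(succ[p]) > 1 for p in preds[n])
        # Type A: multiple predecessors, at least one of which has multiple successors.
        types[n] = "A" if has_multiple_preds and some_pred_branches else "X"
    return types


# if-then without else: A branches to B or directly to D; B falls through to D.
print(classify_nodes({"A": {"B", "D"}, "B": {"D"}, "D": set()}))
# {'A': 'X', 'B': 'X', 'D': 'A'}
```

In a full if-then-else diamond, by contrast, the join block would be type X, because each of its two predecessors has a single successor.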

For each BB, NS and NES are statically assigned according to certain rules. Two parameters, $d_1$ and $d_2$, are assigned values statically and associated with each BB. Instructions are added to the beginning and end of each BB to update S using either the XOR or the AND operator, such that S equals its expected value at each point in the program in the absence of any CFE. In addition to signature-update instructions, checking instructions are inserted at certain points to compare the computed signature S against its expected value at that point, and a CFE is detected if the values do not match. For example, if a check point is placed at the end of some BB $N_i$, the checking instruction is `br (S != NES(N_i)) error()`. The checking points are placed at the beginning and end of each BB to minimize fault-detection latency. Table I shows the extra instructions added to each BB based on CEDA. In this paper, we call these instructions instrumentation code; a BB with these instructions is called an instrumented BB, and a BB without them is called an uninstrumented BB.
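As an illustration of the XOR-based update and check described above, the following Python sketch simulates instrumented type-X BBs. It assumes, for simplicity, that every BB has a single legal predecessor, and it derives the per-block constants as $d_1(N) = NES(pred(N)) \oplus NS(N)$ and $d_2(N) = NS(N) \oplus NES(N)$. These formulas, the class and function names, and the signature values are illustrative assumptions rather than the exact CEDA assignment rules, and type-A (AND-based) updates are not modeled.

```python
from dataclasses import dataclass


class ControlFlowError(Exception):
    """Raised when the signature register S disagrees with its expected value."""


@dataclass
class Block:
    ns: int       # node signature NS(N): expected S inside the block
    nes: int      # node exit signature NES(N): expected S on exit
    d1: int = 0   # XORed into S at block entry
    d2: int = 0   # XORed into S at block exit


def assign_constants(blocks: dict[str, Block], pred: dict[str, str], s0: int = 0) -> None:
    """Derive d1/d2 for type-X blocks, assuming one legal predecessor per block."""
    for name, bb in blocks.items():
        incoming = blocks[pred[name]].nes if name in pred else s0
        bb.d1 = incoming ^ bb.ns   # entry update turns NES(pred) into NS(N)
        bb.d2 = bb.ns ^ bb.nes     # exit update turns NS(N) into NES(N)


def run(blocks: dict[str, Block], trace: list[str], s0: int = 0) -> int:
    """Execute a sequence of block visits, updating and checking S at entry and exit."""
    s = s0
    for name in trace:
        bb = blocks[name]
        s ^= bb.d1                      # signature update at block entry
        if s != bb.ns:                  # check at block entry
            raise ControlFlowError(f"CFE detected on entry to {name}")
        # ... the original block body would execute here ...
        s ^= bb.d2                      # signature update at block exit
        if s != bb.nes:                 # check at block exit: br (S != NES(N)) error()
            raise ControlFlowError(f"CFE detected on exit from {name}")
    return s


blocks = {"N1": Block(1, 2), "N2": Block(3, 4), "N3": Block(5, 6)}
assign_constants(blocks, pred={"N2": "N1", "N3": "N2"})
print(run(blocks, ["N1", "N2", "N3"]))   # legal path: prints 6
try:
    run(blocks, ["N1", "N3"])            # illegal jump that skips N2
except ControlFlowError as err:
    print(err)                           # CFE detected on entry to N3
```

The illegal jump is caught because S still holds NES($N_1$) when $N_3$ applies $d_1(N_3)$, which was computed from NES($N_2$).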

Fig. 2 shows an example program, its CFG, and an instrumented BB with both signature-update and checking instructions inserted. All BBs in this example happen to be of type X; hence, the XOR operator is used to update S. The memory overhead of most CFC techniques, including CEDA, is negligible. As shown in Table I, the memory overhead due to CEDA instrumentation consists of the single global signature variable S for the entire program and the two constants $d_1(N)$ and $d_2(N)$ for each BB, all of which are 32-bit unsigned integers (i.e., $4 + 8n$ bytes for a program with $n$ BBs). Therefore, we focus on execution time overhead, not memory overhead.

Vemu and Abraham [8] have proven that CEDA is complete, i.e., every internode CFE will be detected. In this paper, we deliberately sacrifice this completeness in exchange for reduced instrumentation overhead, which is measured by its effect on the program WCET.

B. WCET Analysis and Optimization

Standard WCET analysis has two parts: 1) low-level analysis to find the WCET of each BB in the program; and 2) high-level analysis to find the worst case path of BBs through the program. In this paper, we use the popular WCET analysis tool SWEET [10], which is based on abstract execution and can be applied to the intermediate representation (IR) of the compiler to obtain the WCET estimate, as well as the Worst Case Execution Path (WCEP) that is responsible for the WCET. While actual program WCET values depend on the target HW platform, including processor speed and memory hierarchy configuration, SWEET performs HW-platform-independent analysis at the compiler (IR) level. This is acceptable for our purposes, since we only care about relative WCET comparisons on the same HW platform, not absolute WCET values for a specific HW platform.

While conventional compiler optimizations aim to reduce the program execution time in the average case, researchers have proposed WCET-aware compiler optimizations targeted toward reducing the program WCET. For example, Suhendra et al. [11] proposed ILP-based and heuristic compiler optimization algorithms for scratchpad memory allocation that minimize the program WCET by moving selected BBs on the critical path into scratchpad memory. Among all paths from the start node of the CFG to some end node, the WCEP is the critical path, whose execution time equals the program's WCET; this WCET is computed as the sum, over all BBs on the WCEP, of the product of each BB's WCET and its worst case execution frequency. A key challenge in WCET-aware compilation is the WCEP switch: as the current WCEP is optimized to a reduced length, the program WCEP may change to a different path; hence, one should avoid overoptimizing an outdated WCEP.
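To make the definition of the WCEP concrete, the following sketch computes the WCET and WCEP of an acyclic CFG as the path that maximizes the sum of per-BB WCET times worst case execution frequency. It is a simplified stand-in, not how SWEET works: it assumes loops have already been collapsed into per-BB frequency bounds, treats every path as feasible, and ignores hardware effects. The names `wcet_and_wcep`, `cost`, and `freq`, and the example CFG, are hypothetical.

```python
from functools import lru_cache


def wcet_and_wcep(succ, cost, freq, entry):
    """Return (WCET, WCEP) for an acyclic CFG.

    succ: BB -> list of successor BBs; cost: BB -> WCET of one execution;
    freq: BB -> worst case execution frequency of that BB.
    """
    @lru_cache(maxsize=None)
    def longest_from(n):
        own = cost[n] * freq[n]
        if not succ[n]:
            return own, (n,)
        # Follow the successor whose remaining path is most expensive.
        tail_time, tail_path = max(longest_from(s) for s in succ[n])
        return own + tail_time, (n,) + tail_path

    total, path = longest_from(entry)
    return total, list(path)


succ = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
cost = {"A": 2, "B": 10, "C": 4, "D": 3}
freq = {"A": 1, "B": 1, "C": 3, "D": 1}
print(wcet_and_wcep(succ, cost, freq, "A"))   # (17, ['A', 'C', 'D'])
```

Reducing the per-execution cost of C from 4 to 3 shortens A-C-D to 14, so the WCEP switches to A-B-D with a WCET of 15; this is the WCEP switch described above.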

While this body of related work aims to move code or data into cache/scratchpad memory to decrease the program WCET, we address the reverse problem of adding CFC code to a program, which increases its WCET, in order to achieve partial fault tolerance. Fig. 3 compares the program execution time distributions for the original program without CFC, the program with full CFC, and the program with PCFC, whose WCETs are $WCET_{orig}$, $WCET_{FCFC}$, and $WCET_{PCFC}$, respectively. With full CFC, all BBs in the CFG are instrumented without exception; hence, the execution time of each BB is increased by a similar amount, causing the probability density function of the program execution time to be shifted to the right and a large increase in the program WCET, $WCET_{FCFC}$. With PCFC, in contrast, only a selected subset of BBs is instrumented, preferably those that do not lie on the WCEP, in order to minimize the impact of CFC instrumentation on the program WCET. Fig. 3 thus illustrates the goal of PCFC: respecting an upper bound on the program WCET, even though the average-case execution time may increase, as indicated by the right shift of the execution time distribution curve. Our objective is to achieve reasonable fault-detection coverage within a reasonable upper bound on the program WCET, where the tradeoff between fault-detection coverage and WCET is application dependent and should be decided by the designer.

Fig. 3. Expected effects of CFC instrumentation on probability distribution of program execution time.

Fischmeister and Lam [12] presented techniques for timing-aware program instrumentation that optimize the number of insertion points and the trace buffer size with respect to code size and time budgets. However, they addressed simple control programs running on small microcontrollers, where the program WCET can be determined by explicit path enumeration without the need for sophisticated WCET analysis.

III. PCFC

A. Overall Approach

In order to reuse existing CFC algorithms for PCFC without modification, we propose a partial CFG (PCFG) consisting of only the instrumented BBs and excluding the uninstrumented BBs. Given the set of instrumented BBs and the complementary set of uninstrumented BBs produced by the optimization algorithm, we build the PCFG from the original CFG by deleting each uninstrumented BB from the CFG and adding edges from all of its predecessors to all of its successors; we then rerun the CEDA algorithm on the modified PCFG to obtain new values for NS and NES and (possibly) new signature-update operations based on node type. Hence, the PCFG is not a subgraph of the original CFG: it may have fewer BB nodes, but it may introduce additional edges between the remaining BB nodes. Consider the CFG of the example program in Fig. 4(a) with five BBs, where shaded parts denote instrumentation code added using either CEDA or some other signature-based CFC algorithm. If the optimization algorithm decides to remove instrumentation from BB $N_3$, we remove $N_3$ from the CFG and add edges from $N_1$/$N_2$ to $N_4$/$N_5$, as shown in Fig. 4(b).

We then run the CFC algorithm on the modified PCFG to obtain updated signature-update and checking code, and remove the instrumentation code from BB $N_3$. Note that a BB in the PCFG may have a node type (A or X) different from its original type in the full CFG; e.g., the type of nodes $N_4$ and $N_5$ changes from type X to type A, so the signature-update operator at their beginning should be changed from the XOR operator to the AND operator. This is automatically taken into account by rerunning the CEDA algorithm on the modified PCFG. Note that program execution still follows the original CFG, but the CFC signature update and checking are based on the PCFG. This method allows us to uninstrument arbitrary BBs in the CFG.

Fig. 4. Constructing PCFG by removing the uninstrumented BB $N_3$ from CFG.
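The PCFG construction and the resulting node-type change can be sketched as follows. The five-BB CFG is a hypothetical fragment chosen so that $N_3$ has predecessors $N_1$, $N_2$ and successors $N_4$, $N_5$, as in Fig. 4; the function names and the dictionary encoding are assumptions, and the subsequent recomputation of NS/NES values (rerunning CEDA) is not shown.

```python
def build_pcfg(succ: dict[str, set[str]], uninstrumented: set[str]) -> dict[str, set[str]]:
    """Remove uninstrumented BBs, reconnecting each one's predecessors to its successors."""
    g = {n: set(targets) for n, targets in succ.items()}
    for u in uninstrumented:
        preds = {m for m, targets in g.items() if u in targets and m != u}
        succs = g.pop(u) - {u}          # drop u together with any self-edge on u
        for m in preds:
            g[m].discard(u)
            g[m] |= succs               # new edge from every predecessor to every successor
    return g


def classify_nodes(succ: dict[str, set[str]]) -> dict[str, str]:
    """CEDA node types: 'A' if >1 predecessor and some predecessor has >1 successor."""
    preds = {n: {m for m, t in succ.items() if n in t} for n in succ}
    return {
        n: "A" if len(preds[n]) > 1 and any(len(succ[p]) > 1 for p in preds[n]) else "X"
        for n in succ
    }


cfg = {"N1": {"N3"}, "N2": {"N3"}, "N3": {"N4", "N5"}, "N4": set(), "N5": set()}
pcfg = build_pcfg(cfg, {"N3"})
print(sorted(pcfg["N1"]), classify_nodes(cfg)["N4"], classify_nodes(pcfg)["N4"])
# ['N4', 'N5'] X A
```

Removing several consecutive uninstrumented BBs works the same way, because each removal sees the edges added by the previous ones.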

Some BBs can be uninstrumented without affecting the fault-detection coverage. For example, if all predecessors and successors of every uninstrumented BB are instrumented, then the fault-detection coverage of PCFC is the same as that of full CFC. Take Fig. 7 as an example. In (a), a fault occurs in the middle of an uninstrumented BB $N_k$ and causes the control flow to jump to the middle of another uninstrumented BB $N_{m+1}$. Since the global signature variable S contains the NES of $N_k$'s predecessor $N_{k-1}$, not that of $N_{m+1}$'s legal predecessor $N_m$, the CFE will be detected by the signature checking code at the beginning of $N_{m+2}$ based on the PCFG. In (b), two successive BBs $N_m$ and $N_{m+1}$ are both uninstrumented. When a fault occurs in $N_m$ and causes the control flow to jump to the middle of $N_{m+1}$, the CFE will not be detected by $N_{m+2}$, since S contains the NES of $N_m$'s predecessor, which in the PCFG is exactly the legal predecessor of $N_{m+2}$; hence, the signature values are legal based on the PCFG.
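The two cases above can be reproduced with a small simulation of XOR signature updates on a linear five-BB CFG $N_1 \to \cdots \to N_5$. This is a sketch under simplifying assumptions: all blocks are type X, each instrumented block's PCFG predecessor is the previous instrumented block, the values in `SIGS` are arbitrary distinct integers, and only forward jumps from the middle of one block to the middle of a later block are modeled. In case (a), $N_k = N_2$, $N_m = N_3$, $N_{m+1} = N_4$, and $N_{m+2} = N_5$; in case (b), $N_m = N_2$, $N_{m+1} = N_3$, and $N_{m+2} = N_4$.

```python
SIGS = {f"N{i}": (2 * i - 1, 2 * i) for i in range(1, 6)}   # (NS, NES), distinct per BB
ORDER = ["N1", "N2", "N3", "N4", "N5"]                         # linear CFG N1 -> ... -> N5


def pcfg_constants(instrumented):
    """d1/d2 for instrumented BBs; each one's PCFG predecessor is the previous instrumented BB."""
    consts, prev_nes = {}, 0
    for n in ORDER:
        if n in instrumented:
            ns, nes = SIGS[n]
            consts[n] = (prev_nes ^ ns, ns ^ nes)
            prev_nes = nes
    return consts


def detects_jump(instrumented, src, dst):
    """Simulate a fault that jumps from the middle of src to the middle of a later dst."""
    d = pcfg_constants(instrumented)
    s = 0
    i_src, i_dst = ORDER.index(src), ORDER.index(dst)
    for n in ORDER[:i_src]:                 # blocks before the fault execute normally
        if n in d:
            s ^= d[n][0] ^ d[n][1]          # entry and exit updates (their checks pass)
    if src in d:
        s ^= d[src][0]                      # src's entry update ran before the fault
    if dst in d:                            # landing mid-block skips dst's entry update
        s ^= d[dst][1]
        if s != SIGS[dst][1]:
            return True
    for n in ORDER[i_dst + 1:]:             # execution continues normally after dst
        if n in d:
            s ^= d[n][0]
            if s != SIGS[n][0]:
                return True
            s ^= d[n][1]
            if s != SIGS[n][1]:
                return True
    return False


print(detects_jump({"N1", "N3", "N5"}, "N2", "N4"))  # True: case (a), caught at N5
print(detects_jump({"N1", "N4", "N5"}, "N2", "N3"))  # False: case (b), missed
print(detects_jump(set(ORDER), "N2", "N3"))          # True: full CFC catches the same jump
```

The last call shows the coverage lost in case (b): with full CFC, the exit check of $N_3$ catches the same jump.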

The optimization objective of PCFC is to maximize the fault-detection coverage by instrumenting a subset of BBs, while keeping the program WCET within a given upper bound. Fault-detection coverage is measured via random fault-injection experiments. Since transient HW errors that cause CFEs, e.g., SEUs, can occur anywhere in the processor functional units and memory hierarchy (registers, cache, and memory), it is difficult to relate fault-detection coverage to code-level metrics. However, it makes intuitive sense to preferentially instrument and protect the BBs that are frequently executed, instead of those that are rarely executed. Therefore, as an indirect measure of fault coverage, we use the average total execution time of the instrumented BBs as the optimization objective [formally defined in (7)]. Our optimization problem can be formulated as follows.

Maximize the total average execution time of the BBs in the program instrumented using CEDA, while keeping the program's WCET within a given upper bound $WCET_{ub}$.

Next, we present two algorithms for solving this optimization problem: an ILP algorithm and a greedy heuristic algorithm.


Fig. 5. Possible transformations on a reducible CFG.

B. ILP Formulation

Our ILP formulation is based on the ILP model in [11] for reducing the program WCET by allocating instructions or variables to scratchpad or cache memory. The ILP formulation is only applicable to reducible CFGs. A CFG is reducible, also called well-structured, if its edges can be partitioned into two disjoint sets, the forward edges and the back edges, such that:
1) the forward edges form an acyclic graph in which every node can be reached from the entry node;
2) the back edges consist only of edges whose targets dominate their sources (node m dominates node n if all paths from the entry to node n include m).

Structured control-flow constructs give rise to reducible CFGs. That is, if the program is restricted to using only if-then-else, while, for, break, and continue, the resulting CFG is always reducible; however, if goto is used, the CFG may be irreducible. Since goto is viewed as bad programming practice, restricting our attention to reducible CFGs is not a significant limitation.

A reducible CFG has the following useful property: the CFG can be reduced to a single node by successive applications of the following two transformations (see Fig. 5).
1) Remove a self-edge from n to n.
2) Given a node n with a single predecessor m, merge n into m; all successors of n then become successors of m.
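The reduction property gives a simple reducibility test: apply the two transformations until neither applies and check whether a single node remains. A minimal Python sketch, assuming a dictionary-of-sets CFG in which every node is reachable from the entry node; the function name and example graphs are hypothetical. The second graph is the classic irreducible loop with two entry points, which a goto can create.

```python
def is_reducible(succ: dict[str, set[str]], entry: str) -> bool:
    """Apply transformations 1) and 2) until neither applies; reducible iff one node remains.

    Assumes every node is reachable from entry.
    """
    g = {n: set(targets) for n, targets in succ.items()}
    while True:
        for n, targets in g.items():          # 1) remove self-edges n -> n
            targets.discard(n)
        merge = None
        for n in g:                            # 2) find a non-entry node with one predecessor
            if n == entry:
                continue
            preds = [m for m, targets in g.items() if n in targets]
            if len(preds) == 1:
                merge = (n, preds[0])
                break
        if merge is None:
            return len(g) == 1
        n, m = merge
        g[m].discard(n)
        g[m] |= g.pop(n)                       # successors of n become successors of m


while_loop = {"entry": {"head"}, "head": {"body", "exit"}, "body": {"head"}, "exit": set()}
irreducible = {"entry": {"a", "b"}, "a": {"b"}, "b": {"a"}}
print(is_reducible(while_loop, "entry"), is_reducible(irreducible, "entry"))  # True False
```

In the irreducible graph, both a and b keep two predecessors forever, so transformation 2) never applies.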

Next, we present the ILP formulation. We define an array of 0-1 variables to encode the sets of instrumented and uninstrumented BBs, i.e.,

$$x[N_i] = \begin{cases} 1, & N_i \text{ is instrumented} \\ 0, & N_i \text{ is uninstrumented.} \end{cases} \tag{1}$$

In a reducible CFG, an innermost loop L has exactly one back edge, which turns it into a cyclic graph, and the loop has a unique entry node and a unique exit node, denoted $N_{entry}$ and $N_{exit}$, respectively. The WCET of the exit node $N_{exit}$ is given by

$$w(N_{exit}) = C(N_{exit}) + x[N_{exit}] \cdot \text{overhead}_{\text{instru}} \tag{2}$$

where $C(N_i)$ is a constant denoting the execution time of BB $N_i$ without instrumentation, and $\text{overhead}_{\text{instru}}$ denotes the timing overhead imposed by the instrumentation. Since the instrumentation for each BB is identical, we can use a constant to represent this overhead. If some other CFC algorithm incurs different overheads for different BBs, the formulation can easily be extended to take them into account.

Let $V_{loop}$ denote the set of BBs in the loop. The WCET of the program path leading from a node $N_i$ to $N_{exit}$ must be at least the execution time of $N_i$ itself plus the WCET of the path leading from any successor of $N_i$ in $V_{loop}$ to $N_{exit}$, i.e.,

$$\forall N_i \in V_{loop} \setminus \{N_{exit}\},\ \forall (N_i, N_{succ}) \in E: \tag{3}$$

$$w(N_i) \ge w(N_{succ}) + C(N_i) + x[N_i] \cdot \text{overhead}_{\text{instru}} \tag{4}$$

where $E$ represents the set of edges in the CFG.

Such constraints are added for every node in the loop, working backward until we reach the loop entry node $N_{entry}$, to obtain $w(N_{entry})$ as the WCET of a single loop iteration. Due to the properties of reducible CFGs, we can always reduce any loop L into a super node, whose WCET is computed as $w(N_{entry})$ multiplied by the loop bound, which is part of the WCET flow facts obtained from the SWEET analysis tool, i.e.,

$$w_{loop} = bound_{loop} \cdot w(N_{entry}). \tag{5}$$

Iterative applications of the reduction operations will eventually collapse the entire program into a single node. Since main() is the entry point of the entire program, the following constraint specifies an upper bound on the program WCET:

$$w_{main} \le WCET_{ub} \tag{6}$$

where $WCET_{ub}$ is a tolerable WCET upper bound specified by the designer based on application requirements.
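For a fixed assignment of $x$, the smallest values of $w$ that satisfy (2) and (4) are the longest-path times through the loop body, and (5) scales the result by the loop bound. The sketch below evaluates Eqs. (2) to (5) in that way for one innermost loop; it does not solve the ILP. The loop body, costs, bound, and the function name `loop_wcet` are hypothetical, and the back edge is omitted from `body_succ`.

```python
def loop_wcet(body_succ, entry, exit_, cost, x, overhead, bound):
    """Evaluate Eqs. (2)-(5) for one innermost loop and a fixed 0-1 assignment x.

    body_succ holds the forward edges inside the loop (the back edge is omitted).
    Each w(N) is the smallest value satisfying the constraints, i.e., a longest path.
    """
    w = {}

    def node_wcet(n):
        if n not in w:
            own = cost[n] + x[n] * overhead                           # C(N) + x[N] * overhead
            if n == exit_:
                w[n] = own                                            # Eq. (2)
            else:
                w[n] = own + max(node_wcet(s) for s in body_succ[n])  # tightest Eq. (4)
        return w[n]

    return bound * node_wcet(entry)                                   # Eq. (5)


# Loop body head -> (a | b) -> tail, with back edge tail -> head; only a is uninstrumented.
body = {"head": ["a", "b"], "a": ["tail"], "b": ["tail"], "tail": []}
cost = {"head": 2, "a": 5, "b": 3, "tail": 1}
x = {"head": 1, "a": 0, "b": 1, "tail": 1}
print(loop_wcet(body, "head", "tail", cost, x, overhead=2, bound=10))   # 120
```

Here both branches cost 8 per iteration (a is longer but uninstrumented), so one iteration costs 12 and ten iterations cost 120.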

The optimization objective is to maximize the average total execution time of the instrumented BBs, normalized to the average total program execution time, i.e.,

$$OptObj = \frac{\sum_i x[N_i] \, f_i \, C_i}{\sum_i f_i \, C_i} \tag{7}$$

where $f_i$ is the average-case execution frequency of BB $N_i$, obtained by profiling the program with random inputs, and $C_i$ is the execution time of BB $N_i$. The numerator in (7) is the total execution time of the instrumented BBs, and the denominator is the total execution time of the entire program; both are obtained by profiling program executions with random inputs. For a given program, the denominator is a constant, whose purpose is to express OptObj as a relative percentage for easy comparison.

Equations (1)–(7) form a complete ILP optimization model that can be input to the CPLEX solver and solved.
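For very small CFGs, the model can be checked without an ILP solver by enumerating all $2^n$ assignments of $x$. The sketch below does this for a loop-free CFG, enforcing (6) through a longest-path WCET and maximizing (7). Exhaustive enumeration stands in for CPLEX purely for illustration, and the CFG, costs, profiled frequencies, and function names are made up.

```python
from itertools import product


def program_wcet(succ, cost, x, overhead, entry):
    """w(main) for a loop-free CFG: longest path with instrumentation overhead added."""
    memo = {}

    def w(n):
        if n not in memo:
            tail = max((w(s) for s in succ[n]), default=0)
            memo[n] = cost[n] + x[n] * overhead + tail
        return memo[n]

    return w(entry)


def opt_obj(x, freq, cost):
    """Eq. (7): share of profiled execution time spent in instrumented BBs."""
    total = sum(freq[n] * cost[n] for n in cost)
    return sum(x[n] * freq[n] * cost[n] for n in cost) / total


def solve_exhaustively(succ, cost, freq, overhead, entry, wcet_ub):
    """Return (best OptObj, best x) over all 0-1 assignments satisfying Eq. (6)."""
    nodes = sorted(cost)
    best = None
    for bits in product((0, 1), repeat=len(nodes)):
        x = dict(zip(nodes, bits))
        if program_wcet(succ, cost, x, overhead, entry) > wcet_ub:
            continue                                   # violates Eq. (6)
        obj = opt_obj(x, freq, cost)
        if best is None or obj > best[0]:
            best = (obj, x)
    return best


succ = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
cost = {"A": 2, "B": 10, "C": 4, "D": 3}
freq = {"A": 10, "B": 2, "C": 8, "D": 10}    # profiled execution counts
obj, x = solve_exhaustively(succ, cost, freq, overhead=2, entry="A", wcet_ub=18)
print(x, round(obj, 3))                      # {'A': 0, 'B': 0, 'C': 1, 'D': 1} 0.608
```

With $WCET_{ub} = 18$, path A-B-D already takes 15 time units uninstrumented, so at most one of A, B, and D can be instrumented; the optimum instruments C and D.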

As mentioned in [11], this style of ILP formulation does not take into account any infeasible path information for WCET estimation, i.e., all the paths in the CFG are considered feasible; hence, it is pessimistic for programs with infeasible paths. This is necessary to make it possible to collapse a loop into a super node during the procedure for obtaining $w_{main}$ described in this section. For example, consider an if-then-else conditional statement within a while loop body, where the then branch is much shorter than the else branch. The ILP formulation will always count the execution time of the longer else branch when estimating the execution time of the overall while loop. This is obviously safe; however, it may overestimate the WCET by a large amount if the else branch is rarely executed. To be more accurate and less pessimistic, a sophisticated WCET analysis tool, such as SWEET, is needed. However, such a tool cannot be incorporated into an ILP formulation, since it must be used as a black box and cannot be expressed as a set of linear constraints. Therefore, heuristic algorithms must be used instead of ILP if a WCET analysis tool is used.

C. Greedy Heuristic Algorithm

Here, we present heuristic algorithms for solving the optimization problem. Compared to existing techniques for compiler-based WCET reduction, where selected BBs on the WCEP are placed into scratchpad memory to reduce the WCET, we approach the PCFC problem from the reverse direction: we first fully instrument all BBs in the program CFG according to CEDA (referred to as full CFC), then remove instrumentation from selected BBs, preferably those on the current WCEP, in order to reduce the WCET to below $WCET_{ub}$. (Unlike the ILP formulation, where the program WCEP is implicitly encoded, the greedy heuristic algorithm represents the program WCEP explicitly.)

Algorithm 1 shows the Basic Greedy Heuristic Algorithm. We start with the configuration of full CFC, with every BB instrumented based on CEDA, by initializing the set InstBB (the instrumented BBs) to the set of all BBs in the program CFG and the set UninstBB (the uninstrumented BBs) to the empty set (line 1). As we select BBs to be uninstrumented, we gradually move more and more BBs from InstBB to UninstBB. We run the WCET analyzer SWEET [10] to generate the current WCET estimate $WCET_{cur}$ and the corresponding $WCEP_{cur}$ (line 2). If $WCET_{cur}$ exceeds the given upper bound $WCET_{ub}$ (line 3), we select the BB $N_i$ with minimum $f_i \cdot C_i$ among all instrumented BBs on $WCEP_{cur}$ and remove instrumentation from it (lines 4 and 5). At this point, we should not simply decrement $WCET_{cur}$ by the overhead reduction due to uninstrumenting $N_i$, which equals $Iter(N_i) \cdot O_{inst}$, where $Iter(N_i)$ is the number of iterations of $N_i$ on the path $WCEP_{cur}$ and $O_{inst}$ is the constant runtime overhead introduced by the instrumentation of each BB. The reason is that a WCEP switch may occur: after $N_i$ is uninstrumented, $WCEP_{cur}$ may no longer be the program WCEP. Instead, we must rerun SWEET to obtain the new $WCEP_{cur}$ and $WCET_{cur}$ (line 6).

Algorithm 1: Basic Greedy Heuristic Algorithm
1. InstBB = {set of all BBs}; UninstBB = ∅; /* Start with full CFC, where all BBs are instrumented. */
2. Run SWEET to obtain WCEP_cur and WCET_cur
3. while (WCET_cur > WCET_ub) {
4.     Select N_i ∈ InstBB with minimum f_i × C_i among all BBs on WCEP_cur, and remove instrumentation from it.
5.     InstBB = InstBB \ {N_i}; UninstBB = UninstBB ∪ {N_i}; /* Move N_i from InstBB to UninstBB. */
6.     Run SWEET to obtain the updated WCEP_cur and WCET_cur
   }
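Algorithm 1 can be sketched in Python as follows. Since SWEET cannot be called here, the hypothetical `analyze` function stands in for it with a longest-path computation over a loop-free CFG, where each BB costs $(C_i + O_{inst}) \cdot Iter(N_i)$ when instrumented and $C_i \cdot Iter(N_i)$ otherwise; this ignores infeasible paths and all hardware effects that a real WCET analyzer models. The infeasibility guard is an addition not present in Algorithm 1, and the example CFG and numbers are made up. Ties in $f_i \cdot C_i$ are broken by position on the WCEP.

```python
def analyze(succ, cost, iters, inst, o_inst, entry):
    """Hypothetical stand-in for SWEET on a loop-free CFG: returns (WCET, WCEP)."""
    memo = {}

    def longest(n):
        if n not in memo:
            own = (cost[n] + (o_inst if n in inst else 0)) * iters[n]
            tails = [longest(s) for s in succ[n]]
            tail_time, tail_path = max(tails) if tails else (0, ())
            memo[n] = (own + tail_time, (n,) + tail_path)
        return memo[n]

    wcet, wcep = longest(entry)
    return wcet, list(wcep)


def basic_greedy(succ, cost, iters, freq, o_inst, entry, wcet_ub):
    inst, uninst = set(cost), set()                                       # line 1
    wcet_cur, wcep_cur = analyze(succ, cost, iters, inst, o_inst, entry)  # line 2
    calls = 1                                                             # analyzer runs
    while wcet_cur > wcet_ub:                                             # line 3
        candidates = [n for n in wcep_cur if n in inst]
        if not candidates:  # guard not in Algorithm 1: bound unreachable on this path
            raise ValueError("WCET_ub cannot be met by removing instrumentation")
        n_i = min(candidates, key=lambda n: freq[n] * cost[n])            # line 4
        inst.remove(n_i)                                                  # line 5
        uninst.add(n_i)
        wcet_cur, wcep_cur = analyze(succ, cost, iters, inst, o_inst, entry)  # line 6
        calls += 1
    return inst, uninst, wcet_cur, calls


succ = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
cost = {"A": 2, "B": 10, "C": 4, "D": 3}
iters = {n: 1 for n in cost}
freq = {"A": 10, "B": 2, "C": 8, "D": 10}
inst, uninst, wcet, calls = basic_greedy(succ, cost, iters, freq, 2, "A", 18)
print(sorted(inst), sorted(uninst), wcet, calls)   # ['C', 'D'] ['A', 'B'] 17 3
```

The analyzer is called three times here: once for full CFC and once after each of the two uninstrumented BBs.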

Running SWEET to obtain $WCEP_{cur}$ and $WCET_{cur}$ can be time consuming for large programs. Algorithm 1 has a very long running time, since it reruns SWEET after every BB that is uninstrumented. In order to improve algorithm efficiency, we propose the Enhanced Greedy Heuristic Algorithm, shown in Algorithm 2, where the parts that differ from Algorithm 1 are lines 4, 5, and 8. It improves efficiency by successively removing instrumentation from multiple BBs on the WCEP in order of increasing average execution time ($f_i \cdot C_i$), until the execution time $ET_{wcep}$ of the current execution path has been reduced to below $WCET_{ub}$, with the goal of preferentially keeping instrumentation on BBs with large average execution times. During this process, there may have been multiple WCEP switches, so that the SWEET-computed $WCEP_{cur}$ is no longer the real WCEP, and the execution time $ET_{wcep}$ is no longer the real WCET. However, since the real WCET must be greater than or equal to $ET_{wcep}$, reducing $ET_{wcep}$ to below $WCET_{ub}$ is a necessary, but not sufficient, condition for reducing the real WCET to below $WCET_{ub}$; this is why SWEET is rerun after each batch of removals to check the real WCET.

Algorithm 2: Enhanced Greedy Heuristic Algorithm

1. $InstBB = \{\text{set of all BBs}\}$; $UninstBB = \emptyset$; /* Start with full CFC, where all BBs are instrumented. */
2. Run SWEET to obtain $WCEP_{cur}$ and $WCET_{cur}$
3. while ($WCET_{cur} > WCET_{ub}$) {
4.   $ET_{wcep} = WCET_{cur}$;
5.   while ($ET_{wcep} > WCET_{ub}$) {
6.     Select $N_i \in InstBB$ with minimum $f_i \cdot C_i$ among all BBs on $WCEP_{cur}$, and remove instrumentation from it.
7.     $InstBB = InstBB \setminus \{N_i\}$; $UninstBB = UninstBB \cup \{N_i\}$; /* Move $N_i$ from InstBB to UninstBB. */
8.     $ET_{wcep} = ET_{wcep} - Iter(N_i) \cdot O_{inst}$
     }
9.   Run SWEET to obtain the updated $WCEP_{cur}$ and $WCET_{cur}$
   }
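Algorithm 2 differs from Algorithm 1 only in batching removals between analyzer runs. The sketch below is a minimal illustration under the same hypothetical toy timing model (an explicit path list instead of SWEET, a constant $O_{inst}$, assumed `avg_time` values for $f_i \cdot C_i$); it counts analyzer calls so the saving is visible, and it is not the authors' implementation.

```python
from typing import Dict, List, Set, Tuple

Path = List[Tuple[str, int]]  # (basic_block, iteration_count) pairs; toy model


def path_time(path: Path, cost: Dict[str, float], inst: Set[str], o_inst: float) -> float:
    """Execution time of one path; instrumented BBs pay o_inst per iteration."""
    return sum(it * (cost[bb] + (o_inst if bb in inst else 0.0)) for bb, it in path)


def toy_wcet(paths: List[Path], cost, inst, o_inst) -> Tuple[Path, float]:
    """Hypothetical stand-in for a SWEET run: longest listed path and its time."""
    wcep = max(paths, key=lambda p: path_time(p, cost, inst, o_inst))
    return wcep, path_time(wcep, cost, inst, o_inst)


def enhanced_greedy(paths, cost, avg_time, o_inst, wcet_ub):
    inst = {bb for p in paths for bb, _ in p}          # line 1: full CFC
    wcep, wcet = toy_wcet(paths, cost, inst, o_inst)   # line 2
    analyzer_runs = 1
    while wcet > wcet_ub:                              # line 3
        et_wcep = wcet                                 # line 4
        while et_wcep > wcet_ub:                       # line 5
            candidates = [bb for bb, _ in wcep if bb in inst]
            if not candidates:
                raise ValueError("bound unreachable: no instrumented BB left on the WCEP")
            n_i = min(candidates, key=lambda bb: avg_time[bb])  # line 6
            inst.discard(n_i)                          # line 7
            iters = sum(it for bb, it in wcep if bb == n_i)     # Iter(N_i) on the WCEP
            et_wcep -= iters * o_inst                  # line 8: no analyzer call
        wcep, wcet = toy_wcet(paths, cost, inst, o_inst)  # line 9
        analyzer_runs += 1
    return inst, wcet, analyzer_runs


paths = [[("A", 1), ("B", 10)], [("A", 1), ("C", 2)]]
cost = {"A": 4.0, "B": 2.0, "C": 5.0}
avg_time = {"A": 4.0, "B": 12.0, "C": 5.0}  # assumed f_i * C_i values
inst, wcet, runs = enhanced_greedy(paths, cost, avg_time, o_inst=1.0, wcet_ub=30.0)
print(sorted(inst), wcet, runs)  # ['C'] 24.0 2
```

Here both removals (A, then B) happen inside the inner loop using only the decrement of line 8, so the analyzer is called twice; re-analyzing after every removal, as Algorithm 1 does, would need three calls on these inputs (one initial run plus one per removal).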

Algorithm 1 is similar to the greedy heuristic algorithm in [11] for allocating program data to scratchpad memory, but our objective is to reduce the WCET to below a given upper bound $WCET_{ub}$, whereas the objective in [11] is to minimize the WCET. This difference allows us to develop the enhanced Algorithm 2, which improves efficiency by exploiting the $WCET_{ub}$ constraint.

Since Algorithm 1 is many times slower than Algorithm 2 and their results are similar, we used Algorithm 2 in our experiments; we refer to it hereafter as the greedy heuristic.


Fig. 6. Example illustrating the nonoptimality of the greedy heuristic algorithm.

Fig. 7. Detection of CFEs with PCFC. (a) CFE is detected. (b) CFE is undetected.

The greedy algorithm is clearly not optimal, since it considers only the current WCEP and lacks a global view. Consider the CFG fragment in Fig. 6, where the WCET is determined by the execution times of both paths {a→b, a→c}. To reduce the WCET by a certain amount, the heuristic algorithm may select one BB on path b and one BB on path c to be uninstrumented, whereas uninstrumenting a single BB on path a might reduce the WCET by the same amount and lead to a better solution.
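The Fig. 6 situation can be reproduced with hypothetical numbers. In this minimal sketch, BB a is shared by both paths, BBs b and c each have a smaller assumed average execution time ($f_i \cdot C_i$) than a, and the same toy path-based timing model stands in for SWEET. A greedy pass in the style of Algorithm 1 uninstruments b and then c, while an exhaustive search over all instrumentation subsets finds that uninstrumenting a alone meets the same bound and keeps more average execution time instrumented. All names and numbers are illustrative.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple

Path = List[Tuple[str, int]]  # (basic_block, iteration_count); toy model


def path_time(path: Path, cost: Dict[str, float], inst: Set[str], o_inst: float) -> float:
    return sum(it * (cost[bb] + (o_inst if bb in inst else 0.0)) for bb, it in path)


def wcep_and_wcet(paths, cost, inst, o_inst):
    """Longest listed path and its time (hypothetical stand-in for SWEET)."""
    wcep = max(paths, key=lambda p: path_time(p, cost, inst, o_inst))
    return wcep, path_time(wcep, cost, inst, o_inst)


def greedy(paths, cost, avg_time, o_inst, wcet_ub) -> Set[str]:
    """Algorithm 1 style: repeatedly uninstrument the cheapest BB on the current WCEP."""
    inst = {bb for p in paths for bb, _ in p}
    wcep, wcet = wcep_and_wcet(paths, cost, inst, o_inst)
    while wcet > wcet_ub:
        n_i = min((bb for bb, _ in wcep if bb in inst), key=lambda bb: avg_time[bb])
        inst.discard(n_i)
        wcep, wcet = wcep_and_wcet(paths, cost, inst, o_inst)
    return inst


def exhaustive(paths, cost, avg_time, o_inst, wcet_ub) -> Tuple[Set[str], float]:
    """Try every instrumented subset; keep the feasible one with the largest total avg_time."""
    bbs = sorted({bb for p in paths for bb, _ in p})
    best, best_val = set(), -1.0
    for k in range(len(bbs) + 1):
        for subset in combinations(bbs, k):
            inst = set(subset)
            _, wcet = wcep_and_wcet(paths, cost, inst, o_inst)
            value = sum(avg_time[bb] for bb in inst)
            if wcet <= wcet_ub and value > best_val:
                best, best_val = inst, value
    return best, best_val


# Fig. 6 shape: shared BB a, then either b or c (hypothetical numbers).
paths = [[("a", 1), ("b", 1)], [("a", 1), ("c", 1)]]
cost = {"a": 5.0, "b": 6.0, "c": 6.0}
avg_time = {"a": 5.0, "b": 3.0, "c": 3.0}  # b and c assumed to run on half of the executions
kept_greedy = greedy(paths, cost, avg_time, o_inst=2.0, wcet_ub=13.0)
kept_best, best_val = exhaustive(paths, cost, avg_time, o_inst=2.0, wcet_ub=13.0)
print(sorted(kept_greedy), sorted(kept_best), best_val)  # ['a'] ['b', 'c'] 6.0
```

The greedy pass keeps only a (total average execution time 5.0 instrumented), while the exhaustive search keeps b and c (6.0) under the same WCET bound of 13.0. Exhaustive search is exponential in the number of BBs and is shown here only to expose the gap on a tiny example.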

We emphasize that neither the ILP formulation nor the heuristic algorithm is optimal: the ILP formulation is not optimal because it does not take infeasible-path information into account for WCET estimation, and the heuristic algorithm is not optimal due to its inherently greedy nature.

D. Fault Detection Anomalies

We now discuss fault-detection anomalies, i.e., cases in which fault-detection coverage is not higher for a larger number of instrumented BBs in a given program. Fig. 7 shows how fault-detection coverage depends on the pattern of instrumented BBs, not just their number. In Fig. 7(a), an erroneous jump between BBs lying on two different paths is detectable, whereas in Fig. 7(b), an erroneous jump between two consecutive uninstrumented BBs is undetectable.

Figs. 8 and 9 show that the fault-detection ratio can be higher with fewer instrumented BBs. Consider the original CFG shown in Fig. 8(a). If $N_1$ and $N_4$ are chosen to be instrumented, the resulting PCFG is shown in Fig. 8(b); if only $N_1$ is chosen to be instrumented, the resulting PCFG is shown in Fig. 8(c). Fig. 9 gives a close-up look at the PCFGs in

Fig. 8(b) and (c). In Fig. 8(b), $N_1$ is type A, since it has (at least) two predecessors, $N_1$ and $N_4$, and one of its predecessors ($N_1$) has multiple (two) successors ($N_1$ and $N_4$). In Fig. 8(c), $N_1$ is type X, assuming that the BB connected to the top edge entering $N_1$ has no other successors. According to CEDA, the signature-update instruction for $N_1$ in (b) is $S = S \ \mathrm{AND}\ d1(N_1)$ (i.e., $S = S \ \mathrm{AND}\ 11000$), whereas the signature-update instruction for $N_1$ in (c) is $S = S \ \mathrm{XOR}\ d1(N_1)$ (i.e., $S = S \ \mathrm{XOR}\ 00100$). (Note that $d1(N_1)$ may differ between the PCFGs in (b) and (c), since its value depends on the PCFG.) Consider the scenario in which a CFE occurs in $N_1$, causing an erroneous jump from $N_1$ to $N_2$ or $N_3$. For the case in (b), when control flow comes back to $N_1$ from $N_3$, the global signature before entering $N_1$ is $S = 11000$, since the update at the end of $N_1$ is skipped. The AND operation at the beginning of $N_1$ in (b) ($S = S \ \mathrm{AND}\ 11000 = 11000 \ \mathrm{AND}\ 11000$) yields the global signature value 11000, which is the same as the expected value; thus, the CFE escapes detection. For the case in (c), the XOR operation at the beginning of $N_1$ ($S = S \ \mathrm{XOR}\ 00100 = 11000 \ \mathrm{XOR}\ 00100$) yields the global signature value 11100, which differs from the expected value of 11000; thus, the CFE is detected. However, this does not imply that Fig. 8(b) always has a lower fault-detection ratio than Fig. 8(c): since Fig. 8(b) has $N_4$ instrumented in addition to $N_1$, it can detect additional faults that are undetectable in Fig. 8(c), where only $N_1$ is instrumented.

Fig. 8. Fault detection anomaly. (a) Original CFG. (b) PCFG if $N_1$ and $N_4$ are instrumented; $N_1$ is a type-A node. (c) PCFG if only $N_1$ is instrumented; $N_1$ is a type-X node.

Fig. 9. Close-up look at the PCFGs in (left) Fig. 8(b) and (right) Fig. 8(c).
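The signature arithmetic in the Fig. 8 example can be checked directly. This minimal sketch only reproduces the two updates quoted in the text with 5-bit values; it is not a CEDA implementation, and the constant and helper names are illustrative. With $S = 11000$ on entry to $N_1$ (because the update at the end of $N_1$ was skipped), the AND update leaves $S$ equal to the expected value, whereas the XOR update does not.

```python
EXPECTED = 0b11000    # expected signature value at the check in N1 (from the example)
S_ON_ENTRY = 0b11000  # S after the erroneous jump skipped N1's exit update


def and_update(s: int, d1: int) -> int:
    """Update used for the type-A node in the example: S = S AND d1."""
    return s & d1


def xor_update(s: int, d1: int) -> int:
    """Update used for the type-X node in the example: S = S XOR d1."""
    return s ^ d1


s_b = and_update(S_ON_ENTRY, 0b11000)  # Fig. 8(b): d1(N1) = 11000
s_c = xor_update(S_ON_ENTRY, 0b00100)  # Fig. 8(c): d1(N1) = 00100
detected_b = s_b != EXPECTED
detected_c = s_c != EXPECTED
print(format(s_b, "05b"), detected_b)  # 11000 False
print(format(s_c, "05b"), detected_c)  # 11100 True
```

The AND update is idempotent when $S$ already equals $d1(N_1)$, which is exactly why the skipped update in (b) goes unnoticed, while XOR always changes $S$ by a nonzero $d1(N_1)$.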

IV. PERFORMANCE EVALUATION

We use a set of programs from the Mälardalen WCET benchmark suite [13] in our experiments, as shown in Table II. Because our target is low-cost, resource-constrained real-time embedded systems, it is not appropriate to use large benchmark programs for general-purpose computing, such as those of the Standard Performance Evaluation Corporation (SPEC), since they are not real-time programs running in an embedded environment. Since our heuristic algorithm is very efficient, we expect it to scale well to larger programs, although the ILP method may run into scalability issues. We implement the CFC algorithms on the intermediate representation (IR) of the Low-Level Virtual Machine (LLVM) compiler framework, and we implement fault injection by processing the same IR, randomly injecting all three types of CFEs: branch insertion, branch deletion, and branch target modification.

TABLE II
BENCHMARK PROGRAMS USED IN THE EXPERIMENTS

Fig. 10. Normalized WCET for full CFC ($WCET_{FCFC}/WCET_{orig}$).

Fig. 10 shows that full CFC results in large increases in program WCET for most programs, which may not be acceptable for real-time embedded systems. This justifies our objective of imposing a user-specified upper bound on program WCET with PCFC.

As mentioned earlier, neither ILP nor the greedy heuristic algorithm is optimal. Fig. 11 shows OptObj, as defined in (7), i.e., the average percentage of execution time of the instrumented BBs, further averaged across the diverse set of programs in Table II. It indicates a clear tradeoff between the allowed $WCET_{ub}$ and OptObj with either the greedy heuristic algorithm or ILP: the larger $WCET_{ub}$, the larger the value of OptObj. Starting from $WCET_{ub} = WCET_{orig}$, OptObj (with either ILP or the heuristic) increases rapidly as $WCET_{ub}$ grows, but returns diminish as $WCET_{ub}$ is increased further, particularly above $1.4 \times WCET_{orig}$. This indicates that our PCFC approach can achieve significant coverage of instrumented BBs by removing instrumentation from a small number of BBs that lie on the long paths responsible for the program WCET.

Fig. 11. OptObj increases with increasing $WCET_{ub}$.

Fig. 12. Average fault-detection ratio increases with increasing $WCET_{ub}$.

In general, ILP outperforms the greedy heuristic, except when the WCET upper bound is $1.0 \times WCET_{orig}$, i.e., when no WCET increase beyond the original program WCET is permitted. In this case, the ILP approach cannot instrument any BBs at all (OptObj = 0): it inherently overestimates the WCET because it lacks information on infeasible paths, so its WCET estimate exceeds the $WCET_{orig}$ obtained with SWEET, leaving no timing margin for instrumenting even a single BB. The heuristic algorithm, however, can instrument some BBs, since it uses SWEET for accurate WCET analysis and can add instrumentation to BBs that are not on the WCEP. With increasing $WCET_{ub}$, more CFC instrumentation can be added to the program until we reach full CFC, where all BBs are instrumented.

We use random fault injection to inject the three types of CFEs mentioned earlier. For each benchmark program and each $WCET_{ub}$ value, 2000 random CFE-injection runs are performed, with one CFE randomly activated in each run to simulate common single-event upset (SEU) faults. The fault-detection ratio is the percentage of injected faults that are detected by the CFC instrumentation. Fig. 12 shows the average fault-detection ratio, which increases with increasing $WCET_{ub}$. (Note that the fault-detection ratio is less than 100% even for full CFC, since CEDA detects only internode CFEs, not intranode CFEs.)

Fig. 13. Fault-detection ratio and OptObj [as defined in (7)] for four programs: (a) cover; (b) fir; (c) cnt; and (d) nsichneu. The x-axis is $WCET_{ub}/WCET_{orig}$. Solid lines denote the fault-detection ratio; dot-dash lines denote OptObj; both are normalized to a percentage between 0% and 100%.

Fig. 14. Distribution of fault-injection results. The x-axis is $WCET_{ub}/WCET_{orig}$. Numbers in parentheses denote the function return error code.

Fig. 13 shows the fault-detection ratio and OptObj for four programs. Both metrics increase with increasing $WCET_{ub}$, which is consistent with the average results in Figs. 11 and 12, but the plots of the two metrics do not have identical shapes. This is expected, since the fault-detection ratio depends on the specific pattern of instrumented BBs in the CFG, not just on the average total execution time of the instrumented BBs (OptObj) or on their number. That is, OptObj is a reasonable coarse-grained metric for guiding the placement of CFC instrumentation, but it is not the sole factor determining the fault-detection ratio.

Fig. 14 shows the detailed distribution of fault-injection outcomes for the heuristic algorithm (the results for ILP are similar and are omitted). The vertical bars denote silent errors (exit from the end of main), program crashes (abort, bus error, and segmentation fault), and faults detected by CFC (exit from the error handler).
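The fault-detection ratio and the outcome breakdown of Fig. 14 are percentages over the injection runs (2000 per program and $WCET_{ub}$ value in the experiments). The minimal sketch below tallies run outcomes classified into the three categories named above; the category labels, the function name, and the short outcome list are made-up illustrations, not experimental data from the paper.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical labels for the three outcome categories of a fault-injection run.
SILENT = "silent"      # exit from the end of main
CRASH = "crash"        # abort, bus error, or segmentation fault
DETECTED = "detected"  # exit from the CFC error handler
CATEGORIES = (SILENT, CRASH, DETECTED)


def outcome_percentages(outcomes: Iterable[str]) -> Dict[str, float]:
    """Percentage of runs per category; the DETECTED entry is the fault-detection ratio."""
    counts = Counter(outcomes)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no injection runs")
    return {c: 100.0 * counts[c] / total for c in CATEGORIES}


# Illustrative outcomes of 8 runs (not real results).
runs = [DETECTED] * 5 + [SILENT] * 2 + [CRASH]
pct = outcome_percentages(runs)
print(pct)  # {'silent': 25.0, 'crash': 12.5, 'detected': 62.5}
```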

V. CONCLUSION

We have presented algorithms for PCFC that enable tradeoffs between the fault-detection ratio and the increase in program WCET for software-implemented CFC. Experimental results demonstrate that PCFC significantly reduces the program WCET compared to full CFC, at the cost of a reduced fault-detection ratio. Our techniques are useful for designing safety-critical fault-tolerant systems on resource-constrained hardware platforms, when the designer cannot afford full CFC but still needs a certain level of fault tolerance.


APPENDIX

TABLE III

SOME NOTATIONS AND ABBREVIATIONS USED IN THIS PAPER

REFERENCES

[1] J. Muñoz-Castañer, R. Asorey-Cacheda, F. J. Gil-Castiñeira, F. J. González-Castaño, and P. S. Rodríguez-Hernández, "A review of aeronautical electronics and its parallelism with automotive electronics," IEEE Trans. Ind. Electron., vol. 58, no. 7, pp. 3090–3100, Jul. 2011.

[2] K. Erwinski, M. Paprocki, L. M. Grzesiak, K. Karwowski, and A. Wawrzak, "Application of Ethernet powerlink for communication in a Linux RTAI open CNC system," IEEE Trans. Ind. Electron., vol. 60, no. 2, pp. 628–636, Feb. 2013.

[3] M. Idirin, X. Aizpurua, A. Villaro, J. Legarda, and J. Meléndez, "Implementation details and safety analysis of a microcontroller-based SIL-4 software voter," IEEE Trans. Ind. Electron., vol. 58, no. 3, pp. 822–829, Mar. 2011.

[4] A. Monot, N. Navet, B. Bavoux, and F. Simonot-Lion, "Multisource software on multicore automotive ECUs: Combining runnable sequencing with task scheduling," IEEE Trans. Ind. Electron., vol. 59, no. 10, pp. 3934–3942, Oct. 2012.

[5] Q. Zhao, Z. Gu, and H. Zeng, "PT-AMC: Integrating preemption thresholds into mixed-criticality scheduling," in Proc. DATE, 2013, pp. 141–146.

[6] Q. Zhao, Z. Gu, and H. Zeng, "HLC-PCP: A resource synchronization protocol for certifiable mixed criticality scheduling," IEEE Embedded Syst. Lett., vol. 6, no. 1, pp. 8–11, Mar. 2014.

[7] A. S. R. Oliveira, L. Almeida, and A. de Brito Ferrari, "The ARPA-MT embedded SMT processor and its RTOS hardware accelerator," IEEE Trans. Ind. Electron., vol. 58, no. 3, pp. 890–904, Mar. 2011.

[8] R. Vemu and J. A. Abraham, "CEDA: Control-flow error detection using assertions," IEEE Trans. Comput., vol. 60, no. 9, pp. 1233–1245, Sep. 2011.

[9] N. Oh, P. P. Shirvani, and E. J. McCluskey, "Control-flow checking by software signatures," IEEE Trans. Rel., vol. 51, no. 1, pp. 111–122, Mar. 2002.

[10] B. Lisper, C. Sandberg, and N. Bermudo, "A tool for automatic flow analysis of C-programs for WCET calculation," in Proc. WORDS, 2003, pp. 106–112.

[11] V. Suhendra, T. Mitra, A. Roychoudhury, and T. Chen, "WCET centric data allocation to scratchpad memory," in Proc. RTSS, 2005, pp. 223–232.

[12] S. Fischmeister and P. Lam, "Time-aware instrumentation of real-time programs," IEEE Trans. Ind. Informat., vol. 6, no. 4, pp. 652–663, Nov. 2010.

[13] J. Gustafsson, A. Betts, A. Ermedahl, and B. Lisper, "The Mälardalen WCET benchmarks: Past, present and future," in Proc. WCET, 2010, pp. 136–146.

Zonghua Gu received the Ph.D. degree in computer science and engineering from the University of Michigan, Ann Arbor, MI, USA, in 2004.

He is currently an Associate Professor with Zhejiang University, Hangzhou, China. His research interests include real-time embedded systems.

Chao Wang received the B.Sc. degree in computer science in 2011 from Zhejiang University, Hangzhou, China, where he is currently working toward the Ph.D. degree.

Ming Zhang received the B.Sc. degree in software engineering in 2010 from Zhejiang University, Hangzhou, China, where he is currently working toward the Ph.D. degree.

Zhaohui Wu (SM'05) received the B.Sc. and Ph.D. degrees in computer science from Zhejiang University, Hangzhou, China, in 1988 and 1993, respectively.

He is currently a Professor with the Department of Computer Science, Zhejiang University. His research interests include distributed artificial intelligence, semantic grid, and pervasive computing.

Dr. Wu is a Standing Council Member of the China Computer Federation.
