Interval-Valued and Fuzzy-Valued Random Variables: From Computing Sample Variances to Computing Sample Covariances

Jan B. Beck$^1$, Vladik Kreinovich$^1$, and Berlin Wu$^2$

$^1$ University of Texas, El Paso TX 79968, USA, {janb,vladik}@cs.utep.edu

$^2$ National Chengchi University, Taipei, Taiwan, berlin@math.nccu.edu.tw

Summary. Due to measurement uncertainty, instead of the actual values $x_i$ of the measured quantities, we often know only the intervals $\mathbf{x}_i = [\widetilde{x}_i - \Delta_i, \widetilde{x}_i + \Delta_i]$, where $\widetilde{x}_i$ is the measured value and $\Delta_i$ is the upper bound on the measurement error (provided, e.g., by the manufacturer of the measuring instrument). These intervals can be viewed as random intervals, i.e., as samples from an interval-valued random variable. In such situations, instead of the exact value of a sample statistic such as the covariance $C_{x,y}$, we can only have an interval $\mathbf{C}_{x,y}$ of possible values of this statistic. It is known that, in general, computing such an interval $\mathbf{C}_{x,y}$ for $C_{x,y}$ is an NP-hard problem. In this paper, we describe an algorithm that computes this range $\mathbf{C}_{x,y}$ for the case when the measurements are accurate enough so that the intervals corresponding to different measurements do not intersect much.

Introduction

Traditional statistics: brief reminder. In traditional statistics, we have i.i.d. variables $x_1, \ldots, x_n, \ldots$, and we want to find statistics $s_n(x_1, \ldots, x_n)$ that would approximate the desired parameter of the corresponding probability distribution. For example, if we want to estimate the mean $E[x]$, we can take the arithmetic average $s_n = (x_1 + \ldots + x_n)/n$. It is known that as $n \to \infty$, this statistic tends (with probability 1) to the desired mean: $s_n \to E[x]$. Similarly, the sample variance tends to the actual variance, the sample covariance between two different samples tends to the actual covariance $C[x, y]$, etc.

Coarsening: a source of random sets. In traditional statistics, we implicitly assume that the values $x_i$ are directly observable. In real life, due to (inevitable) measurement uncertainty, what we actually observe is often a set $S_i$ that contains the actual (unknown) value of $x_i$. This phenomenon is called coarsening; see, e.g., [3]. Due to coarsening, instead of the actual values $x_i$, all we know is the sets $X_1, \ldots, X_n, \ldots$ that are known to contain the actual (unobservable) values $x_i$: $x_i \in X_i$.


Statistics based on coarsening. The sets $X_1, \ldots, X_n, \ldots$ are i.i.d. random sets. We want to find statistics of these random sets that would enable us to approximate the desired parameters of the original distribution $x$. Here, a statistic $S_n(X_1, \ldots, X_n)$ transforms $n$ sets $X_1, \ldots, X_n$ into a new set. We want this statistic $S_n(X_1, \ldots, X_n)$ to tend to a limit set $L$ as $n \to \infty$, and we want this limit set $L$ to contain the value of the desired parameter of the original distribution.

For example, if we are interested in the mean $E[x]$, then we can take $S_n = (X_1 + \ldots + X_n)/n$ (where the sum is the Minkowski, i.e., element-wise, sum of the sets). It is possible to show that, under reasonable assumptions, this statistic tends to a limit $L$, and that $E[x] \in L$. This limit can be viewed, therefore, as a set-based average of the sets $X_1, \ldots, X_n$.

Important issue: computational complexity. There has been a lot of interesting theoretical research on set-valued random variables and the corresponding statistics. In many cases, the corresponding statistics have been designed, and their asymptotic properties have been proven; see, e.g., [2, 6] and references therein.

In many such situations, the main obstacle to using these statistics in practice is that going from random numbers to random sets drastically increases the computational complexity and hence the running time of the required computations. It is therefore desirable to come up with new, faster algorithms for computing such set-valued heuristics.

What we are planning to do. In this paper, we design such a faster algorithm for a specific practical problem: the problem of data processing. As we will show, in this problem, it is reasonable to restrict ourselves to random intervals, an important specific case of random sets.

Practical Problem: Data Processing (From Computing to Probabilities to Intervals)

Why data processing? In many real-life situations, we are interested in the value of a physical quantity $y$ that is difficult or impossible to measure directly. Examples of such quantities are the distance to a star and the amount of oil in a given well. Since we cannot measure $y$ directly, a natural idea is to measure $y$ indirectly. Specifically, we find some easier-to-measure quantities $x_1, \ldots, x_n$ which are related to $y$ by a known relation $y = f(x_1, \ldots, x_n)$. This relation may be a simple functional transformation or a complex algorithm (e.g., for the amount of oil, a numerical solution to an inverse problem).

It is worth mentioning that in the vast majority of these cases, the function $f(x_1, \ldots, x_n)$ that describes the dependence between physical quantities is continuous.

Then, to estimate $y$, we first measure the values of the quantities $x_1, \ldots, x_n$, and then we use the results $\widetilde{x}_1, \ldots, \widetilde{x}_n$ of these measurements to compute an estimate $\widetilde{y}$ for $y$ as $\widetilde{y} = f(\widetilde{x}_1, \ldots, \widetilde{x}_n)$.


For example, to find the resistance $R$, we measure current $I$ and voltage $V$, and then use the known relation $R = V/I$ to estimate resistance as $\widetilde{R} = \widetilde{V}/\widetilde{I}$.

Computing an estimate for $y$ based on the results of direct measurements is called data processing; data processing is the main reason why computers were invented in the first place, and data processing is still one of the main uses of computers as number-crunching devices.

Comment. In this paper, for simplicity, we consider the case when the relation between $x_i$ and $y$ is known exactly; in some practical situations, we only know an approximate relation between $x_i$ and $y$.

Why interval computations? From computing to probabilities to intervals. Measurements are never 100% accurate, so in reality, the actual value $x_i$ of the $i$-th measured quantity can differ from the measurement result $\widetilde{x}_i$. Because of these measurement errors $\Delta x_i \stackrel{\text{def}}{=} \widetilde{x}_i - x_i$, the result $\widetilde{y} = f(\widetilde{x}_1, \ldots, \widetilde{x}_n)$ of data processing is, in general, different from the actual value $y = f(x_1, \ldots, x_n)$ of the desired quantity $y$ [12].

It is desirable to describe the error $\Delta y \stackrel{\text{def}}{=} \widetilde{y} - y$ of the result of data processing. To do that, we must have some information about the errors of direct measurements.

What do we know about the errors $\Delta x_i$ of direct measurements? First, the manufacturer of the measuring instrument must supply us with an upper bound $\Delta_i$ on the measurement error. If no such upper bound is supplied, this means that no accuracy is guaranteed, and the corresponding measuring instrument is practically useless. When such a bound is supplied, once we have performed a measurement and got a measurement result $\widetilde{x}_i$, we know that the actual (unknown) value $x_i$ of the measured quantity belongs to the interval $\mathbf{x}_i = [\underline{x}_i, \overline{x}_i]$, where $\underline{x}_i = \widetilde{x}_i - \Delta_i$ and $\overline{x}_i = \widetilde{x}_i + \Delta_i$.

In many practical situations, we not only know the interval $[-\Delta_i, \Delta_i]$ of possible values of the measurement error; we also know the probability of different values $\Delta x_i$ within this interval. This knowledge underlies the traditional engineering approach to estimating the error of indirect measurement, in which we assume that we know the probability distributions for measurement errors $\Delta x_i$.

In practice, we can determine the desired probabilities of different values of $\Delta x_i$ by comparing the results of measuring with this instrument with the results of measuring the same quantity by a standard (much more accurate) measuring instrument. Since the standard measuring instrument is much more accurate than the one used, the difference between these two measurement results is practically equal to the measurement error; thus, the empirical distribution of this difference is close to the desired probability distribution for the measurement error. There are two cases, however, when this determination is not done:

- First is the case of cutting-edge measurements, e.g., measurements in fundamental science. When the Hubble telescope detects the light from a distant galaxy, there is no "standard" (much more accurate) telescope floating nearby that we can use to calibrate the Hubble: the Hubble telescope is the best we have.

- The second case is the case of measurements on the shop floor. In this case, in principle, every sensor can be thoroughly calibrated, but sensor calibration is so costly (usually costing ten times more than the sensor itself) that manufacturers rarely do it.

In both cases, we have no information about the probabilities of $\Delta x_i$; the only information we have is the upper bound on the measurement error.

In this case, after we have performed a measurement and got a measurement result $\widetilde{x}_i$, the only information that we have about the actual value $x_i$ of the measured quantity is that it belongs to the interval $\mathbf{x}_i = [\widetilde{x}_i - \Delta_i, \widetilde{x}_i + \Delta_i]$.

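In such situations, the only information that we have about the (unknown) actual value of $y = f(x_1, \ldots, x_n)$ is that $y$ belongs to the range $\mathbf{y} = [\underline{y}, \overline{y}]$ of the function $f$ over the box $\mathbf{x}_1 \times \ldots \times \mathbf{x}_n$:

$$\mathbf{y} = [\underline{y}, \overline{y}] = \{ f(x_1, \ldots, x_n) \mid x_1 \in \mathbf{x}_1, \ldots, x_n \in \mathbf{x}_n \}.$$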

For continuous functions $f(x_1, \ldots, x_n)$, this range is an interval. The process of computing this interval range based on the input intervals $\mathbf{x}_i$ is called interval computations; see, e.g., [4].
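As a small illustration of such a range, the resistance example $R = V/I$ can be worked out by hand when both intervals are positive: the quotient then increases with $V$ and decreases with $I$, so the exact range is attained at two corners of the box. The sketch below assumes this positivity; the function names `measurement_interval` and `resistance_range` are hypothetical and are not taken from the paper.

```python
def measurement_interval(measured, delta):
    """Interval [measured - delta, measured + delta] of possible actual values."""
    if delta < 0:
        raise ValueError("the error bound must be non-negative")
    return (measured - delta, measured + delta)


def resistance_range(voltage, current):
    """Exact range of R = V / I over a box, assuming V >= 0 and I > 0.

    Under this assumption V / I is non-decreasing in V and non-increasing
    in I, so the extreme values are attained at corners of the box.
    """
    v_lo, v_hi = voltage
    i_lo, i_hi = current
    if v_lo < 0 or i_lo <= 0:
        raise ValueError("this sketch only covers V >= 0 and I > 0")
    return (v_lo / i_hi, v_hi / i_lo)


# Illustrative numbers: V = 12 +/- 2 volts, I = 3 +/- 1 amperes.
v = measurement_interval(12.0, 2.0)   # (10.0, 14.0)
i = measurement_interval(3.0, 1.0)    # (2.0, 4.0)
r = resistance_range(v, i)            # (2.5, 7.0)
```

For functions that are not monotone in each argument, no such shortcut is available in general, which is where the techniques discussed next come in.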

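Interval computations techniques: brief reminder. Historically the first method for computing the enclosure for the range is the method which is sometimes called "straightforward" interval computations. This method is based on the fact that inside the computer, every algorithm consists of elementary operations (arithmetic operations, min, max, etc.). For each elementary operation $f(a, b)$, if we know the intervals $\mathbf{a}$ and $\mathbf{b}$ for $a$ and $b$, we can compute the exact range $f(\mathbf{a}, \mathbf{b})$. The corresponding formulas form the so-called interval arithmetic. For example,

$$[\underline{a}, \overline{a}] + [\underline{b}, \overline{b}] = [\underline{a} + \underline{b}, \overline{a} + \overline{b}]; \qquad [\underline{a}, \overline{a}] - [\underline{b}, \overline{b}] = [\underline{a} - \overline{b}, \overline{a} - \underline{b}];$$

$$[\underline{a}, \overline{a}] \cdot [\underline{b}, \overline{b}] = [\min(\underline{a} \cdot \underline{b}, \underline{a} \cdot \overline{b}, \overline{a} \cdot \underline{b}, \overline{a} \cdot \overline{b}), \max(\underline{a} \cdot \underline{b}, \underline{a} \cdot \overline{b}, \overline{a} \cdot \underline{b}, \overline{a} \cdot \overline{b})].$$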
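The three formulas above translate almost directly into code. The following is a minimal sketch, assuming exact arithmetic: a production interval library would also round lower endpoints down and upper endpoints up to stay rigorous in floating point, which is omitted here. The class name `Interval` is an illustrative choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float

    def __add__(self, other):
        # [a_lo, a_hi] + [b_lo, b_hi] = [a_lo + b_lo, a_hi + b_hi]
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other):
        # [a_lo, a_hi] - [b_lo, b_hi] = [a_lo - b_hi, a_hi - b_lo]
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        # The range of a product is spanned by the four endpoint products.
        products = (
            self.lo * other.lo,
            self.lo * other.hi,
            self.hi * other.lo,
            self.hi * other.hi,
        )
        return Interval(min(products), max(products))


a = Interval(-1.0, 2.0)
b = Interval(3.0, 5.0)
total = a + b        # Interval(lo=2.0, hi=7.0)
difference = a - b   # Interval(lo=-6.0, hi=-1.0)
product = a * b      # Interval(lo=-5.0, hi=10.0)
```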

In straightforward interval computations, we repeat the computations forming the program $f$ step-by-step, replacing each operation with real numbers by the corresponding operation of interval arithmetic. It is known that, as a result, we get an enclosure $\mathbf{Y} \supseteq \mathbf{y}$ for the desired range.

In some cases, this enclosure is exact. In more complex cases (see examples

below), the enclosure has excess width.
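A standard textbook illustration of excess width (not an example from this paper) is $f(x) = x \cdot (1 - x)$ on $[0, 1]$. Straightforward interval computations treat the two occurrences of $x$ as independent, so the enclosure is wider than the true range $[0, 0.25]$. The sketch below represents intervals as `(lo, hi)` tuples and estimates the true range by dense sampling, which is only an approximation in general.

```python
def interval_sub(a, b):
    """[a_lo, a_hi] - [b_lo, b_hi] by interval arithmetic."""
    return (a[0] - b[1], a[1] - b[0])


def interval_mul(a, b):
    """[a_lo, a_hi] * [b_lo, b_hi] by interval arithmetic."""
    products = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(products), max(products))


x = (0.0, 1.0)
one = (1.0, 1.0)

# Straightforward interval evaluation of x * (1 - x).
enclosure = interval_mul(x, interval_sub(one, x))   # (0.0, 1.0)

# Sampled range of the same function on a grid of 1001 points.
values = [t * (1 - t) for t in (k / 1000 for k in range(1001))]
sampled_range = (min(values), max(values))          # (0.0, 0.25)
```

The enclosure is valid, since it contains the sampled range, but it is four times wider than necessary.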

There exist more sophisticated techniques for producing a narrower enclosure, e.g., a centered form method. However, for each of these techniques, there are cases when we get an excess width. Reason: as shown in [5], the problem of computing the exact range is known to be NP-hard even for polynomial functions $f(x_1, \ldots, x_n)$ (actually, even for quadratic functions $f$).


Comment. NP-hard means, crudely speaking, that no feasible algorithm can compute the exact range of $f(x_1, \ldots, x_n)$ for all possible polynomials $f(x_1, \ldots, x_n)$ and for all possible intervals $\mathbf{x}_1, \ldots, \mathbf{x}_n$.

What are we planning to do? In this paper, we analyze a specific interval computations problem: the case when we use traditional statistical data processing algorithms $f(x_1, \ldots, x_n)$ to process the results of direct measurements.

Error Estimation for Traditional Statistical Data Processing Algorithms under Interval Uncertainty: Known Results

Formulation of the problem. When we have $n$ results $x_1, \ldots, x_n$ of repeated measurement of the same quantity (at different points, or at different moments of time), the traditional statistical approach usually starts with computing their sample average $E_x = (x_1 + \ldots + x_n)/n$ and their (sample) variance

$$V_x = \frac{(x_1 - E_x)^2 + \ldots + (x_n - E_x)^2}{n} \qquad (1)$$

(or, equivalently, the sample standard deviation $\sigma = \sqrt{V}$). If, during each measurement $i$, we measure the values $x_i$ and $y_i$ of two different quantities $x$ and $y$, then we also compute their (sample) covariance

$$C_{x,y} = \frac{(x_1 - E_x) \cdot (y_1 - E_y) + \ldots + (x_n - E_x) \cdot (y_n - E_y)}{n}; \qquad (2)$$

see, e.g., [12].
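Formulas (1) and (2) can be written out as a short sketch. Note that both divide by $n$, as in the text, rather than by $n - 1$; the function names are illustrative.

```python
def sample_mean(xs):
    """E_x = (x_1 + ... + x_n) / n."""
    return sum(xs) / len(xs)


def sample_variance(xs):
    """Formula (1): mean squared deviation from the sample mean."""
    e_x = sample_mean(xs)
    return sum((x - e_x) ** 2 for x in xs) / len(xs)


def sample_covariance(xs, ys):
    """Formula (2): mean product of deviations from the two sample means."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    e_x = sample_mean(xs)
    e_y = sample_mean(ys)
    return sum((x - e_x) * (y - e_y) for x, y in zip(xs, ys)) / len(xs)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
v_x = sample_variance(xs)          # 2/3
c_xy = sample_covariance(xs, ys)   # 4/3
```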

As we have mentioned, in real life, we often do not know the exact values of the quantities $x_i$ and $y_i$; we only know the intervals $\mathbf{x}_i$ of possible values of $x_i$ and the intervals $\mathbf{y}_i$ of possible values of $y_i$. In such situations, for different possible values $x_i \in \mathbf{x}_i$ and $y_i \in \mathbf{y}_i$, we get different values of $E_x$, $E_y$, $V_x$, and $C_{x,y}$. The question is: what are the intervals $\mathbf{E}_x$, $\mathbf{V}_x$, and $\mathbf{C}_{x,y}$ of possible values of $E_x$, $V_x$, and $C_{x,y}$?

The practical importance of this question was emphasized, e.g., in [9, 10]

on the example of processing geophysical data.

Comment: the problem reformulated in terms of set-valued random variables. Traditional statistical data processing means that we assume that the measured values $x_i$ and $y_i$ are samples of random variables, and based on these samples, we are estimating the actual average, variance, and covariance.

Similarly, in case of interval uncertainty, we can say that the intervals $\mathbf{x}_i$ and $\mathbf{y}_i$ coming from measurements are samples of interval-valued random variables, and we are interested in estimating the actual (properly defined) average, variance, and covariance of these interval-valued random variables.

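Bounds on $E$. For $E_x$, the straightforward interval computations lead to the exact range:

$$\mathbf{E}_x = \frac{\mathbf{x}_1 + \ldots + \mathbf{x}_n}{n}, \quad \text{i.e.,} \quad \underline{E}_x = \frac{\underline{x}_1 + \ldots + \underline{x}_n}{n} \quad \text{and} \quad \overline{E}_x = \frac{\overline{x}_1 + \ldots + \overline{x}_n}{n}.$$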
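The bounds on the mean amount to averaging the lower endpoints and the upper endpoints separately. A minimal sketch, with intervals given as `(lo, hi)` tuples and an illustrative function name:

```python
def interval_mean(intervals):
    """Exact range of the sample mean when x_i ranges over intervals[i]."""
    n = len(intervals)
    lower = sum(lo for lo, _ in intervals) / n
    upper = sum(hi for _, hi in intervals) / n
    return (lower, upper)


e_x = interval_mean([(0.0, 1.0), (2.0, 4.0), (4.0, 7.0)])   # (2.0, 4.0)
```

The range is exact here because each $x_i$ occurs only once in the expression for $E_x$ and the mean is increasing in every $x_i$.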


For variance, the problem is difficult. For $V_x$, all known algorithms lead to an excess width. Specifically, there exist feasible algorithms for computing the lower endpoint $\underline{V}_x$ (see, e.g., [1]), but in general, the problem of computing the upper endpoint $\overline{V}_x$ is NP-hard [1].

It is also known that in some practically important cases, feasible algorithms for computing $\overline{V}_x$ are possible. One such practically useful case is when the measurement accuracy is good enough so that we can tell that the different measured values $\widetilde{x}_i$ are indeed different, e.g., when the corresponding intervals $\mathbf{x}_i$ do not intersect. In this case, there exists a quadratic-time algorithm for computing $\overline{V}_x$; see, e.g., [1].

What about covariance? The only thing that we know is that in general, computing the covariance range $\mathbf{C}_{x,y}$ is NP-hard [11].

In this paper, we show that (similarly to the case of variance), it is possible to compute the interval covariance when the measurements are accurate enough to enable us to distinguish between different measurement results $(\widetilde{x}_i, \widetilde{y}_i)$.

Main Results

Theorem 1. There exists a polynomial-time algorithm that, given a list of $n$ pairwise disjoint boxes $\mathbf{x}_i \times \mathbf{y}_i$ ($1 \le i \le n$) (i.e., in which every two boxes have an empty intersection), produces the exact range $\mathbf{C}_{x,y}$ for the covariance $C_{x,y}$.

Theorem 2. For every integer $K > 1$, there exists a polynomial-time algorithm that, given a list of $n$ boxes $\mathbf{x}_i \times \mathbf{y}_i$ ($1 \le i \le n$) in which every collection of more than $K$ boxes has an empty intersection, produces the exact range $\mathbf{C}_{x,y}$ for the covariance $C_{x,y}$.

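Proof. Since $C_{x,y}$ is linear in $x_i$, we have $C_{-x,y} = -C_{x,y}$, hence $\mathbf{C}_{-x,y} = -\mathbf{C}_{x,y}$, so $\overline{C}_{x,y} = -\underline{C}_{-x,y}$. Because of this relation, it is sufficient to provide an algorithm for computing the lower endpoint $\underline{C}_{x,y}$: we will then compute the upper endpoint $\overline{C}_{x,y}$ as $-\underline{C}_{-x,y}$.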
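The reduction of the upper endpoint to a lower endpoint can be checked numerically on small inputs. The sketch below is a brute-force reference, not the polynomial-time algorithm of the theorems: it enumerates all $4^n$ corner combinations, which is valid because the covariance is linear in each single variable and therefore attains its extrema over a box at corners. Function names are illustrative.

```python
from itertools import product


def covariance(xs, ys):
    """Sample covariance with division by n, as in formula (2)."""
    n = len(xs)
    e_x = sum(xs) / n
    e_y = sum(ys) / n
    return sum((x - e_x) * (y - e_y) for x, y in zip(xs, ys)) / n


def brute_force_lower(x_boxes, y_boxes):
    """Smallest covariance over all endpoint combinations (exponential time)."""
    return min(
        covariance(xs, ys)
        for xs in product(*x_boxes)
        for ys in product(*y_boxes)
    )


def brute_force_upper(x_boxes, y_boxes):
    """Upper endpoint obtained as minus the lower endpoint for -x."""
    negated = [(-hi, -lo) for lo, hi in x_boxes]
    return -brute_force_lower(negated, y_boxes)


x_boxes = [(0, 1), (2, 3)]
y_boxes = [(0, 1), (2, 3)]
lower = brute_force_lower(x_boxes, y_boxes)   # 0.25
upper = brute_force_upper(x_boxes, y_boxes)   # 2.25
```

For $n = 2$ the covariance equals $(x_1 - x_2)(y_1 - y_2)/4$, which confirms the two values by hand.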

The function $C_{x,y}$ is linear in each of its variables $x_i$ and $y_i$. In general, a linear function $f(x)$ attains its minimum on an interval $[\underline{x}, \overline{x}]$ at one of its endpoints: at $\underline{x}$ if $f$ is non-decreasing ($\partial f/\partial x \ge 0$) and at $\overline{x}$ if $f$ is non-increasing ($\partial f/\partial x \le 0$). For $C_{x,y}$, we have $\partial C_{x,y}/\partial x_i = (1/n) \cdot y_i - (1/n) \cdot E_y$, so $\partial C_{x,y}/\partial x_i \ge 0$ if and only if $y_i \ge E_y$. Thus, for each $i$, the values $x^m_i$ and $y^m_i$ at which $C_{x,y}$ attains its minimum satisfy the following four properties:

1. if $x^m_i = \underline{x}_i$, then $y^m_i \ge E_y$;
2. if $x^m_i = \overline{x}_i$, then $y^m_i \le E_y$;
3. if $y^m_i = \underline{y}_i$, then $x^m_i \ge E_x$;
4. if $y^m_i = \overline{y}_i$, then $x^m_i \le E_x$.
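The derivative used above follows in two lines from formula (2). The derivation below is a routine expansion added for convenience, using only the definitions of $E_x$, $E_y$ and $C_{x,y}$:

```latex
\begin{align*}
C_{x,y} &= \frac{1}{n}\sum_{j=1}^{n} (x_j - E_x)(y_j - E_y)
         = \frac{1}{n}\sum_{j=1}^{n} x_j y_j - E_x E_y, \\
\frac{\partial C_{x,y}}{\partial x_i}
        &= \frac{1}{n}\, y_i - \frac{\partial E_x}{\partial x_i}\, E_y
         = \frac{1}{n}\, y_i - \frac{1}{n}\, E_y ,
\qquad \text{since } \frac{\partial E_x}{\partial x_i} = \frac{1}{n}.
\end{align*}
```

By symmetry, $\partial C_{x,y}/\partial y_i = (1/n)\, x_i - (1/n)\, E_x$, which is what Properties 3 and 4 rely on.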

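Let us show that if we know the vector $E \stackrel{\text{def}}{=} (E_x, E_y)$, and this vector is outside the $i$-th box $\mathbf{b}_i \stackrel{\text{def}}{=} \mathbf{x}_i \times \mathbf{y}_i$, then we can uniquely determine the values $x^m_i$ and $y^m_i$.

Indeed, the fact that $E \notin \mathbf{b}_i$ means that either $E_x \notin \mathbf{x}_i$ or $E_y \notin \mathbf{y}_i$. Without losing generality, let us assume that $E_x \notin \mathbf{x}_i$, i.e., that either $E_x < \underline{x}_i$ or $E_x > \overline{x}_i$.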


If $E_x < \underline{x}_i$, then, since $\underline{x}_i \le x^m_i$, we have $E_x < x^m_i$. Hence, according to Property 4, we cannot have $y^m_i = \overline{y}_i$. Since the minimum is always attained at one of the endpoints, we thus have $y^m_i = \underline{y}_i$. Now that we know the value of $y^m_i$, we can use Properties 1 and 2:

- if $\underline{y}_i \le E_y$, then $x^m_i = \overline{x}_i$;
- if $\underline{y}_i \ge E_y$, then $x^m_i = \underline{x}_i$.

Similarly, if $E_x > \overline{x}_i$, then $y^m_i = \overline{y}_i$, and:

- if $\overline{y}_i \le E_y$, then $x^m_i = \overline{x}_i$;
- if $\overline{y}_i \ge E_y$, then $x^m_i = \underline{x}_i$.

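So, to compute $\underline{C}_{x,y}$, we sort all $2n$ values $\underline{x}_i$, $\overline{x}_i$ into a sequence $x_{(1)} \le x_{(2)} \le \ldots \le x_{(2n)}$, and we sort the $2n$ values $\underline{y}_i$, $\overline{y}_i$ into a sequence $y_{(1)} \le y_{(2)} \le \ldots \le y_{(2n)}$. We thus get $2n \times 2n$ zones $z_{k,l} \stackrel{\text{def}}{=} [x_{(k)}, x_{(k+1)}] \times [y_{(l)}, y_{(l+1)}]$.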

We know that the average $E$ of the actual minimizing values lies in one of these zones. If we assume that $E \in z_{k,l}$, i.e., in particular, that $E_x \in [x_{(k)}, x_{(k+1)}]$, then the condition $\underline{x}_i \ge E_x$ is guaranteed to be satisfied if $\underline{x}_i \ge x_{(k+1)}$. Thus, following the above arguments, we can find the values $(x^m_i, y^m_i)$ for all the boxes $\mathbf{b}_i$ that do not contain this zone:

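$$\begin{array}{c|ccc}
 & \overline{y}_i \le y_{(l)} & \underline{y}_i \le y_{(l)} \le y_{(l+1)} \le \overline{y}_i & y_{(l+1)} \le \underline{y}_i \\ \hline
\overline{x}_i \le x_{(k)} & (\overline{x}_i, \overline{y}_i) & (\underline{x}_i, \overline{y}_i) & (\underline{x}_i, \overline{y}_i) \\
\underline{x}_i \le x_{(k)} \le x_{(k+1)} \le \overline{x}_i & (\overline{x}_i, \underline{y}_i) & ? & (\underline{x}_i, \overline{y}_i) \\
x_{(k+1)} \le \underline{x}_i & (\overline{x}_i, \underline{y}_i) & (\overline{x}_i, \underline{y}_i) & (\underline{x}_i, \underline{y}_i)
\end{array}$$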

As we can see, for each of the $O(n^2)$ zones $z_{k,l}$, the only case when we do not know the corresponding values $(x^m_i, y^m_i)$ is when $\mathbf{b}_i$ contains this zone. All boxes $\mathbf{b}_i$ with this property have a common intersection (it contains $z_{k,l}$); thus, there can be no more than $K$ of them. For each of these at most $K$ boxes $\mathbf{b}_i$, we try all 4 possible combinations of endpoints as the corresponding $(x^m_i, y^m_i)$.

Thus, for each of the $O(n^2)$ zones, we must try at most $4^K$ possible sequences of pairs $(x^m_i, y^m_i)$. We compute each of these $n$-element sequences element-by-element, so computing each sequence requires $O(n)$ computational steps.

For each of these sequences, we check whether the averages $E_x$ and $E_y$ are indeed within this zone, and if they are, we compute the covariance. The smallest of the resulting covariances is the desired value $\underline{C}_{x,y}$.

For each of the $O(n^2)$ zones, we need $O(n)$ steps (for a fixed $K$, the factor $4^K$ is a constant), for a total of $O(n^2) \cdot O(n) = O(n^3)$ steps; computing the smallest of $O(n^2)$ values requires $O(n^2)$ more steps. Thus, our algorithm computes $\underline{C}_{x,y}$ in $O(n^3)$ steps.
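The procedure described in the proof can be sketched as follows. This is an illustrative reading of the proof, not the authors' code: all names are hypothetical, the corner table is reconstructed from Properties 1-4, zero-width zones are avoided by deduplicating endpoint values, and ties (a mean lying exactly on a zone boundary) are handled only through a small tolerance `tol`. The number of candidates per zone is $4^m$, where $m$ is the number of boxes containing the zone, so the running time is polynomial only under the assumption of Theorem 2.

```python
from itertools import product


def covariance(xs, ys):
    n = len(xs)
    e_x, e_y = sum(xs) / n, sum(ys) / n
    return sum((x - e_x) * (y - e_y) for x, y in zip(xs, ys)) / n


def _zones(boxes):
    """Intervals between consecutive distinct endpoint values."""
    values = sorted({v for box in boxes for v in box})
    if len(values) == 1:
        return [(values[0], values[0])]
    return list(zip(values, values[1:]))


def _side(lo, hi, a, b):
    """0: box at or below the zone [a, b]; 2: at or above; 1: contains it."""
    if hi <= a:
        return 0
    if lo >= b:
        return 2
    return 1


# (x side, y side) -> (index of x endpoint, index of y endpoint); 0 = lower.
# The entry (1, 1), a box containing the zone, is deliberately missing.
_CORNER = {
    (0, 0): (1, 1), (0, 1): (0, 1), (0, 2): (0, 1),
    (1, 0): (1, 0),                 (1, 2): (0, 1),
    (2, 0): (1, 0), (2, 1): (1, 0), (2, 2): (0, 0),
}


def covariance_lower_bound(x_boxes, y_boxes, tol=1e-12):
    n = len(x_boxes)
    best = float("inf")
    for a, b in _zones(x_boxes):
        for c, d in _zones(y_boxes):
            fixed, free = {}, []
            for i, (xb, yb) in enumerate(zip(x_boxes, y_boxes)):
                key = (_side(xb[0], xb[1], a, b), _side(yb[0], yb[1], c, d))
                if key in _CORNER:
                    fixed[i] = _CORNER[key]
                else:
                    free.append(i)   # undetermined: try all 4 corners
            corners = ((0, 0), (0, 1), (1, 0), (1, 1))
            for choice in product(corners, repeat=len(free)):
                corner = dict(fixed)
                corner.update(zip(free, choice))
                xs = [x_boxes[i][corner[i][0]] for i in range(n)]
                ys = [y_boxes[i][corner[i][1]] for i in range(n)]
                e_x, e_y = sum(xs) / n, sum(ys) / n
                # Keep the candidate only if its means fall in this zone.
                if a - tol <= e_x <= b + tol and c - tol <= e_y <= d + tol:
                    best = min(best, covariance(xs, ys))
    return best


def covariance_interval(x_boxes, y_boxes):
    lower = covariance_lower_bound(x_boxes, y_boxes)
    negated = [(-hi, -lo) for lo, hi in x_boxes]
    upper = -covariance_lower_bound(negated, y_boxes)
    return lower, upper


c_lo, c_hi = covariance_interval([(0, 1), (2, 3)], [(0, 1), (2, 3)])
```

For the two disjoint boxes in the usage line, the covariance equals $(x_1 - x_2)(y_1 - y_2)/4$, so the exact range is $[0.25, 2.25]$, which is what the sketch returns. Every candidate is a feasible point, so the result can never fall below the true minimum; in degenerate tie cases it could in principle miss it, which is why comparing against a brute-force enumeration on small inputs is a sensible sanity check.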

From Interval-Valued to Fuzzy-Valued Random Variables

Often, in addition to (or instead of) the guaranteed bounds, an expert can provide bounds that contain $x_i$ with a certain degree of confidence. Often, we know several such bounding intervals corresponding to different degrees of confidence. Such a nested family of intervals is also called a fuzzy set, because it turns out to be equivalent to a more traditional definition of fuzzy set [7, 8] (if a traditional fuzzy set is given, then different intervals from the nested family can be viewed as $\alpha$-cuts corresponding to different levels of uncertainty $\alpha$).

To provide statistical values of fuzzy-valued random variables, we can therefore, for each level $\alpha$, apply the above interval-valued techniques to the corresponding $\alpha$-cuts.
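A level-by-level computation of this kind can be sketched as follows. The data layout (a dictionary mapping each level $\alpha$ to the list of $\alpha$-cut intervals of the observations) and the function names are assumptions made for illustration; the interval mean is used as the statistic only because it is the simplest one, and any interval-valued statistic could be passed in its place.

```python
def interval_mean(intervals):
    """Exact range of the sample mean over a list of (lo, hi) intervals."""
    n = len(intervals)
    return (sum(lo for lo, _ in intervals) / n,
            sum(hi for _, hi in intervals) / n)


def fuzzy_statistic(alpha_cuts, interval_statistic):
    """Apply an interval-valued statistic separately to each alpha-cut.

    alpha_cuts maps a level alpha to the list of intervals (one per
    observation) at that level; the result maps alpha to an interval.
    """
    return {
        alpha: interval_statistic(cuts)
        for alpha, cuts in sorted(alpha_cuts.items())
    }


# Two fuzzy observations, each described by nested cuts at two levels.
cuts = {
    0.5: [(0.0, 4.0), (2.0, 6.0)],
    1.0: [(1.0, 3.0), (3.0, 5.0)],
}
fuzzy_mean = fuzzy_statistic(cuts, interval_mean)
# fuzzy_mean[0.5] is (1.0, 5.0) and fuzzy_mean[1.0] is (2.0, 4.0)
```

Because the input cuts are nested, the resulting intervals are nested as well, so they again form a fuzzy set.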

Acknowledgments. This work was supported by NASA grant NCC5-209, by the Air Force Office of Scientific Research grant F49620-00-1-0365, by NSF grants EAR-0112968, EAR-0225670, and EIA-0321328, and by the Army Research Laboratories grant DATM-05-02-C-0046. The authors are thankful to Hung T. Nguyen and to the anonymous referees for valuable suggestions.

References

1. Ferson S, Ginzburg L, Kreinovich V, Longpré L, Aviles M (2002) Computing Variance for Interval Data is NP-Hard. ACM SIGACT News 33(2):108-118

2. Goutsias J, Mahler RPS, Nguyen HT, eds. (1997) Random Sets: Theory and Applications. Springer-Verlag, N.Y.

3. Heitjan DF, Rubin DB (1991) Ignorability and coarse data. Ann. Stat. 19(4):2244-2253

4. Jaulin L, Kieffer M, Didrit O, Walter E (2001) Applied Interval Analysis. Springer-Verlag, Berlin

5. Kreinovich V, Lakeyev A, Rohn J, Kahl P (1997) Computational Complexity and Feasibility of Data Processing and Interval Computations. Kluwer, Dordrecht

6. Li S, Ogura Y, Kreinovich V (2002) Limit Theorems and Applications of Set Valued and Fuzzy Valued Random Variables. Kluwer, Dordrecht

7. Nguyen HT, Kreinovich V (1996) Nested Intervals and Sets: Concepts, Relations to Fuzzy Sets, and Applications. In: Kearfott RB et al., Applications of Interval Computations, Kluwer, Dordrecht, 245-290

8. Nguyen HT, Walker EA (1999) First Course in Fuzzy Logic. CRC Press, Boca Raton, Florida

9. Nivlet P, Fournier F, Royer J (2001) A new methodology to account for uncertainties in 4-D seismic interpretation. Proc. 71st Annual Int'l Meeting of Soc. of Exploratory Geophysics SEG'2001, San Antonio, TX, September 9-14, 1644-1647

10. Nivlet P, Fournier F, Royer J (2001) Propagating interval uncertainties in supervised pattern recognition for reservoir characterization. Proc. 2001 Society of Petroleum Engineers Annual Conf. SPE'2001, New Orleans, LA, September 30-October 3, paper SPE-71327

11. Osegueda R, Kreinovich V, Potluri L, Alo R (2002) Non-Destructive Testing of Aerospace Structures: Granularity and Data Mining Approach. In: Proc. FUZZ-IEEE'2002, Honolulu, HI, May 12-17, 2002, Vol. 1, 685-689

12. Rabinovich S (1993) Measurement Errors: Theory and Practice. American Institute of Physics, New York

Potrebbero piacerti anche

- Mathematical Foundations of Information TheoryDa EverandMathematical Foundations of Information TheoryValutazione: 3.5 su 5 stelle3.5/5 (9)

- Interval-Valued and Fuzzy-Valued Random Variables: From Computing Sample Variances To Computing Sample CovariancesDocumento1 paginaInterval-Valued and Fuzzy-Valued Random Variables: From Computing Sample Variances To Computing Sample CovariancesMahbubRiyadNessuna valutazione finora

- Learn Statistics Fast: A Simplified Detailed Version for StudentsDa EverandLearn Statistics Fast: A Simplified Detailed Version for StudentsNessuna valutazione finora

- Guesstimation: A New Justification of The Geometric Mean HeuristicDocumento7 pagineGuesstimation: A New Justification of The Geometric Mean HeuristicPiyushGargNessuna valutazione finora

- Detecting A Vector Based On Linear Measurements: Ery Arias-CastroDocumento9 pagineDetecting A Vector Based On Linear Measurements: Ery Arias-Castroshyam_utdNessuna valutazione finora

- Lecture 11: Standard Error, Propagation of Error, Central Limit Theorem in The Real WorldDocumento13 pagineLecture 11: Standard Error, Propagation of Error, Central Limit Theorem in The Real WorldWisnu van NugrooyNessuna valutazione finora

- Sample Theory With Ques. - Estimation (JAM MS Unit-14)Documento25 pagineSample Theory With Ques. - Estimation (JAM MS Unit-14)ZERO TO VARIABLENessuna valutazione finora

- Assignment 1 StatisticsDocumento6 pagineAssignment 1 Statisticsjaspreet46Nessuna valutazione finora

- MLT Assignment 1Documento13 pagineMLT Assignment 1Dinky NandwaniNessuna valutazione finora

- Theory of Uncertainty of Measurement PDFDocumento14 pagineTheory of Uncertainty of Measurement PDFLong Nguyễn VănNessuna valutazione finora

- Data Analysis For Physics Laboratory: Standard ErrorsDocumento5 pagineData Analysis For Physics Laboratory: Standard Errorsccouy123Nessuna valutazione finora

- Mean, Standard Deviation, and Counting StatisticsDocumento2 pagineMean, Standard Deviation, and Counting StatisticsMohamed NaeimNessuna valutazione finora

- Principles of Error AnalysisDocumento11 paginePrinciples of Error Analysisbasura12345Nessuna valutazione finora

- A Case Study of Bank Queueing Model: Kasturi Nirmala, DR - Shahnaz BathulDocumento8 pagineA Case Study of Bank Queueing Model: Kasturi Nirmala, DR - Shahnaz BathulIJERDNessuna valutazione finora

- Error Analysis, W011Documento12 pagineError Analysis, W011Evan KeastNessuna valutazione finora

- Unit 5 Probabilistic ModelsDocumento39 pagineUnit 5 Probabilistic ModelsRam100% (1)

- Lecture Notes Week 2Documento10 pagineLecture Notes Week 2tarik BenseddikNessuna valutazione finora

- Insppt 6Documento21 pagineInsppt 6Md Talha JavedNessuna valutazione finora

- Robust Statistics For Outlier Detection (Peter J. Rousseeuw and Mia Hubert)Documento8 pagineRobust Statistics For Outlier Detection (Peter J. Rousseeuw and Mia Hubert)Robert PetersonNessuna valutazione finora

- Linear Models: The Least-Squares MethodDocumento24 pagineLinear Models: The Least-Squares MethodAnimated EngineerNessuna valutazione finora

- Lecture4 Mech SUDocumento17 pagineLecture4 Mech SUNazeeh Abdulrhman AlbokaryNessuna valutazione finora

- Statistics 21march2018Documento25 pagineStatistics 21march2018Parth JatakiaNessuna valutazione finora

- Basic Statistics For Data ScienceDocumento45 pagineBasic Statistics For Data ScienceBalasaheb ChavanNessuna valutazione finora

- Basic Probability Reference Sheet: February 27, 2001Documento8 pagineBasic Probability Reference Sheet: February 27, 2001Ibrahim TakounaNessuna valutazione finora

- PDF ResizeDocumento31 paginePDF ResizeFahmi AlamsyahNessuna valutazione finora

- Block 7Documento34 pagineBlock 7Harsh kumar MeerutNessuna valutazione finora

- Unit 18Documento12 pagineUnit 18Harsh kumar MeerutNessuna valutazione finora

- Notes On Asymptotic Theory: IGIER-Bocconi, IZA and FRDBDocumento11 pagineNotes On Asymptotic Theory: IGIER-Bocconi, IZA and FRDBLuisa HerreraNessuna valutazione finora

- Errors and DeviationsDocumento8 pagineErrors and DeviationsSumit SahaNessuna valutazione finora

- ECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1Documento7 pagineECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1wandalexNessuna valutazione finora

- 1983 S Baker R D Cousins-NIM 221 437-442Documento6 pagine1983 S Baker R D Cousins-NIM 221 437-442shusakuNessuna valutazione finora

- Topic 5: Probability Bounds and The Distribution of Sample StatisticsDocumento16 pagineTopic 5: Probability Bounds and The Distribution of Sample Statisticss05xoNessuna valutazione finora

- Lectura 1 Point EstimationDocumento47 pagineLectura 1 Point EstimationAlejandro MarinNessuna valutazione finora

- Measurement and UncertaintyDocumento12 pagineMeasurement and UncertaintyTiến Dũng100% (1)

- Nbs VonneumannDocumento3 pagineNbs VonneumannRishav DhungelNessuna valutazione finora

- Errors Eng 1 - Part5Documento4 pagineErrors Eng 1 - Part5awrr qrq2rNessuna valutazione finora

- Wickham StatiDocumento12 pagineWickham StatiJitendra K JhaNessuna valutazione finora

- Non Linear Root FindingDocumento16 pagineNon Linear Root FindingMichael Ayobami AdelekeNessuna valutazione finora

- ST2187 Block 5Documento15 pagineST2187 Block 5Joseph MatthewNessuna valutazione finora

- Statistics PDFDocumento17 pagineStatistics PDFSauravNessuna valutazione finora

- Chapter 1 To Chapter 2 Stat 222Documento21 pagineChapter 1 To Chapter 2 Stat 222Edgar MagturoNessuna valutazione finora

- Fundamentals of ProbabilityDocumento58 pagineFundamentals of Probabilityhien05Nessuna valutazione finora

- Math TRM PPRDocumento15 pagineMath TRM PPRsummitzNessuna valutazione finora

- An Introduction To Objective Bayesian Statistics PDFDocumento69 pagineAn Introduction To Objective Bayesian Statistics PDFWaterloo Ferreira da SilvaNessuna valutazione finora

- Basic Concepts of Inference: Corresponds To Chapter 6 of Tamhane and DunlopDocumento40 pagineBasic Concepts of Inference: Corresponds To Chapter 6 of Tamhane and Dunlopakirank1Nessuna valutazione finora

- Using Extreme Value Theory To Optimally Design PV Applications Under Climate ChangeDocumento7 pagineUsing Extreme Value Theory To Optimally Design PV Applications Under Climate ChangeSEP-PublisherNessuna valutazione finora

- CHP 5Documento63 pagineCHP 5its9918kNessuna valutazione finora

- Bayesian Regression and BitcoinDocumento6 pagineBayesian Regression and BitcoinJowPersonaVieceliNessuna valutazione finora

- Notes On Sampling and Hypothesis TestingDocumento10 pagineNotes On Sampling and Hypothesis Testinghunt_pgNessuna valutazione finora

- Analysis of Random Errors in Physical MeasurementsDocumento48 pagineAnalysis of Random Errors in Physical MeasurementsApurva SahaNessuna valutazione finora

- 3.6: General Hypothesis TestsDocumento6 pagine3.6: General Hypothesis Testssound05Nessuna valutazione finora

- 1.august 8 Class NotesDocumento3 pagine1.august 8 Class Notesman humanNessuna valutazione finora

- Statistical InferenceDocumento15 pagineStatistical InferenceDynamic ClothesNessuna valutazione finora

- Lect1 12Documento32 pagineLect1 12Ankit SaxenaNessuna valutazione finora

- StatsLecture1 ProbabilityDocumento4 pagineStatsLecture1 Probabilitychoi7Nessuna valutazione finora

- Beyond The Bakushinkii Veto - Regularising Linear Inverse Problems Without Knowing The Noise DistributionDocumento23 pagineBeyond The Bakushinkii Veto - Regularising Linear Inverse Problems Without Knowing The Noise Distributionzys johnsonNessuna valutazione finora

- Notes On EstimationDocumento4 pagineNotes On EstimationAnandeNessuna valutazione finora

- International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems Vol. 19, No. 6 (2011) 1059 - C World Scientific Publishing CompanyDocumento2 pagineInternational Journal of Uncertainty, Fuzziness and Knowledge-Based Systems Vol. 19, No. 6 (2011) 1059 - C World Scientific Publishing CompanyjimakosjpNessuna valutazione finora

- Method Least SquaresDocumento7 pagineMethod Least SquaresZahid SaleemNessuna valutazione finora

- Construction Claims and Contract Admin CPDDocumento40 pagineConstruction Claims and Contract Admin CPDCraig FawcettNessuna valutazione finora

- Amerex Ansul Badger Ul Catalogo Por PartesDocumento37 pagineAmerex Ansul Badger Ul Catalogo Por PartesPuma De La Torre ExtintoresNessuna valutazione finora

- Unit 20: TroubleshootingDocumento12 pagineUnit 20: TroubleshootingDongjin LeeNessuna valutazione finora

- Effective TeachingDocumento94 pagineEffective Teaching小曼Nessuna valutazione finora

- The Handmaid's TaleDocumento40 pagineThe Handmaid's Taleleher shahNessuna valutazione finora

- Ferroelectric RamDocumento20 pagineFerroelectric RamRijy LoranceNessuna valutazione finora

- Anykycaccount Com Product Payoneer Bank Account PDFDocumento2 pagineAnykycaccount Com Product Payoneer Bank Account PDFAnykycaccountNessuna valutazione finora

- Shelly Cashman Series Microsoft Office 365 Excel 2016 Comprehensive 1st Edition Freund Solutions ManualDocumento5 pagineShelly Cashman Series Microsoft Office 365 Excel 2016 Comprehensive 1st Edition Freund Solutions Manualjuanlucerofdqegwntai100% (10)

- Lecture BouffonDocumento1 paginaLecture BouffonCarlos Enrique GuerraNessuna valutazione finora

- MSDS Leadframe (16 Items)Documento8 pagineMSDS Leadframe (16 Items)bennisg8Nessuna valutazione finora

- ENSC1001 Unit Outline 2014Documento12 pagineENSC1001 Unit Outline 2014TheColonel999Nessuna valutazione finora

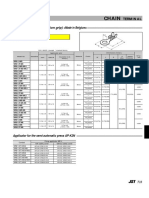

- Chain: SRB Series (With Insulation Grip)Documento1 paginaChain: SRB Series (With Insulation Grip)shankarNessuna valutazione finora

- Week 3 Alds 2202Documento13 pagineWeek 3 Alds 2202lauren michaelsNessuna valutazione finora

- Lab 1Documento51 pagineLab 1aliNessuna valutazione finora

- Do Now:: What Is Motion? Describe The Motion of An ObjectDocumento18 pagineDo Now:: What Is Motion? Describe The Motion of An ObjectJO ANTHONY ALIGORANessuna valutazione finora

- A Structural Modelo of Limital Experienci Un TourismDocumento15 pagineA Structural Modelo of Limital Experienci Un TourismcecorredorNessuna valutazione finora

- Handout Waste Catch BasinDocumento2 pagineHandout Waste Catch BasinJonniel De GuzmanNessuna valutazione finora

- Turner Et Al. 1991 ASUDS SystemDocumento10 pagineTurner Et Al. 1991 ASUDS SystemRocio HerreraNessuna valutazione finora

- Cummins: ISX15 CM2250Documento17 pagineCummins: ISX15 CM2250haroun100% (4)

- Gracella Irwana - G - Pert 04 - Sia - 1Documento35 pagineGracella Irwana - G - Pert 04 - Sia - 1Gracella IrwanaNessuna valutazione finora

- Corrosion Performance of Mild Steel and GalvanizedDocumento18 pagineCorrosion Performance of Mild Steel and GalvanizedNarasimha DvlNessuna valutazione finora

- A-1660 11TH Trimester From Mcdowell To Vodafone Interpretation of Tax Law in Cases. OriginalDocumento18 pagineA-1660 11TH Trimester From Mcdowell To Vodafone Interpretation of Tax Law in Cases. OriginalPrasun TiwariNessuna valutazione finora

- Bullying Report - Ending The Torment: Tackling Bullying From The Schoolyard To CyberspaceDocumento174 pagineBullying Report - Ending The Torment: Tackling Bullying From The Schoolyard To CyberspaceAlexandre AndréNessuna valutazione finora

- CrimDocumento29 pagineCrimkeziahmae.bagacinaNessuna valutazione finora

- Dragons ScaleDocumento13 pagineDragons ScaleGuilherme De FariasNessuna valutazione finora

- Ferrero A.M. Et Al. (2015) - Experimental Tests For The Application of An Analytical Model For Flexible Debris Flow Barrier Design PDFDocumento10 pagineFerrero A.M. Et Al. (2015) - Experimental Tests For The Application of An Analytical Model For Flexible Debris Flow Barrier Design PDFEnrico MassaNessuna valutazione finora

- HPSC HCS Exam 2021: Important DatesDocumento6 pagineHPSC HCS Exam 2021: Important DatesTejaswi SaxenaNessuna valutazione finora

- Adolescents' Gender and Their Social Adjustment The Role of The Counsellor in NigeriaDocumento20 pagineAdolescents' Gender and Their Social Adjustment The Role of The Counsellor in NigeriaEfosaNessuna valutazione finora

- Oracle SOA Suite Oracle Containers For J2EE Feature Overview OC4JDocumento10 pagineOracle SOA Suite Oracle Containers For J2EE Feature Overview OC4JLuis YañezNessuna valutazione finora

- Presentation LI: Prepared by Muhammad Zaim Ihtisham Bin Mohd Jamal A17KA5273 13 September 2022Documento9 paginePresentation LI: Prepared by Muhammad Zaim Ihtisham Bin Mohd Jamal A17KA5273 13 September 2022dakmts07Nessuna valutazione finora
