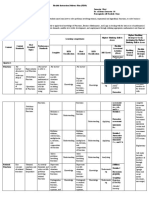

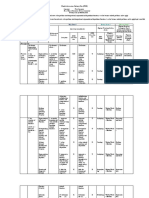

Learning from Observations

Chapter 18, Sections 1–3

Outline

♦ Learning agents

♦ Inductive learning

♦ Decision tree learning

♦ Measuring learning performance

Learning

Learning is essential for unknown environments, i.e., when designer lacks omniscience

Learning is useful as a system construction method, i.e., expose the agent to reality rather than trying to write it down

Learning modifies the agent’s decision mechanisms to improve performance

Learning agents

[Figure: learning agent architecture. Components: performance standard, critic, sensors, learning element, performance element, problem generator, effectors, environment. Arrow labels: feedback, changes, knowledge, learning goals, experiments.]

Learning element

Design of learning element is dictated by

♦ what type of performance element is used

♦ which functional component is to be learned

♦ how that functional component is represented

♦ what kind of feedback is available

Example scenarios:

| Performance element | Component | Representation | Feedback |
|---|---|---|---|
| Alpha-beta search | Eval. fn. | Weighted linear function | Win/loss |
| Logical agent | Transition model | Successor-state axioms | Outcome |
| Utility-based agent | Transition model | Dynamic Bayes net | Outcome |
| Simple reflex agent | Percept-action fn | Neural net | Correct action |

Supervised learning: correct answers for each instance

Reinforcement learning: occasional rewards

Inductive learning (a.k.a. Science)

Simplest form: learn a function from examples (tabula rasa)

f is the target function

An example is a pair x, f(x), e.g., [tic-tac-toe board position], +1

Problem: find a(n) hypothesis h such that h ≈ f given a training set of examples

(This is a highly simplified model of real learning:

– Ignores prior knowledge

– Assumes a deterministic, observable “environment”

– Assumes examples are given

– Assumes that the agent wants to learn f. Why?)

Inductive learning method

Construct/adjust h to agree with f on training set

(h is consistent if it agrees with f on all examples)

E.g., curve fitting:

[Figure: curve-fitting plots of f(x) against x, built up over several slides]

Ockham’s razor: maximize a combination of consistency and simplicity
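The trade-off between consistency and simplicity in curve fitting can be illustrated with a small sketch. The data points below are invented for illustration and are not from the slides; `fit_line` and `fit_interpolant` are hypothetical helper names. The straight line is the simpler hypothesis but does not pass through every point, while the interpolating polynomial is consistent with all five examples at the cost of a higher degree.

```python
# Hypothetical noisy samples of an unknown, roughly linear target f.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 0.9, 2.2, 2.8, 4.1]

def fit_line(xs, ys):
    """Least-squares straight line: simple, but not necessarily consistent."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_interpolant(xs, ys):
    """Lagrange polynomial of degree len(xs) - 1: agrees with every example."""
    def h(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return h

def max_training_error(h, xs, ys):
    """Largest disagreement between h and the training examples."""
    return max(abs(h(x) - y) for x, y in zip(xs, ys))

line = fit_line(xs, ys)
poly = fit_interpolant(xs, ys)
print("line max error:       ", max_training_error(line, xs, ys))
print("interpolant max error:", max_training_error(poly, xs, ys))
```

Which hypothesis predicts unseen points better cannot be read off the training error alone, which is the point of preferring a combination of consistency and simplicity.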

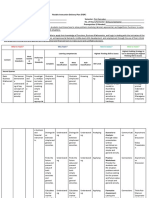

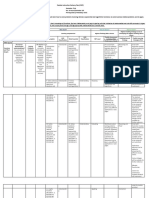

Attribute-based representations

Examples described by attribute values (Boolean, discrete, continuous, etc.)

E.g., situations where I will/won’t wait for a table:

| Example | Alt | Bar | Fri | Hun | Pat | Price | Rain | Res | Type | Est | WillWait (target) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| X1 | T | F | F | T | Some | $$$ | F | T | French | 0–10 | T |
| X2 | T | F | F | T | Full | $ | F | F | Thai | 30–60 | F |
| X3 | F | T | F | F | Some | $ | F | F | Burger | 0–10 | T |
| X4 | T | F | T | T | Full | $ | F | F | Thai | 10–30 | T |
| X5 | T | F | T | F | Full | $$$ | F | T | French | >60 | F |
| X6 | F | T | F | T | Some | $$ | T | T | Italian | 0–10 | T |
| X7 | F | T | F | F | None | $ | T | F | Burger | 0–10 | F |
| X8 | F | F | F | T | Some | $$ | T | T | Thai | 0–10 | T |
| X9 | F | T | T | F | Full | $ | T | F | Burger | >60 | F |
| X10 | T | T | T | T | Full | $$$ | F | T | Italian | 10–30 | F |
| X11 | F | F | F | F | None | $ | F | F | Thai | 0–10 | F |
| X12 | T | T | T | T | Full | $ | F | F | Burger | 30–60 | T |

Classification of examples is positive (T) or negative (F)

Decision trees

One possible representation for hypotheses

E.g., here is the “true” tree for deciding whether to wait:

- Patrons?
  - None: F
  - Some: T
  - Full: WaitEstimate?
    - >60: F
    - 30–60: Alternate?
      - No: Reservation?
        - No: Bar?
          - No: F
          - Yes: T
        - Yes: T
      - Yes: Fri/Sat?
        - No: F
        - Yes: T
    - 10–30: Hungry?
      - No: T
      - Yes: Alternate?
        - No: T
        - Yes: Raining?
          - No: F
          - Yes: T
    - 0–10: T

Expressiveness

Decision trees can express any function of the input attributes.

E.g., for Boolean functions, truth table row → path to leaf:

| A | B | A xor B |
|---|---|---|
| F | F | F |
| F | T | T |
| T | F | T |
| T | T | F |

- A?
  - F: B?
    - F: F
    - T: T
  - T: B?
    - F: T
    - T: F

Trivially, there is a consistent decision tree for any training set w/ one path to leaf for each example (unless f nondeterministic in x) but it probably won’t generalize to new examples

Prefer to find more compact decision trees
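As a minimal sketch of the "truth table row → path to leaf" idea, the XOR tree can be written as a nested structure and checked against every row of the truth table. The tuple-and-dict encoding and the name `classify` are illustrative choices, not something the slides prescribe.

```python
# A tree is either a leaf (a bool) or a pair (attribute, branches),
# where branches maps each attribute value to a subtree.
xor_tree = (
    "A",
    {
        False: ("B", {False: False, True: True}),
        True: ("B", {False: True, True: False}),
    },
)

def classify(tree, example):
    """Follow the branch chosen by each tested attribute until a leaf is reached."""
    while not isinstance(tree, bool):
        attribute, branches = tree
        tree = branches[example[attribute]]
    return tree

# Every truth-table row corresponds to exactly one root-to-leaf path.
for a in (False, True):
    for b in (False, True):
        print(a, b, classify(xor_tree, {"A": a, "B": b}))
```

This tree needs one leaf per truth-table row, which is why XOR-like functions have no compact decision tree.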

Hypothesis spaces

How many distinct decision trees with n Boolean attributes??

= number of Boolean functions

= number of distinct truth tables with $2^n$ rows = $2^{2^n}$

E.g., with 6 Boolean attributes, there are 18,446,744,073,709,551,616 trees

How many purely conjunctive hypotheses (e.g., Hungry ∧ ¬Rain)??

Each attribute can be in (positive), in (negative), or out

⇒ $3^n$ distinct conjunctive hypotheses

More expressive hypothesis space

– increases chance that target function can be expressed

– increases number of hypotheses consistent w/ training set

⇒ may get worse predictions
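The two counts above are easy to check numerically. The sketch below uses hypothetical function names and, for small n, also enumerates every truth table and every conjunctive hypothesis by brute force to confirm the closed forms.

```python
from itertools import product

def num_boolean_functions(n):
    """One function per way of filling the 2**n rows of a truth table."""
    return 2 ** (2 ** n)

def num_conjunctive_hypotheses(n):
    """Each attribute is in (positive), in (negative), or out."""
    return 3 ** n

# Brute-force check for small n: enumerate the objects being counted.
for n in range(1, 4):
    truth_tables = list(product([False, True], repeat=2 ** n))
    conjunctions = list(product(["positive", "negative", "out"], repeat=n))
    assert len(truth_tables) == num_boolean_functions(n)
    assert len(conjunctions) == num_conjunctive_hypotheses(n)

print(num_boolean_functions(6))       # 18446744073709551616
print(num_conjunctive_hypotheses(6))  # 729
```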

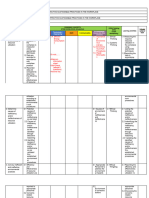

Decision tree learning

Aim: find a small tree consistent with the training examples

Idea: (recursively) choose “most significant” attribute as root of (sub)tree

    function DTL(examples, attributes, default) returns a decision tree
        if examples is empty then return default
        else if all examples have the same classification then return the classification
        else if attributes is empty then return Mode(examples)
        else
            best ← Choose-Attribute(attributes, examples)
            tree ← a new decision tree with root test best
            for each value v_i of best do
                examples_i ← {elements of examples with best = v_i}
                subtree ← DTL(examples_i, attributes − best, Mode(examples))
                add a branch to tree with label v_i and subtree subtree
            return tree
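The DTL pseudocode can be transcribed into Python roughly as follows. This is a sketch under several assumptions that are not in the slides: examples are `(attribute_dict, label)` pairs, the possible values of each attribute are supplied in a `values` mapping, a tree is a `(attribute, branches)` tuple, and `choose_attribute` is passed in as a function. The placeholder chooser `first_attribute` simply takes the first remaining attribute; it stands in for Choose-Attribute and is not an information-based choice.

```python
from collections import Counter

def mode(examples):
    """Most common classification among the examples."""
    return Counter(label for _, label in examples).most_common(1)[0][0]

def dtl(examples, attributes, default, choose_attribute, values):
    """Recursive decision tree learning, following the DTL pseudocode."""
    if not examples:
        return default
    labels = {label for _, label in examples}
    if len(labels) == 1:
        return labels.pop()
    if not attributes:
        return mode(examples)
    best = choose_attribute(attributes, examples)
    remaining = [a for a in attributes if a != best]
    branches = {}
    for v in values[best]:
        subset = [(x, y) for x, y in examples if x[best] == v]
        branches[v] = dtl(subset, remaining, mode(examples),
                          choose_attribute, values)
    return (best, branches)

def first_attribute(attributes, examples):
    """Placeholder for Choose-Attribute (an assumption, not the slides' heuristic)."""
    return attributes[0]

# Usage on the XOR truth table, where every row is a training example.
xor_examples = [({"A": a, "B": b}, a != b)
                for a in (False, True) for b in (False, True)]
values = {"A": (False, True), "B": (False, True)}
tree = dtl(xor_examples, ["A", "B"], False, first_attribute, values)
print(tree)
```

Passing the chooser as an argument keeps the recursion separate from the attribute-selection heuristic, so an information-based chooser can be swapped in without touching `dtl`.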

Choosing an attribute

Idea: a good attribute splits the examples into subsets that are (ideally) “all positive” or “all negative”

[Figure: the examples split by Patrons? (None, Some, Full) and by Type? (French, Italian, Thai, Burger)]

Patrons? is a better choice: it gives information about the classification

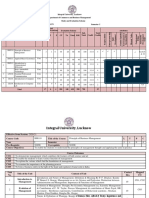

Information

Information answers questions

The more clueless I am about the answer initially, the more information is contained in the answer

Scale: 1 bit = answer to Boolean question with prior $\langle 0.5, 0.5 \rangle$

Information in an answer when prior is $\langle P_1, \ldots, P_n \rangle$ is

$$H(\langle P_1, \ldots, P_n \rangle) = \sum_{i=1}^{n} -P_i \log_2 P_i$$

(also called entropy of the prior)

Information contd.

Suppose we have p positive and n negative examples at the root

⇒ $H(\langle p/(p+n),\, n/(p+n) \rangle)$ bits needed to classify a new example

E.g., for 12 restaurant examples, p = n = 6 so we need 1 bit

An attribute splits the examples E into subsets $E_i$, each of which (we hope) needs less information to complete the classification

Let $E_i$ have $p_i$ positive and $n_i$ negative examples

⇒ $H(\langle p_i/(p_i+n_i),\, n_i/(p_i+n_i) \rangle)$ bits needed to classify a new example

⇒ expected number of bits per example over all branches is

$$\sum_i \frac{p_i + n_i}{p + n}\, H(\langle p_i/(p_i+n_i),\, n_i/(p_i+n_i) \rangle)$$

For Patrons?, this is 0.459 bits, for Type this is (still) 1 bit

⇒ choose the attribute that minimizes the remaining information needed

Example contd.

Decision tree learned from the 12 examples:

- Patrons?
  - None: F
  - Some: T
  - Full: Hungry?
    - Yes: Type?
      - French: T
      - Italian: F
      - Thai: Fri/Sat?
        - No: F
        - Yes: T
      - Burger: T
    - No: F

Substantially simpler than “true” tree; a more complex hypothesis isn’t justified by small amount of data

Performance measurement

How do we know that h ≈ f? (Hume’s Problem of Induction)

1) Use theorems of computational/statistical learning theory

2) Try h on a new test set of examples (use same distribution over example space as training set)

Learning curve = % correct on test set as a function of training set size

[Figure: learning curve; x-axis: training set size (0 to 100), y-axis: % correct on test set (0.4 to 1)]

Performance measurement contd.

Learning curve depends on

– realizable (can express target function) vs. non-realizable; non-realizability can be due to missing attributes or restricted hypothesis class (e.g., thresholded linear function)

– redundant expressiveness (e.g., loads of irrelevant attributes)

[Figure: % correct against # of examples, with curves labelled realizable, redundant, and nonrealizable]

Summary

Learning needed for unknown environments, lazy designers

Learning agent = performance element + learning element

Learning method depends on type of performance element, available feedback, type of component to be improved, and its representation

For supervised learning, the aim is to find a simple hypothesis that is approximately consistent with training examples

Decision tree learning using information gain

Learning performance = prediction accuracy measured on test set
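The figures quoted on the Information slides (0.459 bits for Patrons, 1 bit for Type) can be reproduced with a short sketch. The Patrons, Type, and WillWait columns below are copied from the restaurant table for X1 to X12; the function names `entropy` and `remainder` are illustrative, and zero-probability outcomes are skipped so that 0 · log 0 is treated as 0.

```python
from math import log2

def entropy(probabilities):
    """H(<P1, ..., Pn>) = sum of -Pi * log2(Pi), treating 0 * log 0 as 0."""
    return sum(-p * log2(p) for p in probabilities if p > 0)

# (Patrons, Type, WillWait) for examples X1..X12 of the restaurant table.
examples = [
    ("Some", "French", True), ("Full", "Thai", False),
    ("Some", "Burger", True), ("Full", "Thai", True),
    ("Full", "French", False), ("Some", "Italian", True),
    ("None", "Burger", False), ("Some", "Thai", True),
    ("Full", "Burger", False), ("Full", "Italian", False),
    ("None", "Thai", False), ("Full", "Burger", True),
]

def remainder(examples, column):
    """Expected bits still needed per example after splitting on a column."""
    total = len(examples)
    bits = 0.0
    for value in {e[column] for e in examples}:
        subset = [e for e in examples if e[column] == value]
        positive_fraction = sum(1 for e in subset if e[2]) / len(subset)
        weight = len(subset) / total
        bits += weight * entropy([positive_fraction, 1 - positive_fraction])
    return bits

print(entropy([0.5, 0.5]))                # 1.0 bit at the root (p = n = 6)
print(round(remainder(examples, 0), 3))   # Patrons: 0.459
print(round(remainder(examples, 1), 3))   # Type: 1.0
```

Splitting on Patrons leaves the None and Some subsets pure, so only the Full subset (2 positive, 4 negative) contributes any remaining uncertainty.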
