The Performance Balancing Act:

Capitalizing on Informix Configurations to Establish Higher Performance

Kerry Calvert

It is a given that everyone wants higher levels of performance from their database system. The obstacles

to satisfying this desire can seem daunting, given the multitude of issues that govern system throughput.

The first step toward achieving performance nirvana (or at least a warm and fuzzy feeling) is to

relinquish the idea that there are "primary solutions" to the problem. The task in front of you is an

iterative one that requires you to make some engineering judgments, monitor the results, and then

adjust the judgments to reflect observed behavior. The better job of observation and correlation that

you can do as you make structural or configuration changes to the database, the better you will be able to

zero in on a configuration that is optimal for your usage of the database.

Someone ought to write a tool to deal with this, you are probably muttering. Someone probably is, but do

not buy one that promises that all you have to do is install the product and your weekends will be free for

eternity. In the meantime, I hope to provide some insight into some of the key configuration parameters

that impact the performance of the Informix server with this article.

The Main Stage

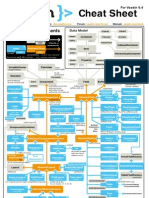

The following diagram illustrates a conceptual view of the Informix server architecture. It is not

necessarily reflective of the real architecture. Its primary purpose is to illustrate conceptually how the

server transports data, so the you have a better feel for what the configuration parameters are trying to

control:

The objective in performance tuning is to minimize blocking between the major processing groups. In the

ideal query, the required memory buffers would be available, the requested data would be in the memory cache,

and the connection services would not be blocked servicing other users. The net effect in this ideal scenario is

that the total transaction is a memory copy between the cache and the user. Since entropy rules, and

things are never ideal, we have to handle the case where the disk drives are being beat to death. This is

accomplished by configuring adequate memory, I/O bandwidth, data organization, and/or operating

system resources to meet the task, and hopefully keep the user community from beating the DBA to

death.

The first processing group, which performs the client connection services management, is CPU and

network intensive. As application programs open the database, this layer must allocate memory and

possibly network connections to support the request. If your system supports a large number of users with

short connection life spans, ensuring that there are adequate CPU resources allocated to handle this load is

important to reducing connection delays.

The second processing group, which processes the queries, is responsible for the parsing, data conversion,

and filtering demanded by the query load. This group is memory and CPU intensive. Other than some

general configuration parameters, which control how much CPU and memory resource a query can

consume, there are no definitive tuning parameters that allow you to scale resources specifically to query

processing.

The disk I/O processing group performs cache management and data reading/writing. This area of

processing is highly tunable of course, and is very important to the balancing equation. Since log activity

has very high overhead, Informix provides tuning and configuration capabilities separate from data I/O.

When performance is lagging, there is an imbalance between the processing groups. Isolating the cause is

half the battle, although sometimes it can seem like both halves!

The Usual Suspects

Assuming that you have already applied your genius to the logical design of the database, such that you

have the 8th wonder of the world as it pertains to efficient schema implementation, the other things that

affect throughput performance of your database are as follows:

Configuration

Memory

Disk Layout

Data Disorganization

CPU Utilization

As indicated earlier, the goal in performance tuning is to create an environment that minimizes disk I/O in

conjunction with minimizing wasted efforts of the CPU, given that swapping, process scheduling, and

busy waits are not productive use of the machine. If we can tune the system so that we have very high

levels of read and write cache percentages, without saturating CPU resources, then we will have a

responsive system. Unfortunately, this is easier said than done, which is why you are paid the big bucks to

solve this type of problem.

Configuration Parameters

A good starting point is to evaluate the configuration parameters to ensure that they make effective use of

the memory in the system, connection service types, and available CPU resources. An inadequate

number of memory buffers, or using the wrong connection configuration can defeat the best schema and

data partitioning plan in short order.

The critical parameters for Informix Online are located in $INFORMIXDIR/etc/$ONCONFIG. These

parameters can be modified with an editor (you have to shut down the server and restart it to make the

parameters take effect), or you can use the onmonitor program to make modifications. The parameters

that are of particular concern to performance are documented in the Informix Online Dynamic Server

Performance Guide. We will discuss the most important of these here:

NUMCPUVPS This parameter is used to define the number of CPU virtual processors that Informix Online

will bring up initially. A virtual processor is different from a real CPU. In Informix implementation

terms, a virtual processor is essentially

a UNIX process. A virtual processor will have a class, such as CPU or AIO, and threads that perform

certain types of work (compute intense, I/O, etc.) are scheduled for execution (through Informix code, not

the OS) in the appropriately classed process, or "virtual processor". Since I/O is performed

asynchronously, a process will not block in the OS kernel waiting for an I/O. Informix will suspend a

thread that needs to wait on I/O, and reactivate a different thread in the process to perform work. Thread

scheduling is "lighter weight" than process scheduling, so this technique results in less OS busy work than

a simple multi-process architecture.

The purpose of NUMCPUVPS is to set a limit on the number of processes started, and the amount of

CPU horsepower that the database server will consume. The balancing act in deciding on an appropriate

value for this parameter is the classic use of a 5 pound bag to hold 20 pounds of stuff. If you have high

cache rates in conjunction with CPU idle time, but response is lagging, you have too many threads being

squeezed through too few virtual processors. Increasing NUMCPUVPS solves this problem.

If you have multiple processors, it is beneficial to set NUMCPUVPS to a value greater than one: for a

system with < 4 CPUs, set NUMCPUVPS to the number of CPUs; for a system with > 4 CPUs, set

NUMCPUVPS to the number of CPUs - 1 (or something less if you do not want Informix to consume all

of the resources in the machine).

Performance Tip: Find the minimal setting for NUMCPUVPS at which performance is acceptable

without negatively impacting other applications on the system.
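
The rule of thumb above can be written down as a small helper for picking a starting value. This is only a sketch: the function name and the reserve argument are hypothetical, and because the article's rule covers "< 4" and "> 4" CPUs, treating exactly 4 CPUs like the larger case is my assumption.

```python
def suggest_numcpuvps(physical_cpus, reserve=0):
    """Return a starting value for NUMCPUVPS from the rule of thumb.

    physical_cpus: number of real CPUs in the machine.
    reserve: extra CPUs to leave for other applications (hypothetical knob).
    """
    if physical_cpus < 1:
        raise ValueError("physical_cpus must be at least 1")
    if reserve < 0:
        raise ValueError("reserve must not be negative")

    if physical_cpus < 4:
        base = physical_cpus
    else:
        # Assumption: exactly 4 CPUs is handled like the "> 4" case.
        base = physical_cpus - 1

    # Never suggest fewer than one CPU virtual processor.
    return max(1, base - reserve)


if __name__ == "__main__":
    for cpus in (1, 2, 8):
        print(cpus, "CPUs ->", suggest_numcpuvps(cpus))
```

Treat the result as the first guess in the iterative loop described earlier, then monitor and adjust.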

NUMAIOVPS This parameter is similar to NUMCPUVPS, but is used only when the OS kernel does not

support asynchronous I/O. AIO VPS process the I/O requests issued by Informix, which are presented in a queue.

The objective is to keep the queue as short as possible. The queue can be monitored with the onstat -g ioq

command.

Performance Tip: Find the point where the queue is minimized with the minimal number of

AIO VPS enabled.

NETTYPE The NETTYPE parameter controls how connections to the database are supported. A given

poll thread can support up to 1024 connections, although a limit of 300 is recommended. When your client

program is running on the same machine as the database server, it is best to set the NETTYPE parameter

for the local connection to a shared memory type connection. This is because it is much faster to transfer

data between the server and the client program through shared memory than it is to transfer data through a

socket. The specification of a NETTYPE parameter is as follows:

NETTYPE connection_type, poll_threads, connections, vp class

The connection type can be onipcshm for shared memory, onsoctcp for a TCP socket, or ontlitcp for TCP over

TLI (Transport Layer Interface). Other than any OS level performance advantages, there are no distinct

advantages of using TLI over sockets.

The poll_threads parameter specifies how many threads are started in the server to support connection

requests. If we limit the number of connections per thread to 300, and we have to support 1000 users

concurrently, we would want to set poll_threads to 4, to ensure there were adequate service levels in the

server.

The connections parameter limits how many simultaneous connections can be handled per poll thread.

The upper limit is 1024, but Informix recommends 300 on uni-processor systems, and 350 on

multi-processor systems.

The vp class value should be set to NET on a multi-processor system, and CPU on a uni-processor

system. By setting the vp class to NET, all connection service processing is performed in a process that is

dedicated to network services, so that this processing will not interfere with the disk I/O or other query

processing.

Multiple NETTYPE parameters can be specified in the $ONCONFIG file, so that multiple connection

types can be supported simultaneously.

Performance Tip: Set the number of poll threads so that there is an adequate number of

threads to support the number of users that are simultaneously trying to access the database. Do

not over-specify the number, as it will waste memory and CPU bandwidth.
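
The poll thread arithmetic from the 1000-user example is easy to script. The sketch below rounds the user count up to whole poll threads and assembles a NETTYPE entry in the field order given above. The function names are hypothetical, and the exact punctuation the server accepts (for example, whether spaces are allowed after the commas) is an assumption you should check against your server documentation.

```python
import math


def poll_threads_needed(concurrent_users, connections_per_thread=300):
    """Number of poll threads needed to cover the concurrent users."""
    if concurrent_users < 0:
        raise ValueError("concurrent_users must not be negative")
    if not 1 <= connections_per_thread <= 1024:
        raise ValueError("connections_per_thread must be between 1 and 1024")
    # Round up: a partly used poll thread is still a whole thread.
    return max(1, math.ceil(concurrent_users / connections_per_thread))


def nettype_line(connection_type, concurrent_users,
                 connections_per_thread=300, vp_class="NET"):
    """Build a NETTYPE entry: connection type, poll threads, connections, vp class."""
    threads = poll_threads_needed(concurrent_users, connections_per_thread)
    # Assumption: fields are separated by commas with no spaces.
    return "NETTYPE {},{},{},{}".format(
        connection_type, threads, connections_per_thread, vp_class)


if __name__ == "__main__":
    # The article's example: 1000 concurrent users, 300 connections per thread.
    print(nettype_line("onsoctcp", 1000))
```

For the article's example this yields 4 poll threads, matching the figure worked out by hand.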

BUFFERS The BUFFERS parameter controls how many memory buffers are available in the server to

cache data pages with. If insufficient memory buffers are available, data has to be flushed out of used

buffers to disk to provide service to new requests. This same data will have to be read back in later when

the data is needed. It is generally recommended that BUFFERS be set such that 25% of the available

memory in the system is allocated to the server (BUFFERS * PAGESIZE). The problem with setting

BUFFERS too high is that system memory is wasted, and this could cause paging.

Performance Tip: An indication that the BUFFERS setting is too small is a low read-cache value.

The read-cache value can be observed with the onstat -p command. It represents the percentage of

database pages already in memory when the buffer is requested by a query. When the value is low,

increase BUFFERS until the cache rate is maximized without causing paging activity in the OS (see

the vmstat or sar operating system commands for instruction on how to monitor paging activity).
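
As a starting point for that iteration, the 25% guideline (BUFFERS * PAGESIZE equal to a quarter of system memory) can be turned into a buffer count. This is a sketch only: the function name is hypothetical, and the memory and page sizes in the usage lines are made-up illustration values, not recommendations.

```python
def suggest_buffers(system_memory_bytes, page_size_bytes, fraction=0.25):
    """Return a BUFFERS value so that BUFFERS * page size is about
    `fraction` of system memory (25% by default, per the guideline)."""
    if system_memory_bytes <= 0 or page_size_bytes <= 0:
        raise ValueError("memory and page size must be positive")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in the range (0, 1]")
    # Round down so the buffer pool never exceeds the chosen share.
    return int(system_memory_bytes * fraction) // page_size_bytes


if __name__ == "__main__":
    # Illustration values: 512 MB of memory, 2 KB pages.
    memory = 512 * 1024 * 1024
    page_size = 2048
    print("BUFFERS", suggest_buffers(memory, page_size))
```

From there, raise or lower the value based on the read-cache percentage and on what vmstat or sar report about paging.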

PHYSBUFF This parameter sets the size of the 2 buffers that are used for caching pages which have

changed, prior to being flushed to disk. The less often it is filled, the less often a write will occur. The size

should be specified as a multiple of the system page size.

Performance Tip: The first instinct is to size this as large as shared memory will allow.

However, you should consider the impact of losing the information in these memory buffers if a

system crashes. If you make this buffer very large, you could lose hundreds of transactions!

LOGBUFF The LOGBUFF parameter allocates shared memory for each of three buffers which hold

logical log records until they are flushed to the logical log file on disk. You can reduce the number of

times that disk I/O is started to flush logs by increasing this parameter to a maximum size equal to

LOGSIZE.

Performance Tip: The memory allocated for the buffer is taken from shared memory, so you

should evaluate whether allocating 3*LOGBUFF bytes from shared memory might cause

swapping on the system.

LOGSIZE The major factor that governs how large a logical log file should be is the need to ensure that

adequate log space is available for long transactions. Smaller logs cause checkpoints to occur more

frequently, which may be a disadvantage to performance. However, before making the counter move to

increase the size of logs, you should consider the effect on reliability caused by less frequent back-up of

logs to tape. When you use continuous logging, the logs are copied to tape when they fill up. You will be

at risk of not having tape backup for the length of time that it takes to fill up a log.

Performance Tip: To gain the performance advantage of using large logs without overly

long spans between backups to tape, use a cron job to run the onmode -l command at some

reasonable interval.

DBSPACETEMP This parameter specifies one or more dbspaces that the server will use for temporary

tables and sort files. By specifying multiple dbspaces, the server will use parallel insert capability to

fragment the temporary tables across the dbspaces.

Performance Tip: If you do not specify this parameter, the server will perform sorting and

temp table storage in the root dbspace. This should be avoided if possible. If you are mirroring

the root dbspace, you pay a double penalty in performance.

RA_PAGES The number of pages of data that the server will read when performing sequential scans is

set by this parameter. Reading more pages per I/O operation has a beneficial impact on performance

during serial scans. However, the pages compete for buffers such that page cleaning may be necessary to

find free buffers. If all of the pages being read in are not needed, then the page cleaning is a waste.

This is great theory, but how do you manage it practically? How should you set reasonable values to get

the value of read ahead without causing unnecessary page cleaning? Informix recommends the following

calculation:

RA_PAGES = (BUFFERS * fract) / (2 * queries) + 2

RA_THRESHOLD = (BUFFERS * fract) / (2 * queries) - 2

fract is the fraction of BUFFERS to be used for read ahead. If you want to use 50% of available buffers

for read ahead, you would set fract to 0.5.

queries is the number of concurrent queries that will support this read-ahead logic.

The server is triggered to read RA_PAGES on a serial scan, when the number of unprocessed pages in

memory falls to the value set by RA_THRESHOLD.
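
To see what the two formulas produce for a given buffer pool, here is a small sketch that evaluates them. The function name is hypothetical, and truncating the shared term to a whole number of pages is my assumption, since the formulas do not say how to round.

```python
def read_ahead_settings(buffers, fract, queries):
    """Evaluate the RA_PAGES and RA_THRESHOLD formulas.

    buffers: the BUFFERS setting.
    fract:   fraction of BUFFERS to use for read ahead (0.5 means 50%).
    queries: number of concurrent queries relying on read ahead.
    Returns the pair (ra_pages, ra_threshold).
    """
    if buffers <= 0 or queries < 1:
        raise ValueError("buffers must be positive and queries at least 1")
    if not 0 < fract <= 1:
        raise ValueError("fract must be in the range (0, 1]")

    # Shared term of both formulas; truncation to whole pages is an assumption.
    base = int((buffers * fract) / (2 * queries))
    ra_pages = base + 2
    ra_threshold = base - 2
    return ra_pages, ra_threshold


if __name__ == "__main__":
    # Illustration values only: 2000 buffers, half for read ahead, 10 queries.
    pages, threshold = read_ahead_settings(2000, 0.5, 10)
    print("RA_PAGES", pages)
    print("RA_THRESHOLD", threshold)
```

With these illustration values the shared term is 50, so RA_PAGES comes out at 52 and RA_THRESHOLD at 48. Note that a very small buffer pool or a large query count can push the threshold to zero or below, which is a sign the inputs need rethinking.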

If your application performs a high number of queries that use filtering conditions with wild cards or

ranges, you will be performing numerous serial scans. If you have updates occurring simultaneously, you

will see the write cache rates drop if you have a higher value for RA_PAGES.

If your application is doing a lot of serial scanning but limited updating, a higher value of RA_PAGES will

increase the I/O performance of the reads.

Performance Tip: If the read speeds are close to the disks' upper-end capacity, then you are

not experiencing the problem these parameters are trying to solve.

LRUS This defines the number of buffer queues that feed the page cleaners. Dirty pages that need to be

flushed to disk are placed on this queue. The onstat -R command will show the percentage of dirty pages

on the queues. If the number of pages on the queue exceeds LRU_MAX_DIRTY, there are either too few

LRU queues, or there are too few page cleaners. See the comments in the CLEANERS section to evaluate

whether you have adequate cleaners.

Performance Tip: All pages are flushed to disk during a checkpoint. By setting

LRU_MAX_DIRTY, the page cleaners will flush dirty buffers between checkpoints, so the

checkpoint will have less work to do. When all cleaning is left to the checkpoint, the system often

appears to have halted, which is annoying to users.

CLEANERS This controls the number of threads dedicated to page cleaning. If you have fewer than 20

disks, allocate one cleaner per disk. Between 20 and 100 disks, use 1 cleaner for every 2 disks. This can

be done because the demand per disk drops to a level that a single thread can adequately service two

disks. For greater than 100 disks, use 1 cleaner for every 4 disks.
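
The three tiers above fit in a few lines of code. This is a sketch with a hypothetical function name, and rounding up when the disk count does not divide evenly is my assumption, since the rule does not cover odd counts.

```python
import math


def suggest_cleaners(disks):
    """Return a CLEANERS value from the disk-count tiers.

    Fewer than 20 disks: one cleaner per disk.
    20 to 100 disks:     one cleaner for every 2 disks.
    More than 100 disks: one cleaner for every 4 disks.
    """
    if disks < 1:
        raise ValueError("disks must be at least 1")
    if disks < 20:
        return disks
    if disks <= 100:
        # Assumption: round up when the count is odd.
        return math.ceil(disks / 2)
    return math.ceil(disks / 4)


if __name__ == "__main__":
    for count in (8, 40, 120):
        print(count, "disks ->", suggest_cleaners(count), "cleaners")
```

As with the other parameters, use the result as a first setting and confirm it against the dirty-page percentages that onstat -R reports.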

Summary

The parameters discussed in this article set the stage for you to optimize the performance levels you can

get out of the Informix database server. In the next installment of this article, I will discuss how to use

Informix and OS utilities to monitor the activity in the server, and use the results to make better decisions

about the values that should be set in these parameters.
