Example of Hessenberg Reduction

Uploaded by Mohammad Umar Rehman

Eigenvalues

In addition to solving linear equations, another important task of linear algebra is finding eigenvalues.

Let $F$ be some operator and $x$ a vector. If $F$ does not change the direction of the vector $x$, then $x$ is an eigenvector of the operator, satisfying the equation

$$F(x) = \lambda x, \tag{1}$$

where $\lambda$ is a real or complex number, the eigenvalue corresponding to the eigenvector. Thus the operator only changes the length of the vector by a factor given by the eigenvalue.

If $F$ is a linear operator, $F(ax) = aF(x) = a\lambda x = \lambda(ax)$, and hence $ax$ is an eigenvector, too. Eigenvectors are therefore not uniquely determined, since they can be multiplied by any nonzero constant.

In the following only eigenvalues of matrices are discussed. A matrix can be considered an operator mapping a vector to another one. For eigenvectors this mapping is a mere scaling.

Let $A$ be a square matrix. If there is a real or complex number $\lambda$ and a nonzero vector $x$ such that

$$Ax = \lambda x, \tag{2}$$

then $\lambda$ is an eigenvalue of the matrix and $x$ an eigenvector. Equation (2) can also be written as

$$(A - \lambda I)x = 0.$$

If the equation is to have a nontrivial solution, we must have

$$\det(A - \lambda I) = 0. \tag{3}$$

When the determinant is expanded, we get the characteristic polynomial, the zeros of which are the eigenvalues.

If the matrix is symmetric and real valued, the eigenvalues are real. Otherwise at least some of them may be complex. The complex eigenvalues of a real matrix always appear as pairs of complex conjugates.

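For example, the characteristic polynomial of the matrix

$$\begin{pmatrix} 1 & 4 \\ 1 & 1 \end{pmatrix}$$

is

$$\det\begin{pmatrix} 1-\lambda & 4 \\ 1 & 1-\lambda \end{pmatrix} = \lambda^2 - 2\lambda - 3.$$

The zeros of this are the eigenvalues $\lambda_1 = 3$ and $\lambda_2 = -1$.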
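The small example above can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the variable names are illustrative only. It compares the zeros of the characteristic polynomial with the eigenvalues computed directly from the matrix.

```python
import numpy as np

# The 2x2 example matrix from the text.
A = np.array([[1.0, 4.0],
              [1.0, 1.0]])

# Coefficients of the characteristic polynomial lambda^2 - 2*lambda - 3,
# listed from the highest power to the constant term.
char_poly = [1.0, -2.0, -3.0]

# Zeros of the polynomial and eigenvalues computed directly from A,
# both sorted in ascending order for comparison.
roots = np.sort(np.roots(char_poly).real)
eigenvalues = np.sort(np.linalg.eigvals(A).real)

print(roots)
print(eigenvalues)
```

Both printed arrays should agree up to rounding, which is what equation (3) predicts.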

Finding eigenvalues using the characteristic polynomial is very laborious if the matrix is big. Even determining the coefficients of the polynomial is difficult. The method is not suitable for numerical calculations.

To find eigenvalues the matrix must be transformed to a more suitable form. Gaussian elimination would transform it into a triangular matrix, but unfortunately the eigenvalues are not conserved in the transform.

Another kind of transform, called a similarity transform, will not affect the eigenvalues. Similarity transforms have the form

$$A \rightarrow A' = S^{-1} A S,$$

where $S$ is any nonsingular matrix. The matrices $A$ and $A'$ are called similar.
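That similar matrices share their eigenvalues follows from the characteristic equation (3). A short derivation, using only the product rule for determinants and $\det(S^{-1})\det(S) = 1$:

$$
\det(A' - \lambda I) = \det\left(S^{-1} A S - \lambda S^{-1} S\right) = \det\left(S^{-1}(A - \lambda I)S\right) = \det(S^{-1})\,\det(A - \lambda I)\,\det(S) = \det(A - \lambda I).
$$

The two matrices therefore have the same characteristic polynomial and hence the same eigenvalues.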

QR decomposition

A commonly used method for finding eigenvalues is known as the QR method. The method is an iteration that repeatedly computes a decomposition of the matrix known as its QR decomposition. The decomposition is obtained in a finite number of steps, and it has some other uses, too. We'll first see how to compute this decomposition.

The QR decomposition of a matrix $A$ is

$$A = QR,$$

where $Q$ is an orthogonal matrix and $R$ an upper triangular matrix. This decomposition is possible for all matrices.

There are several methods for finding the decomposition:

1) Householder transform
2) Givens rotations
3) Gram-Schmidt orthogonalisation

In the following we discuss the first two methods with examples. They will probably be easier to understand than the formal algorithm.

Householder transform

The idea of the Householder transform is to find a set of transforms that will make all elements in one column below the diagonal vanish.

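Assume that we have to decompose a matrix

$$A = \begin{pmatrix} 3 & 2 & 1 \\ 1 & 1 & 2 \\ 2 & 1 & 3 \end{pmatrix}.$$

We begin by taking the first column of this,

$$x_1 = a(:, 1) = \begin{pmatrix} 3 \\ 1 \\ 2 \end{pmatrix},$$

and compute the vector

$$u_1 = x_1 - \|x_1\| \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.7416574 \\ 1 \\ 2 \end{pmatrix}.$$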

This is used to create a Householder transformation matrix

$$P_1 = I - 2\,\frac{u_1 u_1^T}{\|u_1\|^2} = \begin{pmatrix} 0.8017837 & 0.2672612 & 0.5345225 \\ 0.2672612 & 0.6396433 & -0.7207135 \\ 0.5345225 & -0.7207135 & -0.4414270 \end{pmatrix}.$$

It can be shown that this is an orthogonal matrix. It is easy to check this by calculating the scalar product of any two different columns. The products are zero, and thus the column vectors of the matrix are mutually orthogonal; each column also has unit length.
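Orthogonality can also be verified directly from the definition. A short derivation, writing $P = I - 2uu^T/\|u\|^2$ for any nonzero vector $u$ and using the fact that $P$ is symmetric:

$$
P^T P = \left(I - \frac{2uu^T}{\|u\|^2}\right)^2 = I - \frac{4uu^T}{\|u\|^2} + \frac{4u(u^T u)u^T}{\|u\|^4} = I,
$$

because $u^T u = \|u\|^2$. Thus $P^{-1} = P^T = P$.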

When the original matrix is multiplied by this transform the result is a matrix with zeros in the first column below the diagonal:

$$A_1 = P_1 A = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4534522 & -0.6155927 \\ 0 & -0.0930955 & -2.2311854 \end{pmatrix}.$$

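Then we use the second column of $A_1$ to create a vector from its elements on and below the diagonal,

$$x_2 = a_1(2:3, 2) = \begin{pmatrix} 0.4534522 \\ -0.0930955 \end{pmatrix},$$

from which

$$u_2 = x_2 - \|x_2\| \begin{pmatrix} 1 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.0094578 \\ -0.0930955 \end{pmatrix}.$$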

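This will give the second transformation matrix, where $u_2$ is extended by a leading zero to three components:

$$P_2 = I - 2\,\frac{u_2 u_2^T}{\|u_2\|^2} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0.9795688 & -0.2011093 \\ 0 & -0.2011093 & -0.9795688 \end{pmatrix}.$$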

The product of $A_1$ and the transformation matrix will be a matrix with zeros in the second column below the diagonal:

$$A_2 = P_2 A_1 = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4629100 & -0.1543033 \\ 0 & 0 & 2.3094011 \end{pmatrix}.$$

Thus the matrix has been transformed to an upper triangular matrix. If the matrix is bigger, repeat the same procedure for each column until all the elements below the diagonal vanish.

The matrices of the decomposition are now obtained as

$$Q = P_1 P_2 = \begin{pmatrix} 0.8017837 & 0.1543033 & -0.5773503 \\ 0.2672612 & 0.7715167 & 0.5773503 \\ 0.5345225 & -0.6172134 & 0.5773503 \end{pmatrix},$$

$$R = A_2 = P_2 P_1 A = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4629100 & -0.1543033 \\ 0 & 0 & 2.3094011 \end{pmatrix}.$$

The matrix $R$ is in fact the $A_k$ calculated in the last transformation; thus the original matrix $A$ is not needed. If memory must be saved, each of the matrices $A_i$ can be stored in the area of the previous one. Also, there is no need to keep the earlier matrices $P_i$: $P_1$ is used as the initial value of $Q$, and at each step $Q$ is multiplied on the right by the new transformation matrix $P_i$.

As a check, we can calculate the product of the factors of the decomposition to see that we recover the original matrix:

$$QR = \begin{pmatrix} 3 & 2 & 1 \\ 1 & 1 & 2 \\ 2 & 1 & 3 \end{pmatrix}.$$

Orthogonality of the matrix $Q$ can be seen e.g. by calculating the product $QQ^T$:

$$QQ^T = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}.$$

In the general case the matrices of the decomposition are

$$Q = P_1 P_2 \cdots P_n,$$

$$R = P_n \cdots P_2 P_1 A.$$
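The procedure of this section can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy and a square real matrix; the function name `householder_qr` is hypothetical. It follows the sign convention used in the text, $u = x - \|x\| e_1$, whereas library routines typically choose the sign so as to avoid cancellation.

```python
import numpy as np

def householder_qr(A):
    """QR decomposition of a square matrix by Householder transforms.

    Uses u = x - ||x|| e_1 as in the text (illustrative sketch only).
    """
    R = np.array(A, dtype=float)
    n = R.shape[0]
    Q = np.eye(n)
    for k in range(n - 1):
        # Elements of column k on and below the diagonal.
        x = R[k:, k]
        u = x.copy()
        u[0] -= np.linalg.norm(x)
        norm_sq = u @ u
        if norm_sq < 1e-30:
            continue  # column already has zeros below the diagonal
        # Embed the small reflection into an n x n identity matrix.
        P = np.eye(n)
        P[k:, k:] -= 2.0 * np.outer(u, u) / norm_sq
        R = P @ R   # A_k = P_k A_{k-1}
        Q = Q @ P   # Q = P_1 P_2 ... P_k
    return Q, R

A = np.array([[3.0, 2.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])
Q, R = householder_qr(A)
print(np.round(Q, 7))
print(np.round(R, 7))
```

Forming each $P_k$ as a full matrix keeps the sketch close to the text; an efficient implementation would apply the reflection to the affected rows without building $P_k$ explicitly.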

Givens rotations

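Another commonly used method is based on Givens rotation matrices:

$$P_{kl}(\theta) = \begin{pmatrix} 1 & & & & \\ & \cos\theta & \cdots & \sin\theta & \\ & \vdots & 1 & \vdots & \\ & -\sin\theta & \cdots & \cos\theta & \\ & & & & 1 \end{pmatrix},$$

where the entries $\cos\theta$ and $\pm\sin\theta$ lie in rows and columns $k$ and $l$, and the rest of the matrix is the identity.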

This is an orthogonal matrix.
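A QR decomposition based on such rotations can be sketched as follows. This is an illustrative sketch, assuming NumPy and a square real matrix; the function name `givens_qr` and the placement of the minus sign (on the lower-left sine entry) are assumptions. Each rotation acts on two rows and is chosen so that one element below the diagonal becomes zero.

```python
import numpy as np

def givens_qr(A):
    """QR decomposition of a square matrix by Givens rotations (sketch)."""
    R = np.array(A, dtype=float)
    n = R.shape[0]
    Q = np.eye(n)
    for col in range(n - 1):
        for row in range(col + 1, n):
            a, b = R[col, col], R[row, col]
            r = np.hypot(a, b)
            if r == 0.0:
                continue  # nothing to eliminate
            c, s = a / r, b / r  # cos(theta) and sin(theta)
            # Rotation in the (col, row) plane; identity elsewhere.
            G = np.eye(n)
            G[col, col] = c
            G[col, row] = s
            G[row, col] = -s
            G[row, row] = c
            R = G @ R     # zeroes the element R[row, col]
            Q = Q @ G.T   # accumulate Q so that A = Q R
    return Q, R

A = np.array([[3.0, 2.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])
Q, R = givens_qr(A)
print(np.round(R, 7))
```

Since every rotation touches only two rows, this approach is convenient when only a few elements have to be zeroed, as in a Hessenberg or tridiagonal matrix.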

Finding the eigenvalues

Eigenvalues can be found iteratively using the QR algorithm, which uses the QR decomposition described above. If we started with the original matrix, the task would be computationally very time consuming. Therefore we start by transforming the matrix to a more suitable form.

A square matrix is in block triangular form if it is

$$\begin{pmatrix} T_{11} & T_{12} & T_{13} & \cdots & T_{1n} \\ 0 & T_{22} & T_{23} & \cdots & T_{2n} \\ 0 & 0 & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & T_{nn} \end{pmatrix},$$

where the diagonal blocks $T_{ii}$ are square matrices. It can be shown that the eigenvalues of such a matrix are the eigenvalues of the diagonal blocks $T_{ii}$.
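The statement about the diagonal blocks can be illustrated numerically. In the sketch below, which assumes NumPy, the block entries are hypothetical values chosen only for the demonstration; they do not come from the text.

```python
import numpy as np

# Hypothetical blocks: a 2x2 block, a 1x1 block and an arbitrary coupling block.
T11 = np.array([[2.0, 1.0],
                [1.0, 3.0]])
T22 = np.array([[5.0]])
T12 = np.array([[7.0],
                [-4.0]])

# Assemble the block upper triangular matrix [[T11, T12], [0, T22]].
T = np.block([[T11, T12],
              [np.zeros((1, 2)), T22]])

# Eigenvalues of the whole matrix versus those of the diagonal blocks.
whole = np.sort(np.linalg.eigvals(T).real)
blocks = np.sort(np.concatenate([np.linalg.eigvals(T11).real,
                                 np.linalg.eigvals(T22).real]))
print(whole)
print(blocks)
```

The off-diagonal block `T12` has no influence on the eigenvalues, so the two printed arrays should agree up to rounding.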

If the matrix is a diagonal or triangular matrix, the eigenvalues are the diagonal elements. If such a form can be found, the problem is solved. Usually such a form cannot be obtained by a finite number of similarity transformations.

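If the original matrix is symmetric, it can be transformed to a tridiagonal form without affecting its eigenvalues. In the case of a general matrix the result is a Hessenberg matrix, which has the form

$$H = \begin{pmatrix} \times & \times & \times & \times & \times \\ \times & \times & \times & \times & \times \\ 0 & \times & \times & \times & \times \\ 0 & 0 & \times & \times & \times \\ 0 & 0 & 0 & \times & \times \end{pmatrix}.$$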

The transformations required can be accomplished with Householder transforms or Givens rotations. The method is now slightly modified so that the elements immediately below the diagonal are not zeroed.

Transformation using the Householder transforms

As a first example, consider a symmetric matrix

$$A = \begin{pmatrix} 4 & 3 & 2 & 1 \\ 3 & 4 & -1 & 2 \\ 2 & -1 & 1 & -2 \\ 1 & 2 & -2 & 2 \end{pmatrix}.$$

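We begin to transform this using the Householder transform. Now we construct a vector $x_1$ by taking only the elements of the first column that are below the diagonal:

$$x_1 = \begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix}.$$

Using these, form the vector

$$u_1 = x_1 - \|x_1\| \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.7416574 \\ 2 \\ 1 \end{pmatrix}$$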

and from this the matrix

$$p_1 = I - 2\,\frac{u_1 u_1^T}{\|u_1\|^2} = \begin{pmatrix} 0.8017837 & 0.5345225 & 0.2672612 \\ 0.5345225 & -0.4414270 & -0.7207135 \\ 0.2672612 & -0.7207135 & 0.6396433 \end{pmatrix}$$

and finally the Householder transformation matrix

$$P_1 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 0.8017837 & 0.5345225 & 0.2672612 \\ 0 & 0.5345225 & -0.4414270 & -0.7207135 \\ 0 & 0.2672612 & -0.7207135 & 0.6396433 \end{pmatrix}.$$

Now we can make the similarity transform of the matrix $A$. The transformation matrix is symmetric and orthogonal, so it is its own inverse and there is no need for transposing it:

$$A_1 = P_1 A P_1 = \begin{pmatrix} 4 & 3.7416574 & 0 & 0 \\ 3.7416574 & 2.4285714 & 1.2977396 & 2.1188066 \\ 0 & 1.2977396 & 0.0349563 & 0.2952113 \\ 0 & 2.1188066 & 0.2952113 & 4.5364723 \end{pmatrix}.$$

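The second column is handled in the same way. First we form the vector

$$x_2 = \begin{pmatrix} 1.2977396 \\ 2.1188066 \end{pmatrix}$$

and from this

$$u_2 = x_2 - \|x_2\| \begin{pmatrix} 1 \\ 0 \end{pmatrix} = \begin{pmatrix} -1.1869072 \\ 2.1188066 \end{pmatrix}$$

and

$$p_2 = \begin{pmatrix} 0.5223034 & 0.8527597 \\ 0.8527597 & -0.5223034 \end{pmatrix}$$

and the final transformation matrix

$$P_2 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0.5223034 & 0.8527597 \\ 0 & 0 & 0.8527597 & -0.5223034 \end{pmatrix}.$$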

Making the transform we get

$$A_2 = P_2 A_1 P_2 = \begin{pmatrix} 4 & 3.7416574 & 0 & 0 \\ 3.7416574 & 2.4285714 & 2.4846467 & 0 \\ 0 & 2.4846467 & 3.5714286 & -1.8708287 \\ 0 & 0 & -1.8708287 & 1 \end{pmatrix}.$$

Thus we obtained a tridiagonal matrix, as we should in the case of a symmetric matrix.

As another example, we take an asymmetric matrix:

$$A = \begin{pmatrix} 4 & 2 & 3 & 1 \\ 3 & 4 & -2 & 1 \\ 2 & -1 & 1 & -2 \\ 1 & 2 & -2 & 2 \end{pmatrix}.$$

The transformation proceeds as before, and the result is

$$A_2 = \begin{pmatrix} 4 & 3.4743961 & -1.3039935 & -0.4776738 \\ 3.7416574 & 1.7857143 & 2.9123481 & 1.1216168 \\ 0 & 2.0934787 & 4.2422252 & -1.0307759 \\ 0 & 0 & -1.2980371 & 0.9720605 \end{pmatrix},$$

which is of the Hessenberg form.
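The reduction described in this section can be sketched as a short Python function. This is a minimal illustration, assuming NumPy; the function name `hessenberg_householder` is hypothetical, and the signs of the entries of the example matrix are an assumption chosen to be consistent with the numerical values printed in the text.

```python
import numpy as np

def hessenberg_householder(A):
    """Reduce a square matrix to Hessenberg form by Householder
    similarity transforms, using u = x - ||x|| e_1 (sketch only)."""
    H = np.array(A, dtype=float)
    n = H.shape[0]
    for k in range(n - 2):
        # Elements of column k strictly below the diagonal.
        x = H[k + 1:, k]
        u = x.copy()
        u[0] -= np.linalg.norm(x)
        norm_sq = u @ u
        if norm_sq < 1e-30:
            continue  # column already in Hessenberg form
        P = np.eye(n)
        P[k + 1:, k + 1:] -= 2.0 * np.outer(u, u) / norm_sq
        # P is symmetric and orthogonal, so P^{-1} = P.
        H = P @ H @ P
    return H

# Symmetric example; the signs are assumed (see the note above).
A_sym = np.array([[4.0,  3.0,  2.0,  1.0],
                  [3.0,  4.0, -1.0,  2.0],
                  [2.0, -1.0,  1.0, -2.0],
                  [1.0,  2.0, -2.0,  2.0]])
T = hessenberg_householder(A_sym)
print(np.round(T, 7))
```

For a symmetric input the result is tridiagonal, and in either case the eigenvalues are unchanged because every step is a similarity transform.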

QR-algorithm

We now have a simpler tridiagonal or Hessenberg matrix, which still has the same eigenvalues as the original matrix. Let this transformed matrix be $H$. Then we can begin to search for the eigenvalues. This is done by iteration.

As an initial value, take $A_1 = H$. Then repeat the following steps:

1) Find the QR decomposition $Q_i R_i = A_i$.
2) Calculate a new matrix $A_{i+1} = R_i Q_i$.

The matrix $Q$ of the QR decomposition is orthogonal and $A = QR$, and so $R = Q^T A$. Therefore

$$RQ = Q^T A Q$$

is a similarity transform that will conserve the eigenvalues.

The sequence of matrices $A_i$ converges towards an upper triangular or block triangular matrix, from which the eigenvalues can be picked up.

In the last example the limiting matrix after 50 iterations is

$$\begin{pmatrix} 7.0363389 & 0.7523758 & 0.7356716 & 0.3802631 \\ 4.265 \times 10^{-8} & 4.9650342 & 0.8892339 & 0.4538061 \\ 0 & 0 & -1.9732687 & 1.3234202 \\ 0 & 0 & 0 & 0.9718955 \end{pmatrix}.$$

The diagonal elements are now the eigenvalues. If some of the eigenvalues are complex numbers, the limiting matrix is a block triangular matrix, and the complex eigenvalues are the eigenvalues of the diagonal $2 \times 2$ submatrices.
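The iteration can be sketched with NumPy's built-in QR decomposition. This is an illustrative sketch only: the function name `qr_algorithm` is hypothetical, the signs of the matrix entries are an assumption chosen to be consistent with the numbers printed in the text, and the iteration is started from the matrix itself rather than from its Hessenberg form, which affects the cost per step but not the eigenvalues.

```python
import numpy as np

def qr_algorithm(H, iterations=50):
    """Unshifted QR iteration: A_{i+1} = R_i Q_i with A_i = Q_i R_i."""
    A = np.array(H, dtype=float)
    for _ in range(iterations):
        Q, R = np.linalg.qr(A)  # QR decomposition of the current matrix
        A = R @ Q               # similarity transform Q^T A Q
    return A

# Asymmetric example; the signs are assumed (see the note above).
A = np.array([[4.0,  2.0,  3.0,  1.0],
              [3.0,  4.0, -2.0,  1.0],
              [2.0, -1.0,  1.0, -2.0],
              [1.0,  2.0, -2.0,  2.0]])

limit = qr_algorithm(A, iterations=200)
print(np.round(np.diag(limit), 7))            # approximate eigenvalues
print(np.sort(np.linalg.eigvals(A).real))     # reference values from NumPy
```

This plain iteration converges slowly when two eigenvalues have nearly equal absolute values; practical implementations add shifts and deflation, which are outside the scope of this sketch.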

Potrebbero piacerti anche

- QR Decomposition ExplainedDocumento12 pagineQR Decomposition ExplainedSakıp Mehmet Küçük0% (1)

- Differential Manifolds ExplainedDocumento73 pagineDifferential Manifolds ExplainedKirti Deo MishraNessuna valutazione finora

- Numerical Analysis SolutionDocumento19 pagineNumerical Analysis SolutionPradip AdhikariNessuna valutazione finora

- MATH2045: Vector Calculus & Complex Variable TheoryDocumento50 pagineMATH2045: Vector Calculus & Complex Variable TheoryAnonymous 8nJXGPKnuW100% (2)

- Sylvester Criterion For Positive DefinitenessDocumento4 pagineSylvester Criterion For Positive DefinitenessArlette100% (1)

- 8.1 Convergence of Infinite ProductsDocumento25 pagine8.1 Convergence of Infinite ProductsSanjeev Shukla100% (1)

- Oscillation of Nonlinear Neutral Delay Differential Equations PDFDocumento20 pagineOscillation of Nonlinear Neutral Delay Differential Equations PDFKulin DaveNessuna valutazione finora

- Optimization Class Notes MTH-9842Documento25 pagineOptimization Class Notes MTH-9842felix.apfaltrer7766Nessuna valutazione finora

- Abstract Algebra Assignment SolutionDocumento12 pagineAbstract Algebra Assignment SolutionStudy With MohitNessuna valutazione finora

- Lucio Boccardo, Gisella Croce - Elliptic Partial Differential Equations-De Gruyter (2013)Documento204 pagineLucio Boccardo, Gisella Croce - Elliptic Partial Differential Equations-De Gruyter (2013)Amanda Clara ArrudaNessuna valutazione finora

- Duhamel PrincipleDocumento2 pagineDuhamel PrincipleArshpreet SinghNessuna valutazione finora

- Functional Analysis Week03 PDFDocumento16 pagineFunctional Analysis Week03 PDFGustavo Espínola MenaNessuna valutazione finora

- Differential Geometry 2009-2010Documento45 pagineDifferential Geometry 2009-2010Eric ParkerNessuna valutazione finora

- The Hopf Bifurcation: Parameter Changes Causing Dynamical System TransitionsDocumento5 pagineThe Hopf Bifurcation: Parameter Changes Causing Dynamical System Transitionsghouti ghouti100% (1)

- Sturm's Separation and Comparison TheoremsDocumento4 pagineSturm's Separation and Comparison TheoremsLavesh GuptaNessuna valutazione finora

- HumphreysDocumento14 pagineHumphreysjakeadams333Nessuna valutazione finora

- (Lecture Notes) Andrei Jorza-Math 5c - Introduction To Abstract Algebra, Spring 2012-2013 - Solutions To Some Problems in Dummit & Foote (2013)Documento30 pagine(Lecture Notes) Andrei Jorza-Math 5c - Introduction To Abstract Algebra, Spring 2012-2013 - Solutions To Some Problems in Dummit & Foote (2013)momNessuna valutazione finora

- Pde CaracteristicasDocumento26 paginePde CaracteristicasAnonymous Vd26Pzpx80Nessuna valutazione finora

- Functions of Bounded VariationDocumento30 pagineFunctions of Bounded VariationSee Keong Lee100% (1)

- hw10 (Do Carmo p.260 Q1 - Sol) PDFDocumento2 paginehw10 (Do Carmo p.260 Q1 - Sol) PDFjulianli0220Nessuna valutazione finora

- Chapter 3 Solution.. KreyszigDocumento78 pagineChapter 3 Solution.. KreyszigBladimir BlancoNessuna valutazione finora

- Banach SpaceDocumento13 pagineBanach SpaceAkselNessuna valutazione finora

- Analysis2011 PDFDocumento235 pagineAnalysis2011 PDFMirica Mihai AntonioNessuna valutazione finora

- Functional Analysis - Manfred Einsiedler - Exercises Sheets - 2014 - Zurih - ETH PDFDocumento55 pagineFunctional Analysis - Manfred Einsiedler - Exercises Sheets - 2014 - Zurih - ETH PDFaqszaqszNessuna valutazione finora

- D Alembert SolutionDocumento22 pagineD Alembert SolutionDharmendra Kumar0% (1)

- Probability and Geometry On Groups Lecture Notes For A Graduate CourseDocumento209 pagineProbability and Geometry On Groups Lecture Notes For A Graduate CourseChristian Bazalar SalasNessuna valutazione finora

- M07 Handout - Functions of Several VariablesDocumento7 pagineM07 Handout - Functions of Several VariablesKatherine SauerNessuna valutazione finora

- Metric SpacesDocumento21 pagineMetric SpacesaaronbjarkeNessuna valutazione finora

- Finite Abelian GroupsDocumento6 pagineFinite Abelian GroupsdancavallaroNessuna valutazione finora

- Hamiltonian Mechanics: 4.1 Hamilton's EquationsDocumento9 pagineHamiltonian Mechanics: 4.1 Hamilton's EquationsRyan TraversNessuna valutazione finora

- Solution Real Analysis Folland Ch6Documento24 pagineSolution Real Analysis Folland Ch6ks0% (1)

- A Brief Introduction To Laplace Transformation - As Applied in Vibrations IDocumento9 pagineA Brief Introduction To Laplace Transformation - As Applied in Vibrations Ikravde1024Nessuna valutazione finora

- Partial Differential Equations of Applied Mathematics Lecture Notes, Math 713 Fall, 2003Documento128 paginePartial Differential Equations of Applied Mathematics Lecture Notes, Math 713 Fall, 2003Franklin feelNessuna valutazione finora

- Functional Analysis Exam: N N + N NDocumento3 pagineFunctional Analysis Exam: N N + N NLLászlóTóthNessuna valutazione finora

- A General Approach To Derivative Calculation Using WaveletDocumento9 pagineA General Approach To Derivative Calculation Using WaveletDinesh ZanwarNessuna valutazione finora

- IIT Kanpur PHD May 2017Documento5 pagineIIT Kanpur PHD May 2017Arjun BanerjeeNessuna valutazione finora

- Topological Property - Wikipedia PDFDocumento27 pagineTopological Property - Wikipedia PDFBxjdduNessuna valutazione finora

- Lecture09 AfterDocumento31 pagineLecture09 AfterLemon SodaNessuna valutazione finora

- Partial Differential Equation SolutionDocumento3 paginePartial Differential Equation SolutionBunkun15100% (1)

- CompactnessDocumento7 pagineCompactnessvamgaduNessuna valutazione finora

- Chapter 17 PDFDocumento10 pagineChapter 17 PDFChirilicoNessuna valutazione finora

- Inverse and implicit function theorems explainedDocumento4 pagineInverse and implicit function theorems explainedkelvinlNessuna valutazione finora

- Functional AnalysisDocumento93 pagineFunctional Analysisbala_muraliNessuna valutazione finora

- Unit 4: Linear Transformation: V T U T V U T U CT Cu TDocumento28 pagineUnit 4: Linear Transformation: V T U T V U T U CT Cu TFITSUM SEIDNessuna valutazione finora

- (ADVANCE ABSTRACT ALGEBRA) Pankaj Kumar and Nawneet HoodaDocumento82 pagine(ADVANCE ABSTRACT ALGEBRA) Pankaj Kumar and Nawneet HoodaAnonymous RVVCJlDU6Nessuna valutazione finora

- Introduction To Calculus of Vector FieldsDocumento46 pagineIntroduction To Calculus of Vector Fieldssalem aljohiNessuna valutazione finora

- Department of Mathematics & Statistics PH.D Admission Written TestDocumento5 pagineDepartment of Mathematics & Statistics PH.D Admission Written TestArjun BanerjeeNessuna valutazione finora

- The University of Edinburgh Dynamical Systems Problem SetDocumento4 pagineThe University of Edinburgh Dynamical Systems Problem SetHaaziquah TahirNessuna valutazione finora

- Markov Chains Guidebook: Discrete Time Models and ApplicationsDocumento23 pagineMarkov Chains Guidebook: Discrete Time Models and ApplicationsSofoklisNessuna valutazione finora

- Least Squares AproximationsDocumento10 pagineLeast Squares AproximationsRaulNessuna valutazione finora

- Selected Solutions To AxlerDocumento5 pagineSelected Solutions To Axlerprabhamaths0% (1)

- Lattice Solution PDFDocumento142 pagineLattice Solution PDFhimanshu Arya100% (1)

- Rigid Bodies: 2.1 Many-Body SystemsDocumento17 pagineRigid Bodies: 2.1 Many-Body SystemsRyan TraversNessuna valutazione finora

- Solution - Partial Differential Equations - McowenDocumento59 pagineSolution - Partial Differential Equations - McowenAnuj YadavNessuna valutazione finora

- Algebra of Vector FieldsDocumento4 pagineAlgebra of Vector FieldsVladimir LubyshevNessuna valutazione finora

- Homework #5: MA 402 Mathematics of Scientific Computing Due: Monday, September 27Documento6 pagineHomework #5: MA 402 Mathematics of Scientific Computing Due: Monday, September 27ra100% (1)

- Dynamical Systems Method for Solving Nonlinear Operator EquationsDa EverandDynamical Systems Method for Solving Nonlinear Operator EquationsValutazione: 5 su 5 stelle5/5 (1)

- Inequalities for Differential and Integral EquationsDa EverandInequalities for Differential and Integral EquationsNessuna valutazione finora

- Introduction to the Theory of Linear Partial Differential EquationsDa EverandIntroduction to the Theory of Linear Partial Differential EquationsNessuna valutazione finora

- Numerical Solution of Ordinary and Partial Differential Equations: Based on a Summer School Held in Oxford, August-September 1961Da EverandNumerical Solution of Ordinary and Partial Differential Equations: Based on a Summer School Held in Oxford, August-September 1961Nessuna valutazione finora

- BrakingDocumento19 pagineBrakingMohammad Umar RehmanNessuna valutazione finora

- Drives SolutionsDocumento4 pagineDrives SolutionsMohammad Umar RehmanNessuna valutazione finora

- EEE-282N - S&S Quiz, U-1,2 - SolutionsDocumento2 pagineEEE-282N - S&S Quiz, U-1,2 - SolutionsMohammad Umar RehmanNessuna valutazione finora

- Field ControlDocumento1 paginaField ControlMohammad Umar RehmanNessuna valutazione finora

- B.tech. Electrical III SemDocumento95 pagineB.tech. Electrical III SemMohammad Umar RehmanNessuna valutazione finora

- Presentation 1Documento2 paginePresentation 1Mohammad Umar RehmanNessuna valutazione finora

- Eee 282n ProblemDocumento2 pagineEee 282n ProblemMohammad Umar RehmanNessuna valutazione finora

- NLC Synopsis 2Documento4 pagineNLC Synopsis 2riddler_007Nessuna valutazione finora

- Turning Your Dissertation Into A Publishable Journal ArticleDocumento10 pagineTurning Your Dissertation Into A Publishable Journal ArticleMohammad Umar RehmanNessuna valutazione finora

- LightLevels Outdoor+indoor PDFDocumento5 pagineLightLevels Outdoor+indoor PDFMauricio Cesar Molina ArtetaNessuna valutazione finora

- Teaching Dossier: Andrew W. H. HouseDocumento9 pagineTeaching Dossier: Andrew W. H. HouseMohammad Umar RehmanNessuna valutazione finora

- Measure resistors in series and parallelDocumento3 pagineMeasure resistors in series and parallelAnonymous QvdxO5XTRNessuna valutazione finora

- ME 422 Control Systems: Steady-State Error NotesDocumento4 pagineME 422 Control Systems: Steady-State Error NotesJames W MwangiNessuna valutazione finora

- Lecture 16-17-18 Signal Flow GraphsDocumento58 pagineLecture 16-17-18 Signal Flow GraphsMohammad Umar RehmanNessuna valutazione finora

- Expt 2Documento8 pagineExpt 2bryarNessuna valutazione finora

- Iare - e Autonomous Regulations and Syllubus - 12Documento293 pagineIare - e Autonomous Regulations and Syllubus - 12Mohammad Umar RehmanNessuna valutazione finora

- Solving Convolution Problems: PART I: Using The Convolution IntegralDocumento4 pagineSolving Convolution Problems: PART I: Using The Convolution IntegralMayank NautiyalNessuna valutazione finora

- Eec 504Documento25 pagineEec 504Mohammad Umar RehmanNessuna valutazione finora

- 2011 - Nature - Grad - Student - Aspirations and Anxieties PDFDocumento3 pagine2011 - Nature - Grad - Student - Aspirations and Anxieties PDFMohammad Umar RehmanNessuna valutazione finora

- Chapter 10Documento93 pagineChapter 10Carraan Dandeettirra Caala Altakkatakka100% (1)

- 10629Documento36 pagine10629Mohammad Umar RehmanNessuna valutazione finora

- Z01 FRAN6598 07 SE All 0 PDFDocumento113 pagineZ01 FRAN6598 07 SE All 0 PDFMohammad Umar RehmanNessuna valutazione finora

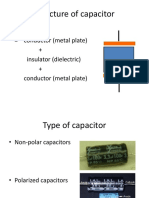

- Structure of Capacitor: - Capacitor Conductor (Metal Plate) + Insulator (Dielectric) + Conductor (Metal Plate)Documento11 pagineStructure of Capacitor: - Capacitor Conductor (Metal Plate) + Insulator (Dielectric) + Conductor (Metal Plate)Mohammad Umar RehmanNessuna valutazione finora

- Power Distribution IITKDocumento5 paginePower Distribution IITKMohammad Umar RehmanNessuna valutazione finora

- Why Teach Mathematical ModellingDocumento3 pagineWhy Teach Mathematical ModellingMohammad Umar RehmanNessuna valutazione finora

- 2015JEEADVP 1 SolutionsDocumento35 pagine2015JEEADVP 1 SolutionsRajdeepNessuna valutazione finora

- Calculus StandardsDocumento2 pagineCalculus StandardsMohammad Umar RehmanNessuna valutazione finora

- Ee 6365 Electrical Engineering Laboratory Manual: (Type Text)Documento59 pagineEe 6365 Electrical Engineering Laboratory Manual: (Type Text)Mohammad Umar RehmanNessuna valutazione finora

- B015Documento40 pagineB015Indrojyoti MondalNessuna valutazione finora

- Program On Curve Fitting of Straight Line (Y Ax+b)Documento12 pagineProgram On Curve Fitting of Straight Line (Y Ax+b)Anonymous movAM5iNessuna valutazione finora

- Intro To Multi Grid Methods-WesselingDocumento313 pagineIntro To Multi Grid Methods-Wesselingapi-3708428100% (1)

- ADA169794 - Second International Conference On Numerical Ship Hydrodynamics - September 1977 - University of California, BerkeleyDocumento407 pagineADA169794 - Second International Conference On Numerical Ship Hydrodynamics - September 1977 - University of California, BerkeleyYuriyAKNessuna valutazione finora

- Introduction To Numerical Methods ProfDocumento9 pagineIntroduction To Numerical Methods ProfMatthew AquinoNessuna valutazione finora

- CFD Modeling of Gas-Liquid-Solid Fluidized BedDocumento57 pagineCFD Modeling of Gas-Liquid-Solid Fluidized BedAnkga CamaroNessuna valutazione finora

- Graphing Polynomials ws1Documento6 pagineGraphing Polynomials ws1devikaNessuna valutazione finora

- Math 209: Numerical AnalysisDocumento31 pagineMath 209: Numerical AnalysisKish NvsNessuna valutazione finora

- Question Bank Unit I Part A: Ae306-Digital Signal Processing AEIDocumento4 pagineQuestion Bank Unit I Part A: Ae306-Digital Signal Processing AEIGlanNessuna valutazione finora

- Maths Made EasyDocumento76 pagineMaths Made Easybfactory 01Nessuna valutazione finora

- LSC Volume 33, Number 3, December 2010Documento79 pagineLSC Volume 33, Number 3, December 2010RizkaNessuna valutazione finora

- Trabajo Marcela FinalDocumento56 pagineTrabajo Marcela FinalDIEGO TORRESNessuna valutazione finora

- R Markdown File MidDocumento13 pagineR Markdown File MidzlsHARRY GamingNessuna valutazione finora

- Bargallo - Finite Elements For Electrical EngineeringDocumento325 pagineBargallo - Finite Elements For Electrical EngineeringPaco Cárdenas100% (1)

- Lecture 1: Module Overview: Zhenning Cai August 15, 2019Documento5 pagineLecture 1: Module Overview: Zhenning Cai August 15, 2019Liu JianghaoNessuna valutazione finora

- M (CS) 312Documento8 pagineM (CS) 312Samaira Shahnoor ParvinNessuna valutazione finora

- Road Map and OUtlines BS (CS) 2022-26Documento24 pagineRoad Map and OUtlines BS (CS) 2022-26annieashraf116Nessuna valutazione finora

- Optimize Greedy Algorithms with Optimal SubstructuresDocumento6 pagineOptimize Greedy Algorithms with Optimal SubstructuresGeorge ChalhoubNessuna valutazione finora

- On The Application of Linear Programming On A Transportation ProblemDocumento5 pagineOn The Application of Linear Programming On A Transportation ProblemDang AntheaNessuna valutazione finora

- Palanduz Seperable 2021Documento148 paginePalanduz Seperable 2021Jônatas Oliveira SilvaNessuna valutazione finora

- Properties of DeterminantsDocumento9 pagineProperties of DeterminantsSarthi GNessuna valutazione finora

- Chapter 5: Eigenvalues and Eigenvectors Multiple Choice QuestionsDocumento6 pagineChapter 5: Eigenvalues and Eigenvectors Multiple Choice QuestionsSaif BaneesNessuna valutazione finora

- HKVHDocumento31 pagineHKVHChristian BanaresNessuna valutazione finora

- Pivot PositionDocumento31 paginePivot PositionNazmaNessuna valutazione finora

- Chapter 1 Introduction To Numerical Method 1Documento32 pagineChapter 1 Introduction To Numerical Method 1Rohan sharmaNessuna valutazione finora

- TUM Algebraic Multigrid MethodsDocumento51 pagineTUM Algebraic Multigrid MethodsFelipe GuerraNessuna valutazione finora

- An Enhanced Computational Precision: Implementing A Graphical User Interface For Advanced Numerical TechniquesDocumento11 pagineAn Enhanced Computational Precision: Implementing A Graphical User Interface For Advanced Numerical TechniquesInternational Journal of Innovative Science and Research TechnologyNessuna valutazione finora

- ProblemsDocumento8 pagineProblemsburke_lNessuna valutazione finora

- Numerical Methods Implinting by PythonDocumento56 pagineNumerical Methods Implinting by PythonIshwor DhitalNessuna valutazione finora

- Chapra - Applied Numerical Methods MATLAB Engineers Scientists 3rd-Halaman-172-181Documento10 pagineChapra - Applied Numerical Methods MATLAB Engineers Scientists 3rd-Halaman-172-181Juwita ExoNessuna valutazione finora

- Response Surface MethodologyDocumento15 pagineResponse Surface MethodologyPavan KumarNessuna valutazione finora