Ec220 - Introduction to Econometrics

Practice Questions

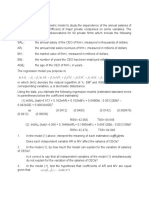

1. (Adapted from S&W ch. 7 exercises) Consider the following regression results for average hourly earnings for American workers aged 25 to 34 in 1998. The variables in the regression are the following:

College dummy variable, 1 = college degree, 0 = high school

Female dummy variable, 1 = female, 0 = male

Age age in years

Northeast dummy variable, 1 = lives in the Northeast, 0 = lives elsewhere

Midwest dummy variable, 1 = lives in the Midwest, 0 = lives elsewhere

South dummy variable, 1 = lives in the South, 0 = lives elsewhere

West dummy variable, 1 = lives in the West, 0 = lives elsewhere

The variables Northeast, Midwest, South, and West indicate one of four broad regions

of the US.

Regressor      (1)       (2)       (3)       (4)

College        5.46      5.48      5.44      A
              (0.21)    (0.21)    (0.21)    (B)

Female         2.64      2.62      2.62      C
              (0.20)    (0.20)    (0.20)    (D)

Age                      0.29      0.29      E
                        (0.04)    (0.04)    (F)

Northeast                          0.69      G
                                  (0.30)    (H)

Midwest                            0.60      I
                                  (0.30)    (J)

South                              0.27
                                  (0.26)

West                                         K
                                            (L)

Constant      12.69      4.40      3.75      M
              (0.14)    (1.05)    (1.06)    (N)

(a) Explain why the coefficients on College and Female change little between the different specifications in columns (1) to (3).

(b) Explain why the standard errors on College and Female change little between the different specifications in columns (1) to (3).

(c) What is the interpretation of the constant term (the number 12.69) in col. (1)?

(d) Column (3) includes regional dummy variables. Explain why the variable West

is omitted in this regression.

(e) The regression in column (4) omits the variable South and includes West. Fill in as many of the coefficients A to N as you can.

(f) What is the interpretation of the constant term (the number 3.75) in col. (3)?


(g) What are the expected average earnings of a 28 year old female college graduate

from the South?

(h) What is the difference in earnings between a 26 year old male college graduate from the Northeast and a 29 year old female high school graduate from the West?

(i) How would you specify the regression if you wanted to test whether wages of female workers increase with age by the same amount as the wages of male workers?

(j) How are the residuals from the regression in col. (3) related to those from col.

(4)?

(k) How is the $R^2$ from the regression in col. (2) related to that from the regression in col. (3)? How is the $R^2$ from the regression in col. (3) related to that from the regression in col. (4)?

(l) How would you specify the regression to impose the restriction that the coefficients on Northeast and Midwest are the same?

(m) Explain whether the estimated coefficient on College reflects the causal effect of graduating from college as compared to graduating from high school.

(n) Explain whether the estimated coefficient on Female reflects the effect of discrimination against women in the labour market.
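The mechanics behind part (e), switching the omitted regional dummy, can be sketched in a few lines of Python. This is only an illustration: the helper `rebase_dummies` is a hypothetical name, and the coefficient values are copied as printed in the column (3) results above. The re-basing logic is the same whatever the signs of the inputs.

```python
def rebase_dummies(const, coefs, old_base, new_base):
    """Re-express dummy coefficients relative to a different omitted category.

    `coefs` holds the coefficients of the included categories; the omitted
    category `old_base` has an implicit coefficient of zero.
    """
    shift = coefs[new_base]
    with_old = {**coefs, old_base: 0.0}
    # Every category is now measured relative to `new_base` instead of `old_base`.
    new_coefs = {k: v - shift for k, v in with_old.items() if k != new_base}
    # The constant absorbs the level of the new reference group.
    return const + shift, new_coefs


# Column (3) values as printed (West is the omitted region).
col3_regions = {"Northeast": 0.69, "Midwest": 0.60, "South": 0.27}
const4, col4_regions = rebase_dummies(3.75, col3_regions, "West", "South")

print(round(const4, 2), {k: round(v, 2) for k, v in col4_regions.items()})
```

Coefficients on regressors other than the regional dummies (College, Female, Age) are unaffected by the choice of base category, because the two sets of dummies span the same space. The standard errors of the re-based dummy coefficients cannot be recovered this way without the covariances between the estimates.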

2. (Adapted from S&W exercise 12.9) We are interested in the effect of military service on later civilian earnings. Suppose we have a sample of American men born in 1950 and we observe their earnings $Y_i$ in 1990, when they are 40 years old. A dummy variable $Serv_i$ indicates whether the individual served during the Vietnam war. We are interested in running the regression

$$\ln(Y_i) = \alpha + \beta \, Serv_i + e_i.$$

(a) Explain whether you expect military service to have a causal effect on later civilian earnings. What would be the causal channel? Do you think the effect is positive or negative?

(b) What potential confounders can you think of? Which way would the bias from

these confounders go?

(c) During the Vietnam War there was a draft, and priority for the draft was based on a national lottery. The lottery consisted of assigning every birthday a number from 1 (for 1 January) to 365 (for 31 December), and then ordering these numbers randomly. Men whose birthdays came up at the top of the list were drafted first, then the next lower numbers, and so forth until the military filled all its needs for the year (men born in 1950 were mostly drafted in 1970).

1. Are the draft lottery numbers a potentially plausible instrument for Vietnam

War service?

2. Many men volunteered for service during the Vietnam War. Volunteers

typically got better conditions of service, e.g. they might have had more

choice of units of the military to serve in compared to those called up through

the draft. As a result, more men with low lottery numbers (those being

more likely to be eventually drafted) volunteered than those with high lottery

numbers. Does this violate any of the IV assumptions, which one, and why?
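For orientation on part (c), here is a sketch of the simplest IV estimator one might have in mind. It assumes the lottery number is collapsed into a binary indicator $Z_i$ for a low (draft-eligible) number; $Z_i$ is a hypothetical name, and $\beta$ is used here as an assumed label for the coefficient on $Serv_i$.

```latex
% Wald (binary-instrument IV) estimator: reduced form divided by first stage
\hat{\beta}_{IV}
  = \frac{\overline{\ln Y}_{Z=1} - \overline{\ln Y}_{Z=0}}
         {\overline{Serv}_{Z=1} - \overline{Serv}_{Z=0}}

% Conditions for a valid instrument
\operatorname{Cov}(Z_i, Serv_i) \neq 0 \quad \text{(relevance)}, \qquad
\operatorname{Cov}(Z_i, e_i) = 0 \quad \text{(exogeneity)}
```

The numerator is the difference in mean log earnings between men with low and high lottery numbers, and the denominator is the corresponding difference in the share who served.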


3. A company producing laundry detergent is interested in the effect of its TV advertising on sales. You have the following monthly data for 56 TV markets over 3 years:

sales sales in TV market i in month t

price the price charged in TV market i in month t in constant 2000

competitors number of competitors selling detergent in market i in month t

ads the number of TV ads which ran in TV market i in month t

You don't have any other data on the customers in different TV markets.

(a) Suppose the firm allocates TV ads randomly to TV markets and months. What regression would you run to estimate the causal effect of ads on sales? Write down the regression equation and method of estimation, and explain why you include the right hand side variables you do.

(b) Would your regression include the variable price on the RHS? Explain why or

why not.

(c) Continue to assume that ads are placed randomly. How would you specify a regression model to test the hypothesis that TV advertising matters more for sales in more competitive markets (those in which 3 or more competitors also sell laundry detergent)? Be precise about how you would carry out the statistical test.

(d) Suppose that a regional marketing manager responsible for a particular TV market decides on whether to run ads in their region.

1. Explain why a simple regression of sales on ads may not identify the causal effect of ads. In which direction do you think any bias would go?

2. How would you go about estimating the causal effect of the TV ads now? What additional assumptions do you need to make compared to the case in (a)?

(e) Suppose the firm runs ads only in markets when two or more competitors are present. How would you go about estimating the causal effect of the TV ads now?

(f) Suppose you have data on the following additional variables:

i) ads run by competitors in the same market and month,

ii) sales in the region in the previous month.

Would these variables be useful as instruments to identify the causal effect of TV ads on sales?
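As a reference point for part (c), one possible way to write an interaction specification is sketched below. The indicator $D_{it}$ and the coefficient labels are assumptions introduced for illustration, and other specifications (for example, interacting ads with the number of competitors itself) would also be defensible.

```latex
% D_{it} = 1 if competitors_{it} >= 3, and 0 otherwise (hypothetical indicator)
sales_{it} = \beta_0 + \beta_1 \, ads_{it} + \beta_2 \, D_{it}
           + \beta_3 \, (ads_{it} \times D_{it}) + u_{it}

% One-sided test of whether ads matter more in competitive markets
H_0 : \beta_3 = 0 \quad \text{vs.} \quad H_1 : \beta_3 > 0, \qquad
t = \frac{\hat{\beta}_3}{SE(\hat{\beta}_3)}
```

Under this sketch, estimation would be by OLS, and the null is rejected at the 5% level if $t > 1.645$ using the large-sample normal approximation. Because each market is observed repeatedly, standard errors that allow for correlation within a market would be a natural choice.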


4. This question refers to the HMDA data set on mortgage applicants, which we have used in the

lecture on binary choice models. The following variables are used below:

deny a dummy variable for whether an applicant was denied the mortgage (mean =

0.120)

single a dummy variable for whether the applicant is single

condo a dummy variable, 1 = the applicant is buying a condominium (flat), 0 = the

applicant is buying a house

sin_con the product single*condo

pi_rat the total monthly debt repayments divided by the monthly income of the household

ccred the consumer credit score; a higher score means a worse credit rating

school years of education of the applicant

a) The following is Stata output from a linear probability model and a probit on the single dummy:

. reg deny single, robust

Linear regression Number of obs = 2357

F( 1, 2355) = 13.64

Prob > F = 0.0002

R-squared = 0.0062

Root MSE = .32466

------------------------------------------------------------------------------

| Robust

deny | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

single | .0525721 .0142343 3.69 0.000 .024659 .0804852

_cons | .0998603 .0079262 12.60 0.000 .0843173 .1154034

------------------------------------------------------------------------------

. probit deny single, robust

Probit regression Number of obs = 2357

Wald chi2(1) = 14.41

Prob > chi2 = 0.0001

Log pseudolikelihood = -859.96661 Pseudo R2 = 0.0083

------------------------------------------------------------------------------

| Robust

deny | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

single | .2562911 .0675203 3.80 0.000 .1239537 .3886285

_cons | -1.282348 .0452004 -28.37 0.000 -1.370939 -1.193757

------------------------------------------------------------------------------

Explain formally how the coefficients on single in the probit and the linear probability models are related.
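A numerical check can make the relationship concrete. The sketch below, using only the coefficients reported in the two outputs above, converts the probit index into predicted probabilities with the standard normal CDF and compares them with the linear probability model. The helper name `norm_cdf` is an illustrative choice.

```python
from math import erf, sqrt


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


# Coefficients copied from the Stata output above.
lpm_cons, lpm_single = 0.0998603, 0.0525721
probit_cons, probit_single = -1.282348, 0.2562911

# Predicted denial probabilities implied by the probit.
p_not_single = norm_cdf(probit_cons)
p_single = norm_cdf(probit_cons + probit_single)

print("probit P(deny | single=0):", round(p_not_single, 4))
print("LPM    P(deny | single=0):", round(lpm_cons, 4))
print("probit difference:", round(p_single - p_not_single, 4))
print("LPM coefficient on single:", round(lpm_single, 4))
```

With a single dummy regressor both models are saturated, so each simply reproduces the two group denial rates. The probit coefficients are those rates expressed on the inverse-normal scale.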

Consider the following regression output:

. reg deny single pi_rat ccred, robust

Linear regression Number of obs = 2357

F( 3, 2353) = 54.78

Prob > F = 0.0000

R-squared = 0.1089

Root MSE = .30756

------------------------------------------------------------------------------

| Robust

deny | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

single | .0455956 .0135127 3.37 0.001 .0190976 .0720936

pi_rat | .5638007 .0868146 6.49 0.000 .3935595 .7340418

ccred | .0493021 .0050359 9.79 0.000 .0394269 .0591774

_cons | -.188203 .0285917 -6.58 0.000 -.2442705 -.1321354

------------------------------------------------------------------------------

. reg deny single pi_rat ccred school, robust

Linear regression Number of obs = 2357

F( 4, 2352) = 42.17

Prob > F = 0.0000

R-squared = 0.1129

Root MSE = .30693

------------------------------------------------------------------------------

| Robust

deny | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

single | .0460667 .0134854 3.42 0.001 .0196221 .0725113

pi_rat | .5545216 .0909171 6.10 0.000 .3762356 .7328077

ccred | .048763 .0050368 9.68 0.000 .0388859 .0586402

school | -.0072373 .0021847 -3.31 0.001 -.0115214 -.0029531

_cons | -.0720306 .0456616 -1.58 0.115 -.1615718 .0175106

------------------------------------------------------------------------------

b) The coefficient on school in the second regression seems important while the coefficient on

single changes little when school is added. Explain why this is the case.

c) Explain why the standard error on pi_rat has increased in the second regression while the

standard error on ccred has basically stayed the same.

Consider the following regression output:

. reg deny single sin_con condo pi_rat ccred , robust

Linear regression Number of obs = 2357

F( 5, 2351) = 34.18

Prob > F = 0.0000

R-squared = 0.1118

Root MSE = .3072

------------------------------------------------------------------------------

| Robust

deny | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

single | .06748 .0176357 3.83 0.000 .0328968 .1020631

sin_con | -.0788836 .0315525 -2.50 0.012 -.1407573 -.0170099

condo | .0503891 .0222421 2.27 0.024 .0067729 .0940053

pi_rat | .5667692 .0867344 6.53 0.000 .3966854 .7368531

ccred | .0489473 .0050344 9.72 0.000 .0390751 .0588196

_cons | -.1974432 .0288937 -6.83 0.000 -.254103 -.1407834

------------------------------------------------------------------------------

d) What is the effect of being single compared to a married couple with similar characteristics

buying a house on the probability of being denied a mortgage?

e) What is the effect of being single compared to a married couple with similar characteristics

buying a condo on the probability of being denied a mortgage?

f) At what significance level is the interaction term sin_con significantly different from zero?

g) How would you carry out a test of whether applicants buying condos are more likely to be denied

mortgages than house buyers?

h) Based on the various regression results above, do you think there is a causal effect of being

single on being denied a mortgage? How large is this effect? Which additional results would you

like to see to assess this question?
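For parts (d) and (e), the arithmetic of reading an interaction term can be sketched as follows. The function `single_effect` is a hypothetical helper; the coefficients are those reported in the regression with sin_con above.

```python
# Coefficients from: reg deny single sin_con condo pi_rat ccred, robust
b_single = 0.06748
b_sin_con = -0.0788836


def single_effect(condo):
    """Change in predicted denial probability from single=0 to single=1,
    holding condo, pi_rat and ccred fixed."""
    return b_single + b_sin_con * condo


effect_house = single_effect(condo=0)
effect_condo = single_effect(condo=1)

print("house buyers:", round(effect_house, 4))
print("condo buyers:", round(effect_condo, 4))
```

The effect for condo buyers is a sum of two estimated coefficients, so its standard error depends on their covariance, which the output above does not report.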

5. The following Stata output is produced using the data set on lecture attendance from problem set

7.

. summarize attend fr_att frosh priGPA ACT

Variable | Obs Mean Std. Dev. Min Max

-------------+--------------------------------------------------------

attend | 680 26.14706 5.455037 2 32

fr_att | 680 6.029412 11.25743 0 32

frosh | 680 .2323529 .4226438 0 1

priGPA | 680 2.586775 .5447141 .857 3.93

ACT | 680 22.51029 3.490768 13 32

. reg stndfnl attend fr_att frosh priGPA ACT, robust

Linear regression Number of obs = 680

F( 5, 674) = 33.95

Prob > F = 0.0000

R-squared = 0.2044

Root MSE = .88583

------------------------------------------------------------------------------

| Robust

stndfnl | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

attend | .0204009 .0081117 2.51 0.012 .0044736 .0363281

fr_att | -.021336 .0140661 -1.52 0.130 -.0489548 .0062827

frosh | .6224787 .3719511 1.67 0.095 -.1078435 1.352801

priGPA | .4234329 .086018 4.92 0.000 .2545375 .5923284

ACT | .0832826 .0110908 7.51 0.000 .061506 .1050592

_cons | -3.489796 .306119 -11.40 0.000 -4.090858 -2.888735

------------------------------------------------------------------------------

The variable stndfnl is the standardised final exam mark in the course (i.e. a variable with mean zero

and std. deviation one), attend is the number of lectures attended, frosh is a dummy variable for first

year students, fr_att is the interaction frosh*attend, priGPA is prior grade point average, and

ACT is a score from a test taken before entering university.

a) Can you reject the hypothesis that a one unit higher ACT score raises final exam marks by 0.10

standard deviations at the 5% level? Can you reject the hypothesis at the 1% level? Can you

reject the hypothesis at the 10% level?

b) What is the interpretation of the coefficient on frosh?

c) What is the interpretation of the coefficient on attend?

d) A commentator suggests that the regression should include a variable measuring the number of

missed lectures. Explain whether you agree or disagree with this comment.

e) By how much do you expect the final exam result of a first year student to differ if she attends five

more lectures?

f) How different are the final exam results of a first year and a later year student attending the

average number of lectures?

g) Do you think the effect of being a first year student can be interpreted causally in the regression

above? Justify your answer.
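The calculations behind parts (a), (e) and (f) can be sketched from the reported output alone. The critical values below are the usual two-sided large-sample normal ones, which is an approximation assumed here given 674 degrees of freedom.

```python
# Coefficients and summary statistics copied from the Stata output above.
b_act, se_act = 0.0832826, 0.0110908
b_attend, b_fr_att, b_frosh = 0.0204009, -0.021336, 0.6224787
mean_attend = 26.14706

# (a) t-statistic for H0: coefficient on ACT equals 0.10.
t_stat = (b_act - 0.10) / se_act
critical = {"10%": 1.645, "5%": 1.960, "1%": 2.576}
reject = {level: abs(t_stat) > c for level, c in critical.items()}

# (e) Five more lectures for a first year student (frosh = 1).
five_more_frosh = 5 * (b_attend + b_fr_att)

# (f) First year versus later year student at the sample mean of attend.
frosh_gap = b_frosh + b_fr_att * mean_attend

print("t =", round(t_stat, 3), reject)
print("five more lectures, first year:", round(five_more_frosh, 4))
print("first year gap at mean attendance:", round(frosh_gap, 4))
```

Both (e) and (f) combine two coefficients, so judging their statistical significance would need a joint test rather than the individual t-statistics shown in the table.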

6. You have data from 1959 to 2009 on the following two variables:

temp: relative global annual average temperature in 0.01 degrees Celsius, base 1950-81

co2: annual average atmospheric carbon dioxide concentration in ppm., Mauna Loa,

Hawaii

and you are presented with the following Stata results:

. reg temp co2, robust

Linear regression Number of obs = 51

F( 1, 49) = 218.58

Prob > F = 0.0000

R-squared = 0.8026

Root MSE = 9.9499

------------------------------------------------------------------------------

| Robust

temp | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

co2 | .9190583 .0621644 14.78 0.000 .7941342 1.043982

_cons | -298.9318 21.77594 -13.73 0.000 -342.6921 -255.1714

------------------------------------------------------------------------------

. reg temp co2 year, robust

Linear regression Number of obs = 51

F( 2, 48) = 118.78

Prob > F = 0.0000

R-squared = 0.8318

Root MSE = 9.2797

------------------------------------------------------------------------------

| Robust

temp | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

co2 | 2.462617 .5214701 4.72 0.000 1.414131 3.511103

year | -2.258271 .7422222 -3.04 0.004 -3.750609 -.7659334

_cons | 3646.721 1293.088 2.82 0.007 1046.794 6246.649

------------------------------------------------------------------------------

. dfuller temp

Dickey-Fuller test for unit root Number of obs = 50

---------- Interpolated Dickey-Fuller ---------

Test 1% Critical 5% Critical 10% Critical

Statistic Value Value Value

------------------------------------------------------------------------------

Z(t) -1.828 -3.580 -2.930 -2.600

------------------------------------------------------------------------------

MacKinnon approximate p-value for Z(t) = 0.3665

[Figure: temperature (deg Celsius) and CO2 concentration (ppm) plotted against year, 1960 to 2010.]

. dfuller co2

Dickey-Fuller test for unit root Number of obs = 50

---------- Interpolated Dickey-Fuller ---------

Test 1% Critical 5% Critical 10% Critical

Statistic Value Value Value

------------------------------------------------------------------------------

Z(t) 5.445 -3.580 -2.930 -2.600

------------------------------------------------------------------------------

MacKinnon approximate p-value for Z(t) = 1.0000

. dfuller temp, trend

Dickey-Fuller test for unit root Number of obs = 50

---------- Interpolated Dickey-Fuller ---------

Test 1% Critical 5% Critical 10% Critical

Statistic Value Value Value

------------------------------------------------------------------------------

Z(t) -5.399 -4.150 -3.500 -3.180

------------------------------------------------------------------------------

MacKinnon approximate p-value for Z(t) = 0.0000

. dfuller co2, trend

Dickey-Fuller test for unit root Number of obs = 50

---------- Interpolated Dickey-Fuller ---------

Test 1% Critical 5% Critical 10% Critical

Statistic Value Value Value

------------------------------------------------------------------------------

Z(t) -1.311 -4.150 -3.500 -3.180

------------------------------------------------------------------------------

MacKinnon approximate p-value for Z(t) = 0.8852

. reg d.temp d.co2, robust

Linear regression Number of obs = 50

F( 1, 48) = 8.56

Prob > F = 0.0052

R-squared = 0.1276

Root MSE = 12.313

------------------------------------------------------------------------------

| Robust

D.temp | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

co2 |

D1. | 7.977158 2.726606 2.93 0.005 2.494949 13.45937

_cons | -10.36659 4.477012 -2.32 0.025 -19.36823 -1.364959

------------------------------------------------------------------------------

. reg d.temp d.co2 year, robust

Linear regression Number of obs = 50

F( 2, 47) = 6.34

Prob > F = 0.0036

R-squared = 0.1719

Root MSE = 12.123

------------------------------------------------------------------------------

| Robust

D.temp | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

co2 |

D1. | 11.87479 3.377353 3.52 0.001 5.080436 18.66915

year | -.2447391 .1439168 -1.70 0.096 -.5342624 .0447841

_cons | 469.7547 282.3302 1.66 0.103 -98.22035 1037.73

------------------------------------------------------------------------------

Comment on these results. What, if anything, do you conclude about the impact of increasing

carbon dioxide concentrations on the global temperature, and why? What other statistical

evidence would you like to see to address this problem? Give some specific examples of what

you would do.
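As a starting point for reading the output, the sketch below compares each Dickey-Fuller statistic with its 5% critical value and converts the first levels coefficient into degrees Celsius. The dictionary layout is an illustrative choice; all numbers are copied from the Stata results above.

```python
# (test statistic, 5% critical value) for each dfuller run above.
df_tests = {
    "temp": (-1.828, -2.930),
    "co2": (5.445, -2.930),
    "temp, trend": (-5.399, -3.500),
    "co2, trend": (-1.311, -3.500),
}

# The unit-root null is rejected when the statistic lies below the critical value.
rejects_unit_root = {
    name: stat < crit for name, (stat, crit) in df_tests.items()
}

# temp is measured in 0.01 degrees Celsius, so rescale the levels coefficient.
coef_levels = 0.9190583
deg_celsius_per_ppm = coef_levels * 0.01

for name, rejected in rejects_unit_root.items():
    print(name, "-> reject unit root at 5%:", rejected)
print("levels regression: deg C per ppm =", round(deg_celsius_per_ppm, 4))
```

Only the temperature series with a trend term rejects the unit-root null, which is worth keeping in mind when comparing the regressions in levels with those in first differences.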
