Market Technician No41


web site: www.sta-uk.org The Journal of the STA


Committee member Axel Rudolph recently interviewed Jack Schwager

at the Technical Analysis Trading Forum in Washington DC where he

was presented with the Traders Hall of Fame Award for his

contribution to technical analysis.

While researching his books, Schwager interviewed over 50 of the

world's most successful hedge fund managers and found a crucial

common thread: there is no place for emotion. "Truly great traders

retain their objectivity. That's what sets them apart from most

investors," he asserts. Some traders will determine beforehand how a

trade should run and will place loss-limiting orders accordingly. Others

will exit, or reduce their exposure if they become unsure about it.

Either way the emotion is stripped out. "As long as you are in a

position you cannot think straight," Schwager recalls, as he thinks back

to a time when he ran a profit before giving most of it back again.

Naturally, I wanted most of all to know from him what the winning

methods and common traits of the most successful traders are.

"There is no single true path," he declared. "The method is the least

important part."

From his roots as a pure fundamentalist Schwager has, over the years,

come to favour the technical approach, at least in his own trading arena:

futures. But Schwager is ready to admit that both approaches have

the ability to give people an edge.

Many of the Market Wizards he has interviewed have made

tremendous returns relying solely on fundamental analysis. But

Schwager is convinced by hard evidence that pure technical analysis

can consistently yield profits so far in excess of losses that there can

be no question of a statistical fluke. "You can make an empirical,

statistical argument that technical analysis works. Most people may

lose using technical analysis but the fact that any of them can get

such an amazing outweighing of gains to losses would argue that

there is something there."

Some hedge funds which consistently make annual returns over 20%

are right less than 50% of the time. "It's not a question of the

percentage of times you win. It's a matter of what the expected gain

is compared with the expected loss," he points out. But Schwager

also cites a contrasting example of one of the world's most successful

S&P stock futures traders, Mark Cook, who has a trade where he will

risk three times as much as he stands to gain. However, on that

particular trade he will win about 85% of the time.

Investors thus need some combination of either being right very

often or, on average, making much more than they are risking in

order to make money consistently.
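The trade-off between win rate and payoff size described above can be made concrete with a small expected-value calculation. The sketch below is illustrative only: the function names are hypothetical, and the only figures taken from the text are the Mark Cook trade (risking three times the potential gain, winning about 85% of the time).

```python
def expected_gain_per_unit_risk(win_rate: float, payoff_ratio: float) -> float:
    """Expected profit per unit risked.

    payoff_ratio is the gain on a winning trade divided by the loss
    on a losing trade (both positive numbers).
    """
    return win_rate * payoff_ratio - (1.0 - win_rate)


def breakeven_win_rate(payoff_ratio: float) -> float:
    """Win rate at which the expected gain is exactly zero."""
    return 1.0 / (1.0 + payoff_ratio)


# Mark Cook's trade from the text: risk 3 to make 1, win about 85% of the time.
cook = expected_gain_per_unit_risk(win_rate=0.85, payoff_ratio=1 / 3)
print(f"Expected gain per unit risked: {cook:.3f}")
print(f"Break-even win rate at this payoff ratio: {breakeven_win_rate(1 / 3):.2f}")
```

With a payoff ratio of one third the break-even win rate is 75%, so an 85% win rate leaves a positive expectation even though each loss is three times the size of each win.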

To complement a methodology with an edge, investors must also

apply a consistent discipline, says Schwager. Of course, this sounds

much easier than it is, because of the emotions involved in trading.

Schwager believes psychology is a key constituent of successful

investing. Anyone that has ever traded knows that nearly everyone

makes irrational decisions based on emotions such as fear, greed or

hope at some point or other. It is at these times that big losses occur

and investors get wiped out.

In all three books, Schwager interviewed a number of trading

coaches, one of whom is Dr. Ari Kiev, author and expert in trading

psychology. "I don't need any more reason to include Dr. Kiev in my

latest book beyond the fact that Steve Cohen, probably one of the

greatest traders that has ever lived, thinks enough of him to have

him on his staff. That's all the endorsement I need," he declares.

For outstanding investors, says Schwager, failure is not an option and

losses are part of the game. Many of the power traders have indeed

lost all of what they had and actually ended up in debt before

becoming truly successful at trading. Persistence and a sheer

unbreakable conviction that they will succeed is a common thread

among not just great traders but anyone who excels. And just one

other thing: you have to like doing it. "You have got to be sure

that trading is what you want to do," he warns. Most highly

successful traders are workaholics working 12-14 hour days,

Saturdays and Sundays.

The message for aspiring market wizards who have studied

Schwager's thoughts is clear: there is no mystique, no magic formula,

no hidden secrets. Successful traders eat, sleep and dream about

trading. They absolutely thrive on it and enjoy it.

Reproduced by permission of Dow Jones Newswires

www.dowjonesnews.com

COPY DEADLINE FOR THE NEXT ISSUE

SEPTEMBER 2001

PUBLICATION OF THE NEXT ISSUE

OCTOBER 2001

July 2001 ISSUE No. 41

MARKET TECHNICIAN

FOR YOUR DIARY

Wednesday 12th September Monthly Meeting

Wednesday 10th October AGM

Wednesday 14th November Monthly Meeting

N.B. The monthly meetings will take place at the Institute of Marine Engineers, 80 Coleman Street, London EC2 at 6.00 p.m.

IN THIS ISSUE

Exam Results

D. Watts: Software review

A. Goodacre: Evidence on the performance of the CRISMA trading system in the US and UK equity markets

R. Klink: Is the close price really the closing price?

Financial Markets Group: Overconfidence in markets

D. Watts: Bytes and pieces

M. Smyrk: Book review

B. Millard: Predicting future price movement

WHO TO CONTACT ON YOUR COMMITTEE

CHAIRMAN

Adam Sorab, Deutsche Bank Asset Management,

1 Appold Street, London EC2A 2UU

TREASURER

Vic Woodhouse. Tel: 020-8810 4500

PROGRAMME ORGANISATION

Mark Tennyson d'Eyncourt. Tel: 020-8995 5998 (eves)

LIBRARY AND LIAISON

Michael Feeny. Tel: 020-7786 1322

The Barbican Library contains our collection. Michael buys

new books for it where appropriate; any suggestions for new

books should be made to him.

EDUCATION

John Cameron. Tel: 01981-510210

Clive Hale. Tel: 01628-471911

George MacLean. Tel: 020-7312 7000

Michael Smyrk. Tel: 01428 643310

EXTERNAL RELATIONS

Axel Rudolph. Tel: 020-7842 9494

IFTA

Anne Whitby. Tel: 020-7636 6533

MARKETING

Simon Warren. Tel: 020-7656 2212

Kevan Conlon. Tel: 020-7329 6333

Tom Nagle. Tel: 020-7337 3787

MEMBERSHIP

Simon Warren. Tel: 020-7656 2212

Gerry Celaya. Tel: 020-7730 5316

Barry Tarr. Tel: 020-7522 3626

REGIONAL CHAPTERS

Robert Newgrosh. Tel: 0161-428 1069

Murray Gunn. Tel: 0131-245 7885

SECRETARY

Mark Tennyson d'Eyncourt. Tel: 020-8995 5998 (eves)

STA JOURNAL

Editor, Deborah Owen, 108 Barnsbury Road, London N1 0ES

Please keep the articles coming in: the success of the Journal

depends on its authors, and we would like to thank all those

who have supported us with their high standard of work. The

aim is to make the Journal a valuable showcase for members'

research as well as to inform and entertain readers.

The Society is not responsible for any material published in

The Market Technician and publication of any material or

expression of opinions does not necessarily imply that the

Society agrees with them. The Society is not authorised to

conduct investment business and does not provide

investment advice or recommendations.

Articles are published without responsibility on the part of the

Society, the editor or authors for loss occasioned by any

person acting or refraining from action as a result of any view

expressed therein.

STA DIPLOMA APRIL 2001

PASS LIST

L. Anastassiou F. Lien

M. Chesterton V. Mahajan

E. Chu M. Randall

D. Cunnington J. Richie

P. Day M. Schmidt

B. Jamieson M. Shannon

P. Kedia M. Sheridan

R. Khandelwal M. Wahl

R. Klingenschmidt P. Wong

M. Knowles

DITA II CANDIDATES

T. Kaschel

D. Lieber

M. Meyer

M. Unrath

A. A. Waked

A. Will


Networking Exam Results

ANY QUERIES

For any queries about joining the Society, attending

one of the STA courses on technical analysis or

taking the diploma examination, please contact:

STA Administration Services

(Katie Abberton)

P.O. Box 2, Skipton BD23 6YH.

Tel: 07000 710207 Fax: 07000 710208

www.sta-uk.org

For information about advertising in the journal,

please contact:

Deborah Owen

108 Barnsbury Road, London N1 0ES.

Tel/Fax: 020-7278 7220

Software available for sale as a result of a prize draw win.

Software is unused. Nirvana's Omnitrader end of day

stocks edition: high end automation of technical analysis

with 120 built-in trading systems. Hot Trader Gann Swing

Charting Program. £200 per package/£350 for both inc.

P&P (mainland UK). A £20 reduction per package for

people who attend the monthly meetings, and who

therefore don't require P&P.

Contact: Mr Rahul Mahajan MSc MBA MSTA

E-mail: r.mahajan@btinternet.com


TradingExpert 6 is the latest offering from the AIQ people. AIQ

(which stands for Artificial Intelligence Quotient) refers to the

Expert System that is at the heart of this suite of programs, but it

is also much more, with state of the art market charting and analysis

being incorporated. The program modules integrate well together,

forming a coherent complementary suite of programs that few others

can match.

Installation of the suite of programs is straightforward, with an

instructional video provided to ease both this process and the program's

initial operation. After installation, the programs are found via a tool

bar on the desktop, which allows you to access the specific module

that you require. What is impressive is the range and depth of the

applications on offer. These comprise the charting package with the

associated technical indicators and expert system. There is a data

manager and communication interface. An excellent addition is

Matchmaker, a program to correlate groups of stocks and sort

them into groups or sectors. Also significant are the real-time quote

monitor, a system-writing and scanning program called the

Expert Design Studio, and a Portfolio Manager. In short, you have

a complete suite of programs to suit all your analysis or trading needs.

At the heart of this package is the technical charting package with an

array of indicators and the Expert System. The security chart is displayed

with a list of indicators to the right. With one click any of these can be

added to the chart. The indicator window also incorporates the Expert

Rating together with the indicators that form the basis of the system.

The Expert System gives a consensus rating of the technical indicators,

providing a prediction of the likely future price movement of the

security, with a rating from 0 to 100. Further information is provided by

clicking upon the ER icon, whereupon a box pops up showing the reasoning

for the expert rating shown. Does the Expert System perform? Overall I

found the expert rating useful, with some correlation to future price

movement, but this is a subjective judgement.

In the Charting module, I particularly liked the one click insertion of

technical indicators and moving averages into a chart, also the ability

to change the defaults by clicking on the indicator displayed. Bar, line

and candlestick charts can quickly be displayed via the top toolbar,

but point and figure charts were disappointing, being too small to be

analysed in the indicator window. Another column of icons provides

the drawing and retracement tools, all being very easy to place and

change on the chart.

The suite also provides a Data Manager, with an extensive collection

of pre-defined databases for the SP500, Nasdaq, FTSE100 stocks etc.

The US/UK stocks with their tickers and exchanges are defined. All

data is kept in the proprietary AIQ format, at which point I wished

the package could read the standard Metastock/Computrac data

format, as yet another database had to be set up and maintained.

However, the Data Manager and communications program make

this easy.

The communications program allows you to download data from the

Mytrack servers, which I found fast and easy to use. The

communication package loads data once you are connected to the

Internet, making an Internet connection essential. The Mytrack servers

also provide real-time data via the Quotes and Alerts modules, so

once online both historical and real-time data are available.

One excellent feature is that once a download is completed, the AIQ

package also computes a set of reports for the most common

technical criteria: relative strength, new highs or lows,

breakouts, high volume days, and P&F breakouts. These are instantly

available, making processing thousands of stocks a breeze, and can

be immediately printed or stored for later analysis.

The reports are available via the reports module, their breadth being

the most extensive I have ever seen. Daily, weekly, sector, group and

technical stock reports are all available, allowing a top down

approach to stock selection to be developed, via a market, then

sector, then stock. Furthermore, by clicking upon any security in the

report module, up comes the chart for analysis. It is this integration

that AIQ provides that allows real productivity gains to be made

when analysing thousands of stocks and, importantly, not missing

key technical events.

The AIQ package scores again with its Expert Design Studio; here

you can produce your own reports or back test a system. The

program allows you to produce your own system, then scan for

when those system criteria are met. Also unique is the ability to

reference and hence back-test the Expert System in your analysis. An

extensive list of pre-programmed studies is available should you not

wish to program your own. Clearly AIQ have not left anything out of

this suite of packages, and even more programs are included, such as

the account manager and the fundamental database module.

So, having found your stock, you want to monitor it in real time? With

one click the real-time quote monitor gives you a table of quotes

with both intra-day and historical charts. I did find one annoyance, in

that this did not work for a historical chart of a UK stock, the

designation for London having to be changed each time, from

-L to @L, whereas with one click US stock charts were instantly

available. For real-time UK charts this was not a problem, with

charts down to one minute being available in the charting module.

How did the feed perform? Using a standard 56k modem, I found

that the real-time data feed performed well with timely data, but a

good Internet service provider is critical.

Matchmaker is a real gem in the program suite. Here, via

correlation analysis, it is possible to generate groups and sectors and

look for leaders or laggards within a particular correlated group. AIQ

uses a correlation approach to defining sectors and the performance

of a stock within a sector. Then a variety of groups can be

produced; for example, following the Wyckoff approach, an index

surrogate is possible. The Index surrogate generated could perhaps

be of the top ten FTSE100 stocks by capitalisation as a leading

indicator? Or you can generate a surrogate using spreads between

correlated stocks, buying or selling the spread once it is overbought

or oversold.

Overall I liked this package for its ease of use and integration with

the Internet. It offers a number of often excluded essential facilities

for the equity analyst or stock trader. The extensive reports are a real

boon for the analyst handling large numbers of stocks. The reports

and data handling allow fast processing, while the expert system is a

bonus for the less experienced. Further details can be found via the

AIQ website at www.aiq.com.

Software review AIQ TradingExpert 6 Review

By David Watts

[Chart: FTSE100 Index]

The work reported here was undertaken jointly (but at different

times) with three researchers while they were students on the MSc

in Investment Analysis programme at Stirling University. I would

like to acknowledge the considerable contributions made by

Jacqueline Bosher, Andrew Dove and Tessa Kohn-Speyer.

One of the problems with technical analysis is that it is difficult to

apply academic rigour to what is essentially an art form. As a result,

academics have tended to focus on the more objective processes in

technical analysis, and typically, relatively simple trading rules such as

filter rules, relative strength and moving averages have been tested.

In many ways, this is somewhat unfair to technical analysts since

they often employ several indicators to confirm trading

decisions. One unusual feature of the CRISMA (Cumulative volume,

RelatIve Strength, Moving Average) trading system is that it is based

on a multi-component trading rule that is objective and can therefore

be subjected to rigorous analysis and testing. Another is that details

of the system were first published more than ten years ago (Pruitt

and White, 1988, 1989 and Pruitt, Tse and White, 1992). The system

was shown to work very successfully in the US equity market. It was

tested out-of-sample, adjusted for risk, and with due allowance for

transaction costs and yet still provided statistically and economically

significant excess returns. Based on data for the period 1976 to 1985

the system predicted annualised excess returns of between 6.1% and

35.6% (depending on the level of transaction costs) and similar

excess returns continued for the 1986 to 1990 period. The CRISMA

system was also applied to identify the purchase of call options on

the underlying shares (Pruitt and White, 1989). Even in the presence

of maximum retail costs, the average round-trip profit was 12.1%

with an average holding period for each option contract of

approximately 25 days.

In an efficient market, technical trading systems such as CRISMA

should not be able to produce abnormal returns or beat the market,

once adjusted for risk and transaction costs. That CRISMA did

produce abnormal returns, not only during the initial test period, but

using out-of-sample data after the initial publication of CRISMA's

success, led Pruitt and White to conclude that staggering market

inefficiencies existed.

What's more, the system is used by one of its originators to run real

money in a professional portfolio. The system's impressive

credentials attracted our attention and so, some time ago, we set out

to investigate whether CRISMA continues to work in the US and

whether it works in the UK market.

The rest of the paper is organised as follows. First, details of the

CRISMA system are described, followed by the data and methods we

used in back-testing the system. Results from applying the system in

the US market are presented and compared with Pruitt et al's results,

as well as an analysis of the contributions of the system components.

A brief summary of the results for the UK market is provided,

including application of the system to option purchases, before some

final concluding remarks on the usefulness of the system.

The CRISMA trading system

The CRISMA trading rule operates a filter system for selecting equity

targets. The first three filters attempt to triple confirm upward

momentum, and use the 50 and 200-day price moving averages,

relative strength and volume as indicators. These filters must be

satisfied on the same day.

The moving average filter is satisfied when a stock's 50-day price

moving average intersects the 200-day price moving average from

below, when the slope of the latter is zero or positive. This can only

occur when the price of the stock is increasing relative to previous

periods. This 'golden cross' is used to indicate the start of an upward

trend, which is expected to continue upwards.
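The moving average filter described above can be sketched in a few lines. This is a minimal illustration, not the spreadsheet macro used in the study: the function names are hypothetical, and measuring the slope of the long average over a 20-trading-day lookback (roughly four weeks) is an assumption.

```python
def sma(prices, window):
    """Simple moving average; None until `window` observations exist."""
    out = [None] * len(prices)
    total = 0.0
    for i, p in enumerate(prices):
        total += p
        if i >= window:
            total -= prices[i - window]
        if i >= window - 1:
            out[i] = total / window
    return out


def golden_cross_days(prices, short=50, long=200, slope_lookback=20):
    """Days on which the short average crosses the long average from below
    while the long average is flat or rising (assumes short <= long)."""
    s, l = sma(prices, short), sma(prices, long)
    days = []
    for t in range(1, len(prices)):
        if t < slope_lookback or l[t - 1] is None or l[t - slope_lookback] is None:
            continue  # not enough history yet
        crossed_up = s[t - 1] <= l[t - 1] and s[t] > l[t]
        long_not_falling = l[t] >= l[t - slope_lookback]
        if crossed_up and long_not_falling:
            days.append(t)
    return days


# Toy series with short windows so the crossover is easy to follow by hand.
toy = [10, 9, 8, 7, 6, 7, 8, 9, 10, 11]
print(golden_cross_days(toy, short=2, long=4, slope_lookback=1))
```

In the toy series the 2-day average moves above the 4-day average on day 6, when the 4-day average is flat, so that day qualifies under the "zero or positive" slope condition.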

The relative strength filter is satisfied when, over the previous four

weeks, relative strength has increased or remained unchanged. This

filter ensures that the relative performance of the stock is at least

equal to the relative performance of the market as a whole in the

previous four weeks.

Cumulative volume (also called on-balance volume by some users,

for example by Bloomberg) is defined as the cumulative sum of the

volume on days on which the price increases, minus the volume on

days on which the price falls. The cumulative volume filter is satisfied

if its level has increased over the past four weeks, suggesting that

there is increasing demand for the stock.
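The relative strength and cumulative volume filters can be sketched in the same spirit. The code below is an assumption-laden illustration: relative strength is taken to be the stock price divided by the index level, "four weeks" is taken to be 20 trading days, and all function names are hypothetical.

```python
def relative_strength(prices, index_levels):
    """Ratio of the stock price to the market index level on each day."""
    return [p / m for p, m in zip(prices, index_levels)]


def cumulative_volume(prices, volumes):
    """Running sum of volume on up days minus volume on down days."""
    cv = [0.0]
    for t in range(1, len(prices)):
        if prices[t] > prices[t - 1]:
            cv.append(cv[-1] + volumes[t])
        elif prices[t] < prices[t - 1]:
            cv.append(cv[-1] - volumes[t])
        else:
            cv.append(cv[-1])  # unchanged price: volume is ignored
    return cv


def rs_filter(rs, t, lookback=20):
    """Relative strength has increased or remained unchanged over the lookback."""
    return t >= lookback and rs[t] >= rs[t - lookback]


def cv_filter(cv, t, lookback=20):
    """Cumulative volume has strictly increased over the lookback."""
    return t >= lookback and cv[t] > cv[t - lookback]


prices = [10, 11, 10, 12]
volumes = [100, 200, 50, 300]
index_levels = [100, 100, 100, 100]
cv = cumulative_volume(prices, volumes)
rs = relative_strength(prices, index_levels)
print(cv, rs_filter(rs, 3, lookback=2), cv_filter(cv, 3, lookback=2))
```

Note the asymmetry taken from the text: the relative strength filter accepts "unchanged", whereas the cumulative volume filter requires an increase.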

Once the first three filters are satisfied, the stock is purchased when

it reaches 110% of the intersection point of the 50 and 200 day

price moving averages. This final penetration filter attempts to

reduce the likelihood of whipsaws, the inadvertent issue of false

signals; it does not have to be satisfied on the same date as the first

three filters.

Stocks are sold when either the price rises to 120% of the moving

average crossover point or when it falls below the 200 day price

moving average. There are 3 exceptions to the trading system

mechanics as detailed below:

1. If the price of the stock does not reach the 110% level of the

intersection between the 50 and 200 day moving averages within

5 weeks of the first three filters being satisfied, it is not purchased,

2. If the stock price is already above the 110% filter when the first

3 filters are satisfied, it is not purchased until it has fallen below

this level, and

3. If the stock price is already above the 110% level, when the first

three filters are satisfied and reaches 120% of the intersection

point of the 50 and 200 day price moving averages without

falling below the 110% level, it is not purchased.
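The purchase and sale mechanics, including the three exceptions listed above, can be expressed as a small state machine. This is a sketch under stated assumptions rather than the study's macro: five weeks is taken as 25 trading days, the same waiting window is applied after the price has dropped back below the 110% level, prices are daily closes, and `find_trade` is a hypothetical name.

```python
def find_trade(prices, long_ma, signal_day, cross_price, max_wait=25):
    """Return (buy_day, sell_day), (buy_day, None) if still open, or None.

    prices      daily closing prices
    long_ma     200-day moving average, aligned with prices
    signal_day  day on which the first three filters were all satisfied
    cross_price price at the 50/200-day moving average intersection
    """
    buy_level = 1.10 * cross_price
    sell_level = 1.20 * cross_price
    # Exception 2: a stock already above the buy level must first fall below it.
    armed = prices[signal_day] < buy_level
    buy_day = None
    last_day = min(signal_day + max_wait, len(prices) - 1)
    for t in range(signal_day, last_day + 1):
        p = prices[t]
        if not armed:
            if p >= sell_level:
                return None  # exception 3: reached 120% without a pullback
            if p < buy_level:
                armed = True
            continue
        if p >= buy_level:
            buy_day = t
            break
    if buy_day is None:
        return None  # exception 1: buy level not reached in time
    for t in range(buy_day + 1, len(prices)):
        if prices[t] >= sell_level or prices[t] < long_ma[t]:
            return buy_day, t
    return buy_day, None


print(find_trade([100, 105, 111, 115, 121], [100] * 5, 0, 100.0))
```

The example call buys on the day the price first closes at or above 110 and sells on the day it closes at or above 120.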

METHODS

Pruitt and White (1988) used the Daily Graphs series published

weekly by William O'Neil and Company, Inc. to aid in the

identification of trades. Figure 1 re-creates the graphical selection

process for the CRISMA trading rule, using data from the current

study for American Airlines.

Identifying trades in this manner involves elements of subjectivity and

is time consuming, so a more efficient and accurate approach, using

a spreadsheet macro, was adopted. The macro imports pre-tidied

price and volume data for each company into a template containing

the formulae for the moving averages, relative strength and

cumulative volume measures. It then searches for days on which

CRISMA's three initial filters are satisfied and calculates the 110%

and 120% price levels. The dates of the purchase and subsequent

sale of the stock are then identified and, providing no exceptions to

the rule have been breached, the dates and prices are subsequently

copied to a results table.

A random sample of 333 companies from the S&P 500 was selected in

June 1997 with the requirement that a minimum of 4 years of volume

data could be obtained between January 1987 and June 1996. 11

companies were excluded due to insufficient available data giving a

final sample of 322. This sample selection process implies a degree of

survivorship bias (Kan and Kirikos, 1995) which is discussed later. The

prices obtained from Datastream were daily mid-market closing prices,

pre-adjusted for bonus and rights issues. The benchmark index used

for the return calculations was the S&P 500 Index.

Evidence on the performance of the CRISMA

trading system in the US and UK equity markets

By Alan Goodacre


To assess how successful CRISMA might have been, several questions

were considered.

On average:

Are the trades profitable?

Do the trades beat a market benchmark?

Do the trades beat a risk-adjusted benchmark?

Is the proportion of successful trades greater than the 50%

expected by chance?

How are the answers affected by transaction costs?

All of the above questions have to be considered in the context that,

effectively, we have taken a sample from all of the possible trades

(over all companies and all time). Sampling theory suggests that

positive answers to the above performance questions can be

achieved by chance (i.e. solely as a result of the sampling process),

so the likelihood of the observations being chance events needs to be

assessed. This is achieved by carrying out hypothesis tests on the

results to identify those that are unlikely to be chance events

(described as being statistically significant and noted by an asterisk

in the Tables). This provides some confidence that any success might

be due to the CRISMA system rather than luck.
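As an illustration of the kind of hypothesis test involved, the sketch below computes a one-sided exact binomial p-value for the proportion of profitable trades against the 50% expected by chance. The article does not state which test was actually used, so this choice is an assumption, and the count of 190 profitable trades is purely hypothetical; only the total of 328 trades comes from the study.

```python
from math import comb


def binomial_p_value(successes, trials, p=0.5):
    """Probability of observing at least `successes` wins in `trials`
    independent trades if each trade wins with probability p."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical count of profitable trades out of the 328 trades identified.
hypothetical_wins = 190
p_val = binomial_p_value(hypothetical_wins, 328)
print(f"one-sided p-value: {p_val:.4f}")
print("significant at 5%" if p_val < 0.05 else "not significant at 5%")
```

A small p-value indicates that a proportion this high would rarely arise from chance alone, which is the sense in which a result is marked with an asterisk in the tables.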

RESULTS

Results in the US market for the period 1 January 1988 through

30 June 1996

From the 322 companies included in the sample, over the 8½ year

test period the CRISMA trading system identified 328 trades in 208

companies whose stocks were assumed held for a total of 14,424

security-days (approximately 2% of the total security-days). This

gives an average annual total of 1,697 security-days which implies a

portfolio of approximately 6.8 stocks, on average. The mean and

median holding periods were 44 and 31 days respectively, with a

maximum holding period of 374 days and a minimum of 1 day.
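The security-day figures in this paragraph follow from simple arithmetic, reproduced below as a check. The 250 trading days per year is an assumption here (it is the approximation the article uses later for annualising returns).

```python
security_days_held = 14_424   # total security-days reported
years = 8.5                   # length of the test period
companies = 322               # final sample size
trading_days = 250            # assumed trading days per year

per_year = security_days_held / years
avg_portfolio = per_year / trading_days
share_of_total = security_days_held / (companies * years * trading_days)

print(f"security-days per year: {per_year:.0f}")
print(f"average number of stocks held: {avg_portfolio:.1f}")
print(f"share of all security-days: {share_of_total:.1%}")
```

The share of total security-days comes out at roughly 2%, in line with the figure quoted in the text.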

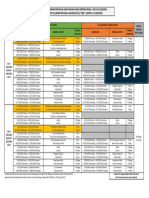

Table 1 presents a summary of the raw CRISMA generated profits.

Assuming zero transaction costs, the CRISMA trading system would

have been profitable with a mean and median profit per trade of

3.1% and 8.9% respectively. After allowing for round-trip transaction

costs of up to 2% the system was still profitable (all returns are

statistically significant at the 5% level or better). The final column of the table

shows arithmetically annualised mean returns. These are calculated

by working out the average (mean) return per day across all of the

328 trades and multiplying by 250 (the approximate number of

potential trading days in a year). These annualised returns ranged

from 17.6% to 6.2% depending on the level of transaction costs

assumed. Thus, the system would have been profitable over the

period, even allowing for transaction costs of up to 2%.
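The annualisation step can be checked against the reported figures. The sketch below reads "average return per day across all trades" as the sum of the per-trade returns divided by the total number of security-days held (14,424); that reading is an interpretation, and the function name is hypothetical.

```python
TRADING_DAYS_PER_YEAR = 250  # approximation stated in the article


def annualised_mean_return(mean_profit_per_trade_pct, n_trades, security_days,
                           trading_days=TRADING_DAYS_PER_YEAR):
    """Arithmetically annualised mean return, in percent."""
    total_profit_pct = mean_profit_per_trade_pct * n_trades
    mean_daily_pct = total_profit_pct / security_days
    return mean_daily_pct * trading_days


# Mean profit per trade at each transaction cost level, from Table 1.
for cost, mean_profit in [(0.0, 3.1), (0.5, 2.6), (1.0, 2.1), (2.0, 1.1)]:
    result = annualised_mean_return(mean_profit, 328, 14_424)
    print(f"{cost:.1f}% costs: {result:.2f}% per year")
```

Under this reading the results agree with the final column of Table 1 to within about 0.1 percentage point; the small differences are consistent with the per-trade means having been rounded to one decimal place.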

However, a more important question is whether the system adds

value. Does it achieve better performance than a buy-and-hold

policy? To test this, the return on the market (S&P500) was deducted

from the return obtained for each day a stock was held to give a

return in excess of the market benchmark. Again, this was averaged

over all the days stocks were held and then annualised; the results

are in column 3 of Table 2.
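The market-adjusted calculation described here amounts to subtracting the index return from the stock return on each held day, averaging, and scaling by 250. A minimal sketch, with a hypothetical function name and made-up daily returns:

```python
def annualised_excess_return(stock_returns, market_returns, trading_days=250):
    """Mean daily return in excess of the market, arithmetically annualised.

    Both inputs are daily returns (as fractions) for the days a stock was held.
    """
    excess = [s - m for s, m in zip(stock_returns, market_returns)]
    return sum(excess) / len(excess) * trading_days


# Made-up daily returns for three held days, for illustration only.
stock = [0.010, 0.000, 0.020]
market = [0.005, 0.005, 0.005]
print(f"{annualised_excess_return(stock, market):.2%}")
```

A positive value means the held positions outperformed simply holding the index over the same days; it makes no allowance for the riskiness of the stocks held.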

A further question of importance is whether the system returns

compensate for the risks taken. Traditional finance theory suggests

that the only risk element that should be rewarded is systematic (or

beta) risk, since all stock-specific risk can be eliminated by holding a

well-diversified portfolio. Of particular importance here is that, in a

rising market, high beta stocks should out-perform the market.

Therefore, column 4 of Table 2 reports the results from using the so-called

'market model' to allow for the effects of each individual stock's

beta risk (consistent with Pruitt et al's testing of the system). However,

it is worth noting that this model is potentially biased against the

trading system. A momentum-based system, such as CRISMA, seeks

to identify relatively good performance by a security in the

expectation that such performance will continue. The market model

will have some recent good performance built into the expectations,

for example, via a positive alpha (the mean value for alpha in the

current study was 8.4%, on an annualised basis). The market-adjusted

return does not suffer from this bias, but unfortunately neither does it

account for the relative riskiness of the stocks held.
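The market model adjustment can be illustrated as follows: alpha and beta are estimated by regressing the stock's returns on the market's returns, and the abnormal return is the actual return minus alpha plus beta times the market return. The estimation window is not described in this section, so the data below are made up and the function names are hypothetical.

```python
def fit_market_model(stock_returns, market_returns):
    """Ordinary least squares estimates of alpha and beta."""
    n = len(stock_returns)
    mean_s = sum(stock_returns) / n
    mean_m = sum(market_returns) / n
    cov = sum((m - mean_m) * (s - mean_s)
              for s, m in zip(stock_returns, market_returns))
    var = sum((m - mean_m) ** 2 for m in market_returns)
    beta = cov / var
    alpha = mean_s - beta * mean_m
    return alpha, beta


def abnormal_return(stock_return, market_return, alpha, beta):
    """Actual return minus the return the market model would predict."""
    return stock_return - (alpha + beta * market_return)


# Made-up estimation data: a stock with alpha 0.001 per day and beta 1.5.
market = [0.01, -0.02, 0.03, 0.00]
stock = [0.001 + 1.5 * m for m in market]
alpha, beta = fit_market_model(stock, market)
print(f"alpha={alpha:.4f}, beta={beta:.2f}")
print(f"abnormal return: {abnormal_return(0.02, 0.01, alpha, beta):.4f}")
```

The example also shows the bias noted in the text: a stock that has recently done well carries a positive alpha, which raises the expected return it must beat before any abnormal return is recorded.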

The nature of the CRISMA system is that for each trade it seeks to

capture a profit of, or limit the loss to, approximately 9% (i.e.

10/110). This is quite clearly illustrated in the histogram of

unadjusted returns in Figure 2. If, on balance, a higher proportion of

profitable trades is identified then the system will be successful. Thus, a

key measure of success is the proportion of trades that are profitable.

The lower half of Table 2 reports the proportion of profitable trades for

each of the benchmarks and transaction cost levels.

Transaction    Profit per trade (%)    Annualised
costs          Mean      Median        mean profit (%)
0.0%           3.1*      8.9           17.6*
0.5%           2.6*      8.4           14.8*
1.0%           2.1*      7.9           11.9*
2.0%           1.1*      6.9            6.2*

Table 1: Unadjusted profits for CRISMA trading system, Jan 88 to June 96

Figure 2: Histogram of raw CRISMA returns Jan 88 to June 96

Figure 1: The CRISMA trading system applied to American Airlines

1. The 50-day moving average crosses the 200-day moving

average from below at a price of $60.9 on 13 December

1991. The slope of the 200-day moving average over the

previous four weeks is positive. The 110% and 120% price

levels are $67.0 and $73.1 respectively.

2. The relative strength and cumulative volume graphs are

positively sloped over the previous four weeks.

3. The 110% buy level is reached on 23 December and a buy

at $68.9 is indicated.

4. The 120% sell level of $73.1 is exceeded on 7 January 1992,

when a sell price of $75.0 is indicated.

5. Thus this trade achieves a raw profit (unadjusted for

dividends, transaction costs and risk) of 8.9% over a period

of 9 trading days when the market (S&P 500) rose by 5.2%.
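The price levels and raw profit quoted in the Figure 1 notes can be verified with a few lines of arithmetic. All inputs are taken from the notes; the variable names are illustrative.

```python
cross_price = 60.9   # 50/200-day crossover price on 13 December 1991
buy_price = 68.9     # price at which the buy is indicated
sell_price = 75.0    # price at which the sell is indicated

buy_level = round(1.10 * cross_price, 1)    # 110% entry level
sell_level = round(1.20 * cross_price, 1)   # 120% exit level
raw_profit_pct = round((sell_price / buy_price - 1) * 100, 1)

# Profit targeted by design when buying at 110% and selling at 120%.
target_pct = round(10 / 110 * 100, 1)

print(buy_level, sell_level, raw_profit_pct, target_pct)
```

The realised 8.9% is close to the 9.1% the rule targets by construction, the difference arising because the buy and sell prices overshot the 110% and 120% levels slightly.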


As can be seen from Table 2, adjusting for market movements and

risk significantly decreases the returns predicted by CRISMA.

Assuming zero transaction costs, the annualised mean excess return

is just 0.1% when compared with the market benchmark. Taking beta

risk into account further reduces the return to -6.8%. For non-zero

levels of transaction costs, the excess returns are negative for both

benchmarks, statistically significant for transaction costs of 2%

(market) and 0.5% and above (risk-adjusted).

An alternative way of assessing the success of CRISMA is to test

the proportion of recommended trades that were profitable (lower

half, Table 2). Generally, more than the 0.5 expected by chance

were identified, statistically greater than 0.5 for unadjusted returns

and also at low transaction cost levels for market-benchmark

returns. Thus, there is some evidence of success at predicting

profitable trades but no evidence of the system generating excess

returns.

It is not easy to identify the likely effect of the element of survivorship

bias implicit in the sample selection procedure. Companies that have

been forced out of the S&P 500 through failure and those that have

been acquired, or merged, will be under-represented in the sample;

i.e. good performance companies are likely to be over-represented,

suggesting a possible positive bias in reported returns. Kan and Kirikos

(1995) report a significant overstatement of the performance of a

momentum based trading strategy resulting from a similar selection

process in the Toronto stock exchange. If there is a positive bias in the

current study, this would imply that the CRISMA system performs

even less well than reported here.

In summary, back-testing the CRISMA system in the US market over

the 1988-96 period produces disappointing results. While the system

identifies profitable trades, even in the absence of transaction costs it

does not add value by providing significant returns in excess of a

buy-and-hold strategy. Once transaction costs are incorporated, the

system actually under-performs a buy-and-hold strategy.

Comparison with Pruitt et al studies

While much of the current study period is subsequent to the Pruitt et

al test periods, there is an overlapping period of 2½ years. This

allows a direct comparison between the graphical approach of Pruitt

et al and the spreadsheet-automated approach adopted here. Results

from this comparison and a summary of the original Pruitt et al

studies are presented in Table 3 ('nr' means that the measures were

not reported in the Pruitt papers).

The contrast between the positive excess returns in both Pruitt

studies and the negative returns here is very striking; similarly, there

is a marked difference between the proportions of profitable trades.

Particularly disconcerting are the differences in the results over the

identical overlap period June 1988 through December 1990. Our

method finds more trades, resulting in more days held, but fewer than

half of them are profitable. This leads to significant negative

excess returns of 16.5% against the market benchmark and 18.3%

on a risk-adjusted basis. By contrast, Pruitt et al found that 0.76 of

trades were profitable (at zero transaction costs), giving a significant

positive annualised excess return of 29.1% on a risk-adjusted basis.

Possible explanations for this different performance were investigated

relating to three areas: sample selection, method of application and

returns measurement. For this exercise zero transaction costs are

assumed throughout.

Pruitt et al. included all stocks jointly included in the CRSP daily data

tapes and the Daily Graphs series in contrast to our random selection

of approximately two-thirds of the S&P 500. One possible

explanation might be that these two samples have different

distributions of company size. We found evidence in the current

study that CRISMA performed better on the largest companies in the

sample, so if the Pruitt sample was biased towards larger companies

this might have contributed to the observed differences.

Application of the CRISMA system using the objective spreadsheet

approach rules out any subjective input to trade identification. For

example, one issue concerns the potential false identification of a

sell signal when a stock price falls when it goes ex-dividend; such

false signals could be avoided in the graph-based approach by

subjective intervention. This issue might have a negative impact on

performance in our computer-based approach through the induced

sale of profitable trades before the profit was achieved or through

the sale of loss-making trades at a lower price. The effect of this was

tested by re-running the full sample through the system with trades

identified on the basis of a smoothed returns price index for each

stock. This index adds back the dividend amount to the price series

thus avoiding a step-down effect on the ex-dividend day and is

available in the Datastream database. The results from this approach

indicate that excess returns were slightly improved to +1.0%

(market) and -4.2% (risk-adjusted). Thus, a subjective input to trade

identification of this nature might have improved the Pruitt results

slightly. Pruitt et al. did not define the specific measure of relative

strength that they used, so the sensitivity of the current results to an

alternative definition of relative strength was tested. A larger number

of trades (394) was identified but the effect on returns was quite

small and negative (e.g. excess returns were -0.9% against the

market benchmark).
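The dividend adjustment discussed above can be sketched with a simple returns index. This is a common total-return construction in which each day's growth factor is (price + dividend) / previous price; it is an assumption for illustration and not necessarily the exact definition used by Datastream. The function name and the sample data are hypothetical.

```python
def returns_index(prices, dividends, base=100.0):
    """Build a returns index that adds dividends back on the ex-dividend day.

    prices[t] is the closing price on day t; dividends[t] is the dividend
    per share going ex on day t (0.0 on other days).
    """
    index = [base]
    for t in range(1, len(prices)):
        growth = (prices[t] + dividends[t]) / prices[t - 1]
        index.append(index[-1] * growth)
    return index


prices = [100.0, 98.0, 99.0]
dividends = [0.0, 2.0, 0.0]   # hypothetical 2.0 dividend, ex on day 1
index = returns_index(prices, dividends)
```

In this example the raw price steps down from 100 to 98 on the ex-dividend day, while the index stays flat, so a rule applied to the index would not read the step-down as a sell signal.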

                                     Return in excess of
Transaction    Unadjusted    Market        Risk-adjusted
costs          return        benchmark     benchmark

Annualised mean excess return (%)
0.0%           17.6*           0.1           -6.8
0.5%           14.8*          -2.8           -9.6*
1.0%           11.9*          -5.6          -12.5*
2.0%            6.2*         -11.3*         -18.2*

Proportion of trades profitable
0.0%           0.61*          0.56*          0.52
0.5%           0.61*          0.55*          0.51
1.0%           0.61*          0.53           0.50
2.0%           0.60*          0.51           0.47

Table 2: Tests of CRISMA trading system performance Jan 88 to June 96

                                                            Return in excess of
Study          Time          No of    Days    Trans        Market      Risk-adj
               period        trades   held    costs (%)    benchmark   benchmark

Annualised mean excess return (%)
Current        01/88-06/96   328      14424   0              0.1        -6.8
                                              2            -11.3*      -18.2*
Current        06/88-12/90    81       3084   0            -16.5*      -18.3*
                                              2            -29.6*      -31.4*
Pruitt (1992)  06/88-12/90    68       1718   0             nr          29.1*
                                              2             nr           9.3
Pruitt (1992)  01/86-12/90   148       3477   0             nr          26.5*
                                              2             nr           5.2
Pruitt (1988)  01/76-12/85   204       4970   0             28.5*       27.0
                                              2             13.1*       11.6

Proportion of trades profitable
Current        01/88-06/96   328      14424   0             0.56*       0.52
                                              2             0.51        0.47
Current        06/88-12/90    81       3084   0             0.44*       0.43*
                                              2             0.41*       0.40*
Pruitt (1992)  06/88-12/90    68       1718   0             nr          0.76*
                                              2             nr          0.66*
Pruitt (1992)  01/86-12/90   148       3477   0             nr          0.75*
                                              2             nr          0.64*
Pruitt (1988)  01/76-12/85   204       4970   0             0.73*       0.71*
                                              2             0.66*       0.61*

Table 3: Comparison with original Pruitt et al results

Perseverance is less of a difficulty in our objective computer-based

identification of trades. The mean and maximum stock holding

periods in our study were 44 and 374 days respectively compared

with 24 and 102 in the later Pruitt study. We had 17 trades with

long holding periods (i.e. longer than their maximum period). The

total number of security-days for these trades was 3,254, which

represented 23% of the overall total of security-days, so it is possible

that these trades could significantly influence the overall picture.

Whilst the unadjusted returns for the long trades showed that they

were profitable, on average they achieved lower annualised returns

(10.8%) than the remaining normal holding period trades (19.6%).

However, the removal of long trades would leave small (but

insignificant) positive excess returns of +0.7% (market) or slightly less

negative returns of -5.9% (risk-adjusted).

Overall, the fundamental picture remains unchanged. CRISMA fails to

identify trades that give significant positive returns when adjusted for

market movements and risk, even in the absence of transaction costs.

Importance of the individual components of the

CRISMA trading rule

A weakness of the original Pruitt study is that it did not fully disclose

how the CRISMA system was developed; for example, the rationale

for the specific choices of moving average lengths, filter sizes, relative

strength periods and so on. The papers' compensating strengths are

that the returns were adjusted for risk, and testing (certainly in the

second paper) was fully out-of-sample and even subsequent to the

market having knowledge of the system. Ultimately, the continued

success of the system itself can be argued to be sufficient justification.

However, the current study provides an opportunity to investigate the

relative importance of the different components of the system. First,

we identify all potential trades that meet the basic moving average

golden cross component. Then, we observe the proportion of such

trades that are eliminated as a result of the three exceptions to the

system's mechanics (e.g. the price does not rise to the purchase point

within five weeks of the moving average crossover). Last, we identify

the proportion of the remaining trades that are ruled out by the

relative strength (RS) and cumulative volume (CVOL) components

and measure the returns which these excluded trades would have

generated. A number of interesting observations can be made.
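The first step above, finding potential trades from the basic moving average golden cross, can be sketched as follows. This is a minimal illustration in which a golden cross is taken to be the day the shorter moving average moves from at-or-below to above the longer one. The function names and the default window lengths are hypothetical placeholders, not the lengths used in CRISMA.

```python
def moving_average(prices, window):
    """Simple moving average; entry i covers prices[i-window+1 .. i]."""
    out = [None] * len(prices)
    running = 0.0
    for i, p in enumerate(prices):
        running += p
        if i >= window:
            running -= prices[i - window]   # drop the price leaving the window
        if i >= window - 1:
            out[i] = running / window
    return out


def golden_crosses(prices, short=3, long=5):
    """Indices where the short average crosses above the long average."""
    s = moving_average(prices, short)
    l = moving_average(prices, long)
    crosses = []
    for i in range(1, len(prices)):
        if None in (s[i - 1], l[i - 1], s[i], l[i]):
            continue                        # not enough history yet
        if s[i - 1] <= l[i - 1] and s[i] > l[i]:
            crosses.append(i)
    return crosses


prices = [10, 9, 8, 7, 6, 5, 6, 7, 8, 9, 10]
signals = golden_crosses(prices, short=2, long=4)
```

The later filters (the exceptions to the system's mechanics, relative strength and cumulative volume) would then be applied to each index in `signals`; they are not modelled here.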

First, the number of potential trades eliminated as a result of the

exceptions to the system's mechanics is quite large, representing over

50% of the potential trades, indicating that these exceptions are not

quite as rare as suggested in Pruitt et al. (end note 4, 1988).

Second, the proportion of trades that are excluded because of the

failure of the trades to meet the relative strength and cumulative

volume components of the system is fairly small. The RS rule

excludes approximately 15%, and the CVOL rule approximately 26%

of potential trades that meet the basic golden cross condition;

jointly, these two rules exclude approximately 31% of potential

trades. Third, and more important, the profitability of these excluded

trades is positive and actually superior to the trades that are included!

Overall, the effect of excluding trades because they failed to meet

either the RS or CVOL conditions is to reduce the annualised excess

return by 1.2% against the market benchmark. Thus, the benefits of

the multi-component nature of the CRISMA system over a simple

moving average rule are not proven. The proportion of trades that

fail the extra two conditions is relatively small, and the exclusion of

the trades actually reduces the profitability of the system.

Results in the UK market for the period 1 January

1988 through 30 June 1996

Applying the CRISMA system to a sample of 254 FTSE 350 index

constituents produced very similar results to those presented above

for the US market.

It identified 176 trades in 113 companies whose stocks were

assumed held for a total of 7,067 security-days. The mean and

median holding periods were 40 and 32 days respectively, with a

maximum holding period of 152 days and a minimum of 2 days.

Assuming zero transaction costs the CRISMA trading system would

have been significantly profitable with a mean profit per trade of

3.1% and median profit per trade of 7.9%. After allowing for round-

trip transaction costs of up to 2% the system was still profitable,

though not significantly so at the 2% level of costs. Arithmetically

annualised returns (based on a 250 business day year) ranged from

19.3% to 6.9% depending on the level of transaction costs assumed;

for comparison, the mean annual return on the FTSE All Share Index

over the same time period was 14.0%.
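The arithmetic annualisation can be illustrated with the figures quoted above. The article does not give the formula, so the following is an assumed reading: total return summed over all trades, divided by total security-days held, scaled to a 250 business day year. The function name is hypothetical.

```python
def annualised_return(mean_return_per_trade, n_trades, security_days,
                      cost=0.0, days_per_year=250):
    """Arithmetically annualised return in percent.

    Assumption: annualised return = (sum of per-trade returns net of
    round-trip cost) / (total days held) * days_per_year.
    """
    total = (mean_return_per_trade - cost) * n_trades
    return total / security_days * days_per_year


# UK sample: 176 trades, 7,067 security-days, mean profit per trade 3.1%
gross = annualised_return(3.1, 176, 7067)
net = annualised_return(3.1, 176, 7067, cost=2.0)
```

On this reading the zero-cost figure comes out at about 19.3% and the 2% cost figure at a little under 7%, close to the reported range once rounding of the 3.1% input is allowed for.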

Assuming zero transaction costs, annualised excess returns of 2.2%

(market) and -2.9% (risk-adjusted) were observed. For non-zero

levels of transaction costs the excess returns were negative for both

market and risk-adjusted benchmarks (statistically significant for 2%

and 1% transaction costs, respectively). For unadjusted returns, the

proportion of profitable trades was 0.60 for all four transaction cost

levels. For market benchmark returns, the proportions of profitable

trades were 0.57, 0.56, 0.54 and 0.51 for transaction costs of 0%,

0.5%, 1% and 2% respectively, statistically significantly greater than

0.50 for the two lower cost levels. For risk-adjusted returns, 0.51

of trades were profitable at zero transaction costs, but fewer than half

at the other three levels of costs.

Thus, for trades based on UK equity securities, the results are

similarly disappointing to those observed in the US market.

CRISMA applied to UK exchange traded options

Pruitt and White (1989) argued that few market traders actually

purchase equity securities when applying strategies similar to the

CRISMA system. Rather, they seek the higher leverage and profit

potential represented by exchange-traded options. The performance of

such an option strategy was assessed for the UK

market, but was limited by the relatively small number of options

traded at LIFFE. Only 64 trades from the original 176 could be tested

because either there were no options listed on the particular

securities or there were no in-the-money call options available at the

time of the recommendation.

The mean holding period for options was 41 days with maximum

and minimum holding periods of 116 and 5 days. Assuming zero

transaction costs, the mean return per round-trip option trade was

27.7% with 61% of all trades profitable (statistically greater than

0.50). Even in the presence of maximum retail costs (estimated at

approximately £50 per contract, or 11.5% of the mean contract

price), the CRISMA system generated a mean return of 10.2% per

option trade, although with only 55% of trades profitable (not

statistically significantly greater than 0.50). Nevertheless, the

CRISMA system does appear to be able to produce high returns

when used to select options, since a mean return of 10.2% in the

presence of maximum retail transaction costs for an average holding

period of 41 days could not be considered undesirable. The returns

to CRISMA-generated option trades are very similar to those

reported by Pruitt and White (1989, 1992) but the US mean holding

period per trade of 25 days is shorter. The proportion of profitable

trades for the UK is lower than the 68% (64% for maximum retail

costs) reported by Pruitt and White (1992) for the 1986-1990 period.

One important feature of the option-based strategy is that, as with

equities, success depends on achieving a larger proportion of

profitable than unprofitable trades. However, the balance is between

positive and negative returns of about 75% rather than the 9% for

equities. In other words it is a much riskier strategy, so much higher

returns are needed to justify the additional risk.

CONCLUSIONS

The present study back-tested a spreadsheet-based version of the

CRISMA trading system in both the US and the UK markets over the

period 1988 to 1996. In general, the results were somewhat

disappointing. Whilst trades were profitable, on average, once these

were adjusted for market movements and risk they ceased to be so

and transaction costs merely served to worsen the negative excess

returns. The leverage attribute of exchange-traded options tested for

the UK market improved the picture and trades were profitable, on

average, after due allowance for maximum retail transaction costs.

However, this needs to be balanced against the increased risk of

adopting the option strategy.

continues on page 8


Nothing is more critical to technical analysts in their trading than

accurate price information. Inaccurate historical data can result in

invalid back testing results, leading to the development and

implementation of faulty trading systems. Building a solid and

profitable trading system is hard enough for most of us without this

handicap!

One of the underlying themes in the determination of data quality is

whether the quality issues are ones of system error or by way of

definition. As we will see, there are many and varied ways of

calculating a close price. All methods are valid and their accuracy

may depend on the end user's perspective and intended use of the

data.

Whilst I will be primarily focusing on technical analysis in this article,

the effects of data quality extend deep into the finance industry. For

example, Portfolio Valuations, Indexing and Benchmarking rely

heavily on accurate and consistent close prices.

Close prices provide a great starting point in terms of reviewing data

quality issues and consistency questions. As a provider of financial

data globally, we are constantly challenged with the different

variations on close prices defined by global stock exchanges. These

include terms such as end-of-day, last traded, indicative price,

derived price and settlement price. In an attempt to better define

and document the processes used by global exchanges to derive their

close prices, the Financial Information Services Division (FISD) of

the Software and Information Industry Association has weighed in

with their Closing Price Project. For more details on the project, visit

http://www.fisd.net/price

The purpose of this project is to gain transparency and full disclosure

of how close prices are created and calculated by equity exchanges

and processed and displayed by end-of-day price vendors globally.

The focus of this project is not one of dictating the method of

calculation by each exchange and vendor, rather, to define and

document their process so that users of the data will be better able

to understand the origins of the data they are dealing with and any

associated derivations, modifications or assumptions applied to the

same. The team is currently in the process of surveying each

exchange and data vendor to determine this information.

In terms of the exchanges we deal with globally today, many have

published end-of-day price files available for data vendor

redistribution. These files include, at minimum, Open, High, Low,

Close and Volume values. Some examples of these exchanges include

Australia (www.asx.com.au), New York Stock Exchange

(www.nysedata.com) and Singapore (www.ses.com.sg). By publishing

their own, defined files daily, data consistency levels across data

vendors are high.

Other exchanges, including the London Stock Exchange

(www.londonstockexchange.com) supply data vendors with a real

time data feed. The data vendors then on-process this real time feed

to strip an end-of-day file to supply to end users. This can lead to

differing end-of-day prices being distributed by each data vendor

depending on the methodology used to strip the data from the feed.

For example, how does a vendor define the Open price? Is it the first

trade or, by the definition some exchanges use, an average of the

first few minutes' trading? The LSE currently expects to release its

published end-of-day data service by year end.
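The two definitions of the Open price mentioned above can give different values from the same feed, as a small sketch shows. The trade list, the five-minute window and the function names are all hypothetical; real vendors may use other windows, or volume weighting.

```python
def open_first_trade(trades):
    """Open defined as the price of the first trade of the day."""
    return trades[0][1]


def open_average(trades, window_seconds=300):
    """Open defined as the mean price of trades in the first few minutes."""
    start = trades[0][0]
    early = [price for ts, price in trades if ts - start <= window_seconds]
    return sum(early) / len(early)


# (seconds since the first trade, price), sorted by time
trades = [(0, 100.0), (60, 101.0), (240, 102.0), (900, 105.0)]
first = open_first_trade(trades)
averaged = open_average(trades)
```

Here the same set of trades yields an Open of 100.0 under one definition and 101.0 under the other, which is the kind of vendor-to-vendor difference described in the text.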

Also, how do vendors allow for spurious or invalid trades? Some

automatically reject bad or doubtful ticks, based on cleansing

algorithms. In addition, some real time data providers have a team of

data analysts continuously monitoring the data to remove bad ticks

that would throw out end users' charting applications for the rest of

the day. It is the thoroughness and speed of this cleansing process

that really separates data vendors in terms of data quality.
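As a rough illustration of an automatic cleansing rule, the sketch below rejects any tick that deviates from the median of the most recent accepted ticks by more than a set fraction. This is a deliberately simple, assumed rule and not any vendor's actual algorithm; the window, the 10% threshold and the function name are hypothetical.

```python
from statistics import median


def filter_bad_ticks(prices, window=5, max_deviation=0.10):
    """Drop ticks more than max_deviation away from the recent median."""
    clean = []
    for price in prices:
        recent = clean[-window:]            # last few accepted ticks
        if recent:
            ref = median(recent)
            if abs(price - ref) / ref > max_deviation:
                continue                    # reject as a doubtful tick
        clean.append(price)                 # the first tick is always kept
    return clean


# Hypothetical feed with a misplaced decimal point and an extra digit
ticks = [100.0, 100.5, 10.05, 100.2, 99.8, 1002.0, 100.1]
cleaned = filter_bad_ticks(ticks)
```

A rule this crude would also reject a genuine price gap larger than the threshold, which is one reason manual monitoring by data analysts remains part of the process.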

Another key determinant of data quality to look out for is the

application of the appropriate dilution factors (splits and adjustments)

to raw data pricing. The spikes sometimes present in non back-

adjusted data can play havoc with technical analysis indicators. For

example, moving averages and rate of change indicators can and will

provide spurious calculation results that will invalidate back-testing

and market scanning exercises.
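The effect of a missing split adjustment on an indicator can be sketched with a hypothetical 2-for-1 split. The function below back-adjusts by dividing all prices before the split date by the split ratio; the names and the data are illustrative assumptions, and real dilution factors also cover rights issues and other adjustments.

```python
def back_adjust(prices, split_index, ratio):
    """Divide every price before split_index by ratio (2.0 for 2-for-1)."""
    return [p / ratio if i < split_index else p
            for i, p in enumerate(prices)]


def rate_of_change(prices, i):
    """One-period rate of change at index i, in percent."""
    return (prices[i] / prices[i - 1] - 1.0) * 100.0


raw = [100.0, 102.0, 51.5, 52.0]            # split takes effect at index 2
adjusted = back_adjust(raw, split_index=2, ratio=2.0)
raw_roc = rate_of_change(raw, 2)
adjusted_roc = rate_of_change(adjusted, 2)
```

On the raw series the one-day rate of change at the split is close to -50%, whereas on the back-adjusted series it is a gain of about 1%, so a scan or back-test run on the raw data would register a collapse that never happened.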

In summary, the good news for Investors and Traders globally is that

efforts by organizations such as FISD are great steps forward in

driving accurate and consistent pricing globally. By raising the issue

with the global exchanges and working with them to better define

pricing methodologies, transparency will occur.

Rick Klink is the CEO of Paritech

continued from page 7

Further investigation of the multi-component nature of CRISMA

revealed that it is, in large part, a moving average trading rule. The

impact of the cumulative volume and relative strength components

on observed returns is negative; CRISMA would have produced

slightly improved returns had these components of the system been

ignored!

Over a common time period, application of the objective spreadsheet

version of the CRISMA provided disappointing performance

compared with that obtained using the graphical approach by Pruitt

et al. The sensitivity of the results to various aspects of the model

was investigated but no major individual impacts were identified.

However, it is possible that a set of minor differences in the

application of the model, taken together, could account for the

observed differential performance.

Overall, the results suggest that, at best, the success of the trading

system is extremely sensitive to the exact method of application.

Alan Goodacre is Senior Lecturer in Accounting and Finance at the

University of Stirling and can be contacted by e-mail:

Alan.Goodacre@stir.ac.uk

Further details of the research can be found in the two academic

papers in Applied Financial Economics listed in the references.

Additional information about Applied Financial Economics is

available on the journal publisher's web site:

http://www.tandf.co.uk

REFERENCES

Goodacre, A., Bosher, J., and Dove, A. (1999), 'Testing the CRISMA Trading System: Evidence from the UK Market', Applied Financial Economics, 9, 455-468.

Goodacre, A. and Kohn-Speyer, T.K. (2001), 'CRISMA revisited', Applied Financial Economics, 11, 221-230.

Kan, R. and Kirikos, G. (1995), 'Biases in Evaluating Trading Strategies', Working Paper, University of Toronto.

Pruitt, S.W. and White, R.E. (1988), 'The CRISMA Trading System: Who Says Technical Analysis Can't Beat the Market?', Journal of Portfolio Management, 14, Spring, 55-58.

Pruitt, S.W. and White, R.E. (1989), 'Exchange-Traded Options and CRISMA Trading', Journal of Portfolio Management, 15, Summer, 55-56.

Pruitt, S.W., Tse, K.S.M. and White, R.E. (1992), 'The CRISMA Trading System: The Next Five Years', Journal of Portfolio Management, 18, Spring, 22-25.

Is the close price really the closing price?

By Rick Klink


Last year stock markets reached unprecedented levels, with

extremely high Price-Earnings multiples and high price volatilities. In

the technology sector share prices of firms that had never posted any

earnings reached extraordinarily high levels. The rise of the New

Economy and the emergence of the biotechnology sector raised

fundamental questions regarding the proper valuation of companies

operating in these sectors. The levels of volatility last year threw

into doubt conventional valuation methodologies. It remained to be

seen whether market valuations were being driven by expectations

of future earnings or by irrationality and overconfidence.

In November, the Financial Markets Group of The London School of

Economics, with the support of UBS Warburg, organised a

conference on 'Market Rationality and the Valuation of Technology

Stocks' to analyse key issues, including valuation of firms that

exhibit no earnings and have limited history, aspects of trading

overconfidence in competitive securities markets and market

efficiency in the new environment.

The first session of the Conference was chaired by Hyun Song Shin

(FMG/CEP/LSE) and dealt with 'Market Efficiency in the New

Environment'. The conference opened with Sunil Sharma

(International Monetary Fund), who presented the paper entitled

'Herd Behaviour in Financial Markets: A Review', co-authored with

Sushil Bhikchandani (Anderson Graduate School of Management,

UCLA).

Motivated by the growing literature in this area, Sharma looked at

the causes for and empirical evidence of herding with particular

emphasis on the Lakonishok-Shleifer-Vishny (LSV) measure and the

so-called learning among analysts. He surveyed the literature

corresponding to the three main causes of herding: (a) information-

based, (b) reputation-based, and (c) compensation-based.

Information-based herding arises when analysts are uncertain

about the costs and benefits of their actions and so rely on the

observable behaviour of other analysts rather than on their own

information set. The information inferred from the actions of

predecessors overwhelms the individual's private set of information.

Reputation-based herding models focus on the idea that fund

managers, unsure of their own abilities and those of their competitors

and concerned about the loss of reputation that may result as their

performances are compared to their peers, will end up imitating each

other. Finally compensation-based herding relies on the idea that

investment managers are compensated on the basis of their

performance relative to a chosen benchmark. If investment managers

are very risk averse, then they will have a tendency to imitate the

benchmark.

Sharma claimed that the empirical evidence of these issues is weak

given that the literature does not examine or test particular models of

herding. Most papers use purely statistical approaches, based on the

idea that the analysis may be picking up common responses to

publicly available information.

The most commonly used measure of herding is the LSV measure,

which defines herding as the average tendency of a group of money

managers to buy (sell) particular stocks at the same time relative to

what could be expected if they traded independently. Using this

measure there is not much evidence of herding in developed markets

but it tends to be greater in small and growth firms and is correlated

with managers' use of momentum strategies. The analysis for

emerging markets, although it tends to be less econometrically

robust, indicates that herding behaviour forms much more easily

there than in larger and more developed markets.

Increased herding is also associated with greater return volatility.
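The verbal definition of the LSV measure above can be turned into a small calculation. The article gives no formula, so the sketch below follows the commonly cited formulation as an assumption: the absolute gap between the share of managers buying a stock and the expected buying share p, less the value that gap would be expected to take if managers traded independently (a binomial adjustment factor). The function and parameter names are hypothetical.

```python
from math import comb


def lsv_herding(buyers, sellers, p):
    """LSV-style herding measure for one stock in one period.

    buyers, sellers: number of managers buying / selling the stock.
    p: expected proportion of buyers (e.g. the period-wide average).
    """
    n = buyers + sellers
    raw = abs(buyers / n - p)
    # Expected |k/n - p| when each of n managers buys independently
    # with probability p.
    adjustment = sum(
        comb(n, k) * p**k * (1 - p)**(n - k) * abs(k / n - p)
        for k in range(n + 1)
    )
    return raw - adjustment


one_sided = lsv_herding(buyers=10, sellers=0, p=0.5)
balanced = lsv_herding(buyers=5, sellers=5, p=0.5)
```

Ten managers all buying gives a clearly positive value, while an even split gives a slightly negative one. In practice the measure is averaged over many stocks and periods, which this sketch does not do.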

Evidence on herding amongst investment analysts, collected by

Graham (1999), indicates that the likelihood that an analyst follows a

herding strategy is decreasing in his ability, increasing in the

reputation of the analyst's newsletter, the strength with which prior

information is held and the correlation of informative signals. Other

literature finds that conditional on performance, inexperienced

analysts are more likely to herd than their older colleagues. Welch

(1999) shows that herding tends to be in the direction of the

prevailing consensus and the two most recent revisions. Such trading

behaviour is stronger in market up-turns than down-turns.

Sharma concluded that it is important to distinguish between

endogenous interactions, contextual decisions and correlated effects

because of the implications for public policy. There is a need for

better characterizations of interactions in terms of preferences,

expectations, constraints and equilibrium. Finally there is tremendous

need for better and more robust data to facilitate credible inference.

The discussant of this paper, Leonardo Felli (LSE/FMG), focused on

the question of how powerful career-concern herding is. He indicated

that comparing the behaviour of direct investors with that of delegated

investment managers might shed more light on the identification of

reputation-based herding.

The second paper of the session was presented by Kent Daniel

(Kellogg School of Management, Northwestern University). The

paper, co-authored with Sheridan Titman (College of Business

Administration, University of Texas), was entitled 'Market Efficiency

in an Irrational World'.

Daniel explained the predictability of prices by examining the over-

reaction effect in the long term based on the distinction between

tangible and intangible information. Tangible information refers to

accounting measures that are indicative of the firm's growth obtained

from its fundamentals; whereas intangible information is accountable

for the part of a security's past returns that cannot be linked directly

to accounting numbers, but which may reflect expectations about

the future cash flows.

The predictability is broken down into three possible sources: (a)

extrapolation bias (over-reaction to tangible information, as measured

by the book-to-market ratio); (b) over-reaction to intangible

information about future growth, and (c) pure noise (price

movements unrelated to future cash flows).

While finding no evidence to support the first effect, Daniel found

that over-reaction to intangible information is a significant factor in

explaining predictability. This is related to the observation that people

tend to be overconfident about vague, non-specific information.

The paper was discussed by George Bulkley (University of Exeter),

who questioned the strength of the results. He claimed that,

although the decomposition is interesting, there is difficulty in

determining whether there is support for any particular model. He

claimed that the results presented in the paper were consistent with

any model which generates mispricing measured by the book-to-

market ratio. However, he admitted that there is substantial

econometric difficulty in testing these models given the continuously

overlapping layers of information in financial data and the lack of

discriminating tests.

Countering Bulkley's comments, Daniel argued that the aim of the

paper is to explain long run returns and he did not want to

contaminate the results with effects from the post-earnings

announcement drift.

The second session, covering 'Overconfidence in the Stock Market',

was chaired by Sudipto Bhattacharya (FMG/LSE) and started with

the presentation of a paper entitled 'Learning to be Over Confident'

by Simon Gervais (Wharton School, University of Pennsylvania).

Gervais developed a multi-period market model describing both the

process by which traders learn about their ability and how a bias in

this learning can create overconfident traders. The principal goal of

this work was to demonstrate that a simple and prevalent bias in

evaluating a trader's personal performance is sufficient to create

markets in which investors are, on average, overconfident.

Overconfidence in markets


In the model, traders initially do not know their ability. They learn

about their ability through experience. Traders who successfully

forecast next period dividends improperly update their beliefs; they

overweight the possibility that their success was due to superior

ability. In so doing they become overconfident.
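The biased updating described above can be illustrated with a toy calculation. This is not the model from the paper: it simply assumes two ability types with hypothetical success probabilities and a bias parameter gamma that inflates the weight given to 'high ability' after a success, with gamma = 1 recovering ordinary Bayesian updating. All names and numbers are illustrative.

```python
def update_belief(prior, success, p_high=0.7, p_low=0.5, gamma=1.0):
    """Posterior probability of being high-ability after one forecast.

    gamma = 1.0 is Bayes' rule; gamma > 1.0 overweights successes.
    """
    if success:
        num = gamma * p_high * prior
        den = num + p_low * (1.0 - prior)
    else:
        num = (1.0 - p_high) * prior
        den = num + (1.0 - p_low) * (1.0 - prior)
    return num / den


rational = update_belief(0.5, success=True)
biased = update_belief(0.5, success=True, gamma=2.0)
```

After the same successful forecast the biased trader ends up with a higher belief in his own ability than the rational one, which is the sense in which a run of early successes can produce overconfidence.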

A trader's level of overconfidence changes dynamically with his

successes and failures. A trader is not overconfident when he begins

to trade. Ex-ante, his expected overconfidence increases over his first

trading periods and then declines. Thus, a trader is likely to have

greater overconfidence in the early part of his career.

After this stage of his career, he tends to develop a progressively

more realistic assessment of his abilities as he ages. Overconfidence

does not make traders wealthy, but the process of becoming wealthy

can make traders overconfident. Gervais concentrated on the

dynamics by which self-serving attribution bias engenders

overconfidence in traders, and not on the joint distribution of trader

ability and the risky security's final payoff. In this model,

overconfidence is determined endogenously and changes dynamically

over a trader's life. This enables predictions about when a trader is

most likely to be overconfident (when he is inexperienced and

successful) and how overconfidence will change during a trader's life

(it will, on average, rise early in a trader's career and then gradually

fall).

The model predicts that overconfident traders will increase their

trading volume and thereby lower their expected profits. To the

extent that trading is motivated by overconfidence, higher trading

will correlate with lower profits. Volatility increases with a trader's

number of past successes. Both volume and volatility increase with

the degree of a trader's learning bias.

The model has implications for changing market conditions. For

example, most equity market participants have long positions and

benefit from upward price movements. Therefore it is expected that

aggregate overconfidence will be higher after market gains and lower

after market losses. Since greater overconfidence leads to greater

trading volume, this suggests that trading volume will be greater

after market gains and lower after market losses.

David de Meza (Exeter University/Interdisciplinary Institute of

Management, LSE), the discussant for the paper, commented that he

found the results useful and interesting. He pointed out that the

paper makes a distinction between 'illusion of validity' and

'unrealistic optimism' (understood as forecast error). He also

emphasized the mechanics of overconfidence by which insiders

overestimate their forecasting ability in the light of accurate forecasts.

De Meza finished by agreeing that overconfidence leads to an

increase of trading volume. However he pointed out that it is not

clear why market confidence should fluctuate over time. He

mentioned, in addition, that correlated signals might also mean that

errors are correlated so there was a need for aggregated tests.

The session concluded with the presentation of a paper by Avanidhar

Subrahmanyam (UCLA), entitled "Covariance Risk, Mispricing and

the Cross Section of Security Returns". The focus of the presentation

was a model in which asset prices reflect both covariance risk and

misperceptions of firms' prospects, and in which arbitrageurs trade

against mispricing.

Subrahmanyam examined how the cross-section of expected security

returns is related to risk and investor mis-valuation. He provided a

model of equilibrium asset pricing in which risk-averse investors use

their information incorrectly in selecting their portfolio. The model

was applied to address the ability of risk measures versus mis-pricing

measures to predict security returns; the design trade-offs among

alternative proxies for mis-pricing; the relation of volume to

subsequent volatility; and whether the mis-pricing equilibrium can

withstand the activities of smart arbitrageurs.

The presenter stated that many empirical studies show that the cross

section of stock returns can be forecast using not only standard risk

measures, such as beta, but also market value or fundamental/price

ratios such as dividend yields or book-to-market ratios. The

interpretation of these forecasting regressions is controversial,

because these price-containing variables can be interpreted as proxies

for either risk or mis-valuation.

Subrahmanyam also pointed out that, so far, this debate has been

pursued without an explicit theoretical model. This paper presents an

analysis of how well, in this situation, beta and fundamental/price

ratios predict the cross-section of security returns.

The model implies that even when covariance risk is priced,

fundamental-scaled price measures can be better forecasters of

future returns than covariance risk measures such as beta. Intuitively,

the reason that fundamental/price ratios have incremental power to

predict returns is that a high fundamental/price ratio (e.g. high book-

to-market ratios) can arise from high risk and/or overreaction to an

adverse signal. If price is low due to a risk premium, on average it

rises back to the unconditional expected terminal cash flow. If

there is an overreaction to genuine adverse information, then the

price will on average recover only in part. Since high book-to-market

reflects both mispricing and risk, whereas beta reflects only risk,

book-to-market can be a better predictor of returns.

Subrahmanyam concluded with an examination of the profitability of

trading by arbitrageurs and overconfident individuals. He further

mentioned that it would be interesting to extend the approach to

address the issues of market segmentation and closed-end fund

discounts.

Sudipto Bhattacharya (FMG/LSE), the discussant for this paper,

pointed out that the model presented in the paper introduces

investor psychology to the theory of capital asset pricing by integrating

risk measures (CAPM) with overconfidence (mispricing). He asked

whether the misvaluation effects identified in the model could persist

in the long run and hypothesised that investors may become

overconfident about a theory of how the stock market functions

rather than about the realization of a signal.

Bhattacharya further commented that it would also be interesting to

investigate the correlation between signals and future returns as well

as the implication of univariate equilibrium conditions. He was

excited about the further research suggested by Subrahmanyam,

especially regarding how different groups of investors fasten their

overconfidence upon one analysis versus another, and how the social

process of theory adoption influences security prices.

The third session dealt with "Analysts' Forecasts and Pricing in the

New Economy" and was chaired by Bob Nobay (FMG/LSE). Peter

David Wysocki (University of Michigan) presented a joint paper with

Scott A Richardson (also University of Michigan Business School) and

Siew Hong Teoh (Ohio State University) on "The Walkdown to

Beatable Analyst Forecasts: The Role of Equity Issuance and Insider

Trading Incentives".

Motivated by the observation that in the last couple of years,

earnings announcements that exceed analysts' forecasts and

subsequent jumps in share prices have become more frequent,

Wysocki asked whether this fact can be statistically explained, and

whether there is a plausible story to be told for why this should

happen.

In this context, he concentrated on the question of whether firms

play an earnings-guidance game where managers release

information selectively to bolster the share price, thereby producing

some benefit either directly for themselves or for the future equity

issuance programmes of the firm.

Comparing US companies in the 1980s to the 1990s, he observed

that there has been a trend towards stock-based compensation that

should give managers incentives to influence the stock price. In

addition, he alluded to mainly firm-initiated rules on insider trading,

restricting it to time periods immediately after earnings

announcements; the argument here apparently being that in these

periods, there would be less insider information to trade on.

Furthermore, changes in US rules on insider trading initiated by

regulators in May 1991 imply that managers now have a more

precise target date for when to exercise their stock options. Given

these structural changes in the 1990s, Wysocki argued that there are

now higher incentives to achieve beatable forecasts. One would also

expect a higher likelihood of observing pessimistic forecasts for firms

with greater needs to sell stock after an earnings announcement:

firms that want to issue stock in the future have an incentive to

generate a greater number of positive earnings surprises.

The paper calculates ex-post forecast errors from the Institutional

Brokers Estimate System (I/B/E/S), which contains individual

analysts' monthly forecasts from 1983 to 1998. The short-term

errors (e.g. one month prior to the earnings announcement) are then

regressed on sub-period dummies, controlling for various other

variables, to determine whether there has been a shift in pessimism.
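
As a rough sketch of the dummy-variable approach described above, the snippet below regresses forecast errors on a single sub-period dummy. The numbers are made up for illustration and are not taken from the paper or from I/B/E/S. The error definition (forecast minus actual earnings, so that a pessimistic forecast gives a negative error) is an assumption, and the control variables are omitted.

```python
from statistics import mean


def ols_intercept_slope(x, y):
    """Ordinary least squares for y = a + b * x with a single regressor."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b


# Made-up forecast errors (forecast minus actual EPS), NOT data from the paper.
errors = [0.04, 0.02, 0.03, 0.01, -0.01, -0.02, 0.00, -0.01]
# Dummy: 0 = earlier sub-period, 1 = later sub-period (hypothetical split).
later = [0, 0, 0, 0, 1, 1, 1, 1]

# The intercept is the mean error in the earlier sub-period; the coefficient
# on the dummy is the shift in the mean error between the two sub-periods.
intercept, shift = ols_intercept_slope(later, errors)
```

With a single dummy and no controls, the dummy coefficient is simply the difference between the two sub-period means. A negative coefficient would correspond to a shift towards pessimistic forecasts under the sign convention assumed here.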

The results suggest that there has indeed been a shift towards small

negative forecast errors in the 1990s. Further, from a cross-section

regression, Wysocki found that the effect is indeed stronger for firms

that issue equity after the earnings announcement, and/or where

insiders sell after the announcement.

From this evidence, he concluded that institutional changes have

made managers more likely to behave opportunistically and guide

analysts' expectations through earnings announcements in order to

facilitate favourable insider trades.

In his discussion of the presentation, Pascal Frantz (LSE) reviewed the

main features of the paper, and suggested that there may be several

other reasons why forecasts are systematically pessimistic. He also

proposed examining whether the magnitudes of differences of

observed share price movements were economically significant, and

whether they actually allow non-negligible profits to be made.

Next, Enrico Perotti (University of Amsterdam) presented joint work

with Sylvia Rossetto (University of Amsterdam), entitled "The Pricing

of Internet Stock Portals as Platforms of Strategic Entry Options".

The main motivation behind the presentation was to show how one

could calculate valuations for companies that have a significant

proportion of their value tied up in growth opportunities.

Perotti presented a model based on the concepts of strategic real

options and platform investment. Platform investment is the creation

of an innovative distribution and production infrastructure, which

increases access to customers and as a result reduces entry costs in

related products. Examples include the development of a software

operating system or an internet portal web page. Having a platform

technology confers strategic pre-emptive advantages, creating a set

of entry options into uncertain markets.

In the model, two firms compete as parallel monopolies in a market

with two differentiated products, and contemplate cross-entry into

the other firm's market. Demand in the market evolves stochastically

over time and follows a geometric Brownian motion. In the absence

of strategic considerations, the standard argument applies: there is a

positive value to waiting due to the fact that profits are convex in

demand. Here, however, strategic interaction creates a first-mover

advantage. In the model, there is a tension between these two

effects. Asymmetry is introduced by giving one firm the platform

technology, essentially lower costs of cross-entry, and the other one

conventional technology.
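
To make the demand process concrete, the following sketch simulates a geometric Brownian motion and records when demand first reaches a given level. It is only an illustration: the drift, volatility and threshold values are hypothetical, and the idea that a firm enters once demand crosses a threshold is a common real-options simplification assumed here, not a result taken from the paper.

```python
import math
import random


def simulate_gbm(d0, mu, sigma, horizon, steps, rng):
    """Simulate one path of dD = mu * D dt + sigma * D dW with exact log-normal steps."""
    dt = horizon / steps
    path = [d0]
    for _ in range(steps):
        z = rng.gauss(0.0, 1.0)
        growth = math.exp((mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
        path.append(path[-1] * growth)
    return path


def first_crossing(path, threshold):
    """Index of the first step at which demand reaches the threshold, or None."""
    for i, level in enumerate(path):
        if level >= threshold:
            return i
    return None


rng = random.Random(42)  # fixed seed so the path is reproducible
demand = simulate_gbm(d0=1.0, mu=0.05, sigma=0.2, horizon=10.0, steps=120, rng=rng)

# Hypothetical entry thresholds: the firm with lower cross-entry costs is
# assumed to act at a lower level of demand than the conventional firm.
platform_entry = first_crossing(demand, threshold=1.3)
conventional_entry = first_crossing(demand, threshold=1.8)
```

Because the platform threshold is the lower of the two, the platform firm never enters later than the conventional firm on any simulated path, and on some paths neither threshold is reached at all.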

Depending on parameter values, there are three different possible

outcomes: (a) there is no cross entry, (b) the platform firm cross-

enters, but the conventional firm does not follow, producing a de

facto monopolist, or (c) the platform firm leads and the conventional

firm follows. In the latter two cases, the platform firm will derive an

advantage from its technology. The central result that emerges is one