Documenti di Didattica

Documenti di Professioni

Documenti di Cultura

ML cd −y+ (1−θ) z

Caricato da

ThinhDescrizione originale:

Titolo originale

Copyright

Formati disponibili

Condividi questo documento

Condividi o incorpora il documento

Hai trovato utile questo documento?

Questo contenuto è inappropriato?

Segnala questo documentoCopyright:

Formati disponibili

ML cd −y+ (1−θ) z

Caricato da

ThinhCopyright:

Formati disponibili

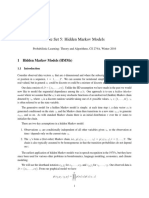

ECE 534 RANDOM PROCESSES FALL 2011

PROBLEM SET 5 Due Tuesday, November 1

5. Two faces of Markov processes: inference and dynamics

Assigned Reading: Sections 4.9 and 4.10, Chapter 5, and Chapter 6 through section 8.

Problems to be handed in:

1 Estimation of the parameter of an exponential in additive exponential noise. Suppose an observation Y has the form Y = Z + N, where Z and N are independent, Z has the exponential distribution with parameter θ, N has the exponential distribution with parameter one, and θ > 0 is an unknown parameter. We consider two approaches to finding $\hat{\theta}_{ML}(y)$.

(a) Show that

$$f_{cd}(y, z|\theta) = \begin{cases} \theta e^{-y+(1-\theta)z} & 0 \le z \le y \\ 0 & \text{else} \end{cases}$$

(b) Find $f(y|\theta)$. The direct approach to finding $\hat{\theta}_{ML}(y)$ is to maximize $f(y|\theta)$ (or its log) with respect to θ. You needn't attempt the maximization.

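(c) Derive the EM algorithm for finding $\hat{\theta}_{ML}(y)$. You may express your answer in terms of the function φ defined by:

$$\phi(y,\theta) = E[Z|y,\theta] = \begin{cases} \dfrac{1}{\theta-1} - \dfrac{y}{\exp((\theta-1)y)-1} & \theta \neq 1 \\ \dfrac{y}{2} & \theta = 1 \end{cases}$$

You needn't implement the algorithm.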
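A numerical sketch of Problem 1, under stated assumptions. Integrating the density from part (a) over $0 \le z \le y$ gives (our own working, not a posted solution) $f(y|\theta) = \theta(e^{-\theta y} - e^{-y})/(1-\theta)$ for $\theta \neq 1$ and $y e^{-y}$ for $\theta = 1$. For part (c), the E-step gives $Q(\theta|\theta^{(k)}) = \ln\theta - y + (1-\theta)\phi(y,\theta^{(k)})$, and setting $1/\theta - \phi(y,\theta^{(k)}) = 0$ suggests the update $\theta^{(k+1)} = 1/\phi(y,\theta^{(k)})$. The Python below checks the closed form against a midpoint-rule integral and iterates that update; the function names, starting point, and tolerances are illustrative choices.

```python
import math

def f_cd(y, z, theta):
    """Complete-data density f_cd(y, z | theta) from part (a)."""
    return theta * math.exp(-y + (1.0 - theta) * z) if 0.0 <= z <= y else 0.0

def f_y(y, theta):
    """f(y | theta): our closed form for the integral of f_cd(y, z | theta) over z."""
    if theta == 1.0:
        return y * math.exp(-y)
    return theta * (math.exp(-theta * y) - math.exp(-y)) / (1.0 - theta)

def f_y_numeric(y, theta, steps=100_000):
    """Midpoint-rule approximation of the same integral, as a check on f_y."""
    h = y / steps
    return h * sum(f_cd(y, (k + 0.5) * h, theta) for k in range(steps))

def phi(y, theta):
    """phi(y, theta) = E[Z | y, theta] as defined in part (c)."""
    a = (theta - 1.0) * y
    if abs(a) < 1e-3:
        # Series y * (1/2 - a/12 + a^3/720) avoids cancellation near theta = 1
        return y * (0.5 - a / 12.0 + a ** 3 / 720.0)
    return 1.0 / (theta - 1.0) - y / math.expm1(a)

def em_theta(y, theta0=1.0, iters=500, tol=1e-12):
    """Iterate the EM update theta <- 1 / phi(y, theta) until it stops moving."""
    theta = theta0
    for _ in range(iters):
        new_theta = 1.0 / phi(y, theta)  # M-step: maximizes log(theta) - theta * phi
        if abs(new_theta - theta) < tol:
            return new_theta
        theta = new_theta
    return theta

for y in (0.5, 1.5, 2.0, 4.0):
    t = em_theta(y)
    print(f"y = {y}: EM estimate {t:.6f}, f(y | theta) = {f_y(y, t):.6f}")
```

The series branch in `phi` is a numerical convenience of this sketch: the exact formula subtracts two nearly equal terms when θ is close to 1.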

2 Maximum likelihood estimation for HMMs. Consider an HMM with unobserved data $Z = (Z_1, \ldots, Z_T)$, observed data $Y = (Y_1, \ldots, Y_T)$, and parameter vector $\theta = (\pi, A, B)$. Explain how the forward-backward algorithm or the Viterbi algorithm can be used or modified to compute the following:

(a) The ML estimator, $\hat{Z}_{ML}$, of Z based on Y, assuming any initial state and any transitions $i \to j$ are possible for Z. (Hint: Your answer should not depend on π or A.)

(b) The ML estimator, $\hat{Z}_{ML}$, of Z based on Y, subject to the constraint that $\hat{Z}_{ML}$ takes values in the set $\{z : P\{Z = z\} > 0\}$. (Hint: Your answer should depend on π and A only through which coordinates of π and A are nonzero.)

(c) The ML estimator, $\hat{Z}_{1,ML}$, of $Z_1$ based on Y.

(d) The ML estimator, $\hat{Z}_{t_o,ML}$, of $Z_{t_o}$ based on Y, for some fixed $t_o$ with $1 \le t_o \le T$.
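For reference, here is a minimal log-domain sketch of the standard Viterbi recursion for an HMM with parameter $\theta = (\pi, A, B)$, the building block the parts above ask you to reuse or modify. It assumes states and observation symbols are labeled 0, 1, ... and that time starts at 0 (conventions of this sketch only), and the toy parameters are made up. Zero probabilities are mapped to $-\infty$, so a path using some $\pi_i = 0$ or $a_{i,j} = 0$ is never selected.

```python
import math

def safe_log(p):
    """Natural log with log(0) = -inf, so zero-probability moves are never chosen."""
    return math.log(p) if p > 0 else -math.inf

def viterbi(pi, A, B, y):
    """Return (z_hat, log P(Z = z_hat, Y = y)) where z_hat maximizes P(Z = z, Y = y).

    pi[i] = P(Z_0 = i), A[i][j] = P(Z_{t+1} = j | Z_t = i), B[i][l] = P(Y_t = l | Z_t = i).
    """
    n = len(pi)
    # delta[i]: best log-probability of any state path ending in state i at the current time
    delta = [safe_log(pi[i]) + safe_log(B[i][y[0]]) for i in range(n)]
    back = []  # back[t][j]: best predecessor of state j at time t + 1
    for obs in y[1:]:
        prev = [max(range(n), key=lambda i: delta[i] + safe_log(A[i][j])) for j in range(n)]
        delta = [delta[prev[j]] + safe_log(A[prev[j]][j]) + safe_log(B[j][obs])
                 for j in range(n)]
        back.append(prev)
    # Trace the best path backward from the most likely final state
    state = max(range(n), key=lambda i: delta[i])
    path = [state]
    for prev in reversed(back):
        state = prev[state]
        path.append(state)
    return path[::-1], max(delta)

# Toy two-state model with binary observations (made-up numbers)
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
print(viterbi(pi, A, B, [0, 0, 1, 1, 0]))
```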

3 Estimation of a critical transition time of hidden state in HMM. Consider an HMM with unobserved data $Z = (Z_1, \ldots, Z_T)$, observed data $Y = (Y_1, \ldots, Y_T)$, and parameter vector $\theta = (\pi, A, B)$. Let $F \subset \{1, \ldots, N_s\}$ and let $\tau_F$ be the first time t such that $Z_t \in F$, with the convention that $\tau_F = T + 1$ if ($Z_t \notin F$ for $1 \le t \le T$).

(a) Describe how to find the conditional distribution of $\tau_F$ given Y, under the added assumption that ($a_{i,j} = 0$ for all $(i, j)$ such that $i \in F$ and $j \notin F$), i.e. under the assumption that F is an absorbing set for Z.

(b) Describe how to find the conditional distribution of $\tau_F$ given Y, without the added assumption made in part (a).
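Problem 3 can be approached with the forward-backward recursions. The sketch below is the standard unscaled version for a toy HMM with made-up parameters: it returns the forward variables $\alpha_t(i) = P(Y_1 = y_1, \ldots, Y_t = y_t, Z_t = i)$, the backward variables $\beta_t(i) = P(Y_{t+1} = y_{t+1}, \ldots, Y_T = y_T | Z_t = i)$, and the posteriors $\gamma_t(i) = P(Z_t = i | Y = y)$, with time shifted to start at 0 in the code. It does not compute the distribution of $\tau_F$ by itself, and unscaled recursions underflow for long sequences, so a practical version would normalize at each step.

```python
def forward_backward(pi, A, B, y):
    """Unscaled forward-backward pass for an HMM (pi, A, B) and observations y.

    Returns (alpha, beta, gamma, likelihood) with 0-based time t = 0..T-1:
      alpha[t][i] = P(Y_0..Y_t = y_0..y_t, Z_t = i)
      beta[t][i]  = P(Y_{t+1}..Y_{T-1} = y_{t+1}..y_{T-1} | Z_t = i)
      gamma[t][i] = P(Z_t = i | Y = y)
    """
    n, T = len(pi), len(y)
    # Forward pass: alpha_{t}(j) = sum_i alpha_{t-1}(i) a_{ij} b_j(y_t)
    alpha = [[pi[i] * B[i][y[0]] for i in range(n)]]
    for t in range(1, T):
        alpha.append([sum(alpha[-1][i] * A[i][j] for i in range(n)) * B[j][y[t]]
                      for j in range(n)])
    # Backward pass: beta_t(i) = sum_j a_{ij} b_j(y_{t+1}) beta_{t+1}(j)
    beta = [[1.0] * n]
    for t in range(T - 2, -1, -1):
        beta.insert(0, [sum(A[i][j] * B[j][y[t + 1]] * beta[0][j] for j in range(n))
                        for i in range(n)])
    likelihood = sum(alpha[-1])  # P(Y = y)
    gamma = [[alpha[t][i] * beta[t][i] / likelihood for i in range(n)] for t in range(T)]
    return alpha, beta, gamma, likelihood

# Toy two-state model with binary observations (made-up numbers)
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
alpha, beta, gamma, lik = forward_backward(pi, A, B, [0, 1, 1, 0])
print("P(Y = y) =", lik)
for t, row in enumerate(gamma):
    print(t, [round(p, 4) for p in row])
```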


4 On distributions of three discrete-time Markov processes. For each of the Markov processes with indicated one-step transition probability diagrams, determine the set of equilibrium distributions and whether $\lim_{t \to \infty} \pi_n(t)$ exists for all choices of the initial distribution, $\pi(0)$, and all states n.

[Figure: one-step transition probability diagrams for processes (a), (b), and (c), with states labeled 0, 1, 2, 3, ...]
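Because the diagrams are not reproduced here, the sketch below uses two hypothetical two-state chains (not the chains (a) to (c)) to illustrate both questions. It solves $\pi P = \pi$, $\sum_n \pi_n = 1$ exactly by Gauss-Jordan elimination over fractions, assuming the solution is unique, and then tracks $\pi(t) = \pi(0)P^t$. For the period-2 chain, $\pi(t)$ oscillates forever from $\pi(0) = (1, 0)$ even though an equilibrium distribution exists; for the aperiodic chain it converges to the equilibrium.

```python
from fractions import Fraction

def equilibrium(P):
    """Solve pi P = pi with sum(pi) = 1 exactly; assumes the solution is unique."""
    n = len(P)
    # One balance equation per column j (the last one is redundant), plus normalization.
    rows = [[Fraction(P[i][j]) - (1 if i == j else 0) for i in range(n)] + [Fraction(0)]
            for j in range(n - 1)]
    rows.append([Fraction(1)] * n + [Fraction(1)])
    # Gauss-Jordan elimination on the augmented system
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        scale = rows[col][col]
        rows[col] = [v / scale for v in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[i][n] for i in range(n)]

def step(dist, P):
    """One step of the distribution recursion pi(t + 1) = pi(t) P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

# Hypothetical chains for illustration (not the chains in the figure)
periodic = [[0, 1], [1, 0]]
aperiodic = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 4), Fraction(3, 4)]]

for name, P in (("periodic", periodic), ("aperiodic", aperiodic)):
    dist, history = [Fraction(1), Fraction(0)], []  # pi(0) = (1, 0)
    for t in range(6):
        history.append(float(dist[0]))
        dist = step(dist, P)
    print(name, "equilibrium:", equilibrium(P), "pi_0(t), t = 0..5:", history)
```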

5 On distributions of three continuous-time Markov processes. For each of the Markov processes with indicated transition rate diagrams, determine the set of equilibrium distributions and whether $\lim_{t \to \infty} \pi_n(t)$ exists for all choices of the initial distribution, $\pi(0)$, and all states n. (The answer for (c) may depend on the value of c; assume $c \ge 0$.)


[Figure: transition rate diagrams for processes (a), (b), and (c), with states labeled 0, 1, 2, 3, ...; the rates in (c) include 1+c, 1+c/2, 1+c/3, 1+c/4.]
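If a diagram is a birth-death process on $\{0, 1, 2, \ldots\}$ with positive birth rates $\lambda_n$ (from n to n+1) and death rates $\mu_n$ (from n to $n-1$), the balance equations reduce to $\pi_n = \pi_0 \prod_{k=1}^{n} \lambda_{k-1}/\mu_k$, and an equilibrium distribution exists exactly when these products have a finite sum. Whether each diagram above has this form is an assumption, since the diagrams are not reproduced here. The sketch below computes the products and their partial sums for two hypothetical rate choices; partial sums only hint at convergence and are no substitute for a proof.

```python
def birth_death_weights(birth, death, n_max):
    """w_n = prod_{k=1}^{n} birth(k - 1) / death(k) for n = 0..n_max (w_0 = 1)."""
    w = [1.0]
    for k in range(1, n_max + 1):
        w.append(w[-1] * birth(k - 1) / death(k))
    return w

def truncated_equilibrium(birth, death, n_max):
    """Normalize w_0..w_{n_max}; returns (probabilities, partial sum of the weights)."""
    w = birth_death_weights(birth, death, n_max)
    total = sum(w)
    return [x / total for x in w], total

# Hypothetical rate functions n -> rate (not read from the figure)
examples = {
    "birth 1, death 2": (lambda n: 1.0, lambda n: 2.0),  # w_n = 2**-n, summable
    "birth 1, death 1": (lambda n: 1.0, lambda n: 1.0),  # w_n = 1, not summable
}
for name, (birth, death) in examples.items():
    for n_max in (10, 100, 1000):
        _, total = truncated_equilibrium(birth, death, n_max)
        print(f"{name}: sum of w_0..w_{n_max} = {total:.6g}")
```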

6 Generating a random spanning tree. Let G = (V, E) be an undirected, connected graph with n vertices and m edges (so |V| = n and |E| = m). Suppose that $m \ge n$, so the graph has at least one cycle. A spanning tree of G is a subset T of E with cardinality $n - 1$ and no cycles. Let S denote the set of all spanning trees of G. We shall consider a Markov process with state space S; the one-step transition probabilities are described as follows. Given a state T, an edge e is selected at random from among the $m - n + 1$ edges in $E - T$, with all such edges having equal probability. The set $T \cup \{e\}$ then has a single cycle. One of the edges in the cycle (possibly edge e) is selected at random, with all edges in the cycle having equal probability of being selected, and is removed from $T \cup \{e\}$ to produce the next state, $T'$.

(a) Is the Markov process irreducible (for any choice of G satisfying the conditions given)? Justify

your answer.

(b) Is the Markov process aperiodic (for any graph G satisfying the conditions given)?

(c) Show that the one-step transition probability matrix $P = (p_{T,T'} : T, T' \in S)$ is symmetric.

(d) Show that the equilibrium distribution assigns equal probability to all states in S. Hence, a

method for generating an approximately uniformly distributed spanning tree is to run the Markov

process a long time and occasionally sample it.
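The transition rule of Problem 6 is concrete enough to tabulate exactly on a small graph. The sketch below uses a hypothetical example graph (a 4-cycle with one chord, so n = 4 and m = 5), enumerates S, builds $p_{T,T'}$ with exact fractions by following the rule literally (choose e uniformly from $E - T$, then remove a uniformly chosen edge of the cycle in $T \cup \{e\}$), and checks symmetry. A symmetric stochastic matrix is doubly stochastic, so the uniform distribution on S satisfies $\pi P = \pi$; this is an exhaustive check on one graph, not a proof.

```python
from fractions import Fraction
from itertools import combinations

def is_spanning_tree(n, edges):
    """True if the n - 1 given edges connect all n vertices without a cycle (union-find)."""
    parent = list(range(n))
    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v
    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:
            return False  # this edge would close a cycle
        parent[ru] = rv
    return len(edges) == n - 1

def cycle_edges(tree, e):
    """Edges of the unique cycle in tree + {e}: e plus the tree path between its endpoints."""
    adj = {}
    for u, v in tree:
        adj.setdefault(u, []).append((v, (u, v)))
        adj.setdefault(v, []).append((u, (u, v)))
    stack, seen = [(e[0], [])], {e[0]}  # depth-first search from e[0] toward e[1]
    while stack:
        node, path = stack.pop()
        if node == e[1]:
            return path + [e]
        for nxt, edge in adj.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append((nxt, path + [edge]))
    raise ValueError("endpoints of e are not connected in the tree")

def transition_matrix(n, E):
    """p[T][T'] for the chain in the text; trees are frozensets of edges."""
    trees = [frozenset(c) for c in combinations(E, n - 1) if is_spanning_tree(n, c)]
    P = {T: {U: Fraction(0) for U in trees} for T in trees}
    for T in trees:
        outside = [e for e in E if e not in T]  # the m - n + 1 edges of E - T
        for e in outside:
            cycle = cycle_edges(T, e)
            for f in cycle:  # each cycle edge (possibly e itself) is removed w.p. 1/|cycle|
                U = (T | {e}) - {f}
                P[T][U] += Fraction(1, len(outside) * len(cycle))
    return trees, P

# Hypothetical example graph: a 4-cycle 0-1-2-3-0 with the chord 0-2
E = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
trees, P = transition_matrix(4, E)
print(len(trees), "spanning trees")
print("symmetric:", all(P[T][U] == P[U][T] for T in trees for U in trees))
```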
