
Final Solutions

I give my permission to post my exam score and my final grade on the CSC-506 web site.

Signed_____________________________

Name____________________________

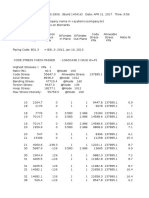

CSC-506: Architecture of Parallel Computers

1st Summer, 1999

Final Exam

Question 1: (27 points, 3 per step) A symmetrical multiprocessor computer system is implemented using the MESI cache coherency algorithm. Each processor has 64 kilobytes of addressable memory and a 2-way set-associative write-back cache with 256 sets and 16 bytes per line. The LRU replacement policy is used. Assume that each cache is empty when we start executing programs on the two processors, and they make the following sequence of references. Use the tables provided to give the tag, set, line number, MESI state, and data for non-empty cache lines, and the contents of memory after each step of the sequence. Be sure to fill in the complete contents of occupied cache lines and memory at each step. Use hexadecimal notation for all numbers.

Initial Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 0000 | 0000 | 0000 |
| 1B5x | 0000 | 0000 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |
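The tag, set, and offset fields used in the answers follow from the cache geometry: 64 KB of memory gives 16-bit addresses, 16-byte lines give a 4-bit offset, and 256 sets give an 8-bit set index, leaving a 4-bit tag. The following is a minimal sketch of that decomposition; the function names `field_widths` and `decompose` are illustrative and not part of the exam.

```python
def field_widths(memory_bytes, sets, line_bytes):
    """Return (tag_bits, set_bits, offset_bits); all sizes must be powers of two."""
    addr_bits = memory_bytes.bit_length() - 1
    offset_bits = line_bytes.bit_length() - 1
    set_bits = sets.bit_length() - 1
    return addr_bits - set_bits - offset_bits, set_bits, offset_bits


def decompose(addr, widths):
    """Split an address into (tag, set index, byte offset within the line)."""
    tag_bits, set_bits, offset_bits = widths
    offset = addr & ((1 << offset_bits) - 1)
    set_index = (addr >> offset_bits) & ((1 << set_bits) - 1)
    tag = addr >> (offset_bits + set_bits)
    return tag, set_index, offset


# Geometry from Question 1: 64 KB memory, 256 sets, 16 bytes per line.
WIDTHS = field_widths(64 * 1024, 256, 16)

for address in (0x0B5C, 0x1B54, 0x2B50):
    tag, set_index, offset = decompose(address, WIDTHS)
    print(f"{address:04X}: tag {tag:X}, set {set_index:02X}, offset {offset:X}")
```

All three line addresses used in the exam (0B5x, 1B5x, 2B5x) map to set B5 and differ only in the tag, which is why they compete for the same two lines.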

1. Processor 0 reads from location 0B5C

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | E | 0000 | 0000 | 0000 | 0000 |

Processor 1 cache

(empty)

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 0000 | 0000 | 0000 |
| 1B5x | 0000 | 0000 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |

CSC506/NCSU/GQK/1999


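2. Processor 1 writes 1111 to location 0B54

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | I | ---- | ---- | ---- | ---- |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | M | 0000 | 1111 | 0000 | 0000 |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 0000 | 0000 | 0000 |
| 1B5x | 0000 | 0000 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |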

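3. Processor 0 reads from location 0B58

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 0000 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |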


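4. Processor 1 writes 2222 to location 1B54

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | M | 0000 | 2222 | 0000 | 0000 |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 0000 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |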

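5. Processor 0 writes 3333 to location 1B5C

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | M | 0000 | 2222 | 0000 | 3333 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | I | ---- | ---- | ---- | ---- |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 2222 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |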


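6. Processor 0 reads from location 0B5C

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | M | 0000 | 2222 | 0000 | 3333 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | I | ---- | ---- | ---- | ---- |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 2222 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |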

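7. Processor 1 writes 4444 to location 2B50

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 1 | B5 | 1 | M | 0000 | 2222 | 0000 | 3333 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 2 | B5 | 1 | M | 4444 | 0000 | 0000 | 0000 |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 2222 | 0000 | 0000 |
| 2B5x | 0000 | 0000 | 0000 | 0000 |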

Note: Some students erroneously victimized line 0 (tag 0) here by applying strict LRU, even though line 1 was available because it was marked invalid.


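8. Processor 0 reads from location 2B5C

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 2 | B5 | 1 | S | 4444 | 0000 | 0000 | 0000 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 2 | B5 | 1 | S | 4444 | 0000 | 0000 | 0000 |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 2222 | 0000 | 3333 |
| 2B5x | 4444 | 0000 | 0000 | 0000 |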

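9. Processor 0 writes 5555 to location 2B5C

Processor 0 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 2 | B5 | 1 | M | 4444 | 0000 | 0000 | 5555 |

Processor 1 cache

| Tag | Set | Line | MESI | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|---|---|---|
| 0 | B5 | 0 | S | 0000 | 1111 | 0000 | 0000 |
| 2 | B5 | 1 | I | ---- | ---- | ---- | ---- |

Memory

| Loc | Data 0 | Data 4 | Data 8 | Data C |
|---|---|---|---|---|
| 0B5x | 0000 | 1111 | 0000 | 0000 |
| 1B5x | 0000 | 2222 | 0000 | 3333 |
| 2B5x | 4444 | 0000 | 0000 | 0000 |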
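The nine steps can be replayed with a small simulator. This is a simplified sketch, not the course's reference model: the class and method names (`System`, `read`, `write`) are hypothetical, and it assumes write-allocate on a write miss, replacement that prefers an invalid line before falling back to LRU, and that a snooped Modified line is written back to memory before it is shared or invalidated, which is the behavior the answers for steps 3, 5, 7, and 8 rely on.

```python
from itertools import count

WORDS = 4                     # four data words per line (offsets 0, 4, 8, C)
clock = count()               # global counter used as an LRU timestamp


def split(addr):
    """Return (tag, set index, word index) for a 16-bit address."""
    return addr >> 12, (addr >> 4) & 0xFF, (addr & 0xF) >> 2


class System:
    def __init__(self, nproc=2, ways=2):
        self.mem = {}                                   # line address -> words
        self.caches = [dict() for _ in range(nproc)]    # set index -> list of ways
        self.ways = ways

    def _set(self, p, s):
        empty = [{"tag": None, "state": "I", "data": None, "used": -1}
                 for _ in range(self.ways)]
        return self.caches[p].setdefault(s, empty)

    def _find(self, p, tag, s):
        for way in self._set(p, s):
            if way["state"] != "I" and way["tag"] == tag:
                return way
        return None

    def _writeback(self, way, s):
        self.mem[(way["tag"] << 12) | (s << 4)] = list(way["data"])

    def _snoop(self, p, tag, s, invalidate):
        """Let the other caches react to a bus request; return True if shared."""
        shared = False
        for q in range(len(self.caches)):
            other = self._find(q, tag, s) if q != p else None
            if other:
                shared = True
                if other["state"] == "M":
                    self._writeback(other, s)
                other["state"] = "I" if invalidate else "S"
        return shared

    def _fill(self, p, tag, s, state):
        ways = self._set(p, s)
        invalid = [w for w in ways if w["state"] == "I"]
        victim = invalid[0] if invalid else min(ways, key=lambda w: w["used"])
        if victim["state"] == "M":
            self._writeback(victim, s)          # evicting a dirty line
        line = self.mem.setdefault((tag << 12) | (s << 4), [0] * WORDS)
        victim.update(tag=tag, state=state, data=list(line))
        return victim

    def read(self, p, addr):
        tag, s, w = split(addr)
        way = self._find(p, tag, s)
        if way is None:
            shared = self._snoop(p, tag, s, invalidate=False)
            way = self._fill(p, tag, s, "S" if shared else "E")
        way["used"] = next(clock)
        return way["data"][w]

    def write(self, p, addr, value):
        tag, s, w = split(addr)
        way = self._find(p, tag, s)
        if way is None or way["state"] == "S":
            self._snoop(p, tag, s, invalidate=True)
        if way is None:
            way = self._fill(p, tag, s, "M")
        way["state"] = "M"
        way["data"][w] = value
        way["used"] = next(clock)


# Replay the reference sequence from Question 1.
smp = System()
smp.read(0, 0x0B5C)
smp.write(1, 0x0B54, 0x1111)
smp.read(0, 0x0B58)
smp.write(1, 0x1B54, 0x2222)
smp.write(0, 0x1B5C, 0x3333)
smp.read(0, 0x0B5C)
smp.write(1, 0x2B50, 0x4444)
smp.read(0, 0x2B5C)
smp.write(0, 0x2B5C, 0x5555)
```

After the replay, `smp.caches[p][0xB5]` holds the two lines of set B5 for processor `p`, and `smp.mem` holds the memory contents, so each step can be compared against the answer by inspecting these after the corresponding call.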


Question 2. (15 points) The following 8 x 8 Omega (shuffle-exchange) network shows P3 accessing M1. In the box provided, identify ALL other connections that are blocked by the switch while P3 is accessing M1. DO NOT identify any connections that involve either P3 or M1, because they are blocked at the processor or at the memory rather than in the switch.

[Figure: 8 x 8 Omega network connecting processors P0 to P7 with memories M0 to M7, showing P3 accessing M1.]

| # | Processor | to | Memory |
|---|---|---|---|
| 1 | P1 | to | M0 |
| 2 | P5 | to | M0 |
| 3 | P7 | to | M0 |
| 4 | P7 | to | M2 |
| 5 | P7 | to | M3 |
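The blocked connections can be checked by enumerating every other processor-memory pair and testing whether its path shares a link with the P3 to M1 path. The sketch below assumes the usual Omega wiring, since the figure itself is not reproduced in the text: a perfect shuffle (rotate the position bits left) in front of each of the three switch stages, with each switch choosing its upper or lower output from the destination bits, most significant bit first. The function names are illustrative.

```python
def route(src, dst, bits=3):
    """Return the link position occupied after each switch stage."""
    size = 1 << bits
    pos = src
    links = []
    for stage in range(bits):
        # Perfect shuffle: rotate the position bits left by one.
        pos = ((pos << 1) | (pos >> (bits - 1))) & (size - 1)
        # Destination-tag routing: the switch output is chosen by one
        # destination bit, most significant bit first.
        dest_bit = (dst >> (bits - 1 - stage)) & 1
        pos = (pos & ~1) | dest_bit
        links.append(pos)
    return links


def blocked_by(src, dst, bits=3):
    """List (processor, memory) pairs whose path collides with src -> dst."""
    busy = route(src, dst, bits)
    blocked = []
    for s in range(1 << bits):
        for d in range(1 << bits):
            if s == src or d == dst:
                continue        # blocked at the processor or the memory
            if any(a == b for a, b in zip(route(s, d, bits), busy)):
                blocked.append((s, d))
    return blocked


for s, d in blocked_by(3, 1):
    print(f"P{s} to M{d}")
```

Under these assumptions the P3 to M1 path occupies link 6 after the first stage and link 4 after the second; P7 collides on the first of these and P1, P5, and P7 on the second.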


Question 3. A two-processor SMP system uses a shared bus to access main memory. The data bus is 32 bits wide and operates at a clock rate of 100 MHz. Each processor has a private write-back cache. The internal clock rate of each processor is 500 MHz and average cache access rate is 0.3 instruction fetches, 0.5 data fetches per instruction. This unified cache uses 8 words per line and has an access time of 1 clock (2 ns). The main memory uses 100 MHz SDRAM with a RAS access time of 5 clocks. Assume that the processing rate is limited by the data access rate (that is, 100% utilization of the processor-to-cache bus). Also assume that we access a requested (missed) word first from the SDRAM on a cache miss, and that the processor can proceed (as soon as the requested word reaches the cache) while the remaining words of the cache line are filled.

a) (5 points) What is the effective MIPS rate of each processor running as a uniprocessor with a cache hit rate of 100%?

0.3 + 0.5 = 0.8 accesses per instruction. 2 ns per access x 0.8 accesses per instruction = 1.6 ns per instruction, or 625 MIPS.

b) (5 points) What is the effective MIPS rate of each processor running as a uniprocessor with a cache hit rate of 99%?

t_eff = 2 + (1 - 0.99)(10)(5) = 2.5 ns. 2.5 ns per access x 0.8 accesses per instruction = 2 ns per instruction, or 500 MIPS.


c) (5 points) Without considering 2nd order effects (bus contention slowing down each of the processors), what is the memory bus utilization with the two processors running in the multiprocessor configuration with a hit rate of 99%?

Each processor uses the bus for (10)(5) = 50 ns of access time plus 7 x 10 ns = 70 ns of cache fill time, 120 ns in total, once out of every 100 accesses (0.01 miss rate). Out of the 100 accesses, 99 are at the cache hit time of 2 ns and one is at the miss time of 2 ns plus the 50 ns main memory access time. Therefore, each processor is using the bus (1)(50 + 70)/((100)(2) + (1)(50)) = 48%. With two processors, the bus utilization is (2)(48%) = 96%.

d) (5 points) What would the bus utilization be if we changed to a transaction bus that runs at 500 MHz? Assume that we divide the memory into a sufficient number of banks so that we do not have contention for the actual memory. Also assume that we use one bus cycle to make a memory request and that we complete the memory access of 8 words before we reconnect to the bus to transfer the data words to the processor and cache.

Each processor would use the bus for 1 cycle to make the request and 8 cycles to transfer the eight data words, for a total of 9 cycles once out of every 100 accesses (0.01 miss rate). Out of the 100 accesses, 99 are at the cache hit time of 2 ns and one is at the miss time of 2 (cache time) + 2 (memory request over the bus) + 50 (access first word) + 70 (read remainder of the cache line) + 2 (transfer the first word over the bus). Therefore, each processor is using the bus (2 + 16)/(200 + 2 + 50 + 70 + 2) = 5.6%. With two processors, the bus utilization is (2)(5.6%) = 11.1%.

e) (5 points) What is the MIPS rate for each of the processors using the transaction bus as described in part d)?

Our effective memory access time is longer because we have to wait the additional time to access main memory on a cache miss. t_eff = 2 + (1 - 0.99)(2 + 50 + 70 + 2) = 3.24 ns. 3.24 ns per access x 0.8 accesses per instruction = 2.592 ns per instruction, or 386 MIPS.
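The arithmetic in parts a) through e) can be reproduced with a few lines of code. This is only a sketch of the calculations shown in the answers; the constant and function names are illustrative, and the timing model (first-order only, no bus contention) is the one the question specifies.

```python
HIT_NS = 2.0                   # cache access time: one 500 MHz clock
ACCESSES_PER_INSTR = 0.8       # 0.3 instruction fetches + 0.5 data fetches
MISS_RATE = 0.01               # 99% hit rate
FIRST_WORD_NS = 5 * 10.0       # 5 SDRAM clocks of 10 ns to reach the missed word
REST_OF_LINE_NS = 7 * 10.0     # remaining 7 words of the 8-word line


def mips(ns_per_access):
    """Instructions per microsecond, given the effective time per cache access."""
    return 1000.0 / (ns_per_access * ACCESSES_PER_INSTR)


def shared_bus():
    """Parts b) and c): return (t_eff in ns, utilization for two processors)."""
    t_eff = HIT_NS + MISS_RATE * FIRST_WORD_NS
    busy = MISS_RATE * (FIRST_WORD_NS + REST_OF_LINE_NS)
    return t_eff, 2 * busy / t_eff


def transaction_bus(bus_clock_ns=2.0):
    """Parts d) and e): return (t_eff in ns, utilization for two processors)."""
    penalty = bus_clock_ns + FIRST_WORD_NS + REST_OF_LINE_NS + bus_clock_ns
    t_eff = HIT_NS + MISS_RATE * penalty
    busy = MISS_RATE * (1 + 8) * bus_clock_ns   # 1 request + 8 data cycles
    return t_eff, 2 * busy / t_eff


print("a)", round(mips(HIT_NS)), "MIPS")
print("b)", round(mips(shared_bus()[0])), "MIPS")
print("c)", f"{shared_bus()[1]:.1%}")
print("d)", f"{transaction_bus()[1]:.1%}")
print("e)", round(mips(transaction_bus()[0])), "MIPS")
```

The transaction bus trades a longer miss penalty (124 ns instead of 50 ns before the processor can proceed) for a much lower bus occupancy per miss (18 ns instead of 120 ns).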


Question 4. Our processor runs with a 100 MHz clock and uses an instruction pipeline with the following stages: instruction fetch = 2 clocks, instruction decode = 1 clock, fetch operands = 2 clocks, execute = 1 clock, and store result = 2 clocks. Assume a sequence of instructions where one of the inputs to every instruction depends on the results of the previous instruction.

a) (5 points) What is the MIPS rate of the processor where we do not implement internal forwarding?

b) (5 points) What is the MIPS rate of the processor where we do implement internal forwarding?

[Timing diagram without internal forwarding: rows Inst Fetch, Inst Decode, Op Fetch, Execute, Op Store; columns clocks 1 to 14; entries are instruction numbers, with W marking a cycle spent waiting.]

a) We lose 3 cycles in the operand fetch unit waiting for the results of the previous instruction to be stored. So we complete one instruction every 5 clocks. With a clock rate of 100 MHz, we get 100/5 = 20 MIPS.

[Timing diagram with internal forwarding: rows Inst Fetch, Inst Decode, Op Fetch, Execute, Op Store; columns clocks 1 to 14; entries are instruction numbers.]

b) The execute stage can forward its output directly to one of the inputs of the next instruction as well as to the operand store unit. We lose no cycles waiting for previous results and so our processing rate is limited only by the longest stage in the pipeline, which is two cycles. With a clock rate of 100 MHz, we get 100/2 = 50 MIPS.
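Both rates can be checked with a small scheduling model. The sketch below is an assumption-laden simplification rather than the exam's diagram: each stage holds one instruction at a time, an instruction stays in its stage until the next stage is free, and without forwarding an instruction may not begin its operand fetch until the previous instruction has finished storing its result. The function names are illustrative.

```python
DURATIONS = [2, 1, 2, 1, 2]    # IF, ID, operand fetch, execute, store (clocks)
OP_FETCH, STORE = 2, 4         # indices into DURATIONS


def completion_times(n, forwarding):
    """Return the clock at which each of n dependent instructions completes."""
    prev_start = prev_end = None
    done = []
    for _ in range(n):
        start, end = [], []
        t = 0
        for s, duration in enumerate(DURATIONS):
            if prev_start is not None:
                # Stage s frees up when the previous instruction moves on
                # (or, for the last stage, when it finishes).
                last = s + 1 == len(DURATIONS)
                t = max(t, prev_end[s] if last else prev_start[s + 1])
                if s == OP_FETCH and not forwarding:
                    # Data dependence: wait for the previous result to be stored.
                    t = max(t, prev_end[STORE])
            start.append(t)
            t += duration
            end.append(t)
        prev_start, prev_end = start, end
        done.append(end[-1])
    return done


def mips(clock_mhz, forwarding, n=20):
    """Steady-state rate: clock rate divided by clocks between completions."""
    done = completion_times(n, forwarding)
    return clock_mhz / (done[-1] - done[-2])


print(mips(100, forwarding=False))   # one instruction every 5 clocks
print(mips(100, forwarding=True))    # one instruction every 2 clocks
```

With forwarding, the dependence is satisfied as soon as the previous instruction leaves the execute stage, so only the 2-clock stages limit the rate.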


Question 5. Use the following collision vector: 0 1 0 1 1 0 1

a) (5 points) Draw the reduced state-diagram. b) (5 points) Show the maximum-rate cycle and pipeline efficiency.

[State diagram: states 0101101 (initial), 1111111, and 1101101; arc labels legible in the figure: 3,6; 8; 3,6.]

The maximum rate cycle is shown in bold. It follows the sequence 3, 3, 3 . . . cycles for a total of 1 initiation every 3 cycles, giving 1/3 = 33% efficiency for the pipeline. It is not the greedy cycle because we must wait and initiate at the third clock to get into the cycle, bypassing the first available initiation from the initial state.
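The state diagram can be regenerated mechanically from the collision vector. The sketch below assumes the bits are read left to right as latencies 1 through 7 (a 1 means that latency is forbidden), which is the reading consistent with the states 1111111 and 1101101 in the answer; latency 8 stands for any latency of 8 or more, which always returns to the initial state. The function name is illustrative.

```python
def state_diagram(collision_vector):
    """Map each reachable state to {latency: next state}."""
    init = collision_vector
    n = len(init)
    diagram = {}
    pending = [init]
    while pending:
        state = pending.pop()
        if state in diagram:
            continue
        arcs = {}
        for latency in range(1, n + 2):
            if latency <= n and state[latency - 1] == "1":
                continue                      # forbidden: would collide
            if latency > n:
                nxt = init                    # pipeline has fully drained
            else:
                # Shift out the elapsed latencies, then OR in the new
                # initiation's own collision vector.
                shifted = state[latency:] + "0" * latency
                nxt = "".join("1" if "1" in pair else "0"
                              for pair in zip(shifted, init))
            arcs[latency] = nxt
        diagram[state] = arcs
        pending.extend(arcs.values())
    return diagram


diagram = state_diagram("0101101")
for state, arcs in diagram.items():
    for latency, nxt in arcs.items():
        print(f"{state} --{latency}--> {nxt}")
```

Under this reading, state 1101101 has a self-loop at latency 3, which is the 3, 3, 3 . . . cycle described above, while the greedy choice from the initial state (latency 1) leads to 1111111, from which the only exit is a latency of 8 or more.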


Question 6. A company builds a vector computer with four parallel vector processors that each run at a clock rate of 500 MHz. The floating point add pipeline is 6 stages and the floating point multiply unit is 11 stages. Assume that memory bandwidth is sufficient to feed the pipelines. a) (5 points) What is the effective speedup of vector additions for input vectors of length 4? b) (5 points) What is the effective speedup of vector multiplications for input vectors of length 1000?

$$\text{Speedup} = \frac{\text{Best Serial Time}}{\text{Parallel Execution Time}}$$

Considering the number of stages in a pipeline (k) and the length of the input vectors (n), this is:

$$S(k) = \frac{nk}{k + (n - 1)}$$

The total length of the add pipeline is 6 stages, and we have 4 pipelines operating in parallel (the four parallel processors). The best serial time is nk, the number of vector elements times the 6 stages. The parallel time is the time it takes to get all of the vector elements through the four parallel pipelines. So our actual speedup is then:

a) For the additions on vectors of length 4, the vectors are split into 4 subvectors of length 1, so each processor gets a single element:

$$S = \frac{4(6)}{6 + (1 - 1)} = 4$$

b) For the multiplication on vectors of length 1000, each processor gets subvectors of length 1000/4 = 250. The speedup for the set of four 11-stage pipelines is then:

$$S = \frac{1000(11)}{11 + (250 - 1)} = 42.3$$
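Both results follow from one expression: serial time nk divided by the time for one subvector of n/p elements to pass through a k-stage pipeline. A minimal sketch, assuming the vector length divides evenly among the processors (the function and parameter names are illustrative):

```python
def vector_speedup(n, k, processors):
    """Speedup of `processors` k-stage pipelines over serial execution."""
    if n % processors:
        raise ValueError("sketch assumes n divides evenly among the processors")
    per_pipeline = n // processors
    serial_time = n * k                       # every element takes k clocks
    parallel_time = k + (per_pipeline - 1)    # fill the pipe, then one per clock
    return serial_time / parallel_time


print(vector_speedup(4, 6, 4))                # part a)
print(round(vector_speedup(1000, 11, 4), 1))  # part b)
```

For short vectors the speedup is bounded by the number of processors, because each pipeline never gets past its fill phase; for long vectors it approaches k times the number of processors, here 44.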

Question 7. (3 points) Why shouldn't we use a normal STORE instruction to clear a lock (set it to zero) in processor systems that do not implement strong consistency?

We need to use explicit synchronizing instructions in order to provide weak or release consistency. If the store instruction were declared a synchronizing instruction, then the processor would need to ensure that all instructions had completed processing in the pipeline before starting execution of any store instruction. This would severely impact performance.

