Analog Computation


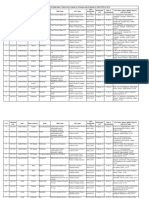

The Evolution of DSP Processors

By Jennifer Eyre and Jeff Bier, Berkeley Design Technology, Inc. (BDTI)

(Note: A version of this white paper has appeared in IEEE Signal Processing Magazine.)

Copyright 2000 Berkeley Design Technology, Inc.

...allowing product upgrades or fixes. They are often more cost-effective (and less risky) than custom hardware, particularly for low-volume applications, where the development cost of custom ICs may be prohibitive. And in comparison to other types of microprocessors, DSP processors often have an advantage in terms of speed, cost, and energy efficiency.

In this article, we trace the evolution of DSP processors, from early architectures to current state-of-the-art devices. We highlight some of the key differences among architectures, and compare their strengths and weaknesses. Finally, we discuss the growing class of general-purpose processors that have been enhanced to address the needs of DSP applications.

DSP Algorithms Mold DSP Architectures

From the outset, DSP processor architectures have been molded by DSP algorithms. For nearly every feature found in a DSP processor, there are associated DSP algorithms whose computation is in some way eased by inclusion of this feature. Therefore, perhaps the best way to understand the evolution of DSP architectures is to examine typical DSP algorithms and identify how their computational requirements have influenced the architectures of DSP processors. As a case study, we will consider one of the most common signal processing algorithms, the FIR filter.

Fast Multipliers

The FIR filter is mathematically expressed as $\sum x h$, where $x$ is a vector of input data, and $h$ is a vector of filter coefficients. For each tap of the filter, a data sample is multiplied by a filter coefficient, with the result added to a running sum for all of the taps (for an introduction to DSP concepts and filter theory, refer to [2]). Hence, the main component of the FIR filter algorithm is a dot product: multiply and add, multiply and add. These operations are not unique to the FIR filter algorithm; in fact, multiplication (often combined with accumulation of products) is one of the most common operations performed in signal processing. Convolution, IIR filtering, and Fourier transforms all involve heavy use of multiply-accumulate operations.

Originally, microprocessors implemented multiplications by a series of shift and add operations, each of which consumed one or more clock cycles. In 1982, however, Texas Instruments (TI) introduced the first commercially successful DSP processor, the TMS32010, which incorporated specialized hardware to enable it to compute a multiplication in a single clock cycle. As might be expected, faster multiplication hardware yields faster performance in many DSP algorithms, and for this reason all modern DSP processors include at least one dedicated single-cycle multiplier or combined multiply-accumulate (MAC) unit [1].

Multiple Execution Units

DSP applications typically have very high computational requirements in comparison to other types of computing tasks, since they often must execute DSP algorithms (such as FIR filtering) in real time on lengthy segments of signals sampled at 10-100 kHz or higher. Hence, DSP processors often include several independent execution units that are capable of operating in parallel; for example, in addition to the MAC unit, they typically contain an arithmetic-logic unit (ALU) and a shifter.

Efficient Memory Accesses

Executing a MAC in every clock cycle requires more than just a single-cycle MAC unit. It also requires the ability to fetch the MAC instruction, a data sample, and a filter coefficient from memory in a single cycle. Hence, good DSP performance requires high memory bandwidth, higher than was supported on the general-purpose microprocessors of the early 1980s, which typically contained a single bus connection to memory and could only make one access per clock cycle. To address the need for increased memory bandwidth, early DSP processors developed different memory architectures that could support multiple memory accesses per cycle. The most common approach (still commonly used) was to use two or more separate banks of memory, each of which was accessed by its own bus and could be read or written during every clock cycle. Often, instructions were stored in one memory bank, while data was stored in another. With this arrangement, the processor could fetch an instruction and a data operand in parallel in every cycle. Figure 1 illustrates the difference in memory architectures for early general-purpose processors and DSP processors.

Since many DSP algorithms (such as FIR filters) consume two data operands per instruction (e.g., a data sample and a coefficient), a further optimization commonly used is to include a small bank of RAM near the processor core that is used as an instruction cache. When a small group of instructions is executed repeatedly (i.e., in a loop), the cache is loaded with those instructions, freeing the instruction bus to be used for data fetches instead of instruction fetches, thus enabling the processor to execute a MAC in a single cycle.

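
As a rough illustration of the dot product at the heart of the FIR filter, the sketch below computes one output value with one multiply-accumulate per tap and counts the data operands read along the way. The function name `fir_output` and the `data_reads` counter are assumptions made for this example and do not come from the article. The sketch models only the arithmetic and the operand count, not the timing of any real processor.

```python
def fir_output(samples, coeffs):
    """Compute one FIR output value as a dot product.

    Returns (accumulated sum, number of data operands read).
    Hypothetical helper for illustration only.
    """
    if len(samples) != len(coeffs):
        raise ValueError("samples and coeffs must have the same length")
    acc = 0.0        # running sum for all taps (the accumulator)
    data_reads = 0   # operand fetches: one sample plus one coefficient per tap
    for x, h in zip(samples, coeffs):
        acc += x * h       # one multiply-accumulate (MAC) per tap
        data_reads += 2
    return acc, data_reads


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]       # illustrative input samples
    h = [0.5, 0.25, 0.25, 0.5]     # illustrative filter coefficients
    y, reads = fir_output(x, h)
    print(y, reads)                # prints: 3.75 8
```

Each loop iteration needs two data operands in addition to the instruction itself, which is why sustaining one MAC per cycle depends on the multiple memory accesses per cycle described above.
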
High memory bandwidth requirements are often further supported via dedicated hardware for calculating memory addresses. These address generation units operate in parallel with the DSP processor's main execution units, enabling it to access data at new locations in memory (for example, stepping through a vector of coefficients) without pausing to calculate the new address.

Memory accesses in DSP algorithms tend to exhibit very predictable patterns; for example, for each sample in an FIR filter, the filter coefficients are accessed sequentially from start to finish, then accesses start over from the beginning of the coefficient vector when processing the next input sample. This is in contrast to other types of computing tasks, such as database processing, where accesses to memory are less predictable. DSP processor address generation units take advantage of this predictability by supporting specialized addressing modes that enable the processor to efficiently access data in the patterns commonly found in DSP algorithms. The most common of these modes is register-indirect addressing with post-increment, which is used to automatically increment the address pointer for algorithms where repetitive computations are performed on a series of data stored sequentially in memory. Without this feature, the programmer would need to spend instructions explicitly incrementing the address pointer. Many DSP processors also support circular addressing, which allows the processor to access a block of data sequentially and then automatically wrap around to the beginning address, exactly the pattern used to access coefficients in FIR filtering. Circular addressing is also very helpful in implementing first-in, first-out buffers, commonly used for I/O and for FIR filter delay lines.

Data Format

Most DSP processors use a fixed-point numeric data type instead of the floating-point format most commonly used in scientific applications. In a fixed-point format, the binary point (analogous to the decimal point in base 10 math) is located at a fixed location in the data word. This is in contrast to floating-point formats, in which numbers are expressed using an exponent and a mantissa; the binary point essentially floats based on the value of the exponent. Floating-point formats allow a much wider range of values to be represented, and virtually eliminate the hazard of numeric overflow in most applications.
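
To make circular addressing and fixed-point arithmetic more concrete, here is a minimal software sketch of an FIR filter whose delay line is a circular buffer and whose data uses a 16-bit format with 15 fractional bits (commonly called Q15). The Q15 choice, the class name `CircularFir`, and the helpers `to_q15` and `from_q15` are assumptions made for this example; the article does not prescribe a particular word length or API. The index arithmetic with `% n` stands in for what an address generation unit would do in hardware at no instruction cost.

```python
Q15_ONE = 1 << 15  # scale factor for 15 fractional bits


def to_q15(value):
    """Convert a float to a 16-bit Q15 integer, saturating at the range limits."""
    scaled = int(round(value * Q15_ONE))
    return max(-Q15_ONE, min(Q15_ONE - 1, scaled))


def from_q15(value):
    """Convert a Q15 integer back to a float."""
    return value / Q15_ONE


class CircularFir:
    """FIR filter with a circular delay line and Q15 data (illustrative only)."""

    def __init__(self, coeffs_q15):
        self.coeffs = list(coeffs_q15)
        self.delay = [0] * len(self.coeffs)  # delay line, initially all zeros
        self.head = 0                        # index of the newest sample

    def step(self, sample_q15):
        n = len(self.coeffs)
        # Overwrite the oldest sample; the write index wraps around the buffer.
        self.head = (self.head - 1) % n
        self.delay[self.head] = sample_q15
        acc = 0            # wide accumulator; products are in Q30
        idx = self.head
        for h in self.coeffs:            # coefficients are read sequentially
            acc += h * self.delay[idx]   # multiply-accumulate
            idx = (idx + 1) % n          # post-increment with circular wrap
        # Round Q30 back to Q15, then saturate to guard against overflow.
        result = (acc + (1 << 14)) >> 15
        return max(-Q15_ONE, min(Q15_ONE - 1, result))


if __name__ == "__main__":
    fir = CircularFir([to_q15(0.5), to_q15(0.25)])
    outputs = [fir.step(to_q15(0.5)), fir.step(0), fir.step(0)]
    print(outputs)  # prints: [8192, 4096, 0]
```

The explicit saturation steps reflect the overflow hazard that fixed-point formats carry and that floating-point formats largely avoid. Note that `to_q15(1.0)` saturates to 32767 because +1.0 is not representable in Q15.
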
