Configuring and Managing a Red Hat

Cluster

4.6

Red Hat Cluster Suite

for Red Hat

Enterprise Linux 4.6

ISBN: N/A

Publication date:

Configuring and Managing a Red Hat Cluster describes the configuration and management of Red Hat cluster systems for Red Hat Enterprise Linux 4.6. It does not include information about Red Hat Linux Virtual Servers (LVS). Information about installing and configuring LVS is in a separate document.

Configuring and Managing a Red Hat Cluster: Red Hat Cluster Suite for Red Hat Enterprise Linux 4.6

Copyright 2007 Red Hat, Inc.

Copyright 2007 by Red Hat, Inc. This material may be distributed only subject to the terms and conditions set forth in the Open Publication License, V1.0 or later (the latest version is presently available at http://www.opencontent.org/openpub/).

Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder.

Distribution of the work or derivative of the work in any standard (paper) book form for commercial purposes is prohibited unless prior permission is obtained from the copyright holder.

Red Hat and the Red Hat "Shadow Man" logo are registered trademarks of Red Hat, Inc. in the United States and other countries.

All other trademarks referenced herein are the property of their respective owners.

The GPG fingerprint of the security@redhat.com key is:

CA 20 86 86 2B D6 9D FC 65 F6 EC C4 21 91 80 CD DB 42 A6 0E

1801 Varsity Drive
Raleigh, NC 27606-2072
USA
Phone: +1 919 754 3700
Phone: 888 733 4281
Fax: +1 919 754 3701
PO Box 13588
Research Triangle Park, NC 27709
USA

Configuring and Managing a Red Hat Cluster

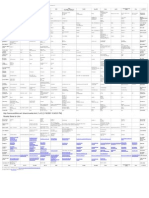

Introduction
   1. Document Conventions
   2. Feedback
1. Red Hat Cluster Configuration and Management Overview
   1. Configuration Basics
      1.1. Setting Up Hardware
      1.2. Installing Red Hat Cluster software
      1.3. Configuring Red Hat Cluster Software
   2. Conga
   3. system-config-cluster Cluster Administration GUI
      3.1. Cluster Configuration Tool
      3.2. Cluster Status Tool
   4. Command Line Administration Tools
2. Before Configuring a Red Hat Cluster
   1. Compatible Hardware
   2. Enabling IP Ports
      2.1. Enabling IP Ports on Cluster Nodes
      2.2. Enabling IP Ports on Computers That Run luci
      2.3. Examples of iptables Rules
   3. Configuring ACPI For Use with Integrated Fence Devices
      3.1. Disabling ACPI Soft-Off with chkconfig Management
      3.2. Disabling ACPI Soft-Off with the BIOS
      3.3. Disabling ACPI Completely in the grub.conf File
   4. Configuring max_luns
   5. Considerations for Using Quorum Disk
   6. Red Hat Cluster Suite and SELinux
   7. Considerations for Using Conga
   8. General Configuration Considerations
3. Configuring Red Hat Cluster With Conga
   1. Configuration Tasks
   2. Starting luci and ricci
   3. Creating A Cluster
   4. Global Cluster Properties
   5. Configuring Fence Devices
      5.1. Creating a Shared Fence Device
      5.2. Modifying or Deleting a Fence Device
   6. Configuring Cluster Members
      6.1. Initially Configuring Members
      6.2. Adding a Member to a Running Cluster
      6.3. Deleting a Member from a Cluster
   7. Configuring a Failover Domain
      7.1. Adding a Failover Domain
      7.2. Modifying a Failover Domain
   8. Adding Cluster Resources
   9. Adding a Cluster Service to the Cluster
   10. Configuring Cluster Storage
4. Managing Red Hat Cluster With Conga
   1. Starting, Stopping, and Deleting Clusters
   2. Managing Cluster Nodes
   3. Managing High-Availability Services
   4. Diagnosing and Correcting Problems in a Cluster
5. Configuring Red Hat Cluster With system-config-cluster
   1. Configuration Tasks
   2. Starting the Cluster Configuration Tool
   3. Configuring Cluster Properties
   4. Configuring Fence Devices
   5. Adding and Deleting Members
      5.1. Adding a Member to a New Cluster
      5.2. Adding a Member to a Running DLM Cluster
      5.3. Deleting a Member from a DLM Cluster
      5.4. Adding a GULM Client-only Member
      5.5. Deleting a GULM Client-only Member
      5.6. Adding or Deleting a GULM Lock Server Member
   6. Configuring a Failover Domain
      6.1. Adding a Failover Domain
      6.2. Removing a Failover Domain
      6.3. Removing a Member from a Failover Domain
   7. Adding Cluster Resources
   8. Adding a Cluster Service to the Cluster
   9. Propagating The Configuration File: New Cluster
   10. Starting the Cluster Software
6. Managing Red Hat Cluster With system-config-cluster
   1. Starting and Stopping the Cluster Software
   2. Managing High-Availability Services
   3. Modifying the Cluster Configuration
   4. Backing Up and Restoring the Cluster Database
   5. Disabling the Cluster Software
   6. Diagnosing and Correcting Problems in a Cluster
A. Example of Setting Up Apache HTTP Server
   1. Apache HTTP Server Setup Overview
   2. Configuring Shared Storage
   3. Installing and Configuring the Apache HTTP Server
B. Fence Device Parameters
Index

Introduction

This document provides information about installing, configuring and managing Red Hat Cluster components. Red Hat Cluster components are part of Red Hat Cluster Suite and allow you to connect a group of computers (called nodes or members) to work together as a cluster. This document does not include information about installing, configuring, and managing Linux Virtual Server (LVS) software. Information about that is in a separate document.

The audience of this document should have advanced working knowledge of Red Hat Enterprise Linux and understand the concepts of clusters, storage, and server computing.

This document is organized as follows:

Chapter 1, Red Hat Cluster Configuration and Management Overview

Chapter 2, Before Configuring a Red Hat Cluster

Chapter 3, Configuring Red Hat Cluster With Conga

Chapter 4, Managing Red Hat Cluster With Conga

Chapter 5, Configuring Red Hat Cluster With system-config-cluster

Chapter 6, Managing Red Hat Cluster With system-config-cluster

Appendix A, Example of Setting Up Apache HTTP Server

Appendix B, Fence Device Parameters

For more information about Red Hat Enterprise Linux 4.6, refer to the following resources:

Red Hat Enterprise Linux Installation Guide - Provides information regarding installation.

Red Hat Enterprise Linux Introduction to System Administration - Provides introductory information for new Red Hat Enterprise Linux system administrators.

Red Hat Enterprise Linux System Administration Guide - Provides more detailed information about configuring Red Hat Enterprise Linux to suit your particular needs as a user.

Red Hat Enterprise Linux Reference Guide - Provides detailed information suited for more experienced users to reference when needed, as opposed to step-by-step instructions.

Red Hat Enterprise Linux Security Guide - Details the planning and the tools involved in creating a secured computing environment for the data center, workplace, and home.

For more information about Red Hat Cluster Suite for Red Hat Enterprise Linux 4.6 and related products, refer to the following resources:

Red Hat Cluster Suite Overview - Provides a high level overview of the Red Hat Cluster Suite.

LVM Administrator's Guide: Configuration and Administration - Provides a description of the Logical Volume Manager (LVM), including information on running LVM in a clustered environment.

Global File System: Configuration and Administration - Provides information about installing, configuring, and maintaining Red Hat GFS (Red Hat Global File System).

Using Device-Mapper Multipath - Provides information about using the Device-Mapper Multipath feature of Red Hat Enterprise Linux 4.6.

Using GNBD with Global File System - Provides an overview on using Global Network Block Device (GNBD) with Red Hat GFS.

Linux Virtual Server Administration - Provides information on configuring high-performance systems and services with the Linux Virtual Server (LVS).

Red Hat Cluster Suite Release Notes - Provides information about the current release of Red Hat Cluster Suite.

Red Hat Cluster Suite documentation and other Red Hat documents are available in HTML, PDF, and RPM versions on the Red Hat Enterprise Linux Documentation CD and online at http://www.redhat.com/docs/.

1. Document Conventions

Certain words in this manual are represented in different fonts, styles, and weights. This highlighting indicates that the word is part of a specific category. The categories include the following:

Courier font

Courier font represents commands, file names and paths, and prompts.

When shown as below, it indicates computer output:

Desktop about.html logs paulwesterberg.png
Mail backupfiles mail reports

bold Courier font

Bold Courier font represents text that you are to type, such as: service jonas start

If you have to run a command as root, the root prompt (#) precedes the command:

# gconftool-2

italic Courier font

Italic Courier font represents a variable, such as an installation directory: install_dir/bin/

bold font

Bold font represents application programs and text found on a graphical interface.

When shown like this: OK, it indicates a button on a graphical application interface.

Additionally, the manual uses different strategies to draw your attention to pieces of information. In order of how critical the information is to you, these items are marked as follows:

Note

A note is typically information that you need to understand the behavior of the system.

Tip

A tip is typically an alternative way of performing a task.

Important

Important information is necessary, but possibly unexpected, such as a configuration change that will not persist after a reboot.

Caution

A caution indicates an act that would violate your support agreement, such as recompiling the kernel.

Warning

A warning indicates potential data loss, as may happen when tuning hardware for maximum performance.

2. Feedback

If you spot a typo, or if you have thought of a way to make this manual better, we would love to hear from you. Please submit a report in Bugzilla (http://bugzilla.redhat.com/bugzilla/) against the component rh-cs.

Be sure to mention the manual's identifier:

rh-cs(EN)-4.6 (2007-11-14T22:02)

By mentioning this manual's identifier, we know exactly which version of the guide you have.

If you have a suggestion for improving the documentation, try to be as specific as possible. If you have found an error, please include the section number and some of the surrounding text so we can find it easily.

Chapter 1. Red Hat Cluster Configuration and Management Overview

Red Hat Cluster allows you to connect a group of computers (called nodes or members) to work together as a cluster. You can use Red Hat Cluster to suit your clustering needs (for example, setting up a cluster for sharing files on a GFS file system or setting up service failover).

1. Configuration Basics

To set up a cluster, you must connect the nodes to certain cluster hardware and configure the nodes into the cluster environment. This chapter provides an overview of cluster configuration and management, and tools available for configuring and managing a Red Hat Cluster.

Configuring and managing a Red Hat Cluster consists of the following basic steps:

1. Setting up hardware. Refer to Section 1.1, "Setting Up Hardware".

2. Installing Red Hat Cluster software. Refer to Section 1.2, "Installing Red Hat Cluster software".

3. Configuring Red Hat Cluster Software. Refer to Section 1.3, "Configuring Red Hat Cluster Software".

1.1. Setting Up Hardware

Setting up hardware consists of connecting cluster nodes to other hardware required to run a Red Hat Cluster. The amount and type of hardware varies according to the purpose and availability requirements of the cluster. Typically, an enterprise-level cluster requires the following type of hardware (refer to Figure 1.1, "Red Hat Cluster Hardware Overview").

Cluster nodes - Computers that are capable of running Red Hat Enterprise Linux 4 software, with at least 1GB of RAM.

Ethernet switch or hub for public network - This is required for client access to the cluster.

Ethernet switch or hub for private network - This is required for communication among the cluster nodes and other cluster hardware such as network power switches and Fibre Channel switches.

Network power switch - A network power switch is recommended to perform fencing in an enterprise-level cluster.

Fibre Channel switch - A Fibre Channel switch provides access to Fibre Channel storage. Other options are available for storage according to the type of storage interface; for example, iSCSI or GNBD. A Fibre Channel switch can be configured to perform fencing.

Storage - Some type of storage is required for a cluster. The type required depends on the purpose of the cluster.

For considerations about hardware and other cluster configuration concerns, refer to Chapter 2, Before Configuring a Red Hat Cluster or check with an authorized Red Hat representative.

Figure 1.1. Red Hat Cluster Hardware Overview

1.2. Installing Red Hat Cluster software

To install Red Hat Cluster software, you must have entitlements for the software. If you are using the Conga configuration GUI, you can let it install the cluster software. If you are using other tools to configure the cluster, secure and install the software as you would with Red Hat Enterprise Linux software.

1.3. Configuring Red Hat Cluster Software

Configuring Red Hat Cluster software consists of using configuration tools to specify the relationship among the cluster components. Figure 1.2, "Cluster Configuration Structure" shows an example of the hierarchical relationship among cluster nodes, high-availability services, and resources. The cluster nodes are connected to one or more fencing devices. Nodes can be grouped into a failover domain for a cluster service. The services comprise resources such as NFS exports, IP addresses, and shared GFS partitions.

Figure 1.2. Cluster Configuration Structure
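The hierarchy described above (nodes tied to fence devices, nodes grouped into a failover domain, and a service built from resources) can be sketched as an XML tree of the kind stored in the cluster configuration file. The following Python sketch is illustrative only: the cluster, node, device, domain, and service names and the IP address are hypothetical, the fence device entry omits the connection attributes a real device needs, and the element and attribute names are recalled from typical cluster.conf files and should be checked against the configuration your tools generate.

```python
import xml.etree.ElementTree as ET


def build_cluster_conf() -> ET.Element:
    """Build an illustrative cluster.conf-style tree (all names are hypothetical)."""
    cluster = ET.Element("cluster", name="example_cluster", config_version="1")

    # Cluster nodes, each referring to a fence device through a fence method.
    nodes = ET.SubElement(cluster, "clusternodes")
    for node_name, port in (("node1.example.com", "1"), ("node2.example.com", "2")):
        node = ET.SubElement(nodes, "clusternode", name=node_name, votes="1")
        method = ET.SubElement(ET.SubElement(node, "fence"), "method", name="1")
        ET.SubElement(method, "device", name="powerswitch", port=port)

    # Fence devices shared by the nodes (connection details omitted here).
    fencedevices = ET.SubElement(cluster, "fencedevices")
    ET.SubElement(fencedevices, "fencedevice", name="powerswitch", agent="fence_apc")

    # Resource manager section: failover domains, shared resources, services.
    rm = ET.SubElement(cluster, "rm")
    domains = ET.SubElement(rm, "failoverdomains")
    domain = ET.SubElement(domains, "failoverdomain", name="example_domain")
    for node_name in ("node1.example.com", "node2.example.com"):
        ET.SubElement(domain, "failoverdomainnode", name=node_name)

    resources = ET.SubElement(rm, "resources")
    ET.SubElement(resources, "ip", address="192.0.2.50")

    # A service assigned to the failover domain, reusing the shared IP resource.
    service = ET.SubElement(rm, "service", name="example_service", domain="example_domain")
    ET.SubElement(service, "ip", ref="192.0.2.50")
    return cluster


if __name__ == "__main__":
    print(ET.tostring(build_cluster_conf(), encoding="unicode"))
```

In practice the configuration tools described in this chapter write this file for you; the sketch is only meant to make the node, fence device, failover domain, resource, and service relationships concrete.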

The following cluster configuration tools are available with Red Hat Cluster:

Conga - This is a comprehensive user interface for installing, configuring, and managing Red Hat clusters, computers, and storage attached to clusters and computers.

system-config-cluster - This is a user interface for configuring and managing a Red Hat cluster.

Command line tools - This is a set of command line tools for configuring and managing a Red Hat cluster.

A brief overview of each configuration tool is provided in the following sections:

Section 2, "Conga"

Section 3, "system-config-cluster Cluster Administration GUI"

Section 4, "Command Line Administration Tools"

In addition, information about using Conga and system-config-cluster is provided in subsequent chapters of this document. Information about the command line tools is available in the man pages for the tools.

2. Conga

Conga is an integrated set of software components that provides centralized configuration and management of Red Hat clusters and storage. Conga provides the following major features:

One Web interface for managing cluster and storage

Automated Deployment of Cluster Data and Supporting Packages

Easy Integration with Existing Clusters

No Need to Re-Authenticate

Integration of Cluster Status and Logs

Fine-Grained Control over User Permissions

The primary components in Conga are luci and ricci, which are separately installable. luci is a server that runs on one computer and communicates with multiple clusters and computers via ricci. ricci is an agent that runs on each computer (either a cluster member or a standalone computer) managed by Conga.

luci is accessible through a Web browser and provides three major functions that are accessible through the following tabs:

homebase - Provides tools for adding and deleting computers, adding and deleting users, and configuring user privileges. Only a system administrator is allowed to access this tab.

cluster - Provides tools for creating and configuring clusters. Each instance of luci lists clusters that have been set up with that luci. A system administrator can administer all clusters listed on this tab. Other users can administer only clusters that the user has permission to manage (granted by an administrator).

storage - Provides tools for remote administration of storage. With the tools on this tab, you can manage storage on computers whether they belong to a cluster or not.

To administer a cluster or storage, an administrator adds (or registers) a cluster or a computer to a luci server. When a cluster or a computer is registered with luci, the FQDN hostname or IP address of each computer is stored in a luci database.

You can populate the database of one luci instance from another luci instance. That capability provides a means of replicating a luci server instance and provides an efficient upgrade and testing path. When you install an instance of luci, its database is empty. However, you can import part or all of a luci database from an existing luci server when deploying a new luci server.

Each luci instance has one user at initial installation - admin. Only the admin user may add systems to a luci server. Also, the admin user can create additional user accounts and determine which users are allowed to access clusters and computers registered in the luci database. It is possible to import users as a batch operation in a new luci server, just as it is possible to import clusters and computers.

When a computer is added to a luci server to be administered, authentication is done once. No authentication is necessary from then on (unless the certificate used is revoked by a CA). After that, you can remotely configure and manage clusters and storage through the luci user interface. luci and ricci communicate with each other via XML.

The following figures show sample displays of the three major luci tabs: homebase, cluster, and storage.

For more information about Conga, refer to Chapter 3, Configuring Red Hat Cluster With Conga, Chapter 4, Managing Red Hat Cluster With Conga, and the online help available with the luci server.


Figure 1.3. luci homebase Tab

Figure 1.4. luci cluster Tab


Figure 1.5. luci storage Tab

3. system-config-cluster Cluster Administration GUI

This section provides an overview of the cluster administration graphical user interface (GUI) available with Red Hat Cluster Suite - system-config-cluster. It is for use with the cluster infrastructure and the high-availability service management components. system-config-cluster consists of two major functions: the Cluster Configuration Tool and the Cluster Status Tool. The Cluster Configuration Tool provides the capability to create, edit, and propagate the cluster configuration file (/etc/cluster/cluster.conf). The Cluster Status Tool provides the capability to manage high-availability services. The following sections summarize those functions.

Note

While system-config-cluster provides several convenient tools for configuring and managing a Red Hat Cluster, the newer, more comprehensive tool, Conga, provides more convenience and flexibility than system-config-cluster.

3.1. Cluster Configuration Tool

You can access the Cluster Configuration Tool (Figure 1.6, "Cluster Configuration Tool") through the Cluster Configuration tab in the Cluster Administration GUI.

Figure 1.6. Cluster Configuration Tool

The Cluster Configuration Tool represents cluster configuration components in the configuration file (/etc/cluster/cluster.conf) with a hierarchical graphical display in the left pane. A triangle icon to the left of a component name indicates that the component has one or more subordinate components assigned to it. Clicking the triangle icon expands and collapses the portion of the tree below a component. The components displayed in the GUI are summarized as follows:

Cluster Nodes - Displays cluster nodes. Nodes are represented by name as subordinate elements under Cluster Nodes. Using configuration buttons at the bottom of the right frame (below Properties), you can add nodes, delete nodes, edit node properties, and configure fencing methods for each node.

Fence Devices - Displays fence devices. Fence devices are represented as subordinate elements under Fence Devices. Using configuration buttons at the bottom of the right frame (below Properties), you can add fence devices, delete fence devices, and edit fence-device properties. Fence devices must be defined before you can configure fencing (with the Manage Fencing For This Node button) for each node.

Managed Resources - Displays failover domains, resources, and services.

Failover Domains - For configuring one or more subsets of cluster nodes used to run a high-availability service in the event of a node failure. Failover domains are represented as subordinate elements under Failover Domains. Using configuration buttons at the bottom of the right frame (below Properties), you can create failover domains (when Failover Domains is selected) or edit failover domain properties (when a failover domain is selected).

Resources - For configuring shared resources to be used by high-availability services. Shared resources consist of file systems, IP addresses, NFS mounts and exports, and user-created scripts that are available to any high-availability service in the cluster. Resources are represented as subordinate elements under Resources. Using configuration buttons at the bottom of the right frame (below Properties), you can create resources (when Resources is selected) or edit resource properties (when a resource is selected).

Note

The Cluster Configuration Tool provides the capability to configure private resources, also. A private resource is a resource that is configured for use with only one service. You can configure a private resource within a Service component in the GUI.

Services - For creating and configuring high-availability services. A service is configured by assigning resources (shared or private), assigning a failover domain, and defining a recovery policy for the service. Services are represented as subordinate elements under Services. Using configuration buttons at the bottom of the right frame (below Properties), you can create services (when Services is selected) or edit service properties (when a service is selected).

3.2. Cluster Status Tool

You can access the Cluster Status Tool (Figure 1.7, "Cluster Status Tool") through the Cluster Management tab in Cluster Administration GUI.

Figure 1.7. Cluster Status Tool

The nodes and services displayed in the Cluster Status Tool are determined by the cluster configuration file (/etc/cluster/cluster.conf). You can use the Cluster Status Tool to enable, disable, restart, or relocate a high-availability service.
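Because the displayed nodes and services come from the cluster configuration file, the same list can be read directly from that file. The following Python sketch shows one way to do so with the standard library. The sample XML, including every name in it, is hypothetical, and the element paths (clusternodes/clusternode and rm/service) are assumptions based on typical cluster.conf layouts rather than a statement of the full schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed configuration used only for illustration.
SAMPLE_CONF = """
<cluster name="example_cluster" config_version="3">
  <clusternodes>
    <clusternode name="node1.example.com" votes="1"/>
    <clusternode name="node2.example.com" votes="1"/>
  </clusternodes>
  <rm>
    <service name="example_service" domain="example_domain"/>
  </rm>
</cluster>
"""


def summarize_cluster_conf(xml_text: str) -> dict:
    """Return the cluster name, node names, and service names found in xml_text."""
    root = ET.fromstring(xml_text)
    return {
        "cluster": root.get("name"),
        "nodes": [n.get("name") for n in root.findall("./clusternodes/clusternode")],
        "services": [s.get("name") for s in root.findall("./rm/service")],
    }


if __name__ == "__main__":
    # On a cluster node you might instead read /etc/cluster/cluster.conf.
    print(summarize_cluster_conf(SAMPLE_CONF))
```

This only reports what is configured; the live state of nodes and services is what the Cluster Status Tool itself shows.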

4. Command Line Administration Tools

In addition to Conga and the system-config-cluster Cluster Administration GUI, command line tools are available for administering the cluster infrastructure and the high-availability service management components. The command line tools are used by the Cluster Administration GUI and init scripts supplied by Red Hat. Table 1.1, "Command Line Tools" summarizes the command line tools.

| Command Line Tool | Used With | Purpose |
| --- | --- | --- |
| ccs_tool - Cluster Configuration System Tool | Cluster Infrastructure | ccs_tool is a program for making online updates to the cluster configuration file. It provides the capability to create and modify cluster infrastructure components (for example, creating a cluster, adding and removing a node). For more information about this tool, refer to the ccs_tool(8) man page. |
| cman_tool - Cluster Management Tool | Cluster Infrastructure | cman_tool is a program that manages the CMAN cluster manager. It provides the capability to join a cluster, leave a cluster, kill a node, or change the expected quorum votes of a node in a cluster. cman_tool is available with DLM clusters only. For more information about this tool, refer to the cman_tool(8) man page. |
| gulm_tool - Cluster Management Tool | Cluster Infrastructure | gulm_tool is a program used to manage GULM. It provides an interface to lock_gulmd, the GULM lock manager. gulm_tool is available with GULM clusters only. For more information about this tool, refer to the gulm_tool(8) man page. |
| fence_tool - Fence Tool | Cluster Infrastructure | fence_tool is a program used to join or leave the default fence domain. Specifically, it starts the fence daemon (fenced) to join the domain and kills fenced to leave the domain. fence_tool is available with DLM clusters only. For more information about this tool, refer to the fence_tool(8) man page. |
| clustat - Cluster Status Utility | High-availability Service Management Components | The clustat command displays the status of the cluster. It shows membership information, quorum view, and the state of all configured user services. For more information about this tool, refer to the clustat(8) man page. |
| clusvcadm - Cluster User Service Administration Utility | High-availability Service Management Components | The clusvcadm command allows you to enable, disable, relocate, and restart high-availability services in a cluster. For more information about this tool, refer to the clusvcadm(8) man page. |

Table 1.1. Command Line Tools
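As a rough illustration of how a script might drive the service administration utility from Table 1.1, the following Python sketch builds clusvcadm command lines for the four actions the table lists. The option letters (-e, -d, -R, -r, and -m for a target member) are given as recalled from the clusvcadm(8) man page and should be verified against the man page on your system; the service and member names are hypothetical. The sketch only builds the argument list and does not run anything.

```python
import shlex

# Option letters as recalled from clusvcadm(8); verify them locally before use.
ACTION_FLAGS = {
    "enable": "-e",
    "disable": "-d",
    "restart": "-R",
    "relocate": "-r",
}


def clusvcadm_command(action, service, member=None):
    """Return the argv list for a clusvcadm call (the command is not executed)."""
    if action not in ACTION_FLAGS:
        raise ValueError(f"unsupported action: {action!r}")
    argv = ["clusvcadm", ACTION_FLAGS[action], service]
    if member is not None:
        # A target member only makes sense when a service is placed on a node.
        if action not in ("enable", "relocate"):
            raise ValueError(f"a member cannot be given with {action!r}")
        argv += ["-m", member]
    return argv


if __name__ == "__main__":
    cmd = clusvcadm_command("relocate", "example_service", "node2.example.com")
    print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the arguments safe to pass to subprocess.run without a shell, should you choose to execute the command.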

Chapter 2. Before Configuring a Red Hat Cluster

This chapter describes tasks to perform and considerations to make before installing and configuring a Red Hat Cluster, and consists of the following sections:

Section 1, "Compatible Hardware"

Section 2, "Enabling IP Ports"

Section 3, "Configuring ACPI For Use with Integrated Fence Devices"

Section 4, "Configuring max_luns"

Section 5, "Considerations for Using Quorum Disk"

Section 6, "Red Hat Cluster Suite and SELinux"

Section 7, "Considerations for Using Conga"

Section 8, "General Configuration Considerations"

1. Compatible Hardware

Before configuring Red Hat Cluster software, make sure that your cluster uses appropriate hardware (for example, supported fence devices, storage devices, and Fibre Channel switches). Refer to the hardware configuration guidelines at http://www.redhat.com/cluster_suite/hardware/ for the most current hardware compatibility information.

2. Enabling IP Ports

Before deploying a Red Hat Cluster, you must enable certain IP ports on the cluster nodes and on computers that run luci (the Conga user interface server). The following sections specify the IP ports to be enabled and provide examples of iptables rules for enabling the ports:

Section 2.1, "Enabling IP Ports on Cluster Nodes"

Section 2.2, "Enabling IP Ports on Computers That Run luci"

Section 2.3, "Examples of iptables Rules"

2.1. Enabling IP Ports on Cluster Nodes

To allow Red Hat Cluster nodes to communicate with each other, you must enable the IP ports assigned to certain Red Hat Cluster components. Table 2.1, "Enabled IP Ports on Red Hat Cluster Nodes" lists the IP port numbers, their respective protocols, the components to which the port numbers are assigned, and references to iptables rule examples. At each cluster node, enable IP ports according to Table 2.1, "Enabled IP Ports on Red Hat Cluster Nodes". (All examples are in Section 2.3, "Examples of iptables Rules".)

| IP Port Number | Protocol | Component | Reference to Example of iptables Rules |
| --- | --- | --- | --- |
| 6809 | UDP | cman (Cluster Manager), for use in clusters with Distributed Lock Manager (DLM) selected | Example 2.1, "Port 6809: cman" |
| 11111 | TCP | ricci (part of Conga remote agent) | Example 2.3, "Port 11111: ricci (Cluster Node and Computer Running luci)" |
| 14567 | TCP | gnbd (Global Network Block Device) | Example 2.4, "Port 14567: gnbd" |
| 16851 | TCP | modclusterd (part of Conga remote agent) | Example 2.5, "Port 16851: modclusterd" |
| 21064 | TCP | dlm (Distributed Lock Manager), for use in clusters with Distributed Lock Manager (DLM) selected | Example 2.6, "Port 21064: dlm" |
| 40040, 40042, 41040 | TCP | lock_gulmd (GULM daemon), for use in clusters with Grand Unified Lock Manager (GULM) selected | Example 2.7, "Ports 40040, 40042, 41040: lock_gulmd" |
| 41966, 41967, 41968, 41969 | TCP | rgmanager (high-availability service management) | Example 2.8, "Ports 41966, 41967, 41968, 41969: rgmanager" |
| 50006, 50008, 50009 | TCP | ccsd (Cluster Configuration System daemon) | Example 2.9, "Ports 50006, 50008, 50009: ccsd (TCP)" |
| 50007 | UDP | ccsd (Cluster Configuration System daemon) | Example 2.10, "Port 50007: ccsd (UDP)" |

Table 2.1. Enabled IP Ports on Red Hat Cluster Nodes

2.2. Enabling IP Ports on Computers That Run luci

To allow client computers to communicate with a computer that runs luci (the Conga user interface server), and to allow a computer that runs luci to communicate with ricci in the cluster nodes, you must enable the IP ports assigned to luci and ricci. Table 2.2, "Enabled IP Ports on a Computer That Runs luci" lists the IP port numbers, their respective protocols, the components to which the port numbers are assigned, and references to iptables rule examples. At each computer that runs luci, enable IP ports according to Table 2.2, "Enabled IP Ports on a Computer That Runs luci". (All examples are in Section 2.3, "Examples of iptables Rules".)

Note

If a cluster node is running luci, port 11111 should already have been enabled.

IP Port Number  Protocol  Component                           Reference to Example of iptables Rules
--------------  --------  ----------------------------------  --------------------------------------
8084            TCP       luci (Conga user interface server)  Example 2.2, "Port 8084: luci (Cluster Node or Computer Running luci)"
11111           TCP       ricci (Conga remote agent)          Example 2.3, "Port 11111: ricci (Cluster Node and Computer Running luci)"

Table 2.2. Enabled IP Ports on a Computer That Runs luci

2.3. Examples of iptables Rules

This section provides iptables rule examples for enabling IP ports on Red Hat Cluster nodes and computers that run luci. The examples enable IP ports for a computer having an IP address of 10.10.10.200, using a subnet mask of 10.10.10.0/24.

Note

Examples are for cluster nodes unless otherwise noted in the example titles.

-A INPUT -i 10.10.10.200 -m state --state NEW -p udp -s 10.10.10.0/24 -d 10.10.10.0/24 --dport 6809 -j ACCEPT

Example 2.1. Port 6809: cman

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 8084 -j ACCEPT

Example 2.2. Port 8084: luci (Cluster Node or Computer Running luci)

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 11111 -j ACCEPT

Example 2.3. Port 11111: ricci (Cluster Node and Computer Running luci)

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 14567 -j ACCEPT

Example 2.4. Port 14567: gnbd

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 16851 -j ACCEPT

Example 2.5. Port 16851: modclusterd

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 21064 -j ACCEPT

Example 2.6. Port 21064: dlm

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 40040,40042,41040 -j ACCEPT

Example 2.7. Ports 40040, 40042, 41040: lock_gulmd

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 41966,41967,41968,41969 -j ACCEPT

Example 2.8. Ports 41966, 41967, 41968, 41969: rgmanager

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 50006,50008,50009 -j ACCEPT

Example 2.9. Ports 50006, 50008, 50009: ccsd (TCP)

-A INPUT -i 10.10.10.200 -m state --state NEW -m multiport -p udp -s 10.10.10.0/24 -d 10.10.10.0/24 --dports 50007 -j ACCEPT

Example 2.10. Port 50007: ccsd (UDP)
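Because the rules in this section differ only in protocol and port list, they can be generated from the port tables instead of being typed by hand. The following Python sketch is an illustration only: the names CLUSTER_PORTS and iptables_rule are hypothetical, and the port data is transcribed from Table 2.1 and Table 2.2. The sketch leaves out the -i option shown in the examples, because iptables expects a network interface name for that option; add it back if your rules must match a specific interface.

```python
# Port data transcribed from Table 2.1 and Table 2.2 of this chapter.
# Each entry is (component, protocol, list of destination ports).
CLUSTER_PORTS = [
    ("cman", "udp", [6809]),
    ("luci", "tcp", [8084]),
    ("ricci", "tcp", [11111]),
    ("gnbd", "tcp", [14567]),
    ("modclusterd", "tcp", [16851]),
    ("dlm", "tcp", [21064]),
    ("lock_gulmd", "tcp", [40040, 40042, 41040]),
    ("rgmanager", "tcp", [41966, 41967, 41968, 41969]),
    ("ccsd", "tcp", [50006, 50008, 50009]),
    ("ccsd", "udp", [50007]),
]


def iptables_rule(protocol, ports, subnet="10.10.10.0/24"):
    """Build one rule line in the style of the examples in this section.

    The default subnet is the example subnet used in this section;
    replace it with the subnet of your own cluster network.
    """
    port_list = ",".join(str(port) for port in ports)
    return (
        f"-A INPUT -m state --state NEW -m multiport -p {protocol} "
        f"-s {subnet} -d {subnet} --dports {port_list} -j ACCEPT"
    )


if __name__ == "__main__":
    # Print one rule per table row, preceded by a comment naming the component.
    for component, protocol, ports in CLUSTER_PORTS:
        print(f"# {component}")
        print(iptables_rule(protocol, ports))
```

Review the generated lines before adding them to your firewall configuration; a GULM cluster does not need the cman and dlm rules, and a DLM cluster does not need the lock_gulmd rule.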

3. Configuring ACPI For Use with Integrated Fence Devices

If your cluster uses integrated fence devices, you must configure ACPI (Advanced Configuration and Power Interface) to ensure immediate and complete fencing.

Note

For the most current information about integrated fence devices supported by Red Hat Cluster Suite, refer to http://www.redhat.com/cluster_suite/hardware/.

If a cluster node is configured to be fenced by an integrated fence device, disable ACPI Soft-Off for that node. Disabling ACPI Soft-Off allows an integrated fence device to turn off a node immediately and completely rather than attempting a clean shutdown (for example, shutdown -h now). Otherwise, if ACPI Soft-Off is enabled, an integrated fence device can take four or more seconds to turn off a node (refer to the note that follows). In addition, if ACPI Soft-Off is enabled and a node panics or freezes during shutdown, an integrated fence device may not be able to turn off the node. Under those circumstances, fencing is delayed or unsuccessful. Consequently, when a node is fenced with an integrated fence device and ACPI Soft-Off is enabled, a cluster recovers slowly or requires administrative intervention to recover.

Note

The amount of time required to fence a node depends on the integrated fence device used. Some integrated fence devices perform the equivalent of pressing and holding the power button; therefore, the fence device turns off the node in four to five seconds. Other integrated fence devices perform the equivalent of pressing the power button momentarily, relying on the operating system to turn off the node; therefore, the fence device turns off the node in a time span much longer than four to five seconds.

To disable ACPI Soft-Off, use chkconfig management and verify that the node turns off immediately when fenced. The preferred way to disable ACPI Soft-Off is with chkconfig management; however, if that method is not satisfactory for your cluster, you can disable ACPI Soft-Off with one of the following alternate methods:

Changing the BIOS setting to "instant-off" or an equivalent setting that turns off the node without delay

Note

Disabling ACPI Soft-Off with the BIOS may not be possible with some computers.

Appending acpi=off to the kernel boot command line of the /boot/grub/grub.conf file

Important

This method completely disables ACPI; some computers do not boot correctly if ACPI is completely disabled. Use this method only if the other methods are not effective for your cluster.

The following sections provide procedures for the preferred method and alternate methods of disabling ACPI Soft-Off:

Section 3.1, "Disabling ACPI Soft-Off with chkconfig Management" - Preferred method

Section 3.2, "Disabling ACPI Soft-Off with the BIOS" - First alternate method

Section 3.3, "Disabling ACPI Completely in the grub.conf File" - Second alternate method

3.1. Disabling ACPI Soft-Off with chkconfig Management

You can use chkconfig management to disable ACPI Soft-Off either by removing the ACPI daemon (acpid) from chkconfig management or by turning off acpid.

Note

This is the preferred method of disabling ACPI Soft-Off.

Disable ACPI Soft-Off with chkconfig management at each cluster node as follows:

1. Run either of the following commands:

chkconfig --del acpid - This command removes acpid from chkconfig management.

- OR -

chkconfig --level 2345 acpid off - This command turns off acpid.

2. Reboot the node.

3. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Tip

You can fence the node with the fence_node command or Conga.

3.2. Disabling ACPI Soft-Off with the BIOS

The preferred method of disabling ACPI Soft-Off is with chkconfig management (Section 3.1, "Disabling ACPI Soft-Off with chkconfig Management"). However, if the preferred method is not effective for your cluster, follow the procedure in this section.

Note

Disabling ACPI Soft-Off with the BIOS may not be possible with some computers.

You can disable ACPI Soft-Off by configuring the BIOS of each cluster node as follows:

1. Reboot the node and start the BIOS CMOS Setup Utility program.

2. Navigate to the Power menu (or equivalent power management menu).

3. At the Power menu, set the Soft-Off by PWR-BTTN function (or equivalent) to Instant-Off (or the equivalent setting that turns off the node via the power button without delay). Example 2.11, "BIOS CMOS Setup Utility: Soft-Off by PWR-BTTN set to Instant-Off" shows a Power menu with ACPI Function set to Enabled and Soft-Off by PWR-BTTN set to Instant-Off.

Note

The equivalents to ACPI Function, Soft-Off by PWR-BTTN, and Instant-Off may vary among computers. However, the objective of this procedure is to configure the BIOS so that the computer is turned off via the power button without delay.

4. Exit the BIOS CMOS Setup Utility program, saving the BIOS configuration.

5. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Tip

You can fence the node with the fence_node command or Conga.

+-------------------------------------------------|------------------------+
|    ACPI Function               [Enabled]        |    Item Help           |
|    ACPI Suspend Type           [S1(POS)]        |------------------------|
|  x Run VGABIOS if S3 Resume    Auto             |    Menu Level   *      |
|    Suspend Mode                [Disabled]       |                        |
|    HDD Power Down              [Disabled]       |                        |
|    Soft-Off by PWR-BTTN        [Instant-Off]    |                        |
|    CPU THRM-Throttling         [50.0%]          |                        |
|    Wake-Up by PCI card         [Enabled]        |                        |
|    Power On by Ring            [Enabled]        |                        |
|    Wake Up On LAN              [Enabled]        |                        |
|  x USB KB Wake-Up From S3      Disabled         |                        |
|    Resume by Alarm             [Disabled]       |                        |
|  x Date(of Month) Alarm        0                |                        |
|  x Time(hh:mm:ss) Alarm        0 : 0 : 0        |                        |
|    POWER ON Function           [BUTTON ONLY]    |                        |
|  x KB Power ON Password        Enter            |                        |
|  x Hot Key Power ON            Ctrl-F1          |                        |
|                                                 |                        |
|                                                 |                        |
+-------------------------------------------------|------------------------+

This example shows ACPI Function set to Enabled, and Soft-Off by PWR-BTTN set to Instant-Off.

Example 2.11. BIOS CMOS Setup Utility: Soft-Off by PWR-BTTN set to Instant-Off

3.3. Disabling ACPI Completely in the grub.conf File

The preferred method of disabling ACPI Soft-Off is with chkconfig management (Section 3.1, "Disabling ACPI Soft-Off with chkconfig Management"). If the preferred method is not effective for your cluster, you can disable ACPI Soft-Off with the BIOS power management (Section 3.2, "Disabling ACPI Soft-Off with the BIOS"). If neither of those methods is effective for your cluster, you can disable ACPI completely by appending acpi=off to the kernel boot command line in the grub.conf file.

Important

This method completely disables ACPI; some computers do not boot correctly if ACPI is completely disabled. Use this method only if the other methods are not effective for your cluster.

You can disable ACPI completely by editing the grub.conf file of each cluster node as follows:

1. Open /boot/grub/grub.conf with a text editor.

2. Append acpi=off to the kernel boot command line in /boot/grub/grub.conf (refer to Example 2.12, "Kernel Boot Command Line with acpi=off Appended to It").

3. Reboot the node.

4. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Tip

You can fence the node with the fence_node command or Conga.

# grub.conf generated by anaconda
#
# Note that you do not have to rerun grub after making changes to this file
# NOTICE:  You have a /boot partition.  This means that
#          all kernel and initrd paths are relative to /boot/, eg.
#          root (hd0,0)
#          kernel /vmlinuz-version ro root=/dev/VolGroup00/LogVol00
#          initrd /initrd-version.img
#boot=/dev/hda
default=0
timeout=5
serial --unit=0 --speed=115200
terminal --timeout=5 serial console
title Red Hat Enterprise Linux Server (2.6.18-36.el5)
        root (hd0,0)
        kernel /vmlinuz-2.6.18-36.el5 ro root=/dev/VolGroup00/LogVol00 console=ttyS0,115200n8 acpi=off
        initrd /initrd-2.6.18-36.el5.img

In this example, acpi=off has been appended to the kernel boot command line - the line starting with "kernel /vmlinuz-2.6.18-36.el5".

Example 2.12. Kernel Boot Command Line with acpi=off Appended to It
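When the same change has to be made on every cluster node, the edit in step 2 can be scripted. The following Python sketch is an illustration only: the function name append_kernel_option is hypothetical, and the sketch assumes that every active kernel line in the file (every boot entry) should receive the option. It works on the text of the file and leaves reading and writing /boot/grub/grub.conf, and keeping a backup copy, to the caller.

```python
def append_kernel_option(grub_text, option="acpi=off"):
    """Return grub.conf text with `option` appended to each kernel line.

    Commented-out lines (first word starts with "#") are left alone, and
    a kernel line that already carries the option is not changed again.
    """
    result = []
    for line in grub_text.splitlines():
        words = line.split()
        if words and words[0] == "kernel" and option not in words:
            line = line.rstrip() + " " + option
        result.append(line)
    return "\n".join(result) + "\n"
```

Running the function twice on the same text gives the same result as running it once, so it is safe to reapply after a kernel update adds a new boot entry.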

4. Configuring max_luns

If RAID storage in your cluster presents multiple LUNs (Logical Unit Numbers), each cluster node must be able to access those LUNs. To enable access to all LUNs presented, configure max_luns in the /etc/modprobe.conf file of each node as follows:

1. Open /etc/modprobe.conf with a text editor.

2. Append the following line to /etc/modprobe.conf. Set N to the highest numbered LUN that is presented by RAID storage.

options scsi_mod max_luns=N

For example, with the following line appended to the /etc/modprobe.conf file, a node can access LUNs numbered as high as 255:

options scsi_mod max_luns=255

3. Save /etc/modprobe.conf.

4. Run mkinitrd to rebuild initrd for the currently running kernel as follows. Set the kernel variable to the currently running kernel:

# cd /boot
# mkinitrd -f -v initrd-kernel.img kernel

For example, the currently running kernel in the following mkinitrd command is 2.6.9-34.0.2.EL:

# mkinitrd -f -v initrd-2.6.9-34.0.2.EL.img 2.6.9-34.0.2.EL

Tip

You can determine the currently running kernel by running uname -r.

5. Restart the node.
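Step 2 of this procedure can be sketched in Python for use on many nodes. This is an illustration under stated assumptions, not part of the procedure: the function name set_max_luns is hypothetical, and the sketch goes slightly beyond the text by updating an existing "options scsi_mod" line instead of appending a second one. It operates on the text of /etc/modprobe.conf; rebuilding initrd and restarting the node (steps 4 and 5) are still required.

```python
import re


def set_max_luns(modprobe_text, highest_lun):
    """Return modprobe.conf text with scsi_mod max_luns set to highest_lun."""
    setting = f"max_luns={highest_lun}"
    lines = modprobe_text.splitlines()
    found = False
    for index, line in enumerate(lines):
        # Only active "options scsi_mod ..." lines are considered.
        if line.split()[:2] == ["options", "scsi_mod"]:
            if re.search(r"\bmax_luns=\S+", line):
                # Replace the existing value in place.
                lines[index] = re.sub(r"\bmax_luns=\S+", setting, line)
            else:
                # Keep other scsi_mod options and add max_luns to them.
                lines[index] = line.rstrip() + " " + setting
            found = True
    if not found:
        lines.append("options scsi_mod " + setting)
    return "\n".join(lines) + "\n"
```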

5. Considerations for Using Quorum Disk

Quorum Disk is a disk-based quorum daemon, qdiskd, that provides supplemental heuristics to determine node fitness. With heuristics you can determine factors that are important to the operation of the node in the event of a network partition. For example, in a four-node cluster with a 3:1 split, ordinarily, the three nodes automatically "win" because of the three-to-one majority. Under those circumstances, the one node is fenced. With qdiskd however, you can set up heuristics that allow the one node to win based on access to a critical resource (for example, a critical network path). If your cluster requires additional methods of determining node health, then you should configure qdiskd to meet those needs.

Note

Configuring qdiskd is not required unless you have special requirements for node health. An example of a special requirement is an "all-but-one" configuration. In an all-but-one configuration, qdiskd is configured to provide enough quorum votes to maintain quorum even though only one node is working.

Important

Overall, heuristics and other qdiskd parameters for your Red Hat Cluster depend on the site environment and special requirements needed. To understand the use of heuristics and other qdiskd parameters, refer to the qdisk(5) man page. If you require assistance understanding and using qdiskd for your site, contact an authorized Red Hat support representative.

If you need to use qdiskd, you should take into account the following considerations:

Cluster node votes

Each cluster node should have the same number of votes.

CMAN membership timeout value

The CMAN membership timeout value (the time a node needs to be unresponsive before CMAN considers that node to be dead, and not a member) should be at least two times that of the qdiskd membership timeout value. The reason is that the quorum daemon must detect failed nodes on its own, and can take much longer to do so than CMAN. The default value for CMAN membership timeout is 10 seconds. Other site-specific conditions may affect the relationship between the membership timeout values of CMAN and qdiskd. For assistance with adjusting the CMAN membership timeout value, contact an authorized Red Hat support representative.

Fencing

To ensure reliable fencing when using qdiskd, use power fencing. While other types of fencing (such as watchdog timers and software-based solutions to reboot a node internally) can be reliable for clusters not configured with qdiskd, they are not reliable for a cluster configured with qdiskd.

Maximum nodes

A cluster configured with qdiskd supports a maximum of 16 nodes. The limit exists for scalability reasons; increasing the node count increases the amount of synchronous I/O contention on the shared quorum disk device.

Quorum disk device

A quorum disk device should be a shared block device with concurrent read/write access by all nodes in a cluster. The minimum size of the block device is 10 Megabytes. Examples of shared block devices that can be used by qdiskd are a multi-port SCSI RAID array, a Fibre Channel RAID SAN, or a RAID-configured iSCSI target. You can create a quorum disk device with mkqdisk, the Cluster Quorum Disk Utility. For information about using the utility refer to the mkqdisk(8) man page.

Note

Using JBOD as a quorum disk is not recommended. A JBOD cannot provide dependable performance and therefore may not allow a node to write to it quickly enough. If a node is unable to write to a quorum disk device quickly enough, the node is falsely evicted from a cluster.
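The numeric considerations in this section (equal node votes, a CMAN membership timeout at least twice the qdiskd membership timeout, at most 16 nodes, and a quorum disk device of at least 10 Megabytes) can be checked mechanically before a cluster is configured. The following Python sketch is an illustration only: the function name check_qdiskd_plan and its parameters are hypothetical, and the caller is assumed to know the qdiskd membership timeout for the planned configuration.

```python
def check_qdiskd_plan(node_votes, cman_timeout_s, qdiskd_timeout_s, device_size_mb):
    """Return a list of problems found in a planned qdiskd configuration.

    node_votes is a list with one vote count per cluster node. An empty
    list means that none of the checks from this section failed.
    """
    problems = []
    if len(set(node_votes)) > 1:
        problems.append("cluster nodes do not all have the same number of votes")
    if cman_timeout_s < 2 * qdiskd_timeout_s:
        problems.append("CMAN membership timeout is less than twice the qdiskd timeout")
    if len(node_votes) > 16:
        problems.append("more than 16 nodes")
    if device_size_mb < 10:
        problems.append("quorum disk device is smaller than 10 MB")
    return problems
```

For example, a four-node cluster with one vote per node, the default CMAN timeout of 10 seconds, a qdiskd timeout of 5 seconds, and a 10 MB device passes every check. The sketch cannot check the fencing and device-type considerations, which depend on the hardware in use.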

6. Red Hat Cluster Suite and SELinux

Red Hat Cluster Suite for Red Hat Enterprise Linux 4 requires that SELinux be disabled. Before configuring a Red Hat cluster, make sure to disable SELinux. For example, you can disable SELinux upon installation of Red Hat Enterprise Linux 4 or you can specify SELINUX=disabled in the /etc/selinux/config file.
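To confirm the setting on each node before configuring the cluster, the configuration file can be inspected with a short script. The following Python sketch is an illustration only: the function name selinux_mode is hypothetical, and the sketch reports what the file requests at the next boot, not the state of the running system.

```python
def selinux_mode(config_text):
    """Return the SELINUX= value found in /etc/selinux/config text.

    Returns None when the file has no SELINUX= line. Comment lines are
    ignored, and the last SELINUX= line wins if there are several.
    """
    mode = None
    for line in config_text.splitlines():
        line = line.strip()
        if line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip() == "SELINUX":
            mode = value.strip().lower()
    return mode
```

A node is ready in this respect when selinux_mode returns "disabled" for the contents of its /etc/selinux/config file.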

7. Considerations for Using Conga

When using Conga to configure and manage your Red Hat Cluster, make sure that each computer running luci (the Conga user interface server) is running on the same network that the cluster is using for cluster communication. Otherwise, luci cannot configure the nodes to communicate on the right network. If the computer running luci is on another network (for example, a public network rather than a private network that the cluster is communicating on), contact an authorized Red Hat support representative to make sure that the appropriate host name is configured for each cluster node.

8. General Configuration Considerations

You can configure a Red Hat Cluster in a variety of ways to suit your needs. Take into account the following considerations when you plan, configure, and implement your Red Hat Cluster.

No-single-point-of-failure hardware configuration

Clusters can include a dual-controller RAID array, multiple bonded network channels, multiple paths between cluster members and storage, and redundant uninterruptible power supply (UPS) systems to ensure that no single failure results in application down time or loss of data.

Alternatively, a low-cost cluster can be set up to provide less availability than a no-single-point-of-failure cluster. For example, you can set up a cluster with a single-controller RAID array and only a single Ethernet channel.

Certain low-cost alternatives, such as host RAID controllers, software RAID without cluster support, and multi-initiator parallel SCSI configurations are not compatible or appropriate for use as shared cluster storage.

Data integrity assurance

To ensure data integrity, only one node can run a cluster service and access cluster-service data at a time. The use of power switches in the cluster hardware configuration enables a node to power-cycle another node before restarting that node's cluster services during a failover process. This prevents two nodes from simultaneously accessing the same data and corrupting it. It is strongly recommended that fence devices (hardware or software solutions that remotely power, shut down, and reboot cluster nodes) are used to guarantee data integrity under all failure conditions. Watchdog timers provide an alternative way to ensure correct operation of cluster service failover.

Ethernet channel bonding

Cluster quorum and node health are determined by communication of messages among cluster nodes via Ethernet. In addition, cluster nodes use Ethernet for a variety of other critical cluster functions (for example, fencing). With Ethernet channel bonding, multiple Ethernet interfaces are configured to behave as one, reducing the risk of a single-point-of-failure in the typical switched Ethernet connection among cluster nodes and other cluster hardware.

Chapter 3. Configuring Red Hat Cluster With Conga

This chapter describes how to configure Red Hat Cluster software using Conga, and consists of the following sections:

Section 1, "Configuration Tasks"

Section 2, "Starting luci and ricci"

Section 3, "Creating A Cluster"

Section 4, "Global Cluster Properties"

Section 5, "Configuring Fence Devices"

Section 6, "Configuring Cluster Members"

Section 7, "Configuring a Failover Domain"

Section 8, "Adding Cluster Resources"

Section 9, "Adding a Cluster Service to the Cluster"

Section 10, "Configuring Cluster Storage"

1. Configuration Tasks

Configuring Red Hat Cluster software with Conga consists of the following steps:

1. Configuring and running the Conga configuration user interface - the luci server. Refer to Section 2, "Starting luci and ricci".

2. Creating a cluster. Refer to Section 3, "Creating A Cluster".

3. Configuring global cluster properties. Refer to Section 4, "Global Cluster Properties".

4. Configuring fence devices. Refer to Section 5, "Configuring Fence Devices".

5. Configuring cluster members. Refer to Section 6, "Configuring Cluster Members".

6. Creating failover domains. Refer to Section 7, "Configuring a Failover Domain".

7. Creating resources. Refer to Section 8, "Adding Cluster Resources".

8. Creating cluster services. Refer to Section 9, "Adding a Cluster Service to the Cluster".

9. Configuring storage. Refer to Section 10, "Configuring Cluster Storage".

2. Starting luci and ricci

To administer Red Hat Clusters with Conga, install and run luci and ricci as follows:

1. At each node to be administered by Conga, install the ricci agent. For example:

# up2date -i ricci

2. At each node to be administered by Conga, start ricci. For example:

# service ricci start
Starting ricci:                                            [  OK  ]

3. Select a computer to host luci and install the luci software on that computer. For example:

# up2date -i luci

Note

Typically, a computer in a server cage or a data center hosts luci; however, a cluster computer can host luci.

4. At the computer running luci, initialize the luci server using the luci_admin init command. For example:

# luci_admin init
Initializing the Luci server
Creating the 'admin' user
Enter password: <Type password and press ENTER.>
Confirm password: <Re-type password and press ENTER.>
Please wait...
The admin password has been successfully set.
Generating SSL certificates...
Luci server has been successfully initialized
Restart the Luci server for changes to take effect
eg. service luci restart

5. Start luci using service luci restart. For example:

# service luci restart
Shutting down luci:                                        [  OK  ]
Starting luci: generating https SSL certificates...  done
                                                           [  OK  ]
Please, point your web browser to https://nano-01:8084 to access luci

6. At a Web browser, place the URL of the luci server into the URL address box and click Go (or the equivalent). The URL syntax for the luci server is https://luci_server_hostname:8084. The first time you access luci, two SSL certificate dialog boxes are displayed. Upon acknowledging the dialog boxes, your Web browser displays the luci login page.

3. Creating A Cluster

Creating a cluster with luci consists of selecting cluster nodes, entering their passwords, and submitting the request to create a cluster. If the node information and passwords are correct, Conga automatically installs software into the cluster nodes and starts the cluster. Create a cluster as follows:

1. As administrator of luci, select the cluster tab.

2. Click Create a New Cluster.

3. At the Cluster Name text box, enter a cluster name. The cluster name cannot exceed 15 characters. Add the node name and password for each cluster node. Enter the node name for each node in the Node Hostname column; enter the root password for each node in the Root Password column. Check the Enable Shared Storage Support checkbox if clustered storage is required.

4. Click Submit. Clicking Submit causes the Create a new cluster page to be displayed again, showing the parameters entered in the preceding step, and Lock Manager parameters. The Lock Manager parameters consist of the lock manager option buttons, DLM (preferred) and GULM, and Lock Server text boxes in the GULM lock server properties group box. Configure Lock Manager parameters for either DLM or GULM as follows:

For DLM - Click DLM (preferred) or confirm that it is set.

For GULM - Click GULM or confirm that it is set. At the GULM lock server properties group box, enter the FQDN or the IP address of each lock server in a Lock Server text box.

Note

You must enter the FQDN or the IP address of one, three, or five GULM lock servers.

5. Re-enter the root password for each node in the Root Password column.

6. Click Submit. Clicking Submit causes the following actions:

a. Cluster software packages to be downloaded onto each cluster node.

b. Cluster software to be installed onto each cluster node.

c. Cluster configuration file to be created and propagated to each node in the cluster.

d. Starting the cluster.

A progress page shows the progress of those actions for each node in the cluster.

When the process of creating a new cluster is complete, a page is displayed providing a configuration interface for the newly created cluster.
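Two of the rules in this procedure are easy to check before the request is submitted: the cluster name cannot exceed 15 characters, and a GULM cluster needs one, three, or five lock servers. The following Python sketch is an illustration only: the function name validate_cluster_request and its parameters are hypothetical and are not part of Conga.

```python
def validate_cluster_request(cluster_name, lock_manager, gulm_lock_servers=()):
    """Return a list of rule violations for a planned cluster.

    lock_manager is "DLM" or "GULM". gulm_lock_servers holds the FQDN or
    IP address of each GULM lock server and is ignored for DLM clusters.
    """
    errors = []
    if len(cluster_name) > 15:
        errors.append("cluster name exceeds 15 characters")
    if lock_manager not in ("DLM", "GULM"):
        errors.append("lock manager must be DLM or GULM")
    elif lock_manager == "GULM" and len(gulm_lock_servers) not in (1, 3, 5):
        errors.append("GULM requires one, three, or five lock servers")
    return errors
```

An empty list means that the plan satisfies both rules; it does not mean that the node names and passwords are correct, which only luci can verify.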

4. Global Cluster Properties

When a cluster is created, or if you select a cluster to configure, a cluster-specific page is displayed. The page provides an interface for configuring cluster-wide properties and detailed properties. You can configure cluster-wide properties with the tabbed interface below the cluster name. The interface provides the following tabs: General, GULM (GULM clusters only), Fence (DLM clusters only), Multicast (DLM clusters only), and Quorum Partition (DLM clusters only). To configure the parameters in those tabs, follow the steps in this section. If you do not need to configure parameters in a tab, skip the step for that tab.

1. General tab - This tab displays cluster name and provides an interface for configuring the configuration version and advanced cluster properties. The parameters are summarized as follows:

The Cluster Name text box displays the cluster name; it does not accept a cluster name change. You cannot change the cluster name. The only way to change the name of a Red Hat cluster is to create a new cluster configuration with the new name.

The Configuration Version value is set to 1 by default and is automatically incremented each time you modify your cluster configuration. However, if you need to set it to another value, you can specify it at the Configuration Version text box.

You can enter advanced cluster properties by clicking Show advanced cluster properties. Clicking Show advanced cluster properties reveals a list of advanced properties. You can click any advanced property for online help about the property.

Enter the values required and click Apply for changes to take effect.

2. Fence tab (DLM clusters only) - This tab provides an interface for configuring these Fence Daemon Properties parameters: Post-Fail Delay and Post-Join Delay. The parameters are summarized as follows:

The Post-Fail Delay parameter is the number of seconds the fence daemon (fenced) waits before fencing a node (a member of the fence domain) after the node has failed. The Post-Fail Delay default value is 0. Its value may be varied to suit cluster and network performance.

The Post-Join Delay parameter is the number of seconds the fence daemon (fenced) waits before fencing a node after the node joins the fence domain. The Post-Join Delay default value is 3. A typical setting for Post-Join Delay is between 20 and 30 seconds, but can vary according to cluster and network performance.

Enter values required and click Apply for changes to take effect.

Note

For more information about Post-Join Delay and Post-Fail Delay, refer to the fenced(8) man page.

3. GULM tab (GULM clusters only) - This tab provides an interface for configuring GULM lock servers. The tab indicates each node in a cluster that is configured as a GULM lock server and provides the capability to change lock servers. Follow the rules provided at the tab for configuring GULM lock servers and click Apply for changes to take effect.

Important

The number of nodes that can be configured as GULM lock servers is limited to either one, three, or five.

4. Multicast tab (DLM clusters only) - This tab provides an interface for configuring these Multicast Configuration parameters: Do not use multicast and Use multicast. Multicast Configuration specifies whether a multicast address is used for cluster management communication among cluster nodes. Do not use multicast is the default setting. To use a multicast address for cluster management communication among cluster nodes, click Use multicast. When Use multicast is selected, the Multicast address and Multicast network interface text boxes are enabled. If Use multicast is selected, enter the multicast address into the Multicast address text box and the multicast network interface into the Multicast network interface text box. Click Apply for changes to take effect.

5. Quorum Partition tab (DLM clusters only) - This tab provides an interface for configuring these Quorum Partition Configuration parameters: Do not use a Quorum Partition, Use a Quorum Partition, Interval, Votes, TKO, Minimum Score, Device, Label, and Heuristics. The Do not use a Quorum Partition parameter is enabled by default. Table 3.1, "Quorum-Disk Parameters" describes the parameters. If you need to use a quorum disk, click Use a Quorum Partition, enter quorum disk parameters, click Apply, and restart the cluster for the changes to take effect.

Important

Quorum-disk parameters and heuristics depend on the site environment and the special requirements needed. To understand the use of quorum-disk parameters and heuristics, refer to the qdisk(5) man page. If you require assistance understanding and using quorum disk, contact an authorized Red Hat support representative.

Note

Clicking Apply on the Quorum Partition tab propagates changes to the cluster configuration file (/etc/cluster/cluster.conf) in each cluster node. However, for the quorum disk to operate, you must restart the cluster (refer to Section 1, "Starting, Stopping, and Deleting Clusters").

Parameter                      Description
-----------------------------  ----------------------------------------------------------------------
Do not use a Quorum Partition  Disables quorum partition. Disables quorum-disk parameters in the
                               Quorum Partition tab.
Use a Quorum Partition         Enables quorum partition. Enables quorum-disk parameters in the
                               Quorum Partition tab.
Interval                       The frequency of read/write cycles, in seconds.
Votes                          The number of votes the quorum daemon advertises to CMAN when it has
                               a high enough score.
TKO                            The number of cycles a node must miss to be declared dead.
Minimum Score                  The minimum score for a node to be considered "alive". If omitted or
                               set to 0, the default function, floor((n+1)/2), is used, where n is
                               the sum of the heuristics scores. The Minimum Score value must never
                               exceed the sum of the heuristic scores; otherwise, the quorum disk
                               cannot be available.
Device                         The storage device the quorum daemon uses. The device must be the
                               same on all nodes.
Label                          Specifies the quorum disk label created by the mkqdisk utility. If
                               this field contains an entry, the label overrides the Device field.
                               If this field is used, the quorum daemon reads /proc/partitions and
                               checks for qdisk signatures on every block device found, comparing
                               the label against the specified label. This is useful in
                               configurations where the quorum device name differs among nodes.
Heuristics                     Path to Program - The program used to determine if this heuristic is
                               alive. This can be anything that can be executed by /bin/sh -c. A
                               return value of 0 indicates success; anything else indicates
                               failure. This field is required.
                               Interval - The frequency (in seconds) at which the heuristic is
                               polled. The default interval for every heuristic is 2 seconds.
                               Score - The weight of this heuristic. Be careful when determining
                               scores for heuristics. The default score for each heuristic is 1.
Apply                          Propagates the changes to the cluster configuration file
                               (/etc/cluster/cluster.conf) in each cluster node.

Table 3.1. Quorum-Disk Parameters
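The Minimum Score rules in Table 3.1 reduce to a small calculation. The following Python sketch is an illustration only: the function names are hypothetical, and heuristic scores are assumed to be whole numbers. It applies the default function floor((n+1)/2) when the minimum score is omitted or 0, and it rejects a minimum score that exceeds the sum of the heuristic scores.

```python
def default_minimum_score(heuristic_scores):
    """Return floor((n+1)/2), where n is the sum of the heuristic scores."""
    n = sum(heuristic_scores)
    return (n + 1) // 2


def effective_minimum_score(heuristic_scores, minimum_score=0):
    """Return the minimum score that applies to this set of heuristics.

    A minimum_score of 0 stands for "omitted or set to 0" in Table 3.1.
    """
    total = sum(heuristic_scores)
    if minimum_score > total:
        raise ValueError(
            "Minimum Score exceeds the sum of the heuristic scores; "
            "the quorum disk could never become available"
        )
    if minimum_score == 0:
        return default_minimum_score(heuristic_scores)
    return minimum_score
```

With three heuristics that each have the default score of 1, n is 3 and the default minimum score is 2, so a node must pass at least two of the three heuristics to be considered alive.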

5. Configuring Fence Devices

Confgurng fence devces conssts of creatng, modfyng, and deetng fence

Chapter 3. Configuring Red Hat Cluster With Conga

38

devces. Creatng a fence devce conssts of seectng a fence devce type and

enterng parameters for that fence devce (for exampe, name, IP address, ogn,

and password). Modfyng a fence devce conssts of seectng an exstng fence

devce and changng parameters for that fence devce. Deetng a fence devce

conssts of seectng an exstng fence devce and deetng t.

Tip

If you are creating a new cluster, you can create fence devices when
you configure cluster nodes. Refer to Section 6, "Configuring Cluster
Members".

With Conga you can create shared and non-shared fence devices.

The following shared fence devices are available:

APC Power Switch
Brocade Fabric Switch
Bull PAP
Egenera SAN Controller
GNBD
IBM Blade Center
McData SAN Switch
QLogic SANbox2
SCSI Fencing
Virtual Machine Fencing
Vixel SAN Switch
WTI Power Switch

The following non-shared fence devices are available:

Dell DRAC
HP iLO
IBM RSA II
IPMI LAN
RPS10 Serial Switch

This section provides procedures for the following tasks:

Creating shared fence devices - Refer to Section 5.1, "Creating a Shared Fence
Device". The procedures apply only to creating shared fence devices. You can
create non-shared (and shared) fence devices while configuring nodes (refer to
Section 6, "Configuring Cluster Members").

Modifying or deleting fence devices - Refer to Section 5.2, "Modifying or
Deleting a Fence Device". The procedures apply to both shared and non-shared
fence devices.

The starting point of each procedure is at the cluster-specific page that you
navigate to from Choose a cluster to administer displayed on the cluster tab.
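As a quick reference, the split between shared and non-shared device types and the places where each can be created can be captured in a small lookup. This is only an illustrative sketch: the constant and function names are hypothetical, and the device names are copied from the lists above.

```python
# Device type names are taken from the two lists in this section.
SHARED_FENCE_DEVICES = frozenset({
    "APC Power Switch", "Brocade Fabric Switch", "Bull PAP",
    "Egenera SAN Controller", "GNBD", "IBM Blade Center",
    "McData SAN Switch", "QLogic SANbox2", "SCSI Fencing",
    "Virtual Machine Fencing", "Vixel SAN Switch", "WTI Power Switch",
})

NON_SHARED_FENCE_DEVICES = frozenset({
    "Dell DRAC", "HP iLO", "IBM RSA II", "IPMI LAN", "RPS10 Serial Switch",
})


def creation_paths(device_type):
    """Return the places in Conga where a device of this type can be created."""
    if device_type in SHARED_FENCE_DEVICES:
        return ["Shared Fence Devices page (Section 5.1)",
                "node configuration page (Section 6)"]
    if device_type in NON_SHARED_FENCE_DEVICES:
        return ["node configuration page (Section 6)"]
    raise ValueError(f"unknown fence device type: {device_type!r}")
```

For example, creation_paths("HP iLO") lists only the node configuration page, because non-shared devices are created while configuring nodes.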

5.1. Creating a Shared Fence Device

To create a shared fence device, follow these steps:

1. At the detailed menu for the cluster (below the clusters menu), click Shared
Fence Devices. Clicking Shared Fence Devices displays the fence devices for
the cluster and the menu items for fence device configuration: Add a Fence
Device and Configure a Fence Device.

Note

If this is an initial cluster configuration, no fence devices have been
created, and therefore none are displayed.

2. Click Add a Fence Device. Clicking Add a Fence Device causes the Add a
Sharable Fence Device page to be displayed (refer to Figure 3.1, "Fence
Device Configuration").

Figure 3.1. Fence Device Configuration

3. At the Add a Sharable Fence Device page, click the drop-down box under
Fencing Type and select the type of fence device to configure.

4. Specify the information in the Fencing Type dialog box according to the type of
fence device. Refer to Appendix B, Fence Device Parameters for more
information about fence device parameters.

5. Click Add this shared fence device.

6. Clicking Add this shared fence device causes a progress page to be displayed
temporarily. After the fence device has been added, the detailed cluster
properties menu is updated with the fence device under Configure a Fence
Device.
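Conga records the new device in the cluster configuration file. The following Python sketch suggests what such an entry might look like; it is not produced by Conga, and the element and attribute names (fencedevices, fencedevice, agent, ipaddr, login, passwd), the agent name, and all values are assumptions made for illustration. Refer to Appendix B for the actual parameters of each device type.

```python
import xml.etree.ElementTree as ET


def add_shared_fence_device(cluster, agent, name, **params):
    """Append a fence device entry to an in-memory cluster configuration.

    The element and attribute names are assumed, not taken from this chapter.
    """
    fencedevices = cluster.find("fencedevices")
    if fencedevices is None:
        fencedevices = ET.SubElement(cluster, "fencedevices")
    # Device names act as identifiers, so reject duplicates.
    for existing in fencedevices.findall("fencedevice"):
        if existing.get("name") == name:
            raise ValueError(f"fence device {name!r} already exists")
    attributes = {"agent": agent, "name": name, **params}
    return ET.SubElement(fencedevices, "fencedevice", attributes)


# Hypothetical cluster and device values.
cluster = ET.Element("cluster", {"name": "example", "config_version": "1"})
add_shared_fence_device(cluster, "fence_apc", "apc1",
                        ipaddr="192.0.2.10", login="apc", passwd="secret")
print(ET.tostring(cluster, encoding="unicode"))
```

Keeping shared devices in one list keyed by name is what lets several nodes refer to the same physical device.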

5.2. Modifying or Deleting a Fence Device

To modify or delete a fence device, follow these steps:

1. At the detailed menu for the cluster (below the clusters menu), click Shared
Fence Devices. Clicking Shared Fence Devices displays the fence devices for
the cluster and the menu items for fence device configuration: Add a Fence
Device and Configure a Fence Device.

2. Click Configure a Fence Device. Clicking Configure a Fence Device displays
a list of fence devices under Configure a Fence Device.

3. Click a fence device in the list. Clicking a fence device in the list displays
a Fence Device Form page for the selected fence device.

4. Either modify or delete the fence device as follows:

To modify the fence device, enter changes to the parameters displayed. Refer
to Appendix B, Fence Device Parameters for more information about fence
device parameters. Click Update this fence device and wait for the
configuration to be updated.

To delete the fence device, click Delete this fence device and wait for the
configuration to be updated.

Note

You can also create shared fence devices on the node configuration page.
However, you can only modify or delete a shared fence device via
Shared Fence Devices at the detailed menu for the cluster (below
the clusters menu).

6. Configuring Cluster Members

Configuring cluster members consists of initially configuring nodes in a newly
configured cluster, adding members, and deleting members. The following sections
provide procedures for initial configuration of nodes, adding nodes, and deleting
nodes:

Section 6.1, "Initially Configuring Members"

Section 6.2, "Adding a Member to a Running Cluster"

Section 6.3, "Deleting a Member from a Cluster"

6.1. Initially Configuring Members

Creating a cluster consists of selecting a set of nodes (or members) to be part of the
cluster. Once you have completed the initial step of creating a cluster and creating
fence devices, you need to configure cluster nodes. To initially configure cluster
nodes after creating a new cluster, follow the steps in this section. The starting
point of the procedure is at the cluster-specific page that you navigate to from
Choose a cluster to administer displayed on the cluster tab.

1. At the detailed menu for the cluster (below the clusters menu), click Nodes.
Clicking Nodes displays an Add a Node element and a Configure
element with a list of the nodes already configured in the cluster.

2. Click a link for a node either in the list in the center of the page or in the list in
the detailed menu under the clusters menu. Clicking a link for a node displays a
page showing how that node is configured.

3. At the bottom of the page, under Main Fencing Method, click Add a fence
device to this level.

4. Select a fence device and provide parameters for the fence device (for example,
port number).

Note

You can choose an existing fence device or create a new fence
device.

5. Click Update main fence properties and wait for the change to take effect.
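The effect of steps 3 through 5 can be pictured as attaching a reference to a fence device, plus node-specific parameters such as a port number, to the node's main fencing method. The Python sketch below illustrates that idea on an in-memory XML tree. The element and attribute names (clusternode, fence, method, device, port) and the sample values are assumptions for illustration, not definitions taken from this chapter.

```python
import xml.etree.ElementTree as ET


def add_device_to_main_method(node, device_name, **params):
    """Attach a fence device reference to the node's first fencing method.

    Element and attribute names are assumed, not taken from this chapter.
    """
    fence = node.find("fence")
    if fence is None:
        fence = ET.SubElement(node, "fence")
    method = fence.find("method")
    if method is None:
        # Hypothetical name for the main fencing method.
        method = ET.SubElement(fence, "method", {"name": "1"})
    return ET.SubElement(method, "device", {"name": device_name, **params})


# Hypothetical node name, device name, and port.
node = ET.Element("clusternode", {"name": "node1.example.com"})
add_device_to_main_method(node, "apc1", port="1")
print(ET.tostring(node, encoding="unicode"))
```

The device is referenced by name, so a shared device is defined once and each node supplies only its own parameters.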

6.2. Adding a Member to a Running Cluster


To add a member to a running cluster, follow the steps in this section. The starting
point of the procedure is at the cluster-specific page that you navigate to from
Choose a cluster to administer displayed on the cluster tab.

1. At the detailed menu for the cluster (below the clusters menu), click Nodes.
Clicking Nodes displays an Add a Node element and a Configure
element with a list of the nodes already configured in the cluster. (In addition, a
list of the cluster nodes is displayed in the center of the page.)

2. Click Add a Node. Clicking Add a Node displays the Add a node
to cluster name page.

3. At that page, enter the node name in the Node Hostname text box; enter the
root password in the Root Password text box. Check the Enable Shared
Storage Support checkbox if clustered storage is required. If you want to add
more nodes, click Add another entry and enter the node name and password for
each additional node.

4. Click Submit. Clicking Submit causes the following actions:

a. Cluster software packages are downloaded onto the added node.

b. Cluster software is installed onto the added node (or it is verified that the
appropriate software packages are installed).

c. The cluster configuration file is updated and propagated to each node in the
cluster, including the added node.

d. The added node joins the cluster.

A progress page shows the progress of those actions for each added node.

5. When the process of adding a node is complete, a page is displayed providing a
configuration interface for the cluster.

6. At the detailed menu for the cluster (below the clusters menu), click Nodes.
Clicking Nodes displays the following:

A list of cluster nodes in the center of the page

The Add a Node element and the Configure element with a list of the nodes
configured in the cluster at the detailed menu for the cluster (below the
clusters menu)


7. Click the link for an added node either in the list in the center of the page or in
the list in the detailed menu under the clusters menu. Clicking the link for the
added node displays a page showing how that node is
configured.

8. At the bottom of the page, under Main Fencing Method, click Add a fence
device to this level.

9. Select a fence device and provide parameters for the fence device (for example,
port number).

Note

You can choose an existing fence device or create a new fence
device.

10. Click Update main fence properties and wait for the change to take effect.
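Because an added node joins the cluster in step 4 but receives its fence device only in steps 7 through 10, it can be useful to confirm afterwards that no node was left without fencing. The Python sketch below checks a configuration document for that condition. It is not a Red Hat tool: the function name is hypothetical, and the XML layout (clusternodes, clusternode, fence, method, device) and node names are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET


def nodes_without_fencing(cluster_conf_xml):
    """Return the names of cluster nodes that have no fence device configured.

    The XML layout is assumed, not taken from this chapter.
    """
    root = ET.fromstring(cluster_conf_xml)
    missing = []
    for node in root.iter("clusternode"):
        if node.find("fence/method/device") is None:
            missing.append(node.get("name"))
    return missing


# Hypothetical configuration: node2 was added but not yet given a fence device.
SAMPLE = """
<cluster name="example" config_version="2">
  <clusternodes>
    <clusternode name="node1.example.com">
      <fence><method name="1"><device name="apc1" port="1"/></method></fence>
    </clusternode>
    <clusternode name="node2.example.com">
      <fence/>
    </clusternode>
  </clusternodes>
</cluster>
"""

unfenced = nodes_without_fencing(SAMPLE)
```

In this sample, only node2.example.com is reported, since its fence element contains no method or device.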

6.3. Deleting a Member from a Cluster

To delete a member from an existing cluster that is currently in operation, follow the
steps in this section. The starting point of the procedure is at the Choose a cluster
to administer page (displayed on the cluster tab).