Wide-Baseline Image Matching Using Line Signatures

Lu Wang, Ulrich Neumann and Suya You
Computer Science Department, University of Southern California

{luwang, uneumann, suyay}@graphics.usc.edu

Abstract

We present a wide-baseline image matching approach based on line segments. Line segments are clustered into local groups according to spatial proximity. Each group is treated as a feature called a Line Signature. Similar to local features, line signatures are robust to occlusion, image clutter, and viewpoint changes. The descriptor and similarity measure of line signatures are presented. Under our framework, feature matching is robust not only against affine distortion but also against a considerable range of 3D viewpoint changes for non-planar surfaces. When compared to matching approaches based on existing local features, our method shows improved results with low-texture scenes. Moreover, extensive experiments validate that our method has advantages in matching structured non-planar scenes under large viewpoint changes and illumination variations.

1. Introduction

Most wide-baseline image matching methods are based on local features [12]. They usually fail with low-texture scenes that are common in man-made environments. Fortunately, line segments are often abundant in these situations, and complex object boundaries can usually be approximated with sets of line segments. We present an approach that can successfully match low-texture wide-baseline images based on line segments. It works in a completely uncalibrated setting with unknown epipolar geometry.

Our approach clusters detected line segments into local groups according to spatial proximity. Each group is treated as a feature called a Line Signature. Similar to local features, line signatures are robust to occlusion and clutter. They are also robust to events between the segments, which is an advantage over features based on connected regions [12]. Moreover, their description depends mainly on the geometric configuration of segments, so they are invariant to illumination. However, unlike existing affine invariant features, we cannot assume affine distortion inside each feature area, since neighboring line segments are often not coplanar. Therefore, it may be more appropriate to regard line signatures as semi-local features.

There are two challenges in constructing robust features based on line segment clustering. The first is to ensure feature repeatability under unstable line segment detection. In our approach, this is handled by multi-scale polygonization and grouping in line segment extraction, a clustering criterion that considers relative saliency between segments, and a matching strategy that allows unmatched segments. The second challenge is to design a distinctive feature descriptor that is robust to large viewpoint changes, taking into account that the segments may not be coplanar and that their endpoints are inaccurate. Our approach describes line signatures based on pairwise relationships between line segments, whose similarity is measured with a two-case algorithm that is robust not only against large affine transformations but also against a considerable range of 3D viewpoint changes for non-planar surfaces.

Extensive experiments validate that line signatures are better than existing local features in matching low-texture images and non-planar scenes with salient structures under large viewpoint changes. Moreover, since line segments and point features provide complementary information, we can combine them to deal with a broader range of images.

2. Related Work

Many line matching approaches match individual segments based on their position, orientation and length, and take a nearest-line strategy [14]. They are better suited to image tracking or small-baseline stereo. Some methods start with matching individual segments and resolve ambiguities by enforcing a weak constraint that adjacent line matches have similar disparities [6], or by checking the consistency of segment relationships, such as "left of", "right of", connectedness, etc. [7, 17]. These methods require known epipolar geometry and still cannot handle large image deformation. Many of them are also computationally expensive because they solve global graph matching problems [7]. The approach in [15] is limited to scenes with dominant homographies. Some methods rely on the intensity [16] or color [2] distribution of pixels on both sides of line segments to generate initial line segment matches. They are not robust to large illumination changes. Moreover, [16] requires known epipolar geometry.

Perceptual grouping of segments is widely used in object recognition and detection [9, 10, 5]. It is based on perceptual properties such as connectedness, convexity, and parallelism, so that the grouped segments are more likely to lie on the same object. Although this strategy is useful for reducing the search space when detecting the same objects against totally different backgrounds, it is not suited to image matching: the curve fragments detected on the boundary of an object are quite often indistinctive, yet together with several fragments on neighboring objects they can form a distinctive feature whose spatial configuration is stable under a considerable range of viewpoint changes (Fig.2 shows an example). Therefore, in our approach, the grouping is based only on the proximity and relative saliency of segments, with feature repeatability and distinctiveness as the only concerns. A line signature usually contains segments on different objects. This property is similar to many region features, where the regions refer to any subset of the image, unlike those in image segmentation [12]. With indistinctive features, the approach in [5] resorts to the bag-of-features paradigm, which is also not suited to image matching. Moreover, the description and similarity measure of segment groups in most object recognition systems are not designed to handle large image deformation.

The paper is organized as follows: The detection of line signatures is presented in Section 3. Section 4 describes their similarity measure. Section 5 provides a codebook approach to improve matching speed. An efficient algorithm for removing outliers in image matching is presented in Section 6. Some results and the conclusion are given in Section 7.

3. The Detection of Line Signatures

3.1. Line Segment Extraction

Edge pixels are detected with the approach in [18], which is less sensitive to the selection of thresholds than the Canny detector. Edge pixels are linked into connected curves that are then divided into straight line segments with a method similar to [1]. Since no single scale (threshold on line fitting errors) can divide all the curves in two images in consistent ways, a multi-scale scheme is applied in which each curve is polygonized with a set of scales and all possible segments are kept. Because a segment in one image may be broken into several fragments in another image, two segments are merged if their gap is smaller than their length and their line fitting error is smaller than a threshold inversely related to the gap. Similarly, multiple thresholds are applied and all possible grouping results are kept. Each segment is given an orientation parallel to the line, chosen such that the gradient of most of its edge pixels points from its left side to its right side. Each segment also has a saliency value and a gradient magnitude, which are respectively the sum and the average of the gradient magnitudes of its edgels.

3.2. Line Segment Clustering

The segment clustering is based on the spatial proximity and relative saliency between segments. For a segment i of saliency value s, we search the neighborhood of one of its endpoints for the top k segments that are closest to this endpoint (measured to the closest point on each segment, not the perpendicular distance) and whose saliency values satisfy $s' \geq r \cdot s$, where r is a ratio. The k segments and i form a line signature. Segment i and the endpoint are called its central segment and its center respectively. Similarly, another line signature can be constructed centered at the other endpoint of i. For example, in Fig.1(a) the line signature centered at the endpoint p1 of segment i consists of segments {i, c, a, b} when k = 3. Note that although segment e is closer to p1 than a and b, it is not selected because it is not salient compared to i. The line signature centered at p2 includes {i, d, a, c}.

Figure 1. (a) Line segment clustering. (b) Overlapping segments. (c) Parallel segments.

Spatial proximity improves repeatability since the geometric configuration of nearby segments usually undergoes only moderate variations over a large range of viewpoint changes. Our clustering approach is scale invariant. Compared to the method in [13], where a rectangular window with a fixed size relative to the length of the central segment is used to group contours, it is more robust to large image distortion and can guarantee feature distinctiveness.

Relative saliency is neglected in many perceptual grouping systems [9, 10, 5]. Nevertheless, it is important in handling the instability of segment extraction. Weak segments in one image often disappear in another image (e.g. e in Fig.1(a)). However, if the central segment of a line signature in one image exists in another image, its other segments are less likely to disappear since they have comparable or higher saliency ($s' \geq r \cdot s$). Based on this, line signatures centered at corresponding segments in two images are more likely to be similar. In addition, this strategy gives us features of different scales: with a strong central segment the other segments in a line signature are also strong, while those associated with weak central segments are usually also weak. In our experiments, the ratio r = 0.5. However, the results are not sensitive to it within a reasonable range.

The number k is called the rank of a line signature. Increasing k can improve its distinctiveness, but will decrease its repeatability and increase the computation in matching. In experiments, we found k = 5 to be a balanced choice.

A practical issue during line signature construction is illustrated in Fig.1(b). Due to multi-scale polygonization and grouping, segments ab, bc and de can be regarded as three separate segments, or as one segment ae. Therefore, for these two possibilities, we construct two different rank-3 line signatures centered at the endpoint f of segment fg: {fg, ab, bc, de} and {fg, ae, jk, hl}.

Another practical issue is depicted in Fig.1(c), where several parallel segments are very close, a common case in man-made scenes. This configuration is usually unstable, and line signatures composed mostly of such segments are indistinctive. Therefore, from a set of nearby parallel segments, our approach selects only the most salient one into a line signature.

Fig.2 shows two rank-5 line signatures whose central segments (in blue) are corresponding segments in two images. Their centers are indicated with yellow dots and their other segments are highlighted in red. The other detected line segments are in green. Although most segments in the two line signatures can be matched, two of them (ab in (a) and cd in (b)) cannot. To be more robust to unstable segment extraction and clustering, the similarity measure between line signatures should allow unmatched segments.
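The clustering rule of Section 3.2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Segment` class, the function names and the `endpoint` argument are hypothetical, and the handling of overlapping multi-scale segments (Fig.1(b)) and of nearby parallel segments (Fig.1(c)) is deliberately left out.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    # Hypothetical container: endpoints (x1, y1)-(x2, y2) and a saliency value.
    x1: float
    y1: float
    x2: float
    y2: float
    saliency: float


def point_segment_distance(px, py, seg):
    """Distance from (px, py) to the closest point ON the segment,
    not the perpendicular distance to its supporting line."""
    dx, dy = seg.x2 - seg.x1, seg.y2 - seg.y1
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - seg.x1, py - seg.y1)
    t = ((px - seg.x1) * dx + (py - seg.y1) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (seg.x1 + t * dx), py - (seg.y1 + t * dy))


def line_signature(center_idx, endpoint, segments, k=5, r=0.5):
    """Indices of the rank-k line signature centered at one endpoint
    (endpoint = 0 or 1) of segments[center_idx]; the central segment comes first."""
    central = segments[center_idx]
    if endpoint == 0:
        px, py = central.x1, central.y1
    else:
        px, py = central.x2, central.y2
    candidates = [
        (point_segment_distance(px, py, s), j)
        for j, s in enumerate(segments)
        # relative saliency test: s' >= r * s
        if j != center_idx and s.saliency >= r * central.saliency
    ]
    candidates.sort()
    return [center_idx] + [j for _, j in candidates[:k]]
```

With k = 3, a weak segment lying close to the center is skipped in favor of more distant but salient ones, which mirrors the role of segment e in Fig.1(a).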

Figure 2. Two corresponding rank-5 line signatures in two real images. The blue segments are the central segments and the red ones are their other segments.

4. The Similarity Measure of Line Signatures

The similarity between two line signatures is measured based on the geometric configuration of their segments. Checking whether the two segment groups satisfy an epipolar geometry is impractical, since the endpoints of the segments are often inaccurate while infinite lines provide no epipolar constraint. Moreover, the segment matches between two line signatures are few and some segments may share endpoints, so the number of matched endpoints is usually insufficient to determine an epipolar geometry. It is also infeasible to compute the similarity based on whether the segments satisfy an affine matrix or a homography, because the segments in a line signature are often not coplanar. Fig.5(a)-(b) provide a good example, where the neighboring lines forming the ceiling corner are on different planes but their configuration gives important information for matching the images.

Our approach measures the similarity based on the pairwise relationships between segments. Similar strategies are also used in [19] and [8], where pairwise configurations between edgels or line segments are used to describe the shape of symbols. Unlike [5], in which only the relationships of segments with the central segment in a feature are described, our approach is more distinctive because it describes the relationship between every two segments.

4.1. The Description of a Pair of Line Segments

Many methods [7, 17, 2] describe the relationship of two segments with terms such as "left of", "right of", "connected", etc. It is described with an angle and a length ratio between the segments in [8], and with a vector connecting their middle points in [5]. None of these descriptions is very distinctive.

We describe the configuration of two line segments by distinguishing two cases. In the first case, they are coplanar and in a local area, so that their transformation between images is affine. As shown in Fig.3(a), the lines of two segments $\overrightarrow{p_1p_2}$ and $\overrightarrow{q_1q_2}$ (the arrows represent their orientations (Section 3.1)) intersect at c. The signed length ratios $r_1 = (\overrightarrow{p_1c} \cdot \overrightarrow{p_1p_2})/|p_1p_2|^2$ and $r_2 = (\overrightarrow{q_1c} \cdot \overrightarrow{q_1q_2})/|q_1q_2|^2$ are affine invariant, so they are good choices to describe the two-segment configuration. Moreover, they neatly encode the information of connectedness ($r_1, r_2 \in \{0, 1\}$) and intersection ($r_1, r_2 \in (0, 1)$), which are important structural constraints. Since the invariance of $r_1$ and $r_2$ is equivalent to an affinity, we can judge whether the transformation is affine with a threshold on the changes of $r_1$ and $r_2$.

If the two segments are not coplanar or the perspective effect is significant, any configuration is possible if the underlying transformation can be arbitrarily large. However, since the two segments are proximate, in most cases the variations of the relative positions between their endpoints are moderate over a large range of viewpoint changes. The limit on the extent of transformation provides important constraints in measuring similarity, which is also the theory behind the SIFT descriptor [11] and is used in the psychological model of [4]. In the SIFT descriptor, the limit on the changes of pixel positions relative to its center is set with the bins of its histogram. It was reported in [11] that although SIFT features are only scale invariant, they are more robust against changes in 3D viewpoint for non-planar surfaces than many affine invariant features.

For two line segments, there are 6 pairs of relationships between 4 endpoints. We select one of them, the vector $\overrightarrow{p_1p_2}$ in Fig.3(a), as the reference to achieve scale and rotation invariance. Each of the other 5 endpoint pairs is described with the angle and the length ratio (relative to $\overrightarrow{p_1p_2}$) of the vector connecting its two points. Specifically, the attributes are $l_1 = |q_1q_2|/|p_1p_2|$, $l_2 = |q_1p_1|/|p_1p_2|$, $l_3 = |q_1p_2|/|p_1p_2|$, $l_4 = |q_2p_1|/|p_1p_2|$, $l_5 = |q_2p_2|/|p_1p_2|$, and $\theta_{1-5}$ in Fig.3(a). Note that although the absolute locations of points $q_1$ and $q_2$ can be described with only 4 coordinates, it is necessary to use 10 attributes to describe the locations of each point relative to all the other points, since the judgment on shape similarity may be different with different reference points. An example is shown in Fig.3(b), where the position change from $q_1$ to $q'_1$ relative to $p_2$ is not large ($\Delta\theta_5$ and $\Delta l_3$ are small) whereas the change relative to $p_1$ is very large ($\Delta\theta_2 > \pi/2$).

In addition, we use an extra attribute $g = g_2/g_1$ to describe the appearance information, where $g_1$ and $g_2$ are the average gradient magnitudes of the two segments. It is robust to illumination changes and helps to further improve the distinctiveness. Therefore, the feature vector describing a two-segment configuration contains 13 attributes in total: $v = \{r_{1-2}, l_{1-5}, \theta_{1-5}, g\}$. Note that there is no conflict with the degrees of freedom. The 12 attributes describing the geometric configuration are computed from the 8 coordinates of the 4 endpoints. They are dependent, and are essentially intermediate results stored in the feature vector to make the subsequent similarity computation more efficient. A similar strategy of increasing the dimensionality of the original feature space is also used in the kernel-based SVM approach [3].
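As a rough illustration of the 13-attribute description of Section 4.1, the sketch below computes the signed ratios, the five length ratios, five angles and the gradient ratio for one segment pair. Several details are assumptions rather than facts from the paper: the function names and the dictionary layout are hypothetical, angles are measured counter-clockwise from $\overrightarrow{p_1p_2}$ in $[0, 2\pi)$, and the angles are listed in the same endpoint-pair order as the lengths, which may differ from the indexing of $\theta_{1-5}$ in Fig.3(a). Parallel supporting lines, for which the intersection c does not exist, are reported as `None`.

```python
import math


def _ccw_angle(ref, vec):
    """Counter-clockwise angle from ref to vec, in [0, 2*pi) (assumed convention)."""
    cross = ref[0] * vec[1] - ref[1] * vec[0]
    dot = ref[0] * vec[0] + ref[1] * vec[1]
    return math.atan2(cross, dot) % (2.0 * math.pi)


def describe_pair(p1, p2, q1, q2, g1, g2):
    """Feature vector of a segment pair: r (2), l (5), theta (5), g (1)."""
    ref = (p2[0] - p1[0], p2[1] - p1[1])      # reference vector p1 -> p2
    ref_len = math.hypot(ref[0], ref[1])
    qdir = (q2[0] - q1[0], q2[1] - q1[1])     # direction q1 -> q2

    # Intersection c of the two supporting lines: p1 + t*ref = q1 + u*qdir.
    # Then r1 = (p1c . p1p2)/|p1p2|^2 = t and r2 = (q1c . q1q2)/|q1q2|^2 = u.
    denom = ref[0] * qdir[1] - ref[1] * qdir[0]
    if abs(denom) < 1e-12:
        r = (None, None)                      # parallel lines: no intersection
    else:
        w = (q1[0] - p1[0], q1[1] - p1[1])
        r = ((w[0] * qdir[1] - w[1] * qdir[0]) / denom,
             (w[0] * ref[1] - w[1] * ref[0]) / denom)

    # The five remaining endpoint pairs, in the order of l1..l5.
    pairs = [(q1, q2), (p1, q1), (p2, q1), (p1, q2), (p2, q2)]
    vecs = [(b[0] - a[0], b[1] - a[1]) for a, b in pairs]
    lengths = [math.hypot(vx, vy) / ref_len for vx, vy in vecs]
    angles = [_ccw_angle(ref, v) for v in vecs]
    return {"r": r, "l": lengths, "theta": angles, "g": g2 / g1}
```

For two segments that cross at their midpoints, both signed ratios come out as 0.5, inside the interval (0, 1) that encodes intersection.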

Figure 3. (a) Description of a segment pair. (b) Different reference points generate different judgments. (c) Impractical affine transformation. (d) Impractical deformation. (e) Quantization of the feature space of the general similarity. (f) The general similarity is $-\infty$ but the affine similarity is not.

4.2. The Similarity Measure of Segment Pairs

Assume the feature vectors of two segment pairs are $v = \{r_{1-2}, l_{1-5}, \theta_{1-5}, g\}$ and $v' = \{r'_{1-2}, l'_{1-5}, \theta'_{1-5}, g'\}$ respectively. As mentioned before, if $|r_1 - r'_1|$ and $|r_2 - r'_2|$ are smaller than a threshold $T_r$, the underlying transformation can be regarded as affine. In this case, we call the similarity of the two segment pairs the affine similarity; otherwise, it is called the general similarity.

Affine Similarity: To be completely affine invariant, the affine similarity should be based only on $r_i$ and $r'_i$. However, from experiments we found that matching results can be improved by limiting the range of the changes of the length ratio $l_1$ and the angle $\theta_1$, which increases feature distinctiveness. In our approach, the affine similarity $S_a$ is computed with:

\[
S_a = \begin{cases} d_{r_1} + d_{r_2} + d_{\theta_1} + d_{l_1} + d_g, & \text{if } \Gamma = \text{true}; \\ -\infty, & \text{else,} \end{cases} \tag{1}
\]

where

\[
\begin{aligned}
d_{r_i} &= 1 - \frac{|r_i - r'_i|}{T_r}, \quad i \in \{1, 2\}; \\
d_{\theta_1} &= 1 - \frac{|\theta_1 - \theta'_1|}{T_\theta}; \\
d_{l_1} &= 1 - \frac{\max(l_1, l'_1)/\min(l_1, l'_1) - 1}{T_l}; \\
d_g &= 1 - \frac{\max(g, g')/\min(g, g') - 1}{T_g}; \\
\Gamma &\equiv \{d_{r_i}, d_{\theta_1}, d_{l_1}, d_g\} \geq 0 \;\&\; (\pi - \theta_1)(\pi - \theta'_1) \geq 0.
\end{aligned} \tag{2}
\]

$T_r$, $T_\theta$, $T_l$ and $T_g$ are thresholds. If the change of an attribute is larger than its threshold ($\{d_{r_i}, d_{\theta_1}, d_{l_1}, d_g\} < 0$), the deformation between the segment pairs is regarded as impractical and their similarity is $-\infty$. Note that these thresholds play a role similar to the bin dimensions in the histograms of local features, in which the location change of a pixel between two images is regarded as impractical if it falls into different bins. These thresholds are also used to normalize the contributions of different attributes so that $d_{r_i}, d_{\theta_1}, d_{l_1}, d_g \in [0, 1]$. In addition, they greatly reduce the computation: if the change of any attribute is larger than its threshold, the similarity is $-\infty$ without the need for the rest of the computation, which leads to the codebook approach discussed in Section 5. Through extensive experiments, we found $T_r = 0.3$, $T_\theta = \pi/2$, $T_l = T_g = 3$ to be good choices. The approach is not sensitive to them within a reasonable range.

The condition $(\pi - \theta_1)(\pi - \theta'_1) \geq 0$ ($\theta_1, \theta'_1 \in [0, 2\pi]$) is used to avoid the situation in Fig.3(c), where the deformation from $\overrightarrow{q_1q_2}$ to $\overrightarrow{q'_1q'_2}$ is affine but rarely happens in practice.

Note that an alternative way to measure affine similarity is to fit an affinity to the 4 endpoints of the segments and estimate the fitting error. However, computing an affine matrix for every combination of two segment pairs between two images is inefficient compared to estimating $r_i$ just once for each segment pair in each image.

General Similarity: It is measured based on the relative positions between the 4 endpoints:

\[
S_g = \begin{cases} \sum_{i=1}^{5} d_{l_i} + \sum_{i=1}^{5} d_{\theta_i} + d_g, & \text{if } \{d_{l_i}, d_{\theta_i}, d_g\} \geq 0 \;\&\; !C; \\ -\infty, & \text{else,} \end{cases} \tag{3}
\]

where $d_{l_i}$, $d_{\theta_i}$ and $d_g$ are computed in the same way as in Eq.2. Since we are handling line segments rather than disconnected points, the situation C depicted in Fig.3(d), where $q_2$ jumps across segment $p_1p_2$ to $q'_2$ (in these cases segments $p_1p_2$ and $q_2q'_2$ intersect), is impractical.

Overall Similarity: Combining the above two cases, the overall similarity S of two segment pairs is computed with:

\[
S = \begin{cases} S_a, & \text{if } |r_i - r'_i| \leq T_r; \\ \frac{1}{4} S_g, & \text{else.} \end{cases} \tag{4}
\]

The coefficient 1/4 gives $S_g$ a lower weight, so that its maximal contribution to S is 2.75 (11/4), which is smaller than the maximum of $S_a$, 5. This reflects that affinity is a stronger constraint, so segment pairs satisfying an affinity are more likely to be matched.
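The two-case measure of Eqs.1-4 can be sketched as follows. This is an illustrative reading of the formulas, not the authors' code: feature vectors are assumed to be dictionaries with keys `r`, `l`, `theta` and `g` (a hypothetical layout), the crossing condition C of Fig.3(d) is passed in as a precomputed boolean rather than derived geometrically, the angle difference is taken literally as $|\theta - \theta'|$ without wrap-around, and the guard against zero-length ratios is an addition for numerical safety.

```python
import math

NEG_INF = float("-inf")
# Thresholds reported in the text: T_r = 0.3, T_theta = pi/2, T_l = T_g = 3.
T_R, T_THETA, T_L, T_G = 0.3, math.pi / 2, 3.0, 3.0


def _d_abs(a, b, t):
    """1 - |a - b| / t, used for the signed ratios and the angles (Eq.2)."""
    return 1.0 - abs(a - b) / t


def _d_ratio(a, b, t):
    """1 - (max/min - 1) / t, used for length and gradient ratios (Eq.2)."""
    lo, hi = min(a, b), max(a, b)
    if hi == 0.0:
        return 1.0          # both zero: no change (added guard)
    if lo == 0.0:
        return NEG_INF      # unbounded ratio: impractical (added guard)
    return 1.0 - (hi / lo - 1.0) / t


def affine_similarity(v, w):
    """Eq.1: sum of five terms, or -inf if any term is negative or the
    condition (pi - theta1)(pi - theta1') >= 0 fails."""
    d = [
        _d_abs(v["r"][0], w["r"][0], T_R),
        _d_abs(v["r"][1], w["r"][1], T_R),
        _d_abs(v["theta"][0], w["theta"][0], T_THETA),
        _d_ratio(v["l"][0], w["l"][0], T_L),
        _d_ratio(v["g"], w["g"], T_G),
    ]
    same_side = (math.pi - v["theta"][0]) * (math.pi - w["theta"][0]) >= 0
    return sum(d) if min(d) >= 0 and same_side else NEG_INF


def general_similarity(v, w, crosses=False):
    """Eq.3: eleven terms; `crosses` stands in for condition C."""
    d = [_d_ratio(a, b, T_L) for a, b in zip(v["l"], w["l"])]
    d += [_d_abs(a, b, T_THETA) for a, b in zip(v["theta"], w["theta"])]
    d.append(_d_ratio(v["g"], w["g"], T_G))
    return sum(d) if min(d) >= 0 and not crosses else NEG_INF


def pair_similarity(v, w, crosses=False):
    """Eq.4: affine case if both |r_i - r_i'| <= T_r, else 1/4 of Eq.3."""
    affine = all(abs(a - b) <= T_R for a, b in zip(v["r"], w["r"]))
    if affine:
        return affine_similarity(v, w)
    return 0.25 * general_similarity(v, w, crosses)
```

Two identical feature vectors reach the affine maximum of 5, while a pair that only passes the general test is capped at 11/4 = 2.75, matching the weighting argument in the text.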

4.3. The Similarity of Line Signatures

Given two line signatures, their similarity is the sum of the similarities between their corresponding segment pairs. However, the mapping between their segments is unknown, except for the central segments. The approach in [5] sorts the segments in each feature according to the coordinates of their middle points, and the segment mapping is determined directly by the ordering. However, this ordering is not robust under large image deformation and inaccurate endpoint detection. In addition, as mentioned before, some segments in a line signature may not have counterparts in the corresponding line signature due to unstable segment detection and clustering.

Instead of ordering segments, our approach finds the optimal segment mapping that maximizes the similarity measure between the two line signatures. Denote a one-to-one mapping between segments as $M = \{(l_1, l'_1), \ldots, (l_k, l'_k)\}$, where $\{l_1, \ldots, l_k\}$ and $\{l'_1, \ldots, l'_k\}$ are subsets of the segments in the first and the second line signatures. Note that the central segments must be a pair in M. Assume the similarity of two segment pairs $(l_i, l_j)$ and $(l'_i, l'_j)$ is $S_{ij}$, where $(l_i, l'_i) \in M$ and $(l_j, l'_j) \in M$. The similarity of the two line signatures is computed with:

\[
S_{LS} = \max_{M} \sum_{i<j} S_{ij}. \tag{5}
\]

In the following section, we will show that the combinatorial optimization in Eq.5 can be largely avoided with a codebook-based approach.
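To make the optimization in Eq.5 concrete, here is a brute-force sketch that enumerates every one-to-one partial mapping containing the pair of central segments. It is only an illustration: the function name, the convention that index 0 denotes the central segment, and the `pair_sim` callback (which is assumed to return the overall similarity S of Eq.4, possibly $-\infty$) are all hypothetical. No pruning is applied here, so this shows the cost that the codebook of Section 5 is designed to avoid.

```python
from itertools import combinations, permutations


def signature_similarity(n_a, n_b, pair_sim):
    """Exhaustive search for Eq.5.

    n_a, n_b : number of non-central segments in the two signatures;
               index 0 is the central segment in each signature.
    pair_sim : callable (a_i, a_j, b_i, b_j) -> similarity between the
               segment pair (a_i, a_j) of the first signature and the
               pair (b_i, b_j) of the second one.
    Returns (best similarity, best mapping as a list of (a, b) pairs).
    """
    best, best_mapping = 0.0, [(0, 0)]   # central segments alone: no pairs, sum 0
    others_a = range(1, n_a + 1)
    others_b = range(1, n_b + 1)
    for size in range(1, min(n_a, n_b) + 1):
        for subset_a in combinations(others_a, size):
            for subset_b in permutations(others_b, size):
                mapping = [(0, 0)] + list(zip(subset_a, subset_b))
                # Sum S_ij over all unordered pairs of matches in the mapping.
                total = sum(
                    pair_sim(a1, a2, b1, b2)
                    for (a1, b1), (a2, b2) in combinations(mapping, 2)
                )
                if total > best:
                    best, best_mapping = total, mapping
    return best, best_mapping
```

For rank-5 signatures this enumerates 1545 candidate mappings per signature pair, which is cheap in isolation but adds up quickly when every signature in one image is compared against every signature in the other.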

5. Fast Matching with the Codebook

For most segment pairs, their similarities are $-\infty$ because their differences on some attributes are larger than the thresholds. Therefore, by quantizing the feature space into many types (subspaces) such that different types differ on at least one attribute by more than its threshold, segment pairs with similarity $-\infty$ can be found directly from their types without explicit computation. This is similar to the codebook approaches used in [5] and many other object detection systems, in which the feature space quantization is based on clustering the features detected in training images, whereas it is uniform in our approach.

Since the similarity measure has two cases, the quantization is conducted in two feature spaces: the space spanned by $\{r_i, \theta_1, l_1\}$ for the affine similarity and the space spanned by $\{\theta_i, l_i\}$ for the general similarity. From experiments, we found that for a segment pair whose segments are not nearly intersecting, if its general similarity with another segment pair is $-\infty$, their affine similarity is almost always $-\infty$. If its two segments intersect or are close to intersecting, this may not hold, as shown in Fig.3(f), where the general similarity is $-\infty$ because $q_1$ jumps across $p_1p_2$ to $q'_1$ but the affine similarity is not, which usually happens due to inaccurate endpoint detection. Therefore, the quantization of the feature space spanned by $\{r_i, \theta_1, l_1\}$ is conducted only in its subspace where $r_i \in [-0.3, 1.3]$. The segment pairs within this subspace have types in two feature spaces, while the others have only one type in the space of $\{\theta_i, l_i\}$, which is enough to judge whether the similarities are $-\infty$.

The space of $\{r_i, \theta_1, l_1\}$ is quantized as follows: The range $[-0.3, 1.3]$ of $r_1$ and $r_2$, and the range $[0, 2\pi]$ of $\theta_1$, are uniformly divided into 5 and 12 bins respectively. The range of $l_1$ is not divided. Therefore, there are 300 types (5 x 5 x 12).

The quantization of the space spanned by $\{\theta_i, l_i\}$ is not straightforward, since it is large and high dimensional. Based on experiments, we found the following approach to be effective: The image space is divided into 6 regions according to $\overrightarrow{p_1p_2}$, as shown in Fig.3(e). Thus there are 36 different distributions of the two endpoints of $\overrightarrow{q_1q_2}$ in these regions. When they are on different sides of $p_1p_2$, the intersection of the two lines can be above, on or below the segment $\overrightarrow{p_1p_2}$. The reason to distinguish the 3 cases is that they cannot change into each other without triggering the condition C in Eq.3. For example, if $\overrightarrow{q_1q_2}$ changes to $\overrightarrow{q'_1q'_2}$ in Fig.3(e), point $p_1$ will jump across $q_1q_2$. The range of $\theta_1$ is uniformly divided into 12 bins. Thus, there are 264 types (note that some configurations are meaningless, e.g., when $q_1$ and $q_2$ are in regions 6 and 2 respectively, $\theta_1$ can only be in $(0, \pi/2)$). Therefore, there are 564 types in total (more types can be obtained with finer quantization, but this requires more memory).

Each segment pair has one or two types depending on whether $r_i \in [-0.3, 1.3]$. The types of a segment pair are called its primary keys. In addition, according to the deformation tolerance decided by the thresholds $T_r$ and $T_\theta$, the condition $(\pi - \theta_1)(\pi - \theta'_1) \geq 0$ in Eq.2, and the condition C in Eq.3, we can predict the possible types of a segment pair in another image after image deformation (e.g. an endpoint in region 2 can only change to region 1 or 3). These predicted types are called its keys. The similarity of a segment pair with another segment pair is not $-\infty$ only if one of its primary keys is one of the keys of the other segment pair.

For each line signature, the keys of the segment pairs consisting of its central segment and each of its other segments are counted in a histogram of 564 bins, with each bin representing a type. In addition, each bin is associated with a list storing all the segments that fall into it. To measure the similarity of two line signatures, assume the segment pair consisting of segment i and the central segment in the first line signature has a primary key of p. The segments in the second line signature that may be matched with i can be read directly from the list associated with the p-th bin of its histogram. From experiments, we found that the average number of such candidate segments is only 1.6. Only these segments are checked to see if one of them can really be matched with i (by checking whether the differences on all feature attributes are smaller than the corresponding thresholds). If there is one, we set a variable $c_i = 1$; otherwise $c_i = 0$. An approximate similarity of two line signatures is computed with $S'_{LS} = \sum_i c_i$. Only if $S'_{LS} \geq 3$ (meaning at least 3 segments besides the central segment in the first line signature may have corresponding segments in the second signature) is Eq.5 used to compute the accurate similarity $S_{LS}$; otherwise $S_{LS}$ is 0. On average, for each line signature in the first image, only 2% of the line signatures in the second image have $S'_{LS} \geq 3$. Eq.5 is solved with an exhaustive search, but the search space is greatly reduced since the average number of candidate segment matches is small.
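The uniform quantization of the affine feature space and the histogram lookup described above can be sketched as follows. This covers only the 300 affine types; the 264 general-similarity types depend on the region layout of Fig.3(e) and are not reproduced. The function names, the flat index layout, and the `can_match` callback (standing in for the threshold check on all attributes) are hypothetical choices made for illustration.

```python
import math
from collections import defaultdict

R_MIN, R_MAX, R_BINS = -0.3, 1.3, 5   # range of r1 and r2, 5 bins each
THETA_BINS = 12                        # [0, 2*pi] split into 12 bins


def affine_type(r1, r2, theta1):
    """Type index in 0..299 for the space {r_i, theta_1, l_1}, or None
    when r1 or r2 lies outside [-0.3, 1.3] (l_1 is not quantized)."""
    if not (R_MIN <= r1 <= R_MAX and R_MIN <= r2 <= R_MAX):
        return None

    def r_bin(r):
        return min(int((r - R_MIN) / (R_MAX - R_MIN) * R_BINS), R_BINS - 1)

    t = (theta1 % (2.0 * math.pi)) / (2.0 * math.pi)
    t_bin = min(int(t * THETA_BINS), THETA_BINS - 1)
    return (r_bin(r1) * R_BINS + r_bin(r2)) * THETA_BINS + t_bin


def build_index(keys_by_segment):
    """Histogram with per-bin segment lists: type -> [segment ids].
    keys_by_segment maps each non-central segment to the keys (predicted
    types) of the pair it forms with the central segment."""
    index = defaultdict(list)
    for seg, keys in keys_by_segment.items():
        for key in keys:
            index[key].append(seg)
    return index


def approximate_similarity(primary_keys_a, index_b, can_match):
    """S'_LS = sum_i c_i: number of segments of the first signature with at
    least one candidate in the second that passes the explicit check."""
    return sum(
        1
        for seg_a, pkeys in primary_keys_a.items()
        if any(can_match(seg_a, seg_b)
               for p in pkeys
               for seg_b in index_b.get(p, ()))
    )
```

In this sketch, the exhaustive search of Eq.5 would be run only for signature pairs whose `approximate_similarity` is at least 3, following the filtering rule stated in the text.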

6. Wide-baseline Image Matching

To match two images, for each line signature in an image, its top two most similar line signatures in the other image are found, whose similarity values are $S_1$ and $S_2$ respectively. If $S_1 > T_1$ and $S_1 - S_2 > T_2$, this line signature and its most similar line signature produce a putative correspondence, and the segment matches in their optimal mapping M in Eq.5 are putative line segment matches. $T_1 = 25$ and $T_2 = 5$ in our experiments.

To remove outliers, we cannot directly use RANSAC based on epipolar geometry, since the endpoints of line segments are inaccurate. In [2], putative segment matches are first filtered with a topological filter based on the sidedness constraints between line segments. Among the remaining matches, coplanar segments are grouped using homographies. The intersection points of all pairs of segments within a group are computed and used as point correspondences, based on which the epipolar geometry is estimated with RANSAC.

Instead of using the topological filter in [2], which is computationally expensive, we provide a more efficient approach to remove most of the outliers. All the putative line signature correspondences are put into a list L and sorted in descending order of $S_1 - S_2$. Due to the high distinctiveness of line signatures, the several candidates at the top of L almost always contain correct matches if there is one in L. Let R be the set of line segment matches of the two images, and initialize $R = \emptyset$. Two segment matches are regarded as consistent if the similarity of the two segment pairs based on them is not $-\infty$. A segment match in the optimal mapping M of a line signature correspondence is called a reliable match if the similarity of the two segment pairs formed by its segments and the central segments is larger than 3.5. Starting from the top of L, for a line signature correspondence, if all the segment matches in its optimal mapping M are consistent with all the existing matches in R, the reliable ones are added into R. To reduce the risk that the top candidate on L is actually wrong, each of the top 5 candidates is used as a tentative seed to grow a set R. The one with the largest number of matches is output as the final result.

After the above consistency checking, most of the outliers are removed. To further reduce the outliers and estimate the epipolar geometry, the approach in [2] based on grouping coplanar segments and RANSAC can be used.

7. Experimental Results and Conclusion

To decide the parameters in our approach (given in previous sections), 30 various image pairs are selected and the parameters are tuned independently to maximize the total number of correct line signature matches detected from them. Throughout the following experiments, these parameters are fixed.

Fig.4 shows 2 image pairs from the line matching papers [2] and [16]. The red segments with the same labels at their middle points are corresponding line segments detected with our approach (please zoom in to check the labels). The correctness of the matches is judged visually. For (a)-(b), the approach in [2] detects 21 matches (16 correct), and the number increases to 41 (35 correct) after matching propagation, while our method detects 39 matches (all correct) without matching propagation. For (c)-(d), the method in [16] detects 53 matches (77% are correct) with known epipolar geometry, while our method detects 153 matches (96% are correct) with unknown epipolar geometry.

Figure 4. Two image pairs in the papers of [2] and [16]. The red segments with the same labels at their middle points in each image pair are corresponding line segments detected with our approach.

The next examples are more challenging than those in Fig.4, and we compare the performance of line signature with three well-known local features: SIFT, MSER, and Harris-Afne features [12]. The MSER and Harris-Afne regions are described with the SIFT descriptor with the

threshold 0.6 (also used in [2]). The comparison is based on three cases: low-texture, non-planar, and planar scenes. Fig.5(a)-(d) shows the matching results of our approach on two low-texture image pairs. The comparison of our approach with the three local features is demonstrated in Table 1 which reports the number of correct matches over the number of detected matches. SIFT, Harris-afne and MSER nd nearly no matches for these two image pairs, while our approach detects many and all of them are correct. Note that (c)-(d) have large illumination change, so the methods in [2] and [16] may also have difculties with this image pair because they depend on color and intensity distributions. Fig.5(e)-(h) are two pairs of non-planar scenes under large viewpoint changes. From Table 1, we can see line signature has much better performance on them than the other local features. The image pair (g)-(h) has large non-uniform illumination variation besides the viewpoint change. Fig.5(i)-(j) show the robustness of line signature against extreme illumination changes. Fig.6(a)-(e) are an image sequence from the ZuBuD image database. By matching the rst image with each of the rest images, Fig.6(f) plots the number of correct matches of different features against increasing viewpoint change. It demonstrates that line signature has advantages in matching non-planar structured scenes under large 3D viewpoint changes. Fig.5(k)-(n) are two image pairs of planar scenes from Mikolajczyks dataset [12] in which the image deformation is a homography. For (k)-(l), our approach detects 63 matches (all correct), which is better than SIFT (0/11) and Harris Afne (8/10) but worse than MSER (98/98). For (m)(n), our approach detects 20 matches (19 correct), which demonstrates its robustness to large scale changes although it is not as good as SIFT in the extreme cases. In general, for planar scenes, our approach has comparable performance with state-of-the-art local features on structured images. 
However, it is worse than most of them on textured images such as the bark sequence in [12]. The main reason is that our current line segment detection is not robust for very small patterns.

Fig.7 shows two handwriting samples with clutter. Our approach detects 18 matches (all correct), while all the other methods mentioned above can hardly find any matches. This also demonstrates the advantage of making no hard assumptions such as epipolar geometry or homographies.

The computation time of our approach depends on the number of detected segments. In our current implementation, matching the image pair of Fig.4(a)-(b) takes less than 2 seconds, including line segment detection, on a 2GHz CPU. On average, it takes about 10 seconds to match images with 3000 segments such as Fig.5(e)-(f).

In conclusion, we have presented a new semi-local feature based on local clustering of line segments. Extensive experiments validate that it performs better than existing local features in matching low-texture images and non-planar structured scenes under large viewpoint changes. It is robust to large scale changes and illumination variations, and therefore also has advantages over the existing wide-baseline line matchers [2] and [16]. By improving the robustness of line segment detection, we expect significant improvement of the matching performance. In addition, besides line segments, elliptical arcs may also be used as shape primitives, which can help in matching circular objects.

8. Acknowledgements

This research was supported in part by Chevron through the CISOFT center at USC. We appreciate helpful discussions with Ram Nevatia and Gerard G. Medioni.

| Image pair | SIFT | Harris-Aff. | MSER | Line Sig. |
|---|---|---|---|---|
| (a)-(b) | 2/5 | 0/0 | 0/1 | 11/11 |
| (c)-(d) | 2/7 | 0/0 | 2/2 | 53/53 |
| (e)-(f) | 9/13 | 0/1 | 2/2 | 76/76 |
| (g)-(h) | 1/2 | 1/4 | 0/7 | 50/50 |
| (i)-(j) | 40/45 | 0/3 | 9/11 | 110/118 |

Table 1. Comparison of different features on images in Fig.5. Each entry reports the number of correct matches over the number of detected matches.
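As a reading aid for Table 1, the sketch below pools the "correct/detected" entries over the five image pairs and computes a precision per feature. This pooled figure is not a metric reported in the paper; it is only an illustrative summary of the table's own numbers. The names `TABLE_1` and `pooled_precision` are hypothetical, and the column order (SIFT, Harris-Aff., MSER, Line Sig.) is assumed from the table labels.

```python
# Table 1 entries as (correct, detected) for Fig.5 pairs
# (a)-(b), (c)-(d), (e)-(f), (g)-(h), (i)-(j), in that order.
# Assumption: column order follows the labels SIFT, Harris-Aff., MSER, Line Sig.
TABLE_1 = {
    "SIFT":        [(2, 5), (2, 7), (9, 13), (1, 2), (40, 45)],
    "Harris-Aff.": [(0, 0), (0, 0), (0, 1), (1, 4), (0, 3)],
    "MSER":        [(0, 1), (2, 2), (2, 2), (0, 7), (9, 11)],
    "Line Sig.":   [(11, 11), (53, 53), (76, 76), (50, 50), (110, 118)],
}


def pooled_precision(entries):
    """Return (total_correct, total_detected, precision) pooled over pairs.

    Precision is None when no matches were detected at all, since the
    ratio is undefined in that case.
    """
    correct = sum(c for c, _ in entries)
    detected = sum(d for _, d in entries)
    precision = correct / detected if detected else None
    return correct, detected, precision


if __name__ == "__main__":
    for name, entries in TABLE_1.items():
        correct, detected, precision = pooled_precision(entries)
        shown = "n/a" if precision is None else f"{precision:.3f}"
        print(f"{name:12s} {correct:4d}/{detected:<4d} precision={shown}")
```

Pooling weights each image pair by its number of detected matches, so a pair with many detections, such as (i)-(j), dominates the result. A per-pair average would give a different picture and would need a convention for the 0/0 entries.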

Figure 5. Matching results of our approach for different scenes.

Figure 6. An image sequence in ZuBuD image database and its plot of the number of correct matches.

Figure 7. The matching result of our method on two handwritings.

References

[1] N. Ansari and E. J. Delp. On detecting dominant points. Pattern Recognition, (3):441-451, 1990.

[2] H. Bay, V. Ferrari, and L. V. Gool. Wide-baseline stereo matching with line segments. CVPR, pages 329-336, 2005.

[3] B. Boser, I. Guyon, and V. Vapnik. A training algorithm for optimal margin classifiers. 5th Annual ACM Workshop on COLT.

[4] S. Edelman, N. Intrator, and T. Poggio. Complex cells and object recognition. Unpublished manuscript: http://kybele.psych.cornell.edu/~edelman/archive.html, 1997.

[5] V. Ferrari, L. Fevrier, F. Jurie, and C. Schmid. Groups of adjacent contour segments for object detection. PAMI, 30(1):36-51, 2008.

[6] G. Medioni and R. Nevatia. Segment-based stereo matching. CVGIP, 1985.

[7] R. Horaud and T. Skordas. Stereo correspondence through feature grouping and maximal cliques. PAMI, pages 1168-1180, 1989.

[8] B. Huet and E. Hancock. Line pattern retrieval using relational histograms. PAMI, (12):1363-1370, 1999.

[9] D. Jacobs. Groper: a grouping based object recognition system for two-dimensional objects. IEEE Workshop on Computer Vision, pages 164-169, 1987.

[10] D. Lowe. Three-dimensional object recognition from single two-dimensional images. Artificial Intelligence, 1987.

[11] D. Lowe. Distinctive image features from scale-invariant keypoints. IJCV, 60(2):91-110, 2004.

[12] K. Mikolajczyk, T. Tuytelaars, C. Schmid, A. Zisserman, J. Matas, F. Schaffalitzky, T. Kadir, and L. V. Gool. A comparison of affine region detectors. IJCV, 65(1):43-72, 2005.

[13] R. Nelson and A. Selinger. Large-scale tests of a keyed, appearance-based 3-d object recognition system. Vision Research, (15):2469-88, 1998.

[14] R. Deriche and O. Faugeras. Tracking line segments. European Conference on Computer Vision, 1990.

[15] C. Sagues and J. Guerrero. Robust line matching in image pairs of scenes with dominant planes. Optical Engineering, (6):067204/1-12, 2006.

[16] C. Schmid and A. Zisserman. Automatic line matching across views. CVPR, 1997.

[17] V. Venkateswar and R. Chellappa. Hierarchical stereo and motion correspondence using feature groupings. IJCV, (3):245-269, 1995.

[18] L. Wang, S. You, and U. Neumann. Supporting range and segment-based hysteresis thresholding in edge detection. ICIP, 2008.

[19] S. Yang. Symbol recognition via statistical integration of pixel-level constraint histograms: a new descriptor. PAMI, (2):278-281, 2005.

Potrebbero piacerti anche

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDa EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeValutazione: 4 su 5 stelle4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDa EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreValutazione: 4 su 5 stelle4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDa EverandNever Split the Difference: Negotiating As If Your Life Depended On ItValutazione: 4.5 su 5 stelle4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDa EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceValutazione: 4 su 5 stelle4/5 (895)

- Grit: The Power of Passion and PerseveranceDa EverandGrit: The Power of Passion and PerseveranceValutazione: 4 su 5 stelle4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeDa EverandShoe Dog: A Memoir by the Creator of NikeValutazione: 4.5 su 5 stelle4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDa EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersValutazione: 4.5 su 5 stelle4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDa EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureValutazione: 4.5 su 5 stelle4.5/5 (474)

- Her Body and Other Parties: StoriesDa EverandHer Body and Other Parties: StoriesValutazione: 4 su 5 stelle4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Da EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Valutazione: 4.5 su 5 stelle4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerDa EverandThe Emperor of All Maladies: A Biography of CancerValutazione: 4.5 su 5 stelle4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDa EverandThe Little Book of Hygge: Danish Secrets to Happy LivingValutazione: 3.5 su 5 stelle3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDa EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyValutazione: 3.5 su 5 stelle3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)Da EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Valutazione: 4 su 5 stelle4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDa EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaValutazione: 4.5 su 5 stelle4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDa EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryValutazione: 3.5 su 5 stelle3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnDa EverandTeam of Rivals: The Political Genius of Abraham LincolnValutazione: 4.5 su 5 stelle4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealDa EverandOn Fire: The (Burning) Case for a Green New DealValutazione: 4 su 5 stelle4/5 (73)

- The Unwinding: An Inner History of the New AmericaDa EverandThe Unwinding: An Inner History of the New AmericaValutazione: 4 su 5 stelle4/5 (45)

- 12a Statistics 2 - October 2020 Examination Paper PDFDocumento24 pagine12a Statistics 2 - October 2020 Examination Paper PDFFt. BASICNessuna valutazione finora

- Program Logic FormulationDocumento57 pagineProgram Logic Formulationアテンヂド 彩92% (12)

- Homework #6, Sec 11.4 and 12.1Documento7 pagineHomework #6, Sec 11.4 and 12.1Masaya Sato100% (1)

- Maths BookDocumento247 pagineMaths Booktalha gill100% (1)

- Text Week1 PDFDocumento65 pagineText Week1 PDFGustavo BravoNessuna valutazione finora

- Visual-Inertial Simultaneous Localization, Mapping and Sensor-To-Sensor Self-CalibrationDocumento9 pagineVisual-Inertial Simultaneous Localization, Mapping and Sensor-To-Sensor Self-CalibrationVũ Tiến DũngNessuna valutazione finora

- Weiss 11 RealDocumento7 pagineWeiss 11 RealVũ Tiến DũngNessuna valutazione finora

- Visual Inertial Tracking On Android For AR AppDocumento7 pagineVisual Inertial Tracking On Android For AR AppVũ Tiến DũngNessuna valutazione finora

- Videocamera Calibration Global Sip 2013 PaperDocumento4 pagineVideocamera Calibration Global Sip 2013 PaperVũ Tiến DũngNessuna valutazione finora

- Prelims MathsDocumento27 paginePrelims MathsVũ Tiến DũngNessuna valutazione finora

- SIFT - David Lowe - PaperDocumento28 pagineSIFT - David Lowe - Papercacr_72Nessuna valutazione finora

- Force MethodsDocumento33 pagineForce Methodsjackie_9227Nessuna valutazione finora

- Lab 4 Electric PotentialDocumento4 pagineLab 4 Electric PotentialJorge Tenorio0% (1)

- OM Notes PDFDocumento278 pagineOM Notes PDFAliNessuna valutazione finora

- Adaptive Control 2Documento30 pagineAdaptive Control 2kanchiNessuna valutazione finora

- 1.6 PPT - Query OptimizationDocumento53 pagine1.6 PPT - Query OptimizationamaanNessuna valutazione finora

- Factor & Remainder Theorem CAPE Integrated MathematicsDocumento15 pagineFactor & Remainder Theorem CAPE Integrated MathematicsDequanNessuna valutazione finora

- Covariance and Correlation: Multiple Random VariablesDocumento2 pagineCovariance and Correlation: Multiple Random VariablesAhmed AlzaidiNessuna valutazione finora

- PDADocumento52 paginePDAIbrahim AnsariNessuna valutazione finora

- A First Course in Discrete Mathematics - I. AndersonDocumento200 pagineA First Course in Discrete Mathematics - I. AndersonB . HassanNessuna valutazione finora

- KRAFTA - 1996 - Urban Convergence Morphology Attraction - EnvBDocumento12 pagineKRAFTA - 1996 - Urban Convergence Morphology Attraction - EnvBLucas Dorneles MagnusNessuna valutazione finora

- Krajewski Om9 PPT 13Documento83 pagineKrajewski Om9 PPT 13Burcu Karaöz100% (1)

- Fundamental Concepts of Probability: Probability Is A Measure of The Likelihood That ADocumento43 pagineFundamental Concepts of Probability: Probability Is A Measure of The Likelihood That ARobel MetikuNessuna valutazione finora

- Ch5 State Space Search Methods MasterDocumento35 pagineCh5 State Space Search Methods MasterAhmad AbunassarNessuna valutazione finora

- 007 Examples Constraints and Lagrange EquationsDocumento12 pagine007 Examples Constraints and Lagrange EquationsImran AnjumNessuna valutazione finora

- Don Bosco 12-MATHS-PRE - BOARD-2023-24Documento6 pagineDon Bosco 12-MATHS-PRE - BOARD-2023-24nhag720207Nessuna valutazione finora

- Logical EquivalenceDocumento21 pagineLogical EquivalenceTHe Study HallNessuna valutazione finora

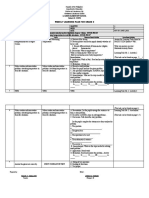

- Melcs Day Objectives Topic/s Classroom-Based Activities Home-Based Activities 1Documento2 pagineMelcs Day Objectives Topic/s Classroom-Based Activities Home-Based Activities 1Raquel CarteraNessuna valutazione finora

- Polynomial Approximation and Floating-Point NumbersDocumento101 paginePolynomial Approximation and Floating-Point NumbersMuhammad AmmarNessuna valutazione finora

- Ee101 DGTL 1 PDFDocumento144 pagineEe101 DGTL 1 PDFShubham KhokerNessuna valutazione finora

- Poisson Random Variables: Scott She EldDocumento15 paginePoisson Random Variables: Scott She EldzahiruddinNessuna valutazione finora

- G11-M4-GEN MATH (New)Documento10 pagineG11-M4-GEN MATH (New)Rizah HernandezNessuna valutazione finora

- Solving Elitmus Cryptarithmetic Questions in Logical Reasioning Section-Method-II - Informguru - Elitmus Information CenterDocumento5 pagineSolving Elitmus Cryptarithmetic Questions in Logical Reasioning Section-Method-II - Informguru - Elitmus Information CenterIshant BhallaNessuna valutazione finora

- Referensi Pendidikan Matematika (Analisis Kesalahan Belajar KPK Dan FPB)Documento11 pagineReferensi Pendidikan Matematika (Analisis Kesalahan Belajar KPK Dan FPB)Dwi Kurniawan 354313Nessuna valutazione finora

- Logic Design and Digital CircuitsDocumento3 pagineLogic Design and Digital CircuitsShawn Michael SalazarNessuna valutazione finora

- HW9 SolsDocumento4 pagineHW9 SolsDidit Gencar Laksana0% (1)