Estimation

Uploaded by dmugalloy

MAXIMUM-LIKELIHOOD ESTIMATION

In statistics, maximum-likelihood estimation (MLE) is a method of estimating the parameters of a statistical model.

When applied to a data set under a given statistical model, maximum-likelihood estimation provides estimates of the model's parameters. The method of maximum likelihood corresponds to many well-known estimation methods in statistics. For example, one may be interested in the heights of adult female giraffes but be unable to measure every giraffe in the population because of cost or time constraints. Assuming that the heights are normally (Gaussian) distributed with some unknown mean and variance, MLE can estimate the mean and variance from the heights of a sample of the population. It does so by treating the mean and variance as parameters and finding the parameter values that make the observed results most probable under the model.

In general, for a fixed set of data and underlying statistical model, the method of maximum likelihood selects the values of the model parameters that give the observed data the greatest probability, i.e., the parameters that maximize the likelihood function. Maximum-likelihood estimation gives a unified approach to estimation, which is well defined in the case of the normal distribution and in many other problems. However, in some complicated problems, difficulties do occur.

LEAST SQUARES METHOD

The method of least squares is a standard approach to the approximate solution of overdetermined systems, i.e., sets of equations in which there are more equations than unknowns. "Least squares" means that the overall solution minimizes the sum of the squares of the errors made in the results of every single equation. The most important application is in data fitting. The best fit in the least-squares sense minimizes the sum of squared residuals, a residual being the difference between an observed value and the fitted value provided by a model.

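
As a minimal sketch of what "minimizing the sum of squared residuals" means, the following Python fragment fits a straight line to a handful of made-up observations. The data values and the function names are assumptions chosen for illustration, and the slope and intercept use the standard textbook formulas for a straight-line fit.

```python
# Hypothetical observations (x_i, y_i); the values are invented for illustration.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]


def sum_squared_residuals(slope, intercept):
    """Sum of squared differences between observed and fitted values."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


def fit_line():
    """Least-squares slope and intercept for the model y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


slope, intercept = fit_line()
best = sum_squared_residuals(slope, intercept)
print("slope:", slope, "intercept:", intercept, "sum of squared residuals:", best)

# Any nearby line has a larger sum of squared residuals than the fitted one.
for d_slope, d_intercept in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    assert sum_squared_residuals(slope + d_slope, intercept + d_intercept) > best
```

The final loop is only a sanity check: perturbing the fitted slope or intercept in either direction increases the sum of squared residuals.
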
When the problem has substantial uncertainties in the independent variable (the 'x' variable), simple regression and least-squares methods have problems; in such cases, the methodology required for fitting errors-in-variables models may be considered instead of that for least squares.

Least-squares problems fall into two categories, linear (or ordinary) least squares and non-linear least squares, depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical regression analysis; it has a closed-form solution, that is, a formula that can be evaluated in a finite number of standard operations. The non-linear problem has, in general, no closed-form solution and is usually solved by iterative refinement; at each iteration the system is approximated by a linear one, so the core calculation is similar in both cases.
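
The iterative refinement described above can be sketched with a Gauss-Newton iteration, one common scheme of this kind (the text does not name a specific algorithm). The model y = exp(a * x), the data, the starting value, and the function name are all assumptions made for illustration; with a single unknown, the linear least-squares step at each iteration reduces to one division.

```python
import math

# Hypothetical data generated exactly from y = exp(0.5 * x).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [math.exp(0.5 * x) for x in xs]


def gauss_newton(a, iterations=20):
    """Fit the single unknown a in y = exp(a * x) by Gauss-Newton iteration."""
    for _ in range(iterations):
        # Residuals of the non-linear model at the current estimate.
        residuals = [y - math.exp(a * x) for x, y in zip(xs, ys)]
        # Derivative of the model with respect to a: the linear approximation
        # of the model around the current estimate.
        jac = [x * math.exp(a * x) for x in xs]
        # Linear least-squares step for one unknown: (J^T r) / (J^T J).
        jtr = sum(j * r for j, r in zip(jac, residuals))
        jtj = sum(j * j for j in jac)
        a += jtr / jtj
    return a


a_hat = gauss_newton(0.3)
print("estimated a:", a_hat)
```

Starting from 0.3, the estimate moves toward the value 0.5 used to generate the data. In practice one would also add a convergence test and safeguards against divergence, which this sketch omits.
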

The least-squares method is usually credited to Carl Friedrich Gauss, who is said to have devised it around 1794,[1] although the first published account was Adrien-Marie Legendre's in 1805. Least squares corresponds to the maximum-likelihood criterion if the experimental errors have a normal distribution, and it can also be derived as a method-of-moments estimator.
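
The correspondence with maximum likelihood can be illustrated with the simplest model, a single constant fitted to normally distributed data. In the sketch below the sample of heights is hypothetical and the function name is an assumption. The sample mean is both the least-squares fit of a constant and the Gaussian maximum-likelihood estimate of the mean, and the maximum-likelihood estimate of the variance is the mean squared residual.

```python
import math

# Hypothetical sample of heights in metres, invented for illustration.
heights = [4.3, 4.6, 4.1, 4.8, 4.5, 4.4]


def log_likelihood(mu, var):
    """Log-likelihood of the sample under a normal model with mean mu and variance var."""
    n = len(heights)
    ss = sum((h - mu) ** 2 for h in heights)
    return -0.5 * n * math.log(2 * math.pi * var) - ss / (2 * var)


n = len(heights)
# The value of mu that minimizes the sum of squared residuals is the sample mean.
mu_hat = sum(heights) / n
# Maximum-likelihood variance: divides by n, not by n - 1.
var_hat = sum((h - mu_hat) ** 2 for h in heights) / n

best = log_likelihood(mu_hat, var_hat)
print("mean:", mu_hat, "variance:", var_hat, "log-likelihood:", best)

# Moving either parameter away from its estimate lowers the log-likelihood.
assert log_likelihood(mu_hat + 0.05, var_hat) < best
assert log_likelihood(mu_hat - 0.05, var_hat) < best
assert log_likelihood(mu_hat, var_hat * 1.2) < best
assert log_likelihood(mu_hat, var_hat * 0.8) < best
```

For a fixed variance, the only term of the log-likelihood that depends on the mean is the sum of squared residuals with a negative sign, so maximizing the likelihood and minimizing the squared error select the same value.
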

Moment-generating function

In probability theory and statistics, the moment-generating function of a random variable is an alternative specification of its probability distribution, although not all random variables have moment-generating functions. It thus provides the basis of an alternative route to analytical results, compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the moment-generating functions of distributions defined by weighted sums of random variables. In addition to univariate distributions, moment-generating functions can be defined for vector- or matrix-valued random variables, and can even be extended to more general cases. Unlike the characteristic function, the moment-generating function does not always exist, even for real-valued arguments. There are relations between the behavior of the moment-generating function of a distribution and properties of the distribution, such as the existence of moments.
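
The passage above does not state the definition, so the following gives the standard one in LaTeX for reference. The symbols M_X, t, a_i and S are notation chosen here. The moment formula assumes that M_X exists in an open interval around t = 0, and the product formula assumes that X_1, ..., X_n are independent.

```latex
% Definition, wherever the expectation exists
M_X(t) = \operatorname{E}\!\left[ e^{tX} \right]

% Moments from derivatives at zero
\operatorname{E}\!\left[ X^n \right]
  = \left. \frac{d^n M_X(t)}{dt^n} \right|_{t=0}

% Weighted sum of independent random variables
S = \sum_{i=1}^{n} a_i X_i
\quad \Longrightarrow \quad
M_S(t) = \prod_{i=1}^{n} M_{X_i}(a_i t)
```

The last line is the "particularly simple result" for weighted sums: the moment-generating function of the sum is the product of the individual functions, each evaluated at a rescaled argument.
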

Potrebbero piacerti anche

- AmiranDocumento2 pagineAmirandmugalloy100% (1)

- Bidding Document - Cold Storage For Meat and Associated Facilities Final-1Documento184 pagineBidding Document - Cold Storage For Meat and Associated Facilities Final-1dmugalloy100% (1)

- Expectation Maximization AlgoDocumento10 pagineExpectation Maximization AlgoZahid DarNessuna valutazione finora

- Parametric FamilyDocumento62 pagineParametric FamilyMaster ZenNessuna valutazione finora

- Ols 2Documento19 pagineOls 2sanamdadNessuna valutazione finora

- Linear Least SquaresDocumento10 pagineLinear Least Squareswatson191Nessuna valutazione finora

- Least SquareDocumento25 pagineLeast SquareNetra Bahadur KatuwalNessuna valutazione finora

- A Pattern Is An Abstract Object, Such As A Set of Measurements Describing A Physical ObjectDocumento12 pagineA Pattern Is An Abstract Object, Such As A Set of Measurements Describing A Physical Objectriddickdanish1Nessuna valutazione finora

- What Is ModeDocumento4 pagineWhat Is Modeapi-150547803Nessuna valutazione finora

- What Is The ModeDocumento4 pagineWhat Is The Modeapi-150547803Nessuna valutazione finora

- Estimation Strategies For The Regression Coefficient Parameter Matrix in Multivariate Multiple RegressionDocumento20 pagineEstimation Strategies For The Regression Coefficient Parameter Matrix in Multivariate Multiple RegressionRonaldo SantosNessuna valutazione finora

- Regression AnalysisDocumento11 pagineRegression Analysisjoshuapeter961204Nessuna valutazione finora

- Nonlinear Least Squares Data Fitting in Excel SpreadsheetsDocumento15 pagineNonlinear Least Squares Data Fitting in Excel SpreadsheetsAlbyziaNessuna valutazione finora

- ML Model Paper 2 SolutionDocumento15 pagineML Model Paper 2 SolutionVIKAS KUMARNessuna valutazione finora

- Maximum LikelihoodDocumento16 pagineMaximum Likelihoodsup2624rakNessuna valutazione finora

- Chin Odilo Quantitative MethodesDocumento10 pagineChin Odilo Quantitative MethodesChin Odilo AsherinyuyNessuna valutazione finora

- Using The Generalized Schur Form To Solve A Multivariate Linear Rational Expectations Model (Paul Klein)Documento19 pagineUsing The Generalized Schur Form To Solve A Multivariate Linear Rational Expectations Model (Paul Klein)Andrés JiménezNessuna valutazione finora

- Chapter 6: How To Do Forecasting by Regression AnalysisDocumento7 pagineChapter 6: How To Do Forecasting by Regression AnalysisSarah Sally SarahNessuna valutazione finora

- 14 Aos1260Documento31 pagine14 Aos1260Swapnaneel BhattacharyyaNessuna valutazione finora

- Finding ModeDocumento4 pagineFinding Modeapi-140032165Nessuna valutazione finora

- Paper MS StatisticsDocumento14 paginePaper MS Statisticsaqp_peruNessuna valutazione finora

- Basic Econometric Models: Linear Regression: Econometrics Is The Application ofDocumento4 pagineBasic Econometric Models: Linear Regression: Econometrics Is The Application ofA KNessuna valutazione finora

- Mohit Final REASEARCH PAPERDocumento20 pagineMohit Final REASEARCH PAPERMohit MathurNessuna valutazione finora

- ShimuraDocumento18 pagineShimuraDamodharan ChandranNessuna valutazione finora

- Tensor Decompositions For Learning Latent Variable Models: Mtelgars@cs - Ucsd.eduDocumento54 pagineTensor Decompositions For Learning Latent Variable Models: Mtelgars@cs - Ucsd.eduJohn KirkNessuna valutazione finora

- Unit-Iii 3.1 Regression ModellingDocumento7 pagineUnit-Iii 3.1 Regression ModellingSankar Jaikissan100% (1)

- RegressionDocumento3 pagineRegressionYram AustriaNessuna valutazione finora

- Mathematical model@ABHISHEK: Examples of Mathematical ModelsDocumento8 pagineMathematical model@ABHISHEK: Examples of Mathematical ModelsakurilNessuna valutazione finora

- Robust RegressionDocumento7 pagineRobust Regressionharrison9Nessuna valutazione finora

- Ordinary Least Squares: Linear ModelDocumento13 pagineOrdinary Least Squares: Linear ModelNanang ArifinNessuna valutazione finora

- Modal Assurance CriterionDocumento8 pagineModal Assurance CriterionAndres CaroNessuna valutazione finora

- Interview MLDocumento24 pagineInterview MLRitwik Gupta (RA1911003010405)Nessuna valutazione finora

- Termpaper EconometricsDocumento21 pagineTermpaper EconometricsBasanta RaiNessuna valutazione finora

- Numericke Metode U Inzenjerstvu Predavanja 2Documento92 pagineNumericke Metode U Inzenjerstvu Predavanja 2TonyBarosevcicNessuna valutazione finora

- A General Stochastic Approach To Solving Problems With Hard and Soft ConstraintsDocumento14 pagineA General Stochastic Approach To Solving Problems With Hard and Soft ConstraintskcvaraNessuna valutazione finora

- Simulation OptimizationDocumento6 pagineSimulation OptimizationAndres ZuñigaNessuna valutazione finora

- Skewness and KurtosisDocumento23 pagineSkewness and KurtosisRena VasalloNessuna valutazione finora

- Discriminative Generative: R Follow ADocumento18 pagineDiscriminative Generative: R Follow AChrsan Ram100% (1)

- Expectation-Maximization AlgorithmDocumento13 pagineExpectation-Maximization AlgorithmSaviourNessuna valutazione finora

- Kerja Kursus Add MatDocumento8 pagineKerja Kursus Add MatSyira SlumberzNessuna valutazione finora

- Approximation Models in Optimization Functions: Alan D Iaz Manr IquezDocumento25 pagineApproximation Models in Optimization Functions: Alan D Iaz Manr IquezAlan DíazNessuna valutazione finora

- Graphical Models, Exponential Families, and Variational InferenceDocumento305 pagineGraphical Models, Exponential Families, and Variational InferenceXu ZhimingNessuna valutazione finora

- Analysis, and Result InterpretationDocumento6 pagineAnalysis, and Result InterpretationDainikMitraNessuna valutazione finora

- Hidden Markov Models in Univariate GaussiansDocumento34 pagineHidden Markov Models in Univariate GaussiansIvoTavaresNessuna valutazione finora

- Support Vector Machines and LinearDocumento12 pagineSupport Vector Machines and LinearChetan KashyapNessuna valutazione finora

- Standard Error: Sampling DistributionDocumento5 pagineStandard Error: Sampling DistributionErica YamashitaNessuna valutazione finora

- A Unified Framework For High-Dimensional Analysis of M - Estimators With Decomposable RegularizersDocumento45 pagineA Unified Framework For High-Dimensional Analysis of M - Estimators With Decomposable RegularizersCharles Yang ZhengNessuna valutazione finora

- ml97 ModelselectionDocumento44 pagineml97 Modelselectiondixade1732Nessuna valutazione finora

- Best Generalisation Error PDFDocumento28 pagineBest Generalisation Error PDFlohit12Nessuna valutazione finora

- Chapter 2Documento5 pagineChapter 2rmf94hkxt9Nessuna valutazione finora

- Regression Analysis: From Wikipedia, The Free EncyclopediaDocumento10 pagineRegression Analysis: From Wikipedia, The Free Encyclopediazeeshan17Nessuna valutazione finora

- Multiple Linear RegressionDocumento30 pagineMultiple Linear RegressionJonesius Eden ManoppoNessuna valutazione finora

- A New SURE Approach To Image Denoising: Inter-Scale Orthonormal Wavelet ThresholdingDocumento28 pagineA New SURE Approach To Image Denoising: Inter-Scale Orthonormal Wavelet Thresholdingsmahadev88Nessuna valutazione finora

- Unit IiiDocumento27 pagineUnit Iiimahih16237Nessuna valutazione finora

- Abstracts MonteCarloMethodsDocumento4 pagineAbstracts MonteCarloMethodslib_01Nessuna valutazione finora

- Entropy: Generalized Maximum Entropy Analysis of The Linear Simultaneous Equations ModelDocumento29 pagineEntropy: Generalized Maximum Entropy Analysis of The Linear Simultaneous Equations ModelWole OyewoleNessuna valutazione finora

- A Field Guide to Solving Ordinary Differential EquationsDa EverandA Field Guide to Solving Ordinary Differential EquationsNessuna valutazione finora

- CurrentRatings PDFDocumento0 pagineCurrentRatings PDFVirajitha MaddumabandaraNessuna valutazione finora

- Specification For Tender For Primary GIS Up To 40.5kV Single Busbar (SBB)Documento28 pagineSpecification For Tender For Primary GIS Up To 40.5kV Single Busbar (SBB)dmugalloyNessuna valutazione finora

- CM Line Catalog ENUDocumento68 pagineCM Line Catalog ENUdmugalloyNessuna valutazione finora

- SubmPowCables FINAL 10jun13 EnglDocumento20 pagineSubmPowCables FINAL 10jun13 EnglManjul TakleNessuna valutazione finora

- Schneider LAPP Solar CablesDocumento2 pagineSchneider LAPP Solar CablesdmugalloyNessuna valutazione finora

- Schneider Jinko Solar ModuleDocumento2 pagineSchneider Jinko Solar ModuledmugalloyNessuna valutazione finora

- Schneider Conext CL Contractual WarrantyDocumento5 pagineSchneider Conext CL Contractual WarrantydmugalloyNessuna valutazione finora

- Ldp604 Project Planning Design and Implementation Revision Exam QuestionsDocumento7 pagineLdp604 Project Planning Design and Implementation Revision Exam QuestionsdmugalloyNessuna valutazione finora

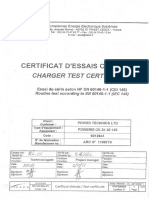

- Charger Test CertificateDocumento4 pagineCharger Test CertificatedmugalloyNessuna valutazione finora

- Citizens Guide To Public Procurement Procedures PDFDocumento50 pagineCitizens Guide To Public Procurement Procedures PDFdmugalloyNessuna valutazione finora

- Technical Offer For 11kv Sm6 SwitchgearDocumento6 pagineTechnical Offer For 11kv Sm6 SwitchgeardmugalloyNessuna valutazione finora

- Ldp616 Gender Issues in Development Exam RevsDocumento56 pagineLdp616 Gender Issues in Development Exam Revsdmugalloy100% (1)

- Bid 1000000297Documento6 pagineBid 1000000297dmugalloyNessuna valutazione finora

- Kenya GB 2015Documento19 pagineKenya GB 2015dmugalloyNessuna valutazione finora

- Types of Evaluation-ReviewedDocumento23 pagineTypes of Evaluation-RevieweddmugalloyNessuna valutazione finora

- Result Based MonitoringDocumento11 pagineResult Based MonitoringdmugalloyNessuna valutazione finora

- Project EvaluationDocumento9 pagineProject EvaluationProsper ShumbaNessuna valutazione finora

- Principles of TQM - February 2016Documento3 paginePrinciples of TQM - February 2016dmugalloyNessuna valutazione finora

- PRQ20150620 Tender Document For G-SectionDocumento184 paginePRQ20150620 Tender Document For G-SectiondmugalloyNessuna valutazione finora

- Chapter 2 Issues in Ex-Ante and Ex-Post Evaluations: Outline of This ChapterDocumento86 pagineChapter 2 Issues in Ex-Ante and Ex-Post Evaluations: Outline of This ChapterdmugalloyNessuna valutazione finora

- LDP 607-Barriers To Impementation of TQM - February 2016Documento1 paginaLDP 607-Barriers To Impementation of TQM - February 2016dmugalloyNessuna valutazione finora

- Munyoroku - The Role of Organization Structure On Strategy Implementation Among Food Processing Companies in NairobiDocumento69 pagineMunyoroku - The Role of Organization Structure On Strategy Implementation Among Food Processing Companies in NairobidmugalloyNessuna valutazione finora

- Business Case and Summary 203357Documento53 pagineBusiness Case and Summary 203357dmugalloyNessuna valutazione finora

- The BMW Group Is The World's Leading Provider of Premium Products and Premium Services For Individual Mobility.Documento6 pagineThe BMW Group Is The World's Leading Provider of Premium Products and Premium Services For Individual Mobility.dmugalloyNessuna valutazione finora

- Facilities Maintenance Training ReportDocumento2 pagineFacilities Maintenance Training ReportdmugalloyNessuna valutazione finora

- 1 s2.0 S2214157X13000117 Main PDFDocumento7 pagine1 s2.0 S2214157X13000117 Main PDFMickael SoaresNessuna valutazione finora

- Cat Part B Answer All The Questions.: TH THDocumento2 pagineCat Part B Answer All The Questions.: TH THdmugalloyNessuna valutazione finora

- National TimerDocumento193 pagineNational TimerdmugalloyNessuna valutazione finora