
THE 6TH INTERNATIONAL CONFERENCE ON INFORMATION TECHNOLOGY IN ASIA, 2009

Parallel Iterative Block and Direct Block Methods for 2-Space Dimension Problems on Distributed Memory Architecture

NORMA ALIAS, TAN YIN SAN, ROZIHA DARWIS, NORIZA SATAM, ZARITH SAFIZA A. GHAFFAR, NORHAFIZA HAMZAH, MD RAJIBUL ISLAM

Institute of Ibnu Sina, Department of Mathematics, Faculty of Science,

Universiti Teknologi Malaysia,

81310 Skudai, Johor, Malaysia

norma@ibnusina.utm.my, ibnu.qurra.iis@gmail.com

Abstract - Numerical simulation of partial differential equations often requires solving the matrix equations that arise from finite difference models of those equations. For computational purposes, the solution system can be iterated in such a way that the matrices on the left-hand side become easy to handle, such as diagonal matrices or small matrices, for example block systems. This indicates that various group computational molecules can be applied to simulate the partial differential equations numerically. In this paper, we present two problems treated with group schemes, specifically the Alternating Group Explicit (AGE) method and crack propagation. We assess and compare the numerical experiments for these two methods, ported to run through Parallel Virtual Machine (PVM) on a distributed memory architecture.

Keywords: Alternating Group Explicit (AGE) Method, Element Stiffness method, Parallel Performance measurement, Distributed Memory Architecture, Parallel Virtual Machine (PVM).

1 Introduction

1.1 Diffusion equation of parabolic PDE

An approximation to parabolic partial differential equations can be derived from the standard five-point finite difference approximation (Dahlquist & Bjorck 1974) for the two-dimensional diffusion partial differential equation,

$$
\frac{\partial U}{\partial t} = \frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2} + F(x, y, t) \tag{1}
$$

with specified initial and boundary conditions on a unit. One commonly used implicit finite difference scheme, based on the centred difference in time and space formulation about the point $(i, j, k+\tfrac12)$, transforms equation (1) into

$$
\begin{aligned}
&-\lambda\theta\,u_{i-1,j,k+1} + (1+4\lambda\theta)\,u_{i,j,k+1} - \lambda\theta\,u_{i+1,j,k+1} - \lambda\theta\,u_{i,j-1,k+1} - \lambda\theta\,u_{i,j+1,k+1} \\
&\quad = \lambda(1-\theta)\,u_{i-1,j,k} + (1-4\lambda(1-\theta))\,u_{i,j,k} + \lambda(1-\theta)\,u_{i+1,j,k} \\
&\qquad + \lambda(1-\theta)\,u_{i,j+1,k} + \lambda(1-\theta)\,u_{i,j-1,k} + \Delta t\,F_{i,j,k+\frac12}
\end{aligned}
\tag{2}
$$

with $\theta = 0$, $\tfrac12$ or $1$.

1.2 Crack Propagation Problem

Fracture mechanics (Bui, 2006) is used to investigate the failure of brittle materials, that is, to study material behaviour and to design against brittle failure and fatigue.

[Figure 1: log-scale plot of da/dn against ∆K, showing regions I, II and III]

Figure 1: Failure of material view in three steps

The engineering study of fracture mechanics (Stanley, 1997) does not emphasize how a crack is initiated; the purpose is to develop methods of predicting how a crack propagates. The failure of a material follows three steps:

i. Crack Initiation: The initial crack occurs in this stage. This might be due to handling, tooling of the material, threads, and slip bands.

ii. Crack Propagation: During this stage, the crack continues growing as a result of continuously applied stresses.

iii. Failure: Failure occurs when the cracked material can no longer withstand the applied stress, and it happens quickly.

An adaptive finite element mesh is used to analyse two-dimensional elastoplastic microfracture during crack propagation.
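Returning to the θ-weighted scheme of equation (2) in Section 1.1, the following is a minimal Python sketch of one time step on a small grid. It is not the paper's AGE solver: the implicit left-hand side is solved here with plain Gauss-Seidel sweeps as a stand-in, the boundary values are assumed fixed, the source term is assumed to enter as Δt·F, and `lam` is passed in directly because the paper does not define λ explicitly. The function name `theta_step` and its parameters are hypothetical.

```python
def theta_step(u, lam, theta, dt, source, sweeps=200):
    """Advance the grid u by one time level using scheme (2).

    u and source are lists of rows that include the boundary nodes;
    boundary values are held fixed (Dirichlet), which is an assumption.
    """
    rows, cols = len(u), len(u[0])

    # Right-hand side of (2), built from the known level k.
    rhs = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            neighbours = u[i - 1][j] + u[i + 1][j] + u[i][j - 1] + u[i][j + 1]
            rhs[i][j] = (lam * (1 - theta) * neighbours
                         + (1 - 4 * lam * (1 - theta)) * u[i][j]
                         + dt * source[i][j])

    # Gauss-Seidel sweeps on the left-hand side of (2); for theta = 0 the
    # scheme is explicit and the first sweep already gives the answer.
    new = [row[:] for row in u]
    for _ in range(sweeps):
        for i in range(1, rows - 1):
            for j in range(1, cols - 1):
                neighbours = (new[i - 1][j] + new[i + 1][j]
                              + new[i][j - 1] + new[i][j + 1])
                new[i][j] = ((rhs[i][j] + lam * theta * neighbours)
                             / (1 + 4 * lam * theta))
    return new


# Usage: a single hot interior node on a 3 x 3 grid with zero boundary values.
grid = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
zero_source = [[0.0] * 3 for _ in range(3)]
explicit = theta_step(grid, lam=0.1, theta=0.0, dt=0.01, source=zero_source)
crank_nicolson = theta_step(grid, lam=0.1, theta=0.5, dt=0.01, source=zero_source)
print(explicit[1][1], crank_nicolson[1][1])
```

Setting θ = 0 gives the explicit scheme, θ = 1/2 the Crank-Nicolson scheme and θ = 1 the fully implicit scheme; only the weights on the two time levels change.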

170 Copyright © 2009 by University Malaysia Sarawak


Adaptive crack propagation calculation using the finite element method is based on a displacement formulation at each element border.

2 Alternating Group Explicit (AGE)

Based on the Douglas-Rachford formula, the AGE fractional scheme takes the form,

$$
\begin{aligned}
(G_1 + rI)\,u_{(r)}^{(k+\frac14)} &= (rI - G_1 - 2G_2 - 2G_3 - 2G_4)\,u_{(r)}^{(k)} + 2f \\
(G_2 + rI)\,u_{(r)}^{(k+\frac12)} &= G_2\,u_{(r)}^{(k)} + r\,u_{(r)}^{(k+\frac14)} \\
(G_3 + rI)\,u_{(r)}^{(k+\frac34)} &= G_3\,u_{(r)}^{(k)} + r\,u_{(r)}^{(k+\frac12)} \\
(G_4 + rI)\,u_{(r)}^{(k+1)} &= G_4\,u_{(r)}^{(k)} + r\,u_{(r)}^{(k+\frac34)}
\end{aligned}
\tag{3}
$$

A is split into the sum of its constituent symmetric and positive definite matrices $G_1$, $G_2$, $G_3$ and $G_4$, where,

$$
G_1 + G_2 = \cdots \tag{4}
$$

and

$$
G_3 + G_4 = \cdots \tag{5}
$$

with $\operatorname{diag}(G_1+G_2) = \operatorname{diag}(G_3+G_4) = \tfrac12 \operatorname{diag}(A)$.

The AGE fractional scheme is based on four intermediate levels, $(k+\tfrac14)$, $(k+\tfrac12)$, $(k+\tfrac34)$ and $(k+1)$. Using explicit (2 × 2) blocks for the matrices $(G_1+G_2)$ and $(G_3+G_4)$, we have a group of (2 × 2) block systems which can be made explicit as follows,

$$
C_1 =
\begin{bmatrix}
r_1 & a_1 &        &        &        &     \\
a_1 & r_1 &        &        &        &     \\
    &     & r_1    & a_1    &        &     \\
    &     & a_1    & r_1    &        &     \\
    &     &        &        & \ddots &     \\
    &     &        &        & r_1    & a_1 \\
    &     &        &        & a_1    & r_1
\end{bmatrix}
\tag{6}
$$

3 Direct method of Crack Propagation

The initial step in finite element modelling is to divide the model into small finite elements, $\Omega_e$. A triangular element with 6 nodes is used, since it suits most finite element discretizations. The composition of these elements forms the domain model, $\Omega$,

$$
\Omega = \sum_{e=1}^{n} \Omega_e \tag{7}
$$

Consider that the extension of an element of a material is given by:

$$
d\hat{u} = \frac{F\,dx}{AE} \;\Rightarrow\; \frac{d\hat{u}}{dx} = \frac{F}{AE} \tag{8}
$$

where $d\hat{u}$ is the extension of an element of length $dx$ due to the force $F$. $A$ and $E$ are the constant cross-sectional area and the Young's modulus of the element, respectively. If $F$ is constant over the element then $\frac{dF}{dx} = 0$. Hence equation (8) becomes:

$$
AE\,\frac{d^2\hat{u}}{dx^2} = 0 \tag{9}
$$

Equation (9) is the governing equation for an axial element. Integrating over the length $l$, we get:

$$
AE\,\frac{d\hat{u}}{dx} = C_1 \tag{10}
$$

$$
AE\,\hat{u} = C_1 x + C_2 \tag{11}
$$

Therefore,

$$
\hat{u} = (u_j - u_i)\,\frac{x}{l} + u_i \tag{12}
$$

After differentiation, we find from equation (12)

$$
\frac{d\hat{u}}{dx} = \frac{u_j - u_i}{l} \tag{13}
$$

and

$$
\text{at } x = x_i:\quad f_i = -AE\,\left.\frac{d\hat{u}}{dx}\right|_{x_i} = -AE\,\frac{u_j - u_i}{l}
$$

$$
\text{at } x = x_j:\quad f_j = AE\,\left.\frac{d\hat{u}}{dx}\right|_{x_j} = AE\,\frac{u_j - u_i}{l}
$$

The above can be expressed in matrix notation as

$$
\begin{Bmatrix} f_i \\ f_j \end{Bmatrix}
= \frac{AE}{l}
\begin{bmatrix} 1 & -1 \\ -1 & 1 \end{bmatrix}
\begin{Bmatrix} u_i \\ u_j \end{Bmatrix}
\tag{14}
$$

Finally, take

$$
[K] = \frac{AE}{l}
\begin{bmatrix} 1 & -1 \\ -1 & 1 \end{bmatrix}
\tag{15}
$$

Then, each element of the domain can be written as,

$$
[K_e]\{\Delta a_e\} = \{r_e\} \tag{16}
$$

where,

$[K_e]$ = Elemental stiffness matrix,

$\{\Delta a_e\}$ = Constantly incremental displacement for the border condition.

$\{r_e\}$ = Force vector including body force.

The tangent elemental stiffness matrices are evaluated with all integration carried out using the Gaussian integration technique. The elemental stiffness matrices are collected with the standard finite element method to form the global stiffness matrix,

$$
[K] = \sum_{e=1}^{n} k_e \tag{17}
$$

The solution of the problem is based on an incremental iteration technique, since the equation is nonlinear under the law of elastoplastic material.
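The element matrix of equation (15) and the assembly of equation (17) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the elements form a one-dimensional chain in which element e joins nodes e and e+1 (the paper's triangular mesh connectivity is not given), and the function names are hypothetical.

```python
def bar_element_stiffness(area, youngs_modulus, length):
    """Element stiffness of equation (15): (AE / l) * [[1, -1], [-1, 1]]."""
    k = area * youngs_modulus / length
    return [[k, -k], [-k, k]]


def assemble_global_stiffness(element_matrices):
    """Sum element matrices into the global matrix, as in equation (17).

    Assumes a 1D chain: element e connects global nodes e and e + 1.
    """
    n_nodes = len(element_matrices) + 1
    global_k = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e, k_e in enumerate(element_matrices):
        for a in range(2):
            for b in range(2):
                # Local node a of element e is global node e + a.
                global_k[e + a][e + b] += k_e[a][b]
    return global_k


# Usage: two equal elements with A = 1, E = 1 and l = 0.5 (illustrative values).
elements = [bar_element_stiffness(1.0, 1.0, 0.5) for _ in range(2)]
K = assemble_global_stiffness(elements)
for row in K:
    print(row)
```

The shared middle node receives a contribution from both elements, which is why the assembled matrix is tridiagonal for a chain of axial elements.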


4 Parallelizing Strategies 5 Numerical Result

4.2 Parallel AGE method Numerical results of iterative and direct methods can

be categorised into sequential and parallel algorithm

As the AGE method is fully explicit, its feature can be using performance evaluation.

fully utilized for parallelization. Firstly, domain Ω is Figure 3 and 4 shows the result of direct method based

p

distributed to Ω sub domains by the master processor. on displacement and stress.

The partitioning is based on domain decomposition

technique.

Figure 3: Displacement of each node for: (a) e=5 (b)

e=10 (c) e=15

Figure 2: Domain decomposition for processor, pi , j

Figure 4: Comparison between stress at each element

Secondly, the sub domains Ω p of AGE method is

with: (a) e=5 (b) e=10 (c) e=15

assigned into p processors in block ordering as illustrated

in Figure 3. The domain decomposition for AGE method

is implemented in five time levels. The communication 5.1 Numerical Analysis

activities between the slave processors are needed for the The following definitions are used to measure the

computations in the next iterations. The parallelization of parallel performance of the three methods,

AGE is achieved by assigning the explicit block (2 × 2). T

This proven that, the computations is involved are Speed-up: S p = ,1 and

Tp

independent between processors. The parallelism strategy

is straightforward with no overlapping sub domains. Sp

Efficiency: .C p =

Based on the limited parallelism, this scheme can be p

effective in reducing computational complexity and data Where T1 is the execution time on one processor, Tp is

storage accesses in distributed parallel computer systems. the execution time on p processors. The important factors

The AGE sweeps involved tridiagonal systems, which in effecting the performance in message-passing paradigm

turn entails at each stage the solution of (2 × 2) block on a distributed memory machine are communication

systems. The iterative procedure is continued until patterns and computational/ communication ratios.

convergence is reached. Parallel algorithm for GSRB is chosen as a control

scheme.

4.2 Parallel Direct method Effectiveness is used to make comparison among

various parallel algorithms. The formula that is based on

In the master-worker model techniques, configuration the calculation of speedup and the efficiency, given by

of a central ‘master’ program communicates with a the following,

number of ‘workers’ (Krysl and Belyschko 1997). At the Speedup

beginning of calculation, the master processor receives all Effectiveness =

p.Time(t )

the input data from user. Then all the data input are

broadcasts from master processor node to all other

processor node (Nikishkov and Kawka 1998). The system 5.2 Communication cost

stiffness matrix is partitioned into a number of equally The important factors affecting performance in

sized smaller domain matrices, each of which is allocated message passing on distributed memory computer

to a separate processor as shown in Figure 2. Each systems are communication patterns and computational

processor conducts part of duty that is required (Lewis or communication ratios. The communication time will

and Hesham 1992). The domain matrices are solved depend on many factors including network structure and

independently. As soon as the calculation complete, network contention. Parallel execution time tpara is

domain matrices from each processor send to master divided into two parts, computational time (tcomp) and

processor for assembly to form global stiftness matrix as communication time (tcomm). tcomp is the time taken to

in Figure 3. When a slave completes one task, it requests compute arithmetic operations such as multiplication and

another task from the master process (Wilkinson and addition operations in parallel algorithm. As all the

Allen 1999). processors doing the operation at the same speed,

172 Copyright © 2009 by University Malaysia Sarawak

THE 6TH INTERNATIONAL CONFERENCE ON INFORMATION TECHNOLOGY IN ASIA, 2009

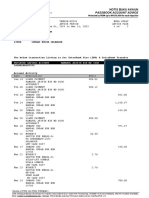

calculation for the tcomm is depending upon the size of Speedup Efficiency

message. The communication cost comes from two major Pro. AGE DIRECT GSRB AGE DIRECT GSRB

phases in sending a message; start-up phase and data 1 1 1 1 1 1 1.00000

2 1.916 1.9 1.70729 0.9577 0.95 0.85365

transmission phase. The total time to send K units of data

3 2.687 2.763356 2.39576 0.8957 0.921119 0.79859

for a given system can be written as, 4 3.439 3.475574 2.96449 0.8598 0.868893 0.74112

tcomm = tstartup + K tdata + tidle 5 4.085 4.18518 3.47141 0.8170 0.81704 0.69428

where tcomm is time needed to communicate a 6 4.737 4.83705 4.02634 0.7895 0.78951 0.67106

message of K bytes. Sometimes, tstartup is referred as the

network latency time (start-up time), and a time to send Table 1: Parallel performances of AGE, Direct and GSRB

a message with no data. It includes time to pack the method

message at source and unpack the message at the

destination as well as to start a point-to-point

communication. Meanwhile, tdata is time to transmit

units of information; the reciprocal of td is the

bandwidth. It is also the transmission time to send one

bytes of data.

The tstartup and tdata are assumed as constants and

measured in bits/sec. tidle is the time for message Figure 5: Speedup and efficiency vs. no. of processors

latency and waiting time for all processors to complete

the works. The measurement of these communication P parallel comp ratio comm comm1 idle

5 31.995 27.645 6.36 4.3497 2.5472 1.8025

costs are done via simple codes that extract the time % 86.41 13.59 7.96 5.63

during message exchange. The research focus on, 10 18.136 13.823 3.20 4.3133 2.5472 1.7661

t parallel = time for parallel execution. % 76.22 23.78 14.04 9.74

15 12.987 9.2151 2.44 3.7719 2.5472 1.2247

t comm1 = α tdata + β tstartup

% 70.96 29.04 19.61 9.43

where, α and β dependent on m and L. 20 10.75 6.9113 1.80 3.8387 2.5472 1.2915

Communication cost for parallel processing is: % 64.29 35.71 23.69 12.01

L m tdata + L ( tstartup + tidle )

Where m is units of data sending across processor Table 2: Time execution, communication, idle times, ratio

and L is number of steps gained during overall of computational time and communication time for the

execution. diffusion model problem.

6 Performance Evaluation and p

2

parallel

3310.85

comp

3009.0

ratio

9.97

comm

301.80

comm1

42.488

idle

259.31

Discussion % 90.88 9.12 1.28 7.83

3 2276.44 2006.0 7.42 270.41 36.557 233.85

In this work, we are using low cost cluster to execute % 88.12 10.56 1.61 10.27

4 1709.95 1504.5 7.32 205.43 40.958 164.47

parallel program. To solve this problem we use 6 to 20 % 87.99 12.01 2.40 9.62

numbers of processors to get numerical result and parallel

performance evaluations. Table 3: Time execution, communication, idle times, ratio

Table 1 depicts the speedup and efficiency for parallel of computational time and communication time for the

AGE, GSRB and direct methods. The speedup and crack propagation problem.

efficiency values of AGE and direct methods get better as

the size of processors increases which are consistent with 7

6

1.2

the results in Figure 5 and 6. Comparable speedups are

5

0.8

Efficiency

Speedup

4

0.6

3

obtained for all applications with 6 processors. It can be 2

1

0.4

0.2

observed that the efficiency of AGE method decreases 0

1 5 10 15 20

0

1 5 10 15 20

Num ber of Processors

faster than the direct method. This could be explained by Num ber of Processors

the fact that several factors lead to increase in idle time (a) (b)

0.03 0.1

such as network load, delay and load imbalance. 0.025

0.09

Temporal performance

0.08

0.07

The stable and highly accurate AGE algorithms are

Effectiveness

0.02

0.06

0.015 0.05

0.04

found to be well suited for parallel implementation on the 0.01

0.005

0.03

0.02

0.01

PVM platform where the data decomposition run 0

1 5 10

Num ber of Processors

15 20

0

1 5 10 15

Number of Processor s

20

asynchronously and concurrently at every time level. The

(c) (d)

AGE sweeps involve tridiagonal systems, which require

the solution of (2 × 2) block systems. Figure 6: Parallel performance measurement for diffusion

model problem in terms of (a) Speedup, (b) Efficiency,

(c) Effectiveness and (d) Temporal performance.
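As a minimal sketch of the measures defined in Section 5.1 and the time split of Section 5.2, the Python below computes speedup, efficiency and effectiveness, and rebuilds the computation/communication breakdown of one table row from its measured parts. The function names and the dictionary keys are hypothetical; the sample values are taken from Table 1 (AGE, 2 processors) and Table 2 (P = 5), and the split of the communication time into `comm1` plus `idle` is an assumption read off the column layout of Table 2.

```python
def speedup(t1, tp):
    """S_p = T_1 / T_p."""
    return t1 / tp


def efficiency(s_p, p):
    """C_p = S_p / p."""
    return s_p / p


def effectiveness(s_p, p, tp):
    """Effectiveness = Speedup / (p * Time)."""
    return s_p / (p * tp)


def time_breakdown(t_comp, t_comm1, t_idle):
    """Split the parallel time into computation and communication parts.

    Assumes t_comm = t_comm1 + t_idle and t_parallel = t_comp + t_comm.
    """
    t_comm = t_comm1 + t_idle
    t_parallel = t_comp + t_comm
    return {
        "t_parallel": t_parallel,
        "t_comm": t_comm,
        "ratio": t_comp / t_comm,               # computation/communication
        "comp_pct": 100.0 * t_comp / t_parallel,
        "comm_pct": 100.0 * t_comm / t_parallel,
    }


# Usage with values quoted from the tables.
age_efficiency = efficiency(1.916, 2)               # Table 1, AGE, 2 processors
row = time_breakdown(27.645, 2.5472, 1.8025)        # Table 2, P = 5
print(round(age_efficiency, 4))
print({name: round(value, 2) for name, value in row.items()})
```

Recomputing a row this way gives a quick consistency check on the tabulated ratio and percentage columns.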


It can be observed in Figure 5 that increasing the number of processors results in a higher speedup. Unlike speedup, the efficiency decreases as more processors are added to the parallel system. Meanwhile, the analysis of effectiveness and temporal performance shows a positive impact as the number of processors increases.

7 Conclusions

In this work, we have presented experimental results illustrating the parallel implementation of the iterative block and direct methods using the PVM programming environment. The contribution of this paper is the parallelization of iterative and direct methods using block systems as an alternative way to solve the large sparse matrix systems arising from finite difference and finite element methods.

The parallel algorithms for the AGE and direct methods are inherently explicit, so the domain decomposition strategy is efficiently utilized and straightforward to implement on a distributed memory architecture. Based on the analysis, it is noted that higher speedups could be expected for large-scale problems. Both the block decomposition techniques and the parallel implementations have the advantage of fault-tolerant features.

These schemes can be effective in reducing data storage accesses for computation and the communication cost on distributed computer systems.

The novelty of this paper is the implementation of parallel iterative and direct methods with high performance evaluations on a distributed memory architecture.

References

[1] Alias, N.: Pembinaan dan Perlaksanaan Algoritma Selari bagi Kaedah TTHS dan TTKS dalam Penyelesaian Persamaan Parabolik pada Sistem Komputer Selari Ingatan Teragih. Universiti Kebangsaan Malaysia. 2004.

[2] Alias, N., Sahimi, M.S., Abdullah, A.R.: Parallel Strategies For The Iterative Alternating Decomposition Explicit Interpolation-Conjugate Gradient Method In Solving Heat Conductor Equation On A Distributed Parallel Computer Systems. Proceedings of The 3rd International Conference On Numerical Analysis in Engineering. 3: 31-38. 2003.

[3] Wilkinson, B., Allen, M.: Parallel Programming: Techniques and Applications Using Networked Workstations and Parallel Computers. Prentice-Hall. 1999.

[4] Dahlquist, G., Bjorck, A.: Numerical Methods. Englewood Cliffs, N. Jersey: Prentice-Hall. 1974.

[5] Evans, D.J., Sahimi, M.S.: The Alternating Group Explicit Iterative Method (AGE) to Solve Parabolic and Hyperbolic Partial Differential Equations. In Annual Review of Numerical Fluid Mechanics and Heat Transfer, Vol. 2. Hemisphere Publication Corporation, New York Washington Philadelphia London. 1989.

[6] Nikishkov, G.P., Kawka, M., Makinouchi, A., Yagawa, G., Yoshimura, S.: Porting an industrial sheet metal forming code to a distributed memory parallel computer. Computers and Structures, 67, pp. 439-449. 1998.

[7] Krysl, P., Belytschko, T.: Modeling of 3D propagating cracks by the element-free Galerkin method. Proc. of Int. Conf. ICES'97, Costa Rica, 128-133. 1997.

[8] Lewis, T., El-Rewini, H.: Introduction to Parallel Programming. Prentice Hall. 1992.

[9] Tan, Y.S.: Parallel Algorithm for One-dimensional Finite Element Discretization of Crack Propagation. Universiti Teknologi Malaysia. 2008.
