
Lesson01 PDF 02

Uploaded by Hyuntae Kim


Today's Lesson 1

Basic Econometrics (1):

Ordinary Least Squares

I Representations of Classical Linear Regression Equations and the Classical Assumptions

I.1 Four Representations of Classical Linear Regression Equations

There are four conventional ways to write the classical linear regression equation, as follows.

(i) Scalar form

$$Y_t = \beta_1 + x_{2t}\beta_2 + x_{3t}\beta_3 + \varepsilon_t, \qquad \varepsilon_t \sim \mathrm{iid}(0, \sigma^2)$$

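(ii) Vector form for each observation

$$Y_t = \begin{bmatrix} 1 & x_{2t} & x_{3t} \end{bmatrix} \begin{bmatrix} \beta_1 \\ \beta_2 \\ \beta_3 \end{bmatrix} + \varepsilon_t = x_t'\beta + \varepsilon_t, \qquad \varepsilon_t \sim \mathrm{iid}(0, \sigma^2)$$

where $x_t = \begin{bmatrix} 1 & x_{2t} & x_{3t} \end{bmatrix}'$ and $\beta = \begin{bmatrix} \beta_1 & \beta_2 & \beta_3 \end{bmatrix}'$.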

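(iii) Vector form for each variable

$$\underbrace{\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_T \end{bmatrix}}_{Y}
= \underbrace{\begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix}}_{X_1}\beta_1
+ \underbrace{\begin{bmatrix} x_{21} \\ x_{22} \\ \vdots \\ x_{2T} \end{bmatrix}}_{X_2}\beta_2
+ \underbrace{\begin{bmatrix} x_{31} \\ x_{32} \\ \vdots \\ x_{3T} \end{bmatrix}}_{X_3}\beta_3
+ \underbrace{\begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_T \end{bmatrix}}_{\varepsilon}$$

where $\varepsilon \sim (0, \sigma^2 I_T)$.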

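(iv) Matrix form

$$\underbrace{\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_T \end{bmatrix}}_{Y}
= \underbrace{\begin{bmatrix} 1 & x_{21} & x_{31} \\ 1 & x_{22} & x_{32} \\ \vdots & \vdots & \vdots \\ 1 & x_{2T} & x_{3T} \end{bmatrix}}_{X}
\underbrace{\begin{bmatrix} \beta_1 \\ \beta_2 \\ \beta_3 \end{bmatrix}}_{\beta}
+ \underbrace{\begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_T \end{bmatrix}}_{\varepsilon}$$

that is, $Y = X\beta + \varepsilon$.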
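As a quick consistency check, the sketch below builds a small simulated data set and computes $Y$ with each of the four representations. It assumes NumPy and uses made-up values for $\beta$, $\sigma$ and the regressors; the variable names `x2`, `x3` and `X1` are illustrative and simply mirror the notation above.

```python
import numpy as np

# Hypothetical simulated data: T observations, an intercept and two regressors x2, x3.
rng = np.random.default_rng(0)
T = 5
beta = np.array([1.0, 0.5, -2.0])    # (beta_1, beta_2, beta_3), illustrative values
x2 = rng.normal(size=T)
x3 = rng.normal(size=T)
eps = rng.normal(scale=0.3, size=T)  # eps_t ~ iid(0, sigma^2) with sigma = 0.3

# (i) Scalar form: Y_t = beta_1 + x_{2t} beta_2 + x_{3t} beta_3 + eps_t
y_scalar = np.array([beta[0] + x2[t] * beta[1] + x3[t] * beta[2] + eps[t] for t in range(T)])

# (ii) Vector form for each observation: Y_t = x_t' beta + eps_t
y_obs = np.array([np.array([1.0, x2[t], x3[t]]) @ beta + eps[t] for t in range(T)])

# (iii) Vector form for each variable: Y = X_1 beta_1 + X_2 beta_2 + X_3 beta_3 + eps
X1 = np.ones(T)
y_var = X1 * beta[0] + x2 * beta[1] + x3 * beta[2] + eps

# (iv) Matrix form: Y = X beta + eps, where X = [X_1 X_2 X_3] is T x 3
X = np.column_stack([X1, x2, x3])
y_mat = X @ beta + eps

print(np.allclose(y_scalar, y_obs), np.allclose(y_obs, y_var), np.allclose(y_var, y_mat))
# prints: True True True
```

The four forms differ only in bookkeeping; the matrix form is the one used in the derivations that follow.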

I.2 The Classical Assumptions

The classical assumptions are assumptions about the explanatory variables and the

stochastic error terms.

A.1 $X$ is strictly exogenous, $E[\varepsilon \mid X] = 0$, and has full column rank (no perfect multicollinearity).

A.2 The disturbances are mutually independent and have constant variance at every sample point; both conditions can be combined in the single statement $\varepsilon \mid X \sim (0, \sigma^2 I_T)$.

In addition to these assumptions, we make two further ones: (i) $X$ is nonstochastic, and (ii) the error terms are normally distributed, i.e. $\varepsilon \sim N(0, \sigma^2 I_T)$. These assumptions may look very restrictive, but the properties of the estimators derived under these stronger assumptions are extremely useful for understanding the large-sample properties of the estimators under the classical assumptions.

II OLS Estimator

The OLS estimator $\hat{\beta}$ minimizes the residual sum of squares $e'e$, where $e = Y - Xb$. Namely,

$$\hat{\beta} = \arg\min_{b} RSS, \qquad RSS = e'e.$$

Then,

$$\begin{aligned}
RSS = e'e &= (Y - Xb)'(Y - Xb) \\
&= Y'Y - b'X'Y - Y'Xb + b'X'Xb \\
&= Y'Y - 2b'X'Y + b'X'Xb
\end{aligned}$$

since the transpose of a scalar is the scalar itself, and thus $b'X'Y = Y'Xb$. The first-order conditions are

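$$\frac{\partial RSS}{\partial b} = -2X'Y + 2X'Xb = 0 \quad\Longrightarrow\quad (X'X)\,b = X'Y,$$

which, since $X'X$ is invertible when $X$ has full column rank (A.1), gives the OLS estimator

$$\hat{\beta} = (X'X)^{-1}X'Y.$$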
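A minimal NumPy sketch of this derivation, assuming simulated data with an intercept and two regressors (the coefficient values are illustrative). It solves the normal equations $(X'X)b = X'Y$ directly and checks that the first-order condition holds at $\hat{\beta}$. Calling `np.linalg.solve` instead of forming $(X'X)^{-1}$ explicitly is the usual numerically safer choice; the result is cross-checked against `np.linalg.lstsq`.

```python
import numpy as np

# Hypothetical simulated data with an intercept and two regressors.
rng = np.random.default_rng(1)
T = 200
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])
beta = np.array([1.0, 0.5, -2.0])             # illustrative "true" coefficients
Y = X @ beta + rng.normal(scale=0.3, size=T)

# Normal equations (X'X) b = X'Y, solved without forming the inverse explicitly.
beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)

# First-order condition: dRSS/db = -2X'Y + 2X'Xb is (numerically) zero at b = beta_hat.
grad = -2 * X.T @ Y + 2 * X.T @ X @ beta_hat
print(beta_hat)
print(np.allclose(grad, 0.0, atol=1e-8))

# Cross-check against NumPy's generic least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, Y, rcond=None)
print(np.allclose(beta_hat, beta_lstsq))
```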

Remark 1 Let $M_X = I_T - X(X'X)^{-1}X'$. It follows that $M_X Y = Y - X\hat{\beta}$ is the vector of residuals when $Y$ is regressed on $X$. Also note that $\hat{\varepsilon} = M_X Y = M_X(X\beta + \varepsilon) = M_X\varepsilon$. $M_X$ is a symmetric ($M_X' = M_X$) and idempotent ($M_X M_X = M_X$) matrix with the properties that $M_X X = 0$ and $M_X\hat{\varepsilon} = M_X(Y - X\hat{\beta}) = M_X Y = \hat{\varepsilon}$, since $M_X X = 0$.
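The properties listed in Remark 1 can be checked numerically. The sketch below assumes NumPy and simulated data; `M` stands for $M_X$ and `resid` for $\hat{\varepsilon}$.

```python
import numpy as np

rng = np.random.default_rng(2)
T = 30
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])
eps = rng.normal(size=T)
Y = X @ np.array([1.0, 0.5, -2.0]) + eps      # illustrative data

# Residual-maker matrix M_X = I_T - X (X'X)^{-1} X'
M = np.eye(T) - X @ np.linalg.solve(X.T @ X, X.T)

beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)
resid = Y - X @ beta_hat                      # OLS residuals eps_hat

print(np.allclose(M, M.T))             # symmetric: M_X' = M_X
print(np.allclose(M @ M, M))           # idempotent: M_X M_X = M_X
print(np.allclose(M @ X, 0.0))         # M_X X = 0
print(np.allclose(M @ Y, resid))       # M_X Y = eps_hat
print(np.allclose(M @ eps, resid))     # M_X eps = eps_hat
print(np.allclose(M @ resid, resid))   # M_X eps_hat = eps_hat
```

Forming the full $T \times T$ matrix is fine for illustration but wasteful for large $T$; in practice residuals are computed as $Y - X\hat{\beta}$.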

Remark 2 The trace of an $n \times n$ square matrix $A$, denoted by $\mathrm{tr}(A)$, is defined as the sum of the diagonal elements of $A$. Then, by definition, $\mathrm{tr}(c) = c$ for any scalar $c$ (viewed as a $1 \times 1$ matrix).

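Remark 3 $\mathrm{tr}(ABC) = \mathrm{tr}(BCA) = \mathrm{tr}(CAB)$ for conformable matrices $A$, $B$, $C$; that is, the trace is invariant under cyclic permutations of a product.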
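A small numerical illustration of Remarks 2 and 3, assuming NumPy and random matrices. Note that the three products in the cyclic identity need not even have the same dimensions; only their traces agree. The last check is the exact manipulation used in Remark 4 below, with a random (not necessarily symmetric) `M`.

```python
import numpy as np

rng = np.random.default_rng(3)
# Conformable but non-square matrices: A is 2x3, B is 3x4, C is 4x2.
A = rng.normal(size=(2, 3))
B = rng.normal(size=(3, 4))
C = rng.normal(size=(4, 2))

t_abc = np.trace(A @ B @ C)   # trace of a 2x2 product
t_bca = np.trace(B @ C @ A)   # trace of a 3x3 product
t_cab = np.trace(C @ A @ B)   # trace of a 4x4 product
print(np.isclose(t_abc, t_bca), np.isclose(t_bca, t_cab))

# The scalar eps' M eps equals its own trace (Remark 2),
# and cycling the factors (Remark 3) gives tr(eps eps' M).
eps = rng.normal(size=(5, 1))
M = rng.normal(size=(5, 5))
quad = (eps.T @ M @ eps).item()
print(np.isclose(quad, np.trace(eps @ eps.T @ M)))
```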

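Remark 4 (Estimator of $\sigma^2$) Letting $k$ denote the number of regressors, $\hat{\sigma}^2 = \hat{\varepsilon}'\hat{\varepsilon}/(T - k)$ is an unbiased estimator of $\sigma^2$, since

$$\begin{aligned}
E[\hat{\varepsilon}'\hat{\varepsilon}] &= E[\varepsilon' M_X' M_X \varepsilon] \\
&= E[\varepsilon' M_X \varepsilon] && \text{since } M_X \text{ is idempotent and symmetric} \\
&= E[\mathrm{tr}(\varepsilon' M_X \varepsilon)] && \text{by Remark 2} \\
&= E[\mathrm{tr}(\varepsilon\varepsilon' M_X)] && \text{by Remark 3} \\
&= \mathrm{tr}(E[\varepsilon\varepsilon']\,M_X) = \sigma^2\,\mathrm{tr}(M_X) && \text{since } X \text{ is nonstochastic} \\
&= \sigma^2\,\mathrm{tr}(I_T) - \sigma^2\,\mathrm{tr}\big(X(X'X)^{-1}X'\big) \\
&= \sigma^2 T - \sigma^2\,\mathrm{tr}\big((X'X)^{-1}X'X\big) && \text{by Remark 3} \\
&= \sigma^2 (T - k)
\end{aligned}$$

and hence we have

$$E\left[\frac{\hat{\varepsilon}'\hat{\varepsilon}}{T - k}\right] = \sigma^2.$$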
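The result can be illustrated with a small Monte Carlo experiment. The sketch below assumes NumPy, a fixed simulated design and illustrative values for $T$, $\sigma$ and $\beta$; it checks $\mathrm{tr}(M_X) = T - k$ and compares the average of $\hat{\varepsilon}'\hat{\varepsilon}/(T-k)$ with the average of the naive $\hat{\varepsilon}'\hat{\varepsilon}/T$.

```python
import numpy as np

rng = np.random.default_rng(4)
T, sigma = 20, 2.0
# Nonstochastic design: X is drawn once and held fixed across replications.
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])
k = X.shape[1]
beta = np.array([1.0, 0.5, -2.0])
M = np.eye(T) - X @ np.linalg.solve(X.T @ X, X.T)
print(np.trace(M), T - k)                   # tr(M_X) = T - k, up to rounding

# Monte Carlo: R independent error vectors, one per row.
R = 20000
eps = rng.normal(scale=sigma, size=(R, T))
Y = X @ beta + eps                          # row r is Y for replication r
resid = Y @ M                               # row r is (M_X Y_r)' since M_X is symmetric
rss = np.sum(resid**2, axis=1)

s2_unbiased = rss / (T - k)                 # divisor T - k, as in Remark 4
s2_naive = rss / T                          # divisor T, biased downward
print(s2_unbiased.mean(), s2_naive.mean(), sigma**2)
```

With these settings the first average should be close to $\sigma^2 = 4$, while the naive one should be close to $\sigma^2 (T-k)/T = 3.4$.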


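By making the additional assumption that $\varepsilon$ is normally distributed, we have

$$\begin{aligned}
\hat{\beta} &= (X'X)^{-1}X'Y \\
&= (X'X)^{-1}X'(X\beta + \varepsilon) \\
&= \beta + (X'X)^{-1}X'\varepsilon \;\sim\; N\big(\beta,\ \sigma^2 (X'X)^{-1}\big)
\end{aligned}$$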
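To see this sampling distribution at work, the sketch below (assuming NumPy, a fixed simulated $X$ and normal errors with illustrative parameters) draws many error vectors, recomputes $\hat{\beta}$ each time, and compares the simulated mean and covariance with $\beta$ and $\sigma^2 (X'X)^{-1}$. The name `A_ols` for $(X'X)^{-1}X'$ is introduced here for illustration only.

```python
import numpy as np

rng = np.random.default_rng(5)
T, sigma = 25, 1.5
# X held fixed across replications (nonstochastic regressors).
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])
beta = np.array([1.0, 0.5, -2.0])
A_ols = np.linalg.solve(X.T @ X, X.T)        # (X'X)^{-1} X', so beta_hat = A_ols @ Y

R = 50000
eps = rng.normal(scale=sigma, size=(R, T))   # normal errors, one replication per row
beta_hats = (X @ beta + eps) @ A_ols.T       # row r is beta_hat' for replication r

print(beta_hats.mean(axis=0))                # should be close to beta
print(np.cov(beta_hats, rowvar=False))       # simulated covariance matrix
print(sigma**2 * np.linalg.inv(X.T @ X))     # theoretical sigma^2 (X'X)^{-1}
```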

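For each individual regression coefficient,

$$\hat{\beta}_i \sim N\big(\beta_i,\ \sigma^2 (X'X)^{-1}_{ii}\big)$$

where $\sigma^2 (X'X)^{-1}_{ii}$ is the $(i, i)$ element of $\sigma^2 (X'X)^{-1}$. In general, however, $\sigma^2$ is unknown, and thus, using $\hat{\sigma}^2$ from Remark 4, the estimated variance of $\hat{\beta}$ is

$$\widehat{\mathrm{Var}}(\hat{\beta}) = \hat{\sigma}^2 (X'X)^{-1}.$$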
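A minimal sketch of how the estimated variance is used in practice, assuming NumPy and simulated data: the standard error of each $\hat{\beta}_i$ is the square root of the $(i, i)$ element of $\hat{\sigma}^2 (X'X)^{-1}$.

```python
import numpy as np

rng = np.random.default_rng(6)
T = 100
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])
k = X.shape[1]
Y = X @ np.array([1.0, 0.5, -2.0]) + rng.normal(scale=0.5, size=T)  # illustrative data

XtX_inv = np.linalg.inv(X.T @ X)
beta_hat = XtX_inv @ X.T @ Y
resid = Y - X @ beta_hat
sigma2_hat = resid @ resid / (T - k)      # unbiased estimator of sigma^2 (Remark 4)

var_hat = sigma2_hat * XtX_inv            # estimated Var(beta_hat) = sigma2_hat (X'X)^{-1}
se = np.sqrt(np.diag(var_hat))            # standard errors: square roots of the (i, i) elements
for i in range(k):
    print(f"beta_{i + 1}: estimate {beta_hat[i]:.4f}, standard error {se[i]:.4f}")
```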

II.1 Gauss-Markov Theorem

The OLS estimator is a linear combination of the dependent variables and hence a linear combination of the error terms. As shown above, it is unbiased, so it belongs to the class of linear unbiased estimators. Its distinguishing feature is that its sampling variance is the minimum that can be achieved by any linear unbiased estimator under the classical assumptions. The Gauss-Markov Theorem is the fundamental least-squares theorem. It states that, under the classical assumptions, no other linear unbiased estimator of $\beta$ can have a smaller sampling variance than the least-squares estimator: for any linear unbiased estimator $\tilde{\beta}$, the matrix $\mathrm{Var}(\tilde{\beta}) - \mathrm{Var}(\hat{\beta})$ is positive semidefinite. The OLS estimator is therefore said to be BLUE (best linear unbiased estimator).
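The theorem can be illustrated with exact covariance matrices rather than simulation. The sketch below assumes NumPy, a simulated $X$, and $\mathrm{Var}(\varepsilon) = \sigma^2 I_T$. As a competing linear unbiased estimator it uses, purely for illustration, weighted least squares with arbitrary positive weights; any matrix $A$ with $AX = I_k$ would serve. Both estimators have the form $AY$, so their covariance is $\sigma^2 AA'$.

```python
import numpy as np

rng = np.random.default_rng(7)
T, sigma2 = 50, 1.0
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])

# OLS is linear in Y: beta_hat = A_ols @ Y with A_ols = (X'X)^{-1} X'.
A_ols = np.linalg.solve(X.T @ X, X.T)

# A competing linear estimator (hypothetical choice for illustration): weighted least
# squares with arbitrary positive weights w_t, A_w = (X'WX)^{-1} X'W, W = diag(w).
w = rng.uniform(0.2, 5.0, size=T)
XtW = X.T * w                              # X'W without forming the T x T matrix W
A_w = np.linalg.solve(XtW @ X, XtW)

# Both are unbiased: A X = I_k, so E[A Y] = A X beta = beta when E[eps] = 0.
print(np.allclose(A_ols @ X, np.eye(3)), np.allclose(A_w @ X, np.eye(3)))

# With Var(eps) = sigma2 * I_T, the covariance of A Y is sigma2 * A A'.
V_ols = sigma2 * A_ols @ A_ols.T
V_w = sigma2 * A_w @ A_w.T

# Gauss-Markov: V_w - V_ols is positive semidefinite (eigenvalues >= 0 up to rounding).
min_eig = np.linalg.eigvalsh(V_w - V_ols).min()
print(min_eig >= -1e-12)
print(np.diag(V_w) - np.diag(V_ols))       # each variance is at least as large as under OLS
```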

III Measures of Goodness of Fit

The vector of the dependent variable $Y$ can be decomposed into the part explained by the regressors and the unexplained part:

$$Y = \hat{Y} + \hat{\varepsilon}, \qquad \text{where } \hat{Y} = X\hat{\beta}.$$

Remark 5 $\hat{Y}$ and $\hat{\varepsilon}$ are orthogonal because

$$\hat{Y}'\hat{\varepsilon} = \hat{\beta}'X'\hat{\varepsilon} = \hat{\beta}'X'M_X\varepsilon = 0, \qquad \text{since } X'M_X = 0.$$

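It then follows from Remark 5 that $Y'Y = (\hat{Y} + \hat{\varepsilon})'(\hat{Y} + \hat{\varepsilon}) = \hat{Y}'\hat{Y} + \hat{\varepsilon}'\hat{\varepsilon}$, because the cross terms vanish. Subtracting $T\bar{Y}^2$ from both sides, where $\bar{Y}$ is the sample mean of the dependent variable, gives

$$\underbrace{Y'Y - T\bar{Y}^2}_{TSS} = \underbrace{\hat{Y}'\hat{Y} - T\bar{Y}^2}_{ESS} + \underbrace{\hat{\varepsilon}'\hat{\varepsilon}}_{RSS}.$$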
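A numerical check of Remark 5 and the decomposition, assuming NumPy and simulated data. Because this $X$ contains an intercept column, the fitted values have the same mean as $Y$, so $ESS$ also equals $\sum_t (\hat{Y}_t - \bar{Y})^2$ and $TSS$ equals $\sum_t (Y_t - \bar{Y})^2$.

```python
import numpy as np

rng = np.random.default_rng(8)
T = 40
X = np.column_stack([np.ones(T), rng.normal(size=T), rng.normal(size=T)])  # includes an intercept
Y = X @ np.array([1.0, 0.5, -2.0]) + rng.normal(size=T)                   # illustrative data

beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)
Y_fit = X @ beta_hat          # Y_hat = X beta_hat
resid = Y - Y_fit             # eps_hat

Ybar = Y.mean()
TSS = Y @ Y - T * Ybar**2
ESS = Y_fit @ Y_fit - T * Ybar**2
RSS = resid @ resid

print(np.isclose(Y_fit @ resid, 0.0))   # Remark 5: Y_hat' eps_hat = 0
print(np.isclose(TSS, ESS + RSS))       # TSS = ESS + RSS
```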

The coefficient of multiple correlation $R$ is defined as the positive square root of

$$R^2 = \frac{ESS}{TSS} = 1 - \frac{RSS}{TSS}.$$

$R^2$ is the proportion of the total variation of $Y$ explained by the linear combination of the regressors. This value never decreases when additional regressors are included, even if the added regressors are irrelevant to the dependent variable. However, the adjusted $R^2$, denoted by $\bar{R}^2$, may decrease with the addition of variables of low explanatory power:

$$\bar{R}^2 = 1 - \frac{RSS}{TSS} \cdot \frac{T - 1}{T - k}$$
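The contrast between $R^2$ and $\bar{R}^2$ can be seen by adding a pure-noise regressor to a model. This is a sketch assuming NumPy and simulated data; `r2_and_adjusted` is a hypothetical helper written for this example. $R^2$ cannot fall when the column is added, while $\bar{R}^2$ may or may not fall depending on the draw.

```python
import numpy as np

def r2_and_adjusted(X, Y):
    """Return (R^2, adjusted R^2) for the OLS fit of Y on X; X is assumed to contain an intercept."""
    T, k = X.shape
    beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)
    resid = Y - X @ beta_hat
    rss = resid @ resid
    tss = Y @ Y - T * Y.mean() ** 2
    r2 = 1 - rss / tss
    r2_adj = 1 - (rss / tss) * (T - 1) / (T - k)
    return r2, r2_adj

rng = np.random.default_rng(9)
T = 30
x2 = rng.normal(size=T)
Y = 1.0 + 0.8 * x2 + rng.normal(size=T)                  # illustrative data
X_small = np.column_stack([np.ones(T), x2])
X_big = np.column_stack([X_small, rng.normal(size=T)])   # adds an irrelevant regressor

r2_s, r2adj_s = r2_and_adjusted(X_small, Y)
r2_b, r2adj_b = r2_and_adjusted(X_big, Y)
print(f"R^2:          {r2_s:.4f} -> {r2_b:.4f}")         # cannot fall
print(f"adjusted R^2: {r2adj_s:.4f} -> {r2adj_b:.4f}")   # may fall
```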

Two frequently used criteria for comparing the fit of various specifications are the Schwarz criterion

$$SC = \ln\frac{\hat{\varepsilon}'\hat{\varepsilon}}{T} + \frac{k}{T}\ln T$$

and the Akaike information criterion

$$AIC = \ln\frac{\hat{\varepsilon}'\hat{\varepsilon}}{T} + \frac{2k}{T}.$$

It is important to note that these criteria favor a model with a smaller sum of squared residuals, but each criterion adds a penalty for model complexity, measured by the number of regressors; the specification with the smaller value of the criterion is preferred. Most statistical software routinely reports these criteria.
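A minimal sketch computing both criteria exactly as written above, assuming NumPy and simulated data in which `x3` is irrelevant by construction; `info_criteria` is a hypothetical helper for this example. Software packages often report differently scaled versions (for example, based on the log-likelihood), so their numbers are not directly comparable with these, although the ranking logic is the same.

```python
import numpy as np

def info_criteria(X, Y):
    """SC and AIC in the per-observation form used in the text:
    SC = ln(RSS/T) + (k/T) ln T,  AIC = ln(RSS/T) + 2k/T."""
    T, k = X.shape
    beta_hat = np.linalg.solve(X.T @ X, X.T @ Y)
    resid = Y - X @ beta_hat
    log_s2 = np.log(resid @ resid / T)
    return log_s2 + k / T * np.log(T), log_s2 + 2 * k / T

rng = np.random.default_rng(10)
T = 100
x2, x3 = rng.normal(size=T), rng.normal(size=T)
Y = 1.0 + 0.8 * x2 + rng.normal(size=T)          # x3 is irrelevant by construction

candidates = {
    "const + x2": np.column_stack([np.ones(T), x2]),
    "const + x2 + x3": np.column_stack([np.ones(T), x2, x3]),
}
for name, X in candidates.items():
    sc, aic = info_criteria(X, Y)
    print(f"{name:16s} SC = {sc:.4f}  AIC = {aic:.4f}")   # smaller is preferred
```

Since $\ln T > 2$ whenever $T \geq 8$, the Schwarz criterion penalizes each extra regressor more heavily than AIC in all but very small samples.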

5

Potrebbero piacerti anche

- Ordinary Least Squares: Rómulo A. ChumaceroDocumento50 pagineOrdinary Least Squares: Rómulo A. ChumaceroMatías Andrés AlfaroNessuna valutazione finora

- Asymptotic Properties of Solutions To Hyperbolic Equations: Michael RuzhanskyDocumento10 pagineAsymptotic Properties of Solutions To Hyperbolic Equations: Michael RuzhanskyDandi BachtiarNessuna valutazione finora

- Lecture 2: Linear Random e Ects and The Hausman TestDocumento6 pagineLecture 2: Linear Random e Ects and The Hausman TestnickedwinjohnsonNessuna valutazione finora

- ODE Systems of LCC Type and StabilityDocumento13 pagineODE Systems of LCC Type and StabilitylambdaStudent_eplNessuna valutazione finora

- State EstimationDocumento34 pagineState EstimationFengxing ZhuNessuna valutazione finora

- OLSDocumento18 pagineOLSTzak TzakidisNessuna valutazione finora

- Section II. Geometry of Determinants 347Documento10 pagineSection II. Geometry of Determinants 347Worse To Worst SatittamajitraNessuna valutazione finora

- Chapter10 G PDFDocumento88 pagineChapter10 G PDFSavya MittalNessuna valutazione finora

- Simpli Ed Derivation of The Heston ModelDocumento6 pagineSimpli Ed Derivation of The Heston Modelmirando93Nessuna valutazione finora

- 6 Observers: 6.1 Full-Order Observer DesignDocumento7 pagine6 Observers: 6.1 Full-Order Observer DesignSherigoal ElgoharyNessuna valutazione finora

- Explicit Method For Solving Parabolic PDE: T L X Where X U C T UDocumento9 pagineExplicit Method For Solving Parabolic PDE: T L X Where X U C T UAshokNessuna valutazione finora

- X X B X B X B y X X B X B N B Y: QMDS 202 Data Analysis and ModelingDocumento6 pagineX X B X B X B y X X B X B N B Y: QMDS 202 Data Analysis and ModelingFaithNessuna valutazione finora

- Discrete Time Random Processes: 4.1 (A) UsingDocumento16 pagineDiscrete Time Random Processes: 4.1 (A) UsingSudipta GhoshNessuna valutazione finora

- On The Solution of The Differential Equation Occurring in The Problem of Heat Convection in Laminar Flow Through A TubeDocumento4 pagineOn The Solution of The Differential Equation Occurring in The Problem of Heat Convection in Laminar Flow Through A TubeJess McAllister AlicandoNessuna valutazione finora

- Tri Go No Me Tri ADocumento9 pagineTri Go No Me Tri AEmilio Sordo ZabayNessuna valutazione finora

- Mathematical Tools LEC NOTES PDFDocumento41 pagineMathematical Tools LEC NOTES PDFtweeter_shadowNessuna valutazione finora

- Multiple Regression Analysis: I 0 1 I1 K Ik IDocumento30 pagineMultiple Regression Analysis: I 0 1 I1 K Ik Iajayikayode100% (1)

- Analisis Regresi Sederhana Dan Berganda (Teori Dan Praktik)Documento53 pagineAnalisis Regresi Sederhana Dan Berganda (Teori Dan Praktik)Rizki Fadlina HarahapNessuna valutazione finora

- Appendix C Lorentz Group and The Dirac AlgebraDocumento13 pagineAppendix C Lorentz Group and The Dirac AlgebraapuntesfisymatNessuna valutazione finora

- Sengupta Mixing of AnswersDocumento16 pagineSengupta Mixing of AnswersSubhrodip SenguptaNessuna valutazione finora

- Answer Key, Problem Set 1-Full Version: Chemistry 122 Mines, Spring, 2012Documento10 pagineAnswer Key, Problem Set 1-Full Version: Chemistry 122 Mines, Spring, 2012Jules BrunoNessuna valutazione finora

- Real Analysis and Probability: Solutions to ProblemsDa EverandReal Analysis and Probability: Solutions to ProblemsNessuna valutazione finora

- Appendix D Basic Basic Engineering Engineering Calculations CalculationsDocumento25 pagineAppendix D Basic Basic Engineering Engineering Calculations CalculationsaakashtrivediNessuna valutazione finora

- Stability Analysis For VAR SystemsDocumento11 pagineStability Analysis For VAR SystemsCristian CernegaNessuna valutazione finora

- Prime Spacing and The Hardy-Littlewood Conjecture B: ) Log ( ) Log (Documento17 paginePrime Spacing and The Hardy-Littlewood Conjecture B: ) Log ( ) Log (idiranatbicNessuna valutazione finora

- A Cantor Function ConstructedDocumento12 pagineA Cantor Function ConstructedEgregio TorNessuna valutazione finora

- Trigo IdentitiesDocumento29 pagineTrigo IdentitiesKunalKaushikNessuna valutazione finora

- CLS ENG 23 24 XI Phy Target 1 Level 1 Chapter 1Documento25 pagineCLS ENG 23 24 XI Phy Target 1 Level 1 Chapter 1sarthakyedlawar04Nessuna valutazione finora

- Kevin Dennis and Steven Schlicker - Sierpinski N-GonsDocumento7 pagineKevin Dennis and Steven Schlicker - Sierpinski N-GonsIrokkNessuna valutazione finora

- Unit I Mathematical Tools 1.1 Basic Mathematics For Physics: I. Quadratic Equation and Its SolutionDocumento16 pagineUnit I Mathematical Tools 1.1 Basic Mathematics For Physics: I. Quadratic Equation and Its SolutionJit AggNessuna valutazione finora

- Analysis of Continuous Systems Diffebential and Vabiational FobmulationsDocumento18 pagineAnalysis of Continuous Systems Diffebential and Vabiational FobmulationsDanielNessuna valutazione finora

- Equations of StateDocumento42 pagineEquations of StateEng MohammedNessuna valutazione finora

- Vibrations of StructuresDocumento9 pagineVibrations of StructuresRafaAlmeidaNessuna valutazione finora

- Inverse Mapping Theorem PDFDocumento9 pagineInverse Mapping Theorem PDFadityabaid4Nessuna valutazione finora

- Chapter 02Documento14 pagineChapter 02Joe Di NapoliNessuna valutazione finora

- Final Exam Solutions: N×N N×P M×NDocumento37 pagineFinal Exam Solutions: N×N N×P M×NMorokot AngelaNessuna valutazione finora

- 3fa2s 2012 AbrirDocumento8 pagine3fa2s 2012 AbrirOmaguNessuna valutazione finora

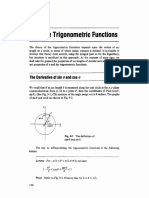

- 9 The Trigonometric Functions: The Derivative of Sin and CosDocumento9 pagine9 The Trigonometric Functions: The Derivative of Sin and CosChandler ManlongatNessuna valutazione finora

- Introduction To Linear TransformationDocumento7 pagineIntroduction To Linear TransformationpanbuuNessuna valutazione finora

- Chapter 3 (Seborg Et Al.)Documento20 pagineChapter 3 (Seborg Et Al.)Jamel CayabyabNessuna valutazione finora

- Process Systems Steady-State Modeling and DesignDocumento12 pagineProcess Systems Steady-State Modeling and DesignajayikayodeNessuna valutazione finora

- I. Exercise 1: R Na0 R A0Documento4 pagineI. Exercise 1: R Na0 R A0jisteeleNessuna valutazione finora

- HW 9 SolutionsDocumento7 pagineHW 9 SolutionsCody SageNessuna valutazione finora

- Final Exam: N+ N Sin NDocumento9 pagineFinal Exam: N+ N Sin NtehepiconeNessuna valutazione finora

- Linear Algebra Chapter 3 - DeTERMINANTSDocumento24 pagineLinear Algebra Chapter 3 - DeTERMINANTSdaniel_bashir808Nessuna valutazione finora

- Case 1Documento13 pagineCase 1HimmahSekarEagNessuna valutazione finora

- Pend PDFDocumento4 paginePend PDFVivek GodanNessuna valutazione finora

- Classical Least Squares TheoryDocumento38 pagineClassical Least Squares TheoryRyan TagaNessuna valutazione finora

- Chapter 10Documento23 pagineChapter 10ahmetshalaNessuna valutazione finora

- 5 Amt 2 Rev SolDocumento7 pagine5 Amt 2 Rev SolZakria ToorNessuna valutazione finora

- Process Systems Steady-State Modeling and DesignDocumento12 pagineProcess Systems Steady-State Modeling and DesignbeichNessuna valutazione finora

- Concourse 18.03 - Lecture #9: MX CX KX X X XDocumento4 pagineConcourse 18.03 - Lecture #9: MX CX KX X X XAna Petrovic TomicNessuna valutazione finora

- MixMax GeneratorDocumento7 pagineMixMax GeneratorJenny SniNessuna valutazione finora

- Linear Independence and The Wronskian: F and G Are Multiples of Each OtherDocumento27 pagineLinear Independence and The Wronskian: F and G Are Multiples of Each OtherDimuthu DharshanaNessuna valutazione finora

- Qualify For BasisDocumento3 pagineQualify For BasisAliAlMisbahNessuna valutazione finora

- Chapter 3 Laplace TransformDocumento20 pagineChapter 3 Laplace TransformKathryn Jing LinNessuna valutazione finora

- HW 1Documento5 pagineHW 1tneshoeNessuna valutazione finora

- Problem Set #1. Due Sept. 9 2020.: MAE 501 - Fall 2020. Luc Deike, Anastasia Bizyaeva, Jiarong Wu September 2, 2020Documento3 pagineProblem Set #1. Due Sept. 9 2020.: MAE 501 - Fall 2020. Luc Deike, Anastasia Bizyaeva, Jiarong Wu September 2, 2020Francisco SáenzNessuna valutazione finora

- Ten-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesDa EverandTen-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesNessuna valutazione finora

- The Big Problems FileDocumento197 pagineThe Big Problems FileMichael MazzeoNessuna valutazione finora

- Sticky Price ModelsDocumento16 pagineSticky Price ModelsHyuntae KimNessuna valutazione finora

- Debreu - Theory of ValueDocumento120 pagineDebreu - Theory of ValueHyuntae KimNessuna valutazione finora

- Chen02 - Multivariable and Ventor AnalysisDocumento164 pagineChen02 - Multivariable and Ventor AnalysisHyuntae KimNessuna valutazione finora

- Fundamentals of AnalysisDocumento100 pagineFundamentals of AnalysisHyuntae KimNessuna valutazione finora

- D11 - D12-0525-MAT3017 - Statistical Inferences and Series of FunctionDocumento2 pagineD11 - D12-0525-MAT3017 - Statistical Inferences and Series of FunctionVÉÑÔMNessuna valutazione finora

- 7th Grade Center, Shape, Spread Descriptions 7.12ADocumento6 pagine7th Grade Center, Shape, Spread Descriptions 7.12AAmritansha SinhaNessuna valutazione finora

- Analysing Moderated Mediation Effects: Marketing ApplicationsDocumento42 pagineAnalysing Moderated Mediation Effects: Marketing ApplicationsVasan MohanNessuna valutazione finora

- Tugas Besar Adi Gemilang (H12114021)Documento19 pagineTugas Besar Adi Gemilang (H12114021)AdiGemilangNessuna valutazione finora

- Maths IV PDE&Prob StatisticsDocumento3 pagineMaths IV PDE&Prob StatisticsIshaNessuna valutazione finora

- TVPS 3 (Tablas Correctoras)Documento14 pagineTVPS 3 (Tablas Correctoras)Rosa Vilanova100% (3)

- Chap 2Documento28 pagineChap 2Abdoul Quang CuongNessuna valutazione finora

- Statistics: Introduction To RegressionDocumento14 pagineStatistics: Introduction To RegressionJuanNessuna valutazione finora

- Tabel Student T PDFDocumento3 pagineTabel Student T PDFStefany Luke100% (1)

- BA9201 Statistics For Managemant JAN 2012Documento10 pagineBA9201 Statistics For Managemant JAN 2012Sivakumar NatarajanNessuna valutazione finora

- Q1. (Maximum Marks:4) (Non-Calculator)Documento15 pagineQ1. (Maximum Marks:4) (Non-Calculator)Yashika AgarwalNessuna valutazione finora

- Statistical Methods For Decision MakingDocumento11 pagineStatistical Methods For Decision MakingsurajkadamNessuna valutazione finora

- Chapter 7 - Regression AnalysisDocumento111 pagineChapter 7 - Regression AnalysisNicole Agustin100% (1)

- Demand ForecastingDocumento26 pagineDemand Forecastingparmeshwar mahatoNessuna valutazione finora

- Likelihood Ratio, Wald, and Lagrange Multiplier (Score) TestsDocumento23 pagineLikelihood Ratio, Wald, and Lagrange Multiplier (Score) TestsMandar Priya PhatakNessuna valutazione finora

- Chapter 3 - Methodology Final Visalakshi PDFDocumento34 pagineChapter 3 - Methodology Final Visalakshi PDFSatya KumarNessuna valutazione finora

- Homework # 4 Question # 1Documento8 pagineHomework # 4 Question # 1Abdul MueedNessuna valutazione finora

- Scikit Learn DocsDocumento2.060 pagineScikit Learn Docsgabbu_Nessuna valutazione finora

- Process Capability Sixpack Report For 11Documento1 paginaProcess Capability Sixpack Report For 11tapanNessuna valutazione finora

- Statistic DataDocumento2 pagineStatistic DataasbiniNessuna valutazione finora

- Forecasting For Economics and Business 1St Edition Gloria Gonzalez Rivera Solutions Manual Full Chapter PDFDocumento38 pagineForecasting For Economics and Business 1St Edition Gloria Gonzalez Rivera Solutions Manual Full Chapter PDFmiguelstone5tt0f100% (12)

- Lab 11 ANOVA 2way WorksheetDocumento2 pagineLab 11 ANOVA 2way WorksheetPohuyistNessuna valutazione finora

- Section 1: Multiple Choice Questions (1 X 12) Time: 50 MinutesDocumento7 pagineSection 1: Multiple Choice Questions (1 X 12) Time: 50 MinutesGiri PrasadNessuna valutazione finora

- AAIC SyllabusDocumento19 pagineAAIC SyllabusViswam ReddyNessuna valutazione finora

- Om Chap 4Documento61 pagineOm Chap 4Games FunNessuna valutazione finora

- Comparision Table MAD Mape TS Range Moving Average Simple Exponential Smoothening Moving Average Week Demand Level Forecast Error Absolute ErrorDocumento32 pagineComparision Table MAD Mape TS Range Moving Average Simple Exponential Smoothening Moving Average Week Demand Level Forecast Error Absolute ErrorMahima SharmaNessuna valutazione finora

- Uji Akar Unit - Pendekatan Augmented Dickey-Fuller (ADF)Documento16 pagineUji Akar Unit - Pendekatan Augmented Dickey-Fuller (ADF)fikhi cahyaniNessuna valutazione finora

- Testing HypothisisDocumento41 pagineTesting HypothisisProtikNessuna valutazione finora

- UE20EC352-Machine Learning & Applications Unit 3 - Non Parametric Supervised LearningDocumento117 pagineUE20EC352-Machine Learning & Applications Unit 3 - Non Parametric Supervised LearningSai Satya Krishna PathuriNessuna valutazione finora

- Part 3: CFA & SEM Models: Michael FriendlyDocumento30 paginePart 3: CFA & SEM Models: Michael FriendlySyarief Fajaruddin syarieffajaruddin.2020Nessuna valutazione finora