IJCSNS International Journal of Computer Science and Network Security, VOL.7 No.10, October 2007

An Efficient Method of Data Inconsistency Check for Very Large Relations

Hyontai Sug

Dongseo University, Busan, South Korea

Manuscript received October 5, 2007. Manuscript revised October 20, 2007.

Summary

In order to deal with the data inconsistency problem in very large relations, a new method is suggested that helps users find inconsistent data conveniently, based on the functional dependencies of the corresponding relation variables. Possibly wrong data are found by applying an association rule finding algorithm in a limited way. An experiment showed very promising results.

Key words:

Data inconsistency check, very large relations.

1. Introduction

As computers have become widespread, many databases are being built and used, helped by the availability of database management systems that cost little to acquire. For example, MySQL, one of the most widely used database management systems, can be used freely unless it is used for commercial purposes [1]. Microsoft Access [2], a popular database management system for relatively small databases, is widely used because its user interface lets users construct tables and write queries easily. Even Microsoft SQL Server [3], aimed at mid-sized databases, is relatively easy to use because its user interface resembles that of Microsoft Access. This ease of use has allowed many people who lack exact knowledge of the normal forms of relation variables to design relational databases.

But this ease of use can be a drawback for data management. Because relational databases consist of relations that resemble conventional tables, users or designers regard the structure of databases as very simple and pay little attention to the structure of the relations. They may treat relations as ordinary tables and try to store data in as few tables as possible, because they usually think that queries become more complex as the number of tables grows, as the author has observed with many people. Therefore, it is highly possible that the relations contain some redundant information due to the small number of tables.

For example, consider a database for a video DVD rental shop. The shop's database has a table called rentalTable to store the DVD rental information. The table has an attribute set like {renter_number, renter_name, renter_address, DVD_number, rent_date, return_date}, where attribute names set in slanted characters represent the attribute's role as a primary key. The designer of the database does not want a separate table for renter information, because most customers are one-time customers and the printed reports need all the information in rentalTable. Moreover, separate table structures like rental_info{DVD_number, rent_date, return_date} and renter_info{renter_number, renter_name, renter_address} make entering data and printing reports from the two tables harder. But because of the structure of rentalTable, if some customers rent more than once, redundant information such as renter_name and renter_address can exist in the table. This redundancy may cause data inconsistency if the redundant copies have not all been updated together. The problem becomes worse when the database structure is more complex and the number of tables increases, which is very common in real-world databases.

One may suggest using a sorting method to find inconsistent data. But manual checking after sorting is very difficult when the number of tuples is very large and the domains of the corresponding attributes are large. If we want to check for inconsistent data between two attributes that have k and q distinct values respectively, the number of possible combinations of attribute values is kq. So, if k and q are large, manual checking for data inconsistency will be very difficult. This paper suggests a consistency checking method to deal with such situations.

We suggest a method to solve the problem of data inconsistency based on an approach developed from association rules and functional dependencies between attributes. We first discuss related work in Section 2, present our method in detail in Section 3, and illustrate it through experimentation in Section 4. Finally, Section 5 presents conclusions.
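The rentalTable example from the introduction can be made concrete with a minimal Python sketch. The rows below are hypothetical (every value is invented for illustration and none comes from the paper), and the helper name `values_per_key` is likewise an assumption. The sketch shows one renter whose address was changed in only one of two rows, and computes the kq bound on value combinations that a manual check would face.

```python
from collections import defaultdict

# Hypothetical rows of rentalTable; all values are invented for illustration.
rental_table = [
    # renter_number, renter_name, renter_address, DVD_number, rent_date, return_date
    (1, "Kim", "12 Harbor Rd", 101, "2007-09-01", "2007-09-03"),
    (1, "Kim", "98 Hill St", 205, "2007-09-10", "2007-09-12"),  # address changed in this row only
    (2, "Lee", "5 Market Ave", 101, "2007-09-04", "2007-09-06"),
]


def values_per_key(rows, key_index, value_index):
    """Map each key value to the set of values stored alongside it."""
    seen = defaultdict(set)
    for row in rows:
        seen[row[key_index]].add(row[value_index])
    return dict(seen)


addresses = values_per_key(rental_table, 0, 2)

# A renter_number stored with more than one address signals redundant
# copies that were not all updated together.
conflicts = {key: vals for key, vals in addresses.items() if len(vals) > 1}

# Number of possible value combinations for the two attributes: k * q.
k = len({row[0] for row in rental_table})  # distinct renter_number values
q = len({row[2] for row in rental_table})  # distinct renter_address values
possible_pairs = k * q

print(conflicts)
print(possible_pairs)
```

With only three rows the conflict is easy to spot by eye; the point of the kq bound is that this stops being true once both attributes have many distinct values.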

2. Related work

Because we want to apply association rule discovery algorithms in a limited way, let us briefly look at these algorithms. Association rules are rules about how often sets of items occur together. These rules produce information on patterns or regularities that exist in a database. For example, suppose we have collected a set of transactional data in a supermarket over some period of time. We might find an association rule in the data stating 'instant coffee => non-dairy creamer (80%)', which means that 80% of customers who buy instant coffee also buy non-dairy creamer. Such rules can be useful for decision making on sales, for example, determining an appropriate layout for items in the store.

Basically, association rule finding algorithms search exhaustively to find association patterns. So there can be many association rules, and much computing time can be needed for large target databases, because of the exhaustive nature of the algorithms. Supplying an appropriate minimum support based on the target database size is therefore important. There are many good algorithms for finding association patterns efficiently: the standard association algorithm, Apriori; a large main memory-based algorithm like AprioriTid [4]; the hash-table based algorithm, DHP [5]; random sample based algorithms [6]; a tree structure-based algorithm [7]; and a parallel version of the algorithm [8]. ART [9] tried to achieve scalability by using a decision list, but the exhaustive nature of the algorithm limits its applicability.

3. Proposed method

The following is a formal definition of the problem. Let I = {i1, i2, ..., in} be a set of items consisting of attribute-value pairs whose attributes belong to a functional dependency of interest in a relation R. Each tuple in R has a unique primary key. For an itemset $X \subseteq I$, Support_Number(X) is the number of tuples in R containing X. An association rule is an implication of the form Y => Z where Y and Z have different attributes, $Y \subset I$, $Z \subset I$, and $Y \cap Z = \emptyset$. The consistency of stored attribute values in a relation is represented by the confidences of the association rules found. A confidence of C% for the association rule Y => Z means that, among all the tuples that contain itemset Y, C% also contain itemset Z. So the confidence of an association rule Y => Z is computed as the ratio:

$$\mathrm{confidence}(Y \Rightarrow Z) = \frac{\mathrm{Support\_Number}(Y \cup Z)}{\mathrm{Support\_Number}(Y)}$$

Note that $Y \cup Z$ means the items of Y and Z occurring together in a tuple. If there is a functional dependency between the attributes of Y and Z, the confidence value should be 100%. Conversely, if a functional dependency is supposed to hold between the attributes of Y and Z but the confidence of the association rule Y => Z is less than 100%, then the data must be inconsistent. For example, if there is a functional dependency between attributes A and B, and there are two tuples where one has values A=a1, B=b1 and the other has values A=a1, B=b2, then the confidence of the rule A=a1 => B=b1, and likewise of A=a1 => B=b2, is 50%, and the data are inconsistent.

The functional dependencies we want to check have the property that the left-hand side and the right-hand side each consist of one attribute. If a functional dependency has several attributes in its right-hand side, we need to separate those attributes one by one. This separation does no harm, because we can always decompose the right-hand side by Armstrong's axioms [10]. For example, the functional dependency $A \rightarrow \{B, C\}$ is equivalent to the functional dependencies $A \rightarrow B$ and $A \rightarrow C$. If the attributes in the left-hand side and the right-hand side have k and q distinct values respectively, the possible number of rules is kq. So, if k and q are large, the number of attribute value combinations will also be very large. For example, if k and q are both 30, there are 900 possible combinations, so manual inspection will be very difficult.

To find inconsistent data in a given relation, we apply the following steps to each user-selected functional dependency in the relation.

(i) Select a functional dependency (FD) for the data inconsistency check.
(ii) Run the association rule algorithm on the attributes in the given FD with a minimum support number of 1.
(iii) Generate rules for the right-hand side of the FD.
(iv) Find inconsistent data from the association rules with confidence less than 100%.

Note that in (ii) we apply the association rule finding algorithm in a limited way, so finding frequent patterns with the very small minimum support number of 1 does not take significant computing time. We use a hash-table based association rule finding algorithm like DHP, because it can generate short association rules of length two very fast compared to other association rule finding algorithms.


Moreover, because step (iii) generates rules with a fixed right-hand side, far fewer rules are generated than by conventional association rule generation algorithms. For example, if the number of attribute values in the right-hand side is two, we have only half as many rules as conventional association rule algorithms.
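Steps (i) to (iv) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's implementation: the function name `check_fd` is hypothetical, and plain dictionary counting with `collections.Counter` stands in for a hash-table based algorithm such as DHP. The sample data is the two-tuple example from the text.

```python
from collections import Counter


def check_fd(rows, lhs, rhs):
    """Return rules lhs=y => rhs=z whose confidence is below 100%.

    rows is a list of dicts, one per tuple; lhs and rhs are the attribute
    names of the functional dependency lhs -> rhs selected in step (i).
    """
    # Step (ii): count supports with a minimum support number of 1,
    # so every value and every value pair that occurs is kept.
    support_y = Counter(row[lhs] for row in rows)
    support_yz = Counter((row[lhs], row[rhs]) for row in rows)

    # Step (iii): generate rules only in the direction lhs => rhs.
    rules = [
        (y, z, count, count / support_y[y])
        for (y, z), count in support_yz.items()
    ]

    # Step (iv): a confidence below 1.0 contradicts the functional dependency.
    return [rule for rule in rules if rule[3] < 1.0]


# The two-tuple example from the text: A=a1 occurs with both b1 and b2.
rows = [
    {"A": "a1", "B": "b1"},
    {"A": "a1", "B": "b2"},
]
violations = check_fd(rows, "A", "B")
for y, z, freq, cf in violations:
    print(f"IF A = {y} THEN B = {z} (freq={freq}, cf={cf:.2f})")
```

Both rules are reported with a confidence of 0.50, matching the 50% figure in the example. Because only pairs of values over two attributes are counted, a single pass over the relation is enough in this sketch.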

4. Experimentation

An experiment was run on the 'census-income' database from the UCI machine learning repository [11] to see the effectiveness of the method. The original training data contains 199,523 instances in a 99 MB data file. Class probabilities for the labels -50000 and 50000+ are 93.8% and 6.2% respectively. The database was selected because it is relatively large and contains lots of manifest facts. The total number of attributes is 41, eight of which are continuous. We selected two of the 41 attributes for the experiment and assumed that a functional dependency exists between them: attribute sex and attribute class. The computer used was a VAIO notebook with 512 MB of main memory. The computation took only a few seconds. We found the following rules.

- IF sex = female THEN class = -50000. (freq=101,321, cf=0.97)
- IF sex = female THEN class = 50000+. (freq=2,663, cf=0.03)
- IF sex = male THEN class = -50000. (freq=85,820, cf=0.9)
- IF sex = male THEN class = 50000+. (freq=9,719, cf=0.1)

In the above rules, freq and cf mean frequency and confidence respectively. If the functional dependency $\text{sex} \rightarrow \text{class}$ were true, there should be no contradicting data like the above. The next step would be to correct the inconsistent data.

5. Conclusions

As computers have become widespread, many databases are being built and used, helped by the availability of database management systems that cost little to acquire. As a result, many databases are constructed and large amounts of data are being stored. But it is difficult to say that all of these databases are designed well enough to meet the constraints of the so-called normal forms, because some, and possibly many, databases are designed without much consideration of normalization, since not all database designers are good designers. So it is highly possible that some data in the databases are redundant, and the databases may therefore contain inconsistent data. This paper suggests an effective method to find such inconsistent data based on functional dependencies between attributes in a relation. We apply an association rule algorithm to the attribute sets in the functional dependencies in a limited way to find the inconsistent data effectively. We showed by experiment that the method is very effective in finding such inconsistencies.

References

[1] B. Forta, MySQL Crash Course (Sams Teach Yourself in 10 Minutes), Sams, 2005.
[2] J. Viescas, J. Conrad, Microsoft Office Access 2007 Inside Out, Microsoft Press, 2007.
[3] R. Rankins, P. Bertucci, C. Gallelli, A.T. Silverstein, Microsoft SQL Server 2005 Unleashed, Sams, 2006.
[4] R. Agrawal, H. Mannila, R. Srikant, H. Toivonen, A.I. Verkamo, Fast Discovery of Association Rules, In Advances in Knowledge Discovery and Data Mining, U.M. Fayyad, G. Piatetsky-Shapiro, P. Smyth, R. Uthurusamy ed., pp.307-328, AAAI Press/The MIT Press, 1996.
[5] J.S. Park, M. Chen, P.S. Yu, Using a Hash-Based Method with Transaction Trimming for Mining Association Rules, IEEE Transactions on Knowledge and Data Engineering, vol.9, no.5, pp.813-825, Sep. 1997.
[6] H. Toivonen, Discovery of Frequent Patterns in Large Data Collections, PhD thesis, Department of Computer Science, University of Helsinki, Finland, 1996.
[7] J. Han, J. Pei, Y. Yin, Mining Frequent Patterns without Candidate Generation, SIGMOD'00, Dallas, TX, May 2000.
[8] A. Savasere, E. Omiecinski, S. Navathe, An Efficient Algorithm for Mining Association Rules in Large Databases, College of Computing, Georgia Institute of Technology, Technical Report No.: GIT-CC-95-04.
[9] F. Berzal, J. Cubero, D. Sanchez, J.M. Serrano, ART: A Hybrid Classification Model, Machine Learning, vol.54, pp.67-92, 2004.
[10] C.J. Date, An Introduction to Database Systems, 8th ed., Addison-Wesley, pp.338-339, 2004.
[11] S. Hettich, S.D. Bay, The UCI KDD archive, Technical Report, University of California, Irvine, Department of Information and Computer Science, 1999.

Hyontai Sug received the B.S. degree in Computer Science and Statistics from Pusan National University, the M.S. degree in Computer Science from Hankuk University of Foreign Studies, and the Ph.D. degree in Computer and Information Science and Engineering from the University of Florida in 1983, 1986, and 1998 respectively. During 1986-1992, he worked for the Agency of Defense Development as a researcher, and during 1999-2001, he was a full-time lecturer at Pusan University of Foreign Studies. He has been with Dongseo University as an associate professor since 2001.

Potrebbero piacerti anche

- Gate Dbms NotesDocumento14 pagineGate Dbms Notesrodda1Nessuna valutazione finora

- PreviewpdfDocumento50 paginePreviewpdfMarcosGouvea100% (1)

- Dimensional, Object, Post-Relational ModelsDocumento21 pagineDimensional, Object, Post-Relational ModelsPraveen KumarNessuna valutazione finora

- Relational DatabasesDocumento13 pagineRelational DatabasesasarenkNessuna valutazione finora

- Application of Particle Swarm Optimization To Association Rule MiningDocumento11 pagineApplication of Particle Swarm Optimization To Association Rule MiningAnonymous TxPyX8cNessuna valutazione finora

- Finding Association Rules that Optimize Accuracy and SupportDocumento12 pagineFinding Association Rules that Optimize Accuracy and Supportdavid jhonNessuna valutazione finora

- Ans: A: 1. Describe The Following: Dimensional ModelDocumento8 pagineAns: A: 1. Describe The Following: Dimensional ModelAnil KumarNessuna valutazione finora

- L - B F E A: Earning Ased Requency Stimation LgorithmsDocumento20 pagineL - B F E A: Earning Ased Requency Stimation Lgorithmsirfan_icil2099Nessuna valutazione finora

- Parallel Data Mining of Association RulesDocumento10 pagineParallel Data Mining of Association RulesAvinash MudunuriNessuna valutazione finora

- DBMS Unit 3 NotesDocumento29 pagineDBMS Unit 3 Notesashwanimpec20Nessuna valutazione finora

- Designing A Network Search SystemDocumento5 pagineDesigning A Network Search SystemMisbah UddinNessuna valutazione finora

- Adina Institute of Science & Technology: Department of Computer Science & Engg. M.Tech CSE-II Sem Lab Manuals MCSE - 203Documento22 pagineAdina Institute of Science & Technology: Department of Computer Science & Engg. M.Tech CSE-II Sem Lab Manuals MCSE - 203rajneesh pachouriNessuna valutazione finora

- Database Models OverviewDocumento11 pagineDatabase Models OverviewIroegbu Mang JohnNessuna valutazione finora

- Hashing Structure Disadvantages and Collision SolutionsDocumento4 pagineHashing Structure Disadvantages and Collision SolutionsBea Marella CapundanNessuna valutazione finora

- Optimal Joins Using Compressed Quadtrees: Diego Arroyuelo, Gonzalo Navarro, Juan L. Reutter, Javiel Rojas-LedesmaDocumento52 pagineOptimal Joins Using Compressed Quadtrees: Diego Arroyuelo, Gonzalo Navarro, Juan L. Reutter, Javiel Rojas-LedesmatriloNessuna valutazione finora

- Fast Algorithms For Mining Association RulesDocumento2 pagineFast Algorithms For Mining Association Rulesगोपाल शर्माNessuna valutazione finora

- Ijcs 2016 0303008 PDFDocumento16 pagineIjcs 2016 0303008 PDFeditorinchiefijcsNessuna valutazione finora

- A New SQL-like Operator For Mining Association Rules: Rosa MeoDocumento12 pagineA New SQL-like Operator For Mining Association Rules: Rosa Meobuan_11Nessuna valutazione finora

- A Method For Comparing Content Based Image Retrieval MethodsDocumento8 pagineA Method For Comparing Content Based Image Retrieval MethodsAtul GuptaNessuna valutazione finora

- The Impact of Threshold Parameters in Transactional Data AnalysisDocumento6 pagineThe Impact of Threshold Parameters in Transactional Data AnalysisCyruz LapinasNessuna valutazione finora

- Slicing approach to privacy preserving data publishingDocumento19 pagineSlicing approach to privacy preserving data publishingkeerthana1234Nessuna valutazione finora

- Bayesian Network HomeworkDocumento5 pagineBayesian Network Homeworkafmtozdgp100% (1)

- Paper-16 - A Study & Emergence of Temporal Association Mining Similarity-ProfiledDocumento7 paginePaper-16 - A Study & Emergence of Temporal Association Mining Similarity-ProfiledRachel WheelerNessuna valutazione finora

- Basic Data Science Interview Questions ExplainedDocumento38 pagineBasic Data Science Interview Questions Explainedsahil kumarNessuna valutazione finora

- Authenticated Data Structures For Graph and Geometric SearchingDocumento16 pagineAuthenticated Data Structures For Graph and Geometric SearchingRony PestinNessuna valutazione finora

- Algorithms and Applications For Universal Quantification in Relational DatabasesDocumento30 pagineAlgorithms and Applications For Universal Quantification in Relational Databasesiky77Nessuna valutazione finora

- Data Vault Modeling: A Resilient Method for Data WarehousingDocumento14 pagineData Vault Modeling: A Resilient Method for Data WarehousingRajnish Kumar Ravi50% (2)

- Computer Science - V: Disadvantages of Database System AreDocumento13 pagineComputer Science - V: Disadvantages of Database System AreJugal PatelNessuna valutazione finora

- Interoperable & Efficient: Linked Data For The Internet of ThingsDocumento15 pagineInteroperable & Efficient: Linked Data For The Internet of ThingsEstivenAlarconPillcoNessuna valutazione finora

- Abr 10534Documento8 pagineAbr 10534laashtgNessuna valutazione finora

- 4.on Demand Quality of Web Services Using Ranking by Multi Criteria-31-35Documento5 pagine4.on Demand Quality of Web Services Using Ranking by Multi Criteria-31-35iisteNessuna valutazione finora

- Anomalous Topic Discovery in High Dimensional Discrete DataDocumento4 pagineAnomalous Topic Discovery in High Dimensional Discrete DataBrightworld ProjectsNessuna valutazione finora

- CBAR: An Efficient Method For Mining Association Rules: Yuh-Jiuan Tsay, Jiunn-Yann ChiangDocumento7 pagineCBAR: An Efficient Method For Mining Association Rules: Yuh-Jiuan Tsay, Jiunn-Yann Chiangmahapreethi2125Nessuna valutazione finora

- Unit 13 Structured Query Formulation: StructureDocumento17 pagineUnit 13 Structured Query Formulation: StructurezakirkhiljiNessuna valutazione finora

- IM Ch03 Relational DB Model Ed12Documento36 pagineIM Ch03 Relational DB Model Ed12Chong Fong KimNessuna valutazione finora

- Database Notes for GATE CSE AspirantsDocumento74 pagineDatabase Notes for GATE CSE AspirantsSmit ThakkarNessuna valutazione finora

- Dennis Shasha, Vladimir Lanin, Jeanette Schmidt (Shasha@nyu-Csd2.edu, Lanin@nyu-Csd2.edu, Schmidtj@nyu - Edu)Documento31 pagineDennis Shasha, Vladimir Lanin, Jeanette Schmidt (Shasha@nyu-Csd2.edu, Lanin@nyu-Csd2.edu, Schmidtj@nyu - Edu)Sunil SinghNessuna valutazione finora

- Discovery of Multivalued Dependencies From Relations: Savnik@informatik - Uni-Freiburg - deDocumento25 pagineDiscovery of Multivalued Dependencies From Relations: Savnik@informatik - Uni-Freiburg - deRohit YadavNessuna valutazione finora

- PVT DBDocumento12 paginePVT DBomsingh786Nessuna valutazione finora

- Chapter 1Documento57 pagineChapter 1Levale XrNessuna valutazione finora

- Detection of Forest Fire Using Wireless Sensor NetworkDocumento5 pagineDetection of Forest Fire Using Wireless Sensor NetworkAnonymous gIhOX7VNessuna valutazione finora

- Discovering and Reconciling Value Conflicts For Data IntegrationDocumento24 pagineDiscovering and Reconciling Value Conflicts For Data IntegrationPrakash SahuNessuna valutazione finora

- Manisha 3001 Week 12Documento22 pagineManisha 3001 Week 12Suman GaihreNessuna valutazione finora

- The Nested Relational Data Model Is Not A Good IdeaDocumento10 pagineThe Nested Relational Data Model Is Not A Good IdeaArun arunNessuna valutazione finora

- Decision Trees For Handling Uncertain Data To Identify Bank FraudsDocumento4 pagineDecision Trees For Handling Uncertain Data To Identify Bank FraudsWARSE JournalsNessuna valutazione finora

- Decision Models For Record LinkageDocumento15 pagineDecision Models For Record LinkageIsaias PrestesNessuna valutazione finora

- Eliminating Redundant Frequent Pattern S in Non - Taxonomy Data SetsDocumento5 pagineEliminating Redundant Frequent Pattern S in Non - Taxonomy Data Setseditor_ijarcsseNessuna valutazione finora

- For 100% Result Oriented IGNOU Coaching and Project Training Call CPD: 011-65164822, 08860352748Documento9 pagineFor 100% Result Oriented IGNOU Coaching and Project Training Call CPD: 011-65164822, 08860352748Kamal TiwariNessuna valutazione finora

- Efficient Graph-Based Algorithms For Discovering and Maintaining Association Rules in Large DatabasesDocumento24 pagineEfficient Graph-Based Algorithms For Discovering and Maintaining Association Rules in Large DatabasesAkhil Jabbar MeerjaNessuna valutazione finora

- Association Rule Mining Using Modified Bpso: Amit Kumar Chandanan, Kavita & M K ShuklaDocumento8 pagineAssociation Rule Mining Using Modified Bpso: Amit Kumar Chandanan, Kavita & M K ShuklaTJPRC PublicationsNessuna valutazione finora

- An Efficient Constraint Based Soft Set Approach For Association Rule MiningDocumento7 pagineAn Efficient Constraint Based Soft Set Approach For Association Rule MiningManasvi MehtaNessuna valutazione finora

- Image Content With Double Hashing Techniques: ISSN No. 2278-3091Documento4 pagineImage Content With Double Hashing Techniques: ISSN No. 2278-3091WARSE JournalsNessuna valutazione finora

- Value Added Association Rules: T.Y. Lin San Jose State University Drlin@sjsu - EduDocumento9 pagineValue Added Association Rules: T.Y. Lin San Jose State University Drlin@sjsu - EduTrung VoNessuna valutazione finora

- Record Matching Over Query Results From Multiple Web DatabasesDocumento93 pagineRecord Matching Over Query Results From Multiple Web DatabaseskumarbbcitNessuna valutazione finora

- Hierarchical Database Model: What Is RDBMS? Explain Its FeaturesDocumento24 pagineHierarchical Database Model: What Is RDBMS? Explain Its FeaturesitxpertsNessuna valutazione finora

- 1 QB104321 - 2013 - RegulationDocumento4 pagine1 QB104321 - 2013 - RegulationVenkanna Huggi HNessuna valutazione finora

- Database Security Protection Via Inference DetectionDocumento6 pagineDatabase Security Protection Via Inference DetectionmanimegalaielumalaiNessuna valutazione finora

- Real-World Data Is Dirty: Data Cleansing and The Merge/Purge ProblemDocumento39 pagineReal-World Data Is Dirty: Data Cleansing and The Merge/Purge ProblempoderrrNessuna valutazione finora

- DATABASE From the conceptual model to the final application in Access, Visual Basic, Pascal, Html and Php: Inside, examples of applications created with Access, Visual Studio, Lazarus and WampDa EverandDATABASE From the conceptual model to the final application in Access, Visual Basic, Pascal, Html and Php: Inside, examples of applications created with Access, Visual Studio, Lazarus and WampNessuna valutazione finora

- Optimized Cloud Resource Management and Scheduling: Theories and PracticesDa EverandOptimized Cloud Resource Management and Scheduling: Theories and PracticesNessuna valutazione finora

- Cat 2Documento18 pagineCat 2Sameer NandanNessuna valutazione finora

- What Is Static ConstructorDocumento74 pagineWhat Is Static ConstructorSameer NandanNessuna valutazione finora

- GK CAPSULE FOR IBPS PO - IIIDocumento41 pagineGK CAPSULE FOR IBPS PO - IIIPawan KumarNessuna valutazione finora

- Shakuntla Devi PuzzleDocumento182 pagineShakuntla Devi Puzzleragangani100% (6)

- Hi SaravananDocumento4 pagineHi SaravananSameer NandanNessuna valutazione finora

- 7.wireless Data Encryption and Decryption Using ZigbeeDocumento4 pagine7.wireless Data Encryption and Decryption Using ZigbeeSameer Nandan100% (2)

- ProgramsDocumento73 pagineProgramsSameer NandanNessuna valutazione finora

- Net Experience Resume SampleDocumento3 pagineNet Experience Resume Samplesanjay_chaudhariNessuna valutazione finora

- 5.the Wireless Sensor Network For Home-Care System Using ZigbeeDocumento4 pagine5.the Wireless Sensor Network For Home-Care System Using ZigbeeSameer NandanNessuna valutazione finora

- VivekDocumento3 pagineVivekSameer NandanNessuna valutazione finora

- Computer NetworksDocumento36 pagineComputer NetworksSameer NandanNessuna valutazione finora

- EceDocumento21 pagineEceSameer NandanNessuna valutazione finora

- 4.applications of Short-Range Wireless Technologies To Industrial AutomationDocumento3 pagine4.applications of Short-Range Wireless Technologies To Industrial AutomationSameer NandanNessuna valutazione finora

- 10.sub Band Adaptive Filter For Aliasing.Documento2 pagine10.sub Band Adaptive Filter For Aliasing.Sameer NandanNessuna valutazione finora

- 2.multi-Stage Real Time Weather Monitoring Via Zigbee in Smart HomesindustriesDocumento3 pagine2.multi-Stage Real Time Weather Monitoring Via Zigbee in Smart HomesindustriesSameer NandanNessuna valutazione finora

- 1.zigbee Device Access Control With Reliable Data TransmissionDocumento3 pagine1.zigbee Device Access Control With Reliable Data TransmissionSameer NandanNessuna valutazione finora

- 15.sliced Ridglet Transform For Image Denoising.Documento2 pagine15.sliced Ridglet Transform For Image Denoising.Sameer NandanNessuna valutazione finora

- 3.zigbee Wireless Vehicle Identification and Authentication SystemDocumento3 pagine3.zigbee Wireless Vehicle Identification and Authentication SystemSameer NandanNessuna valutazione finora

- 8.eigen Vector For Face Recognition Using Dimensionality Reduction PCA.Documento1 pagina8.eigen Vector For Face Recognition Using Dimensionality Reduction PCA.Sameer NandanNessuna valutazione finora

- 10.sub Band Adaptive Filter For Aliasing.Documento2 pagine10.sub Band Adaptive Filter For Aliasing.Sameer NandanNessuna valutazione finora

- 9.image Water Marking Using Wavelet Transforms.Documento1 pagina9.image Water Marking Using Wavelet Transforms.Sameer NandanNessuna valutazione finora

- 2.implementation of Target Tracking Using Kalman Filter.Documento1 pagina2.implementation of Target Tracking Using Kalman Filter.Sameer NandanNessuna valutazione finora

- 14.spoken Digit Recognition Using MATLAB.Documento1 pagina14.spoken Digit Recognition Using MATLAB.Sameer NandanNessuna valutazione finora

- 9.image Water Marking Using Wavelet Transforms.Documento1 pagina9.image Water Marking Using Wavelet Transforms.Sameer NandanNessuna valutazione finora

- 5.MPEG Video CompressionDocumento1 pagina5.MPEG Video CompressionSameer NandanNessuna valutazione finora

- StegnoGraphy hides data in imagesDocumento2 pagineStegnoGraphy hides data in imagesSameer NandanNessuna valutazione finora

- 4.design and Implementation of Image Denoising Using Curvlet Transform.Documento1 pagina4.design and Implementation of Image Denoising Using Curvlet Transform.Sameer NandanNessuna valutazione finora

- 13.channel Estimation For Wireless Communication.Documento1 pagina13.channel Estimation For Wireless Communication.Sameer NandanNessuna valutazione finora

- Voice Guider and Medicine Reminder For Old Aged People and PatientsDocumento5 pagineVoice Guider and Medicine Reminder For Old Aged People and PatientsSameer NandanNessuna valutazione finora

- Image Fusion by Using Wavelet TransformDocumento2 pagineImage Fusion by Using Wavelet TransformSameer NandanNessuna valutazione finora

- Reynaers Product Overview CURTAIN WALLDocumento80 pagineReynaers Product Overview CURTAIN WALLyantoNessuna valutazione finora

- Bio-Climatic Tower/Eco-Tower: Bachelor of ArchitectureDocumento12 pagineBio-Climatic Tower/Eco-Tower: Bachelor of ArchitectureZorawar Singh Basur67% (3)

- The Art of Grooming - 230301 - 222106Documento61 pagineThe Art of Grooming - 230301 - 222106ConstantinNessuna valutazione finora

- Vehicle and Driver Vibration - PPTDocumento16 pagineVehicle and Driver Vibration - PPTAnirban MitraNessuna valutazione finora

- KTO12 Curriculum ExplainedDocumento24 pagineKTO12 Curriculum ExplainedErnesto ViilavertNessuna valutazione finora

- KL 8052N User ManualDocumento33 pagineKL 8052N User ManualBiomédica HONessuna valutazione finora

- Load of Pedstrain On FobDocumento26 pagineLoad of Pedstrain On FobPOOJA VNessuna valutazione finora

- Appendix 1c Bridge Profiles Allan TrussesDocumento43 pagineAppendix 1c Bridge Profiles Allan TrussesJosue LewandowskiNessuna valutazione finora

- Operator Interface SERIES 300 Device Platform EAGLE OS ET-316-TXDocumento6 pagineOperator Interface SERIES 300 Device Platform EAGLE OS ET-316-TXDecoNessuna valutazione finora

- Cisco and Duo Presentation 8.2.18Documento8 pagineCisco and Duo Presentation 8.2.18chris_ohaboNessuna valutazione finora

- General Psychology Module 1.1Documento6 pagineGeneral Psychology Module 1.1Niño Gabriel MagnoNessuna valutazione finora

- Qc-Sop-0 - Drilling of PoleDocumento7 pagineQc-Sop-0 - Drilling of PoleAmeerHamzaWarraichNessuna valutazione finora

- #1 Introduction To C LanguageDocumento6 pagine#1 Introduction To C LanguageAtul SharmaNessuna valutazione finora

- Sap - HR Standard Operating Procedure: Facility To Reset Password of ESSDocumento6 pagineSap - HR Standard Operating Procedure: Facility To Reset Password of ESSPriyadharshanNessuna valutazione finora

- Functional Molecular Engineering Hierarchical Pore-Interface Based On TD-Kinetic Synergy Strategy For Efficient CO2 Capture and SeparationDocumento10 pagineFunctional Molecular Engineering Hierarchical Pore-Interface Based On TD-Kinetic Synergy Strategy For Efficient CO2 Capture and SeparationAnanthakishnanNessuna valutazione finora

- High Level Cyber Security Assessment - Detailed ReportDocumento57 pagineHigh Level Cyber Security Assessment - Detailed Reportdobie_e_martinNessuna valutazione finora

- Cahyadi J Malia Tugas MID TPODocumento9 pagineCahyadi J Malia Tugas MID TPOCahyadi J MaliaNessuna valutazione finora

- ProjectDocumento5 pagineProjectMahi MalikNessuna valutazione finora

- How many times do clock hands overlap in a dayDocumento6 pagineHow many times do clock hands overlap in a dayabhijit2009Nessuna valutazione finora

- Template Project Overview StatementDocumento4 pagineTemplate Project Overview StatementArdan ArasNessuna valutazione finora

- 8279Documento32 pagine8279Kavitha SubramaniamNessuna valutazione finora

- CASE ANALYSIS: DMX Manufacturing: Property of STIDocumento3 pagineCASE ANALYSIS: DMX Manufacturing: Property of STICarmela CaloNessuna valutazione finora

- Leadership EthiqueDocumento16 pagineLeadership EthiqueNOURDINE EZZALMADINessuna valutazione finora

- IQ, OQ, PQ: A Quick Guide To Process ValidationDocumento9 pagineIQ, OQ, PQ: A Quick Guide To Process ValidationGonzalo MazaNessuna valutazione finora

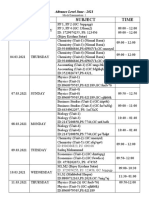

- Mock Examination Routine A 2021 NewDocumento2 pagineMock Examination Routine A 2021 Newmufrad muhtasibNessuna valutazione finora

- Paper Velocity String SPE-30197-PADocumento4 paginePaper Velocity String SPE-30197-PAPablo RaffinNessuna valutazione finora

- Hegemonic Masculinity As A Historical Problem: Ben GriffinDocumento24 pagineHegemonic Masculinity As A Historical Problem: Ben GriffinBolso GatoNessuna valutazione finora

- ĐỀ CƯƠNG ÔN TẬP HỌC KÌ 1-LỚP 12Documento15 pagineĐỀ CƯƠNG ÔN TẬP HỌC KÌ 1-LỚP 12Anh Duc VuNessuna valutazione finora