Collected Lecture Slides for COMP 202

Formal Methods of Computer Science

Neil Leslie and Ray Nickson

11 October, 2005

This document was created by concatenating the lecture slides into a single sequence: one chapter per topic, and one section per titled slide.

There is no additional material apart from what was distributed in lectures.

Neil Leslie and Ray Nickson assert their moral right to be identified as the authors of this work.

© Neil Leslie and Ray Nickson, 2005

Contents

Problems, Algorithms, and Programs

1 Introduction
1.1 COMP202
1.2 Course information
1.3 Assessment
1.4 Tutorial and Marking Groups
1.5 What is Computer Science?
1.6 Some Powerful Ideas
1.7 Related Areas
1.8 Lecture schedule
1.9 Conclusion

2 Problems and Algorithms
2.1 Problems and Algorithms
2.2 Problems, Programs and Proofs
2.3 Describing problems
2.4 Example: Comparing Strings
2.5 Understanding the Problem
2.6 Designing an Algorithm
2.7 Defining the Algorithm Iteratively
2.8 Defining the Algorithm Recursively

3 Two Simple Programming Languages
3.1 Imperative and Applicative Languages
3.2 The Language of While-Programs
3.3 Comparing strings again
3.4 The Applicative Language
3.5 Those strings again ...
3.6 Using head and tail

4 Reasoning about Programs
4.1 Some Questions about Languages
4.2 Syntax
4.3 Semantics
4.4 Comparing Strings One More Time
4.5 Laws for Program Verification
4.6 Reasoning with Assertions

I Regular Languages

5 Preliminaries
5.1 Part I: Regular Languages
5.2 Formal languages
5.3 Alphabet
5.4 String
5.5 Language, Word
5.6 Operations on Strings and Languages
5.7 A proof
5.8 Statement of the conjecture
5.9 Base case
5.10 Induction step
5.11 A theorem

6 Defining languages using regular expressions
6.1 Regular expressions
6.2 Arithmetic expressions
6.3 Simplifying conventions
6.4 Operator precedence
6.5 Operator associativity
6.6 Care!
6.7 Defining regular expressions
6.8 Simplifying conventions
6.9 What the regular expressions describe
6.10 Derived forms

7 Regular languages
7.1 Regular Languages
7.2 Example languages
7.3 Finite languages are regular
7.4 An algorithm
7.5 EVEN-EVEN
7.6 Deciding membership of EVEN-EVEN
7.7 Using fewer states
7.8 Using even fewer states
7.9 Uses of regular expressions

8 Finite automata
8.1 Finite Automata
8.2 Formal definition
8.3 Explanation
8.4 An automaton
8.5 The language accepted by $M_1$
8.6 Another automaton
8.7 A pictorial representation of FA
8.8 Examples of constructing an automaton
8.9 Constructing $M_L$
8.10 A machine to accept Language($11(0 + 1)^*$)
8.11 Summary

9 Non-deterministic Finite automata
9.1 Non-deterministic Finite Automata
9.2 Preliminaries
9.3 Formal definition
9.4 Just like before...
9.5 Example NFA
9.6 $M_5$ accepts 010
9.7 $M_5$ accepts 01
9.8 Comments
9.9 NFAs are as powerful as FAs
9.10 Proof
9.11 Using the graphical representation
9.12 FAs are as powerful as NFAs
9.13 Useful observations
9.14 Construction
9.15 Constructing $\delta'$ and $Q'$
9.16 Example
9.17 NFA with $\Lambda$ transitions
9.18 Motivation
9.19 Formal definition
9.20 Example
9.21 Graphical representation of $M_6$
9.22 Easy theorem
9.23 Harder theorem
9.24 $\delta'$: the new transition function
9.25 $F'$: the new set of accepting states
9.26 Example: an NFA equivalent to $M_6$
9.27 Graphical representation of $M_{12}$
9.28 Regular expression to NFA with $\Lambda$ transitions
9.29 Proof outline
9.30 Base cases
9.31 Induction steps
9.32 Proof
9.33 Proof summary
9.34 Example

10 Kleene's theorem
10.1 A Theorem
10.2 Kleene's theorem

11 Closure Properties of Regular Languages
11.1 Closure properties of regular language
11.2 Formally
11.3 Complement
11.4 Union
11.5 Concatenation
11.6 Kleene closure
11.7 Intersection
11.8 Summary of the proofs

12 Non-regular languages
12.1 Non-regular Languages
12.2 Pumping lemma
12.3 Pumping lemma informally
12.4 Proving the pumping lemma
12.5 A non-regular language
12.6 Pumping lemma re-cap
12.7 Another non-regular language
12.8 And another
12.9 Comment

13 Regular languages: summary
13.1 Regular languages: summary
13.2 Kleene's theorem

II Context Free Languages

14 Introducing Context Free Grammars
14.1 Beyond Regular Languages
14.2 Sentences with Nested Structure
14.3 A Simple English Grammar
14.4 Parse Trees
14.5 Context Free Grammars
14.6 Formal definition of CFG

15 Regular and Context Free Languages
15.1 Regular and Context Free Languages
15.2 $CF \cap Reg \neq \emptyset$
15.3 $CF \not\subseteq Reg$
15.4 $CF \supseteq Reg$
15.5 Example
15.6 Regular Grammars
15.7 Parsing using Regular Grammars
15.8 Derivations
15.9 Derivations in Regular Grammars
15.10 Derivations in Arbitrary CFGs
15.11 Parse Trees
15.12 Derivations and parse trees
15.13 Ambiguity

16 Normal Forms
16.1 Lambda Productions
16.2 Eliminating $\Lambda$ Productions
16.3 Circularities
16.4 $\Lambda$ Productions on the Start Symbol
16.5 Example
16.6 Unit Productions
16.7 Example

17 Recursive Descent Parsing
17.1 Recognising CFLs (Parsing)
17.2 Top-down Parsing
17.3 Top-down Parsing
17.4 Recursive Descent Parsing
17.5 Building a Parser for Nested Lists
17.6 Parser for Nested Lists
17.7 Building a Parse Tree
17.8 Parser for Nested Lists
17.9 LL(1) Grammars
17.10 First and Follow sets
17.11 Transforming CFGs to LL(1) form

18 Pushdown Automata
18.1 Finite and Infinite Automata
18.2 Pushdown Automata
18.3 Formal Definition of PDA
18.4 Deterministic and Nondeterministic PDAs
18.5 CFG $\to$ PDA
18.6 Top-Down construction
18.7 $S \to aS \mid T$, $T \to b \mid bT$
18.8 Bottom-Up construction
18.9 $S \to aS \mid T$; $T \to b \mid bT$
18.10 PDA $\to$ CFG
18.11 PDA to CFG Formally
18.12 Example ($L_6$ from slide 69)

19 Non-CF Languages
19.1 Not All Languages are Context Free
19.2 Context Sensitive Grammars
19.3 Example (1)
19.4 Example (2)
19.5 Generating the empty string
19.6 $CFL \subseteq CSL$

20 Closure Properties
20.1 Closure Properties
20.2 Union of Context Free Languages is Context Free
20.3 Concatenation of CF Languages is Context Free
20.4 Kleene Star of a CF Language is CF
20.5 Intersections and Complements of CF Languages

21 Summary of CF Languages
21.1 Why context-free?
21.2 Phrase-structure grammars
21.3 Special cases of Phrase-structure grammars
21.4 Derivations
21.5 Parsing
21.6 Recursive descent and LL(1) grammars
21.7 Pushdown automata
21.8 Closure
21.9 Constructions on CFLs

III Turing Machines

22 Turing Machines I
22.1 Introduction
22.2 Motivation
22.3 A crisis in the foundations of mathematics
22.4 The computable functions: Turing's approach
22.5 What a computer does
22.6 What have we achieved?
22.7 The computable functions: Church's approach
22.8 First remarkable fact
22.9 Church-Turing thesis
22.10 Second important fact
22.11 The universal machine
22.12 Third important fact

23 Turing machines II
23.1 Introduction
23.2 Informal description
23.3 How Turing machines behave: a trichotomy
23.4 Informal example
23.5 Towards a formal definition
23.6 Alphabets
23.7 The head and the tape
23.8 The states
23.9 The transition function
23.10 Configuration
23.11 Formal definition
23.12 Representing the computation
23.13 A simple machine
23.14 Graphical representation of $M_1$
23.15 Some traces
23.16 Turing machines can accept regular languages
23.17 Proof
23.18 Another machine
23.19 Graphical representation of $M_2$
23.20 A trace

24 Turing Machines III
24.1 An example machine
24.2 Some definitions
24.3 Computable and computably enumerable languages
24.4 Deciders and recognizers
24.5 A decidable language
24.6 Another decidable language
24.7 A corollary
24.8 Even more decidable sets
24.9 An undecidable set

25 Turing Machines IV
25.1 Introduction
25.2 $A_{FA}$ is decidable
25.3 $A_{PDA}$ is decidable
25.4 The halting problem
25.5 Other undecidable problems
25.6 Closure properties
25.7 Computable and computably enumerable languages
25.8 A language which is not c.e.

26 Turing Machines V
26.1 A hierarchy of classes of language
26.2 A hierarchy of classes of grammar
26.3 Grammars for natural languages
26.4 A hierarchy of classes of automaton
26.5 Deterministic and nondeterministic automata
26.6 Nondeterministic Turing Machines
26.7 NTM = TM
26.8 More variations on Turing Machines

27 Summary of the course
27.1 Part 0: Algorithms, and Programs
27.2 Part I: Formal languages and automata
27.3 Part II: Context-Free Languages
27.4 Part III: Turing Machines
27.5 COMP 202 exam
27.6 What next?

Part

Problems, Algorithms, and Programs

Chapter 1

Introduction

1.1 COMP202

Formal Methods of Computer Science

COMP202 introduces a selection of topics, focusing on the use of formal notations and formal models in the specification, design and analysis of programs, languages, and machines.

The focus is on language: syntax, semantics, and translation.

Covers fundamental aspects of Computer Science which have many important applications and are essential to advanced study in computer science.

1.2 Course information

Lecturer
    Ray Nickson, CO 442, Phone 4635657, Email nickson@mcs.vuw.ac.nz

Tutors
    Alan Taalolo, CO 242, Phone 4636750, Email alan@mcs.vuw.ac.nz
    Others to be advised.

Web page
    http://www.mcs.vuw.ac.nz/Courses/COMP202/

Lectures
    Monday, Wednesday and Thursday, 4.10-5pm in Hunter 323.

Tutorials
    One hour per week.

Text book
    Introduction to Computer Theory (2nd Edition), by Daniel Cohen, published by Wiley in 1997 (available from the University bookshop, approx. cost $135).

1.3 Assessment

Problem sets (10%)

The basis for tutorial discussion, and containing questions to write up for

marking.

Programming projects (20%)

Due in weeks 6 and 11.

Test (15%)

Two hours, on Thursday 1 September. Exact time to be confirmed.

Covers material from the first half of the course.

Exam (55%)

Three hours.

To pass COMP 202 you must achieve at least 40% in the final exam, and gain a total mark of at least 50%.

1.4 Tutorial and Marking Groups

Tutorials start NEXT WEEK (on Tuesday 12 July).

There will be five groups for tutorials and marking; we expect all tutorials to be held in Cotton 245 (subject to confirmation).

Group  Time
1      Tuesday 12-12.50pm
2      Tuesday 2.10-3pm
3      Thursday 2.10-3pm
4      Friday 11-11.50am
5      Friday 12-12.50pm (DELETED)

Please sign up for a group on the sheets posted outside Cotton 245.

You need to sign up for a group even if you don't intend to attend tutorials, as these will also be your marking groups.

1.5 What is Computer Science?

Computer Science involves (amongst other things):

• Describing complex systems, structures and processes.
• Reasoning about such descriptions, to establish desired properties.
• Animating/executing descriptions to obtain resulting behaviour.
• Transforming one description into another.

These are common threads in much of Computer Science.

Understanding them will form the main aim of this course.

1.6 Some Powerful Ideas

Computer Science has produced several powerful ideas to address these kinds

of problems.

• Languages and notations: programming languages, command languages, data definition languages, class diagrams, ...
• Mathematical models: graphs to model networks, trees to model program structures, ...

COMP202 concentrates on the idea of language.

We will study techniques for describing and reasoning about different languages, from simple to complicated.

We will keep in mind the idea that understanding language and performing

computation are closely related activities.

1.7 Related Areas

The course material is drawn mainly from, and used in:

• Programming language design, definition and implementation.
• Software specification, construction and verification.
• Formal languages and automata theory.

It also draws on mathematics (especially algebra and discrete maths), logic, and linguistics.

It has applications in areas such as problem solving, user interface design, networking protocols, databases, programming language design, and indeed in most computer applications.


1.8 Lecture schedule

0. Problems and programs [Weeks 1-2]
   Describing problems, Describing algorithms and programs, Proving properties of algorithms and programs.

1. Regular Languages [Weeks 3-6]
   Defining and recognising finite languages, Properties of strings and languages, Regular expressions, Finite automata, Properties of regular languages.
   Text book: Part I.

2. Context Free Languages [Weeks 7-10]
   Context free grammars, Push down automata, Parsing.
   Text book: Part II.

3. Computability Theory [Weeks 11-12]
   Recursively enumerable languages, Turing Machines, Computable functions.
   Text book: Part III.

1.9 Conclusion

COMP 202 will look at various techniques for defining, understanding, and processing languages.

It will be a mixture of theory and practice.

Mastery of concepts and techniques will be assessed by tests and exams; problem sets (tutorials and assignments) will test your ability to explore more deeply what you have learned; and projects will give you the opportunity to apply knowledge in practice.

The Course Requirements document provides definitive information, and has links to various rules and policies about which you should be aware.

READ IT!

Chapter 2

Problems and Algorithms

2.1 Problems and Algorithms

Recall that our focus in this course will be on languages.

Before we start studying how to define languages precisely, let us agree on some language and notation that we will use to talk about those definitions (metalanguage).

In particular, let us describe the (semiformal) languages that we will use to:

• define problems
• express algorithms
• prove properties.

2.2 Problems, Programs and Proofs

How can we specify a problem (independently of its solution)?

How shall we describe a solution to a problem?

program

machine

What does it mean to say that a given program/machine solves a given

problem?

How can we convince ourselves (and others) that a given program/machine

solves a given problem?

2.3 Describing problems

How can we describe a problem for which we wish to write a computer program?

E.g. $P_1$: add two natural numbers.
     $P_2$: sort a list of integers into ascending order.
     $P_3$: find the position of an integer x in a list l.
     $P_4$: what is the shortest route from Wellington to Auckland?
     $P_5$: is the text in file f a valid Java program?
     $P_6$: translate a C++ program into machine code.

In each case, we describe a mapping from inputs to outputs.

+----------+

| |

Input ---->| P |----> Output

| |

+----------+

To be more precise, we need to specify:

1. How many inputs, and what kinds of values they can have.

2. How many outputs, and what kinds of values they can have.

3. Which of the possible inputs are actually allowed.

4. What output is required/acceptable for each allowable input.

1, 2. To define the number and kinds of inputs and outputs, we need to define types.
   Need basic types, e.g. integer, character, Boolean.
   Combine these to give tuples, sequences/lists, sets, ...
   Use mathematical types, rather than arrays and records.
   Input and output domains = signature.

3. To define what inputs are allowed, specify a subset of the input domain by placing a precondition on the input values.

4. To define the required/acceptable output, specify a mapping from inputs to outputs.
   Maybe a function; in general, a relation.
   It is formalized by giving a postcondition linking inputs and outputs.
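As a rough illustration, the four ingredients of a specification can be written down for the problem of finding the position of an integer x in a list l (P3 above). The sketch below uses Python; the names `precondition`, `postcondition` and `find_position` are assumptions made for this example and are not part of the course notation. Because x may occur more than once, several outputs can satisfy the postcondition, which is why the specification is a relation rather than a function.

```python
from typing import List

# Signature: inputs (l: list of integers, x: integer), output r: natural number.


def precondition(l: List[int], x: int) -> bool:
    """Allowed inputs: x must occur somewhere in l."""
    return x in l


def postcondition(l: List[int], x: int, r: int) -> bool:
    """Acceptable outputs: r is a valid index of l and l[r] equals x."""
    return 0 <= r < len(l) and l[r] == x


def find_position(l: List[int], x: int) -> int:
    """One possible solution: return the first index that holds x."""
    assert precondition(l, x), "input not allowed by the precondition"
    for r, value in enumerate(l):
        if value == x:
            return r
    raise AssertionError("unreachable when the precondition holds")
```

For l = [4, 7, 7, 1] and x = 7, both r = 1 and r = 2 satisfy `postcondition`; `find_position` happens to return the first of them, but a solution returning the second would meet the same specification.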

2.4 Example: Comparing Strings

Consider the following simple problem:

Determine whether two given character strings are identical.

If you need such an operation in a program you're writing, you might:

• Use a built-in operation (e.g. s.equal).
• Look for existing code, or a published algorithm.
• Design and code an algorithm from scratch.

Before doing any of these, you should make sure you know exactly what problem you're trying to solve, by defining the signature, precondition, and postcondition.

2.5 Understanding the Problem

Define the interface via a signature:

    input Strings s and t
    output Boolean r

or

    $\mathit{Equal} : \mathit{String} \times \mathit{String} \to \mathit{Bool}$

Formalise the output constraint:

    $r \Leftrightarrow s = t$

where $s = t$ is defined by

    $|s| = |t|$ and $(\forall i \in 0 \,..\, |s| - 1)\; s[i] = t[i]$

Notation:

    $s[i]$ is the ith element of s (starting from 0)
    $|s|$ is the length of s
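The definition of string identity can be read as an executable check. The following Python sketch is only an illustration of the postcondition, with indices running from 0 as stated in the notation; the function names `identical` and `equal_postcondition` are assumptions introduced here.

```python
def identical(s: str, t: str) -> bool:
    """s = t holds when the lengths agree and every position agrees."""
    # The length test comes first, so t[i] is only read when it exists.
    return len(s) == len(t) and all(s[i] == t[i] for i in range(len(s)))


def equal_postcondition(s: str, t: str, r: bool) -> bool:
    """Postcondition of Equal: r is true exactly when s and t are identical."""
    return r == identical(s, t)
```

A candidate implementation of Equal can then be checked against the specification by testing that `equal_postcondition(s, t, Equal(s, t))` holds on sample inputs.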

2.6 Designing an Algorithm

In designing an algorithm to determine whether two strings are identical, we

need to consider:

• What style of algorithm to write:

  Iterative:

      while ... do
          ...

  or recursive:

      Equal(s, t) =
          ...
          Equal(..., ...)
          ...

• Which condition to test first:

      if |s| = |t| then
          while ... do
              ...
      else
          ...

      for each index position i in s do
          if s[i] = t[i] then
              ...
          else
              ...
      ...

• What operations to use in accessing the strings.

  Indexing/length
      s[i]      Return ith element of s (starting from 0).
      |s|       Return length of s.

  Head/tail
      head(s)   Return first element of s.
      tail(s)   Return all but first element of s.
      empty(s)  Return true iff s is an empty string.

2.7 Defining the Algorithm Iteratively

Algorithm Equal;
input String s, String t;
output Boolean r where r ⇔ (s = t)

    r := true;
    if |s| = |t| then
        for k from 0 to |s| − 1 do
            if s[k] ≠ t[k] then
                r := false
    else
        r := false
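For comparison, here is a hedged transcription of the iterative algorithm into Python, using indexing and length as the access operations. The name `equal_iterative` is an assumption for this sketch; the structure follows the pseudocode line for line, including the fact that the loop does not stop early once a mismatch is found.

```python
def equal_iterative(s: str, t: str) -> bool:
    """Iterative string comparison using indexing and length."""
    r = True
    if len(s) == len(t):
        for k in range(len(s)):  # k runs from 0 to |s| - 1
            if s[k] != t[k]:
                r = False
    else:
        r = False
    return r
```

Returning as soon as a mismatch is seen would be a natural optimisation, but it is left out here to keep the sketch close to the algorithm as stated.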

2.8 Defining the Algorithm Recursively

Equal(s, t) =
    if empty(s) and empty(t) then true
    elsif empty(s) or empty(t) then false
    elsif head(s) ≠ head(t) then false
    else Equal(tail(s), tail(t))
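The recursive definition can be sketched in Python in the same way, this time using the head/tail operations. The helpers `empty`, `head` and `tail` below are assumed implementations on Python strings, written only to mirror the operations named in the notes.

```python
def empty(s: str) -> bool:
    """True iff s is the empty string."""
    return s == ""


def head(s: str) -> str:
    """First element of a non-empty string."""
    return s[0]


def tail(s: str) -> str:
    """All but the first element of a non-empty string."""
    return s[1:]


def equal_recursive(s: str, t: str) -> bool:
    """Recursive string comparison using head, tail and empty."""
    if empty(s) and empty(t):
        return True
    elif empty(s) or empty(t):
        return False
    elif head(s) != head(t):
        return False
    else:
        return equal_recursive(tail(s), tail(t))
```

Each call recurses on strictly shorter strings, so the recursion terminates. In CPython very long inputs may exceed the default recursion limit, which is a property of this sketch rather than of the algorithm.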

Chapter 3

Two Simple Programming Languages

3.1 Imperative and Applicative Languages

We will define two simple programming languages: one imperative, the other applicative.

The language of while-programs
• imperative: has assignments and explicit flow of control
• similar in style to Pascal, Ada, C++, Java
• corresponds to the iterative algorithm style

The language of applicative programs
• applicative: concerned with applying functions to arguments and evaluating expressions
• similar in style to Lisp, Scheme, and to functional languages such as Haskell (COMP304)
• corresponds to the recursive algorithm style

3.2 The Language of While-Programs

assignment statements x := e

x is a variable, e is an expression

We (usually) don't worry about declarations

no-operation statement skip

Does nothing at all: just like ; by itself in C++

sequence $S_1$ ; $S_2$

Note that ; is a separator (like Pascal, unlike C++)

selection if cond then $S_1$ else $S_2$

Usually no need for fi

Will omit else skip

Can chain conditions:

if $C_1$ then $S_1$ elsif $C_2$ then $S_2$ ... else $S_n$

iteration while cond do S

procedures: (a bit like static methods in Java)

procedure name(parameters);

begin

S

end

The heading names the program, and lists its inputs and outputs.

Can declare local variables (with types) after the heading: usually we won't bother.

The program can be invoked from other programs simply by naming it: if we have defined

procedure A(in x; out y); begin y := x end

we can then call

A(2, z)

with the same effect as z := 2.

3.3 Comparing strings again

procedure Equal(in s, t; out r);
begin
    if |s| = |t| then
        k := 0; r := true;
        while k < |s| do
            if s[k] ≠ t[k] then
                r := false;
            k := k + 1
    else
        r := false
end

3.4 The Applicative Language

Purely applicative (or functional) languages have no assignable variables.

They are more like mathematical functions.

Programming in this style may seem unfamiliar, but it is much easier to get right than imperative programming.

The basic constructs are function definition and function call. For example:

add(x, y) = x + y

double(x) = add(x, x)

Instead of a selection statement, we have a conditional expression:

if cond then $E_1$ else $E_2$

is an expression whose value is $E_1$ or $E_2$ according to the value of cond.

For example:

abs(x) =
    if x ≥ 0 then
        x
    else
        -x

Instead of a looping construct, we use recursion.

The definition of a function f can include calls on f itself (mathematically a no-no, but familiar from recursive procedures/methods in imperative programming).

For example:

mul(x, y) =
    if x < 0 then
        -mul(abs(x), y)
    elsif x = 0 then
        0
    else
        add(y, mul(x - 1, y))
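The applicative definitions can be mirrored in Python using only function definition, function call, conditional expressions and recursion. This is a sketch, not part of the course languages; `abs_` is named with a trailing underscore only to avoid shadowing Python's built-in `abs`, and for negative x the sketch negates the result of the recursive call so that the product keeps the right sign.

```python
def add(x: int, y: int) -> int:
    return x + y

def double(x: int) -> int:
    return add(x, x)

def abs_(x: int) -> int:
    # Conditional expression: the value is one branch or the other.
    return x if x >= 0 else -x

def mul(x: int, y: int) -> int:
    # Multiplication by repeated addition, recursing on x.
    if x < 0:
        return -mul(abs_(x), y)
    elif x == 0:
        return 0
    else:
        return add(y, mul(x - 1, y))
```

No variable is ever reassigned: each call simply evaluates an expression, which is the point of the applicative style.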

3.5 Those strings again ...

Equal(s, t) =
    if |s| = |t| then Equal'(s, t, 0) else false

Equal'(s, t, k) =
    if k ≥ |s| then true
    elsif s[k] ≠ t[k] then false
    else Equal'(s, t, k + 1)

3.6 Using head and tail

Equal(s, t) =
    if isempty(s) and isempty(t) then true
    elsif isempty(s) or isempty(t) then false
    elsif head(s) ≠ head(t) then false
    else Equal(tail(s), tail(t))

Chapter 4

Reasoning about Programs

4.1 Some Questions about Languages

1. Is program P a valid program in language L?

2. How many valid sentences are there in language L?

3. What sentences are in the intersection of languages $L_1$ and $L_2$?

4. How hard is the decision question for language L?

5. What does program P mean?

6. Does program P terminate when given input x?

7. What output does it produce?

8. Do programs P and Q do the same thing?

9. Is there a program in language $L_1$ that does the same thing as program P in language $L_2$?

10. Are languages $L_1$ and $L_2$ equally expressive?

4.2 Syntax

The first four questions are about syntax.

They concern the form of sentences in a language, not the meanings.

In Parts I and II (weeks 3–10) of the course, we will look at issues of syntax:

What is a language?

How can we define the set of all valid sentences?

How can we (mechanically) decide whether a sentence is valid in a language?

What relationships are there between different models of languages?

4.3 Semantics

Semantics tells us what a (syntactically valid) program means: what will happen if it is run with any given input.

Defining semantics is harder than defining syntax: we need a suitable (mathematical) model of program execution.

Such a model may be operational, denotational, or axiomatic:

an operational model defines some machine and a procedure for translating programs into instructions for that machine (Part III of the course)

a denotational model defines mathematical functions that directly capture the behaviour of the program (COMP 304)

an axiomatic model provides rules for reasoning about the specifications that a program satisfies.

We will develop a simple axiomatic model for while-programs.

4.4 Comparing Strings One More Time

procedure Equal(in s, t; out r);
begin
0      if |s| = |t| then
1          k := 0;
2          r := true;
3          while k < |s| do
4              if s[k] ≠ t[k] then
5                  r := false;
7              k := k + 1
8      else
9          r := false
10 end

How can we convince ourselves that it is correct?

Testing.

Mathematical reasoning.

We can reason about different input cases (case analysis) and the corresponding execution sequences:

If $|s| \neq |t|$, the test at line 0 fails, and the program sets r to false (line 9), which is the correct output in this case.

If $|s| = |t|$, the test succeeds, and the program sets r to true (line 2), then goes into the loop (lines 3–7).

Now this kind of reasoning breaks down, because we don't know how many times the program will go round the loop. We have to reason in a way that is independent of the number of iterations performed.

This means we need induction.

We need to identify some property that:

holds every time execution reaches the top of the loop (line 3)

guarantees the required result when the loop exits (after line 7).

When execution reaches the top of the loop, we know:

$|s| = |t|$

$0 \le k \le |s|$

r = true iff $s[0 \,..\, k-1] = t[0 \,..\, k-1]$

i.e. $r \Leftrightarrow (\forall i \in 0 \,..\, k-1)\; s[i] = t[i]$

This is called a loop invariant.

We need to show that:

1. The loop invariant holds on entry to the loop.

2. If the loop invariant holds at the top of the loop, and the loop body is

executed, it will hold again at the top of the loop.

3. If the loop invariant holds at the top of the loop, and the loop exits, the

required property (the postcondition) holds afterwards.

Together, these constitute a proof by induction that the loop does what is

required.

1. Invariant holds on entry:

$|s| = |t|$ by the if condition.

$0 \le k \le |s|$, since $k = 0$ (line 1) and $0 \le |s|$ by definition.

$k = 0$ means that $(\forall i \in 0 \,..\, k-1)\; s[i] = t[i]$ is trivially true; and r = true.

2. Invariant is maintained by the loop:

$|s| = |t|$ is unchanged, because s and t don't change.

$0 \le k \le |s|$ is true initially (induction hypothesis), and $k < |s|$ (loop condition), so $0 \le k \le |s| - 1$.

$r \Leftrightarrow s[0 \,..\, k-1] = t[0 \,..\, k-1]$ initially (induction hypothesis).

Now, if $s[k] = t[k]$ (line 4), r remains unchanged, and $s[0..k] = t[0..k]$ iff $s[0..k-1] = t[0..k-1]$.

On the other hand, if $s[k] \neq t[k]$, r becomes false (line 5), and so does $s[0..k] = t[0..k]$.

In either case, $0 \le k \le |s| - 1 \;\wedge\; (r \Leftrightarrow s[0..k] = t[0..k])$.

Now, the next iteration of the loop will increment k, so whatever is true of k now will be true of $k - 1$ at the start of the next iteration.

So the loop invariant holds again, as required.

3. Postcondition holds at loop exit:

When $|s| = |t|$, $0 \le k \le |s|$, $r \Leftrightarrow (s[0 \,..\, k-1] = t[0 \,..\, k-1])$, and $k \not< |s|$ all hold, $k = |s|$, so $r \Leftrightarrow s[0 \,..\, |s|-1] = t[0 \,..\, |s|-1]$.

But $s[0 \,..\, |s|-1] = s$ and $t[0 \,..\, |s|-1] = t$ (remember $|s| = |t|$), hence $r \Leftrightarrow s = t$.

Thus, the program will set r to true if $s = t$, and to false if $s \neq t$.
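A proof is stronger than a test, but the invariant can also be checked mechanically while the program runs. The sketch below is a Python transcription of the procedure with the three parts of the loop invariant and the postcondition written as `assert` statements; the function name `equal_checked` and the use of Python slices `s[:k]` for $s[0 \,..\, k-1]$ are assumptions made for illustration.

```python
def equal_checked(s: str, t: str) -> bool:
    if len(s) == len(t):
        k = 0
        r = True
        while True:
            # Loop invariant, checked every time we reach the top of the loop.
            assert len(s) == len(t)
            assert 0 <= k <= len(s)
            assert r == (s[:k] == t[:k])
            if not (k < len(s)):
                break                 # loop condition fails: exit
            if s[k] != t[k]:
                r = False
            k = k + 1
    else:
        r = False
    # Postcondition: r is true exactly when s = t.
    assert r == (s == t)
    return r
```

If any assertion failed on some input, that input would be a counterexample to the corresponding proof step.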

4.5 Laws for Program Verification

In verifying the above program, we used several kinds of knowledge and reasoning:

Standard laws of mathematics/logic.

Properties of operations used in the specification and program.

E.g. indexing, string length, string equality.

Laws for reasoning about program execution: assignments and control structures.

Let us now make our use of the laws about execution a little more precise.

4.6 Reasoning with Assertions

Our reasoning was based on intuition about what must be true at particular

points in the execution.

For example, before line 3 we knew that $|s| = |t|$, because we were inside the then part of the if statement at line 0.

We also knew that k = 0 and r = true because of lines 1 and 2.

We can formalize that intuition by annotating the lines of the program with assertions: logical statements that are known to be true immediately before the line is executed.

Verification then proceeds by showing that assertions are indeed satisfied.

procedure Equal(in s, t; out r);
begin
{I0}       if |s| = |t| then
{I1}           k := 0;
{I2}           r := true;
{I3}           while k < |s| do
{I4}               if s[k] ≠ t[k] then
{I5}                   r := false
{I6}               else skip;
{I7}               k := k + 1
{I8}       else
{I9}           r := false
{I10} end

$I_0$ is just the precondition: in this case, $I_0 = \text{true}$.

$I_1$ is true when we are inside the then part of the if.

A law for if tells us $I_1 = I_0 \wedge |s| = |t|$; that is, $I_1 = (|s| = |t|)$.

Law for if: $\{P\}\ \textbf{if}\ C\ \textbf{then}\ \{P \wedge C\}\ S_1\ \textbf{else}\ \{P \wedge \neg C\}\ S_2$

$I_3$ is the loop invariant. There is no easy way to find a loop invariant: the only way is to understand how the loop works. In this case, we know that $0 \le k \le |s|$, and that the purpose of the loop is to decide whether s = t up to but not including element k. We also know that $|s| = |t|$, from $I_1$. Hence:

$I_3 = 0 \le k \le |s| \;\wedge\; (r \Leftrightarrow s[0 \,..\, k-1] = t[0 \,..\, k-1]) \;\wedge\; |s| = |t|$.

We must show that the loop invariant holds initially; that is, $\{I_1\}\ k := 0;\ \{I_2\}\ r := \text{true}\ \{I_3\}$ is correctly annotated.

This requires reasoning about a sequence (;) of assignment statements (:=).

Law for ;: from $\{P\}\ S_1\ \{Q\}$ and $\{Q\}\ S_2\ \{R\}$, conclude $\{P\}\ S_1 ; S_2\ \{R\}$.

Our $S_1$ is k := 0 and our $S_2$ is r := true.

Our P, Q, and R are respectively $I_1$, $I_2$, and $I_3$.

We must find $I_2$ such that $\{I_1\}\ k := 0\ \{I_2\}$ and $\{I_2\}\ r := \text{true}\ \{I_3\}$.

Law for :=: $\{Q[e/x]\}\ x := e\ \{Q\}$

For $\{I_2\}\ r := \text{true}\ \{I_3\}$, we need $I_2$ to be $I_3[\text{true}/r]$: that is, $I_2 = 0 \le k \le |s| \;\wedge\; (\text{true} \Leftrightarrow s[0 \,..\, k-1] = t[0 \,..\, k-1]) \;\wedge\; |s| = |t|$.

Now, $\{I_1\}\ k := 0\ \{I_2\}$ follows as long as $I_1$ is $I_2[0/k]$: indeed, $0 \le 0 \le |s| \;\wedge\; (\text{true} \Leftrightarrow s[0 \,..\, 0-1] = t[0 \,..\, 0-1]) \;\wedge\; |s| = |t|$ is the same as $I_1$ by simplification.

Immediately inside the loop, the loop invariant ($I_3$) still holds, and $k \le |s| - 1$, by the loop condition. (We are using a Law for while here, but let's skip the formality.)

Hence:

$I_4 = (r \Leftrightarrow s[0 \,..\, k-1] = t[0 \,..\, k-1]) \;\wedge\; |s| = |t| \;\wedge\; k \le |s| - 1$.

The if law tells us that $s[k] \neq t[k]$ at $I_5$, so:

$I_5 = I_4 \wedge s[k] \neq t[k]$.

The assignment law justifies adding r = false (check it!):

$I_6 = I_5 \wedge r = \text{false}$.

Before line 7, either the then part was taken, in which case $I_6$ holds; or, it was not taken, in which case $I_4$ still holds (skip law). So:

$I_7 = (I_6) \vee (s[k] = t[k] \wedge I_4)$.

After line 7, we require $I_3$ to hold again, to maintain the loop invariant.

Hence, we must prove: $\{I_7\}\ k := k + 1\ \{I_3\}$.

The assignment law will justify this (check it).

After the loop exits, we know that the loop invariant $I_3$ still holds, and that $k = |s|$. Thus:

$I_8 = 0 \le |s| \le |s| \;\wedge\; (r \Leftrightarrow s[0 \,..\, |s|-1] = t[0 \,..\, |s|-1]) \;\wedge\; |s| = |t|$.

In the else branch of the main if, we get:

$I_9 = (|s| \neq |t|)$.

In that case, we assign r := false.

Finally, at end the two branches of the outer if come together:

$I_{10} = (I_8) \vee (I_9 \wedge r = \text{false})$

which implies $r \Leftrightarrow s = t$ (exercise).

Part I

Regular Languages


Chapter 5

Preliminaries

5.1 Part I: Regular Languages

In this part of COMP 202 we will begin to look at formal languages, and at how they can be defined.

In this lecture we will introduce some of the basic notions required. In subsequent lectures we will look at:

defining languages using regular expressions

defining languages using finite automata

We will also explore the relationship between these ways of defining languages.

5.2 Formal languages

When we say "formal" we mean that we are concerned simply with the form (or the syntax) of the language.

We are not concerned with the meaning (or semantics) of the symbols.

The theory of syntax is very much more straightforward and better understood than the theory of semantics.

We are not concerned with trying to understand natural languages like English or Māori, nor even with the question of whether the tools we develop to deal with formal languages are appropriate for dealing with natural languages.

5.3 Alphabet

Definition 1 (Alphabet) An alphabet is a finite set of symbols.

Example: Alphabets

1. {1, 0}

2. {true, false, pencil, &&&&, ???}

3. {), k, , , f}

4. {enter, leave, left-down, right-down, left-up, right-up}

In the textbook, Cohen separates the elements of a set with space rather

than a comma.

The symbols themselves are meaningless, so we may as well pick a convenient alphabet: Cohen uses {a b} in his examples.

Conventionally we use $\Sigma$ and (occasionally) $\Gamma$ as names for alphabets.

5.4 String

Definition 2 (String) A string over an alphabet is a finite sequence of symbols from that alphabet.

Example: Some strings

Examples: if we have an alphabet $\Sigma$ = {), k, , , f} then

1. )

2. k

3. kkf

4.

5. k))k)f

6. k)

are strings over $\Sigma$.

String number 4 is the empty string. We need a better notation for the empty string than simply not writing anything, so we use a meta-symbol for it.

Different authors use different meta-symbols, the most common ones being $\epsilon$, $\lambda$, and $\Lambda$. Cohen chooses $\Lambda$, so we might as well, too.

Note that $\Lambda$ is a string over any alphabet, but $\Lambda$ is not a symbol in the alphabet.

5.5 Language, Word

Definition 3 (Language) A language is a set of strings.

Definition 4 (Word) A word is a string in a language.

Much of the rest of this course is devoted to investigating the related problems of:

how we can define languages, and

how we can show whether or not a given string is a word in a language.

5.6 Operations on Strings and Languages

Definition 5 (Concatenation) If s and t are strings then the concatenation of s and t, written st, is a string.

st consists of the symbols of s followed immediately by the symbols of t.

We freely allow ourselves to concatenate single symbols onto strings.

We can concatenate two languages. If $L_1$ and $L_2$ are languages then:

$L_1 L_2 = \{st \mid s \in L_1, t \in L_2\}$

Definition 6 (Length of a string) The length of a string is the number of occurrences of symbols in it.

If s is a string we write $|s|$ for the length of s.

We can give a recursive algorithm for $|\cdot|$:

$|\Lambda| = 0$

if x is a single symbol and y a string, then $|xy| = 1 + |y|$
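The two clauses of the recursive definition of length map directly onto a two-branch function. This is a sketch in Python in which the empty string is written `""`; the name `length` is chosen here for illustration.

```python
def length(s: str) -> int:
    """Length of s, following the recursive definition clause by clause."""
    if s == "":
        return 0                 # the empty string has length 0
    x, y = s[0], s[1:]           # s = xy with x a single symbol
    return 1 + length(y)         # |xy| = 1 + |y|
```

For example, `length("kkf")` unfolds as 1 + (1 + (1 + 0)).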

Definition 7 (Kleene closure (Kleene *)) If S is a set then $S^*$ is the set consisting of all the sequences of elements of S.

Note: $\Lambda$ is in $S^*$.

We can define $S^*$ inductively:

$\Lambda \in S^*$

if $x \in S$ and $y \in S^*$ then $xy \in S^*$

If $\Sigma$ is an alphabet then $\Sigma^*$ is a language over $\Sigma$.

If L is a language then $L^*$ is a language.

Example: Kleene closure

if $\Sigma = \{1, 0\}$ then $\Sigma^* = \{\Lambda, 1, 0, 11, 10, 01, 00, \ldots\}$

if L = kk, k then L

= , kk, k, kkkk, kkk, kkk,

kk, . . .

Question: what is (

?
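The Kleene closure of a non-trivial set is infinite, so a program can only list part of it. The sketch below follows the inductive definition (start from the empty string, then repeatedly put an element of S in front of a string already found) and stops at a length bound. It is written in Python; the name `kleene_upto` and the `max_len` parameter are assumptions made for illustration, and S is assumed to be a finite set of Python strings.

```python
def kleene_upto(S, max_len):
    """All strings of S* whose length is at most max_len."""
    result = {""}                # base clause: the empty string is in S*
    frontier = {""}              # strings found in the previous round
    while frontier:
        new = set()
        for y in frontier:
            for x in S:
                w = x + y        # inductive clause: x in S, y in S*
                if len(w) <= max_len and w not in result:
                    new.add(w)
        result |= new
        frontier = new
    return result
```

With S = {"0", "1"} and a bound of 2 this yields the empty string together with 0, 1, 00, 01, 10 and 11.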

Definition 8 (Kleene +) If S is a set then $S^+$ is the set consisting of all the non-empty sequences of elements of S.

Note: $\Lambda$ is not in $S^+$, unless it was in S.

We can define $S^+$ inductively:

if $x \in S$ then $x \in S^+$

if $x \in S$ and $y \in S^+$ then $xy \in S^+$

We can also define $S^+$ as $SS^*$.

5.7 A proof

Throughout this course we will perform proofs by induction, so we begin here

with a simple one.

When we say that a string is a sequence of symbols over an alphabet we

mean that a string is either:

the empty string $\Lambda$, or

a symbol from $\Sigma$ followed by a string over $\Sigma$

So, if we want to prove properties of strings over some alphabet $\Sigma$ we can proceed as follows:

prove the property holds of the empty string $\Lambda$, and

prove the property holds of a string xy where x is an arbitrary element of $\Sigma$ and y an arbitrary string over $\Sigma$, assuming the property holds of y.

More formally, in order to show P(s) for an arbitrary string s over an alphabet $\Sigma$, show:

Base case $P(\Lambda)$

Induction step P(xy), given P(y), where x is an arbitrary element of $\Sigma$, y an arbitrary string over $\Sigma$

This sort of pattern of inductive proof occurs in very many situations in the

mathematics required for computer science.

5.8 Statement of the conjecture

The conjecture that we will prove by induction is:

Conjecture 1 $|st| = |s| + |t|$

In English: the length of s concatenated with t is the sum of the lengths of s and t.

We proceed by induction on s, where P(s) is $|st| = |s| + |t|$.

5.9 Base case

$P(\Lambda)$ is $|\Lambda t| = |\Lambda| + |t|$.

We show that the LHS and the RHS of this equality are just the same.

On the LHS we use the fact that $\Lambda t = t$, to give us $|t|$.

On the RHS we observe that $|\Lambda| = 0$ and $0 + n = n$ to give us $|t|$.

Thus LHS = RHS, and the base case is established.

5.10 Induction step

P(xy) is $|(xy)t| = |xy| + |t|$.

We must show $|(xy)t| = |xy| + |t|$, given $|yt| = |y| + |t|$.

On the LHS we use the associativity of concatenation to observe that $(xy)t = x(yt)$.

Next we use the definition of $|\cdot|$ to see that the LHS is $1 + |yt|$.

The RHS is $|xy| + |t|$. We use the definition of $|\cdot|$ to see that the RHS is $1 + |y| + |t|$.

Now we use the induction hypothesis $|yt| = |y| + |t|$ to show that the RHS is $1 + |yt|$.

So we have shown that LHS = RHS in the induction step.
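The induction step can also be laid out as one chain of equalities, running from the LHS to the RHS, with the justification for each step alongside. Writing the empty string as $\Lambda$ is the only notational assumption.

```latex
\begin{align*}
|(xy)t| &= |x(yt)|          && \text{associativity of concatenation} \\
        &= 1 + |yt|         && \text{definition of } |\cdot| \\
        &= 1 + (|y| + |t|)  && \text{induction hypothesis} \\
        &= (1 + |y|) + |t|  && \text{associativity of } + \\
        &= |xy| + |t|       && \text{definition of } |\cdot|
\end{align*}
```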

5.11 A theorem

We have established both the base case and the induction step, so we have completed the proof.

Now our conjecture can be upgraded to a theorem:

Theorem 1 $|st| = |s| + |t|$

Chapter 6

Defining languages using regular expressions

6.1 Regular expressions

In this lecture we will see how we can define a language using regular expressions.

The first step is to give an inductive definition of what a regular expression is.

Before we define regular expressions we will give a similar definition for expressions of arithmetic, to illustrate the method used.

6.2 Arithmetic expressions

We will now give a formal inductive description of the expressions we have in

arithmetic.

if $n \in \mathbb{N}$ then n is an arithmetic expression;

if $e_1$ and $e_2$ are expressions then so are

$(-e_1)$

$(e_1 + e_2)$,

$(e_1 - e_2)$,

$(e_1 \times e_2)$,

$(e_1 / e_2)$,

$(e_1^{e_2})$ (we could have chosen to use a symbol, such as ^, rather than just use layout)

This definition forces us to include lots of brackets:

((1+2)+3)

(1+(2+3))

are expressions, but

1+(2+3)

1+2+3

are not.

6.3 Simplifying conventions

We do not need to use all these brackets if we adopt some simplifying conven-

tions.

First we allow ourselves to drop the outermost brackets, so we can write:

(1+2)+3

rather than:

((1+2)+3)

We also adopt some conventions about the precedence and associativity of

the operators.

6.4 Operator precedence

+ and − are of lowest precedence

× and / are next

^ is highest

So we can write:

$2 + 3 \times 4^5$

rather than:

$2 + (3 \times (4^5))$

6.5 Operator associativity

We also adopt a convention that all the operators are left associative. This means that if we have a sequence of operators of the same precedence we fill in the brackets to the left.

So we can write:

1 + 2 + 3

rather than:

(1 + 2) + 3

We can have right associative operators, or non-associative operators.


6.6 Care!

Be careful not to confuse the two statements:

1. # is a left associative operator

2. # is an associative operation

1 means that we can write x#y#z instead of (x#y)#z.

2 means that $(\forall x, y, z)\; (x\#y)\#z = x\#(y\#z)$.

In the examples above + and / are both left associative. However, addition

is associative, but division is not:

60/6/2 = 5

60/(6/2) = 20
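The distinction can be checked by evaluating both bracketings. This is a small sketch in Python, whose integer-division operator `//` happens to be left associative like the convention above; Python's exponentiation operator `**` is included as an example of a right associative operator. The variable names are chosen for illustration.

```python
# Division is left associative as an operator, but not associative
# as an operation: the two bracketings give different values.
left_grouped = (60 // 6) // 2      # how 60/6/2 is read
right_grouped = 60 // (6 // 2)
unbracketed = 60 // 6 // 2         # Python fills in brackets to the left

# Addition is associative: the bracketing makes no difference.
sum_left = (1 + 2) + 3
sum_right = 1 + (2 + 3)

# Python's ** is right associative: 2 ** 3 ** 2 means 2 ** (3 ** 2).
power_unbracketed = 2 ** 3 ** 2
power_right = 2 ** (3 ** 2)
power_left = (2 ** 3) ** 2
```

Here `unbracketed` agrees with `left_grouped`, and `power_unbracketed` agrees with `power_right` rather than `power_left`.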

6.7 Defining regular expressions

If $\Sigma$ is an alphabet then:

the empty language $\emptyset$ and the empty string $\Lambda$ are regular expressions

if $r \in \Sigma$ then r is a regular expression

if r is a regular expression then so is $(r^*)$

if r and s are regular expressions then so are

(r + s)

(rs)

6.8 Simplifying conventions

We allow ourselves to dispense with outermost brackets.

$^*$ has the highest precedence and + the lowest.

We take all operators to be left-associative, so we write:

r + s + t instead of (r + s) + t

rst instead of (rs)t

6.9 What the regular expressions describe

So far we have said what the form of a regular expression is, without explaining

what it describes. This is as if we had explained the form of the arithmetic

expressions without explaining what the operations of addition, subtraction and

so on were.

The regular expressions describe sets of strings over $\Sigma$, that is languages, so we must explain what languages the regular expressions describe.

We write Language(r) for the language described by regular expression r.

When we are being sloppy we use r for both the regular expression and the language it describes.

Language($\emptyset$)

The language described by $\emptyset$ is the empty language $\emptyset$.

Language($\Lambda$)

The language described by $\Lambda$ is $\{\Lambda\}$.

Note $\{\Lambda\} \neq \emptyset$

Language(r), $r \in \Sigma$

The language described by r, $r \in \Sigma$, is $\{r\}$

Example:

If $\Sigma = \{0, 1\}$ then:

Language(0) = {0}

Language(1) = {1}

Language($r^*$)

The language described by $r^*$ is the Kleene closure of the language described by r.

Example:

If $\Sigma = \{0, 1\}$ then:

Language($0^*$) = $\{\Lambda, 0, 00, 000, \ldots\}$

Language($1^*$) = $\{\Lambda, 1, 11, 111, \ldots\}$

Language(r + s)

The language described by r + s is the union of the languages described by r and s.

Example:

If $\Sigma = \{0, 1\}$ then:

Language(1 + 0) = {1, 0}

Language($1^* + 0^*$) = $\{\Lambda, 0, 1, 00, 11, 000, 111, \ldots\}$

Language(rs)

The language described by rs is the concatenation of the languages described by r and s.

Example:

If $\Sigma = \{0, 1\}$ then:

Language(10) = {10}

Language((1 + 0)(1 + 0)) = {11, 10, 01, 00}

Language($(1 + 0)0^*$) = $\{1, 0, 10, 00, 100, 000, 1000, \ldots\}$

6.10 Derived forms

Sometimes we use these derived forms:

$r^+ =_{\text{def}} rr^*$

$r^n =_{\text{def}} rr \ldots rr$ (n r's)

Chapter 7

Regular languages

7.1 Regular Languages

In the last lecture we introduced the regular expressions and the operations that

the regular expressions correspond to.

The regular expressions allow us to describe languages. A language which

can be described by a regular expression is called a regular language.

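If $\Sigma$ is an alphabet, $r \in \Sigma$ is a symbol, and r and s are regular expressions, then:

Language($\emptyset$) is $\emptyset$

Language($\Lambda$) is $\{\Lambda\}$

Language(r), $r \in \Sigma$ is $\{r\}$

Language($r^*$) is $(\text{Language}(r))^*$

Language(r + s) is Language(r) $\cup$ Language(s)

Language(rs) is Language(r)Language(s)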
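These six clauses amount to a recursive function from regular expressions to languages. The sketch below computes, in Python, the words of Language(r) up to a length bound (the full language is usually infinite). The representation of regular expressions as nested tuples such as `("alt", r, s)`, the tag names, and the `max_len` parameter are all assumptions made for illustration; the empty string is written `""`.

```python
def language(r, max_len):
    """Words of Language(r) of length at most max_len."""
    kind = r[0]
    if kind == "empty":                      # the empty language
        return set()
    if kind == "lambda":                     # the empty string
        return {""}
    if kind == "sym":                        # a single symbol of the alphabet
        return {r[1]} if max_len >= 1 else set()
    if kind == "alt":                        # r + s: union
        return language(r[1], max_len) | language(r[2], max_len)
    if kind == "cat":                        # rs: concatenation
        left, right = language(r[1], max_len), language(r[2], max_len)
        return {u + v for u in left for v in right if len(u + v) <= max_len}
    if kind == "star":                       # r*: Kleene closure
        base = language(r[1], max_len)
        result, frontier = {""}, {""}
        while frontier:
            frontier = {u + v for u in base for v in frontier
                        if len(u + v) <= max_len} - result
            result |= frontier
        return result
    raise ValueError(f"unknown regular expression tag: {kind!r}")

zero, one = ("sym", "0"), ("sym", "1")
one_or_zero_then_zeros = ("cat", ("alt", one, zero), ("star", zero))
```

For instance, `language(one_or_zero_then_zeros, 3)` gives the six words 1, 0, 10, 00, 100 and 000.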

7.2 Example languages

In this lecture we will look at the languages which we can describe with regular expressions. We will usually restrict attention to $\Sigma = \{0, 1\}$.

Example: Language(0 + 1)

Language(0 + 1)

= Language(0) $\cup$ Language(1)

= $\{0\} \cup \{1\}$

= $\{0, 1\}$

Example: Language(1 + 0)

Language(1 + 0)

= Language(1) $\cup$ Language(0)

= $\{1\} \cup \{0\}$

= $\{1, 0\}$

We see that 1 + 0 and 0 + 1 describe the same language.

Two regular expressions which describe the same language are equal regular expressions, so we can write:

1 + 0 = 0 + 1

We can be more general, however. For all regular expressions, r, s, we have:

r + s = s + r

because for all sets $S_1$, $S_2$ we have $S_1 \cup S_2 = S_2 \cup S_1$.

The difference between 1 + 0 = 0 + 1 and r + s = s + r is just the same as the difference between 3 + 2 = 2 + 3 and x + y = y + x.

You would not infer $x + x = x \times x$ from $2 + 2 = 2 \times 2$ in ordinary arithmetic.

Example: Language($(0 + 1)^*$)

Language($(0 + 1)^*$)

= $(\text{Language}(0 + 1))^*$

= $(\text{Language}(0) \cup \text{Language}(1))^*$

= $(\{0\} \cup \{1\})^*$

= $\{0, 1\}^*$

= $\{\Lambda, 0, 1, 00, 01, 10, 11, \ldots\}$

Example: Language($(0^*1^*)^*$)

Language($(0^*1^*)^*$)

= $(\text{Language}(0^*1^*))^*$

= $(\text{Language}(0^*)\,\text{Language}(1^*))^*$

= $(\{c_1 \ldots c_n \mid c_i \in \{0\}, n \in \mathbb{N}\}\,\{\Lambda, 1, 11, \ldots\})^*$

= $\{\Lambda, 1, 11, \ldots, 0, 01, 011, \ldots\}^*$

= $\{\Lambda, 0, 1, 00, 01, 10, 11, \ldots\}$

The reasoning above shows that: $(0 + 1)^* = (0^*1^*)^*$

How would you generalise the reasoning given above to show for all regular expressions, r, s:

$(r + s)^* = (r^*s^*)^*$?

7.3 Finite languages are regular

A finite language is one which has a finite number of words.

Theorem 2 Every finite language is a regular language.

Proof

A language is regular if it can be described by a regular expression.

A finite language can be written as: $\{w_1, \ldots, w_n\}$ for some $n \in \mathbb{N}$. This means we can write it as: $\{w_1\} \cup \ldots \cup \{w_n\}$.

Note: Recall that if n = 0 in the above then we are dealing with the empty language, which is certainly regular.

If we can represent each of the $\{w_i\}$ as a regular expression $w_i$, then the language is described by $w_1 + \ldots + w_n$.

Each word is just a string of symbols $s_1 \ldots s_m$ for some $m \in \mathbb{N}$. So each word is the only word in the language described by the regular expression $s_1 \ldots s_m$.

Note: Recall that if m = 0 in the above then we are dealing with the empty string, which is certainly a regular expression.

Hence, every finite language can be described by a regular expression.

Example: Finite languages

{1, 11, 111, 1111} is Language(1 + 11 + 111 + 1111)

{00, 01, 10, 11} is Language(00 + 01 + 10 + 11)

Notice that {00, 01, 10, 11} is also Language((1 + 0)(1 + 0)), but to show that the language is regular we only need to provide one regular expression describing it.

7.4 An algorithm

The proof that every finite language is regular provides us with an algorithm which takes a finite language and returns a regular expression which describes the language.

Many of the proofs that we see in this course have an algorithmic character.

Such algorithmic proofs are often called constructive, and provide the basis for

a program.

Conversely every program is a constructive proof (even if we are usually not

interested in what it is a proof of).
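The algorithm hidden in the proof of Theorem 2 can be sketched using Python's standard `re` module. Note two differences of notation that are Python's, not the notes': alternation is written `|` rather than +, and `re.escape` is used so that every symbol is taken literally. The function name `finite_language_to_regex` and the use of the pattern `(?!)` (an empty negative lookahead, which never matches) for the empty language are assumptions made for this sketch.

```python
import re

def finite_language_to_regex(words):
    """Build a Python regular expression matching exactly the given words."""
    if not words:
        return r"(?!)"           # n = 0: a pattern that matches nothing
    # One alternative per word; each word is the concatenation of its symbols.
    return "|".join(re.escape(w) for w in sorted(words))

def describes(pattern, word):
    """True when the whole of word is matched by pattern."""
    return re.fullmatch(pattern, word) is not None

pattern = finite_language_to_regex({"1", "11", "111", "1111"})
```

Here `describes(pattern, "111")` holds while `describes(pattern, "11111")` does not.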

7.5 EVEN-EVEN

We now describe a language which Cohen introduces, which he calls EVEN-EVEN.

EVEN-EVEN = Language($(00 + 11 + (01 + 10)(00 + 11)^*(01 + 10))^*$)

It may not be immediately obvious what language this is (or rather, if there is a simple description of this language).

However every word of EVEN-EVEN contains an even number of 0s and an even number of 1s.

Furthermore EVEN-EVEN contains every string with an even number of 0s and an even number of 1s.
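Both claims can be tested exhaustively on short strings. The sketch below writes the EVEN-EVEN expression in the syntax of Python's `re` module (with `|` in place of +) and compares it against a direct count of 0s and 1s for every binary string up to a chosen length. The function names are assumptions made for illustration, and agreement on short strings is evidence, not a proof.

```python
import re
from itertools import product

# (00 + 11 + (01 + 10)(00 + 11)*(01 + 10))* in Python regex syntax.
EVEN_EVEN = re.compile(r"(00|11|(01|10)(00|11)*(01|10))*")

def in_even_even_by_regex(w: str) -> bool:
    return EVEN_EVEN.fullmatch(w) is not None

def in_even_even_by_counting(w: str) -> bool:
    return w.count("0") % 2 == 0 and w.count("1") % 2 == 0

def agree_up_to(n: int) -> bool:
    """Do the two tests agree on every binary string of length <= n?"""
    return all(
        in_even_even_by_regex("".join(p)) == in_even_even_by_counting("".join(p))
        for length in range(n + 1)
        for p in product("01", repeat=length)
    )
```

Calling `agree_up_to(8)` checks all 511 binary strings of length at most 8.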

7.6 Deciding membership of EVEN-EVEN

Suppose we are given the task of writing a program to decide whether a given

string is in EVEN-EVEN. How would we go about this task?

One method would be to use two counters, $n_0$ and $n_1$, and to go through the string counting all the 0s and all the 1s. If $n_0$ and $n_1$ are both even at the end of the string then the string is in EVEN-EVEN.

If we call the pair $\langle n_0, n_1 \rangle$ a state of the program then our program will need to be able to go through as many states as there are symbols in a string to decide membership.

Is there a program which uses fewer states?

7.7 Using fewer states

We don't actually care how many 0s and 1s there are, so we could use two boolean flags $b_0$ and $b_1$. As we go through the string we flip the appropriate flag as we read each symbol. If both the flags end up in the same state that they started in then the string is in EVEN-EVEN.

If we call the pair $\langle b_0, b_1 \rangle$ a state of the program then our program will need to be able to go through at most four distinct states to decide membership.

7.8 Using even fewer states

Suppose, instead of reading the symbols one by one, we read them two by two.

Now we need only use one boolean flag, and we do not have to flip it all the time.

If both the symbols we read are the same then we leave the flag alone. If they differ then we flip the flag. Two different symbols means we have just read an odd number of 0s and an odd number of 1s. If we had read an even number before we have now read an odd number; if we had read an odd number before we have now read an even number.

If the flag ends up in the same state it began in then the string is in EVEN-EVEN.

The program need go through at most two distinct states to decide membership.
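The two-symbols-at-a-time idea can be sketched in Python as follows. The function name `in_even_even_pairs` is an assumption, as is the treatment of strings of odd length, which the description above does not cover: such a string cannot have an even number of both symbols, so the sketch rejects it outright. The input is assumed to contain only the symbols 0 and 1.

```python
def in_even_even_pairs(w: str) -> bool:
    """Decide membership of EVEN-EVEN by reading symbols two by two."""
    if len(w) % 2 != 0:
        return False             # assumption: odd-length strings are rejected
    flag = False                 # False: both counts so far are even
    for i in range(0, len(w), 2):
        first, second = w[i], w[i + 1]
        if first != second:
            flag = not flag      # one more 0 and one more 1: both parities flip
        # equal symbols add two of the same kind: parities unchanged
    return not flag              # accept if the flag is back where it began
```

Only the single boolean `flag` is carried from one pair to the next, which is the sense in which two states suffice.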

7.9 Uses of regular expressions

Regular expressions turn out to have practical uses in a variety of places.

We can informally think of a regular expression as describing the most typical string in a language.

The tokens of a programming language can usually be described by regular expressions.

For example, a type name might be an uppercase letter followed by a sequence of uppercase letters, lowercase letters or underscores, and so on.

The task of identifying the tokens in a program is called lexical analysis, and is the first step in compiling the program. The UNIX utility lex is a lexical-analyser generator: given regular expressions describing the tokens of a language it generates a program which will perform lexical analysis.

Since regular expressions describe typical strings they are the basis for many searching and matching utilities.

The UNIX utility grep allows the user to specify a regular expression to search for in a file.

Many text editors provide a facility similar to grep for searching for strings.

Programming languages which are oriented towards text processing usually allow us to describe strings using regular expressions.

Chapter 8

Finite automata

8.1 Finite Automata

We leave regular expressions and introduce finite automata.

Finite automata provide us with another way to describe languages.

Note: Cohen uses the term finite automaton (FA) where many other authors use the term deterministic finite automaton (DFA). After we have introduced deterministic finite automata we will introduce non-deterministic finite automata. In common with everybody else, Cohen calls these NFAs.

8.2 Formal definition

Definition 9 (Finite automaton) A finite automaton is a 5-tuple $(Q, \Sigma, \delta, q_0, F)$ where:

Q is a finite set: the states

$\Sigma$ is a finite set: the alphabet

$\delta$ is a function from $Q \times \Sigma$ to Q: the transition function

$q_0 \in Q$: the start state

$F \subseteq Q$: the final or accepting states.

Note: F may be Q, or F may be the empty set.

8.3 Explanation

While this is all very well it does not help us see what finite automata do, or how.

Suppose we have a string made up of symbols from $\Sigma$. We begin in the start state $q_0$. We read the first symbol s from the string, and then enter the state given by $\delta(q_0, s)$. We then read the next symbol from the string and use the transition function to move to a new state, repeating this process until we reach the end of the string.

If we have read all the string, and are in one of the accepting states we say that the automaton accepts the string.

The language accepted by the automaton is the set of all the strings it accepts.

So automata give us a way to describe languages.

8.4 An automaton

Suppose we have an automaton $M_1 = (Q, \Sigma, \delta, q_0, F)$, where:

$Q = \{S_1, S_2, S_3\}$

$\Sigma = \{0, 1\}$

$\delta(S_1, 1) = S_2$

$\delta(S_2, 1) = S_3$

$q_0 = S_1$

$F = \{S_3\}$

Let's see if $M_1$ accepts 1.

We begin in state $S_1$ and we read 1. $\delta(S_1, 1) = S_2$ so we enter $S_2$.

The rest of our string is empty, but $S_2$ is not a final state, so $M_1$ does not accept 1.

Let's see if $M_1$ accepts 11. We begin in state $S_1$ and we read 1. $\delta(S_1, 1) = S_2$ so we enter $S_2$.

Now we read 1. $\delta(S_2, 1) = S_3$ so we enter $S_3$.

The rest of our string is empty, and $S_3$ is an accepting state so $M_1$ accepts 11.

Let's see if $M_1$ accepts 0. We begin in state $S_1$ and we read 0. $\delta$ is a partial function, and there is no value given for $\delta(S_1, 0)$. We cannot make any progress here, and so 0 is not in the language accepted by $M_1$. We can think of the machine as crashing on this string.

Note: here we differ from Cohen. He insists (initially, at least) that $\delta$ be total, and adds a black hole state to all his machines, whereas we allow $\delta$ to be partial.

Cohen would have a new state $S_4$, and would extend $\delta$ with:

$\delta(S_1, 0) = S_4$

$\delta(S_2, 0) = S_4$

$\delta(S_4, 0) = S_4$

$\delta(S_4, 1) = S_4$

Since $S_4$ is not an accepting state, and since once we enter it there is no way to leave, this extension does not change the language accepted by the machine.
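The step-by-step runs above can be automated. The following sketch simulates a finite automaton whose transition function is partial, using a Python dictionary keyed by (state, symbol) pairs; a missing key plays the role of the machine crashing. The names `accepts`, `M1_DELTA`, `M1_START` and `M1_ACCEPTING`, and the use of strings such as `"S1"` for states, are assumptions made for illustration.

```python
def accepts(delta, start, accepting, word):
    """Run the automaton on word; delta may be a partial function."""
    state = start
    for symbol in word:
        if (state, symbol) not in delta:
            return False          # no transition given: the machine crashes
        state = delta[(state, symbol)]
    return state in accepting     # accept only if we end in an accepting state

# The machine with states S1, S2, S3 and two transitions on the symbol 1.
M1_DELTA = {("S1", "1"): "S2", ("S2", "1"): "S3"}
M1_START = "S1"
M1_ACCEPTING = {"S3"}
```

With these definitions `accepts(M1_DELTA, M1_START, M1_ACCEPTING, "11")` holds, while the same call on "1" or "0" does not.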

8.5 The language accepted by $M_1$

A moment's reflection will show that the only string which $M_1$ accepts is 11, and so the language $M_1$ accepts is Language(11).

8.6 Another automaton

Suppose we have an automaton $M_2 = (Q, \Sigma, \delta, q_0, F)$, where:

$Q = \{S_1, S_2, S_3\}$

$\Sigma = \{0, 1\}$

$\delta(S_1, 1) = S_2$

$\delta(S_2, 1) = S_3$

$\delta(S_3, 0) = S_3$

$\delta(S_3, 1) = S_3$

$q_0 = S_1$

$F = \{S_3\}$

$M_2$ is nearly the same as $M_1$, but now we have transitions from $S_3$ to itself on reading either a 0 or a 1.

What language does $M_2$ accept?

Clearly the only way to get from $S_1$ to $S_3$ is to begin with two 1s. If the string is just 11 it will be accepted.

What about 111? This will be accepted too, as $\delta(S_3, 1) = S_3$.

What about 110? This will be accepted too, as $\delta(S_3, 0) = S_3$.

In fact any extension of 11 will be accepted, so we see that $M_2$ accepts $\mathit{Language}(11(0+1)^*)$.
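One way to gain confidence in this description is to compare $M_2$ with the regular expression on all short strings. The sketch below assumes Python's `re` syntax, in which the regular expression $11(0+1)^*$ is written `11[01]*` (the `+` in the notes means "or", not repetition). The names `M2_DELTA` and `m2_accepts` are illustrative.

```python
import re
from itertools import product

# Transition table of M2 from the text; missing entries mean the machine crashes.
M2_DELTA = {
    ("S1", "1"): "S2",
    ("S2", "1"): "S3",
    ("S3", "0"): "S3",
    ("S3", "1"): "S3",
}

def m2_accepts(string):
    """Simulate M2: start in S1, accept only if we finish in S3."""
    state = "S1"
    for symbol in string:
        if (state, symbol) not in M2_DELTA:
            return False
        state = M2_DELTA[(state, symbol)]
    return state == "S3"

# 11(0+1)* in the notation of the notes, written in Python's regex syntax.
PATTERN = re.compile(r"11[01]*")

# Every string over {0, 1} of length 0 to 8.
words = ["".join(p) for n in range(9) for p in product("01", repeat=n)]
mismatches = [w for w in words if m2_accepts(w) != bool(PATTERN.fullmatch(w))]
print(mismatches)
```

An empty list of mismatches means the machine and the expression agree on every string tested, which supports the argument above without replacing it.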

8.7 A pictorial representation of FA

It is often easier to see what an FA does if we draw a picture of it.

We can draw a finite automaton out as a labelled, directed graph. Each state of the machine is a node, and the transition function tells us which nodes are connected by which edges. We mark the start state, and any accepting states, in some special way.

$M_1$ can be represented as:

[Diagram: $S_1$ (start state, marked -) --1--> $S_2$ --1--> $S_3$ (accepting state, marked +)]

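$M_2$ can be represented as:

[Diagram: $S_1$ (start state, marked -) --1--> $S_2$ --1--> $S_3$ (accepting state, marked +), with a loop on $S_3$ labelled 1,0]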


Note: Sometimes the start state is pointed to by an arrow, and the accepting

states are drawn as double circles or squares, e.g.:

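[Two alternative diagrams of $M_2$, each with an arrow pointing to the start state $S_1$: in the first the accepting state $S_3$ is drawn as a double circle, in the second as a square. The edges are as before: $S_1$ --1--> $S_2$ --1--> $S_3$, with a loop on $S_3$ labelled 1,0.]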
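Such pictures can also be generated from the transition function. The sketch below assumes the Graphviz DOT format as the drawing language (an outside tool, not something the course uses) and follows the arrow and double-circle convention from the note above. The function name `to_dot` and the node name `start_marker` are hypothetical.

```python
def to_dot(delta, start, accepting):
    """Render a transition table as Graphviz DOT text."""
    states = sorted(
        {start} | set(accepting) | {s for s, _ in delta} | set(delta.values())
    )
    lines = ["digraph FA {", "  rankdir=LR;", "  start_marker [shape=point];"]
    for state in states:
        # Accepting states are drawn as double circles.
        shape = "doublecircle" if state in accepting else "circle"
        lines.append(f"  {state} [shape={shape}];")
    # The start state is pointed to by an arrow from an invisible-looking point.
    lines.append(f"  start_marker -> {start};")
    # Merge parallel edges so that a loop on 0 and 1 gets one label "0,1".
    edges = {}
    for (source, symbol), target in delta.items():
        edges.setdefault((source, target), []).append(symbol)
    for (source, target), symbols in sorted(edges.items()):
        label = ",".join(sorted(symbols))
        lines.append(f'  {source} -> {target} [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)

# M2 from the text.
m2_delta = {
    ("S1", "1"): "S2",
    ("S2", "1"): "S3",
    ("S3", "0"): "S3",
    ("S3", "1"): "S3",
}
dot_text = to_dot(m2_delta, "S1", {"S3"})
print(dot_text)
```

The printed text can be saved to a file and passed to a Graphviz renderer to obtain the picture.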

8.8 Examples of constructing an automaton

If $L$ is a language over some alphabet $\Sigma$, then $\overline{L}$, the complement of $L$, is $\{ s \mid s \in \Sigma^* \;\&\; s \notin L \}$.

If we have some automaton $M_L$, which accepts $L$, then we can construct an automaton $M_{\overline{L}}$ which accepts $\overline{L}$.

$M_{\overline{L}}$ will accept just the strings which $M_L$ does not, and will not accept just the strings which $M_L$ does.

To recognise the complement of a language, we can think of ourselves as

going through the same steps as we would take to recognise the language, but

making the opposite decision at each state.

We then expect $M_L$ and $M_{\overline{L}}$ to have the same states, but an accepting state of $M_L$ will not be an accepting state of $M_{\overline{L}}$, and vice versa.

8.9 Constructing $M_{\overline{L}}$

$M_2$ from above accepts $\mathit{Language}(11(0+1)^*)$.

Our first suggestion for a machine, $M_3$, to accept $\overline{\mathit{Language}(11(0+1)^*)}$ is to have:

• the same set of states,

• the same transition function,

• the complement of the set of accepting states of $M_2$.

$M_3 = (Q, \Sigma, \delta, q_0, F)$, where:

$Q = \{S_1, S_2, S_3\}$

$\Sigma = \{0, 1\}$

$\delta(S_1, 1) = S_2$

$\delta(S_2, 1) = S_3$

$\delta(S_3, 0) = S_3$

$\delta(S_3, 1) = S_3$

$q_0 = S_1$

$F = \{S_1, S_2\}$

$F(M_3)$ is $Q \setminus F(M_2)$, as you would expect. Graphically:

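[Diagram: $S_1$ (start and accepting state, marked -,+) --1--> $S_2$ (accepting state, marked +) --1--> $S_3$, with a loop on $S_3$ labelled 1,0]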

This automaton correctly accepts the empty string and the string 1.

What about the string 0?

The machine crashes, so, close, but no cigar.

The problem is that $M_2$ and $M_3$ both crash on the same strings: $M_3$ should accept the strings that $M_2$ crashes on, and vice versa.

What we have to do is to convert our initial machine into a machine which

accepts the same language and whose transition function is total. We can always

do this. (How?)

Then we construct a third machine whose set of accepting states is the

complement of that of the second machine.
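The two steps just described can be sketched as follows. This is one possible answer to the "(How?)" above, assuming we make the transition function total by sending every undefined move to a fresh non-accepting sink state; the names `accepts`, `complement` and the tuple encoding of a machine are illustrative assumptions.

```python
from itertools import product

def accepts(machine, string):
    """machine is (states, delta, start, accepting); a missing move is a crash."""
    _, delta, state, accepting = machine
    for symbol in string:
        if (state, symbol) not in delta:
            return False
        state = delta[(state, symbol)]
    return state in accepting

def complement(machine, alphabet, sink):
    """Totalise delta with a sink state, then swap accepting and non-accepting.

    Assumes sink is a fresh name that is not already a state of the machine.
    """
    states, delta, start, accepting = machine
    total = dict(delta)
    for state in list(states) + [sink]:
        for symbol in alphabet:
            total.setdefault((state, symbol), sink)
    all_states = set(states) | {sink}
    return all_states, total, start, all_states - set(accepting)

# M2 from the text.
M2 = (
    {"S1", "S2", "S3"},
    {("S1", "1"): "S2", ("S2", "1"): "S3", ("S3", "0"): "S3", ("S3", "1"): "S3"},
    "S1",
    {"S3"},
)

# The first suggestion: same states and transitions, accepting set flipped.
naive_M3 = (M2[0], M2[1], M2[2], M2[0] - M2[3])

# The repaired construction: totalise first, then flip.
M4 = complement(M2, "01", sink="S4")

words = ["".join(p) for n in range(7) for p in product("01", repeat=n)]
print(accepts(naive_M3, "0"), accepts(M4, "0"))
print(all(accepts(M4, w) != accepts(M2, w) for w in words))
```

Flipping the accepting set only works once every string leads to some state, which is why the sink state has to be added before the flip rather than after it.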

8.10 A machine to accept $\overline{\mathit{Language}(11(0+1)^*)}$

$M_4 = (Q, \Sigma, \delta, q_0, F)$, where:

$Q = \{S_1, S_2, S_3, S_4\}$

$\Sigma = \{0, 1\}$

$q_0 = S_1$

$F = \{S_1, S_2, S_4\}$

$\delta(S_1, 0) = S_4$

$\delta(S_1, 1) = S_2$

$\delta(S_2, 0) = S_4$

$\delta(S_2, 1) = S_3$

$\delta(S_3, 0) = S_3$

$\delta(S_3, 1) = S_3$

$\delta(S_4, 0) = S_4$

$\delta(S_4, 1) = S_4$

Graphically:

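[Diagram: $S_1$ (start and accepting state, marked -,+) --1--> $S_2$ (accepting state, marked +) --1--> $S_3$, with a loop on $S_3$ labelled 1,0; $S_1$ --0--> $S_4$ and $S_2$ --0--> $S_4$, where $S_4$ is an accepting state (marked +) with a loop labelled 1,0]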


8.11 Summary

We have outlined a method which allows us to construct a machine which accepts $\overline{L}$ from a machine which accepts $L$.

We can think of this construction as a proof of the theorem:

Theorem 3 If a language $L$ can be defined using an FA, then so can the language $\overline{L}$.

Chapter 9

Non-deterministic Finite Automata

9.1 Non-deterministic Finite Automata

We now introduce a variant on the Finite Automaton, the Non-deterministic

nite automaton (NFA).

The dierence between an NFA and an FA is that, in an NFA more than

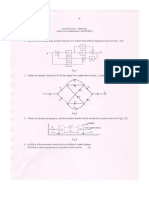

one arc leading from a state may be labelled by the same symbol.